- Oracle Banking Common Core User Guide


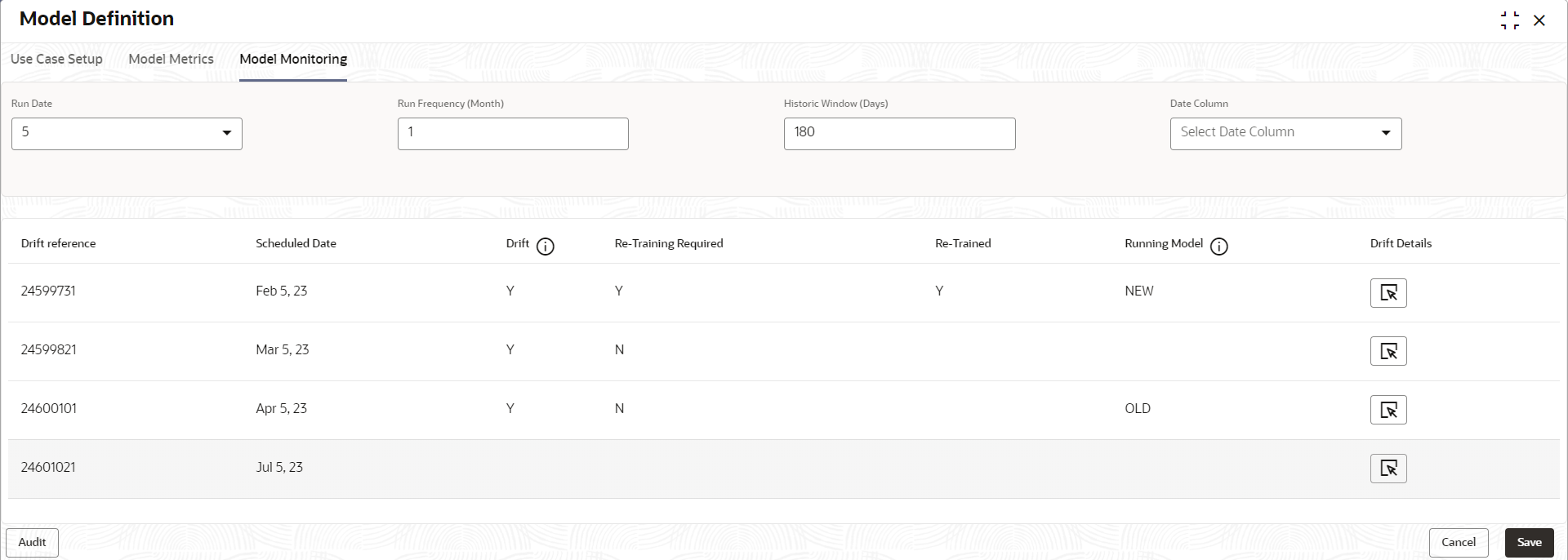

5.10 Model Monitoring and Auto Training

This topic describes model monitoring and auto training.

The distribution of the data on which a machine learning model is initially trained eventually changes over time. This shift away from the original distribution is referred to as data drift.

It is not a question of if, but when: once data drift becomes significant enough, the current model may lose its efficacy in predicting outcomes, setting off model decay.

Monitoring of deployed models is required to detect data drift and trigger model re-build or re-training.

Regression and Classification use case types are eligible for setting up model monitoring.

Note:

Model monitoring requires an existing trained model as a prerequisite.

- On the Home screen, click Machine Learning. Under Machine Learning, click Model Definition.

- Under Model Definition, click Model Definition Summary.

- Click the icon on the use case tile to unlock it.

- Select the Model Monitoring tab in the Model Definition screen. The Model Monitoring screen displays.

- This screen allows you to set up model monitoring for the use case. For more information on fields, refer to the field description table.

Table 5-16 Model Monitoring – Field Description

| Field | Description |
| --- | --- |
| Run Date | Run date is the calendar day of the month used with Run Frequency (Month) to set up a recurring monitoring schedule. On the scheduled date, the model monitoring routine analyzes the underlying data to detect the presence or absence of data drift and triggers a model rebuild when required. Permissible values: 1 to 31. Default: 15. Note: This field is mandatory. |
| Run Frequency (Month) | Specify the run frequency in months. Example: to schedule a run on the 17th of every 6 months, set Run Date to 17 and Run Frequency (Month) to 6. Default: 6. Minimum value: 1. Note: This field is mandatory. |
| Historic Window (Days) | The historic window, in days, determines how far back the system looks when defining the window of data to evaluate. Example: a value of 90 means a historic window from T-90 days to T, where T is the system date. Default: 180. Note: This field is mandatory. |
| Date Column | The date column in the data source that is used to determine the historic window. Leave this field empty if the data source does not have a date column; in that case, the system considers all the data available in the data source. |
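The scheduling and windowing rules in Table 5-16 can be illustrated with a short Python sketch. It is not the product's implementation: the function names (`run_schedule`, `historic_window`, `rows_in_window`) are hypothetical, and the sketch assumes two rules the guide does not state, namely that the first run falls in the first month whose Run Date is on or after a given start date, and that a Run Date of 29 to 31 falls on the last day of shorter months.

```python
import calendar
from datetime import date, timedelta


def clamp_day(year, month, day):
    # Assumption: a Run Date of 29-31 falls on the last day of shorter months.
    return date(year, month, min(day, calendar.monthrange(year, month)[1]))


def add_months(year, month, months):
    """Shift (year, month) forward by a number of months."""
    total = year * 12 + (month - 1) + months
    return total // 12, total % 12 + 1


def run_schedule(run_date, frequency_months, start, count):
    """Return the next `count` monitoring dates on or after `start` (hypothetical helper)."""
    if not 1 <= run_date <= 31 or frequency_months < 1:
        raise ValueError("Run Date must be 1-31 and Run Frequency (Month) at least 1")
    year, month = start.year, start.month
    if clamp_day(year, month, run_date) < start:
        # This month's run date has already passed; begin next month (assumption).
        year, month = add_months(year, month, 1)
    return [
        clamp_day(*add_months(year, month, i * frequency_months), run_date)
        for i in range(count)
    ]


def historic_window(system_date, window_days):
    """Window from T - window_days to T, where T is the system date."""
    return system_date - timedelta(days=window_days), system_date


def rows_in_window(rows, date_column, system_date, window_days):
    """Keep rows inside the historic window; with no date column, keep everything."""
    if date_column is None:
        return list(rows)
    start, end = historic_window(system_date, window_days)
    return [row for row in rows if start <= row[date_column] <= end]
```

For example, `run_schedule(17, 6, date(2024, 1, 10), 3)` yields 17 January 2024, 17 July 2024, and 17 January 2025, and a 90-day window ending on 30 June 2024 starts on 1 April 2024.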

- The following fields are populated for reference once the model monitoring routine is executed on the scheduled date.

Table 5-17 Model Evaluation - Field Description

Field Description Drift Reference Displays the Unique Drift Reference ID, populated by the model monitoring routine initial run Scheduled Date Displays the scheduled date after the initial run of the model monitoring routine. Drift Initially it will be empty and will get populated once the model monitoring routine runs and determines the presence or absence of drift. Display value is Y or N. Re-Training Required Model monitoring routine determines the re-training requirement and populates Y or N values. Re-Trained Model monitoring routine populates the status of re-training with Y or N values. Running Model The model monitoring routine evaluates both the existing and the new model, it re-trained, to determine which model best fits the contemporary changed data. Final values are OLD, if existing model is retained or NEW, for revised re-trained model. - Click

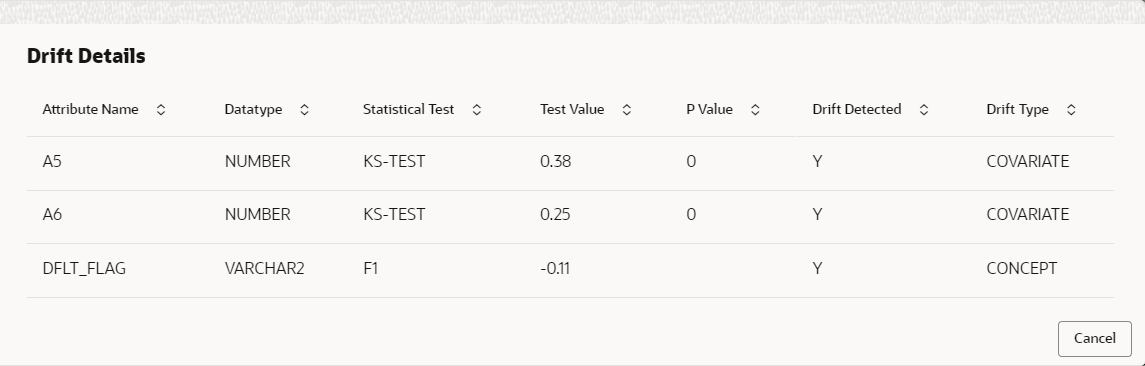

to view drift details.

to view drift details.The Drift Details button will be enabled only if drift is detected; otherwise, it will continue to be disabled.

The model monitoring routine identifies drift in the data distribution using statistical hypothesis tests. Drift is of two types: concept drift, for the target, and data drift, for the data attributes. Concept drift determines whether the current model is re-trained. If concept drift is detected, this screen displays the analysis and statistical test values for both the concept drift and the data drift of the attributes that contribute to the model.
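A minimal sketch of per-attribute data drift detection, assuming the earlier and current data are available as Python lists keyed by attribute name. It pairs a two-sample Kolmogorov-Smirnov test for numerical attributes with a chi-square test on category counts for categorical attributes, mirroring the KS-TEST and CHI-SQR values shown on the Drift Details screen. The 0.05 significance level, the asymptotic KS p-value, the Wilson-Hilferty approximation for the chi-square p-value, the "numerical"/"categorical" type labels, and all function names are assumptions for illustration, not the routine's documented behavior.

```python
import math
from collections import Counter

P_VALUE_THRESHOLD = 0.05  # hypothetical significance level, not a documented setting


def kolmogorov_sf(lam):
    """Asymptotic upper-tail probability of the Kolmogorov distribution."""
    if lam < 0.1:
        return 1.0  # the tail probability is 1 to double precision here
    if lam < 1.18:
        # For small lam, this series for the CDF converges quickly.
        cdf = math.sqrt(2 * math.pi) / lam * sum(
            math.exp(-((2 * k - 1) ** 2) * math.pi ** 2 / (8 * lam ** 2)) for k in range(1, 20)
        )
        return min(max(1.0 - cdf, 0.0), 1.0)
    # For larger lam, the alternating series for the tail converges quickly.
    tail = 2 * sum((-1) ** (k - 1) * math.exp(-2 * k * k * lam * lam) for k in range(1, 20))
    return min(max(tail, 0.0), 1.0)


def ks_test(old, new):
    """Two-sample Kolmogorov-Smirnov statistic D and its asymptotic p-value."""
    a, b = sorted(old), sorted(new)
    n, m = len(a), len(b)
    i = j = 0
    d = 0.0
    while i < n and j < m:
        x = min(a[i], b[j])
        while i < n and a[i] == x:  # step both empirical CDFs past ties at x
            i += 1
        while j < m and b[j] == x:
            j += 1
        d = max(d, abs(i / n - j / m))
    ne = n * m / (n + m)
    lam = (math.sqrt(ne) + 0.12 + 0.11 / math.sqrt(ne)) * d
    return d, kolmogorov_sf(lam)


def chi_square_test(old, new):
    """Chi-square statistic on a 2 x k table of category counts, with an approximate p-value."""
    old_counts, new_counts = Counter(old), Counter(new)
    categories = set(old_counts) | set(new_counts)
    n_old, n_new = len(old), len(new)
    total = n_old + n_new
    stat = 0.0
    for c in categories:
        column_total = old_counts[c] + new_counts[c]
        for observed, row_total in ((old_counts[c], n_old), (new_counts[c], n_new)):
            expected = row_total * column_total / total
            stat += (observed - expected) ** 2 / expected
    dof = len(categories) - 1
    if dof == 0:
        return stat, 1.0
    # Wilson-Hilferty approximation of the chi-square upper tail.
    z = ((stat / dof) ** (1 / 3) - (1 - 2 / (9 * dof))) / math.sqrt(2 / (9 * dof))
    return stat, 0.5 * math.erfc(z / math.sqrt(2))


def data_drift_rows(old_data, new_data, attribute_types):
    """Build rows shaped like the Drift Details table, one per attribute."""
    rows = []
    for name, dtype in attribute_types.items():
        if dtype == "numerical":
            test = "KS-TEST"
            value, p = ks_test(old_data[name], new_data[name])
        else:
            test = "CHI-SQR"
            value, p = chi_square_test(old_data[name], new_data[name])
        rows.append({
            "Attribute Name": name,
            "Data Type": dtype,
            "Statistical Test": test,
            "Test Value": round(value, 4),
            "P Value": round(p, 4),
            "Drift Detected": "Y" if p < P_VALUE_THRESHOLD else "N",
            "Drift Type": "covariate",
        })
    return rows
```

In production code a statistics library would normally supply these tests; the hand-written versions keep the sketch dependency-free.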

The Drift Details screen displays. For more information on fields, refer to the field description table.

Table 5-18 Drift Details - Field Description

| Field | Description |
| --- | --- |
| Attribute Name | Displays the attributes used in the model. |
| Data Type | Displays the data type of the attribute. |
| Statistical Test | Displays the statistical test applied. The available values are: F1 (concept drift); KS-TEST (numerical feature attributes); CHI-SQR (categorical feature attributes). |
| Test Value | Displays the numerical result of the statistical test. |
| P Value | The p-value determines statistical significance. It is null for the F1 statistical test. |
| Drift Detected | Indicates whether drift has been detected, with Y or N. |
| Drift Type | Displays the drift type: concept or covariate (data) drift. |

- Select the relevant Drift Reference record.

Click

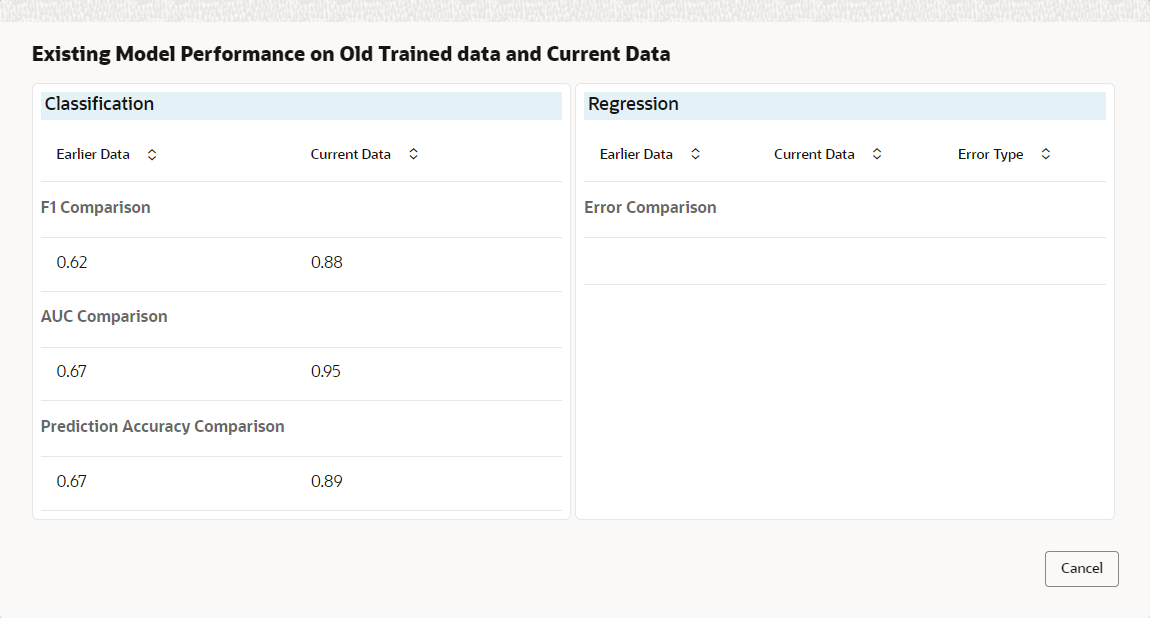

from Drift header to view the comparative Model Performance Screen

to understand how the decision of drift is arrived at.

from Drift header to view the comparative Model Performance Screen

to understand how the decision of drift is arrived at.

The existing model is used to predict on both an earlier data sample and the current data sample. The results of both predictions are captured and displayed.

Classification models are compared on F1, AUC, and prediction accuracy, while regression models are evaluated on prediction error.

Figure 5-14 Existing Model Performance on Old Trained data and Current Data

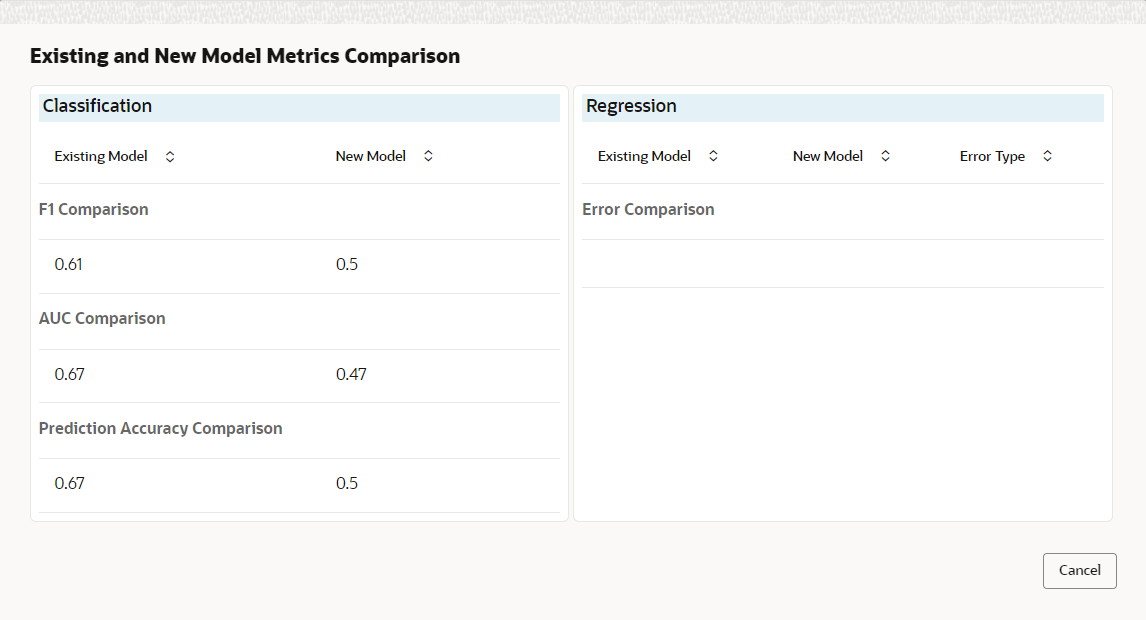
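The comparison shown in Figure 5-14 can be sketched as follows. The existing model is any callable (a hypothetical stand-in for the deployed model) that scores an earlier sample and the current sample; classification uses F1, and regression uses root mean squared error as the prediction error measure. The drift thresholds, the choice of RMSE, and the function names are illustrative assumptions; AUC and prediction accuracy are omitted for brevity. Setting Re-Training Required from concept drift follows the guide's statement that concept drift decides whether the model is re-trained.

```python
import math

F1_DROP_THRESHOLD = 0.10     # hypothetical: absolute drop in F1 that counts as drift
ERROR_RISE_THRESHOLD = 0.10  # hypothetical: relative rise in RMSE that counts as drift


def f1_score(actual, predicted, positive=1):
    """F1 = 2TP / (2TP + FP + FN) for the positive class."""
    pairs = list(zip(actual, predicted))
    tp = sum(a == positive and p == positive for a, p in pairs)
    fp = sum(a != positive and p == positive for a, p in pairs)
    fn = sum(a == positive and p != positive for a, p in pairs)
    return 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)


def rmse(actual, predicted):
    """Root mean squared error, used here as the regression prediction error."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def check_concept_drift(use_case, model, earlier_sample, current_sample):
    """Score the existing model on an earlier and the current (features, target) sample."""
    (x_old, y_old), (x_cur, y_cur) = earlier_sample, current_sample
    preds_old = [model(x) for x in x_old]
    preds_cur = [model(x) for x in x_cur]
    if use_case == "classification":
        earlier, current = f1_score(y_old, preds_old), f1_score(y_cur, preds_cur)
        drifted = earlier - current > F1_DROP_THRESHOLD
    else:  # regression: drift shows up as a larger prediction error on current data
        earlier, current = rmse(y_old, preds_old), rmse(y_cur, preds_cur)
        drifted = current > earlier * (1 + ERROR_RISE_THRESHOLD)
    flag = "Y" if drifted else "N"
    # Concept drift decides whether re-training is required.
    return {"earlier": earlier, "current": current,
            "Drift Detected": flag, "Re-Training Required": flag}
```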

- Select the relevant Drift Reference record.

Click the icon from the Running Model header to view the comparative performance of the re-trained model versus the current model, and to understand how the system decided which model best fits the current data distribution.

Classification models are evaluated on F1, AUC, and prediction accuracy, while regression models are evaluated on prediction error.

Figure 5-15 Existing and New Model Metrics Comparison
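A hedged sketch of how the Running Model value (OLD or NEW) might be chosen by comparing the existing and re-trained models on current data. Classification outputs are assumed to be positive-class scores: F1 and accuracy use a 0.5 cut-off, and AUC is computed from pairwise rankings; regression uses RMSE as the prediction error. The two-of-three voting rule, the cut-off, and the function names are hypothetical, because the guide does not state how the metrics are combined.

```python
import math


def f1_score(actual, predicted):
    """F1 = 2TP / (2TP + FP + FN) with 1 as the positive class."""
    tp = sum(a == 1 and p == 1 for a, p in zip(actual, predicted))
    fp = sum(a == 0 and p == 1 for a, p in zip(actual, predicted))
    fn = sum(a == 1 and p == 0 for a, p in zip(actual, predicted))
    return 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)


def auc(actual, scores):
    """ROC AUC: probability that a positive outranks a negative (ties count half)."""
    pos = [s for a, s in zip(actual, scores) if a == 1]
    neg = [s for a, s in zip(actual, scores) if a == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def accuracy(actual, predicted):
    return sum(a == p for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def classification_metrics(actual, scores, cutoff=0.5):
    """(F1, AUC, accuracy) for positive-class scores, thresholded at a hypothetical cut-off."""
    predicted = [1 if s >= cutoff else 0 for s in scores]
    return f1_score(actual, predicted), auc(actual, scores), accuracy(actual, predicted)


def choose_running_model(use_case, actual, existing_output, retrained_output):
    """Return "OLD" to keep the existing model or "NEW" for the re-trained one."""
    if use_case == "classification":
        old_m = classification_metrics(actual, existing_output)
        new_m = classification_metrics(actual, retrained_output)
        # Hypothetical rule: NEW must beat OLD on at least two of F1, AUC, accuracy.
        wins = sum(new > old for new, old in zip(new_m, old_m))
        return "NEW" if wins >= 2 else "OLD"
    # Regression: the model with the lower prediction error (RMSE here) is kept.
    return "NEW" if rmse(actual, retrained_output) < rmse(actual, existing_output) else "OLD"
```

Keeping the existing model on ties is a conservative design choice in this sketch: a re-trained model replaces it only when it is measurably better on current data.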

Parent topic: Machine Learning Framework