3.1 Configure Interpreters

Configure an interpreter when you need to change its URL, data location, or drivers, or to enable or disable its connections.

- On the Workspace Summary page, select Launch workspace to display the CS Production workspace window.

- Click the User Profile drop-down list and select the Data Studio Options widget. The following options are available:

- Interpreters

- Tasks

- Permissions

- Credentials

- Templates

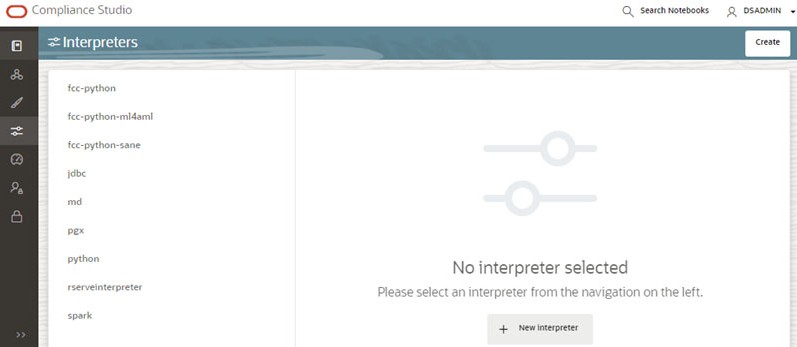

- From the list displayed on the left-hand side, click the interpreter that you want to view. The default configured interpreter variant is displayed on the right-hand side.

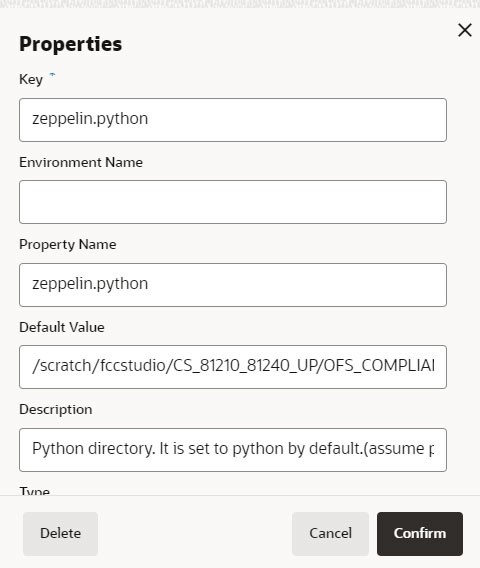

- Modify the values in the fields as required, for example, to change a parameter's limit or to connect to a different schema or PGX server. You can modify the values in the following UI options:

- Wizard

An interpreter can group multiple interpreter clients that all run in one JVM process and can be stopped together.

For example, the spark interpreter group contains the spark and pyspark interpreter clients.

Group Configuration

Initial Code

For example, consider a spark interpreter group with spark and pyspark interpreter clients. If you define initialization code for the spark interpreter group, that code runs when the runtime environment is created, that is, the first time a user runs a spark or pyspark paragraph in a notebook while Compliance Studio is running in NOTEBOOK session mode.

Initial Code Capability

The initial code capability defines what interpreter client to use to run the group initial code. For example, in the spark interpreter group, you would select the spark capability as the initial code capability to create a spark context for the group JVM process.
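The behavior described above can be sketched as a small toy model. This is only an illustration of the described semantics, not the Compliance Studio API: the `InterpreterGroup` class, its fields, and the `make_client` helper are hypothetical names, and the "clients" are plain functions that echo the code they receive.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class InterpreterGroup:
    """Toy model of an interpreter group (hypothetical, for illustration only)."""
    name: str
    clients: Dict[str, Callable[[str], str]]
    initial_code: str = ""
    initial_code_capability: str = ""
    initialized: bool = False
    log: List[str] = field(default_factory=list)

    def run_paragraph(self, client: str, code: str) -> str:
        if client not in self.clients:
            raise KeyError(f"unknown interpreter client: {client}")
        if not self.initialized:
            # The first paragraph of any client creates the runtime
            # environment, so the group initial code runs exactly once,
            # using the client chosen as the initial code capability.
            if self.initial_code:
                runner = self.clients[self.initial_code_capability]
                self.log.append(runner(self.initial_code))
            self.initialized = True
        result = self.clients[client](code)
        self.log.append(result)
        return result


def make_client(label: str) -> Callable[[str], str]:
    """Return a stand-in client that only tags the code it is given."""
    return lambda code: f"[{label}] {code}"


group = InterpreterGroup(
    name="spark",
    clients={"spark": make_client("spark"), "pyspark": make_client("pyspark")},
    initial_code="val ready = true",
    initial_code_capability="spark",
)
group.run_paragraph("pyspark", "print(1)")   # triggers the initial code first
group.run_paragraph("spark", "println(2)")   # initial code is not run again
```

In this model the initial code runs through the spark client even though the first paragraph is a pyspark paragraph, which mirrors the role of the initial code capability.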

Credential Configurations

To link credentials to the interpreter, define which credential types and which credential mode to use. For example, the jdbc interpreter supports a credential of type Password for the credential qualifier jdbc_password and a credential of type Oracle Wallet for the credential qualifier jdbc_wallet. After you define the credential configuration, a new section for selecting the respective credential values appears.

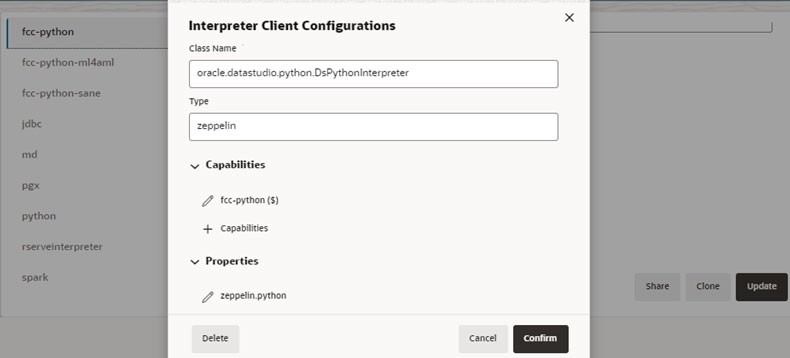

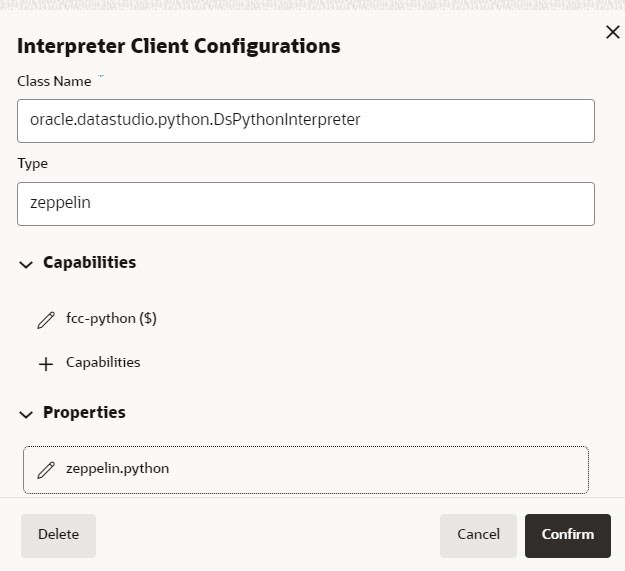

Interpreter Client Configuration

Interpreter properties can be configured for each interpreter client.

Figure 3-6 Interpreter Client Configuration

Lifecycle Configuration

Host Mode

In the Host lifecycle mode, the following properties can be configured:

- Host: The hostname on which the interpreter is listening. For example, localhost if the interpreter runs on the same machine as the server.

- Port: The port on which the interpreter is listening.
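Before saving Host and Port values, it can help to confirm that something is actually listening at that address. The following is a minimal sketch using only the Python standard library; the `is_listening` helper is a hypothetical name, and a successful TCP connection only shows that the port is open, not that the process behind it is the interpreter.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and opens a TCP connection;
        # the context manager closes the socket again immediately.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution errors.
        return False
```

For example, `is_listening("localhost", 7011)` would check a local interpreter, where 7011 is only a placeholder for the port configured in your environment.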

Credentials

A credential section appears if you have defined a credential configuration as part of the group settings. For each credential qualifier, you can select an already defined credential. If the credential mode Per User is used, each user must select their own credential.

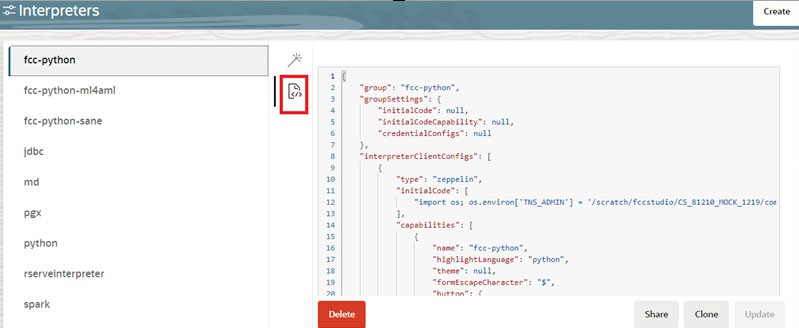
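The relationship between credential qualifiers, credential types, and the Per User mode can be sketched as a simple lookup. The qualifier and type names come from the jdbc example above; everything else is an assumption for illustration, including the `resolve_credential` helper, the "Shared" mode label used for any mode other than Per User, and the credential names.

```python
from typing import Dict

# Qualifier -> credential type, taken from the jdbc example in this section.
CREDENTIAL_TYPES: Dict[str, str] = {
    "jdbc_password": "Password",
    "jdbc_wallet": "Oracle Wallet",
}

# Hypothetical selections: one set chosen for everyone, one set per user.
SHARED_SELECTION: Dict[str, str] = {
    "jdbc_password": "team_db_password",
    "jdbc_wallet": "team_wallet",
}
USER_SELECTIONS: Dict[str, Dict[str, str]] = {
    "alice": {"jdbc_password": "alice_db_password"},
}


def resolve_credential(qualifier: str, mode: str, user: str,
                       shared: Dict[str, str],
                       per_user: Dict[str, Dict[str, str]]) -> str:
    """Return the name of the credential to use for a qualifier."""
    if qualifier not in CREDENTIAL_TYPES:
        raise KeyError(f"no credential configuration for {qualifier}")
    if mode == "Per User":
        # In Per User mode every user must have made their own selection.
        name = per_user.get(user, {}).get(qualifier)
        if name is None:
            raise LookupError(f"{user} has not selected a credential for {qualifier}")
        return name
    # Any other mode (labeled "Shared" here, a hypothetical name) uses
    # the selection made once for the interpreter.
    return shared[qualifier]
```

With these sample values, a Per User lookup for alice returns her own credential name, while the same lookup for a user without a selection raises an error instead of silently falling back to the shared one.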

- JSON:

You can modify the values in the properties of the interpreter in the JSON file, as shown in the following figure.
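When editing the JSON by hand, a quick way to avoid syntax errors is to change the value programmatically and let a JSON library re-serialize the document. The sketch below assumes a hypothetical layout (a `properties` list of `name`/`value` pairs, and the sample host names); the JSON shown in the Compliance Studio UI may be structured differently, so adapt the keys to what you actually see.

```python
import json

# Hypothetical interpreter JSON; the real structure may differ.
RAW_CONFIG = """
{
  "group": "jdbc",
  "properties": [
    {"name": "default.url", "value": "jdbc:oracle:thin:@//dbhost:1521/service"},
    {"name": "default.driver", "value": "oracle.jdbc.OracleDriver"}
  ]
}
"""


def set_property(config_text: str, name: str, value: str) -> str:
    """Return config_text with the named property set to value."""
    config = json.loads(config_text)  # raises an error on invalid JSON
    for prop in config["properties"]:
        if prop["name"] == name:
            prop["value"] = value
            break
    else:
        # No property with that name: fail loudly rather than add one.
        raise KeyError(name)
    return json.dumps(config, indent=2)


updated_text = set_property(
    RAW_CONFIG, "default.url", "jdbc:oracle:thin:@//otherhost:1521/service"
)
```

Because `json.loads` rejects malformed input, running the edited text through it before pasting it back is a cheap validity check.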

- Click Update. The modified values are updated in the Interpreter.

- You can also perform Share, Clone, and Delete operations on this screen.

The following table lists the ready-to-use interpreters in Compliance Studio.

Table 3-1 Ready-to-use interpreter

| Interpreters | Description |
| --- | --- |
| python Interpreter | The python interpreter is used to write Python code in a notebook for tasks such as analyzing data from different sources, machine learning, and artificial intelligence. It uses a Python conda environment. Compliance Studio comes with the following predefined conda environments: `default_<CS version>`, `ml4aml_<CS version>`, and `sane_<CS version>`. Before executing any python notebook, attach the conda environment using the drop-down list. |
| jdbc Interpreter | The jdbc interpreter is a ready-to-use interpreter used to connect to the Studio schema. It is used to connect to and write SQL queries on any schema without any restriction. In the jdbc interpreter, you can configure schema details, link Wallet credentials to the interpreter, and so on. Note: This approach is not recommended because the interpreter can connect to only a single schema, and all users have access to that schema rather than access being managed per user. In future releases, this interpreter will not be enabled by default, but instructions will be provided to enable it if required. Limitations: (1) Data source configuration is not dynamic; it is set statically on the Interpreter Configuration screen. (2) This interpreter provides no restriction on, or secure access to, data. jdbc Interpreter Recommendation: Use the python interpreter to get dynamic data source configuration; data access permission features can also be used with that interpreter. |
| md Interpreter | The md interpreter is used to configure the markdown parser type. It displays text written in Markdown, which is a lightweight markup language. A connection does not apply to this interpreter. |
| pgql Interpreter (part of PGX interpreter) | The pgql interpreter is a ready-to-use interpreter used to connect to the configured PGX server. It is used to perform queries on the graph in Compliance Studio. PGQL is a graph query language built on top of SQL, bringing graph pattern matching capabilities to existing SQL users and to new users who are interested in graph technology but do not have an SQL background. |
| pgx-python (part of PGX interpreter) | The pgx-python interpreter is a ready-to-use interpreter used to connect to the configured PGX server. It is a Python-based interpreter with an embedded PGX Python client for querying the graph present in the PGX server. By default, this interpreter points to the ml4aml Python virtual environment. |
| pgx-algorithm Interpreter (part of PGX interpreter) | The pgx-algorithm interpreter is a ready-to-use interpreter that connects to the configured PGX server. It is used to write an algorithm on the graph and is also used in the PGX interpreter. |
| pgx-java Interpreter (part of PGX interpreter) | The pgx-java interpreter is a ready-to-use interpreter that connects to the configured PGX server. It is a Java 11-based interpreter with an embedded PGX client for querying the graph present in the PGX server. |
| spark Interpreter | The spark interpreter connects to the big data environment by default. Users must write code for the connection either in the Initialization section or in a notebook paragraph. This interpreter is used to perform analytics, in the Scala language, on data present in the big data clusters. It requires additional configuration, which must be performed either as a prerequisite or post-installation by manually changing the interpreter settings. In the spark interpreter, you can configure the cluster manager to connect to, whether to print the Read Eval Print Loop (REPL) output, the total number of cores to use, and so on. |
| pyspark Interpreter | The pyspark interpreter connects to the big data environment by default. Users must write code for the connection either in the Initialization section or in a notebook paragraph. This interpreter is used to write PySpark code to query and perform analytics on data present in big data. It requires additional configuration, which must be performed either as a prerequisite or post-installation by manually changing the interpreter settings. In the pyspark interpreter, you can configure the Python binary executable to use for PySpark in both the driver and the workers, whether to use IPython (set to true or false), and so on. |