Spark Interpreter in Local Mode

To start the Spark interpreter in local mode, follow these steps:

- Download spark-3.0.3-bin-hadoop2.7.tgz from the website.

- Download spark-3.2.4-bin-hadoop2.7.tgz from the website.

- Extract the downloaded Spark archive in the following locations:
  - <COMPLIANCE_STUDIO_INSTALLATION_PATH>/deployed/mmg-home/mmg-studio/interpreter-server/spark-interpreter-<version>/extralibs
  - <COMPLIANCE_STUDIO_INSTALLATION_PATH>/mmg-home/mmg-studio/interpreter-server/spark-interpreter-<version>/extralibs
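The extraction step can be sketched as a small shell helper. This is only an illustration: `extract_spark` is a hypothetical function name, not something shipped with the installer, and it assumes the archive is a gzip-compressed tar file, as the .tgz extension suggests.

```shell
# Sketch: unpack a Spark archive into an extralibs directory.
# extract_spark is a hypothetical helper, not part of the product.
extract_spark() (
    archive="$1"      # path to the downloaded .tgz file
    target_dir="$2"   # one of the extralibs locations listed above
    [ -f "$archive" ] || { echo "archive not found: $archive" >&2; exit 1; }
    mkdir -p "$target_dir" || exit 1
    # -x extract, -z gunzip, -f archive file, -C change to target directory
    tar -xzf "$archive" -C "$target_dir"
)

# Example call (substitute the real installation path and version):
# extract_spark spark-3.2.4-bin-hadoop2.7.tgz \
#     "$COMPLIANCE_STUDIO_INSTALLATION_PATH/deployed/mmg-home/mmg-studio/interpreter-server/spark-interpreter-<version>/extralibs"
```

The helper would be run once per target location, so that both extralibs directories end up with the same extracted Spark directory.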

- Navigate to

<COMPLIANCE_STUDIO_INSTALLATION_PATH>/deployed/mmg-home/mmgstudio/ bindirectory. - Open the

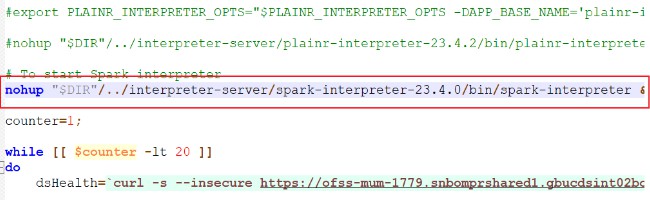

startup.shfile and add following line before the line containing “counter=1”;nohup "$DIR"/../interpreter-server/spark-interpreter-<version>/bin/ spark-interpreter &>> <path_to_save_the_logs>/<log_file_name>.log & - Save and close the file.
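If the edit to startup.sh needs to be scripted rather than made by hand, one possible approach is sketched below. `insert_before_match` is a hypothetical helper; it prints the modified file to standard output so the result can be reviewed before the original is replaced. Note that the `&>>` redirection in the added line is bash syntax that appends both standard output and standard error to the log file.

```shell
# insert_before_match FILE PATTERN LINE
# Prints FILE with LINE inserted before the first line that contains PATTERN.
# Hypothetical helper for illustration only; it does not modify FILE.
insert_before_match() (
    file="$1"
    # Values are passed through the environment so awk does not
    # reinterpret any characters in them.
    PATTERN="$2" NEW_LINE="$3" awk '
        !inserted && index($0, ENVIRON["PATTERN"]) > 0 {
            print ENVIRON["NEW_LINE"]
            inserted = 1
        }
        { print }
    ' "$file"
)

# Example (review startup.sh.new before moving it over startup.sh):
# insert_before_match startup.sh 'counter=1' \
#     'nohup "$DIR"/../interpreter-server/spark-interpreter-<version>/bin/spark-interpreter &>> <path_to_save_the_logs>/<log_file_name>.log &' \
#     > startup.sh.new
```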

- Open the

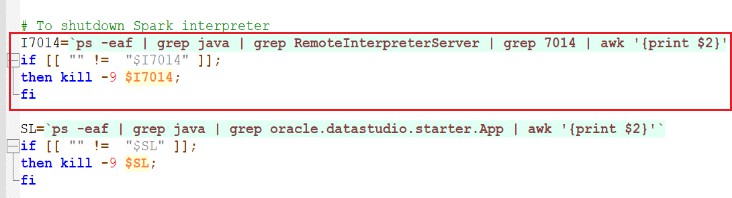

shutdown.shfile and add following line before the line containing “SL=”.I7014=`ps -eaf | grep java | grep RemoteInterpreterServer | grep 7014 | awk '{print $2}'` if [[ "" != "$I7014" ]]; then kill -9 $I7014; fiNote:

In the above step, the port number for the spark interpreter is assumed to be 7014, the default port that comes with the installer. If a different port is used, then change the configuration accordingly. - Save and close the file.
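Because the port may differ from 7014, the process lookup used in shutdown.sh can be written with the port as a parameter. The sketch below is an assumption-laden variant, not the installer's own code: `interpreter_pids` is a hypothetical name, it reads `ps -eaf` output from standard input, and it uses `grep -w` so that the port only matches as a whole word (a plain `grep 7014` would also match a string such as 17014).

```shell
# interpreter_pids PORT
# Reads `ps -eaf` output on stdin and prints the PID (column 2) of every
# Java RemoteInterpreterServer process whose command line mentions PORT.
# Hypothetical helper for illustration only.
interpreter_pids() (
    port="$1"
    grep java | grep RemoteInterpreterServer | grep -w -- "$port" | awk '{print $2}'
)

# Example, mirroring the shutdown.sh lines above for a configurable port:
# PIDS=$(ps -eaf | interpreter_pids 7014)
# if [ -n "$PIDS" ]; then kill -9 $PIDS; fi
```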

- Navigate to the <COMPLIANCE_STUDIO_INSTALLATION_PATH>/deployed/mmg-home/mmgstudio/bin directory.

- Open the startup.sh file, navigate to line 29, and update the spark value to 7014. For example:

      . ./"$DIR"/datastudio --port 7008 --markdown 7009 --spark 7014 --python 7012 --jdbc 7011 --shell -1 --pgx 7022 --external

- Navigate to the <COMPLIANCE_STUDIO_INSTALLATION_PATH>/deployed/mmg-home/mmgstudio/bin directory.

- Open the config.sh file and update the following parameters:
  - MMG_SPARK_ENABLED=true
  - SPARK_HOME=<COMPLIANCE_STUDIO_INSTALLATION_PATH>/deployed/mmg-home/mmg-studio/interpreter-server/spark-interpreter-<version>/extralibs/spark-<version>-bin-hadoop<version>
  - HADOOP_HOME=##HADOOP_HOME##

    Note: Retain the placeholder as it is.
  - SPARK_MASTER=local
  - SPARK_DEPLOY_MODE=

    Note: Retain the SPARK_DEPLOY_MODE as blank.
  - DATASTUDIO_SPARK_INTERPRETER_PORT=7014
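The parameter updates above can also be applied with a script. The following is a minimal sketch that assumes config.sh holds each parameter as a plain `KEY=VALUE` line starting in the first column; that layout is an assumption, and `set_param` is a hypothetical helper. It prints the updated file to standard output and returns a non-zero status if the key was not found, so the caller should check the status before using the output.

```shell
# set_param FILE KEY VALUE
# Prints FILE with every line that starts with "KEY=" replaced by KEY=VALUE.
# Returns non-zero if no such line exists. Hypothetical helper; assumes
# plain KEY=VALUE lines, which may not match the real config.sh layout.
set_param() (
    file="$1"
    KEY="$2" VALUE="$3" awk '
        index($0, ENVIRON["KEY"] "=") == 1 {
            print ENVIRON["KEY"] "=" ENVIRON["VALUE"]
            found = 1
            next
        }
        { print }
        END { exit !found }
    ' "$file"
)

# Example (an empty VALUE leaves the parameter blank):
# set_param config.sh SPARK_MASTER local > config.sh.new
# set_param config.sh SPARK_DEPLOY_MODE "" > config.sh.new
```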

- Navigate to the <COMPLIANCE_STUDIO_INSTALLATION_PATH>/deployed/mmg-home/bin directory.

- Open the config.sh file and update the following parameters:
  - MMG_SPARK_ENABLED=true

    Note: By default, it is set to false. You can configure the following parameters only when MMG_SPARK_ENABLED is set to true.
  - SPARK_HOME=<COMPLIANCE_STUDIO_INSTALLATION_PATH>/deployed/mmg-home/mmg-studio/interpreter-server/spark-interpreter-<version>/extralibs/spark-<version>-bin-hadoop<version>
  - HADOOP_HOME=##HADOOP_HOME##

    Note: Retain the placeholder as it is.
  - SPARK_MASTER=local
  - SPARK_DEPLOY_MODE=

    Note: Retain the SPARK_DEPLOY_MODE as blank.
  - DATASTUDIO_SPARK_INTERPRETER_PORT=7014
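Since the same values are set in two config.sh files, a quick consistency check can help catch a missed edit. The sketch below is illustrative: `check_param` and `check_local_mode` are hypothetical helpers, and they assume each parameter appears as an exact `KEY=VALUE` line with no surrounding spaces or quotes.

```shell
# check_param FILE KEY EXPECTED
# Succeeds only if FILE contains the exact line KEY=EXPECTED.
check_param() (
    grep -Fqx -- "$2=$3" "$1"
)

# check_local_mode FILE
# Checks the local-mode values listed above and reports each mismatch.
# Hypothetical helper; the exact-line format is an assumption.
check_local_mode() (
    file="$1"
    status=0
    for pair in MMG_SPARK_ENABLED=true SPARK_MASTER=local \
                SPARK_DEPLOY_MODE= DATASTUDIO_SPARK_INTERPRETER_PORT=7014; do
        key=${pair%%=*}     # text before the first "="
        value=${pair#*=}    # text after the first "=" (may be empty)
        if ! check_param "$file" "$key" "$value"; then
            echo "mismatch in $file: expected $key=$value" >&2
            status=1
        fi
    done
    exit "$status"
)

# Example: run once for each of the two config.sh files.
# check_local_mode config.sh
```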

- Navigate to the <COMPLIANCE_STUDIO_INSTALLATION_PATH>/deployed/mmg-home/mmgstudio/server/builtin/interpreters/spark.json file.

- Navigate to line 169 and update the port value to 7014.

- Update the default value to local for spark.master and to blank for spark.submit.deployMode. For example:

      "spark.master": {
        "envName": "MASTER",
        "propertyName": "spark.master",
        "defaultValue": "local",
        "description": "Spark master uri. ex) spark://masterhost:7077",
        "type": "string"
      },
      "spark.submit.deployMode": {
        "envName": null,
        "propertyName": "spark.submit.deployMode",
        "defaultValue": "",
        "description": "The deploy mode of Spark driver program, either 'client' or 'cluster'",
        "type": "string"
      },

- Navigate to the <COMPLIANCE_STUDIO_INSTALLATION_PATH>/bin directory.

- Restart Compliance Studio using the following command:

      ./compliance-studio.sh –restart

- Verify that the spark-interpreter has started using the following command:

      netstat -nltp | grep 7014
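A plain `grep 7014` on the netstat output also matches the number anywhere else on a line, for example in a PID. A stricter check, sketched below under the assumption of the usual Linux `netstat -nltp` column layout (local address in the fourth column), compares only the port of listening sockets. `port_is_listening` is a hypothetical helper.

```shell
# port_is_listening PORT
# Reads `netstat -nltp` output on stdin and succeeds if some LISTEN socket
# has PORT as the port of its local address (column 4).
# Hypothetical helper; assumes the Linux net-tools column layout.
port_is_listening() (
    PORT="$1" awk '
        /LISTEN/ {
            # The port is the text after the last ":" in the local address,
            # which also handles IPv6 forms such as :::7014.
            n = split($4, parts, ":")
            if (parts[n] == ENVIRON["PORT"]) found = 1
        }
        END { exit !found }
    '
)

# Example:
# netstat -nltp 2>/dev/null | port_is_listening 7014 \
#     && echo "spark-interpreter is listening on 7014"
```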