3.4.1 Overview/Design

The Common Staging Area Model (CSA) represents the point of entry of data into the OFSDF. The CSA provides a simplified, unified data sourcing area for inputs required by analytical applications and engines. It consists of over 900 tables and nearly 9000 columns organized into distinct subjects. The salient features of the CSA are as follows:

- Mapping to Analytical Use Cases: Since the primary purpose of the OFSDF is to be a

data repository supporting analytics, each database object in the OFSDF physical

data model is necessarily mapped to a corresponding analytical use case.

These mappings are captured in the data model, in the form of additional metadata called User- defined Properties (UDPs), and can be leveraged to reduce the scope of data gathering efforts by focusing on clearly-defined end use cases such as BASEL II, Market Risk Analytics, ALM and others.

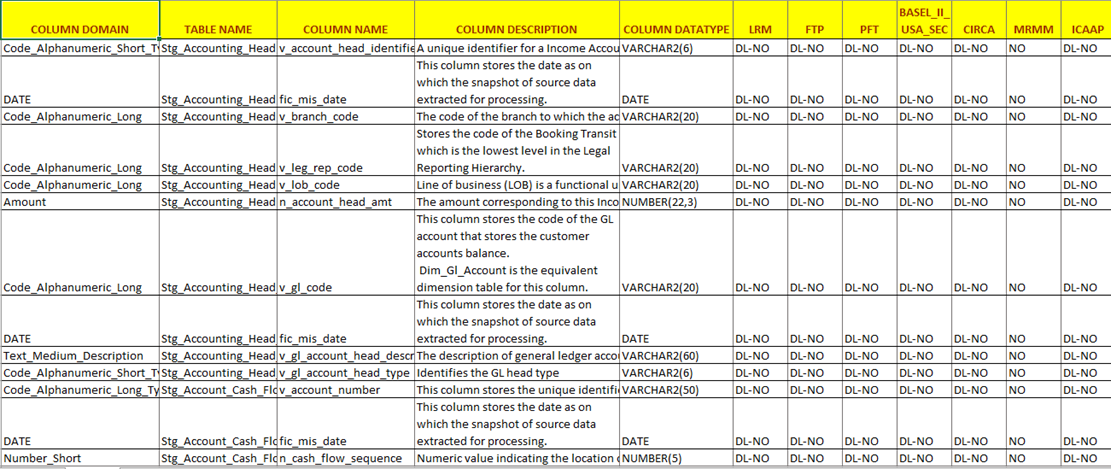

These mappings can readily be extracted into a Download Specification, which lists the data demands for a specific analytical use case. An example is shown below:

Figure 3-2 Download Specifications sample

- Schema Design: The data model of the Common Staging Area is designed to facilitate

loading efficiency. This means that it is denormalized.

The primary focus of the staging area is to efficiently deliver data from operational systems such as Core Banking, Trading and Wealth Management to multiple analytical applications that process this data.

Typically, this data is extracted from source systems and loaded into OFSDF directly, or alternatively into a pre-defined file-based operational image area from which it is subsequently loaded into the OFSDF schema. In a large bank, it is not unusual to have 10s to 100s of millions of accounts and transactions.

Standard ETL (Extract, Transform, Load) approaches can fail to address this requirement adequately because of the complexity imposed by the target schema. If the target schema is increasingly normalized, then the ETL into this schema is correspondingly more complex, requiring careful load ordering to prevent integrity-related load failures, as well as integrity validation prior to loading. Such complex ETL processing is time-consuming and is prone to failure.

To address this, the CSA is designed to support a simplified loading process. De-normalized tables allow efficient data loading, and subsequent transformations can be done to verify data integrity through a series of data quality checks. This represents an 'ELT (Extract Load Transform)' approach to data sourcing, which is far more suited for an analytical data repository.

- Application-managed Referential Integrity (RI): In conjunction with the database

design of the staging schema, a key feature is the management of Referential

Integrity primarily in the application tier, rather than within the database. Rather

than imposing foreign key relationships in the database, which could cause complex

loading order dependencies, relationships between staging tables are managed by the

Data Quality (DQ) framework, a toolkit within the Analytical Application

Infrastructure that captures the relevant data quality checks for a specific table

in the staging model. These checks include:

- Value checks (Nulls, Value ranges, business checks on numeric fields)

- Referential Integrity checks (which are otherwise implemented as foreign key

constraints)

Note:

This is also why the ER model of the staging area in erwin does not contain any relationships – the staging area is a physical data model, which is deployed using the Analytical Application Infrastructure, which manages it.

In summary – the design of the staging area data model is to allow efficient data loading for analytics. It thus has crucial differences from a general-purpose repository of operational/transactional data across a bank.