Frequently Asked Questions

- Why does my console show an unsuccessful message during wallet creation?

Please check if you have run the following commands correctly. For more information on wallet creation, see Setup Password Stores with Oracle Wallet.

mkstore -wrl <wallet_location> -create //creates a wallet in the specified location.

mkstore -wrl <wallet_location> -createCredential <alias-name> <database-user-name> //creates an alias in the Studio Schema.

mkstore -wrl <wallet_location> -createCredential <alias-name> <database-user-name> //creates an alias in the Atomic Schema.

mkstore -wrl <wallet_location> -createCredential <alias-name> <database-user-name> //creates an alias in the configuration schema.

If your issue is still not resolved, contact My Oracle Support (MOS).

- Where can I find my created wallet?

Your wallet will be in the directory you have set as your wallet location.

If your issue is still not resolved, contact My Oracle Support (MOS).

- When should I create a Database link, and how do I do it?

Create a Database link to connect the Atomic and Configuration Database Schemas to the Studio Database Schema if the databases are different. You must create the link in the Studio Database.

In the following example, a link has been created from the Configuration Schema to the Atomic Schema by running the following script:

create public database link <studio database link> connect to <Config Schema> identified by password using '(DESCRIPTION = (ADDRESS_LIST = (ADDRESS = (PROTOCOL = TCP)(HOST = <host name>)(PORT = <port number>))) (CONNECT_DATA = (SERVICE_NAME = <service name>)))';

Config Schema: <Config Schema>/password '(DESCRIPTION = (ADDRESS_LIST = (ADDRESS = (PROTOCOL = TCP)(HOST = <host name>)(PORT = <port number>))) (CONNECT_DATA = (SERVICE_NAME = <service name>)))';

After running the script, run the FCDM Connector and ICIJ Connector jobs.

- Why does my installed studio setup not have any notebooks?

Some default notebooks are ready to use when you install Compliance Studio. If you do not see any notebooks when you log in to the application, you may not be assigned any roles. Check the <COMPLIANCE_STUDIO_INSTALLATION_PATH>/deployed/logs directory to see if you have been assigned any roles, and if not, contact your Administrator. If your issue is still not resolved, contact My Oracle Support (MOS).

- What can I do if the Schema Creation fails?

If the Atomic Schema creation fails, log in to the BD and ECM Atomic Schemas and run the following query:

select * from fcc_orahive_datatypemapping;

The fcc_orahive_datatypemapping table must not have duplicate data types.

If the Studio schema creation fails, log in as a Studio user and run the following query:

select * from fcc_datastudio_schemaobjects

Run the following query to replace all Y values with '':

update fcc_datastudio_schemaobjects set SCHEMA_OBJ_GENERATED=''

After the schema creation is successful, the value of the SCHEMA_OBJ_GENERATED attribute changes to Y.

You can also check for errors in the application log file in the <COMPLIANCE_STUDIO_INSTALLATION_PATH>/deployed/logs directory. If your issue is still not resolved, contact My Oracle Support (MOS).

- What can I do if the Import_training_model batch execution fails?

The Batch Execution Status always displays success, whether the batch actually succeeded or failed.

To find out whether the batch failed, check for errors in the application log file in the <COMPLIANCE_STUDIO_INSTALLATION_PATH>/deployed/logs directory. Fix the failure according to the log details and run the same batch again.

- Why is the Sqoop job not successful?

The Sqoop job may fail if some of the applicable values are null or if the service name or SID value is not provided. Do one of the following:

- Check if there are any null values for the applicable configurations in the config.sh file and the FCC_DATASTUDIO_CONFIG table. If there are any null values, add the required value.

- Check for any errors in the application log file in the <COMPLIANCE_STUDIO_INSTALLATION_PATH>/deployed/logs directory. If your issue is still not resolved, contact My Oracle Support (MOS).
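The null-value check on config.sh can be partly automated. The following is a minimal sketch, assuming that config.sh uses plain shell KEY=VALUE assignments (optionally prefixed with export). The helper name find_empty_settings and the variable names in the sample are hypothetical and are not part of Compliance Studio. The sketch does not inspect the FCC_DATASTUDIO_CONFIG table.

```python
import re

# Matches lines such as `export KEY=value` or `KEY="value"`.
ASSIGNMENT = re.compile(r'^\s*(?:export\s+)?([A-Za-z_][A-Za-z0-9_]*)=(.*)$')


def find_empty_settings(text):
    """Return the names of variables assigned an empty or 'null' value."""
    empty = []
    for line in text.splitlines():
        if line.lstrip().startswith("#"):
            continue  # skip comment lines
        match = ASSIGNMENT.match(line)
        if not match:
            continue
        name, value = match.groups()
        value = value.strip().strip('"').strip("'")
        if value == "" or value.lower() == "null":
            empty.append(name)
    return empty


# Hypothetical sample content; replace with the text of your own config.sh.
sample = 'export DB_SERVICE_NAME=\nDB_HOST="dbhost"\n# DB_SID=\nDB_PORT=null\n'
print(find_empty_settings(sample))
```

To run it against a real file, read the file contents with open(path).read() and pass the text to find_empty_settings. Lines with trailing inline comments are not handled by this sketch.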

- Why am I getting the following error when I run the Sqoop job?

Error: Could not find or load main class com.oracle.ofss.fccm.studio.batchclient.client.BatchExecute

Set the FIC_DB_HOME path in the <COMPLIANCE_STUDIO_INSTALLATION_PATH>/deployed/ficdb directory. You can also check for any errors in the application log file in the <COMPLIANCE_STUDIO_INSTALLATION_PATH>/deployed/logs directory.

- Why is the PGX server not starting even though the graph service is up and running?

Grant execution rights to the PGX folder to start the PGX server.

- Why is the PGX Server not starting?

The PGX server starts only after the FCDM tables are created after the FCDM Connector Job is run. Check if all FCDM tables are created and then start the PGX Server. You can also check for any errors in the application log file in the <COMPLIANCE_STUDIO_INSTALLATION_PATH>/deployed/logs directory. If your issue is still not resolved, contact My Oracle Support (MOS).

- Why is the ICIJ Connector job failing?

This can happen because of a missing CSV file path in the FCC_STUDIO_ETL_FILES table. Add the CSV file path. You can also check for any errors in the application log file in the <COMPLIANCE_STUDIO_INSTALLATION_PATH>/deployed/logs directory. If your issue is still not resolved, contact My Oracle Support (MOS).

- What should I do if the following error occurs while selecting edges in the Manual Decision UI?

java.lang.IllegalStateException: Unable to create PgxSessionWrapper
    at oracle.datastudio.interpreter.pgx.CombinedPgxDriver.getOrCreateSession(CombinedPgxDriver.java:147)
    at oracle.pgx.graphviz.driver.PgxDriver.getGraph(PgxDriver.java:334)
    at oracle.pgx.graphviz.library.QueryEnhancer.createEnhancer(QueryEnhancer.java:223)
    at oracle.pgx.graphviz.library.QueryEnhancer.createEnhancer(QueryEnhancer.java:209)
    at oracle.pgx.graphviz.library.QueryEnhancer.query(QueryEnhancer.java:150)
    at oracle.pgx.graphviz.library.QueryEnhancer.execute(QueryEnhancer.java:136)
    at oracle.pgx.graphviz.interpreter.PgqlInterpreter.interpret(PgqlInterpreter.java:131)
    at oracle.datastudio.interpreter.pgx.PgxInterpreter.interpret(PgxInterpreter.java:120)
    at org.apache.zeppelin.interpreter.LazyOpenInterpreter.interpret(LazyOpenInterpreter.java:103)
    at org.apache.zeppelin.interpreter.remote.RemoteInterpreterServer$InterpretJob.jobRun(RemoteInterpreterServer.java:632)
    at org.apache.zeppelin.scheduler.Job.run(Job.java:188)
    at org.apache.zeppelin.scheduler.FIFOScheduler$1.run(FIFOScheduler.java:140)
    at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:515)
    at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)
    at java.base/java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:304)
    at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)
    at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)
    at java.base/java.lang.Thread.run(Thread.java:834)
Caused by: java.util.concurrent.ExecutionException: oracle.pgx.common.auth.AuthorizationException: PgxUser(FCCMDSADMIN) does not own session 6007f00a-8305-4576-9a56-9fa0f061586f or the session does not exist code: PGX-ERROR-CQAZPV67UM4H
    at java.base/java.util.concurrent.CompletableFuture.reportGet(CompletableFuture.java:395)
    at java.base/java.util.concurrent.CompletableFuture.get(CompletableFuture.java:1999)
    at oracle.pgx.api.PgxFuture.get(PgxFuture.java:99)
    at oracle.pgx.api.ServerInstance.getSession(ServerInstance.java:670)
    at oracle.datastudio.interpreter.pgx.CombinedPgxDriver.getOrCreateSession(CombinedPgxDriver.java:145)
    ... 17 more
Caused by: oracle.pgx.common.auth.AuthorizationException: PgxUser(FCCMDSADMIN) does not own session 6007f00a-8305-4576-9a56-9fa0f061586f or the session does not exist code: PGX-ERROR-CQAZPV67UM4H
    at oracle.pgx.common.marshalers.ExceptionMarshaler.toUnserializedException(ExceptionMarshaler.java:107)
    at oracle.pgx.common.marshalers.ExceptionMarshaler.unmarshal(ExceptionMarshaler.java:123)
    at oracle.pgx.client.RemoteUtils.parseExceptionalResponse(RemoteUtils.java:130)
    at oracle.pgx.client.HttpRequestExecutor.executeRequest(HttpRequestExecutor.java:198)
    at oracle.pgx.client.HttpRequestExecutor.get(HttpRequestExecutor.java:165)
    at oracle.pgx.client.RemoteControlImpl$10.request(RemoteControlImpl.java:313)
    at oracle.pgx.client.RemoteControlImpl$ControlRequest.request(RemoteControlImpl.java:119)
    at oracle.pgx.client.RemoteControlImpl$ControlRequest.request(RemoteControlImpl.java:110)
    at oracle.pgx.client.AbstractAsyncRequest.execute(AbstractAsyncRequest.java:47)
    at oracle.pgx.client.RemoteControlImpl.request(RemoteControlImpl.java:107)
    at oracle.pgx.client.RemoteControlImpl.getSessionInfo(RemoteControlImpl.java:296)
    at oracle.pgx.api.ServerInstance.lambda$getSessionInfoAsync$14(ServerInstance.java:490)
    at java.base/java.util.concurrent.CompletableFuture.uniComposeStage(CompletableFuture.java:1106)
    at java.base/java.util.concurrent.CompletableFuture.thenCompose(CompletableFuture.java:2235)
    at oracle.pgx.api.PgxFuture.thenCompose(PgxFuture.java:158)

Perform the following steps as a workaround:

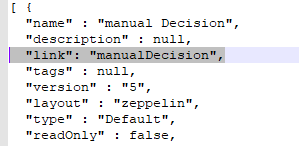

- Export the "Manual Decision" notebook.

- Add the link parameter just below Description. For example: "link": "manualDecision",

Figure 10-1 Manual Decision

- Truncate the table fcc_er_paragraph_manual in the Studio Schema.

- Import the modified notebook again.

- Data extraction is truncated to the default limit (approximately 197 records) in the Python paragraph widget output in MMG. Setting ZEPPELIN_INTERPRETER_OUTPUT_LIMIT in the Python Interpreter

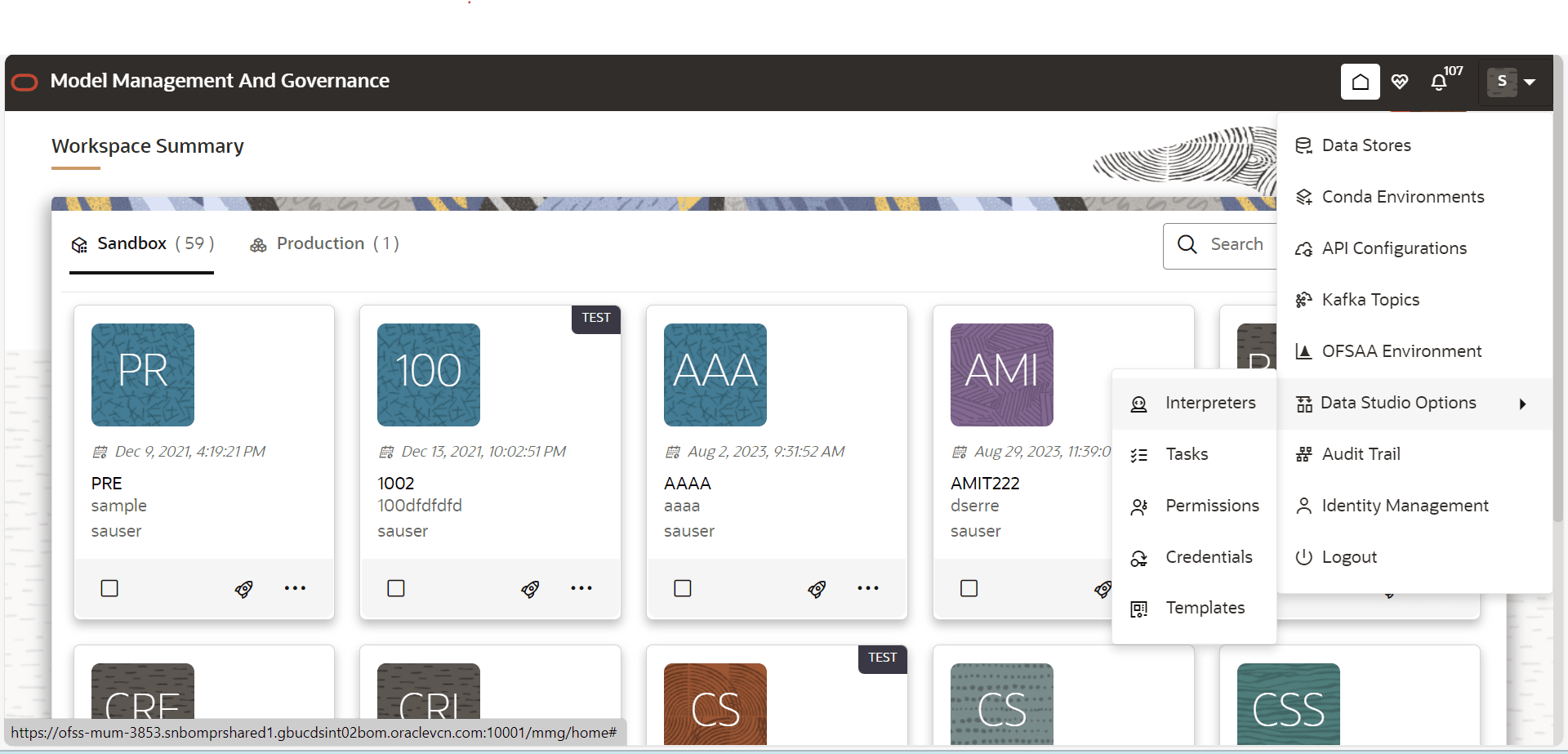

- From UI: Using Wizard screen

Go to the Interpreters screen in MMG-Studio from the Datastudio Options tab.

Figure 10-2 Datastudio Options tab

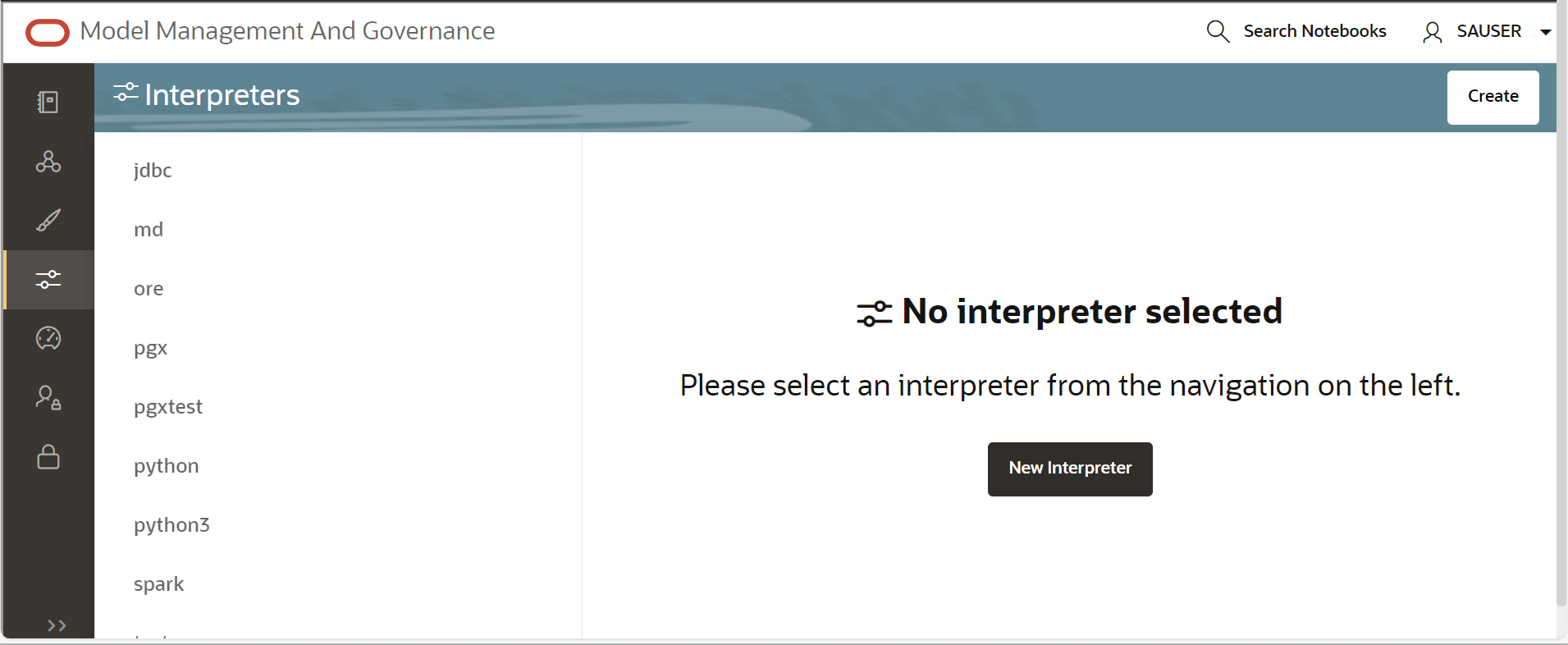

- On the Interpreters screen, select the Python Interpreter for which you want to configure zeppelin.interpreter.output.limit.

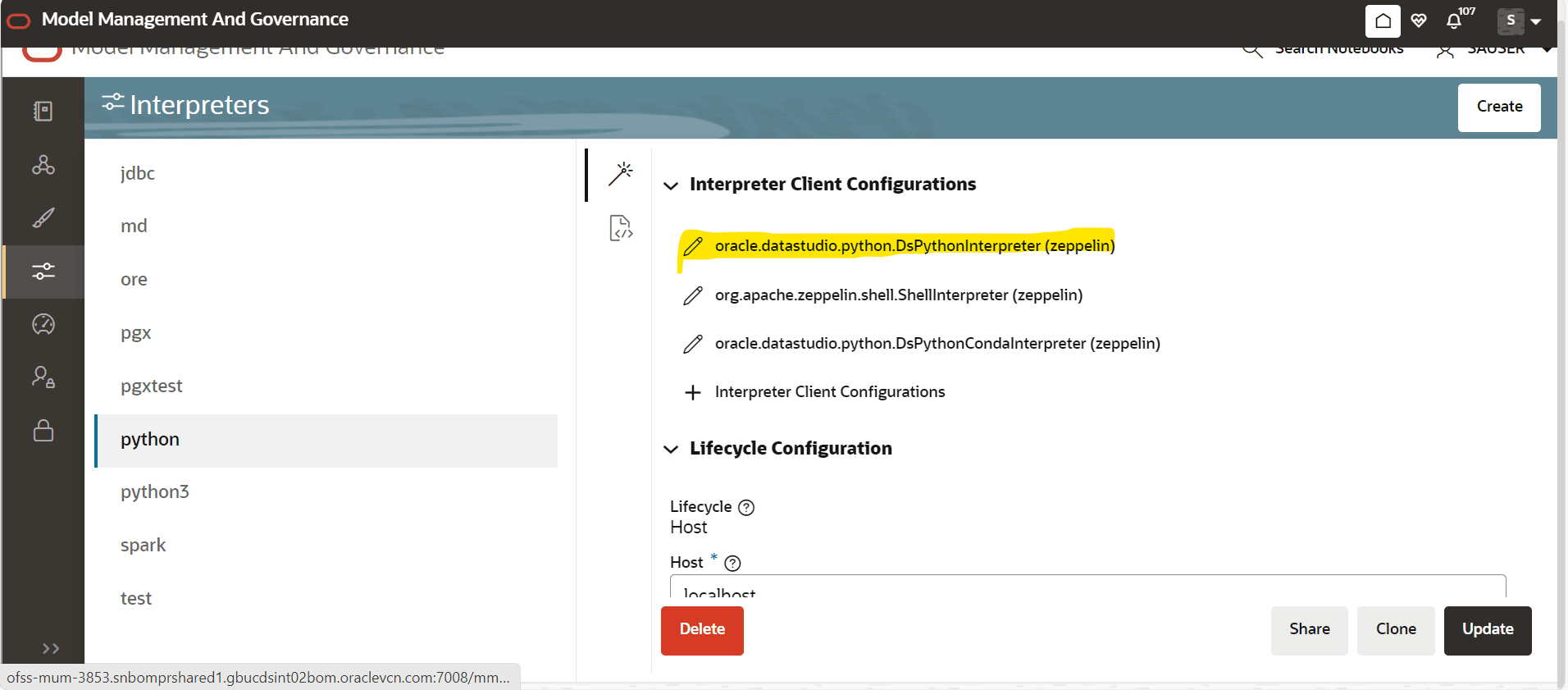

Figure 10-3 Interpreter screen

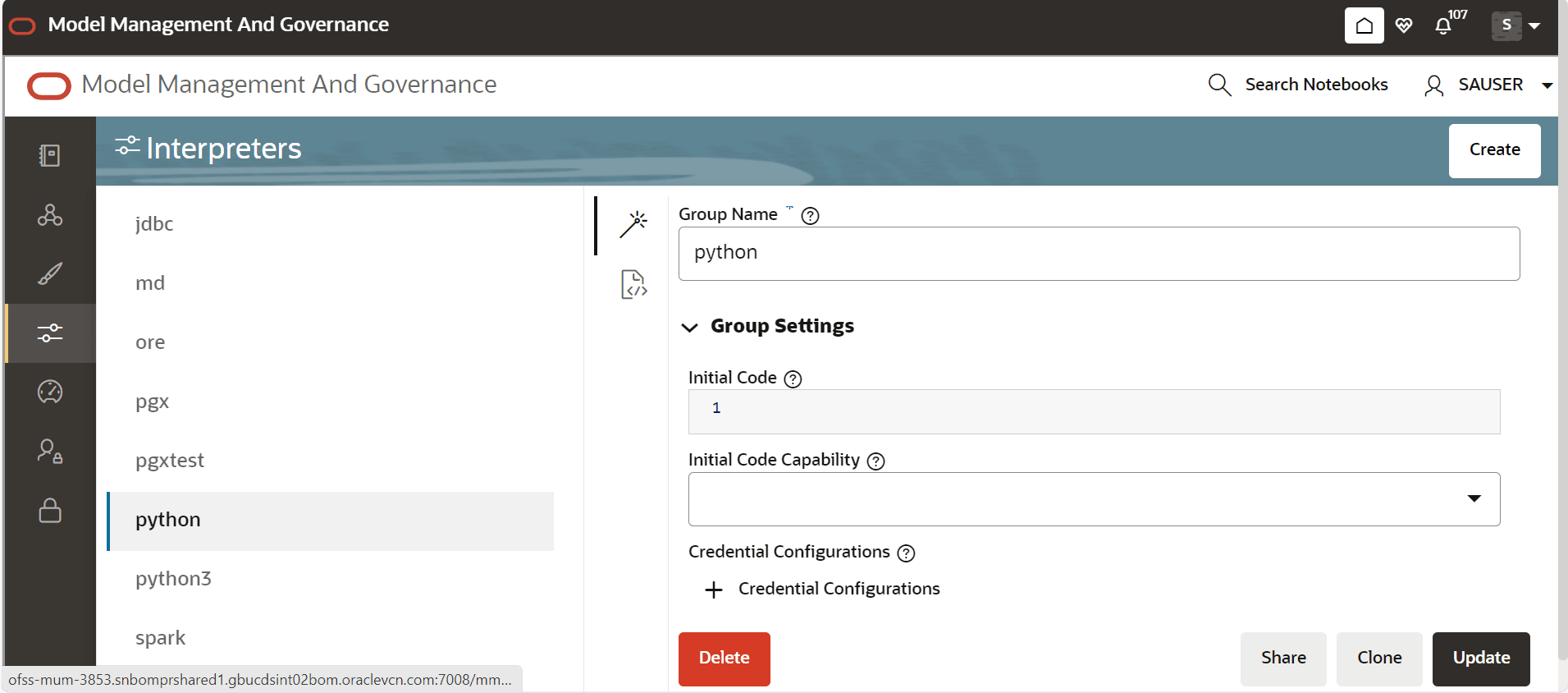

- Select python from the options on the left-hand side.

Figure 10-4 Python Interpreter

- Scroll down on the right-hand side and click oracle.datastudio.python.DsPythonInterpreter under Interpreter Client Configurations. A popup opens.

Figure 10-5 Interpreter Client Configurations

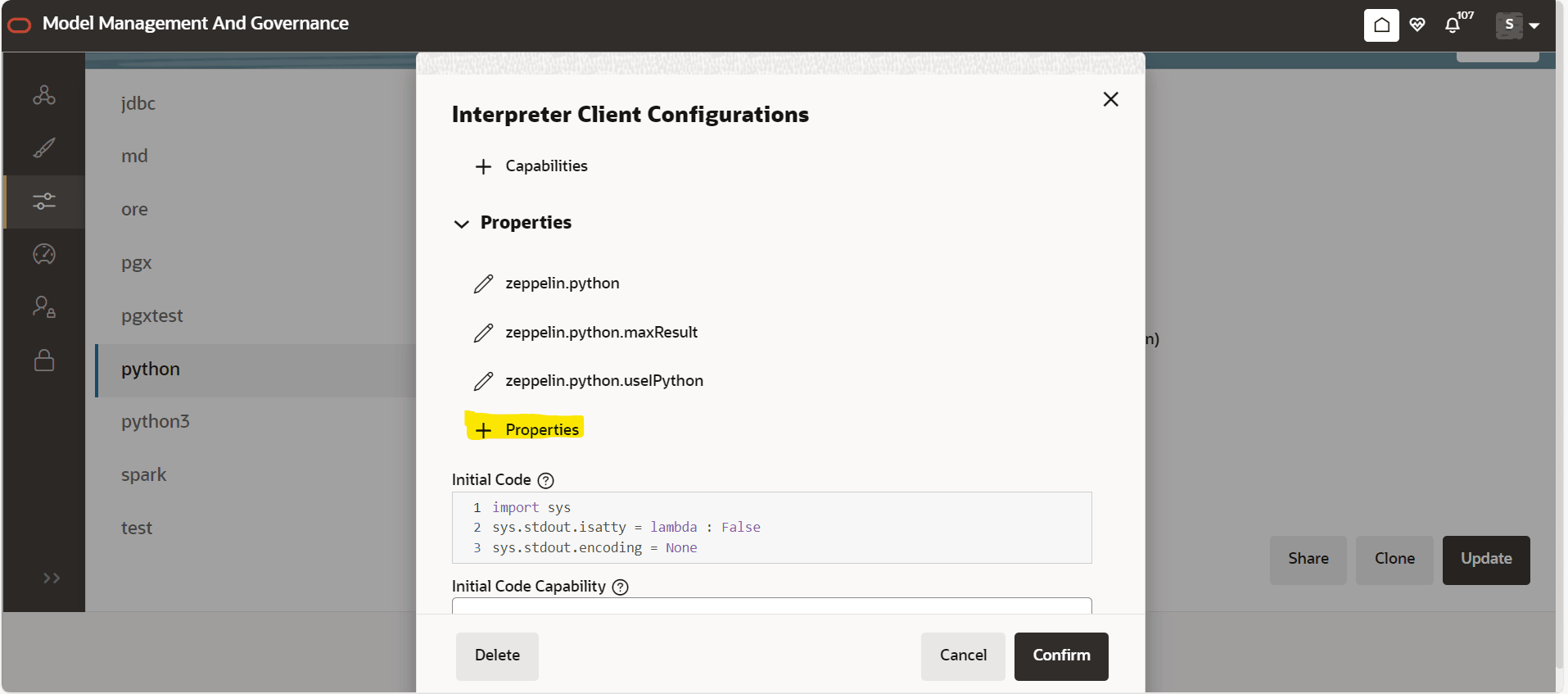

- In the popup, scroll down and click + Properties under Properties, as shown:

Figure 10-6 Properties screen

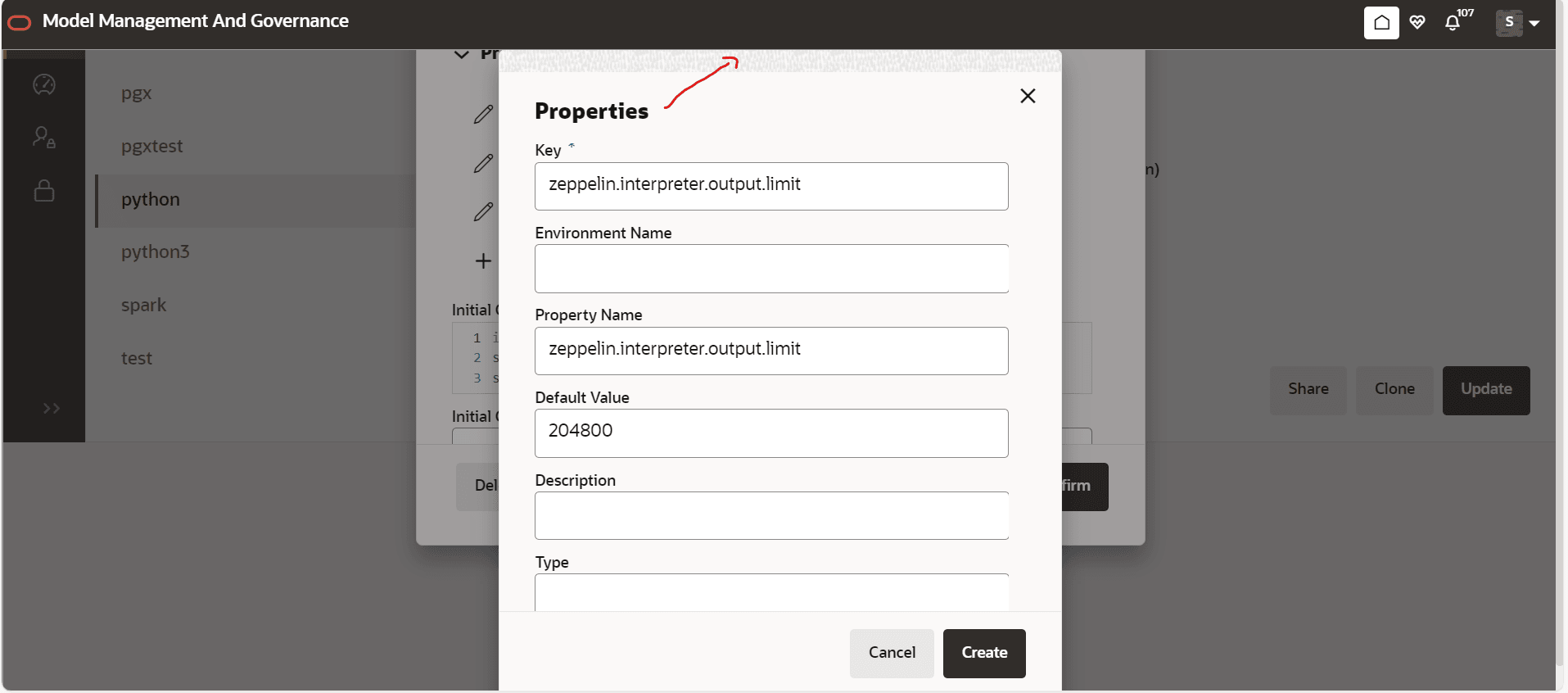

- Another popup opens. Fill in the options as shown and set the default value according to your needs. If you cannot see the Create and Cancel buttons, click the part of the popup indicated by the red arrow. If zeppelin.interpreter.output.limit is not set, its default value is 102400 (in bytes).

Figure 10-7 Popup box

Note:

Increasing the value from the default of 102400 to a larger value slows down the rendering of Python paragraph output.

- After filling in the fields, click Create (zeppelin.interpreter.output.limit then appears under the Properties section), and then click Confirm. If you cannot see the Confirm button, either click the same shaded area of the popup highlighted in the above image or zoom out in the browser. Then click Update in the lower-right corner of the screen.

- After completing all the above steps, restart MMG-Studio for the changes to take effect.

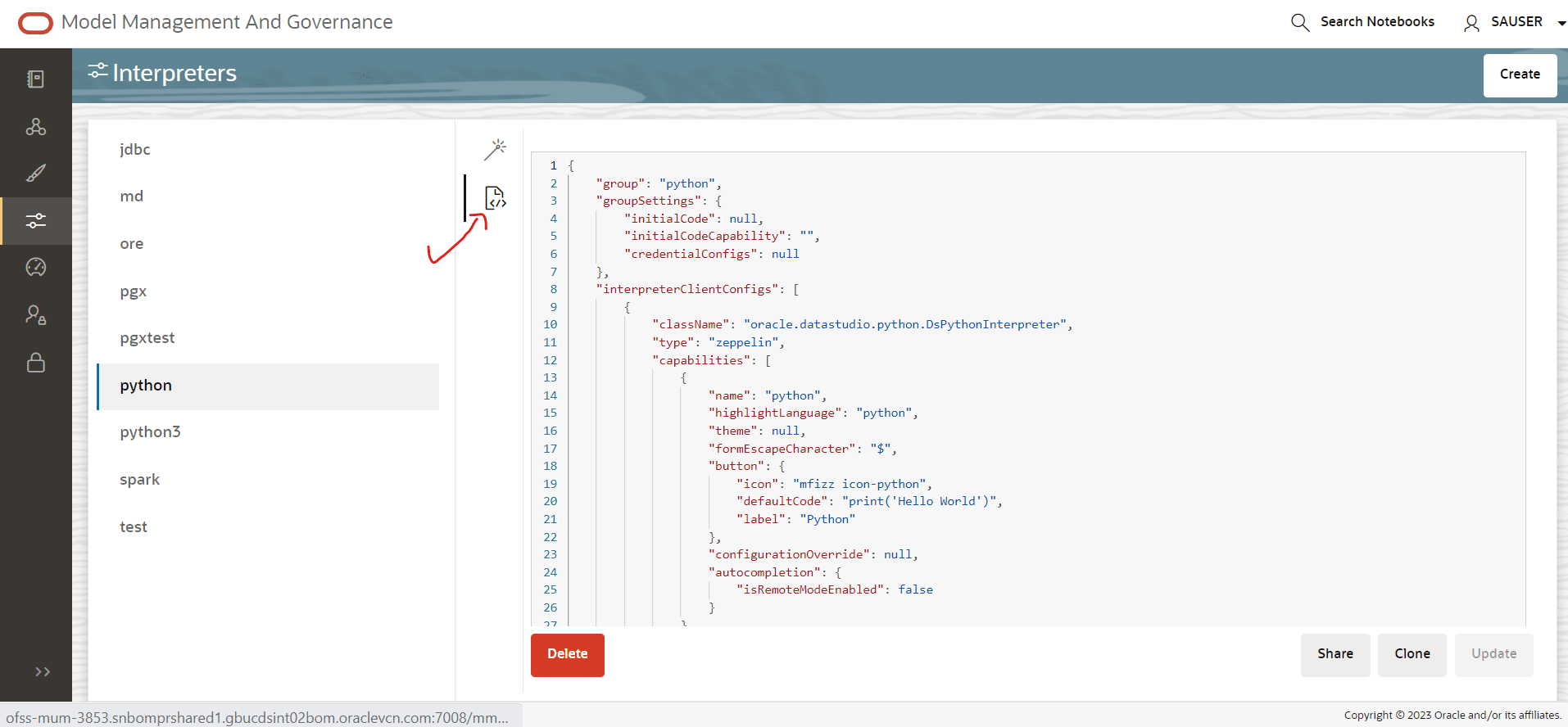

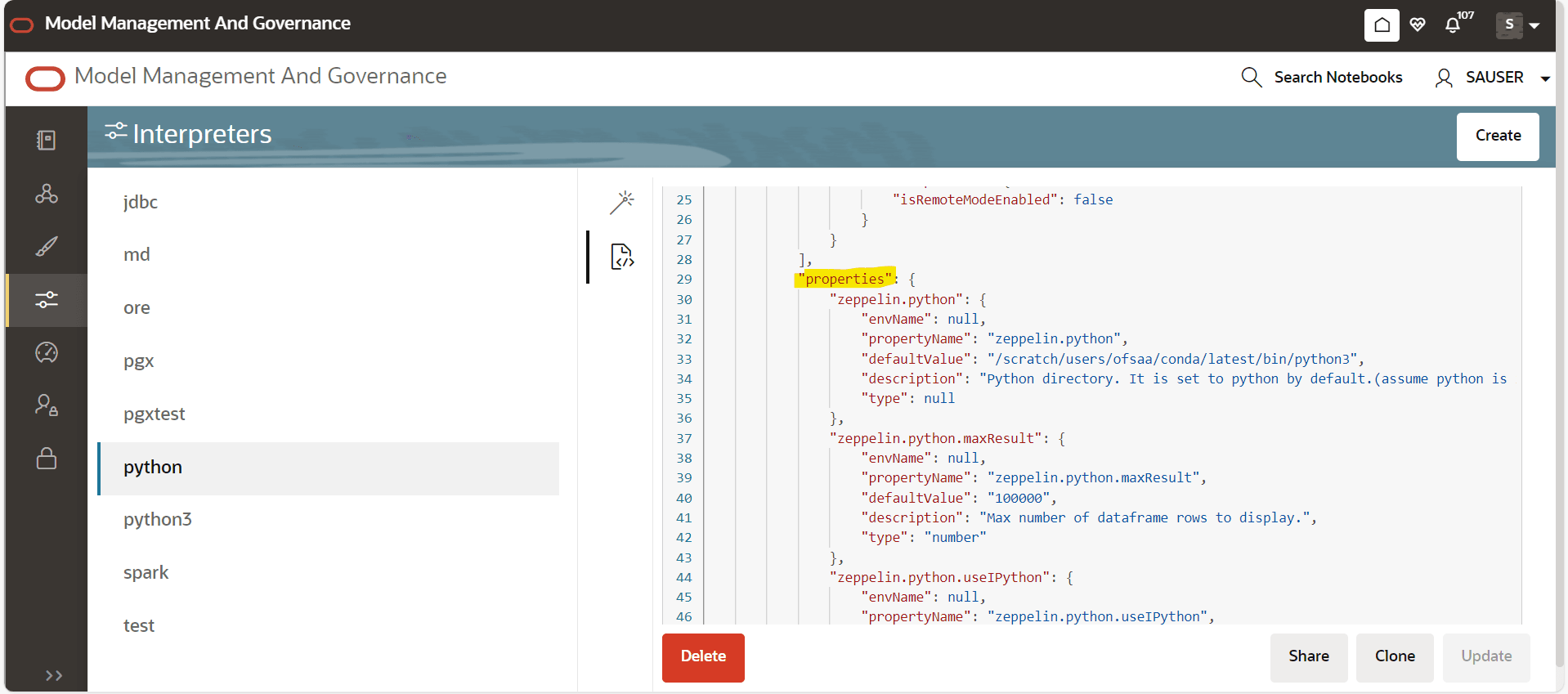

- Using JSON screen

- Follow steps i, ii, and iii above, then click the icon indicated by the red arrow. The JSON config view opens.

Figure 10-8 JSON Config View

- Scroll down to interpreterClientConfigs and find the entry with className oracle.datastudio.python.DsPythonInterpreter. It contains a properties section with a number of Zeppelin configurations.

Figure 10-9 Interpreter Client Config

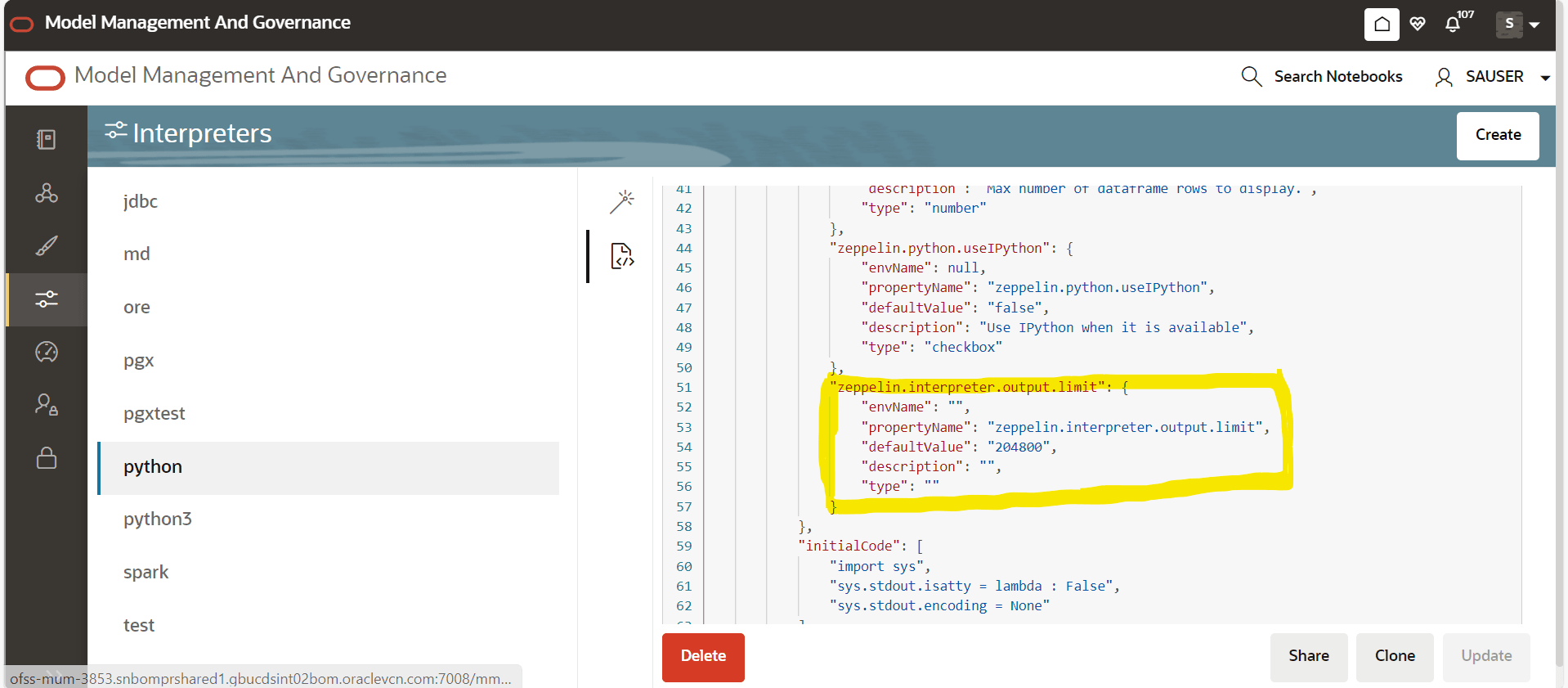

- After the last entry in properties, add zeppelin.interpreter.output.limit as shown in the following image:

Figure 10-10 Properties screen

- After you make the change, the Update button in the bottom-right corner is enabled. Click it. The message "python interpreter updated" is displayed.

- Restart the MMG-Studio service for the changes to take effect.


- From filesystem: (Datastudio version 23.4.x onwards)

- Go to the Python Interpreter option as described in the From UI: Using Wizard screen option above. If you have already run the MMG services, the Python interpreter is listed there; delete it. If you are running the MMG Application for the first time on a fresh schema, skip this step.

- After deleting the Python Interpreter, or if the services have not been started yet, go to mmg-home/mmg-studio/server/builtin/interpreters on the filesystem and open python.json in a text editor.

- Scroll down to interpreterClientConfigs and find the entry with className oracle.datastudio.python.DsPythonInterpreter. It contains a properties section with a number of Zeppelin configurations. After the last entry in properties, add zeppelin.interpreter.output.limit as shown in the last step of the Using JSON screen option above. Save python.json with the desired default value.

- Restart or start MMG-Studio for your changes to take effect.
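The filesystem edit described above can also be scripted. The following is a minimal sketch, not the product's own tooling. It assumes that python.json is a JSON object with an interpreterClientConfigs list, that each entry has className and properties keys, and that a property entry is identified by propertyName and defaultValue. The function name set_output_limit is hypothetical, and real property entries may carry additional fields.

```python
import json

PYTHON_CLASS = "oracle.datastudio.python.DsPythonInterpreter"
LIMIT_PROPERTY = "zeppelin.interpreter.output.limit"


def set_output_limit(config, limit_bytes):
    """Add or update the output-limit property for the Python interpreter client.

    Returns True if a matching client configuration was found.
    """
    found = False
    for client in config.get("interpreterClientConfigs", []):
        if client.get("className") != PYTHON_CLASS:
            continue
        found = True
        properties = client.setdefault("properties", [])
        for prop in properties:
            if prop.get("propertyName") == LIMIT_PROPERTY:
                prop["defaultValue"] = str(limit_bytes)
                break
        else:
            # Assumed entry shape; copy the fields of a neighbouring entry in
            # your python.json if it carries more than these two keys.
            properties.append(
                {"propertyName": LIMIT_PROPERTY, "defaultValue": str(limit_bytes)}
            )
    return found


# In-memory stand-in for python.json (structure is an assumption).
config = {
    "interpreterClientConfigs": [
        {"className": PYTHON_CLASS, "properties": []}
    ]
}
set_output_limit(config, 204800)
print(json.dumps(config, indent=2))
```

To apply it to the real file, load python.json with json.load, call set_output_limit, and write the result back with json.dump. Keep a backup copy of the file before changing it.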

Note:

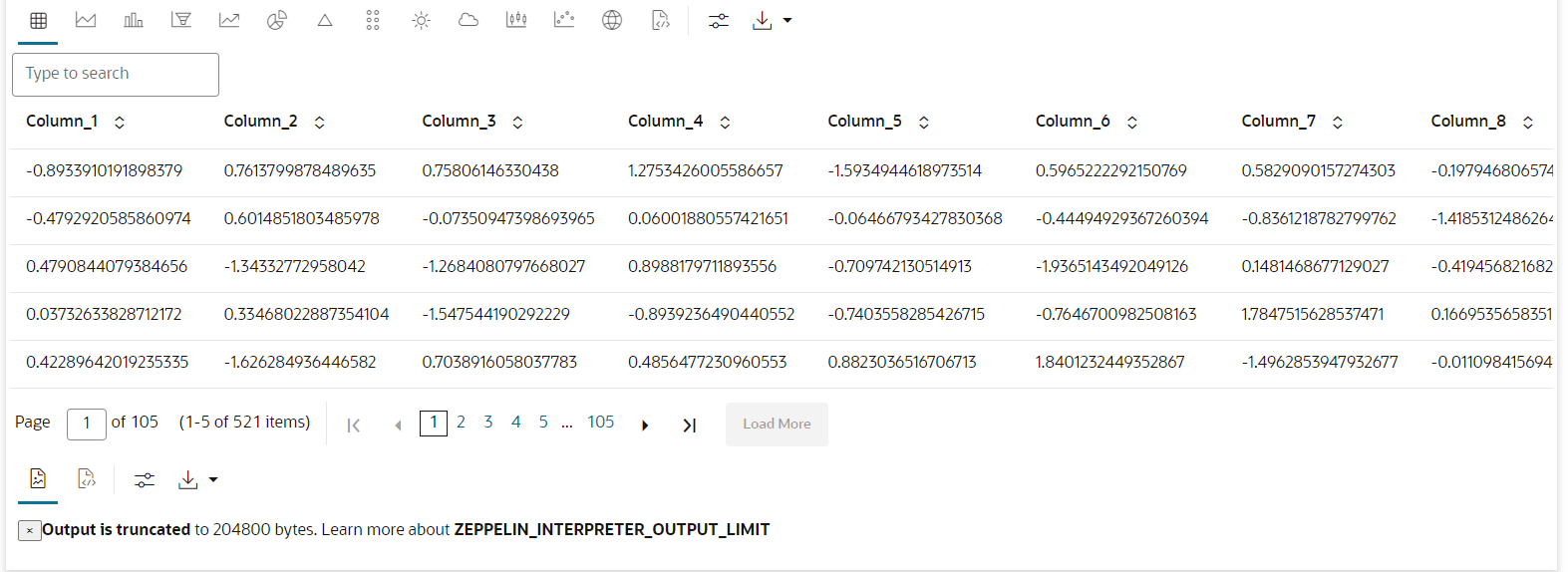

If you have configured the Python environment for MMG-Studio (that is, you have installed pandas and numpy, which are a subset of the libraries MMG requires as prerequisites), you can run the following script in a Python paragraph.

%python

import pandas as pd

import numpy as np

# Create 1000 rows of random data for 20 columns

data = np.random.randn(1000, 20)

# Create column names

columns = [f"Column_{i+1}" for i in range(20)]

# Create DataFrame

df = pd.DataFrame(data, columns=columns)

# Display the DataFrame

z.show(df)

Output in table view

Figure 10-11 Output in table view

If the table content is larger than the value set for zeppelin.interpreter.output.limit, the ZEPPELIN_INTERPRETER_OUTPUT_LIMIT value is displayed as a warning, and you can modify the value accordingly.
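To choose a suitable limit, it can help to estimate how large a table is before displaying it. The following is a rough sketch that assumes the output size is close to a tab-separated text rendering of the table, which is how Apache Zeppelin's table display format is structured. The actual serialization in MMG-Studio may differ, so treat the numbers as an approximation. The helper names are hypothetical, and the sketch uses only the standard library so that it runs without pandas.

```python
import random

DEFAULT_LIMIT_BYTES = 102400  # default zeppelin.interpreter.output.limit


def table_size_bytes(header, rows):
    """Size in bytes of a tab-separated rendering of the table."""
    lines = ["\t".join(header)]
    lines += ["\t".join(str(value) for value in row) for row in rows]
    return len("\n".join(lines).encode("utf-8"))


def rows_that_fit(header, rows, limit_bytes=DEFAULT_LIMIT_BYTES):
    """Count how many leading rows fit under the limit, header included."""
    used = len("\t".join(header).encode("utf-8"))
    count = 0
    for row in rows:
        line = "\n" + "\t".join(str(value) for value in row)
        used += len(line.encode("utf-8"))
        if used > limit_bytes:
            break
        count += 1
    return count


# Same shape as the script above: 1000 rows of random data in 20 columns.
random.seed(0)
header = [f"Column_{i + 1}" for i in range(20)]
rows = [[random.gauss(0, 1) for _ in range(20)] for _ in range(1000)]
print(table_size_bytes(header, rows), rows_that_fit(header, rows))
```

The first number printed is an estimate of the value that zeppelin.interpreter.output.limit would need to reach to show every row; the second is the estimated number of rows visible with the default limit.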


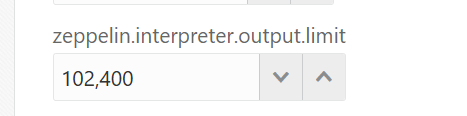

- What should I do when the result set is truncated if the size goes above '102400' bytes?

Perform the following steps:

- Log in to Compliance Studio.

- Navigate to the interpreter property zeppelin.interpreter.output.limit.

Figure 10-12 Zeppelin Interpreter

- Set the value to the required size.

- Restart the Studio Application.

- What should I do if the following KubernetesClientException appears in the load-to-elastic-search.log and matching-service.log files after Compliance Studio installation?

configServicePropertySourceLocator - Could not locate PropertySource: I/O error on GET request for "http://localhost:8888/<Service Name>/default": Connection refused (Connection refused); nested exception is java.net.ConnectException: Connection refused (Connection refused)
20:04:55.686 [ main] WARN .cloud.kubernetes.config.ConfigMapPropertySource - Can't read configMap with name: [<Service Name>] in namespace:[null]. Ignoring.
io.fabric8.kubernetes.client.KubernetesClientException: Operation: [get] for kind: [ConfigMap] with name: [<Service Name>] in namespace: [null] failed.
    at io.fabric8.kubernetes.client.KubernetesClientException.launderThrowable(KubernetesClientException.java:64) ~[kubernetes-client-4.4.1.jar!/:?]
    at io.fabric8.kubernetes.client.KubernetesClientException.launderThrowable(KubernetesClientException.java:72) ~[kubernetes-client-4.4.1.jar!/:?]
    at io.fabric8.kubernetes.client.dsl.base.BaseOperation.getMandatory(BaseOperation.java:229) ~[kubernetes-client-4.4.1.jar!/:?]
    at io.fabric8.kubernetes.client.dsl.base.BaseOperation.get(BaseOperation.java:162) ~[kubernetes-client-4.4.1.jar!/:?]
    at org.springframework.cloud.kubernetes.config.ConfigMapPropertySource.getData(ConfigMapPropertySource.java:96) ~[spring-cloud-kubernetes-config-1.1.3.RELEASE.jar!/:1.1.3.

You can ignore the error when the following message is displayed at the end of the log. If you do not see this message, contact My Oracle Support (MOS) and provide the applicable error code and log:

13:52:57.698 [main] INFO org.apache.catalina.core.StandardService - Starting service [Tomcat]
13:52:57.699 [ main] INFO org.apache.catalina.core.StandardEngine - Starting Servlet engine: [Apache Tomcat/9.0.43]

- What happens if a new sandbox workspace is created?

When a new sandbox workspace is created, the folders of the older workspace are copied into the new workspace by default. Here, folder means the Model Objectives. The Model Objectives are global objects and are visible across workspaces. However, the models created within those objectives are private. This is intentional, because multiple modelers are expected to work on a common objective in their private workspaces.

- I am not able to access any models in the copied folders in the new workspace. Are the folders being copied as empty folders?

Yes, you should not be able to access another workspace's private models. Also, as long as other users are working on the objective and have their models in it, you cannot delete the objectives.

- What should I do when UI pages do not load because of slow network speed?

The default time to load all the modules of an OJET/REDWOOD page is 1 minute. Reload the page to view the UI pages.

- What are the Workspace parameters used in MMG Python Scripts?

The following parameters are used:

- workspace.list_workspaces(): Used to fetch a list of all workspaces. This list is populated in the dropdown menu of datastudio.

- workspace.check_aif(): A method used to check whether AIF is enabled.

- workspace.attach_workspace("SANDBOX123"): A method used to set the workspace.

- workspace.get_workspace(): Used to fetch the selected workspace (for example, SB1)

- get_mmg_studio_service_url(): Used to fetch the base URL (for example, http://whf999yyy:0000/mmg)

- get_user(): Used to fetch current user (for example, mmguser)

- How do I get connections for data access?

You need access to the data to work on it. Each workspace has underlying Data Schemas, and you can create a workspace that uses multiple underlying Data Schemas. You can add or remove Data Schemas as in a multi-select combo box, where, for example, 1, 2, 3, 4, and 5 are the underlying schemas. When you work with models, the notebook can fetch data from all these Data Schemas and create data frames from it, which can be used for model reading or other purposes.

This happens in the sandbox workspace where you build a Notebook. The same Notebook gets promoted to the production workspace, and the production workspace has its own set of underlying Data Schemas. When you build the model by getting connections to the underlying Schemas 1 and 2 and fetching data from them, the Notebook must continue to work when it is promoted to production or the deployment is cloned.

Therefore, when the Notebook runs, it should not fetch data from a specific workspace's schemas, because it must work on Schemas 1 and 2 of whichever workspace it runs in.

To avoid this issue, you can use the connection feature to connect to a schema. This is a wrapper function in which you can specify which workspace you are connecting to.

You can enter the workspace details to get the connection, which then starts fetching the data.

When you promote the Notebook to production, the script runs without connecting to a specific workspace. The connection feature uses overloaded methods that determine how the connection is obtained. A plain get connection call, without any argument, returns the primary connection, that is, the first Data Schema you are using.

The second form takes an ID, which is the name of the Data Source you are using. Alternatively, you can pass the order; for the primary Data Source, this is get connection 1.

In the sandbox, this script looks for Data Schema 1 and creates a connection. The Notebook is then moved to production.

In production, it again looks for the equivalent Data Schema 1 and tries to get a connection.

Therefore, the order in which you select the Data Schemas determines the first, second, and third Data Schema, that is, Primary, Secondary, Tertiary, and so on. You can also pass a number when getting the connection, for example, to get the Secondary Data Schema instead of the Primary Data Schema. When the Notebook runs in the sandbox, it gets the sandbox's Secondary Data Schema. When it runs in production, it fetches the Secondary Data Schema of production.

- What are parameters to establish the Connection for data access?

The following section lists the connection details, such as the Data Sources:

- workspace.get_connection(): fetches the connection object for the Primary Data Source of the workspace. This is equivalent to executing workspace.get_connection(1).

- workspace.get_connection('id'): fetches the connection for the Data Source by name. For example, workspace.get_connection('ws_data_1'), where 'ws_data_1' is one of the underlying Data Sources for the workspace.

- workspace.get_connection(n): fetches the connection for the Data Source by order. For example, workspace.get_connection(2) fetches the connection for the Secondary Data Source.

The following section lists the workspace details. After a workspace is attached, you can list the Data Sources related to it using:

- workspace.list_datasources(): lists the Data Sources related to the attached workspace with default order 1. For example, {'Data Source': [{'name': 'newdatasource1', 'order': '1'}]}

- workspace.list_datasources("SB1"): lists the Data Sources related to the SB1 workspace with default order 1. For example, {'Data Source': [{'name': 'ds1', 'order': '1'}]}

- workspace.list_datasources("SB1", 1): lists the Data Sources related to the SB1 workspace with order 1, as passed in the second argument. For example, {'Data Source': [{'name': 'ds1', 'order': '1'}]}

Note: This is applicable to the Python interpreter and its variants, and not to any other interpreters.
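As an illustration of fetching connections by order, here is a minimal sketch. It relies only on workspace.get_connection(n) as described above. The helper connections_for_orders is hypothetical, and FakeWorkspace is a stand-in that exists only so the sketch runs outside MMG; in a notebook paragraph you would pass the workspace object that MMG provides.

```python
def connections_for_orders(workspace, orders=(1, 2)):
    """Fetch one connection per Data Source order (1 = Primary, 2 = Secondary)."""
    return {order: workspace.get_connection(order) for order in orders}


class FakeWorkspace:
    """Stand-in used only to make this sketch runnable outside MMG."""

    def get_connection(self, key=1):
        # The real method returns a connection object; a label is enough here.
        return f"connection-{key}"


print(connections_for_orders(FakeWorkspace()))
```

Referring to Data Sources by order rather than by name means the notebook does not name a sandbox-specific Data Source, so after promotion the same call resolves to the production workspace's Data Source of the same order.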

- What should I do if the Python installation displays the error message "ModuleNotFoundError: No module named '_lzma'"?

You must install the xz-devel library before installing Python. For more details, see the Install MMG Python Library section.

To install, run the following command:

$ yum install -y xz-devel

- What should I do to reconfigure the DS Studio server port and its interpreters' default ports to available ports?

To reconfigure port numbers:

- Run the command install.sh -u to change the current studio port to the desired port number in the configuration files/tables.

- Edit the startup.sh script of Studio at the location OFS_MMG/mmg-studio/bin/: modify lines 24/25 of OFS_MMG/mmg-studio/bin/startup.sh to specify the interpreter name and port number.

DS version 22.4.3

nohup "$DIR"/datastudio --jdbc -1 --eventjdbc -1 --shell -1 --eventshell -1 --graalvm -1 --eventgraalvm -1 --pgx -1 --eventpgx -1 --external --port 8008 --jdbc 3011 --eventjdbc 3031 --python 3012 --eventpython 3032 --markdown 3009 --eventmarkdown 3029 --spark 3014 --eventspark 3034 &> "$DIR"/nohup.out &

For the PGX Interpreter, change the "${1:-7022}" "${2:-7042}" values to "${1:-3022}" "${2:-3042}" in the OFS_MMG/mmg-studio/interpreter-server/pgx-interpreter-22.4.3/bin/pgx-interpreter file.

DS version 23.3.5

nohup "$DIR"/datastudio --jdbc -1 --shell -1 --external --port 8008 --jdbc 3011 --python 3012 --markdown 3009 --spark 3014 --pgx 3022 &> "$DIR"/nohup.out

For event ports in DS 23.3.5

Set the environment variables DS_EVENT_HANDLER_HOST and DS_EVENT_HANDLER_PORT before launching the interpreters; otherwise, the default values are used. You can set these variables in the startup.sh of the Studio.

Example:

export DS_EVENT_HANDLER_HOST=localhost

export DS_EVENT_HANDLER_PORT=3432

To change the ports configured for events in the Data Studio server, modify the following server configuration:

studio-server:
  thrift-server:
    enabled: true
    port: <desired port -defaulted to 8432>
    mode: TCP

NOTE:


Python Interpreter

Beginning with Data Studio 21.4.0, 6012 is default port on which the REST server for the Python interpreter listens. To overwrite this, set the STUDIO_INTERPRETER_PYTHON_INTERPRETER_REST_SERVER_PORT environment variable.

PGX-Python Interpreter

Beginning with Data Studio 23.1.0, 6022 is the default port on which the REST server for the PGX-Python interpreter listens. To overwrite this, set the STUDIO_INTERPRETER_PGX_PYTHON_INTERPRETER_REST_SERVER_PORT environment variable.

Modify startup.sh to include:

export STUDIO_INTERPRETER_PYTHON_INTERPRETER_REST_SERVER_PORT=3038

export STUDIO_INTERPRETER_PGX_PYTHON_INTERPRETER_REST_SERVER_PORT=3039

This configuration changes the default interpreter ports to new ports.

- Reconfigure the ports mentioned in the interpreter JSON files. The interpreter files are located at OFS_MMG/mmg-studio/server/builtin/interpreters/<interpreter>.json.

- Execute startup.sh and check the studio/interpreter ports.

- Similarly, execute ./datastudio.sh --help from OFS_MMG/mmg-studio/bin/ to see all available options.


The above steps reconfigure the Server/Interpreters to these ports:

| Server/Interpreters | Modified Port |
| --- | --- |
| DS Studio port | 8008 |
| jdbc | 3011 |
| eventjdbc | 3031 |
| python | 3012 |
| eventpython | 3032 |
| markdown | 3009 |
| eventmarkdown | 3029 |
| spark | 3014 |
| eventspark | 3034 |
| pgx | 3022 |
| eventpgx | 3042 |
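Before assigning these ports, it can be useful to confirm that they are actually available on the host. The following is a minimal sketch using only the Python standard library; it is not part of the MMG tooling. The port numbers are taken from the table above, and the helper name port_is_free is hypothetical. The check binds on the loopback address only, so a service bound to a different specific interface would not be detected.

```python
import socket

# Ports taken from the table above.
TARGET_PORTS = {
    "DS Studio": 8008, "jdbc": 3011, "eventjdbc": 3031, "python": 3012,
    "eventpython": 3032, "markdown": 3009, "eventmarkdown": 3029,
    "spark": 3014, "eventspark": 3034, "pgx": 3022, "eventpgx": 3042,
}


def port_is_free(port, host="127.0.0.1"):
    """Return True if a TCP socket can be bound to host:port right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False
        return True


for name, port in TARGET_PORTS.items():
    status = "free" if port_is_free(port) else "IN USE"
    print(f"{name:15s} {port:5d} {status}")
```

Run the check while the Studio and its interpreters are stopped; otherwise, the ports they already hold are reported as in use.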


- Dataset issue with the latest version of the pydantic package (2.18.7)

The pydantic package (2.18.7) is incompatible with MMG functionality. If this version is installed, uninstall it and install pydantic version 1.10.13:

- python3 -m pip uninstall pydantic

- python3 -m pip install pydantic==1.10.13 --user
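To confirm which pydantic version is active after running the commands above, you can query the installed package metadata. This is a small sketch using the standard library importlib.metadata module (Python 3.8 or later); the helper name installed_version is hypothetical.

```python
from importlib import metadata

RECOMMENDED = "1.10.13"  # version named in this FAQ entry


def installed_version(package):
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


found = installed_version("pydantic")
if found is None:
    print("pydantic is not installed")
elif found == RECOMMENDED:
    print("pydantic", found, "matches the recommended version")
else:
    print("pydantic", found, "differs from", RECOMMENDED)
```

Run it with the same python3 interpreter that MMG uses, because each Python environment has its own set of installed packages.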

- Installation of Python Packages from Local Repository

To install the Python dependencies in mmg-8.1.2.6.0.tar.gz from a local repository, use the following command:

python3 -m pip install mmg-8.1.2.6.0.tar.gz --index-url http://artifactory.XYZ.com/artifactory/api/pypi/XYZ-py-local/simple --extra-index-url http://artifactory.XYZ.com/artifactory/api/pypi/XYZ-py-local/simple --trusted-host artifactory.XYZ.com

- MMG Configuration steps for Interpreters

For JDBC

Update the following properties in jdbc.json under OFS_MMG/mmg-studio/server/builtin/interpreters:

"propertyName": "default.url",

"defaultValue": "<JDBC_URL>"

For example: jdbc:oracle:thin:@ofss-mum-1033.snbomprshared1.gbucdsint02bom.oraclevcn.com:1521/MMG19PDB

"propertyName": "default.user",

"defaultValue": "<schemausername>"

This is the schema user to which you want to connect, for example, the datastudio schema name.

"propertyName": "default.password",

"defaultValue": "<schemapassword>"

This is the password of the provided schema user.

Start the JDBC interpreter by running the following command from /OFS_MMG/mmg-studio/interpreter-server/jdbc-interpreter-22.4.3/bin:

./jdbc-interpreter

If the JDBC interpreter needs to be included in the Datastudio startup script, remove the following entry from /OFS_MMG/mmg-studio/bin/startup.sh:

--jdbc -1

For Spark

- Copy the configured Spark directory from hadoop cluster to <MMG Studio>/interpreter-server/spark-interpreter/extralibs. For example: spark-2.4.8-bin-hadoop2.7

- Copy the following files to <MMG Studio>/interpreter-server/spark-interpreter/extralibs: krb5.conf and <keytabfile>.keytab.

- To run Spark in yarn-client mode, configure the following parameters in the file OFS_MMG/mmg-studio/server/builtin/interpreters/spark.json:

spark.master = yarn-client

spark.driver.bindAddress = 0.0.0.0

spark.driver.host = <host> (the Apache Spark host)

Note:

When using the Kubernetes interpreter lifecycle, <host> can be the IP address or hostname of any node in your Kubernetes cluster. When using the Host interpreter lifecycle, <host> should be the IP address or hostname of the node that runs the Spark interpreter.

Note:

When connecting to a YARN cluster, the Spark driver authenticates as the UNIX user that runs the Spark interpreter. You can set the HADOOP_USER_NAME environment variable to make the Spark driver authenticate as a different user. If you use the Host interpreter lifecycle, you can do this by exporting the HADOOP_USER_NAME environment variable before starting the Spark interpreter process. If you use the Kubernetes interpreter lifecycle, you can do this by setting the HADOOP_USER_NAME environment variable in the resource manifest (spark.yml).

- Update the keytab location in the spark-defaults.conf file to the location where the <keytabfile>.keytab file is copied.

- Update the krb5.conf location in the spark-env.sh file to the location where the krb5.conf file is copied.

For example: "-Djava.security.krb5.conf=/OFS_MMG/mmg-studio/interpreter-server/spark-interpreter-22.4.2/extralibs/krb5.conf"