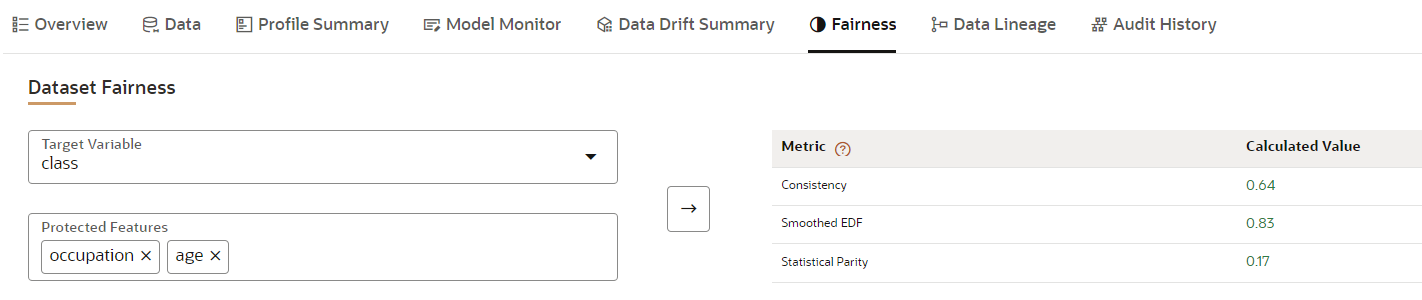

Fairness

You can calculate the fairness metrics of a dataset by choosing the target variable and the protected features. The module provides metrics for assessing whether the model predictions and/or the true labels in the data comply with a particular fairness criterion. For example, the statistical parity metric, also known as demographic parity, measures how much the rate of favorable outcomes for a protected group differs from that of the rest of the population. More generally, fairness metrics quantify differences in outcomes or error rates between demographic groups defined by protected attributes. These metrics are therefore to be minimized, so as to reduce discrepancies in model predictions with respect to specific groups of people. Traditional classification metrics such as accuracy, on the other hand, are to be maximized.
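As a minimal sketch, assuming binary outcomes where 1 is the favorable outcome, statistical parity can be expressed as the difference in positive-outcome rates between the protected group and the rest of the population. The function name `statistical_parity_difference` and the toy data are hypothetical and are not this module's API. The same function can be applied to the true labels (a dataset-level check) or to model predictions (a model-level check). A value of 0 indicates parity, so it is the absolute value that one aims to minimize.

```python
from typing import Sequence


def statistical_parity_difference(outcomes: Sequence[int], protected: Sequence[bool]) -> float:
    """Return P(outcome=1 | protected group) - P(outcome=1 | rest of population).

    Hypothetical helper for illustration. 0.0 means parity; the further the
    value is from 0, the larger the disparity between the two groups.
    """
    if len(outcomes) != len(protected):
        raise ValueError("outcomes and protected must have the same length")
    prot = [y for y, g in zip(outcomes, protected) if g]
    rest = [y for y, g in zip(outcomes, protected) if not g]
    if not prot or not rest:
        raise ValueError("both groups must be non-empty")
    return sum(prot) / len(prot) - sum(rest) / len(rest)


# Made-up toy data: 1 = favorable outcome (e.g. loan approved).
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]
is_protected = [True, True, True, True, False, False, False, False]

# Dataset-level check on the true labels, model-level check on the predictions.
print(statistical_parity_difference(y_true, is_protected))  # 0.25
print(statistical_parity_difference(y_pred, is_protected))  # -0.25
```

The ratio of the two rates (sometimes called disparate impact) is another common convention; with a ratio, the target value is 1 rather than 0.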

- Measure Fairness Metrics of Datasets

- Bias Mitigation of Models

- Privacy Estimation of Models

For example, in a model pipeline, a new widget can be added to calculate the fairness metrics of a trained model, or an advanced option can be added to the model training widget to calculate the model's fairness metrics after training.
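The two integration options described above can be sketched as follows. Everything in this block is hypothetical: `fairness_step` stands in for a separate post-training widget, `train_step` with `compute_fairness=True` stands in for the advanced option on the training widget, and `train_threshold` is a toy learner. For brevity, metrics are computed on the training data; a real pipeline would normally evaluate on held-out data.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Sequence

# A "model" here is just a function from feature values to 0/1 predictions.
Model = Callable[[Sequence[float]], List[int]]


def fairness_step(model: Model, X: Sequence[float], protected: Sequence[bool]) -> Dict[str, float]:
    """Option 1 (hypothetical): a standalone step placed after training."""
    preds = model(X)
    prot = [p for p, g in zip(preds, protected) if g]
    rest = [p for p, g in zip(preds, protected) if not g]
    return {"statistical_parity_difference": sum(prot) / len(prot) - sum(rest) / len(rest)}


@dataclass
class TrainingResult:
    model: Model
    metrics: Dict[str, float] = field(default_factory=dict)


def train_step(
    train_fn: Callable[[Sequence[float], Sequence[int]], Model],
    X: Sequence[float],
    y: Sequence[int],
    protected: Sequence[bool],
    compute_fairness: bool = False,
) -> TrainingResult:
    """Option 2 (hypothetical): the training step with an opt-in fairness flag."""
    model = train_fn(X, y)
    preds = model(X)
    # Accuracy is to be maximized; the parity difference is to be driven toward 0.
    metrics = {"accuracy": sum(p == t for p, t in zip(preds, y)) / len(y)}
    if compute_fairness:
        metrics.update(fairness_step(model, X, protected))
    return TrainingResult(model, metrics)


def train_threshold(X: Sequence[float], y: Sequence[int]) -> Model:
    """Toy learner that ignores y: predict 1 when a value is at or above the mean of X."""
    cut = sum(X) / len(X)
    return lambda xs: [int(x >= cut) for x in xs]


X = [0.9, 0.2, 0.7, 0.4, 0.8, 0.3, 0.6, 0.1]
y = [1, 0, 1, 1, 1, 0, 0, 0]
protected = [True, True, True, True, False, False, False, False]

result = train_step(train_threshold, X, y, protected, compute_fairness=True)
print(result.metrics)  # {'accuracy': 0.75, 'statistical_parity_difference': 0.0}
```

Keeping the fairness computation in its own function lets the same logic back either a separate pipeline widget or an optional flag on the training widget.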