- Oracle Financial Services Model Management and Governance Installation and Configuration Guide

4.5 Prerequisite for Data Pipeline Script Execution

The following are prerequisites for Data Pipeline. Execute these steps before triggering the MMG Installer.

- Create the following directories on the DB server:

      # A directory used by the external table to write the logs.
      mkdir -p /file_store/fs_list/logs
      # A directory to hold a pre-processor script used to list the files in a directory. This needs Read-Execute permissions.
      mkdir -p /file_store/fs_list/script
      # A directory to hold files to control which directories can be listed. This needs Read permissions.
      mkdir -p /file_store/fs_list/control
      # A directory to store the CSV files.
      mkdir -p /scratch/oraofss/fccm-data
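The directory setup can be wrapped in a small script so that it is repeatable. The following is a minimal sketch, not part of the MMG installer: the `create_pipeline_dirs` function and its root-prefix argument are hypothetical, the `755` modes are an assumption about what "Read-Execute" and "Read" access should look like at the OS level, and the demo call runs under a temporary directory rather than on the real paths.

```shell
#!/bin/bash
# Sketch: create the Data Pipeline directories under an optional root prefix.
# create_pipeline_dirs is a hypothetical helper; the paths come from this section.
create_pipeline_dirs() {
  local root="$1"   # empty string means the real paths on the DB server
  mkdir -p "$root/file_store/fs_list/logs"      # external table log files
  mkdir -p "$root/file_store/fs_list/script"    # pre-processor script
  mkdir -p "$root/file_store/fs_list/control"   # control files
  mkdir -p "$root/scratch/oraofss/fccm-data"    # CSV files
  # Assumed modes: owner read/write/execute, group and others read/execute.
  chmod 755 "$root/file_store/fs_list/script" "$root/file_store/fs_list/control"
}

# Dry run under a temporary directory so nothing on the real paths is touched.
demo_root="$(mktemp -d)"
create_pipeline_dirs "$demo_root"
ls -ld "$demo_root"/file_store/fs_list/* "$demo_root/scratch/oraofss/fccm-data"
```

On the DB server, the same function could be called with an empty prefix (`create_pipeline_dirs ""`). The modes and ownership should be adjusted to suit the OS user that runs the database.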

- Create directory objects associated with the physical directories and provide grants:

      CREATE OR REPLACE DIRECTORY fs_list_logs_dir AS '/file_store/fs_list/logs/';
      GRANT READ, WRITE ON DIRECTORY fs_list_logs_dir TO amldd;

      CREATE OR REPLACE DIRECTORY fs_list_script_dir AS '/file_store/fs_list/script/';
      GRANT READ, EXECUTE ON DIRECTORY fs_list_script_dir TO amldd;

      CREATE OR REPLACE DIRECTORY fs_list_control_dir AS '/file_store/fs_list/control/';
      GRANT READ ON DIRECTORY fs_list_control_dir TO amldd;

      CREATE OR REPLACE DIRECTORY external_tables_dir AS '/scratch/oraofss/fccm-data/';
      GRANT READ ON DIRECTORY external_tables_dir TO amldd;

      GRANT CREATE TABLE TO <SCHEMA-NAME>;
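When the schema name differs between environments, the statements can be generated from a template. The sketch below is an illustration only: `generate_directory_sql` is a hypothetical helper, and it assumes that the grantee of the directory grants and the `<SCHEMA-NAME>` in the `CREATE TABLE` grant are the same user (`amldd` in this section).

```shell
#!/bin/bash
# Sketch: emit the directory-object DDL and grants for one schema.
# generate_directory_sql is a hypothetical helper, not part of MMG.
generate_directory_sql() {
  local schema="$1"   # grantee; assumed to also be the <SCHEMA-NAME> placeholder
  cat <<EOF
CREATE OR REPLACE DIRECTORY fs_list_logs_dir AS '/file_store/fs_list/logs/';
GRANT READ, WRITE ON DIRECTORY fs_list_logs_dir TO ${schema};
CREATE OR REPLACE DIRECTORY fs_list_script_dir AS '/file_store/fs_list/script/';
GRANT READ, EXECUTE ON DIRECTORY fs_list_script_dir TO ${schema};
CREATE OR REPLACE DIRECTORY fs_list_control_dir AS '/file_store/fs_list/control/';
GRANT READ ON DIRECTORY fs_list_control_dir TO ${schema};
CREATE OR REPLACE DIRECTORY external_tables_dir AS '/scratch/oraofss/fccm-data/';
GRANT READ ON DIRECTORY external_tables_dir TO ${schema};
GRANT CREATE TABLE TO ${schema};
EOF
}

# Write the statements to a scratch file and show them for review.
sql_file="$(mktemp -d)/create_directories.sql"
generate_directory_sql amldd > "$sql_file"
cat "$sql_file"
```

The generated file could then be reviewed and run by a suitably privileged database user, for example through SQL*Plus.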

- Create the pre-processor script. The script lists the files in the directory provided by the external table LOCATION clause. It specifies the date format in a more useful form than the default format. The second cat command shows the contents of the file once it is written.

- This will contain the script to monitor the path:

      cat > /file_store/fs_list/script/list_directory.sh <<EOF
      #!/bin/bash
      /usr/bin/ls -l --time-style=+"%Y-%m-%d:%H:%M:%S" "\$(/usr/bin/cat \$1)"
      EOF

- This will contain the path to monitor:

      cat > /file_store/fs_list/control/trace.txt <<EOF
      /scratch/oraofss/fccm-data
      EOF
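Before wiring the pre-processor into an external table, it can help to run a copy of it by hand and inspect the output. The following sketch is an assumption-laden dry run: it works entirely under a temporary directory, uses a hypothetical `sample.csv` file, and resolves the `ls` and `cat` paths with `command -v` because their location can differ between systems. It requires GNU `ls` for the `--time-style` option.

```shell
#!/bin/bash
# Sketch: exercise a copy of the pre-processor script outside the database.
# Everything lives under a temporary directory; none of these are the real paths.
work="$(mktemp -d)"
mkdir -p "$work/script" "$work/control" "$work/data"
touch "$work/data/sample.csv"          # hypothetical CSV file

# Resolve absolute paths, since a pre-processor should not rely on PATH.
LS_BIN="$(command -v ls)"
CAT_BIN="$(command -v cat)"

# Unquoted EOF: $LS_BIN and $CAT_BIN expand now, the escaped \$ stay literal.
cat > "$work/script/list_directory.sh" <<EOF
#!/bin/bash
$LS_BIN -l --time-style=+"%Y-%m-%d:%H:%M:%S" "\$($CAT_BIN \$1)"
EOF
chmod u+x "$work/script/list_directory.sh"

# The control file holds the directory to list, in the same way as trace.txt.
echo "$work/data" > "$work/control/trace.txt"

# Pass the control file as the first argument, as the script expects.
listing="$("$work/script/list_directory.sh" "$work/control/trace.txt")"
echo "$listing"
```

Each file line ends with a timestamp in the `YYYY-MM-DD:HH24:MI:SS` layout followed by the file name. Note that `ls -l` also prints a leading `total` line.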

- From a SQL window, log in as the amldd user and create an external table that lists all the files in the directory, using the following query:

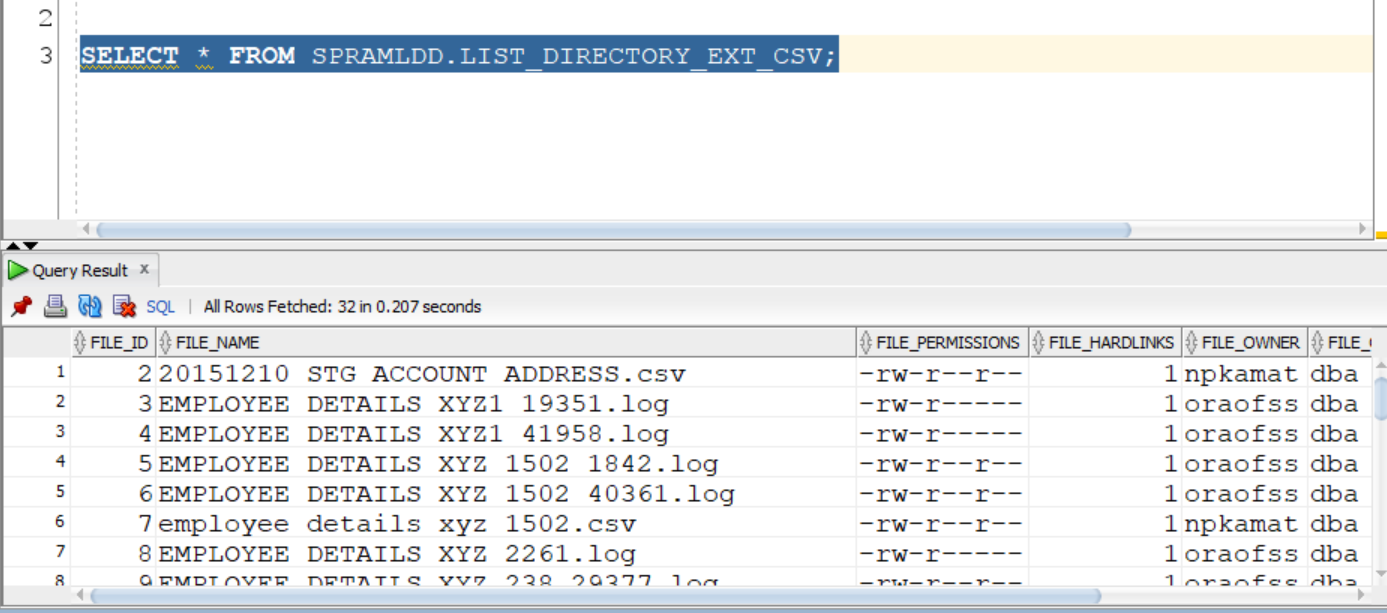

    CREATE TABLE LIST_DIRECTORY_EXT_CSV (
      "FILE_ID"          NUMBER,
      "FILE_NAME"        VARCHAR2(200 BYTE),
      "FILE_PERMISSIONS" VARCHAR2(11 BYTE),
      "FILE_HARDLINKS"   NUMBER,
      "FILE_OWNER"       VARCHAR2(32 BYTE),
      "FILE_GROUP"       VARCHAR2(32 BYTE),
      "FILE_SIZE"        NUMBER,
      "FILE_DATETIME"    DATE
    )
    ORGANIZATION EXTERNAL (
      TYPE ORACLE_LOADER
      DEFAULT DIRECTORY "FS_LIST_LOGS_DIR"
      ACCESS PARAMETERS (
        RECORDS DELIMITED BY NEWLINE
        PREPROCESSOR fs_list_script_dir:'list_directory.sh'
        FIELDS TERMINATED BY WHITESPACE
        (
          file_id,
          file_permissions,
          file_hardlinks,
          file_owner,
          file_group,
          file_size,
          file_datetime DATE 'YYYY-MM-DD:HH24:MI:SS',
          file_name
        )
      )
      LOCATION ("FS_LIST_CONTROL_DIR":'trace.txt')
    )
    REJECT LIMIT UNLIMITED;

- Verify the files in the external table using SQL:

      SELECT * FROM LIST_DIRECTORY_EXT_CSV;

Figure 4-1 List Directory

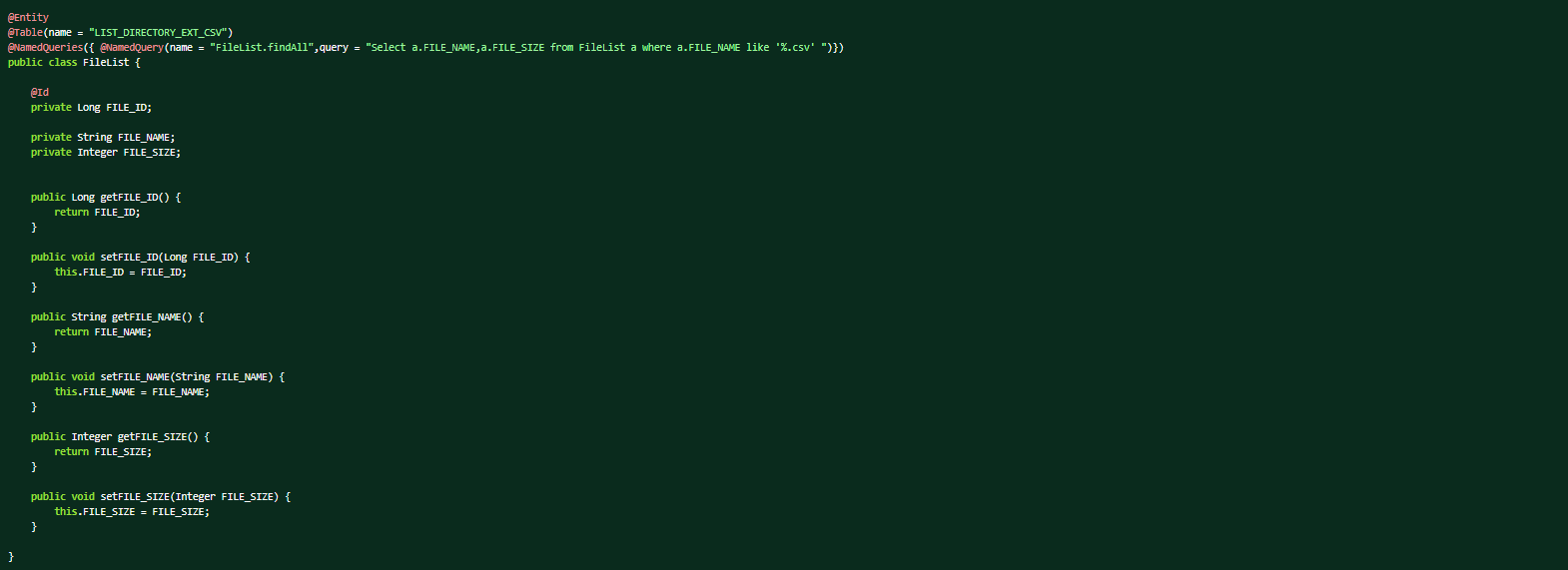

- API for Listing Files

Entity

Figure 4-2 Entity

DAO

Figure 4-3 DAO

Controller

Figure 4-4 Controller

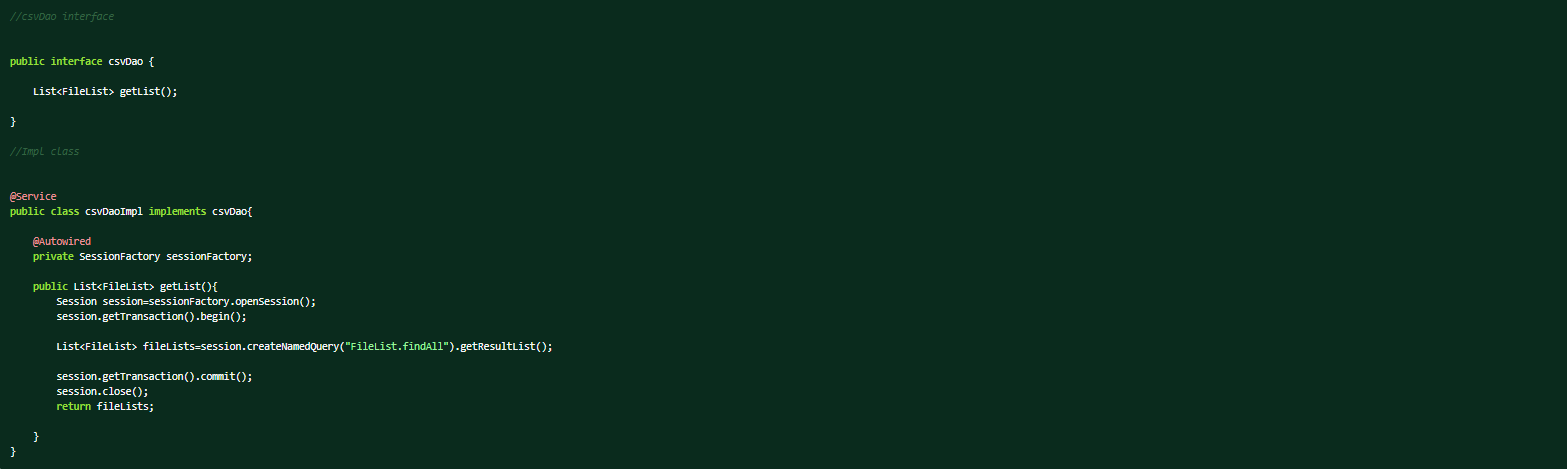

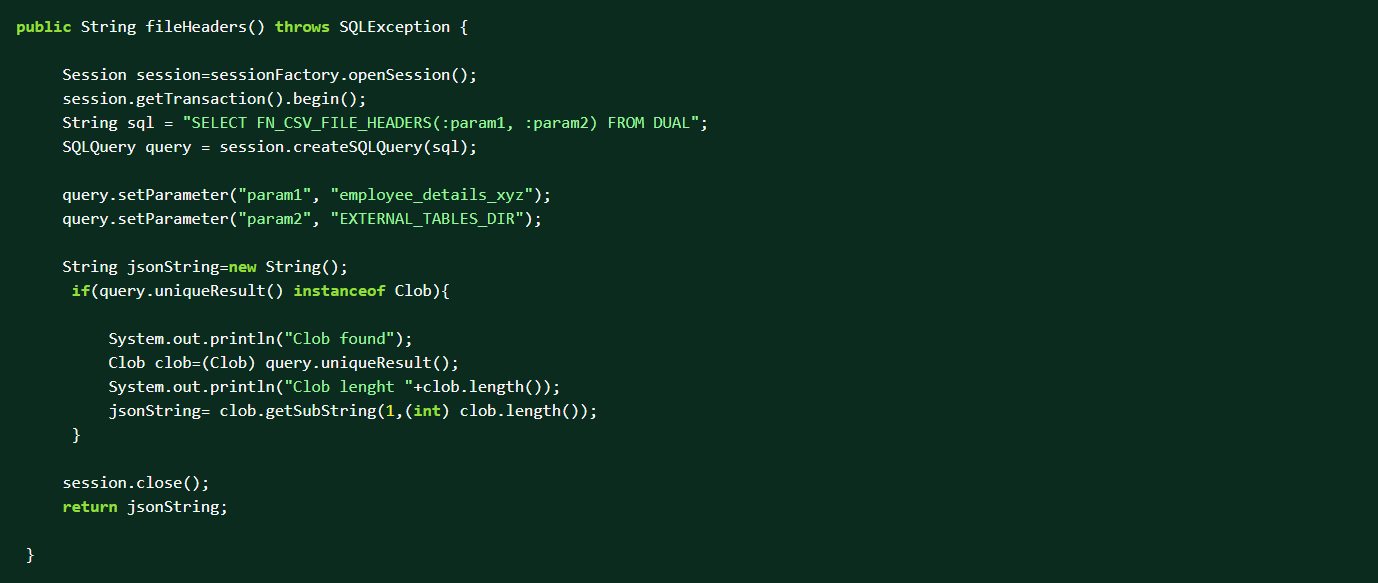

- Get Header Details

  The headers of the CSV files are used to create the fields of the external table. The SQL procedure P_CSV_FILE_HEADERS returns the headers of the given CSV file.

Figure 4-5 Get Header Details

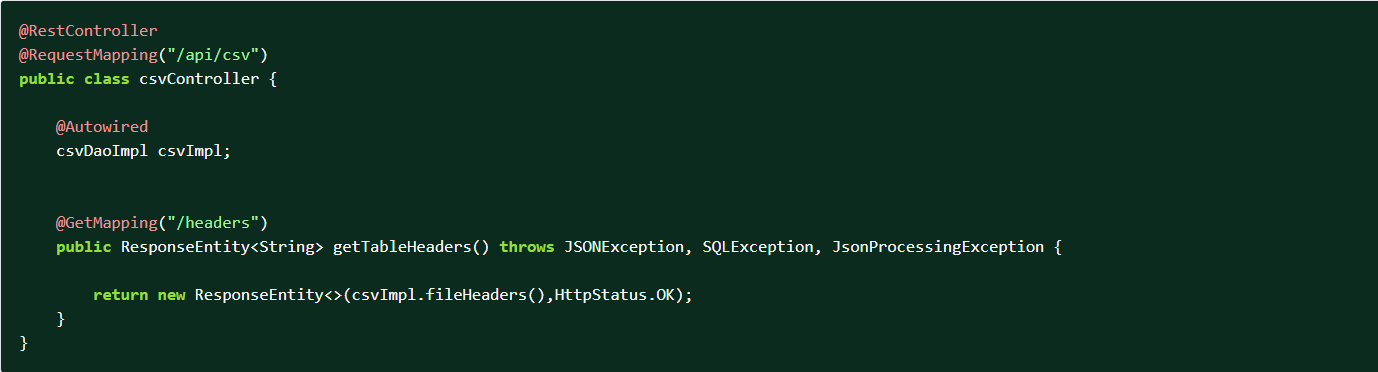
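As a rough illustration of what "the headers of a CSV file" means here, the sketch below reads the first line of a file and splits it on commas. It is not the P_CSV_FILE_HEADERS procedure and makes no claim about how that procedure works. The `csv_headers` function and the sample data are hypothetical, and the sketch assumes a simple header row without quoted or embedded commas.

```shell
#!/bin/bash
# Sketch: print the header fields of a CSV file, one per line.
# csv_headers is a hypothetical helper, unrelated to P_CSV_FILE_HEADERS.
csv_headers() {
  local file="$1"
  # Take the first line, drop any carriage returns, split on commas.
  head -n 1 "$file" | tr -d '\r' | tr ',' '\n'
}

# Hypothetical sample data written to a scratch file.
sample="$(mktemp)"
printf 'CUSTOMER_ID,NAME,COUNTRY\n1,Alice,US\n' > "$sample"

headers="$(csv_headers "$sample")"
echo "$headers"
```

Each printed name corresponds to one candidate column of the external table that is created from the CSV file.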

API for getting Headers

Figure 4-6 DAO

Figure 4-7 Controller

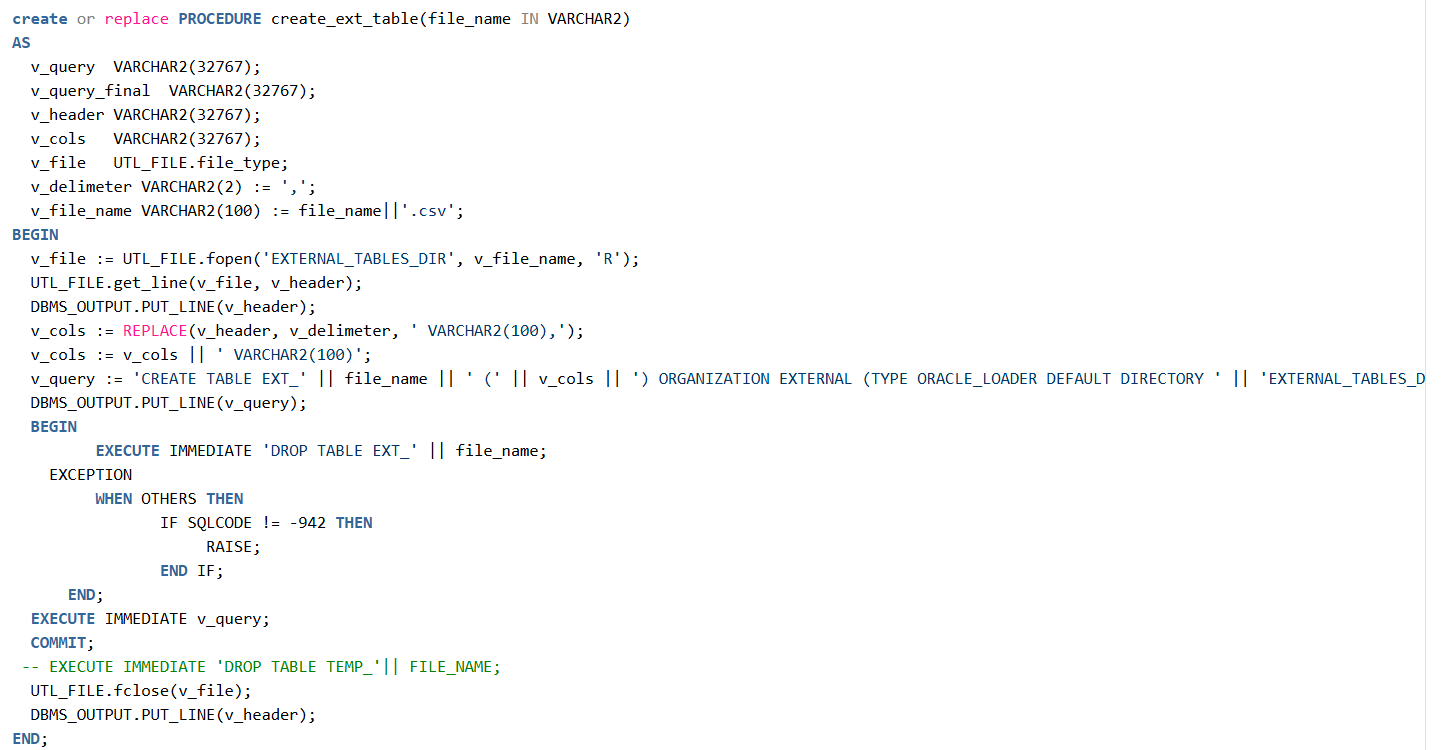

Creating External Table

Using the header details of the CSV file, an external table is created using the following PL/SQL procedure.

Figure 4-8 External table

Note:

Only some of the Interpreter icons are shown by default, with the following limitations:

- %shell: This will not be enabled. It has generally been called out as a security vulnerability.

- %spark: This is the default behaviour in Data Studio. The default configuration provided in spark.json enables both %spark and %pyspark, but the "add" button is enabled only for %pyspark. It will be enabled only if there is an App/Customer requirement.

- %jdbc: The default configuration provided by Data Studio enables the "add" button only for %mysql and not for %jdbc. It will be enabled only if there is an App/Customer requirement.

Parameters required to execute Data Pipeline from Scheduler

For standalone Data Pipeline definitions, the user has to pass the following parameters as optional parameters during execution, after selecting the graph data pipeline as the component.

    $<Runtime Parameter1>=<Value>
    $<Runtime Parameter2>=<value>
    $RUNTYPE$=PROD
    $batchRunType$=run
    $BATCHTYPE$=DATA
    $JOBNAME$=<DataPipeline_Name>