6 Support Utilities

Some support utilities will be exposed for implementers directly in APEX, allowing you to run functions such as database cleanup without Oracle involvement. These utilities may also be used by Oracle Support when responding to Service Requests on your RAP environments.

Data Cleanup Utility

Because foundation data is always loaded first through the Retail Insights data warehouse, implementers often need to erase data from the RI tables in preparation for a new load. Database functions have been exposed to APEX to allow targeted deletion of data by table name. The deletion requires a two-step process to prevent accidental execution of the command. The first step is to request an authentication token for the utility, which will return a string value. You must then pass the token into the subsequent call to the utility to execute the data cleanup step. Executing the utility with an expired or invalid token will return an error message.

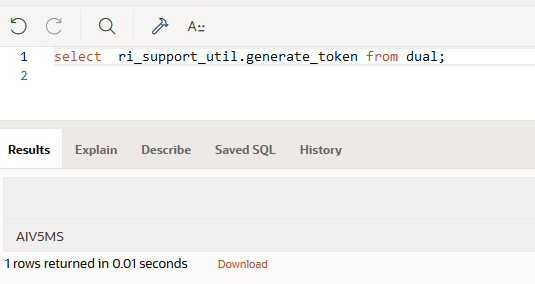

The command to run in APEX to generate tokens is:

select ri_support_util.generate_token from dual

Take the return value from this query and copy it into the TOKEN_VALUE variable in the PL/SQL block below.

Specify the schema name and table name to be truncated, then run the PL/SQL block.

DECLARE

TOKEN_VALUE VARCHAR2(200);

SCHEMANAME VARCHAR2(200);

TABLENAME VARCHAR2(200);

BEGIN

TOKEN_VALUE := 'ABCDEF';

SCHEMANAME := 'RADM01';

TABLENAME := 'W_RTL_SLS_TRX_IT_LC_DY_FS';

RI_SUPPORT_UTIL.CLEAR_SELECTED_RI_TABLES(

TOKEN_VALUE => TOKEN_VALUE,

SCHEMANAME => SCHEMANAME,

TABLENAME => TABLENAME

);

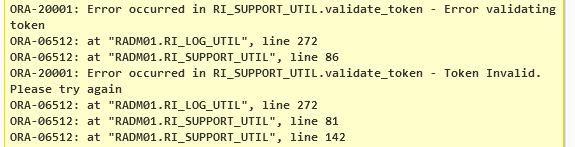

END;If the process is successful, you will see that the PL/SQL block was successfully executed with no further message or results. If the process encounters any error, it will display the error details in the results panel in APEX. For example, if the token used is not valid it will show the following error:

To quickly clean the entire database schema instead of individual tables, you may instead call the following command. This command will erase all customer data except for the calendar, system configuration tables, and seed data records. Use this command if you need to reset the environment in preparation for a new dataload using a different dataset:

DECLARE

v_token VARCHAR2(200);

SCHEMANAME VARCHAR2(200);

BEGIN

v_token := ri_support_util.generate_token;

SCHEMANAME := 'RADM01';

RI_SUPPORT_UTIL.CLEAR_SELECTED_RI_TABLES(

TOKEN_VALUE => v_token,

SCHEMANAME => SCHEMANAME

);

END;Calendar removal is provided as a separate function, because you cannot remove calendar information without also erasing

all partitions (which are specific to your currently loaded calendar). The function name is CLEAR_RI_MCAL_TABLES and can be called the same way as the schema clear script above, passing in the token and schema name as the inputs. Before

you perform any calendar cleanup, review the following:

-

Partition removal is based on the current partition configuration in

C_MODULE_ARTIFACT; it will not modify tables that are not enabled for partitioning. Ensure the configuration table reflects your current cleanup needs. -

Because calendar cleanup includes partition removal, you cannot use the system for a new data load without first re-partitioning the system. Refer to the RAP Implementation Guide for the steps to reload the calendar and partition the database.

When you run either of the cleanup commands above, it may take an hour or longer to complete depending upon the amount

of data in your schema and the number of partitions requiring deletion. Due to APEX limitations, your session may expire or

timeout while waiting for the command to complete, but the process will continue to run in the database. To monitor the activity

after a session timeout, you can query the RI_LOG_MSG table and check for new log messages:

select * from ri_log_msg order by msg_ts desc;If the process is still running, you will see new log entries being added for ri_support_util methods.

If no recent entries are added and the last set of messages show the END messages for a process step, then

you can verify that all your tables are cleared and proceed with your implementation activities.

Aggregation Utility

Retail Insights has over 100 different tables for pre-calculating data at higher levels of aggregation, mainly for the purpose of BI reporting and analytics. These tables do not need to be populated during initial historical data loads but would be needed before end-users begin accessing data in RI. To populate these tables after history loads are complete, an aggregation utility is provided that can use the base intersection of a functional area to calculate all of the higher-level tables.

Prerequisites for using the utility (all steps must be completed):

-

Partitioning has been run for the target functional areas such as sales (

SLS), inventory (INV), and so on. Follow the steps in the RAP Implementation Guide to perform additional partitioning as needed. -

The base fact for the functional area has already been loaded with data for the entire date range you want to aggregate on. For example, the

W_RTL_SLS_TRX_IT_LC_DY_Ftable is loaded before attempting to aggregate it toW_RTL_SLS_IT_LC_WK_A. -

Database statistics have been collected recently using

REFRESH_RADM_JOBandANAYLZE_TEMP_TABLES_JOB(either as part of an ad hoc data load or automatically as part of nightly batch).

The configuration table to control the utility is C_RI_AGGREGATION_MAP, which is available from the Control

Center in the AI Foundation user interface. It contains a list of aggregate tables in RI that can be processed by the utility.

For each table you want to load, set the START_DT as the earliest date to process and the END_DT to the final date to process. The tables are grouped by functional area such as MODULE_CODE=SLS so you

can update all tables relating to that fact.

Once the necessary updates are performed, you will execute an ad hoc process in POM named AGGREGATION_UTILITY_ADHOC. This process has 3 jobs in it:

AGG_UTILITY_PRE_JOB – Calculates a temporary lookup table for product hierarchy relationships

AGG_UTILITY_ORG_PRE_JOB – Calculates a temporary lookup table for organization hierarchy relationships

AGG_UTILITY_JOB – Performs an aggregation action for a specific table name and run type

The AGG_UTILITY_JOB requires two input parameters: the name of the table as found in C_RI_AGGREGATION_MAP and the type of aggregation to perform (FRESH or RESTART). When FRESH is specified, it assumes you want to aggregate the entire date range specified in the configuration table, even if it has

been run before. If RESTART is specified, it will run only from the last completed period (the partition

job aggregates one quarter at a time so it will not re-run earlier quarters that already completed). Also use the RESTART option if you changed the END_DT to some time further in the future and want to only process

incomplete dates resulting from the change. In most use cases you can always specify RESTART as the option

and it will perform the required actions.

Example Postman message body for the process call:

{

"cycleName":"Adhoc",

"flowName":"Adhoc",

"requestType":"POM Scheduler",

"processName":"AGGREGATION_UTILITY_ADHOC",

"requestParameters":"jobParams.AGG_UTILITY_JOB= W_RTL_SLS_CS_IT_LC_DY_A RESTART"

}Once you have issued the command to start the process, you may monitor the detailed run status by querying the table C_BULK_LOAD_STATUS from APEX. A record will be inserted for each calendar quarter that has been processed until

the entire date range is aggregated. The POM job will complete successfully after the table is loaded for all dates. You

may then compare the base fact table with the target aggregate and confirm the values have been rolled up as expected.

The aggregate tables must be populated in a specific sequence based on the value in the AGGREGATION_LEVEL column in C_RI_AGGREGATION_MAP. For each MODULE_CODE, the level 1 tables must be populated

first, then the level 2 tables, and so on. To automate this execution sequence, there is a separate job available in POM,

namedAGG_SRVC_JOB. The aggregation service job accepts a single input parameter for the MODULE_CODE value. The job will execute all tables in the associated record set in C_RI_AGGREGATION_MAP for that module,

following the AGGREGATION_LEVEL sequence as needed. The two PRE jobs (AGG_UTILITY_PRE_JOB and AGG_UTILITY_ORG_PRE_JOB) are prerequisites for this job just as they are for AGG_UTILITY_JOB.

Example Postman message body for the process call:

{

"cycleName":"Adhoc",

"flowName":"Adhoc",

"requestType":"POM Scheduler",

"processName":"AGGREGATION_SRVC_ADHOC",

"requestParameters":"jobParams.AGG_SRVC_JOB=INV"

}If any processes in the AGG_SRVC_JOB have a failure or are taking too long to run, you may also check

the following tables for more information:

-

C_RI_SRVC_REQ_QUEUE– Contains the status of individual service calls invoked by the aggregation process. A status of6means success while7means failed. -

RI_LOG_MSG– If a process does fail, the detailed trace logs will be written to this table to help you identify the problem. Look for records wherePROGRAM_UNIT = RI_AGGREGATION_UTIL.

Database Statistics Utility

A critical part of working with large datasets in Oracle Database is the collection of statistics on your database tables.

The POM processes used to load data generally include a job to collect statistics on the entire database schema to ensure

stats are always up-to-date. The drawback of this program is that it can take a significant amount of time to run, even if

you only need to refresh statistics on a single table. To help implementers collect statistics on specific tables, a utility

is provided using the POM standalone program COLLECT_STATS_JOB.

The COLLECT_STATS_JOB accepts a single input parameter for the database module code you wish to gather

stats on. The module codes are defined from the configuration table C_MODULE_DBSTATS, which is available

from the Control & Tactical Center in the AI Foundation UI. The configuration table will come pre-defined with some core

modules that often need stats collected on them using the codes SLS, INV, and PRICE. You have the ability to insert new rows into the table to define your own custom values for MODULE_CODE. You may specify any value you wish for the MODULE_CODE, along with one or more tables you plan to collect

stats on. You would then pass the MODULE_CODE value into the job parameters to collect stats on your chosen

list of tables. TABLE_NAME and MODULE_CODE are the only required values for tables in the RADM01 schema. If you are collecting stats on a temp table (in the RABE01USER schema) then you

must also populate the OWNER_TYPE as BATCH.

After reviewing the configuration, you may invoke the job from POM or Postman, providing your MODULE_CODE as the only input parameter.

Example Postman message body for the process call:

{

"cycleName":"Adhoc",

"flowName":"Adhoc",

"requestType":"POM Scheduler",

"processName":"COLLECT_STATS_ADHOC",

"requestParameters":"jobParams.COLLECT_STATS_JOB=SLS"

}