Sending Data to AI Foundation

All AI Foundation modules leverage a common batch infrastructure to initialize the core dataset, followed by ad hoc, application-specific programs to generate additional data as needed. Before loading any data into an AI Foundation module, it is necessary to complete initial dimension loads into the data warehouse using AIF DATA jobs and validate that core structures (calendar, products, locations) match what you expect to see. Once you are comfortable with the data that has been loaded in, leverage the following jobs to move data into one or more AI Foundation applications.

Table 3-6 Extracts for AI Foundation

| POM Process Name | Usage Details |

|---|---|

|

INPUT_FILES_ADHOC_PROCESS |

Receive inbound zip files intended for AI Foundation, archive and extract the files.

This process looks for the |

|

RSE_MASTER_ADHOC_PROCESS |

Foundation data movement from the data warehouse to AI Foundation applications, including core hierarchies and dimensional data. Accepts many different parameters to run specific steps in the load process. |

|

<app>_MASTER_ADHOC_PROCESS |

Each AI Foundation module, such as SPO or IPO, has a master job for extracting and

loading data that is required for that application, in addition to the |

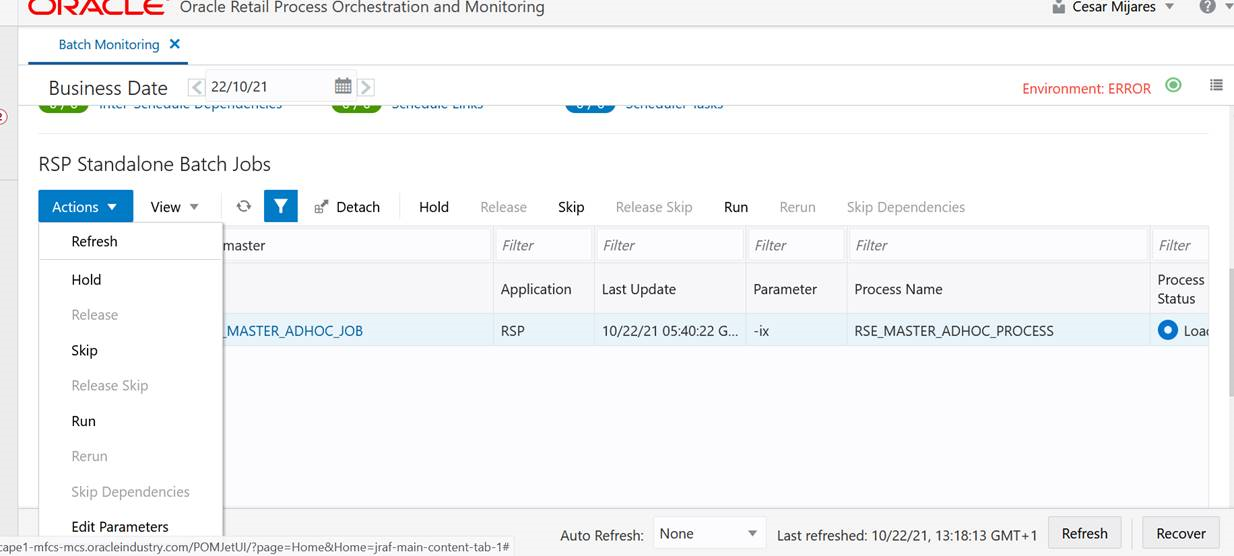

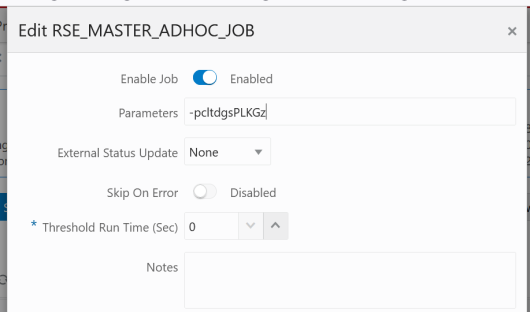

Because AI Foundation Cloud Services ad hoc procedures have been exposed using only one job in POM, they are not triggered like AIF DATA procedures. AI Foundation programs accept a number of single-character codes representing different steps in the data loading process. These codes can be provided directly in POM by editing the Parameters of the job in the Batch Monitoring screen, then executing the job through the user interface.

For example, this string of parameters will move all dimension data from the data warehouse to AI Foundation:

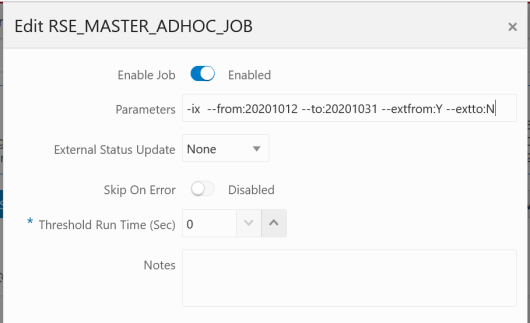

Additional parameters are available when moving periods of historical data, such as inventory and sales:

A typical workflow for moving core foundation data into AI Foundation is:

-

Load the core foundation files (like Calendar, Product, and Organization) using the AIF DATA schedule jobs.

-

Use the

RSE_MASTER_ADHOC_PROCESSto move those same datasets to AI Foundation Apps, providing specific flag values to only run the needed steps. The standard set of first-time flags are-pldg, which loads the core hierarchies required by all the applications. -

Load some ofyour history files for Sales using the AIF DATA jobs to validate the inputs. For example, load 1-3 months of sales for all products or a year of sales for only one department.

-

Load the same range of sales to AI Foundation using the sales steps in the master process with optional from/to date parameters. The flags to use for this are

-xwa, which loads the transaction tableRSE_SLS_TXNas well as all sales aggregates which are shared across AIF. -

Repeat the previous two steps until all sales data is loaded into both RI and AI Foundation.

Performing the process iteratively provides you early opportunities to find issues in the data before you’ve loaded everything, but it is not required. You can load all the data into AI Foundation at one time.

Follow the same general flow for the other application-specific, ad hoc flows into the AI Foundation modules. For a complete list of parameters in each program, refer to the AI Foundation Operations Guide.

When loading hierarchies, it is possible to have data issues on the first run due to missing or incomplete records from the customer. You may get an error like the following:

Error occurred in RSE_DDL_UTIL.create_local_index_stmt - Error while creating index: CREATE UNIQUEINDEX TMP$STG$RSE_LOC_SRC_XREF_2 ON TMP$STG$RSE_LOC_SRC_XREF ( HIER_TYPE_ID,LOC_EXT_ID,LEAF_NODE_FLG,APP_SOURCE_ID) ORA-01452: cannot CREATE UNIQUE INDEX; duplicate keys foundThe INDEX create statement tells you the name of the table and the columns that were attempting to be

indexed. Querying that table is what is required to see what is duplicated or invalid in the source data. Because these tables

are created dynamically when the job is run, you will need to first grant access to it using a procedure like below in IW:

begin RSE_SUPPORT_UTIL.grant_temp_table(p_prefix => 'STG$RSE_LOC_SRC_XREF'); end;The value passed into the procedure should be everything after the TMP$ in the table name (not the index

name). The procedure also supports two other optional parameters to be used instead of or in addition to the prefix:

p_suffix– Provide the ending suffix of a temporary table, usually a number like00001101, to grant access to all tables with that suffixp_purge_flg- Purge flag (Y/N) which indicates to drop temporary tables for a given run

Once this is executed for a given prefix, a query like this can retrieve the data causing the failure:

SELECT * FROM RASE01.TMP$STG$RSE_LOC_SRC_XREF WHERE ( HIER_TYPE_ID,LOC_EXT_ID,LEAF_NODE_FLG,APP_SOURCE_ID ) IN ( SELECT

HIER_TYPE_ID,LOC_EXT_ID,LEAF_NODE_FLG,APP_SOURCE_ID FROM

RASE01.TMP$STG$RSE_LOC_SRC_XREF GROUP BY

HIER_TYPE_ID,LOC_EXT_ID,LEAF_NODE_FLG,APP_SOURCE_ID HAVING count(*) > 1 )ORDER BY HIER_TYPE_ID,LOC_EXT_ID,LEAF_NODE_FLG,APP_SOURCE_ID ;For location hierarchy data the source of the issue will most commonly come from the W_INT_ORG_DH table.

For product hierarchy, it could be W_PROD_CAT_DH. These are the hierarchy tables populated in the data warehouse

by your foundation data loads.