Automate Invoice Images with OCI Vision and OCI Generative AI

Introduction

Companies often receive thousands of invoices from suppliers and service providers in unstructured formats, such as scanned images or PDFs. Manually extracting data from these invoices, such as invoice number, customer name, items purchased, and total amount, is time-consuming and error-prone.

These delays in processing not only affect accounts payable cycles and cash flow visibility but also introduce bottlenecks in compliance, auditing, and reporting.

This tutorial demonstrates how to implement an automated pipeline that monitors a bucket in Oracle Cloud Infrastructure (OCI) for incoming invoice images, extracts textual content using OCI Vision, and then applies OCI Generative AI (LLM) to extract structured fiscal data like invoice number, customer, and item list.

OCI services used in this tutorial are:

| Service | Purpose |
|---|---|
| OCI Vision | Performs OCR on uploaded invoice images. |
| OCI Generative AI | Extracts structured JSON data from raw OCR text using few-shot prompts. |
| OCI Object Storage | Stores input invoice images and output JSON results. |

Objectives

- Automate invoice ingestion from OCI Object Storage.
- Extract structured data from semi-structured scanned documents.
- Integrate Optical Character Recognition (OCR) and LLM in real-time pipelines using OCI AI Services.

Prerequisites

- An OCI account with access to:
  - OCI Vision
  - OCI Generative AI
  - OCI Object Storage
- Python 3.10 or later.
- A bucket for input images (for example, `input-bucket`) and another for output files (for example, `output-bucket`).
- Download the following files:

Task 1: Configure Python Packages

- Install the packages listed in the `requirements.txt` file using the following command.

  ```
  pip install -r requirements.txt
  ```

- Run the Python script (`main.py`).
- Upload invoice images (for example, `.png`, `.jpg`) to your input bucket.
- Wait for the image to be processed and the extracted JSON to be saved in the output bucket.

Task 2: Understand the Code

- Load Configuration:

  ```python
  import json

  with open("./config", "r") as f:
      config_data = json.load(f)
  ```

  This code loads all required configuration values such as namespace, bucket names, compartment ID, and LLM endpoint.

  Enter your configuration parameters in the `config` file.

  ```json
  {
    "oci_profile": "DEFAULT",
    "namespace": "your_namespace",
    "input_bucket": "input-bucket",
    "output_bucket": "output-bucket",
    "compartment_id": "ocid1.compartment.oc1..xxxx",
    "llm_endpoint": "https://inference.generativeai.us-chicago-1.oci.oraclecloud.com"
  }
  ```
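Because every later step depends on these values, it can help to fail fast when a key is missing. The following is a minimal sketch and not part of the tutorial's `main.py`: the `load_config` helper and the `REQUIRED_KEYS` tuple are hypothetical names, and the key list simply mirrors the sample `config` file.

```python
import json

# Hypothetical helper; the keys mirror the sample config file in this tutorial.
REQUIRED_KEYS = (
    "oci_profile", "namespace", "input_bucket",
    "output_bucket", "compartment_id", "llm_endpoint",
)

def load_config(path="./config"):
    """Load the JSON config and raise early if a required key is missing."""
    with open(path, "r", encoding="utf-8") as f:
        config_data = json.load(f)
    missing = [key for key in REQUIRED_KEYS if key not in config_data]
    if missing:
        raise KeyError(f"Missing config keys: {', '.join(missing)}")
    return config_data
```

Calling `load_config()` once at startup and reading the individual values from the returned dictionary keeps a misconfigured run from reaching the OCI calls.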

- Initialize OCI Clients:

  ```python
  oci_config = oci.config.from_file("~/.oci/config", PROFILE)
  object_storage = oci.object_storage.ObjectStorageClient(oci_config)
  ai_vision_client = oci.ai_vision.AIServiceVisionClient(oci_config)
  ```

  This code sets up the OCI SDK clients to access OCI Object Storage and OCI Vision services. For more information, see OCI Vision.

- Initialize LLM:

  ```python
  llm = ChatOCIGenAI(
      model_id="meta.llama-3.1-405b-instruct",
      service_endpoint=LLM_ENDPOINT,
      compartment_id=COMPARTMENT_ID,
      auth_profile=PROFILE,
      model_kwargs={"temperature": 0.7, "top_p": 0.75, "max_tokens": 2000},
  )
  ```

  This code initializes the OCI Generative AI model for natural language understanding and text-to-structure conversion.

- Few-shot Prompt:

  ```python
  few_shot_examples = [ ... ]
  instruction = """ You are a fiscal data extractor. ... """
  ```

  This code uses few-shot learning by providing an example of expected output so the model learns how to extract structured fields like `number of invoice`, `customer`, `location`, and `items`.
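The tutorial elides the actual examples and instruction text. As a rough illustration only, a few-shot prompt of this shape could be assembled as follows. The sample invoice text, the JSON field names, and the `build_prompt` helper are assumptions made for this sketch, not the contents of `main.py`.

```python
import json

# Hypothetical instruction and example; the tutorial's real text is not shown.
instruction = "You are a fiscal data extractor. Return only a JSON object."

few_shot_examples = [
    "Invoice text:\nInvoice No. 1001\nCustomer: ACME Ltd\nCity: Curitiba\n2 x Widget\n"
    "Extracted fields (JSON format):\n"
    + json.dumps({
        "invoice_number": "1001",
        "customer": "ACME Ltd",
        "location": "Curitiba",
        "items": [{"description": "Widget", "quantity": 2}],
    })
]

def build_prompt(ocr_text):
    """Join the instruction, the worked examples, and the new invoice text."""
    return (
        instruction + "\n" + "\n".join(few_shot_examples)
        + f"\nInvoice text:\n{ocr_text}\nExtracted fields (JSON format):"
    )

prompt = build_prompt("Invoice No. 2002\nCustomer: Example SA")
print(prompt)
```

Ending the prompt with the same `Extracted fields (JSON format):` cue that closes each example nudges the model to continue with a JSON object for the new invoice.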

- OCR with OCI Vision:

  ```python
  def perform_ocr(file_name):
      print(f"📄 Performing OCR on: {file_name}")
      response = ai_vision_client.analyze_document(
          analyze_document_details=oci.ai_vision.models.AnalyzeDocumentDetails(
              features=[
                  # Text detection feature (its feature_type is TEXT_DETECTION)
                  oci.ai_vision.models.DocumentTextDetectionFeature()
              ],
              document=oci.ai_vision.models.ObjectStorageDocumentDetails(
                  source="OBJECT_STORAGE",
                  namespace_name=NAMESPACE,
                  bucket_name=INPUT_BUCKET,
                  object_name=file_name,
              ),
              compartment_id=COMPARTMENT_ID,
              language="POR",
              document_type="INVOICE",
          )
      )
      print(response.data)
      return response.data
  ```

  This function:

  - Points OCI Vision at the image stored in the input bucket.
  - Requests text detection.
  - Returns the analysis result, which holds the recognized text organized by page and line.
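The value returned by `perform_ocr` is an object rather than a plain string: as the extraction code later in this tutorial assumes, it exposes `pages`, each with `lines` that carry a `text` attribute. The sketch below flattens that structure, using `SimpleNamespace` stand-ins instead of a real OCI Vision response so that it runs offline. `ocr_result_to_text` is a hypothetical helper name.

```python
from types import SimpleNamespace

def ocr_result_to_text(ocr_result):
    """Collect the text of every line on every page into one string."""
    lines = []
    for page in getattr(ocr_result, "pages", None) or []:
        for line in getattr(page, "lines", None) or []:
            lines.append(line.text.strip())
    return "\n".join(lines)

# Stand-in for the object returned by perform_ocr (not a real API response).
fake_result = SimpleNamespace(pages=[
    SimpleNamespace(lines=[SimpleNamespace(text=" NF-e 12345 "),
                           SimpleNamespace(text="Total: 150,00")]),
    SimpleNamespace(lines=None),  # a page with no detected lines
])

print(ocr_result_to_text(fake_result))
```

The `or []` guard also covers a page whose `lines` attribute is present but `None`, which a `getattr` default alone would not.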

- Data Extraction with LLM:

  ```python
  def extract_data_with_llm(ocr_result, file_name):
      # 🔍 Extract the OCR text (using the structure of the OCI Vision response)
      extracted_lines = []
      for page in getattr(ocr_result, 'pages', []):
          for line in getattr(page, 'lines', []):
              extracted_lines.append(line.text.strip())

      plain_text = "\n".join(extracted_lines)

      # 🧠 Build the prompt from the instruction, few-shot examples, and clean OCR text
      prompt = instruction + "\n" + "\n".join(few_shot_examples) + f"\nInvoice text:\n{plain_text}\nExtracted fields (JSON format):"

      # 🔗 Call the LLM
      response = llm.invoke([HumanMessage(content=prompt)])

      # 🧪 Try to extract plain JSON from the response
      try:
          content = response.content.strip()
          first_brace = content.find("{")
          last_brace = content.rfind("}")
          json_string = content[first_brace:last_brace + 1]
          parsed_json = json.loads(json_string)
      except Exception as e:
          print(f"⚠️ Error extracting JSON from the LLM response: {e}")
          parsed_json = {"raw_response": response.content}

      return {
          "file": file_name,
          "result": parsed_json,
          "timestamp": datetime.utcnow().isoformat()
      }
  ```

  This function:

  - Flattens the OCR data returned by OCI Vision into plain text.
  - Combines the instruction, the few-shot examples, and the OCR text into one prompt.
  - Sends the prompt to OCI Generative AI.
  - Receives the structured JSON fields as a string, parses them, and falls back to storing the raw response if parsing fails.

  Note: OCI Vision supports Portuguese OCR (`language="POR"` can be used instead of `"ENG"`).
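The brace-slicing step can be exercised on its own, without calling the model. The sketch below isolates that logic in a hypothetical `extract_json_block` helper and feeds it made-up replies. It mirrors the fallback to `raw_response` used in `extract_data_with_llm`.

```python
import json

def extract_json_block(content):
    """Parse the outermost {...} span of an LLM reply, or wrap the raw text."""
    text = content.strip()
    first_brace = text.find("{")
    last_brace = text.rfind("}")
    if first_brace == -1 or last_brace < first_brace:
        return {"raw_response": content}
    try:
        return json.loads(text[first_brace:last_brace + 1])
    except json.JSONDecodeError:
        return {"raw_response": content}

# Made-up replies for illustration.
chatty = 'Sure! Here is the data:\n{"invoice_number": "1001", "items": []}\nDone.'
print(extract_json_block(chatty))
print(extract_json_block("I could not read this invoice."))
```

Slicing from the first `{` to the last `}` tolerates extra prose around the JSON, but it cannot repair a reply that contains two separate objects or malformed JSON. Those cases end up in `raw_response` for manual review.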

- Save Output to OCI Object Storage:

  ```python
  def save_output(result, file_name): ...
  ```

  This code uploads the structured result into the output bucket using the original file name with a `.json` extension.
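The body of `save_output` is elided in this tutorial. One plausible shape is sketched here under assumptions: the `.json` name is derived by swapping the image extension, and the result is uploaded with the SDK's `put_object` call. The `client`, `namespace`, and `bucket` parameters and the `FakeClient` class are introduced only so the sketch runs without OCI credentials. In the tutorial's script these would presumably be the module-level `object_storage` client and the namespace and output bucket values from the config.

```python
import json
import os

def save_output(result, file_name, client, namespace, bucket):
    """Upload `result` as JSON, named after the source image."""
    base_name, _ = os.path.splitext(file_name)
    object_name = base_name + ".json"
    body = json.dumps(result, ensure_ascii=False, indent=2).encode("utf-8")
    # Same positional order as the OCI SDK's ObjectStorageClient.put_object.
    client.put_object(namespace, bucket, object_name, body)
    return object_name

class FakeClient:
    """Test double that records uploads instead of calling OCI."""
    def __init__(self):
        self.uploads = {}

    def put_object(self, namespace_name, bucket_name, object_name, put_object_body):
        self.uploads[(bucket_name, object_name)] = put_object_body

fake = FakeClient()
saved_as = save_output({"file": "nf-001.png", "result": {}}, "nf-001.png",
                       fake, "your_namespace", "output-bucket")
print(saved_as)
```

Passing `ensure_ascii=False` keeps accented characters from Portuguese invoices readable in the stored file.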

- Main Loop - Monitor and Process:

  ```python
  def monitor_bucket(): ...
  ```

  Main routine that:

  - Monitors the input bucket every 30 seconds.
  - Detects new `.png`, `.jpg`, `.jpeg` files.
  - Runs OCR, LLM and upload in sequence.
  - Keeps track of already processed files in memory.
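`monitor_bucket` is also elided. The selection logic it describes can be sketched as a pure function. `select_new_images` is a hypothetical name, and the object names are passed in as plain strings so the example runs without OCI (in the real script they would come from listing the input bucket).

```python
IMAGE_EXTENSIONS = (".png", ".jpg", ".jpeg")

def select_new_images(object_names, processed_files):
    """Return image objects that have not been processed yet, in listing order."""
    return [
        name for name in object_names
        if name.lower().endswith(IMAGE_EXTENSIONS) and name not in processed_files
    ]

processed_files = set()  # in-memory record, lost on restart
listing = ["nf-001.png", "notes.txt", "NF-002.JPG"]

for name in select_new_images(listing, processed_files):
    # The real loop would run OCR, the LLM, and the upload here.
    processed_files.add(name)

# A second pass over the same listing finds nothing new.
print(select_new_images(listing, processed_files))
```

In a polling loop, a call such as `time.sleep(30)` between passes would give the 30-second interval described above.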

- Entry Point:

  ```python
  if __name__ == "__main__":
      monitor_bucket()
  ```

  This code starts the bucket watcher and begins processing invoices automatically.

Task 3: Run the Code

Run the code using the following command.

```
python main.py
```

Task 4: Test Suggestions

- Use real or dummy invoices with legible product lines and customer name.
- Upload multiple images to the input bucket in sequence to see automated processing.
- Log in to the OCI Console and navigate to Object Storage to verify the results in both buckets.

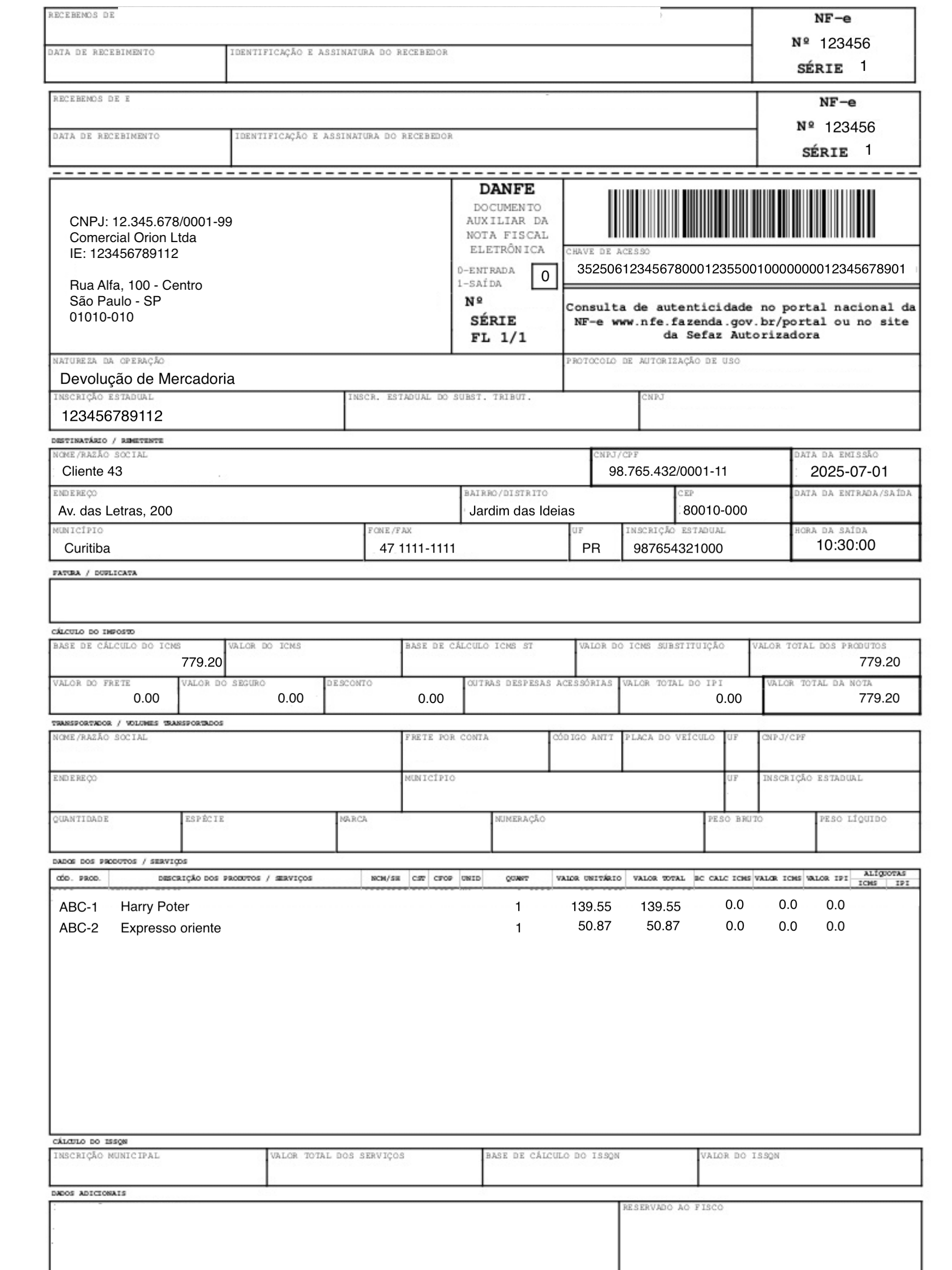

Note: The sample used in this tutorial is a Brazilian invoice, chosen to illustrate the complexity of the attributes and their layout, and how the prompt was written to handle this case.

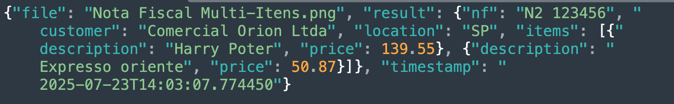

Task 5: View Expected Output

For each uploaded invoice image, check the output bucket for the processed file. A corresponding `.json` file is generated with structured content as shown in the following image.

Note:

- OCI Vision supports Portuguese OCR (`language="POR"` can be used instead of `"ENG"`).
- The LLM prompt can be adjusted to extract other fields like `CNPJ` (Brazilian company tax ID), `quantidade` (quantity), `data de emissão` (issue date), and so on.
- Consider persisting `processed_files` with a database or file to make the process fault-tolerant.
- This process can be used with the use case Build an AI Agent with Multi-Agent Communication Protocol Server for Invoice Resolution, where it supplies the pre-processed invoice image. The invoice is a return invoice issued by the company's customer, so the `customer` and `location` fields are captured from the invoice issuer.
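As one way to follow the suggestion about persisting `processed_files`, the set of processed names could be written to a local JSON file after each invoice. This is a sketch only: the `processed_files.json` path and both helper names are assumptions, and a database or an object in a bucket would serve equally well.

```python
import json
import os
import tempfile

def load_processed(path):
    """Return the saved set of processed object names, or an empty set."""
    if not os.path.exists(path):
        return set()
    with open(path, "r", encoding="utf-8") as f:
        return set(json.load(f))

def save_processed(path, processed_files):
    """Write the set via a temporary file so a crash cannot leave it half-written."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w", encoding="utf-8") as f:
        json.dump(sorted(processed_files), f)
    os.replace(tmp_path, path)  # atomic rename on the same filesystem

# Demonstration in a throwaway directory.
state_path = os.path.join(tempfile.mkdtemp(), "processed_files.json")
processed_files = load_processed(state_path)  # empty on the first run
processed_files.add("nf-001.png")
save_processed(state_path, processed_files)
print(load_processed(state_path))
```

Loading the set at startup and saving it after each successful upload means a restarted watcher skips invoices it has already handled.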

Acknowledgments

- Author - Cristiano Hoshikawa (Oracle LAD A-Team Solution Engineer)

G39696-01

Copyright ©2025, Oracle and/or its affiliates.