Introduction

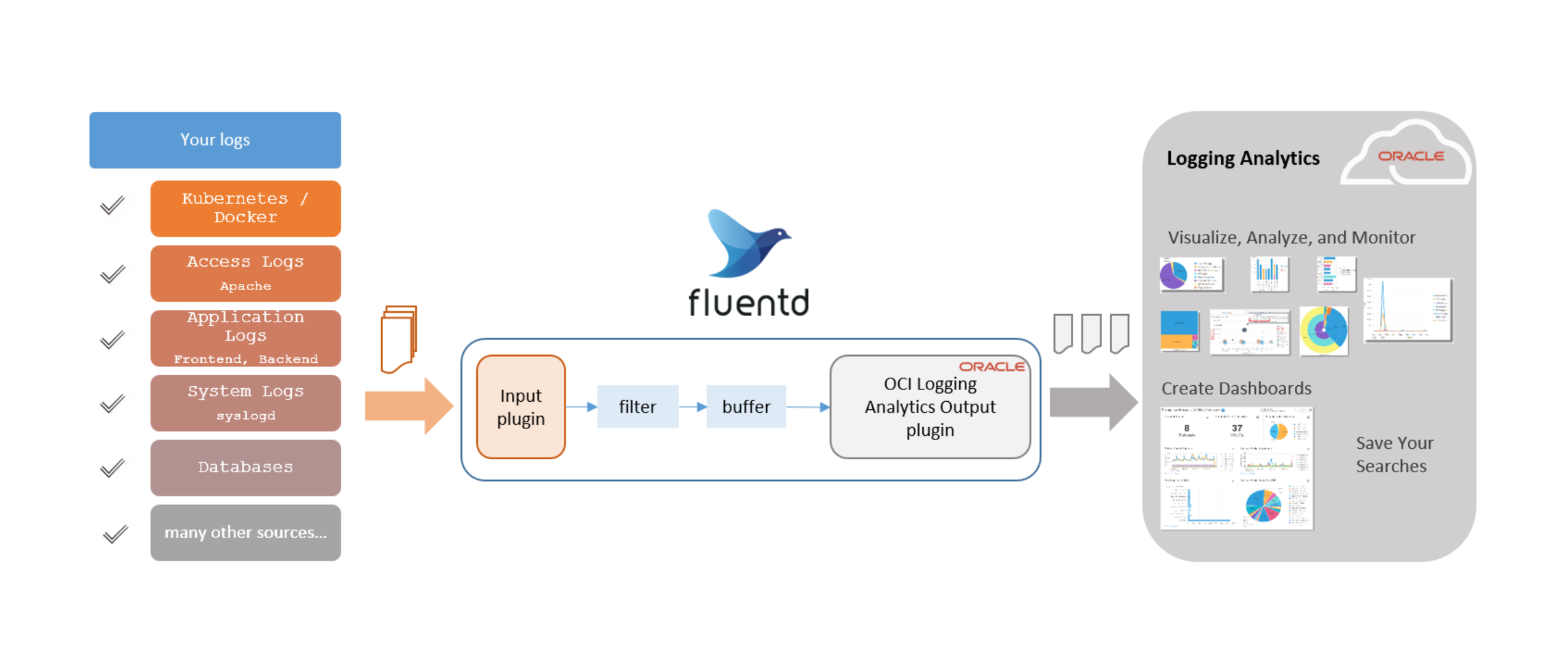

Use Fluentd, the open source data collector, to collect log data from your source. Install the OCI Log Analytics Output Plugin to route the collected log data to Oracle Cloud Log Analytics.

Note: Oracle recommends that you use Oracle Cloud Management Agents for the best experience of ingesting log data into Oracle Cloud Log Analytics. Use the OCI Log Analytics Output Plugin for Fluentd only if Management Agents are not an option for your use case.

This tutorial uses a Fluentd setup based on the td-agent rpm package installed on Oracle Linux. The required steps should be similar for other distributions of Fluentd.

Fluentd has components which work together to collect the log data from the input sources, transform the logs, and route the log data to the desired output. You can install and configure the output plugin for Fluentd to ingest logs from various sources into Oracle Cloud Log Analytics.


Objectives

- Learn how to install the OCI Log Analytics output plugin provided by Oracle to ingest logs from your source.

- Create the Fluentd configuration to establish the log collection from your source to Log Analytics.

Migrate OCI Log Analytics Output Plugin from version 1.x to 2.x

If you are a new user of OCI Log Analytics Output Plugin and are yet to download and install it, skip this section and go to Prerequisites. If you have installed the plugin version 1.x using the file fluent-plugin-oci-logging-analytics-1.0.0.gem, then note that there are changes that you might need to make to migrate to the plugin version 2.x.

| 1.x | 2.x |
|---|---|
| global_metadata | oci_la_global_metadata |
| metadata | oci_la_metadata |
| entityId | oci_la_entity_id |
| entityType | oci_la_entity_type |
| logSourceName | oci_la_log_source_name |
| logPath | oci_la_log_path |
| logGroupId | oci_la_log_group_id |

- Support is added for auto-purging of OCI Log Analytics Output Plugin logs.

- The parameter `plugin_log_rotation` is now deprecated. Instead, use the parameters `plugin_log_file_size` and `plugin_log_file_count` in conjunction to perform the same action.

- Install the plugin version 2.x by using the command available in the section Install the Output Plugin.
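As a rough illustration of the migration, the fragment below shows how a 1.x configuration might look after it is updated for 2.x. It is a sketch only: it assumes the renamed keys are set in a record_transformer filter, the tag oci.syslog and the uppercase placeholders are stand-ins, and the buffer section and other settings are omitted because they are assumed to stay unchanged.

```
<filter oci.syslog>
  @type record_transformer
  enable_ruby true
  <record>
    # 1.x names were: entityId, entityType, logSourceName, logGroupId, logPath
    oci_la_entity_id LOGGING_ANALYTICS_ENTITY_OCID
    oci_la_entity_type LOGGING_ANALYTICS_ENTITY_TYPE
    oci_la_log_source_name LOGGING_ANALYTICS_SOURCENAME
    oci_la_log_group_id LOGGING_ANALYTICS_LOGGROUP_OCID
    oci_la_log_path "${record['tailed_path']}"
  </record>
</filter>

<match pattern>
  @type oci-logging-analytics
  namespace <YOUR_OCI_TENANCY_NAMESPACE>
  # 1.x (deprecated): plugin_log_rotation daily
  # 2.x: rotate by size and keep a bounded number of rotated files
  plugin_log_file_size 1MB
  plugin_log_file_count 10
</match>
```

The size and count shown are the default values documented for these two parameters; adjust them to your retention needs.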

Perform Prerequisites

- Install Fluentd and Input Plugins: Before performing the following steps, ensure that you have installed Fluentd and the relevant input plugins for your input sources.

- Understand the Hierarchy of Key Resources: Entities, sources, and parsers are some of the key resources in Oracle Cloud Log Analytics which are used for setting up the log collection. Understand their interdependencies to perform the prerequisite tasks before you can start viewing the logs in the Log Explorer.

- Enable Log Analytics: See Log Analytics Documentation: Quick Start. Note your entity’s OCID and type, and the OCID of your Log Group, to use in the later section.

- Create Sources and Parsers: Identify existing Oracle-defined or user-defined sources and parsers that you must specify in the configuration to associate with your entities in the later section. Alternatively, you can create your own parsers and sources to suit your use case.

  See Log Analytics Documentation: Create a Parser and Log Analytics Documentation: Configure Sources.

- Multi Process Workers Feature: With more traffic, Fluentd tends to be more CPU bound. In such a case, consider using the multi-worker feature.

  See Fluentd Documentation: Multi Process Workers and Fluentd Documentation: Performance Tuning.

- Authentication: To connect to OCI, you must have an API signing key, which can be created from the OCI Console.
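The multi-worker feature mentioned in the prerequisites can be sketched as follows. This is a minimal example, assuming two workers and two tailed sources; the kafka log path and both pos_file locations are hypothetical. According to the Fluentd documentation, in_tail does not run on multiple workers automatically, so each tail source is pinned to a single worker with the `<worker N>` directive.

```
<system>
  workers 2
</system>

# Worker 0 tails the syslog files
<worker 0>
  <source>
    @type tail
    @id in_tail_syslog
    path /var/log/messages*
    pos_file /var/log/td-agent/syslog.pos    # hypothetical location
    tag oci.syslog
    <parse>
      @type none
    </parse>
  </source>
</worker>

# Worker 1 tails the kafka logs
<worker 1>
  <source>
    @type tail
    @id in_tail_kafka
    path /var/log/kafka/*.log                # hypothetical location
    pos_file /var/log/td-agent/kafka.pos     # hypothetical location
    tag oci.kafka
    <parse>
      @type none
    </parse>
  </source>
</worker>
```

Filter and match blocks defined outside the `<worker N>` directives apply to every worker, so each worker processes and uploads the events from its own sources.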

Create the Fluentd Configuration File

To configure Fluentd to route the log data to Oracle Cloud Log Analytics, edit the configuration file provided by Fluentd or td-agent and provide the information pertaining to Oracle Cloud Log Analytics and other customizations.

The Fluentd output plugin configuration will be of the following format:

    <match pattern>
      @type oci-logging-analytics
      namespace <YOUR_OCI_TENANCY_NAMESPACE>

      # Auth config file details
      config_file_location ~/.oci/config
      profile_name DEFAULT

      # When there are no credentials for the proxy
      http_proxy "#{ENV['HTTP_PROXY']}"

      # To provide proxy credentials
      proxy_ip <IP>
      proxy_port <port>
      proxy_username <user>
      proxy_password <password>

      # Configuration for plugin (oci-logging-analytics) generated logs
      plugin_log_location "#{ENV['FLUENT_OCI_LOG_LOCATION'] || '/var/log'}"
      plugin_log_level "#{ENV['FLUENT_OCI_LOG_LEVEL'] || 'info'}"
      # plugin_log_rotation is DEPRECATED; use plugin_log_file_size and plugin_log_file_count instead
      plugin_log_rotation "#{ENV['FLUENT_OCI_LOG_ROTATION'] || 'daily'}"
      # Separate, illustrative environment variable names so that size and count can be set independently
      plugin_log_file_size "#{ENV['FLUENT_OCI_LOG_FILE_SIZE'] || '1MB'}"
      plugin_log_file_count "#{ENV['FLUENT_OCI_LOG_FILE_COUNT'] || '10'}"

      # Buffer Configuration
      <buffer>
        @type file
        path "#{ENV['FLUENT_OCI_BUFFER_PATH'] || '/var/log'}"
        flush_thread_count "#{ENV['FLUENT_OCI_BUFFER_FLUSH_THREAD_COUNT'] || '10'}"
        retry_wait "#{ENV['FLUENT_OCI_BUFFER_RETRY_WAIT'] || '2'}" # seconds
        retry_max_times "#{ENV['FLUENT_OCI_BUFFER_RETRY_MAX_TIMES'] || '10'}"
        retry_exponential_backoff_base "#{ENV['FLUENT_OCI_BUFFER_RETRY_EXPONENTIAL_BACKOFF_BASE'] || '2'}"
        retry_forever true
        overflow_action block
        disable_chunk_backup true
      </buffer>
    </match>

It is recommended that you configure a secondary plugin, which Fluentd uses to dump the backup data when the output plugin keeps failing to write the buffer chunks and exceeds the timeout threshold for retries. Also, for unrecoverable errors, Fluentd aborts the chunk immediately and moves it to the secondary or the backup directory. Refer to Fluentd Documentation: Secondary Output.
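A secondary output could be added inside the match block roughly as shown below. This is a sketch that uses Fluentd's built-in secondary_file plugin; the dump directory is a hypothetical path, and the rest of the match block is trimmed to the essentials.

```
<match pattern>
  @type oci-logging-analytics
  namespace <YOUR_OCI_TENANCY_NAMESPACE>

  <buffer>
    @type file
    path /var/log
  </buffer>

  # Chunks that cannot be delivered are written to local files here
  <secondary>
    @type secondary_file
    directory /var/log/td-agent/oci-la-failed   # hypothetical location
    basename dump.${chunk_id}
  </secondary>
</match>
```

If retry_forever is set to true, retries never time out, so the secondary would likely receive only the chunks that hit unrecoverable errors.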

Output Plugin Configuration Parameters

Provide suitable values to the following parameters in the Fluentd configuration file:

| Configuration parameter | Description |
|---|---|
| namespace (Mandatory parameter) | OCI Tenancy Namespace to which the collected log data is uploaded. |
| config_file_location | The location of the configuration file containing OCI authentication details. |
| profile_name | The OCI config profile name to use from the configuration file. |
| http_proxy | Proxy with no credentials. Example: www.proxy.com:80 |
| proxy_ip | Proxy IP details when credentials are required. Example: www.proxy.com |
| proxy_port | Proxy port details when credentials are required. Example: 80 |
| proxy_username | Proxy username details when credentials are required. |
| proxy_password | Proxy password details when credentials are required. |
| plugin_log_location | File path for the output plugin to write its own logs. Make sure that the path exists and is accessible. Default value: Working directory. |
| plugin_log_level | Output plugin logging level: DEBUG < INFO < WARN < ERROR < FATAL < UNKNOWN. Default value: INFO. |
| plugin_log_rotation | (DEPRECATED) Output plugin log file rotation frequency: daily, weekly, or monthly. Default value: daily. |
| plugin_log_file_size | The maximum log file size at which the log file is rotated (for example, 1KB or 1MB). Default value: 1MB. |
| plugin_log_file_count | The number of archived/rotated log files to keep (greater than 0). Default value: 10. |

If you don’t specify the parameters config_file_location and profile_name for the OCI Compute nodes, then instance_principal based authentication is used.

Buffer Configuration Parameters

In the same configuration file that you edited in the previous section, modify the buffer section and provide the following mandatory information:

| Mandatory Parameter | Description |
|---|---|
| @type | This specifies which plugin to use as the backend. Enter file. |
| path | The path where buffer files are stored. Make sure that the path exists and is accessible. |

The following optional parameters can be included in the buffer block:

| Optional Parameter | Default Value | Description |
|---|---|---|
| flush_thread_count | 1 | The number of threads to flush/write chunks in parallel. |
| retry_wait | 1s | Wait in seconds before the next retry to flush, or the constant factor of exponential backoff. |
| retry_max_times | none | The maximum number of times to retry flushing. This is mandatory only when the retry_forever field is false. |
| retry_exponential_backoff_base | 2 | The base number of exponential backoff for retries. |
| retry_forever | false | If true, the plugin ignores the retry_max_times option and retries flushing forever. |
| overflow_action | throw_exception | Possible values: throw_exception, block, drop_oldest_chunk. Recommended value: block. |
| disable_chunk_backup | false | When set to true, unrecoverable chunks are discarded instead of being stored in the backup directory. |
| chunk_limit_size | 8MB | The maximum size of each chunk. Events are written into a chunk until it reaches this size. Note: Irrespective of the value specified, the Log Analytics output plugin currently defaults the value to 1MB. |
| total_limit_size | 64GB (for file) | Once the total size of the stored buffer reaches this threshold, all append operations fail with an error (and data is lost). |
| flush_interval | 60s | The frequency at which chunks are flushed to the output plugin. |

For details of the possible values of the parameters, see Fluentd Documentation: Buffer Plugins.

Verify the Format of the Incoming Log Events

The incoming log events must be in a specific format so that the Fluentd plugin provided by Oracle can process the log data, chunk them, and transfer them to Oracle Cloud Log Analytics.

View the example configuration that can be used for monitoring syslog, apache, and kafka log files at Example Input Configuration.

Source / Input Plugin Configuration

Example source configuration for syslog logs:

    <source>
      @type tail
      @id in_tail_syslog
      multiline_flush_interval 5s
      path /var/log/messages*
      pos_file /var/log/messages*.log.pos
      read_from_head true
      path_key tailed_path
      tag oci.syslog
      <parse>
        @type json
      </parse>
    </source>

The following parameters are mandatory to define the source block:

- @type: The input plugin type. Use tail for consuming events from a local file. Other possible values include http and forward.

- path: The path to the source files.

- tag: The tag that will be used by Oracle’s Fluentd plugin to filter the log events that must be consumed by Log Analytics. Make sure to use the prefix oci, for example, `oci.syslog`.

- Parse directive: It is recommended that you don’t define the parse directive in the configuration file. Retain the value `<parse> @type none </parse>`. Instead, you can use the Oracle-defined Parsers and Sources provided by Log Analytics or create your own parsers and sources in Log Analytics. For logs wrapped in a json wrapper, use the parse directive `<parse> @type json </parse>`. Override the message field in the record_transformer filter with the value `${record["log"]}`.

  Note:

  - It’s recommended that you don’t use any Fluentd parsers. Instead, send the logs to Log Analytics in the original form. The parse directive must be of the form: `<parse> @type none </parse>`

  - However, in case of multiline log entries, use the multiline parser type to send multiple lines of a log as a single record. For example: `<parse> @type multiline format_firstline /^\S+\s+\d{1,2}\s+\d{1,2}:\d{1,2}:\d{1,2}\s+/ format1 /^(?<message>.*)/ </parse>`

  - For original logs which are wrapped by a json wrapper where one of the keys in the key-value pairs is log, we recommend that you use the following parse directive: `<parse> @type json </parse>`

    And, override the `message` field in the record_transformer filter with `message ${record["log"]}`. For example, in the following filter block for kafka logs, the log content is stored in the value of the key log which is wrapped in a json.

    ```
    <filter oci.kafka>
      @type record_transformer
      enable_ruby true
      <record>
        oci_la_metadata KEY_VALUE_PAIRS
        oci_la_entity_id LOGGING_ANALYTICS_ENTITY_OCID # If same across sources. Else keep this in individual filters
        oci_la_entity_type LOGGING_ANALYTICS_ENTITY_TYPE # If same across sources. Else keep this in individual filters
        oci_la_log_source_name LOGGING_ANALYTICS_SOURCENAME
        oci_la_log_group_id LOGGING_ANALYTICS_LOGGROUP_OCID
        oci_la_log_path "${record['tailed_path']}"
        message ${record["log"]} # Will assign the 'log' key value from json wrapped message to 'message' field
        tag ${tag}
      </record>
    </filter>
    ```

The following optional parameters can be included in the source block:

- multiline_flush_interval: Set this value only for multiline logs to ensure that all the logs are consumed by Log Analytics. If the value is not set for multiline logs, then Fluentd will remain in the waiting mode for the next batch of records. By default, this parameter is disabled.

- pos_file: Use this parameter to specify the file in which Fluentd maintains the record of the position it last read.

For information on other parameters, see Fluentd Documentation: tail.
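For multiline logs, the optional parameters above could be combined with the multiline parser from the earlier note as in the following sketch. The path, pos_file, and tag are copied from the syslog example; the 5s flush interval is only an illustrative value.

```
<source>
  @type tail
  @id in_tail_syslog
  path /var/log/messages*
  pos_file /var/log/messages*.log.pos
  read_from_head true
  path_key tailed_path
  tag oci.syslog
  # Flush a buffered multiline record if no new first line arrives within 5 seconds
  multiline_flush_interval 5s
  <parse>
    @type multiline
    # A new record starts with a syslog-style timestamp such as "Jan  5 12:34:56 "
    format_firstline /^\S+\s+\d{1,2}\s+\d{1,2}:\d{1,2}:\d{1,2}\s+/
    # Keep the whole record, continuation lines included, in the message field
    format1 /^(?<message>.*)/
  </parse>
</source>
```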

Filter Configuration

Use these parameters to list the Log Analytics resources that must be used to process your logs.

To ensure that the logs from your input source can be processed by the output plugin provided by Oracle, verify that the input log events conform to the prescribed format, for example, by configuring the record_transformer filter plugin to alter the format accordingly.

Tip: Note that configuring the record_transformer filter plugin is only one of the ways of including the required parameters in the incoming events. Refer to Fluentd Documentation for other methods.

Example filter configuration:

    <filter oci.kafka>
      @type record_transformer
      enable_ruby true
      <record>
        oci_la_metadata KEY_VALUE_PAIRS
        oci_la_entity_id LOGGING_ANALYTICS_ENTITY_OCID # If same across sources. Else keep this in individual filters
        oci_la_entity_type LOGGING_ANALYTICS_ENTITY_TYPE # If same across sources. Else keep this in individual filters
        oci_la_log_source_name LOGGING_ANALYTICS_SOURCENAME
        oci_la_log_group_id LOGGING_ANALYTICS_LOGGROUP_OCID
        oci_la_log_path "${record['tailed_path']}"
        message ${record["log"]} # Will assign the 'log' key value from json wrapped message to 'message' field
        tag ${tag}
      </record>
    </filter>

Provide the following mandatory information in the filter block:

- `<filter oci.kafka>`: The parameter to define a filter block for the tag specified in the source block.

- @type record_transformer: The record transformer plugin transforms the original log record to a form that can be consumed by the OCI Log Analytics output plugin.

- enable_ruby: This enables the use of Ruby expressions inside `${...}`.

- oci_la_entity_id: The OCID of the Log Analytics Entity that you created earlier in the prerequisite task to map your host.

- oci_la_entity_type: The entity type of the Log Analytics Entity that you created earlier in the prerequisite task.

- oci_la_log_source_name: The Log Analytics Source that must be used to process the log records.

- oci_la_log_path: Specify the original location of the log files. If the value of `oci_la_log_path` is not provided or is invalid, then:

  - if tag is available, then it is used as oci_la_log_path

  - if tag is not available, then oci_la_log_path is set to UNDEFINED

- oci_la_log_group_id: The OCID of the Log Analytics Log Group where the logs must be stored.

You can optionally provide the following additional parameters in the filter block:

- `<filter oci.**>`: Use this filter to provide the configuration information which is applicable across all sources. If you use this filter, then make sure that it is first in the order of execution among the filters. If the same key is specified in both the global filter and the individual source filter, then the source level filter value will override the global filter. It’s recommended that you use `oci` as prefix for all the tags.

- oci_la_global_metadata: Use this parameter to specify additional metadata along with original log content to Log Analytics in the format `'key1': 'value1', 'key2': 'value2'`. Here, Key is the Log Analytics Field that must already be defined before specifying it here. The global metadata is applied to all the log files.

- oci_la_metadata: Use this parameter to define additional metadata along with original log content to Log Analytics in the format `'key1': 'value1', 'key2': 'value2'`. Here, Key is the Log Analytics Field that must already be defined before specifying it here.

- tag: Use this parameter to attach a tag to the message for internal use. Specify in the format `tag ${tag}`.

- message ${record["log"]}: Include this parameter for logs which are wrapped in a json wrapper where the original log message is the value of the log attribute inside the json.
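The relationship between a global filter and a source-level filter can be sketched as follows. The uppercase values are placeholders, and the split of keys between the two filters is only one possible arrangement, assuming the entity and log group are shared by all sources.

```
# Global filter: matches every tag that starts with "oci" and must come first
<filter oci.**>
  @type record_transformer
  enable_ruby true
  <record>
    oci_la_global_metadata KEY_VALUE_PAIRS
    oci_la_entity_id LOGGING_ANALYTICS_ENTITY_OCID
    oci_la_entity_type LOGGING_ANALYTICS_ENTITY_TYPE
    oci_la_log_group_id LOGGING_ANALYTICS_LOGGROUP_OCID
  </record>
</filter>

# Source-level filter: runs after the global filter for kafka events only
<filter oci.kafka>
  @type record_transformer
  enable_ruby true
  <record>
    oci_la_log_source_name LOGGING_ANALYTICS_SOURCENAME
    oci_la_log_path "${record['tailed_path']}"
    message ${record["log"]}
    tag ${tag}
  </record>
</filter>
```

Because record_transformer overwrites a key that already exists in the record, any key repeated in the oci.kafka filter would replace the value set by the global filter.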

Example configurations you can use for monitoring the following logs:

Install the Output Plugin

Use the gem file provided by Oracle for the installation of the OCI Log Analytics Output Plugin. The steps in this section are for the Fluentd setup based on the td-agent rpm package installed on Oracle Linux.

- Install the output plugin by running the following command:

      gem install fluent-plugin-oci-logging-analytics

  For more information, see Fluentd Output plugin to ship logs/events to OCI Log Analytics on RubyGems: https://rubygems.org/gems/fluent-plugin-oci-logging-analytics.

- Systemd starts td-agent with the td-agent user. Give the td-agent user access to the OCI files and folders. To run td-agent as a service, run the `chown` or `chgrp` command for the OCI Log Analytics output plugin folders and the .oci pem file, for example, `chown td-agent [FILE]`.

- To start collecting logs in Oracle Cloud Log Analytics, run td-agent:

      TZ=utc /etc/init.d/td-agent start

  You can use the log file `/var/log/td-agent/td-agent.log` to debug if you encounter issues during log collection or while setting up.

  To stop td-agent at any point, run the following command:

      TZ=utc /etc/init.d/td-agent stop

Start Viewing the Logs in Log Analytics

Go to the Log Explorer and use the Visualize panel of Oracle Cloud Log Analytics to view the log data in a form that helps you better understand and analyze it. Based on what you want to achieve with your data set, you can select the visualization type that best suits your application.

After you create and execute a search query, you can save and share your log searches as a widget for further reuse.

You can create custom dashboards on the Dashboards page by adding the Oracle-defined widgets or the custom widgets you’ve created.

Monitor Fluentd Using Prometheus

You can optionally monitor Fluentd by using Prometheus. For steps to expose the below metrics and others emitted by Fluentd to Prometheus, see Fluentd Documentation: Monitoring by Prometheus. If you want to monitor only the core Fluentd and these metrics, then skip the steps Step 1: Counting Incoming Records by Prometheus Filter Plugin and Step 2: Counting Outgoing Records by Prometheus Output Plugin in the referred Fluentd documentation.

The Fluentd plugin emits the following metrics in Prometheus format, which provide insights into the data collected and processed by the plugin:

Metric Name: oci_la_fluentd_output_plugin_records_received

labels: [:tag,:oci_la_log_group_id,:oci_la_log_source_name,:oci_la_log_set]

Description: Number of records received by the OCI Log Analytics Fluentd output plugin.

Type : Gauge

Metric Name: oci_la_fluentd_output_plugin_records_valid

labels: [:tag,:oci_la_log_group_id,:oci_la_log_source_name,:oci_la_log_set]

Description: Number of valid records received by the OCI Log Analytics Fluentd output plugin.

Type : Gauge

Metric Name: oci_la_fluentd_output_plugin_records_invalid

labels: [:tag,:oci_la_log_group_id,:oci_la_log_source_name,:oci_la_log_set,:reason]

Description: Number of invalid records received by the OCI Log Analytics Fluentd output plugin.

Type : Gauge

Metric Name: oci_la_fluentd_output_plugin_records_post_error

labels: [:tag,:oci_la_log_group_id,:oci_la_log_source_name,:oci_la_log_set,:error_code, :reason]

Description: Number of records failed posting to OCI Log Analytics by the Fluentd output plugin.

Type : Gauge

Metric Name: oci_la_fluentd_output_plugin_records_post_success

labels: [:tag,:oci_la_log_group_id,:oci_la_log_source_name,:oci_la_log_set]

Description: Number of records posted by the OCI Log Analytics Fluentd output plugin.

Type : Gauge

Metric Name: oci_la_fluentd_output_plugin_chunk_time_to_receive

labels: [:tag]

Description: Average time taken by Fluentd to deliver the collected records from Input plugin to OCI Log Analytics output plugin.

Type : Histogram

Metric Name: oci_la_fluentd_output_plugin_chunk_time_to_post

labels: [:oci_la_log_group_id]

Description: Average time taken for posting the received records to OCI Log Analytics by the Fluentd output plugin.

Type : Histogram
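A minimal sketch of the Fluentd side of this setup is shown below, assuming the fluent-plugin-prometheus gem is installed as described in the referenced Fluentd documentation. The bind address, port 24231, and metrics path are the values commonly shown in that documentation and can be changed; nothing in this fragment is specific to the OCI plugin.

```
# Expose the metrics over HTTP so that Prometheus can scrape them
<source>
  @type prometheus
  bind 0.0.0.0
  port 24231
  metrics_path /metrics
</source>

# Collect metrics about output plugins, such as buffer queue length and retry counts
<source>
  @type prometheus_output_monitor
  interval 10
</source>
```

With a configuration along these lines, a Prometheus scrape job pointed at port 24231 and the /metrics path of the Fluentd host should be able to collect the metrics listed above.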

Ingest Logs to OCI Log Analytics Using Fluentd

F46550-06

July 2025