Note:

- This tutorial is available in an Oracle-provided free lab environment.

- It uses example values for Oracle Cloud Infrastructure credentials, tenancy, and compartments. When completing your lab, substitute these values with ones specific to your cloud environment.

Scale a Kubernetes Cluster on Oracle Cloud Native Environment

Introduction

This tutorial demonstrates how to scale an existing Kubernetes cluster in Oracle Cloud Native Environment.

Scaling up a Kubernetes cluster means adding nodes; likewise, scaling down means removing nodes. Nodes can be either control plane or worker nodes. Oracle recommends against scaling the cluster up and down at the same time. Instead, scale up and scale down in two separate commands.

We recommend scaling the Kubernetes control plane nodes in odd numbers to avoid split-brain scenarios and maintain quorum. For example, 3, 5, or 7 control plane nodes help ensure the reliability of the cluster. Worker nodes do not take part in quorum, so their count does not need to be odd.
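The odd-number recommendation follows from the majority rule used by etcd, the key-value store behind the Kubernetes API: a cluster of n members needs floor(n/2) + 1 of them to agree. The following shell sketch, with hypothetical function names, prints the quorum size and the number of member failures each cluster size tolerates, assuming one etcd member per control plane node.

```shell
#!/bin/sh
# Majority quorum for an n-member etcd cluster: floor(n/2) + 1.
# (Shell arithmetic uses integer division, so $1 / 2 is already floored.)
quorum_size() {
    echo $(( $1 / 2 + 1 ))
}

# Number of members that can fail while the remaining ones still form a quorum.
fault_tolerance() {
    echo $(( $1 - $(quorum_size "$1") ))
}

for n in 1 2 3 4 5 6 7; do
    printf '%d members: quorum %d, tolerates %d failure(s)\n' \
        "$n" "$(quorum_size "$n")" "$(fault_tolerance "$n")"
done
```

Going from 3 to 4 members raises the quorum from 2 to 3 without tolerating any additional failure, which is why even-sized control planes add nodes but no resilience.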

We start with an existing Highly Available Kubernetes cluster running on Oracle Cloud Native Environment that consists of the following:

- 1 Operator Node

- 3 Control Plane Nodes

- 5 Worker Nodes

The deployment for this tutorial builds upon these labs:

- Deploy Oracle Cloud Native Environment

- Deploy an External Load Balancer with Oracle Cloud Native Environment

- Use OCI Cloud Controller Manager on Oracle Cloud Native Environment

Objectives

At the end of this tutorial, you should be able to do the following:

- Add two new control plane nodes and two new worker nodes to a cluster

- Scale down the cluster by removing the two new control plane nodes

Prerequisites

Note: These prerequisites are provided as the starting point and automatically deploy when using the free lab environment.

- A Highly Available Kubernetes cluster running on Oracle Cloud Native Environment

- 4 additional Oracle Linux instances to use as:

  - 2 Kubernetes control plane nodes

  - 2 Kubernetes worker nodes

- Access to a Load Balancer such as OCI Load Balancer

- The additional Oracle Linux instances need the following:

  - The same OS and patch level as the original cluster

  - The completion of the prerequisite steps to install Oracle Cloud Native Environment

  - The completion of the steps to set up the Kubernetes control plane and worker nodes

Set Up Lab Environment

Note: When using the free lab environment, see Oracle Linux Lab Basics for connection and other usage instructions.

Information: The free lab environment deploys a fully functional Oracle Cloud Native Environment on the provided nodes. This deployment takes approximately 60-65 minutes to finish after launch. Therefore, you might want to step away while this runs and then return to complete the lab.

- Open a terminal and connect via ssh to the ocne-operator system.

ssh oracle@<ip_address_of_operator_node>

- Verify the deployment of the Kubernetes and OCI-CCM modules.

olcnectl module instances --config-file myenvironment.yaml

Example Output:

[oracle@ocne-operator ~]$ olcnectl module instances --config-file myenvironment.yaml
INSTANCE                                            MODULE      STATE
mycluster                                           kubernetes  installed
myoci                                               oci-ccm     installed
ocne-control-01.lv.vcn03957132.oraclevcn.com:8090   node        installed
ocne-control-02.lv.vcn03957132.oraclevcn.com:8090   node        installed
ocne-control-03.lv.vcn03957132.oraclevcn.com:8090   node        installed
ocne-worker-01.lv.vcn03957132.oraclevcn.com:8090    node        installed
ocne-worker-02.lv.vcn03957132.oraclevcn.com:8090    node        installed
ocne-worker-03.lv.vcn03957132.oraclevcn.com:8090    node        installed
ocne-worker-04.lv.vcn03957132.oraclevcn.com:8090    node        installed
ocne-worker-05.lv.vcn03957132.oraclevcn.com:8090    node        installed

- Verify that kubectl works.

ssh ocne-control-01 "kubectl get nodes"

Example Output:

[oracle@ocne-operator ~]$ ssh ocne-control-01 "kubectl get nodes"
NAME              STATUS   ROLES           AGE   VERSION
ocne-control-01   Ready    control-plane   35m   v1.28.3+3.el8
ocne-control-02   Ready    control-plane   34m   v1.28.3+3.el8
ocne-control-03   Ready    control-plane   32m   v1.28.3+3.el8
ocne-worker-01    Ready    <none>          33m   v1.28.3+3.el8
ocne-worker-02    Ready    <none>          33m   v1.28.3+3.el8
ocne-worker-03    Ready    <none>          31m   v1.28.3+3.el8
ocne-worker-04    Ready    <none>          35m   v1.28.3+3.el8
ocne-worker-05    Ready    <none>          33m   v1.28.3+3.el8

Set up the New Kubernetes Nodes

Note: The free lab environment completes the prerequisite steps during the initial deployment.

When scaling up, any new nodes require all of the prerequisites listed in this tutorial’s Prerequisites section.

In the free lab environment, we use the nodes ocne-control-04 and ocne-control-05 as the new control plane nodes, and the nodes ocne-worker-06 and ocne-worker-07 as the new worker nodes. Because the free lab environment handles the prerequisites and enables the Oracle Cloud Native Environment Platform Agent service, we can proceed to generate certificates.

Create X.509 Private CA Certificates

The free lab environment uses X.509 Private CA Certificates to secure node communication. Other methods exist to manage and deploy the certificates, such as using the HashiCorp Vault secrets manager or certificates signed by a trusted Certificate Authority (CA). These other methods are outside the scope of this tutorial.

- Create a list of new nodes.

VAR1=$(hostname -d)
for NODE in 'ocne-control-04' 'ocne-control-05' 'ocne-worker-06' 'ocne-worker-07'; do
    VAR2+="${NODE}.$VAR1,"
done
VAR2=${VAR2%,}

The provided bash script gets the domain name of the operator node and creates a comma-separated list of the nodes to add to the cluster during the scale-up procedure.

- Generate and distribute a set of certificates for the new nodes using the existing private CA.

Use the --byo-ca-cert option to specify the location of the existing CA Certificate and the --byo-ca-key option to specify the location of the existing CA Key. Use the --nodes option and provide the FQDN of the new control plane and worker nodes.

olcnectl certificates distribute \
    --cert-dir $HOME/certificates \
    --byo-ca-cert $HOME/certificates/ca/ca.cert \
    --byo-ca-key $HOME/certificates/ca/ca.key \
    --nodes $VAR2
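Before running olcnectl certificates distribute, it can help to check both of its inputs. The following sketch, with the hypothetical helper names build_node_list and check_ca_files, rebuilds the comma-separated node list from a domain passed as an argument (instead of calling hostname -d) and confirms that the CA files passed to --byo-ca-cert and --byo-ca-key exist. Unlike the VAR2+= loop, build_node_list starts from an empty list on every call, so running it twice in the same shell does not append duplicate entries.

```shell
#!/bin/sh
# Pre-flight checks before distributing certificates (hypothetical helpers).

# Join short host names with a domain into a comma-separated FQDN list.
# Usage: build_node_list DOMAIN HOST...
build_node_list() {
    domain=$1
    shift
    list=""
    for node in "$@"; do
        list="${list}${node}.${domain},"
    done
    # Drop the trailing comma, like the ${VAR2%,} expansion in the tutorial.
    printf '%s\n' "${list%,}"
}

# Check that the CA certificate and key reused by olcnectl exist and are readable.
# Usage: check_ca_files [CERT_DIR]   (defaults to $HOME/certificates)
check_ca_files() {
    dir=${1:-$HOME/certificates}
    status=0
    for f in "$dir/ca/ca.cert" "$dir/ca/ca.key"; do
        if [ -r "$f" ]; then
            echo "found: $f"
        else
            echo "missing or unreadable: $f" >&2
            status=1
        fi
    done
    return "$status"
}

# Example usage on the operator node:
#   VAR2=$(build_node_list "$(hostname -d)" \
#       ocne-control-04 ocne-control-05 ocne-worker-06 ocne-worker-07)
#   check_ca_files && olcnectl certificates distribute \
#       --cert-dir "$HOME/certificates" \
#       --byo-ca-cert "$HOME/certificates/ca/ca.cert" \
#       --byo-ca-key "$HOME/certificates/ca/ca.key" \
#       --nodes "$VAR2"
```

Chaining check_ca_files with && means the distribute command only runs when both CA files are present.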

Configure the Platform Agent to Use the Certificates

Configure the Platform Agent on each new node to use the certificates copied over in the previous step. We accomplish this task from the operator node by running the command over ssh.

- Configure the Platform Agent on each additional control plane and worker node.

for host in ocne-control-04 ocne-control-05 ocne-worker-06 ocne-worker-07
do
ssh $host /bin/bash <<EOF
  sudo /etc/olcne/bootstrap-olcne.sh --secret-manager-type file --olcne-component agent
EOF
done

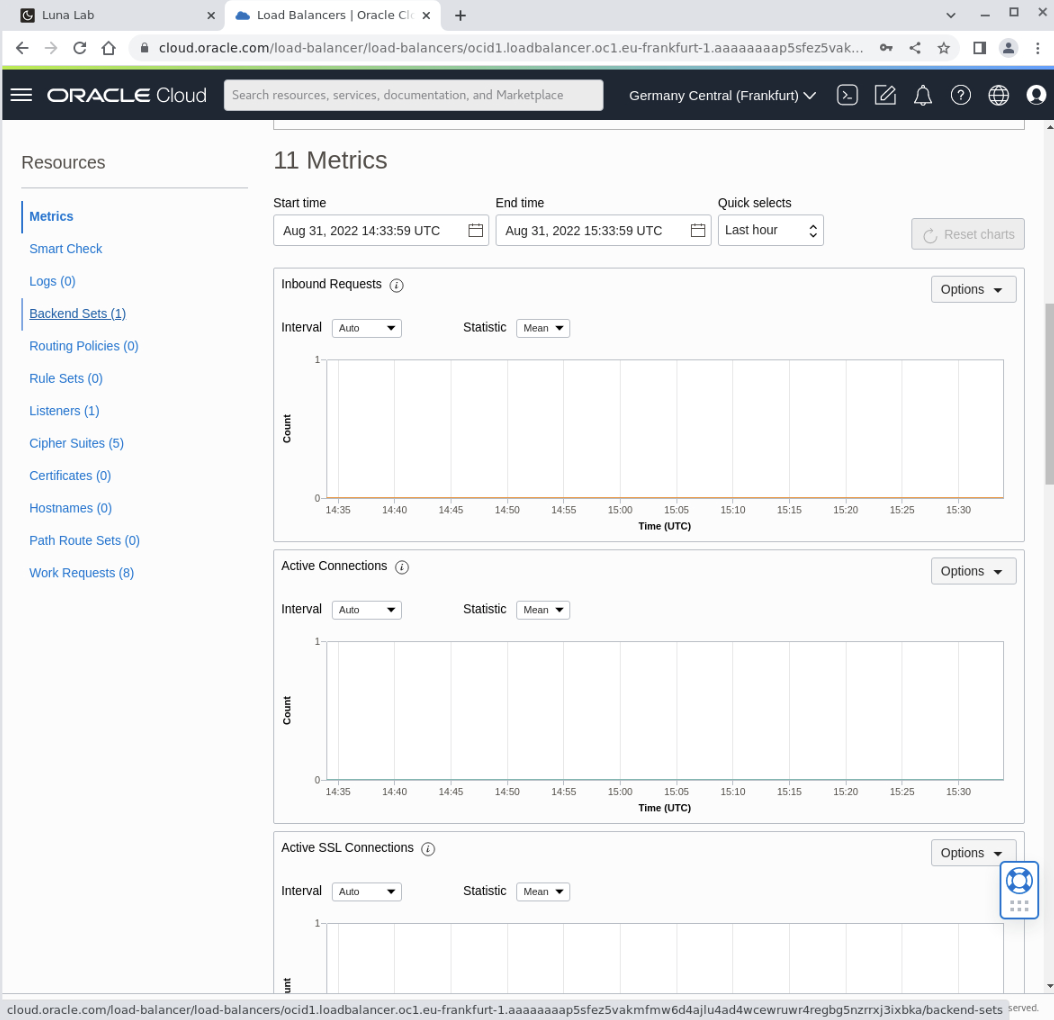

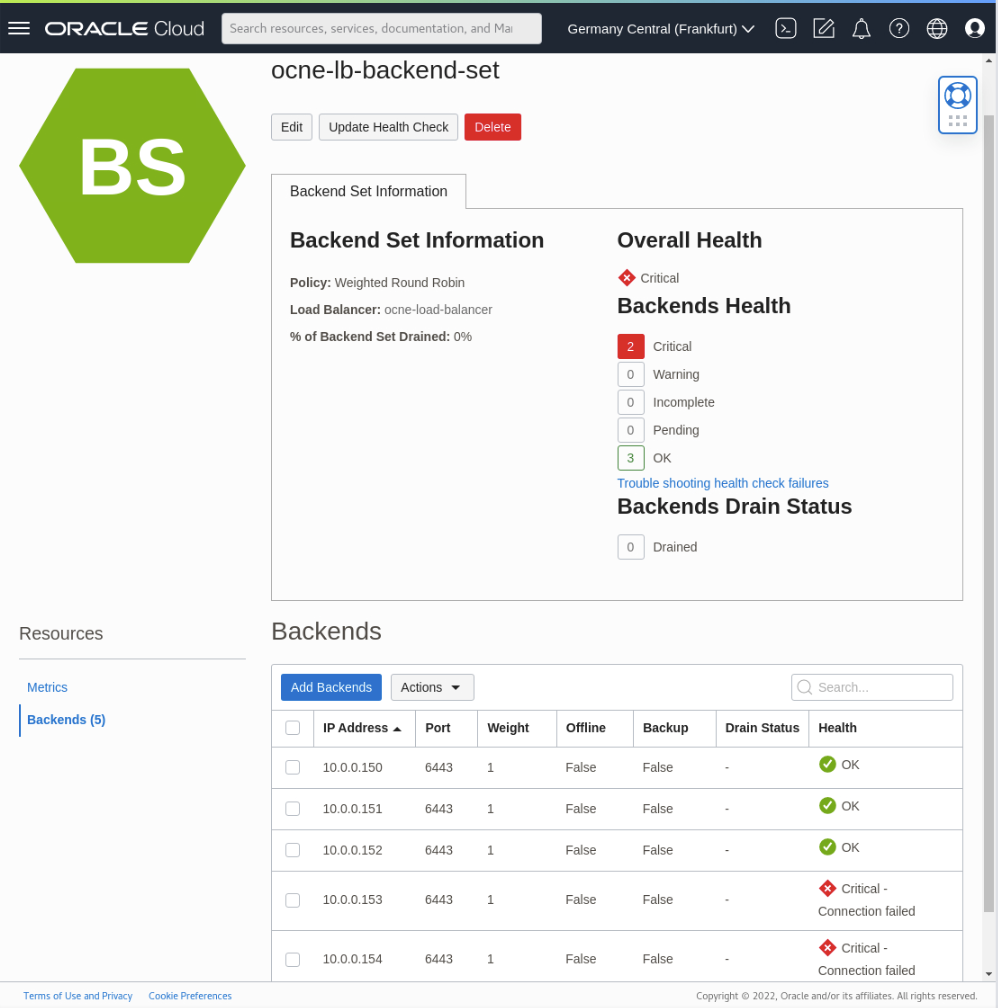
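To confirm that the bootstrap step left the Platform Agent running on each new node, you can query systemd over ssh. This sketch assumes the agent runs as the olcne-agent.service systemd unit; check_agents and the SSH_CMD override (used only to exercise the function without real hosts) are hypothetical.

```shell
#!/bin/sh
# Report whether the Platform Agent service is active on each node.
# Assumes the agent runs as the olcne-agent.service systemd unit.
# SSH_CMD defaults to ssh; set it to another command (or a shell function)
# to exercise the function without real hosts.
# Usage: check_agents HOST...
check_agents() {
    failed=0
    for host in "$@"; do
        # systemctl is-active prints the unit state (active, inactive, failed, ...).
        state=$(${SSH_CMD:-ssh} "$host" systemctl is-active olcne-agent.service 2>/dev/null)
        echo "$host: ${state:-unknown}"
        [ "$state" = "active" ] || failed=1
    done
    return "$failed"
}

# Example usage on the operator node:
#   check_agents ocne-control-04 ocne-control-05 ocne-worker-06 ocne-worker-07
```

SSH_CMD is expanded unquoted on purpose, so a value such as "ssh -o BatchMode=yes" passes its options through to ssh.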

Access the OCI Load Balancer and View the Backends

Because a Kubernetes control plane with more than one node requires a Load Balancer, it is useful to view the configuration that the free lab environment sets up automatically. The following steps show the three control plane nodes created with the lab in a Healthy status and the two control plane nodes added in the upcoming steps in a Critical status.

-

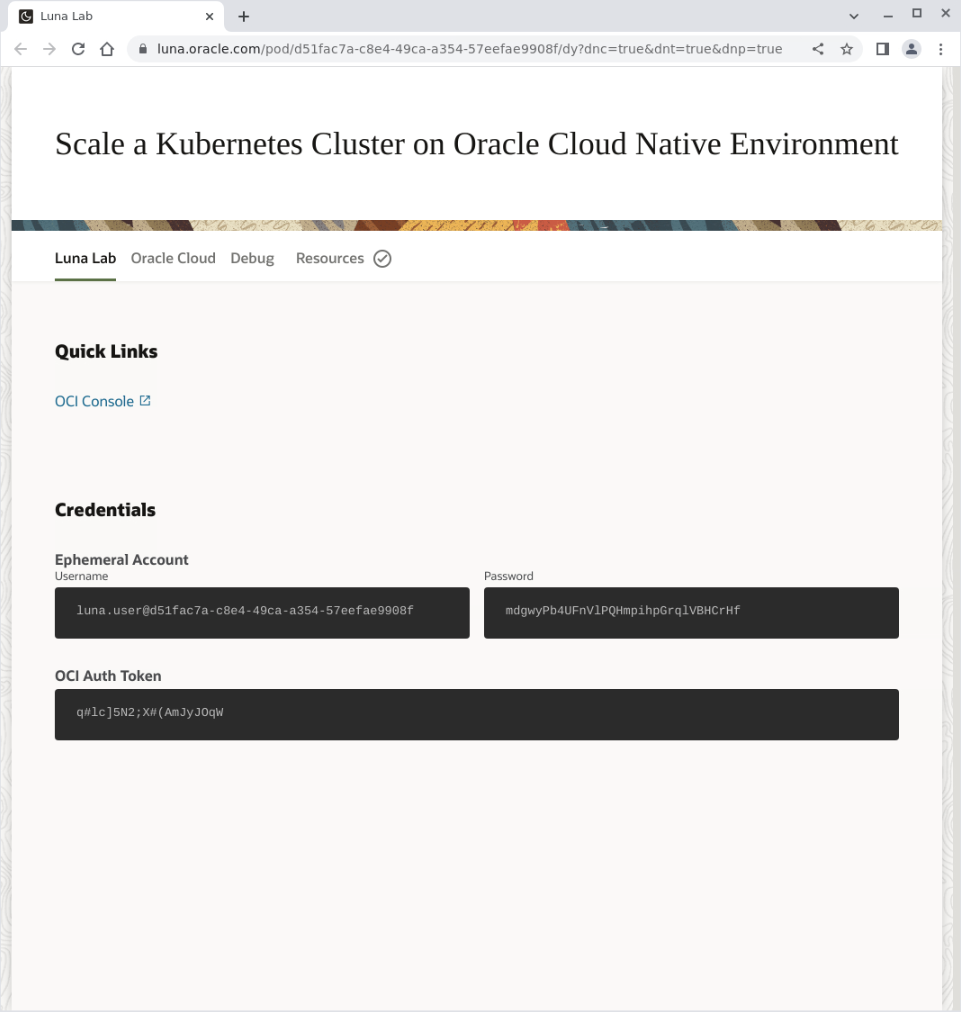

Switch from the Terminal to the Luna desktop

-

Open the Luna Lab details page using the Luna Lab icon.

-

Click on the OCI Console link.

-

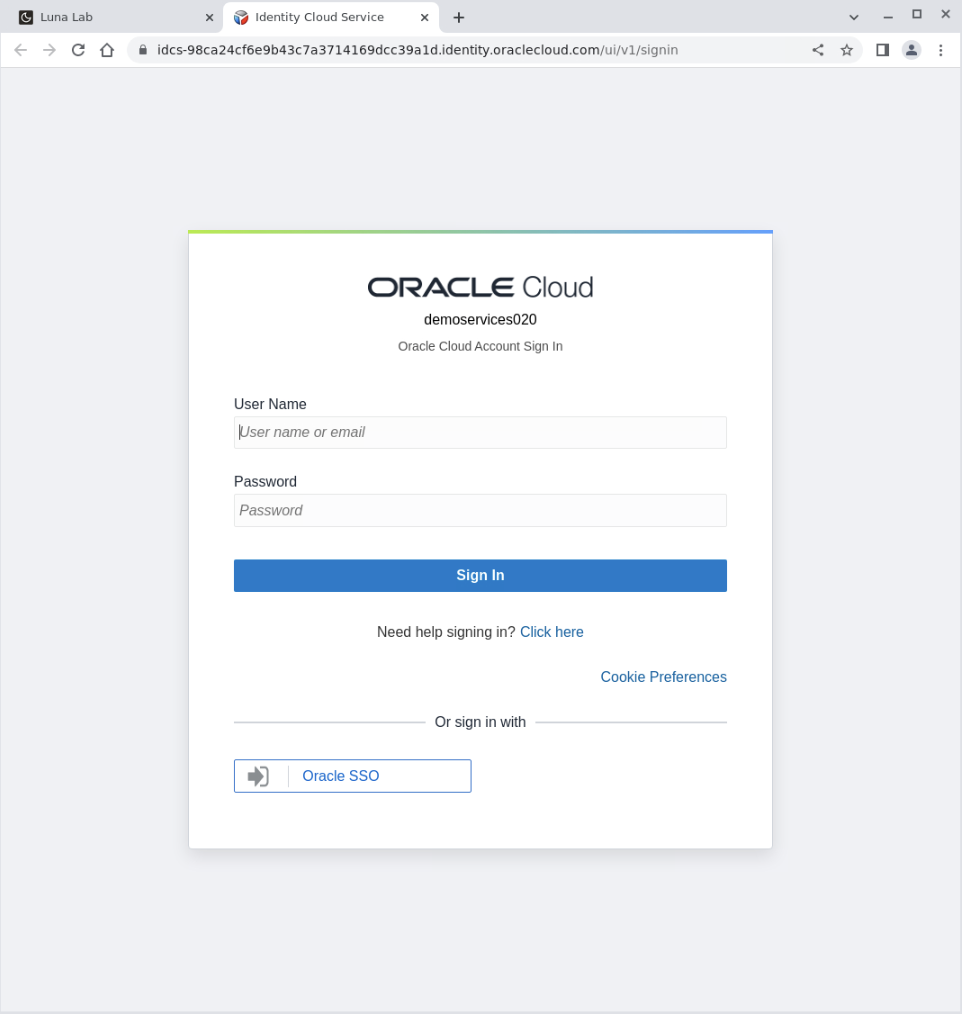

The Oracle Cloud Console login page displays.

-

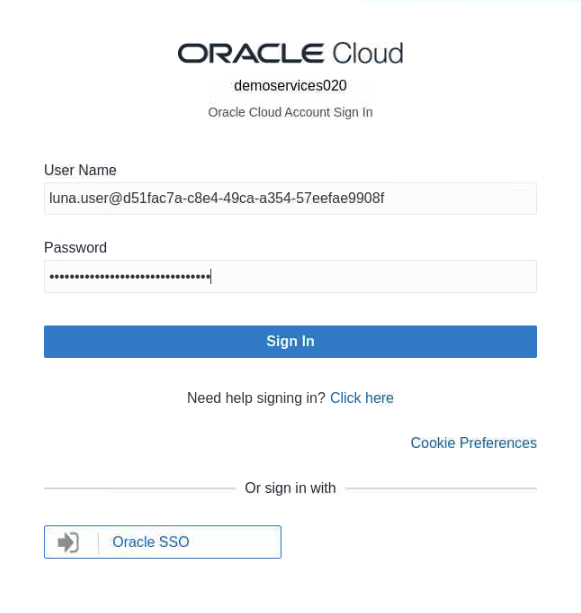

Enter the

User NameandPassword(found on the Luna Lab tab in the Credentials section).

-

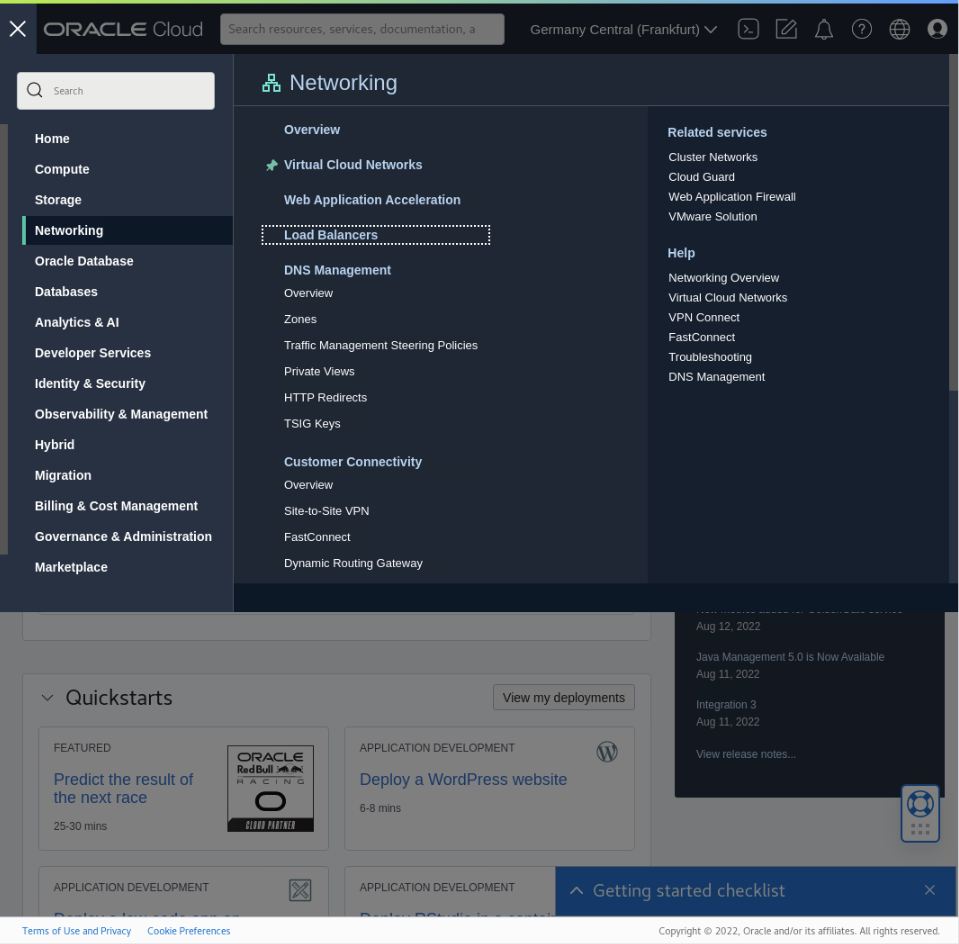

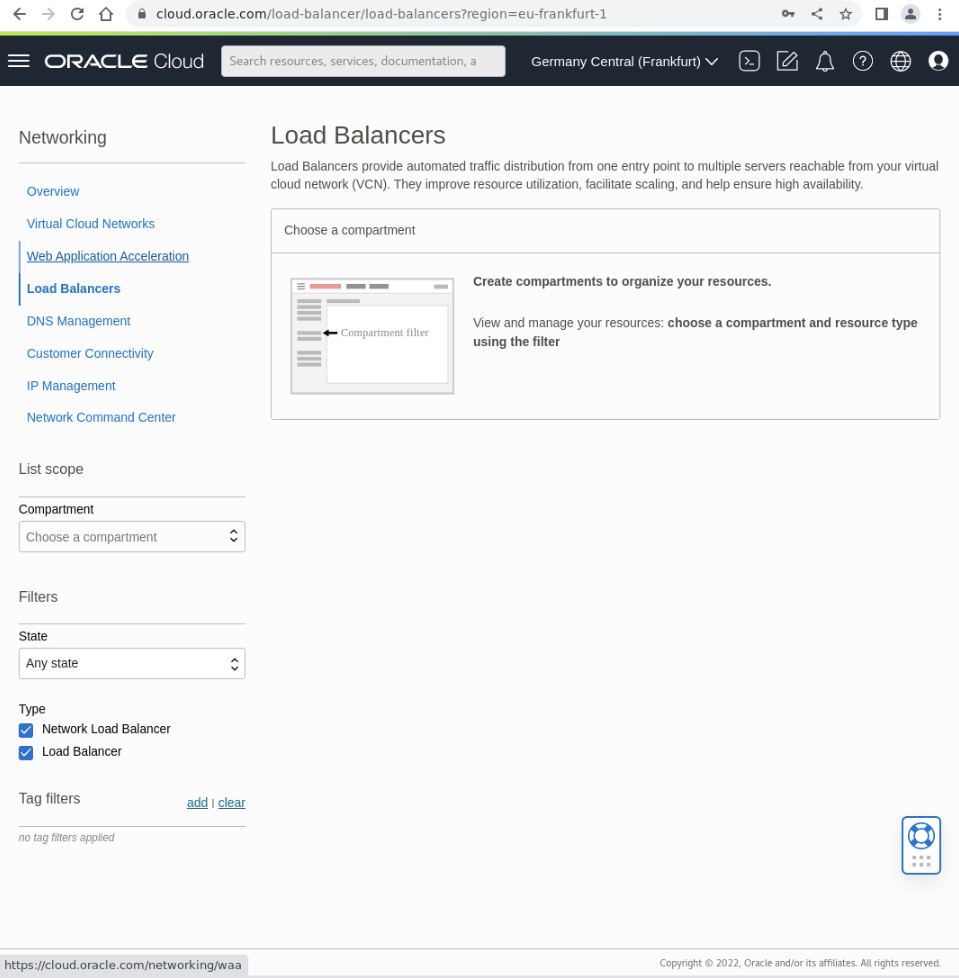

Click on the navigation menu in the page’s top-left corner, then Networking and Load Balancers.

-

The Load Balancers page displays.

-

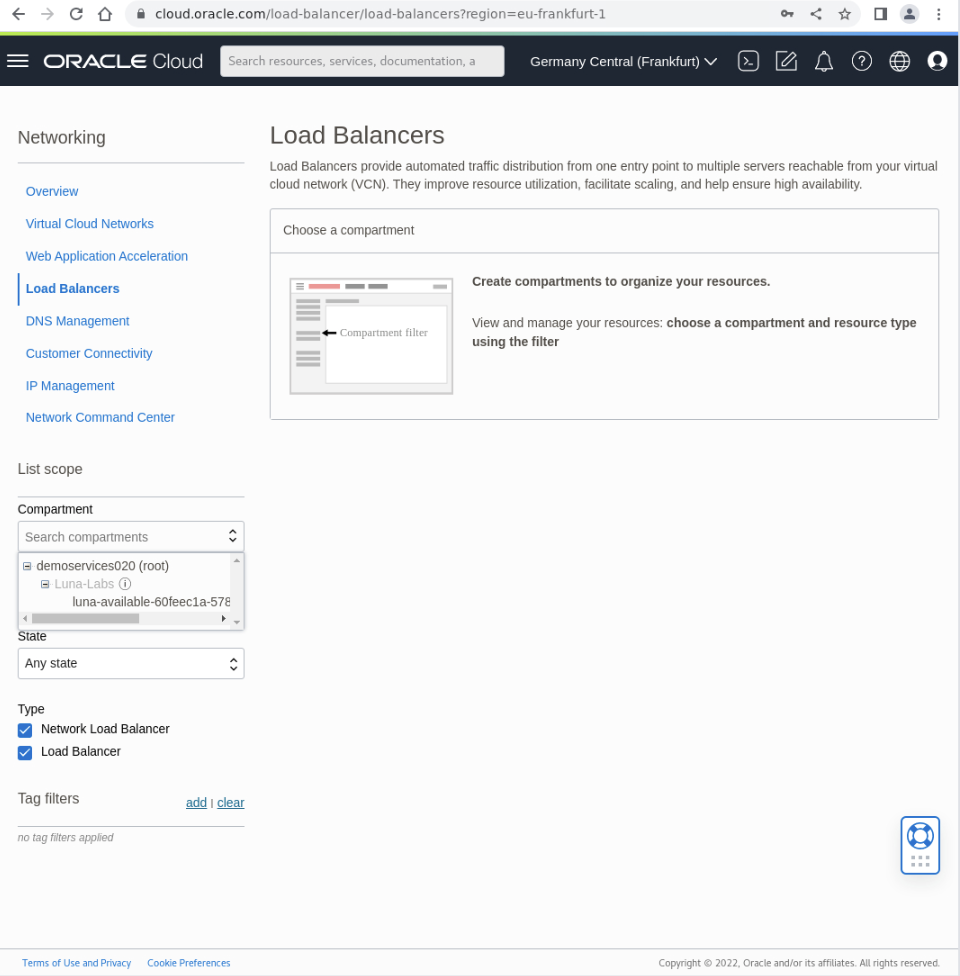

Locate the Compartment being used from the drop-down list.

-

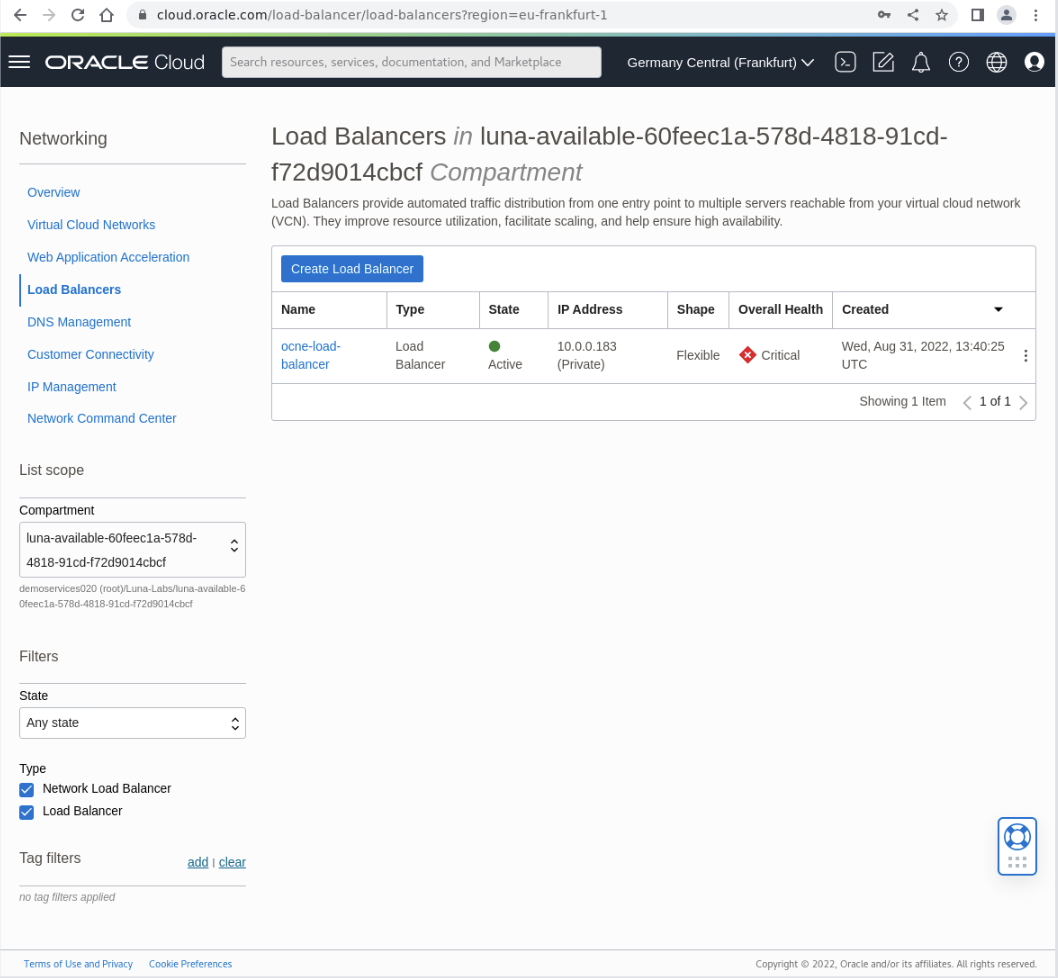

Click on the Load Balancer listed in the table (ocne-load-balancer).

-

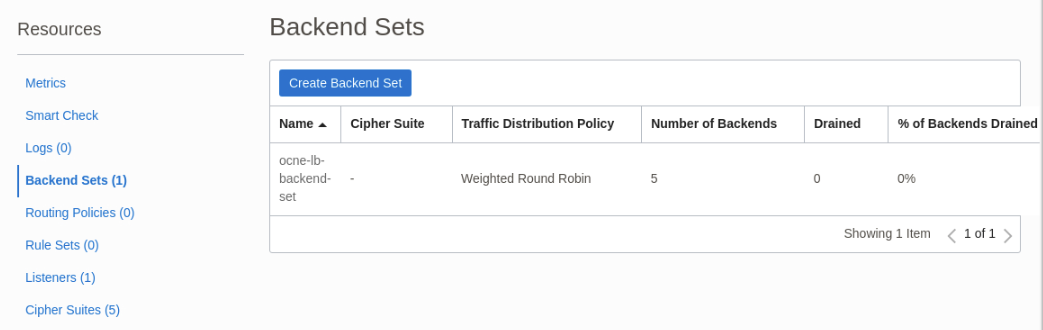

Under the Resources section in the navigation panel on the left-hand side of the browser window, scroll down the page and click on the link to the Backend Sets.

The Backend Sets table displays.

-

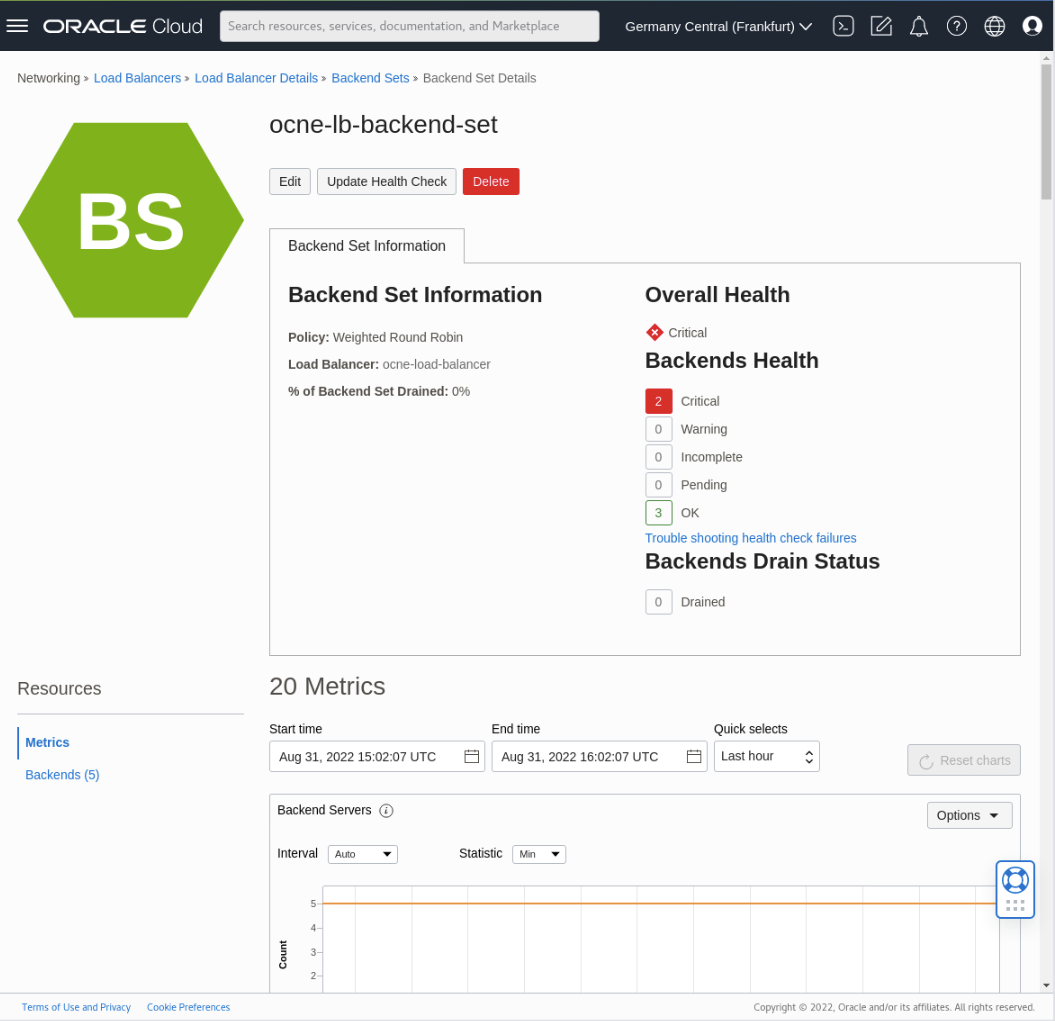

Click on the ocne-lb-backend-set link in the Name column.

-

Under the Resources section in the navigation panel on the left-hand side of the browser window, scroll down the page and click the Backends link.

-

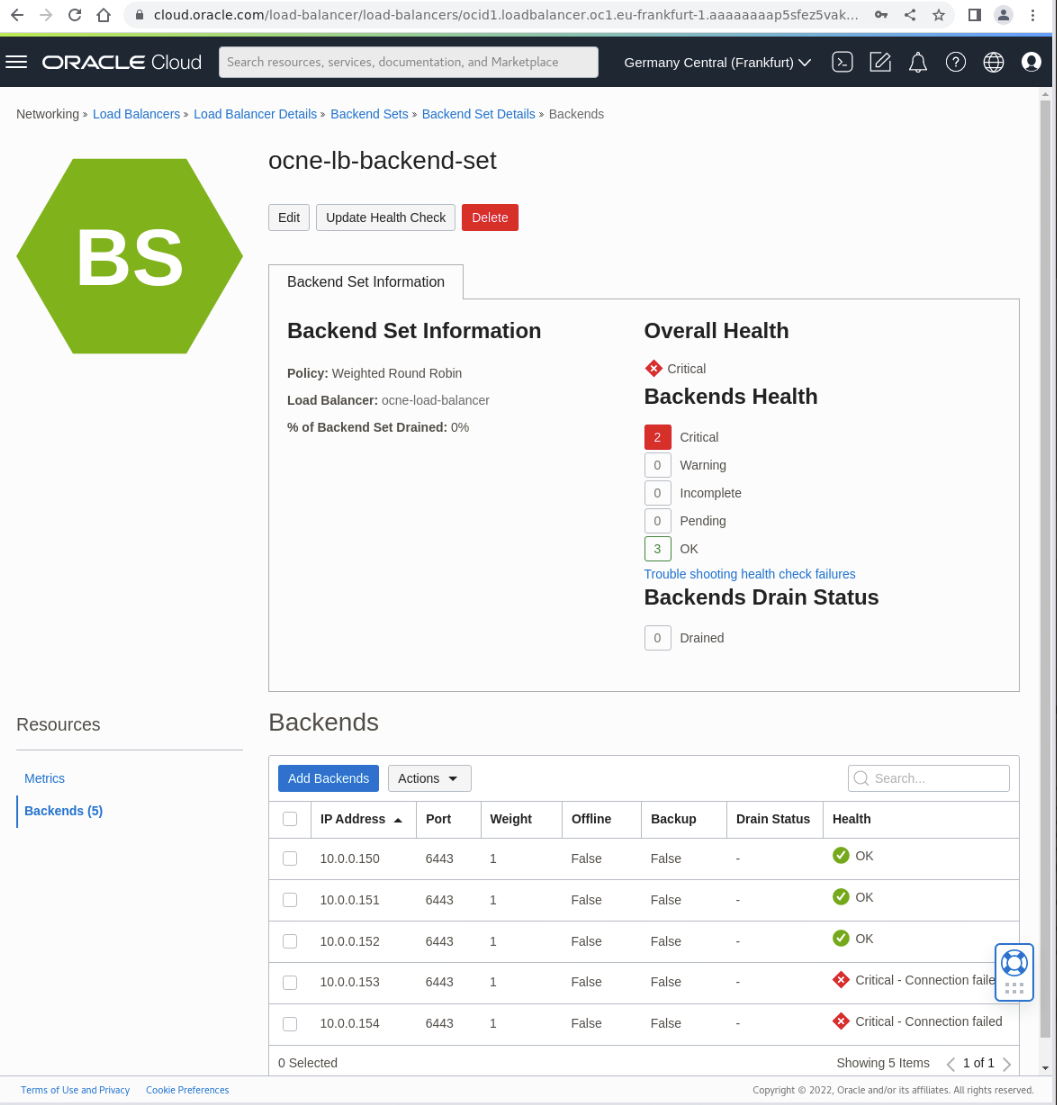

The page displays the Backends representing the control plane nodes.

Note Two of the backend nodes are in the Critical - connection failed state because these nodes are not yet part of the Kubernetes control plane cluster. Keep this browser tab open, as we’ll recheck the status of the backend nodes after completing the scale-up steps.

View the Kubernetes Nodes

Check the currently available Kubernetes nodes in the cluster. Note that there are three control plane nodes and five worker nodes.

- Confirm that the nodes are all in Ready status.

ssh ocne-control-01 "kubectl get nodes"

Example Output:

[oracle@ocne-operator olcne]$ ssh ocne-control-01 "kubectl get nodes"
NAME              STATUS   ROLES           AGE     VERSION
ocne-control-01   Ready    control-plane   5h15m   v1.28.3+3.el8
ocne-control-02   Ready    control-plane   5h14m   v1.28.3+3.el8
ocne-control-03   Ready    control-plane   5h13m   v1.28.3+3.el8
ocne-worker-01    Ready    <none>          5h14m   v1.28.3+3.el8
ocne-worker-02    Ready    <none>          5h13m   v1.28.3+3.el8
ocne-worker-03    Ready    <none>          5h12m   v1.28.3+3.el8
ocne-worker-04    Ready    <none>          5h13m   v1.28.3+3.el8
ocne-worker-05    Ready    <none>          5h14m   v1.28.3+3.el8
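On larger clusters, scanning the STATUS column by eye gets error-prone. The following awk filter, sketched with the hypothetical name not_ready_nodes, prints any node whose STATUS is not exactly Ready and returns a non-zero exit status if it finds one. A cordoned node shows Ready,SchedulingDisabled and is also reported.

```shell
#!/bin/sh
# Read `kubectl get nodes` output on stdin and print every node whose STATUS
# column is not exactly "Ready". Returns non-zero if any such node exists.
not_ready_nodes() {
    awk '
        NR == 1 && $1 == "NAME" { next }     # skip the header row
        $2 != "Ready" { print $1; bad = 1 }
        END { exit bad }                     # bad stays unset (0) when all nodes are Ready
    '
}

# Example usage on the operator node:
#   ssh ocne-control-01 "kubectl get nodes" | not_ready_nodes && echo "all nodes Ready"
```

The function only parses text, so it runs locally on the operator node while kubectl runs on ocne-control-01.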

Add Control Plane and Worker Nodes to the Deployment Configuration File

Before scaling the Kubernetes cluster, you must add the Fully Qualified Domain Name (FQDN) and Platform Agent access port of 8090 for each new node to the appropriate section of the deployment configuration file.

- Confirm the current environment uses three control plane nodes and five worker nodes.

cat ~/myenvironment.yaml

Example Output:

...
control-plane-nodes:
  - ocne-control-01.lv.vcneea798df.oraclevcn.com:8090
  - ocne-control-02.lv.vcneea798df.oraclevcn.com:8090
  - ocne-control-03.lv.vcneea798df.oraclevcn.com:8090
worker-nodes:
  - ocne-worker-01.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-02.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-03.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-04.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-05.lv.vcneea798df.oraclevcn.com:8090
...

- Add the new control plane and worker nodes to the deployment configuration file.

The free lab environment uses a file named myenvironment.yaml. Each sed command inserts a new entry before the given line number, and those numbers match the layout of the lab’s file, so adjust them if your file differs.

cd ~
sed -i '20 i \ - ocne-control-04.'"$(hostname -d)"':8090' ~/myenvironment.yaml
sed -i '21 i \ - ocne-control-05.'"$(hostname -d)"':8090' ~/myenvironment.yaml
sed -i '28 i \ - ocne-worker-06.'"$(hostname -d)"':8090' ~/myenvironment.yaml
sed -i '29 i \ - ocne-worker-07.'"$(hostname -d)"':8090' ~/myenvironment.yaml

- Confirm the addition of the control plane and worker nodes in the deployment configuration file.

cat ~/myenvironment.yaml

Example Excerpt:

...
master-nodes:
  - ocne-control-01.lv.vcneea798df.oraclevcn.com:8090
  - ocne-control-02.lv.vcneea798df.oraclevcn.com:8090
  - ocne-control-03.lv.vcneea798df.oraclevcn.com:8090
  - ocne-control-04.lv.vcneea798df.oraclevcn.com:8090
  - ocne-control-05.lv.vcneea798df.oraclevcn.com:8090
worker-nodes:
  - ocne-worker-01.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-02.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-03.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-04.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-05.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-06.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-07.lv.vcneea798df.oraclevcn.com:8090
...

The deployment configuration file now includes the new control plane nodes (ocne-control-04 and ocne-control-05) and the new worker nodes (ocne-worker-06 and ocne-worker-07). This change represents all of the control plane and worker nodes that should be in the cluster after the scale-up completes.
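The sed commands in this section insert entries by line number, which only works when the file's layout matches the lab's. As a hedged alternative, the following sketch uses awk to insert a new entry directly after an existing one, copying its indentation, and to count the entries under each node list so you can confirm the result. add_after_entry and count_nodes are hypothetical helpers; count_nodes accepts either the control-plane-nodes or the master-nodes key, since both appear in the example output in this section.

```shell
#!/bin/sh
# Insert NEW as a list entry right after the first line containing EXISTING,
# reusing that line's indentation. EXISTING is matched literally, not as a regex.
# The file is rewritten through a temporary copy, which keeps its permissions.
# Usage: add_after_entry FILE EXISTING NEW
add_after_entry() {
    tmp=$(mktemp) || return 1
    awk -v existing="$2" -v new="$3" '
        { print }
        !done && index($0, existing) {
            match($0, /^[ \t]*/)          # RLENGTH = length of the indentation
            printf "%s- %s\n", substr($0, 1, RLENGTH), new
            done = 1
        }
    ' "$1" > "$tmp" && cat "$tmp" > "$1"
    rc=$?
    rm -f "$tmp"
    return "$rc"
}

# Count the "- host:port" entries under the control plane and worker node lists.
# Usage: count_nodes FILE
count_nodes() {
    awk '
        /^[ \t]*(control-plane-nodes|master-nodes):/ { section = "control-plane"; next }
        /^[ \t]*worker-nodes:/ { section = "worker"; next }
        section != "" && $1 == "-" { count[section]++; next }
        section != "" && NF > 0 { section = "" }    # any other key ends the list
        END {
            printf "control-plane: %d\n", count["control-plane"]
            printf "worker: %d\n", count["worker"]
        }
    ' "$1"
}

# Example usage on the operator node:
#   d=$(hostname -d)
#   add_after_entry ~/myenvironment.yaml "ocne-control-03.$d:8090" "ocne-control-04.$d:8090"
#   add_after_entry ~/myenvironment.yaml "ocne-control-04.$d:8090" "ocne-control-05.$d:8090"
#   add_after_entry ~/myenvironment.yaml "ocne-worker-05.$d:8090" "ocne-worker-06.$d:8090"
#   add_after_entry ~/myenvironment.yaml "ocne-worker-06.$d:8090" "ocne-worker-07.$d:8090"
#   count_nodes ~/myenvironment.yaml
```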

Scale Up the Control Plane and Worker Nodes

-

(Optional) Avoid using the

--api-serverflag in future olcnectl commands.Get a list of the module instances and add the

--update-configflag.olcnectl module instances \ --config-file myenvironment.yaml \ --update-configNote:

The myenvironment.yamlfile includes this option already and sets the value totrue -

Run the module update command.

Use the

olcnectl module updatecommand with the--config-fileoption to specify the configuration file’s location. The Platform API Server validates the configuration file and compares it with the state of the cluster. After the comparison, it recognizes there are more nodes to add to the cluster.olcnectl module update --config-file myenvironment.yaml --log-level debugNote: The

--log-level debugshows the command’s output to the console in debug mode, allowing the user to follow along with the progress.Respond with

yto the following prompts during the upgrade process.[WARNING] Update will shift your workload and some pods will lose data if they rely on local storage. Do you want to continue? (y/N) y -

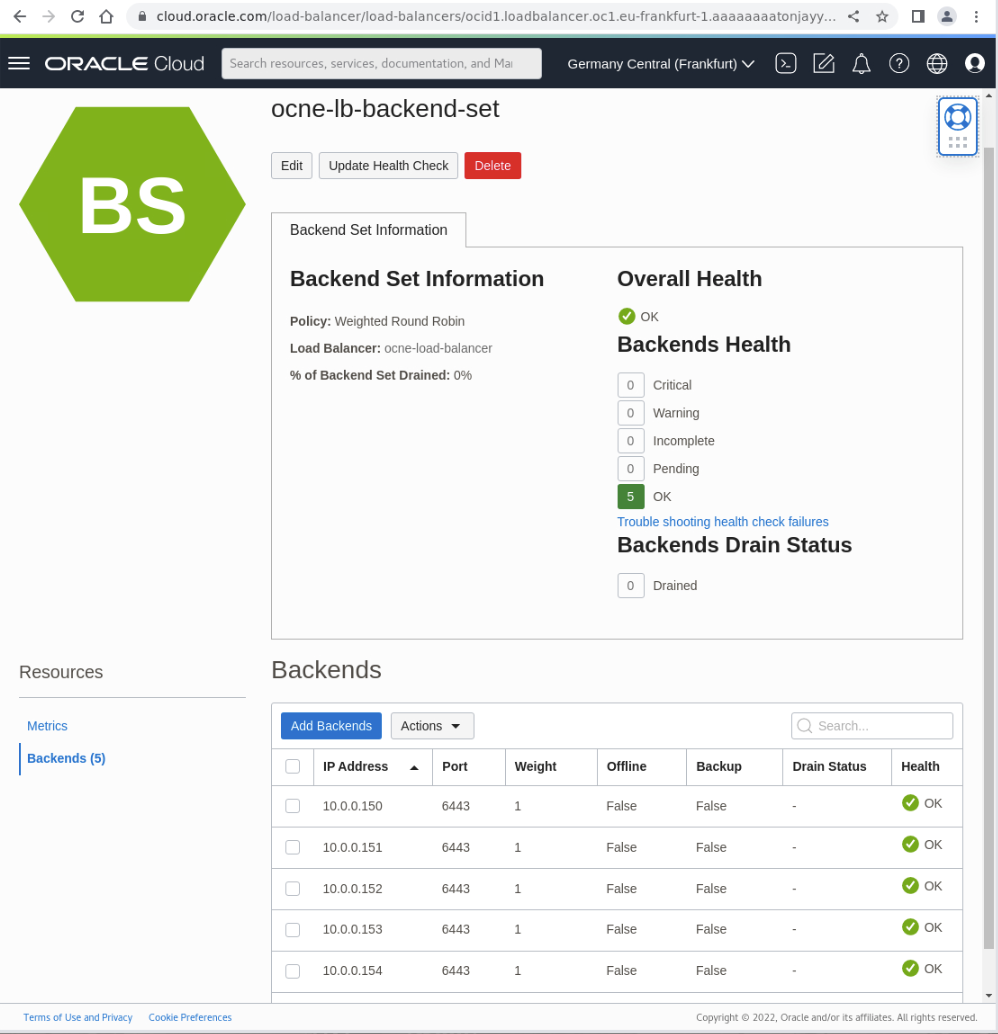

Switch to the browser and the Cloud Console window.

-

Confirm that the Load Balancer’s Backend Set shows five healthy Backend nodes.

-

Confirm the addition of the new control plane and worker nodes to the cluster.

ssh ocne-control-01 "kubectl get nodes"Example Output:

[oracle@ocne-operator ~]$ ssh ocne-control-01 "kubectl get nodes" NAME STATUS ROLES AGE VERSION ocne-control-01 Ready control-plane 99m v1.28.3+3.el8 ocne-control-02 Ready control-plane 97m v1.28.3+3.el8 ocne-control-03 Ready control-plane 96m v1.28.3+3.el8 ocne-control-04 Ready control-plane 13m v1.28.3+3.el8 ocne-control-05 Ready control-plane 12m v1.28.3+3.el8 ocne-worker-01 Ready <none> 99m v1.28.3+3.el8 ocne-worker-02 Ready <none> 98m v1.28.3+3.el8 ocne-worker-03 Ready <none> 98m v1.28.3+3.el8 ocne-worker-04 Ready <none> 98m v1.28.3+3.el8 ocne-worker-05 Ready <none> 98m v1.28.3+3.el8 ocne-worker-06 Ready <none> 13m v1.28.3+3.el8 ocne-worker-07 Ready <none> 13m v1.28.3+3.el8Notice that new control plane nodes (

ocne-control-04andocne-control-05) and the new worker nodes (ocne-work-06andocne-worker-07) are now part of the cluster. This output confirms a successful scale up operation.
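To check the scale-up result by name rather than by eye, the following sketch reads the kubectl get nodes output and reports any expected node that is missing or not Ready. require_ready_nodes is a hypothetical helper; it runs locally on the operator node and only parses the text that kubectl prints.

```shell
#!/bin/sh
# Read `kubectl get nodes` output on stdin and check that every node named on the
# command line is listed with STATUS "Ready". Prints each name that is missing or
# not Ready, and returns non-zero if there is at least one.
# Usage: ... | require_ready_nodes NODE...
require_ready_nodes() {
    awk -v want="$*" '
        BEGIN { n = split(want, names, " ") }
        $2 == "Ready" { ready[$1] = 1 }
        END {
            for (i = 1; i <= n; i++)
                if (!(names[i] in ready)) { print names[i]; bad = 1 }
            exit bad
        }
    '
}

# Example usage on the operator node:
#   ssh ocne-control-01 "kubectl get nodes" |
#       require_ready_nodes ocne-control-04 ocne-control-05 ocne-worker-06 ocne-worker-07
```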

Scale Down the Control Plane Nodes

Next, we scale down only the control plane nodes to demonstrate that the control plane and worker nodes can scale independently.

- Confirm the current environment uses five control plane nodes and seven worker nodes.

cat ~/myenvironment.yaml

Example Output:

...
master-nodes:
  - ocne-control-01.lv.vcneea798df.oraclevcn.com:8090
  - ocne-control-02.lv.vcneea798df.oraclevcn.com:8090
  - ocne-control-03.lv.vcneea798df.oraclevcn.com:8090
  - ocne-control-04.lv.vcneea798df.oraclevcn.com:8090
  - ocne-control-05.lv.vcneea798df.oraclevcn.com:8090
worker-nodes:
  - ocne-worker-01.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-02.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-03.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-04.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-05.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-06.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-07.lv.vcneea798df.oraclevcn.com:8090
...

- To scale the cluster down to the original three control plane nodes, remove the ocne-control-04 and ocne-control-05 control plane nodes from the configuration file. As with the earlier sed commands, these line numbers match the layout of the lab’s file.

sed -i '19d;20d' ~/myenvironment.yaml

- Confirm the configuration file now contains only three control plane nodes and the seven worker nodes.

cat ~/myenvironment.yaml

Example Excerpt:

...
master-nodes:
  - ocne-control-01.lv.vcneea798df.oraclevcn.com:8090
  - ocne-control-02.lv.vcneea798df.oraclevcn.com:8090
  - ocne-control-03.lv.vcneea798df.oraclevcn.com:8090
worker-nodes:
  - ocne-worker-01.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-02.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-03.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-04.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-05.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-06.lv.vcneea798df.oraclevcn.com:8090
  - ocne-worker-07.lv.vcneea798df.oraclevcn.com:8090
...

- Suppress the module update warning message.

You can suppress the confirmation prompt during the module update by adding the force: true directive to the configuration file. Place this directive immediately under the name: <xxxx> directive for each module defined.

cd ~
sed -i '13 i \ force: true' ~/myenvironment.yaml
sed -i '37 i \ force: true' ~/myenvironment.yaml

- Confirm the configuration file contains the force: true directive.

cat ~/myenvironment.yaml

Example Excerpt:

[oracle@ocne-operator ~]$ cat ~/myenvironment.yaml
...
- module: kubernetes
  name: mycluster
  force: true
  args:
    container-registry: container-registry.oracle.com/olcne
...
- module: oci-ccm
  name: myoci
  force: true
  oci-ccm-kubernetes-module: mycluster
...

- Update the cluster and remove the nodes.

Note: This may take a few minutes to complete.

olcnectl module update --config-file myenvironment.yaml

Example Output:

[oracle@ocne-operator ~]$ olcnectl module update --config-file myenvironment.yaml
Taking backup of modules before update
Backup of modules succeeded.
Updating modules
Update successful
Taking backup of modules before update
Backup of modules succeeded.
Updating modules
Update successful

- Switch to the browser and the Cloud Console window.

- Confirm the Load Balancer’s Backend Set status.

The page shows three healthy (Health = 'OK') and two unhealthy (Health = 'Critical - Connection failed') nodes. After you remove nodes from the Kubernetes cluster, the load balancer reports them as critical because they are no longer available as control plane nodes.

- Confirm the removal of the control plane nodes.

ssh ocne-control-01 "kubectl get nodes"

Example Output:

[oracle@ocne-operator ~]$ ssh ocne-control-01 "kubectl get nodes"
NAME              STATUS   ROLES           AGE    VERSION
ocne-control-01   Ready    control-plane   164m   v1.28.3+3.el8
ocne-control-02   Ready    control-plane   163m   v1.28.3+3.el8
ocne-control-03   Ready    control-plane   162m   v1.28.3+3.el8
ocne-worker-01    Ready    <none>          164m   v1.28.3+3.el8
ocne-worker-02    Ready    <none>          163m   v1.28.3+3.el8
ocne-worker-03    Ready    <none>          164m   v1.28.3+3.el8
ocne-worker-04    Ready    <none>          164m   v1.28.3+3.el8
ocne-worker-05    Ready    <none>          164m   v1.28.3+3.el8
ocne-worker-06    Ready    <none>          13m    v1.28.3+3.el8
ocne-worker-07    Ready    <none>          13m    v1.28.3+3.el8
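The scale-down steps also edit myenvironment.yaml by line number (sed -i '19d;20d', '13 i' and '37 i'), so they depend on the exact layout of the lab's file. The following hedged sketch shows pattern-based alternatives: remove_entries deletes the list entries for the named hosts, and add_force_directive adds force: true under the name: key that directly follows each - module: line, skipping modules that already have it. Both are hypothetical helpers that assume the layout shown in the configuration excerpts above.

```shell
#!/bin/sh
# Delete every "- host..." list entry whose value starts with HOST followed by a dot.
# Matching is literal, so "ocne-control-04" does not also match "ocne-control-040".
# Usage: remove_entries FILE HOST...
remove_entries() {
    file=$1
    shift
    tmp=$(mktemp) || return 1
    awk -v hosts="$*" '
        BEGIN { n = split(hosts, h, " ") }
        {
            for (i = 1; i <= n; i++)
                if ($1 == "-" && index($2, h[i] ".") == 1) next
            print
        }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    rc=$?
    rm -f "$tmp"
    return "$rc"
}

# Add "force: true" after the name: line that directly follows each "- module:" line,
# using the same indentation as name:. Modules that already have it are left alone.
# Usage: add_force_directive FILE
add_force_directive() {
    tmp=$(mktemp) || return 1
    awk '
        pending {                           # previous line was a module name: line
            if ($1 != "force:") printf "%sforce: true\n", indent
            pending = 0
        }
        { print }
        after_module && $1 == "name:" {
            match($0, /^[ \t]*/)
            indent = substr($0, 1, RLENGTH)
            pending = 1
        }
        { after_module = ($1 == "-" && $2 == "module:") }
        END { if (pending) printf "%sforce: true\n", indent }
    ' "$1" > "$tmp" && cat "$tmp" > "$1"
    rc=$?
    rm -f "$tmp"
    return "$rc"
}

# Example usage on the operator node:
#   remove_entries ~/myenvironment.yaml ocne-control-04 ocne-control-05
#   add_force_directive ~/myenvironment.yaml
```

Running add_force_directive a second time leaves the file unchanged, because each module's name: line is already followed by force: true.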

Summary

That completes the demonstration of adding and removing Kubernetes nodes from a cluster. This exercise scaled up the control plane and worker nodes in a single update, which is not the recommended approach for scaling an Oracle Cloud Native Environment Kubernetes cluster. In production environments, administrators should scale the control plane nodes and the worker nodes in separate operations.

For More Information

- Oracle Cloud Native Environment Documentation

- Oracle Cloud Native Environment Track

- Oracle Linux Training Station

More Learning Resources

Explore other labs on docs.oracle.com/learn or access more free learning content on the Oracle Learning YouTube channel. Additionally, visit education.oracle.com/learning-explorer to become an Oracle Learning Explorer.

For product documentation, visit Oracle Help Center.

Scale a Kubernetes Cluster on Oracle Cloud Native Environment

F30806-14

February 2024

Copyright © 2020, Oracle and/or its affiliates.