Create and Use Oracle Analytics Predictive Models

Oracle Analytics predictive models use several embedded Oracle Machine Learning algorithms to mine your datasets, predict a target value, or identify classes of records. Use the data flow editor to create, train, and apply predictive models to your data.

What Are Oracle Analytics Predictive Models?

An Oracle Analytics predictive model applies a specific algorithm to a dataset to predict values, predict classes, or identify groups in the data.

You can also use Oracle machine learning models to predict data.

Oracle Analytics includes algorithms to help you train predictive models for various purposes. Examples of algorithms are classification and regression trees (CART), logistic regression, and k-means.

You use the data flow editor to first train a model on a training dataset. After the predictive model has been trained, you apply it to the datasets that you want to predict.

You can make a trained model available to other users who can apply it against their data to predict values. In some cases, certain users train models, and other users apply the models.

Note:

If you're not sure what to look for in your data, you can start by using Explain, which uses machine learning to identify trends and patterns. Then you can use the data flow editor to create and train predictive models to drill into the trends and patterns that Explain found.

- First, you create a data flow and add the dataset that you want to use to train the model. This training dataset contains the data that you want to predict (for example, a value like sales or age, or a variable like credit risk bucket).

- If needed, you can use the data flow editor to edit the dataset by adding columns, selecting columns, joining, and so on.

- After you've confirmed that the data is what you want to train the model on, you add a training step to the data flow and choose a classification (binary or multi), regression, or cluster algorithm to train a model. Then name the resulting model, save the data flow, and run it to train and create the model.

- Examine the properties in the machine learning objects to determine the quality of the model. If needed, you can iterate the training process until the model reaches the quality you want.

Use the finished model to score unknown, or unlabeled, data to generate a dataset within a data flow or to add a prediction visualization to a workbook.

Example

Suppose you want to create and train a multi-classification model to predict which patients have a high risk of developing heart disease.

- Supply a training dataset containing attributes on individual patients like age, gender, and whether they've ever experienced chest pain, and metrics like blood pressure, fasting blood sugar, cholesterol, and maximum heart rate. The training dataset also contains a column named "Likelihood" whose value is one of the following: absent, less likely, likely, highly likely, or present.

- Choose the CART (Decision Tree) algorithm because it ignores redundant columns that don't add value for prediction, and identifies and uses only the columns that are helpful to predict the target. When you add the algorithm to the data flow, you choose the Likelihood column as the target to train the model on. The algorithm uses machine learning to choose the driver columns that it needs to make predictions and output related datasets.

- Inspect the results and fine-tune the training model, and then apply the model to a larger dataset to predict which patients have a high probability of having or developing heart disease.
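
CART decides which columns to use by testing candidate splits and keeping the ones that best separate the target classes. The following minimal Python sketch illustrates that idea with Gini impurity on a tiny set of made-up patient rows. The column names, values, and the `gini` and `best_split` helpers are hypothetical; this is an illustration of the split criterion, not how Oracle Analytics implements or exposes CART.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 - sum(p_k^2) over the class proportions p_k."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(rows, target, columns):
    """Return (column, threshold, weighted_gini) for the lowest-impurity binary split.

    Each candidate split sends rows with value <= threshold left and the rest right.
    """
    best = None
    n = len(rows)
    for col in columns:
        for threshold in sorted({row[col] for row in rows}):
            left = [row[target] for row in rows if row[col] <= threshold]
            right = [row[target] for row in rows if row[col] > threshold]
            if not left or not right:
                continue  # a useful split must put rows on both sides
            weighted = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or weighted < best[2]:
                best = (col, threshold, weighted)
    return best


# Hypothetical rows: max_heart_rate separates the classes cleanly, patient_id doesn't.
patients = [
    {"patient_id": 1, "max_heart_rate": 120, "likelihood": "likely"},
    {"patient_id": 2, "max_heart_rate": 170, "likelihood": "absent"},
    {"patient_id": 3, "max_heart_rate": 125, "likelihood": "likely"},
    {"patient_id": 4, "max_heart_rate": 175, "likelihood": "absent"},
]

print(best_split(patients, "likelihood", ["patient_id", "max_heart_rate"]))
# ('max_heart_rate', 125, 0.0)
```

The identifier column never produces a pure split, so a tree built this way would favor `max_heart_rate`, which mirrors how CART sets aside columns that don't help predict the target.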

How Do I Choose a Predictive Model Algorithm?

Oracle Analytics provides algorithms for these machine learning modeling needs: numeric prediction, multi-classifier, binary classifier, and clustering.

Oracle's machine learning functionality is for advanced data analysts who have an idea of what they're looking for in their data, are familiar with the practice of predictive analytics, and understand the differences between algorithms.

Note:

If you're using data sourced from Oracle Autonomous Data Warehouse, you can use the AutoML capability to quickly and easily train a predictive model for you, without requiring machine learning skills. See Train a Predictive Model Using AutoML in Autonomous Data Warehouse.

Normally users want to create multiple prediction models, compare them, and choose the one that's most likely to give results that satisfy their criteria and requirements. These criteria can vary. For example, sometimes users choose models that have better overall accuracy, sometimes users choose models that have the least type I (false positive) and type II (false negative) errors, and sometimes users choose models that return results faster and with an acceptable level of accuracy even if the results aren't ideal.

Oracle Analytics contains multiple machine learning algorithms for each kind of prediction or classification. With these algorithms, users can create more than one model, use different fine-tuned parameters, or use different input training datasets, and then choose the best model by comparing and weighing the models against their own criteria. To determine the best model, users can apply the model and visualize the results of the calculations to determine accuracy, or they can open and explore the related datasets that Oracle Analytics generated when it trained the model.
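
To make the comparison concrete, this Python sketch counts type I (false positive) and type II (false negative) errors for two candidate binary models scored against the same labeled rows, and then picks a model under two different criteria. The model names, labels, and predictions are invented for illustration; in Oracle Analytics you'd read the equivalent figures from each model's quality metrics or related datasets.

```python
def error_counts(actual, predicted, positive="Yes"):
    """Return (type I, type II) error counts, that is (false positives, false negatives)."""
    false_pos = sum(1 for a, p in zip(actual, predicted) if p == positive and a != positive)
    false_neg = sum(1 for a, p in zip(actual, predicted) if p != positive and a == positive)
    return false_pos, false_neg


def accuracy(actual, predicted):
    """Fraction of rows where the prediction matches the actual label."""
    return sum(1 for a, p in zip(actual, predicted) if a == p) / len(actual)


# Hypothetical labeled rows and the predictions of two candidate models.
actual = ["Yes", "Yes", "No", "No", "No", "Yes", "No", "No"]
candidates = {
    "model_a": ["Yes", "No", "No", "No", "No", "Yes", "No", "No"],    # misses one positive
    "model_b": ["Yes", "Yes", "Yes", "No", "Yes", "Yes", "No", "No"],  # flags two extra positives
}

# Criterion 1: best overall accuracy.
best_by_accuracy = max(candidates, key=lambda name: accuracy(actual, candidates[name]))

# Criterion 2: fewest type II errors, for example when a missed positive is costly.
best_by_fewest_false_negatives = min(
    candidates, key=lambda name: error_counts(actual, candidates[name])[1]
)

print(best_by_accuracy, best_by_fewest_false_negatives)  # model_a model_b
```

Neither model is better in general: `model_a` wins on accuracy (7 of 8 correct), while `model_b` never misses a positive, so the choice depends on which criterion matters for your use case.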

Consult this table to learn about the provided algorithms:

| Name | Type | Category | Function | Description |
|---|---|---|---|---|
| CART | Classification, Regression | Binary Classifier, Multi-Classifier, Numerical | - | Uses decision trees to predict both discrete and continuous values. Use with large datasets. |
| Elastic Net Linear Regression | Regression | Numerical | ElasticNet | Advanced regression model. Provides additional information (regularization), performs variable selection, and performs linear combinations. Combines the penalties of the Lasso and Ridge regression methods. Use with a large number of attributes to avoid collinearity (where multiple attributes are perfectly correlated) and overfitting. |
| Hierarchical | Clustering | Clustering | AgglomerativeClustering | Builds a hierarchy of clusters using either a bottom-up approach (each observation starts as its own cluster, and clusters are then merged) or a top-down approach (all observations start as one cluster), together with distance metrics. Use when the dataset isn't large and the number of clusters isn't known beforehand. |
| K-Means | Clustering | Clustering | k-means | Iteratively partitions records into k clusters where each observation belongs to the cluster with the nearest mean. Use for clustering metric columns when you have a set expectation of the number of clusters needed. Works well with large datasets. Results are different with each run. |
| Linear Regression | Regression | Numerical | Ordinary Least Squares, Ridge, Lasso | Linear approach for modeling the relationship between the target variable and other attributes in the dataset. Use to predict numeric values when the attributes aren't perfectly correlated. |
| Logistic Regression | Regression | Binary Classifier | LogisticRegressionCV | Use to predict the value of a categorical dependent variable. The dependent variable is a binary variable that contains data coded as 1 or 0. |
| Naive Bayes | Classification | Binary Classifier, Multi-Classifier | GaussianNB | Probabilistic classification based on Bayes' theorem that assumes no dependence between features. Use when there's a large number of input dimensions. |
| Neural Network | Classification | Binary Classifier, Multi-Classifier | MLPClassifier | Iterative classification algorithm that learns by comparing its classification result with the actual value and returns it to the network to modify the algorithm for further iterations. Use for text analysis. |
| Random Forest | Classification | Binary Classifier, Multi-Classifier, Numerical | - | An ensemble learning method that constructs multiple decision trees and outputs the value that collectively represents all the decision trees. Use to predict numeric and categorical variables. |
| SVM | Classification | Binary Classifier, Multi-Classifier | LinearSVC, SVC | Classifies records by mapping them in space and constructing hyperplanes that can be used for classification. New records (scoring data) are mapped into the space and are predicted to belong to a category, which is based on the side of the hyperplane where they fall. |
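
For reference, the Function column lists `ElasticNet` for Elastic Net Linear Regression. In scikit-learn, the class with that name minimizes the objective below, which combines the Lasso (L1) and Ridge (L2) penalties. It's an assumption that Oracle Analytics uses exactly this parameterization. Here n is the number of training rows, X the attribute matrix, y the target column, w the coefficients, α ≥ 0 the overall penalty strength, and ρ ∈ [0, 1] the mixing parameter (called `l1_ratio` in scikit-learn).

```latex
\min_{w}\; \frac{1}{2n}\,\lVert y - Xw \rVert_2^2
  \;+\; \alpha\,\rho\,\lVert w \rVert_1
  \;+\; \frac{\alpha\,(1-\rho)}{2}\,\lVert w \rVert_2^2
```

Setting ρ = 1 leaves only the L1 term (Lasso), which can drive coefficients to zero and so performs variable selection; setting ρ = 0 leaves only the L2 term (Ridge), which shrinks correlated coefficients together.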

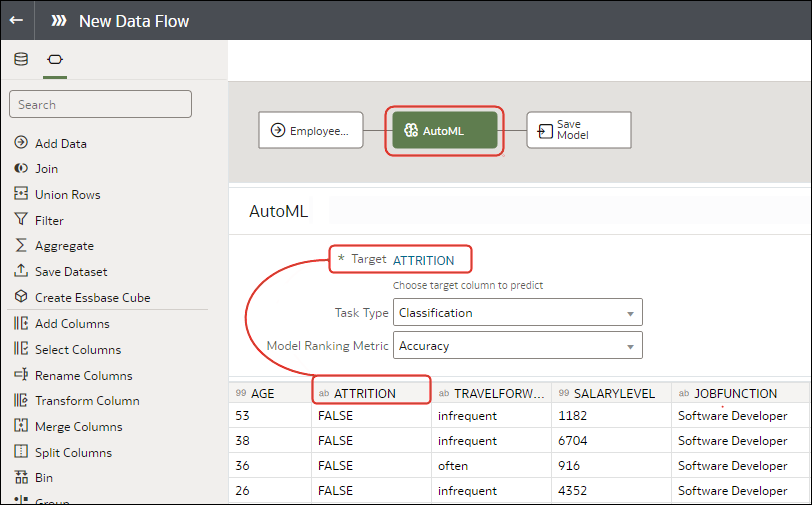
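
The K-Means row describes an assign-then-update loop. Here's a minimal pure-Python sketch of that loop for one-dimensional metric values. The sample values and the fixed starting centroids are assumptions chosen so that the run is repeatable; random starting points are a common reason k-means results differ between runs, as the table notes.

```python
def k_means(values, centroids, max_iterations=100):
    """Cluster 1-D values around the given starting centroids.

    Repeats two steps until the centroids stop changing:
    1. assign each value to the cluster with the nearest mean (centroid);
    2. move each centroid to the mean of the values assigned to it.
    """
    centroids = list(centroids)
    clusters = []
    for _ in range(max_iterations):
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Keep the old centroid if a cluster ends up empty.
        new_centroids = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
        if new_centroids == centroids:
            break  # converged: assignments no longer move the means
        centroids = new_centroids
    return centroids, clusters


values = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
centroids, clusters = k_means(values, centroids=[1.0, 2.0])
print(centroids)  # [2.0, 11.0]
print(clusters)   # [[1.0, 2.0, 3.0], [10.0, 11.0, 12.0]]
```

Even though both starting centroids sit in the low group, two update rounds move one of them to the high group, and the third round confirms convergence.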

Train a Predictive Model Using AutoML in Oracle Autonomous Data Warehouse

When you use data from Oracle Autonomous Data Warehouse, you can use its AutoML capability to recommend and train a predictive model. AutoML analyzes your data, calculates the best algorithm to use, and registers a prediction model in Oracle Analytics so that you can make predictions on your data.

- Create a dataset based on the data in Oracle Autonomous Data Warehouse that you want to make predictions about. For example, you might have data about employee attrition, including a field named ATTRITION indicating 'Yes' or 'No' for attrition.

- Make sure that the database user specified in the Oracle Analytics connection to Oracle Autonomous Data Warehouse has the role OML_Developer and isn't an 'admin' super-user. Otherwise, the data flow fails when you try to save or run it.

Create and Train a Predictive Model

Based on the problem that needs to be solved, an advanced data analyst chooses an appropriate algorithm to train a predictive model and then evaluates the model's results.

Arriving at an accurate model is an iterative process: an advanced data analyst can try different models, compare their results, and fine-tune parameters based on trial and error. A data analyst can use the finalized, accurate predictive model to predict trends in other datasets, or add the model to workbooks.

Note:

If you're using data sourced from Oracle Autonomous Data Warehouse, you can use the AutoML capability to quickly and easily train a predictive model for you, without requiring machine learning skills. See Train a Predictive Model Using AutoML in Autonomous Data Warehouse.

Oracle Analytics provides algorithms for numeric prediction, multi-classification, binary classification, and clustering.

Inspect a Predictive Model

After you create the predictive model and run the data flow, you can review information about the model to determine its accuracy. Use this information to iteratively adjust the model settings to improve its accuracy and predict better results.

View a Predictive Model's Details

A predictive model's detail information helps you understand the model and determine if it's suitable for predicting your data. Model details include its model class, algorithm, input columns, and output columns.

- On the Home page, click Navigator, and then click Machine Learning.

- Click the menu icon for a training model and select Inspect.

- Click the Details tab to view the model's information.

Assess a Predictive Model's Quality

View information that helps you understand the quality of a predictive model. For example, you can review accuracy metrics like model accuracy, precision, recall, F1 value, and false positive rate.

- On the Home page, click Navigator, and then click Machine Learning.

- Click the menu icon for a training model and select Inspect.

- Click the Quality tab to review the model's quality metrics.
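
The quality metrics listed above have widely used definitions. As a hedged sketch, this Python function derives accuracy, precision, recall, F1, and false positive rate from the four confusion counts of a binary model. The counts are made up, and Oracle Analytics may round, scale, or label the values differently on the Quality tab.

```python
def quality_metrics(tp, fp, fn, tn):
    """Standard binary classification metrics from confusion-matrix counts."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of predicted positives that are real
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of real positives that were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }


# Hypothetical counts: 40 true positives, 10 false positives, 10 false negatives, 40 true negatives.
metrics = quality_metrics(tp=40, fp=10, fn=10, tn=40)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

F1 is the harmonic mean of precision and recall, so it stays low unless both are reasonably high, which is why it's often read alongside plain accuracy.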

What Are a Predictive Model's Related Datasets?

When you run the data flow to create the Oracle Analytics predictive model's training model, Oracle Analytics creates a set of related datasets. You can open and create workbooks on these datasets to learn about the accuracy of the model.

Depending on the algorithm you chose for your model, related datasets contain details about the model such as prediction rules, accuracy metrics, confusion matrix, and key drivers for prediction. You can use this information to fine-tune the model to get better results, and you can use related datasets to compare models and decide which model is more accurate.

For example, you can open a Drivers dataset to discover which columns have a strong positive or negative influence on the model. By examining those columns, you might find that some columns shouldn't be treated as model variables because they aren't realistic inputs or are too granular for the forecast. You use the data flow editor to open the model and, based on the information you discovered, you remove the irrelevant or too-granular columns and regenerate the model. You check the Quality and Results tab and verify whether the model accuracy has improved. You continue this process until you're satisfied with the model's accuracy and it's ready to score a new dataset.

Different algorithms generate similar related datasets. Individual parameters and column names may change in the dataset depending on the type of algorithm, but the functionality of the dataset stays the same. For example, the column names in a statistics dataset may differ between Linear Regression and Logistic Regression, but in both cases the statistics dataset contains the model's accuracy metrics.

These are the related datasets:

CARTree

This dataset is a tabular representation of CART (Decision Tree), computed to predict the target column values. It contains columns that represent the conditions and the conditions' criteria in the decision tree, a prediction for each group, and prediction confidence. The Inbuilt Tree Diagram visualization can be used to visualize this decision tree.

Oracle Analytics generates the CARTree dataset when you select these model and algorithm combinations.

| Model | Algorithm |
|---|---|
| Numeric | CART for Numeric Prediction |
| Binary Classification | CART (Decision Tree) |
| Multi Classification | CART (Decision Tree) |

Classification Report

This dataset is a tabular representation of the accuracy metrics for each distinct value of the target column. For example, if the target column can have the two distinct values Yes and No, this dataset shows accuracy metrics like F1, Precision, Recall, and Support (the number of rows in the training dataset with this value) for every distinct value of the target column.

Oracle Analytics generates the Classification Report dataset when you select these model and algorithm combinations.

| Model | Algorithms |
|---|---|
| Binary Classification | Naive Bayes, Neural Network, Support Vector Machine |
| Multi Classification | Naive Bayes, Neural Network, Support Vector Machine |
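
A classification report can be reproduced from a list of actual and predicted labels. This Python sketch computes precision, recall, F1, and support for every distinct target value, using the Yes/No example described above. The sample labels and the `classification_report` helper are hypothetical, not part of Oracle Analytics.

```python
def classification_report(actual, predicted):
    """Per-class precision, recall, F1, and support (rows with that actual value)."""
    report = {}
    for label in sorted(set(actual) | set(predicted)):
        tp = sum(1 for a, p in zip(actual, predicted) if a == label and p == label)
        predicted_count = sum(1 for p in predicted if p == label)
        support = sum(1 for a in actual if a == label)
        precision = tp / predicted_count if predicted_count else 0.0
        recall = tp / support if support else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[label] = {"precision": precision, "recall": recall, "f1": f1, "support": support}
    return report


# Hypothetical training rows: 3 actual "Yes" and 4 actual "No".
actual = ["Yes", "Yes", "Yes", "No", "No", "No", "No"]
predicted = ["Yes", "Yes", "No", "No", "No", "No", "Yes"]

for label, row in classification_report(actual, predicted).items():
    print(label, {k: round(v, 3) for k, v in row.items()})
```

Each target value is treated in turn as the "positive" class, which is why a binary target yields two rows of metrics rather than one.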

Confusion Matrix

This dataset, which is also called an error matrix, uses a pivot table layout. Each row represents an instance of a predicted class, and each column represents an instance of an actual class. This table reports the number of false positives, false negatives, true positives, and true negatives, which are used to compute the precision, recall, and F1 accuracy metrics.

Oracle Analytics generates the Confusion Matrix dataset when you select these model and algorithm combinations.

| Model | Algorithms |
|---|---|
| Binary Classification | Logistic Regression, CART (Decision Tree), Naive Bayes, Neural Network, Random Forest, Support Vector Machine |
| Multi Classification | CART (Decision Tree), Naive Bayes, Neural Network, Random Forest, Support Vector Machine |
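
The pivot layout can be sketched in Python by counting (predicted, actual) pairs, with predicted classes as rows and actual classes as columns to match this dataset's orientation. The labels are invented. Note that some libraries, such as scikit-learn, use the opposite orientation (actual classes as rows).

```python
from collections import Counter


def confusion_matrix(actual, predicted):
    """Return (classes, matrix) where matrix[r][c] counts rows predicted as
    classes[r] whose actual value is classes[c]."""
    classes = sorted(set(actual) | set(predicted))
    pairs = Counter(zip(predicted, actual))  # key: (predicted, actual)
    matrix = [[pairs[(pred, act)] for act in classes] for pred in classes]
    return classes, matrix


# Hypothetical scored rows.
actual = ["Yes", "Yes", "No", "No", "No", "Yes"]
predicted = ["Yes", "No", "No", "Yes", "No", "Yes"]

classes, matrix = confusion_matrix(actual, predicted)
print("rows = predicted, columns = actual:", classes)
for label, row in zip(classes, matrix):
    print(label, row)
```

With Yes as the positive class, the Yes row's Yes cell holds the true positives (2), the Yes row's No cell the false positives (1), the No row's Yes cell the false negatives (1), and the No row's No cell the true negatives (2).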

Drivers

This dataset provides information about the columns that determine the target column values. Linear regressions are used to identify these columns. Each column is assigned coefficient and correlation values. The coefficient value describes the column's weight in determining the target column's value. The correlation value indicates the direction of the relationship between the target column and the driver column, that is, whether the target column's value increases or decreases as the driver column's value increases.

Oracle Analytics generates the Drivers dataset when you select these model and algorithm combinations.

| Model | Algorithms |
|---|---|
| Numeric | Linear Regression, Elastic Net Linear Regression |
| Binary Classification | Logistic Regression, Support Vector Machine |
| Multi Classification | Support Vector Machine |
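
As a simplified illustration of the two values in this dataset, the following Python sketch fits a one-variable least-squares line for each candidate driver column (its slope stands in for the coefficient) and computes the column's Pearson correlation with the target. This is an assumption made for clarity: Oracle Analytics derives coefficients from the trained model, which considers the columns together, so its values will generally differ. The column names and data are hypothetical.

```python
from math import sqrt


def slope_and_correlation(x, y):
    """Least-squares slope of y on x, and the Pearson correlation of x and y."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    var_x = sum((xi - mean_x) ** 2 for xi in x)
    var_y = sum((yi - mean_y) ** 2 for yi in y)
    slope = cov / var_x                      # change in target per unit of the driver
    correlation = cov / sqrt(var_x * var_y)  # sign gives the direction of the relationship
    return slope, correlation


# Hypothetical target (monthly sales) and two candidate driver columns.
sales = [100.0, 120.0, 140.0, 160.0]
drivers = {
    "ad_spend": [10.0, 12.0, 14.0, 16.0],  # rises as sales rise
    "discount": [8.0, 6.0, 4.0, 2.0],      # falls as sales rise
}

for name, column in drivers.items():
    slope, corr = slope_and_correlation(column, sales)
    print(f"{name}: coefficient={slope:.2f}, correlation={corr:+.2f}")
# ad_spend: coefficient=10.00, correlation=+1.00
# discount: coefficient=-10.00, correlation=-1.00
```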

Hitmap

This dataset contains information about the decision tree's leaf nodes. Each row in the table represents a leaf node and contains information describing what that leaf node represents, such as segment size, confidence, and expected number of rows. For example, the expected number of correct predictions equals Segment Size * Confidence.

Oracle Analytics generates the Hitmap dataset when you select these model and algorithm combinations.

| Model | Algorithm |
|---|---|
| Numeric | CART for Numeric Prediction |
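
The expected-correct formula can be applied row by row. In this Python sketch each hypothetical leaf node has a segment size (rows in the leaf) and a confidence expressed as a fraction between 0 and 1. The node names and values are invented, and the real dataset's column names may differ; if your dataset reports confidence as a percentage, divide it by 100 first.

```python
def expected_correct(segment_size, confidence):
    """Expected correct predictions in one leaf node: Segment Size * Confidence."""
    return segment_size * confidence


# Hypothetical Hitmap rows: (leaf node, segment size in rows, confidence as a fraction).
leaf_nodes = [
    ("node_1", 200, 0.90),
    ("node_2", 150, 0.60),
    ("node_3", 50, 0.50),
]

for node, size, confidence in leaf_nodes:
    print(f"{node}: {expected_correct(size, confidence):.0f} expected correct of {size} rows")

total_expected = sum(expected_correct(size, conf) for _, size, conf in leaf_nodes)
total_rows = sum(size for _, size, _ in leaf_nodes)
print(f"all leaves: {total_expected:.0f} expected correct of {total_rows} rows")
```

Summing across leaves gives a quick estimate of how many rows the whole tree is expected to predict correctly.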

Residuals

This dataset provides information on the quality of the residual predictions. A residual is the difference between the measured value and the predicted value of a regression model. This dataset contains an aggregated sum of the absolute differences between the actual and predicted values for all columns in the dataset.

Oracle Analytics generates the Residuals dataset when you select these model and algorithm combinations.

| Model | Algorithms |
|---|---|
| Numeric | Linear Regression, Elastic Net Linear Regression, CART for Numeric Prediction |
| Binary Classification | CART (Decision Tree) |
| Multi Classification | CART (Decision Tree) |
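
To make the definition concrete, this Python sketch computes each row's residual and the sum of absolute residuals for a handful of hypothetical actual and predicted values. It aggregates over the rows of a single target column, which is an assumption about how the sum is grouped.

```python
# Hypothetical measured (actual) and model-predicted values for four rows.
actual = [10.0, 12.0, 9.0, 15.0]
predicted = [11.0, 11.5, 9.0, 13.0]

# Residual = actual (measured) value - predicted value, one per row.
residuals = [a - p for a, p in zip(actual, predicted)]

# Aggregated sum of the absolute differences between actual and predicted values.
sum_abs_residuals = sum(abs(r) for r in residuals)

print(residuals)          # [-1.0, 0.5, 0.0, 2.0]
print(sum_abs_residuals)  # 3.5
```

Taking absolute values keeps over-predictions (negative residuals) and under-predictions (positive residuals) from cancelling each other out in the total.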

Statistics

This dataset's metrics depend on the algorithm used to generate it:

- Linear Regression, CART for Numeric Prediction, Elastic Net Linear Regression: these algorithms contain R-Square, R-Square Adjusted, Mean Absolute Error (MAE), Mean Squared Error (MSE), Relative Absolute Error (RAE), Relative Squared Error (RSE), and Root Mean Squared Error (RMSE).

- CART (Classification and Regression Trees), Naive Bayes Classification, Neural Network, Support Vector Machine (SVM), Random Forest, Logistic Regression: these algorithms contain Accuracy and Total F1.

Oracle Analytics generates the Statistics dataset when you select these model and algorithm combinations.

| Model | Algorithm |
|---|---|
| Numeric | Linear Regression, Elastic Net Linear Regression, CART for Numeric Prediction |
| Binary Classification | Logistic Regression, CART (Decision Tree), Naive Bayes, Neural Network, Random Forest, Support Vector Machine |
| Multi Classification | Naive Bayes, Neural Network, Random Forest, Support Vector Machine |
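
For the numeric algorithms, this Python sketch computes the listed statistics from hypothetical actual and predicted values using common textbook definitions. The relative errors (RAE, RSE) compare the model with a baseline that always predicts the mean of the actual values, and R-Square Adjusted needs the number of input attributes, passed here as the hypothetical parameter `p`. Oracle Analytics' exact formulas aren't documented in this section, so treat these as an assumption.

```python
from math import sqrt


def regression_statistics(actual, predicted, p):
    """Common regression quality metrics; p is the number of input attributes."""
    n = len(actual)
    mean_actual = sum(actual) / n
    abs_err = sum(abs(a - f) for a, f in zip(actual, predicted))
    sq_err = sum((a - f) ** 2 for a, f in zip(actual, predicted))
    # Baseline errors of a model that always predicts the mean.
    abs_dev = sum(abs(a - mean_actual) for a in actual)
    sq_dev = sum((a - mean_actual) ** 2 for a in actual)

    r_square = 1 - sq_err / sq_dev
    return {
        "R-Square": r_square,
        "R-Square Adjusted": 1 - (1 - r_square) * (n - 1) / (n - p - 1),
        "MAE": abs_err / n,
        "MSE": sq_err / n,
        "RAE": abs_err / abs_dev,
        "RSE": sq_err / sq_dev,
        "RMSE": sqrt(sq_err / n),
    }


actual = [3.0, 5.0, 7.0, 9.0, 11.0]
predicted = [2.5, 5.5, 7.0, 8.0, 11.5]
stats = regression_statistics(actual, predicted, p=1)
for name, value in stats.items():
    print(f"{name}: {value:.4f}")
```

With these definitions R-Square equals 1 - RSE, so a model that does no better than predicting the mean scores 0, and a perfect model scores 1.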

Summary

This dataset contains information such as Target name and Model name.

Oracle Analytics generates the Summary dataset when you select these model and algorithm combinations.

| Model | Algorithms |
|---|---|
| Binary Classification | Naive Bayes, Neural Network, Support Vector Machine |
| Multi Classification | Naive Bayes, Neural Network, Support Vector Machine |

Find a Predictive Model's Related Datasets

Related datasets are generated when you train a predictive model.

- On the Home page, click Navigator, and then click Machine Learning.

- Click the menu icon for a training model and select Inspect.

- Click the Related tab to access the model's related datasets.

- Double-click a related dataset to view it or to use it in a workbook.

Add a Predictive Model to a Workbook

When you create a scenario in a workbook, you apply a predictive model to the workbook's dataset to reveal the trends and patterns that the model was designed to find.

Note:

You can't apply an Oracle machine learning model to a workbook's data.