19 Test and Debug Oracle JET Apps

Test Oracle JET Apps

Tests help you build complex Oracle JET apps quickly and reliably by preventing regressions and encouraging you to create apps that are composed of testable functions, modules, classes, and components.

We recommend that you write tests as early as possible in your app’s development cycle. The longer that you delay testing, the more dependencies the app is likely to have, and the more difficult it will be to begin testing.

Testing Types

There are three main testing types that you should consider when testing Oracle JET apps.

- Unit Testing

  - Unit testing checks that all inputs to a given function, class, or component are producing the expected output or response.

  - These tests are typically applied to self-contained business logic, components, classes, modules, or functions that do not involve UI rendering, network requests, or other environmental concerns.

    Note that REST service APIs should be tested independently.

  - Unit tests are aware of the implementation details and dependencies of a component and focus on isolating the tested component.

- Component Testing

  - Component testing checks that individual components can be interacted with and behave as expected. These tests import more code than unit tests, are more complex, and require more time to execute.

  - Component tests should catch issues related to your component's properties, events, the slots that it provides, styles, classes, lifecycle hooks, and more.

  - These tests are unaware of the implementation details of a component; they mock up as little as possible in order to test the integration of your component and the entire system.

    You should not mock up child components in component tests but instead check the interactions between your component and its children with a test that interacts with the components as a user would (for example, by clicking on an element).

- End-to-End Testing

  - End-to-end testing, which often involves setting up a database or other backend service, checks features that span multiple pages and make real network requests against a production-built JET app.

End-to-end testing is meant to test the functionality of an entire app, not just its individual components. Therefore, use unit tests and component tests when testing specific components of your Oracle JET apps.

Unit Testing

Unit testing should be the first and most comprehensive form of testing that you perform.

The purpose of unit testing is to ensure that each unit of software code is coded correctly, works as expected, and returns the expected outputs for all relevant inputs. A unit can be a function, method, module, object, or other entity in an app’s source code.

Unit tests are small, efficient tests created to execute and verify the lowest level of code and to test those individual entities in isolation. By isolating functionality, we remove external dependencies that aren't relevant to the unit being tested and make the source of a failure easier to identify.

Unit tests should interact with the component's public application programming interface (API) and pass the API as many different combinations of test data as necessary to exercise as many of the component's code paths as possible. This includes testing the component's properties, events, methods, and slots.
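To make the idea of exercising many input combinations concrete, the following TypeScript sketch drives a small function through a table of cases that together cover every branch. The function `clampPercent`, the `Case` shape, and the `runCases` helper are hypothetical and exist only for illustration; the sketch uses plain comparisons rather than Mocha and Chai so that it runs without a test runner.

```typescript
// Hypothetical unit under test: clamps a percentage to the range 0..100.
// NaN is treated as 0 so that a bad input never reaches the UI.
function clampPercent(value: number): number {
  if (isNaN(value) || value < 0) {
    return 0;
  }
  return value > 100 ? 100 : value;
}

// One row per input combination; together the rows cover every branch above.
interface Case {
  name: string;
  input: number;
  expected: number;
}

const cases: Case[] = [
  { name: "negative values clamp to 0", input: -5, expected: 0 },
  { name: "lower bound is kept", input: 0, expected: 0 },
  { name: "in-range values pass through", input: 42.5, expected: 42.5 },
  { name: "upper bound is kept", input: 100, expected: 100 },
  { name: "values above 100 clamp to 100", input: 150, expected: 100 },
  { name: "NaN is treated as 0", input: NaN, expected: 0 },
];

// Runs every case and collects failures instead of stopping at the first one,
// so a single run reports every broken code path.
function runCases(fn: (value: number) => number, table: Case[]): string[] {
  const failures: string[] = [];
  for (const c of table) {
    const actual = fn(c.input);
    if (actual !== c.expected) {
      failures.push(`${c.name}: expected ${c.expected}, got ${actual}`);
    }
  }
  return failures;
}

const report = runCases(clampPercent, cases);
console.log(report.length === 0 ? "all cases passed" : report.join("\n"));
```

In a spec file, each row would more typically become its own Mocha `it(...)` case with a Chai assertion such as `expect(clampPercent(input)).to.equal(expected)`, so that each failure is reported by name.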

Unit tests that you create should adhere to the following principles:

- Easy to write: Unit testing should be your main testing focus, and because many unit tests will be written, they should typically be easy to write. The standard testing technology stack, combined with the recommended development environments, helps you write tests easily and quickly.

- Readable: The intent of each test should be clear, not only from its comments but also from the code itself. Readable tests are important when someone needs to debug a failure.

- Reliable: Tests should consistently pass when no bugs are introduced into the component code and only fail when there are true bugs or new, unimplemented behaviors. The tests should also execute reliably regardless of the order in which they’re run.

- Fast: Tests should be able to execute quickly and report issues immediately to the developer. A slow test could be a sign that it depends on, or interacts with, an external system.

- Discrete: Tests should exercise the smallest unit of work possible, not only to ensure that all units are properly verified but also to aid in the detection of bugs when failures occur. In each unit test, individual test cases should independently target a single attribute of the code to be verified.

- Independent: Above all else, unit tests should be independent of one another, free of external dependencies, and be able to run consistently irrespective of the environment in which they’re executed.

To shield unit tests from external changes that may affect their outcomes, unit tests focus solely on verifying code that is wholly owned by the component and avoid verifying the behaviors of anything external to that component. When external dependencies are needed, consider using mocks to stand in their place.
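The following sketch illustrates replacing an external dependency with a mock. `TaxRateService`, `OrderTotalCalculator`, and `MockTaxRateService` are hypothetical names; in a real app the service would presumably call a REST endpoint, which a unit test should not depend on. The mock returns a fixed rate and records how it was called, so the test can check both the output and the interaction.

```typescript
// Hypothetical external dependency; in a real app this would likely call a REST endpoint.
interface TaxRateService {
  getRate(region: string): Promise<number>;
}

// Unit under test: owns only the arithmetic, not the network call.
class OrderTotalCalculator {
  private readonly rates: TaxRateService;

  constructor(rates: TaxRateService) {
    this.rates = rates;
  }

  async total(subtotal: number, region: string): Promise<number> {
    const rate = await this.rates.getRate(region);
    // Round to cents so floating-point noise doesn't leak into displayed totals.
    return Math.round(subtotal * (1 + rate) * 100) / 100;
  }
}

// Hand-written mock: returns a canned rate and records each call,
// so the test needs no network and behaves the same on every run.
class MockTaxRateService implements TaxRateService {
  readonly requestedRegions: string[] = [];
  private readonly cannedRate: number;

  constructor(cannedRate: number) {
    this.cannedRate = cannedRate;
  }

  getRate(region: string): Promise<number> {
    this.requestedRegions.push(region);
    return Promise.resolve(this.cannedRate);
  }
}

// Usage: check both the result and how the dependency was used.
const mockRates = new MockTaxRateService(0.08);
new OrderTotalCalculator(mockRates).total(100, "CA").then((total) => {
  console.log(total); // 108
  console.log(mockRates.requestedRegions);
});
```

Sinon, recommended later in this chapter, can generate equivalent stand-ins (for example, `sinon.stub().resolves(0.08)`), which avoids hand-writing a mock class for every dependency.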

Component Testing

The purpose of component testing is to establish that an individual component behaves and can be interacted with according to its specifications. In addition to verifying that your component accepts the correct inputs and produces the right outputs, component tests also include checking for issues related to your component's properties, events, slots, styles, classes, lifecycle hooks, and so on.

Because a component is made up of many units of code, component testing is more complex and takes longer to conduct than unit testing. However, it is still necessary: the individual units within your component may work on their own, but issues can occur when you use them together.

Component testing is a form of closed-box testing, meaning that the test evaluates the behavior of the program without considering the details of the underlying code. You should begin testing a component in its entirety immediately after development, though the tested component may in part depend on other components that have not yet been developed. Depending on the development lifecycle model, component testing can be done in isolation from other components in the system, in order to prevent external influences.

If the components that your component depends on have not yet been developed, then use dummy objects instead of the real components. These dummy objects are the stub (the called function) and the driver (the calling function).
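A minimal sketch of the two dummy-object roles, assuming a hypothetical `ShippingForm` component whose `AddressLookup` dependency has not been written yet. The stub is called by the component under test and returns fixed answers; the driver calls the component under test in place of the code that will eventually use it. All names and data here are illustrative.

```typescript
// Contract of a component that has not been developed yet (hypothetical).
interface AddressLookup {
  findCity(postalCode: string): string | undefined;
}

// Component under test (hypothetical): calls AddressLookup.
class ShippingForm {
  city = "";
  error = "";
  private readonly lookup: AddressLookup;

  constructor(lookup: AddressLookup) {
    this.lookup = lookup;
  }

  onPostalCodeEntered(postalCode: string): void {
    const city = this.lookup.findCity(postalCode);
    this.city = city ?? "";
    this.error = city === undefined ? "Unknown postal code" : "";
  }
}

// Stub (called function): stands in for the missing AddressLookup and
// returns fixed answers, so the test controls what the component receives.
const addressLookupStub: AddressLookup = {
  findCity: (postalCode) => (postalCode === "12345" ? "Springfield" : undefined),
};

// Driver (calling function): stands in for the code that will eventually
// call ShippingForm, feeding it inputs and collecting its resulting state.
function driveShippingForm(postalCodes: string[]): { city: string; error: string }[] {
  const form = new ShippingForm(addressLookupStub);
  return postalCodes.map((code) => {
    form.onPostalCodeEntered(code);
    return { city: form.city, error: form.error };
  });
}

console.log(driveShippingForm(["12345", "99999"]));
```

Once the real `AddressLookup` exists, the stub can be swapped for it to move from a small component test toward a large one.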

Depending on the depth of the test level, there are two types of component tests: small component tests and large component tests.

When component testing is done in isolation from other components, it is called "small component testing." Small component tests do not consider the component's integration with other components.

When component testing is performed without isolating the component from other components, it is called "large component testing", or "component testing" in general. These tests are done when there is a dependency on the flow of functionality of the components, and therefore we cannot isolate them.

End-to-End Testing

End-to-end testing is a method of evaluating a software product by examining its behavior from start to finish. This approach verifies that the app operates as intended and confirms that all integrated components function correctly in relation to one another. Additionally, end-to-end testing exercises the product's system dependencies to help ensure that the product performs as expected.

The primary goal of end-to-end testing is to replicate the end-user experience by simulating real-world scenarios and evaluating the system and its components for proper integration and data consistency. This approach allows for the validation of the system's performance from the perspective of the user.

End-to-end testing provides the following benefits:

- Comprehensive test coverage

- Assurance of the app's accuracy

- Faster time to market

- Reduced costs

- Identification of bugs

- Verification of the system's flow

- Increased coverage of testing areas

- Identification of issues related to subsystems

End-to-end testing is useful to several roles on a development team:

- Developers appreciate end-to-end testing because it allows them to offload testing responsibilities.

- Testers find it useful because it enables them to write tests that simulate real-world scenarios and avoid potential problems.

- Managers benefit from end-to-end testing because it helps them understand the impact of a failing test on the end user.

An end-to-end testing lifecycle consists of the following phases:

- Test Planning: Outlining key tasks, schedules, and resources required

- Test Design: Creating test specifications, identifying test cases, assessing risks, analyzing usage, and scheduling tests

- Test Execution: Carrying out the test cases and documenting the results

- Results Analysis: Reviewing the test results, evaluating the testing process, and conducting further testing as required

There are two methods of end-to-end testing:

- Horizontal Testing: This method involves testing across multiple apps and is often used within a single ERP (Enterprise Resource Planning) system.

- Vertical Testing: This approach involves testing in layers, where tests are conducted in a sequential, hierarchical order. This method is used to test critical components of a complex computing system and does not typically involve users or interfaces.

End-to-end testing is typically performed on finished products and systems, with each review serving as a test of the completed system. If the system does not produce the expected output or if a problem is detected, a second test will be conducted. In this case, the team will need to record and analyze the data to determine the source of the issue, fix it, and retest.

The following metrics are commonly used to track end-to-end testing:

- Test Case Preparation Status: Tracks the progress of the test cases currently being prepared compared with the planned test cases.

- Test Progress Tracking: Weekly monitoring of test progress that reports the test completion percentage and the status of passed/failed, executed/unexecuted, and valid/invalid test cases.

- Defects Status and Details: Provides a weekly percentage of open and closed defects and a breakdown of defects by severity and priority.

- Environment Availability: The number of operational hours and the hours scheduled for testing each day.
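Several of these metrics are simple ratios. As a sketch, assuming a hypothetical snapshot of counts exported from whatever tool your team uses to track test cases and defects, they could be computed as follows. The field names, and the choice of which ratios to report, are assumptions rather than a prescribed format.

```typescript
// Hypothetical snapshot of one test cycle's counts.
interface TestCycleSnapshot {
  planned: number;  // test cases planned for the cycle
  prepared: number; // test cases written so far
  executed: number; // test cases run at least once
  passed: number;   // executed test cases that passed
  openDefects: number;
  closedDefects: number;
}

interface ProgressReport {
  preparationPct: number; // prepared vs. planned
  executionPct: number;   // executed vs. prepared
  passPct: number;        // passed vs. executed
  openDefectPct: number;  // open vs. all defects
}

// Percentage rounded to one decimal place; 0 when there is nothing to measure.
function pct(part: number, whole: number): number {
  return whole === 0 ? 0 : Math.round((part / whole) * 1000) / 10;
}

function summarize(s: TestCycleSnapshot): ProgressReport {
  return {
    preparationPct: pct(s.prepared, s.planned),
    executionPct: pct(s.executed, s.prepared),
    passPct: pct(s.passed, s.executed),
    openDefectPct: pct(s.openDefects, s.openDefects + s.closedDefects),
  };
}

// Usage with illustrative numbers for one week.
const weekly = summarize({
  planned: 200, prepared: 150, executed: 120, passed: 102, openDefects: 6, closedDefects: 18,
});
// preparation 75%, execution 80%, pass 85%, open defects 25%
console.log(weekly);
```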

Composite Component Unit Testing

Composite components comprise a few different pieces, including the view, the viewModel, and the bindings that connect them. The view is the visible part of the component and the means by which the user interacts with it, whereas the viewModel controls the behavior of the component in response to some stimulus. The view-to-viewModel bindings tie together the view and its user interactions and the viewModel behaviors that are run in response.

There are generally two approaches to unit testing components:

- View testing, or DOM (Document Object Model) testing, interacts with the component's UI in much the same way that a user would: by clicking, typing, and generally interacting with the component through its visible elements. While it may invoke actions in the controller due to bindings written into the view, the purpose is to verify not the behavior but rather that the bindings themselves call the correct actions.

- ViewModel testing focuses solely on the controller layer of the component. This type of testing instantiates the controller class and calls its public functions to assert behavior. Any dependencies that the controller may have on external modules are mocked out so that the test can be concerned with only the viewModel code. This testing is done independently of the view. The test is not interacting with the view to call the controller functions; rather, it calls the functions directly and performs assertions on the results from the call.
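The following sketch shows the shape of direct viewModel testing. `CalculatorViewModel` and `NumberFormatter` are hypothetical, loosely modeled on a calculator that adds two numbers; a real JET viewModel would typically expose Knockout observables rather than the plain properties used here, so treat this as an outline of the approach rather than JET code. The test instantiates the viewModel, supplies a mock for its dependency, calls a public function directly, and inspects the result without rendering a view.

```typescript
// Hypothetical dependency that the viewModel would normally import; mocked below.
interface NumberFormatter {
  format(value: number): string;
}

// Hypothetical composite viewModel. Plain properties stand in for the
// Knockout observables a JET viewModel would typically use.
class CalculatorViewModel {
  firstNumber = 0;
  secondNumber = 0;
  result = "";
  private readonly formatter: NumberFormatter;

  constructor(formatter: NumberFormatter) {
    this.formatter = formatter;
  }

  // Public function that a button click would invoke through a view binding.
  calculate(): void {
    this.result = this.formatter.format(this.firstNumber + this.secondNumber);
  }
}

// The test drives the viewModel directly: no view, no DOM, no browser.
const formatterMock: NumberFormatter = { format: (value) => `Sum: ${value}` };
const vm = new CalculatorViewModel(formatterMock);
vm.firstNumber = 2;
vm.secondNumber = 3;
vm.calculate();
console.log(vm.result); // prints "Sum: 5"
```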

Note:

The view-to-viewModel bindings are the key to interactions; they define what is called for each stimulus. However, they are usually only tested during integration, not during unit testing.

We recommend unit testing composite components using direct viewModel testing, which focuses on the smallest pieces of the code that are public, namely, the API. For composite components, the public API is defined by the viewModel and surfaced on the custom web element. This makes it possible to test the viewModel methods without running the entire component through the browser and rendering a UI. ViewModel testing allows inputs to methods to be supplied by the test, and the output can be examined for correctness. This form of testing discourages direct UI interactions and instead prefers to simulate the environment in which the component would run.

When unit testing a composite component, consider covering the following aspects:

- Properties

- Events

- Methods

- Slots

- Accessibility

- Security

- Localization

- User Interaction

There are circumstances where viewModel testing alone isn't sufficient to cover all aspects of the component, such as simulating user events to ensure that bindings are correctly applied. For these scenarios, view testing via the DOM or WebDriver can be used.

About the Oracle JET Testing Technology Stack

The recommended technology stack for testing Oracle JET apps includes Karma, Mocha, Chai, and Sinon.

To aid developers in testing their apps and leveraging these technologies, the ojet add testing command was added to the Oracle JET CLI with the release of JET 15. The command configures an app's testing environment and sets up a framework for testing JET components. Once an app is configured for testing, you can use the recommended testing technology stack to write and run your tests.

- Karma is a test runner for JavaScript that runs on NodeJS. It runs an HTTP server to make project files available to the browser instances that it launches and manages. Karma loads the necessary files into the browsers, executing source code against test code.

  Note:

  Since different browsers can have different DOM implementations, testing against most of the major browsers is essential if you want to ensure that your app will behave properly for the majority of its users.

  Karma monitors the files specified in its main configuration file, karma.conf.js, for changes. When a file changes, Karma triggers a test run by signaling the testing server to have all connected browsers run the test code again. The server collects the test results from each browser and presents them to the developer in the CLI. Essentially, Karma starts both the browsers and Mocha. Mocha in turn executes the tests.

- Mocha is a JavaScript testing framework, the library against which tests are written; it allows for the declaration of test suites and cases. It can be installed globally or as a development dependency for your project, or you can set it up to run test cases directly in the web browser.

  Mocha runs tests serially, allowing for flexible and accurate reporting while mapping uncaught exceptions to the correct test cases. Mocha simplifies asynchronous testing: a test can accept a callback (conventionally named done) and call it once the test is finished, or return a Promise. A test that omits the callback runs synchronously.

- Chai is an assertion library for NodeJS and the browser that can be paired with any JavaScript testing framework. It offers several assertion styles (should, expect, and assert) that a developer can choose from, and assertions written with Chai read much like English sentences.

- Where mocks are required, we recommend using Sinon, a library that enables the mocking of objects and functions to allow the tests to run without requiring external dependencies.

For UI automation testing, we recommend using Selenium WebDriver in conjunction with the Oracle® JavaScript Extension Toolkit (Oracle JET) WebDriver.

Configure Oracle JET Apps for Testing

The ojet add testing Command

Use the ojet add testing CLI command to add testing capability to your Oracle JET app by setting up the framework and libraries required for testing JET components.

Run the command from a terminal window in your app's root directory. After it configures your app's testing environment, you can proceed with testing your app by using Karma as a test runner and Mocha and Chai to write your tests. You can also use Sinon to include spies, stubs, and mocks for your tests.

The configuration performed by the ojet add testing command also creates some essential testing directories and files within your app.

The test-config folder is added to your app's root directory. It contains three configuration files that are required for testing: karma.conf.js, test-main.js, and tsconfig.json. The Karma configuration file karma.conf.js is the main configuration file for your tests, test-main.js is the RequireJS configuration file that is loaded by Karma, and tsconfig.json is the TypeScript configuration file for the test files.

Test files, also called spec files, are named with the .spec.ts suffix so that tooling recognizes them. If a component has no spec files, then they are injected. Spec files are located within a __tests__ folder inside the component folder, such as /src/ts/jet-composites/oj-calculate-value/__tests__. This folder holds the test files that you write for your component and, by default, is created with three test files containing dummy tests: oj-calculate-value-knockout.spec.ts, oj-calculate-value-ui.spec.ts, and oj-calculate-value-viewmodel.spec.ts.

Note:

If you create a new component or pack from the command line after running the ojet add testing command on your project, then the __tests__ folder and files are injected by default.

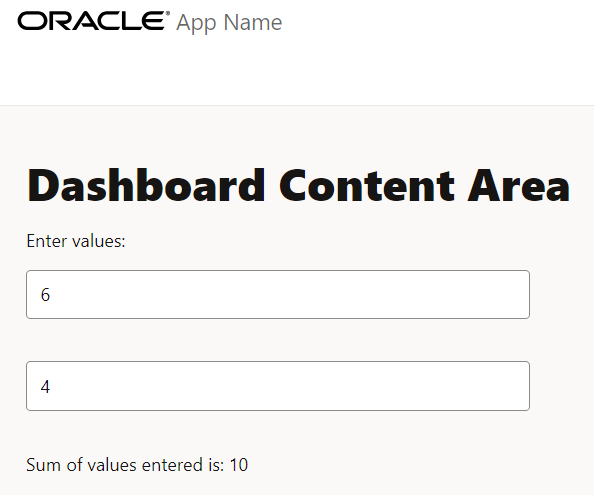

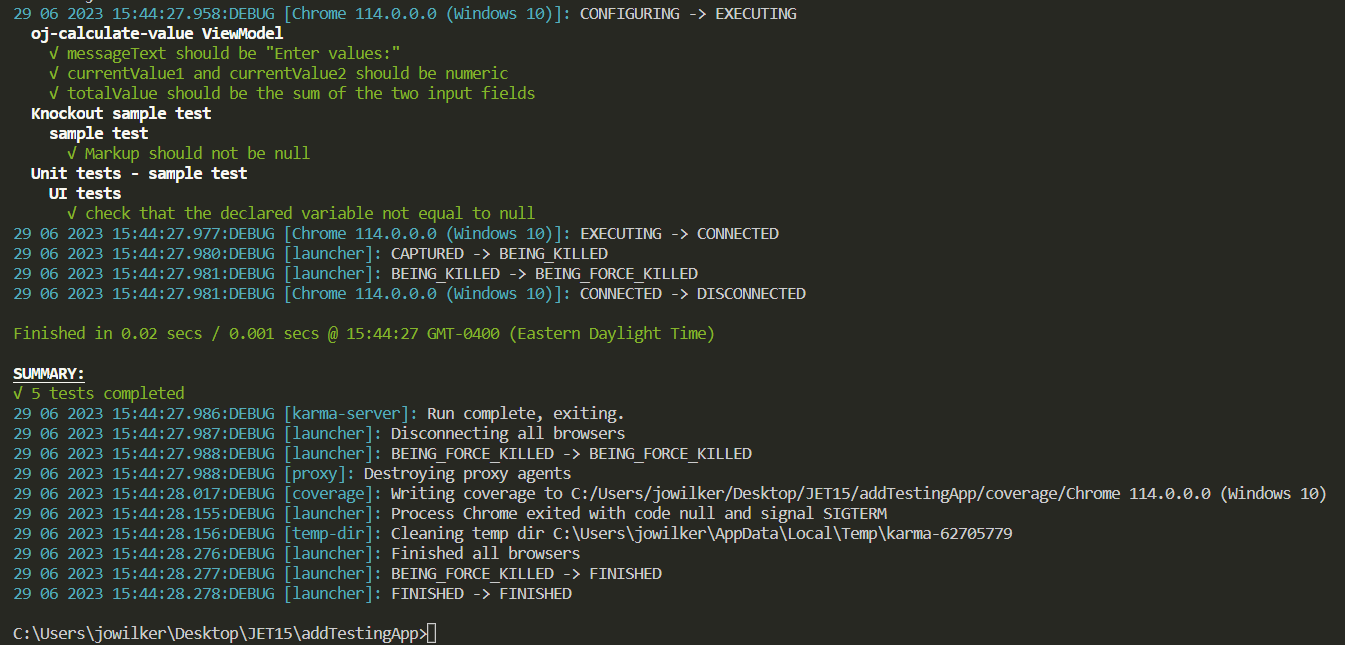

Testing Demo

Here we will use the ojet add testing command to configure a testing environment for an Oracle JET app. The app used in this testing demo contains a calculator component that takes two user-submitted numbers, calculates their sum, and displays the result in the app's dashboard. Once the app's testing environment is set up, we will run tests on the calculator component.

- First, download JET-Test-Example.zip and unzip it to your working directory. Open a terminal window in your app's root directory and run the ojet restore command.

- Observe the app's directory structure. In the /src/ts/jet-composites directory is the oj-calculate-value component. The tests that you will run on the calculator component, after you have configured your app for testing, are included in the app's root directory as the text file oj-calculate-value-viewmodel-spec-ts.txt.

  Use the ojet serve command to run the app and manually test that the calculator component works as expected.

  Note:

  Before running tests in your project, you must first run ojet build or ojet serve, or the tests will fail.

- Run the ojet add testing command from a terminal window in your app's root directory.

  In your app's directory structure, you can see that the test-config folder was added to your app's root directory and the __tests__ folder was added to the /src/ts/jet-composites/oj-calculate-value directory.

- Rename the file oj-calculate-value-viewmodel-spec-ts.txt in your app's root directory to oj-calculate-value-viewmodel.spec.ts and use it to replace the file with the same name in the /src/ts/jet-composites/oj-calculate-value/__tests__ directory. The file contains three sample unit tests written in Chai for the oj-calculate-value component's viewModel.

- Run the tests. Enter the script npm run test in the command line and observe the results in the terminal window.

- Note the coverage directory that was created in the root directory of your app after running the tests. It contains the oj-calculate-value-viewModel.js.html file, which you can open in your browser to view more information about the test run.

Use BusyContext API in Automated Testing

Use BusyContext to wait for a component or other condition to complete some action before interacting with it.

In particular, use BusyContext in test automation when you want to wait for an animation to complete, a JET page to load, or a data fetch to complete.

Note:

Animations should not be disabled during testing. Tests should run on what the user sees and encounters in your app. For example, by turning off animations, you remove the possibility of finding race conditions that would only appear with the animations enabled.

Wait Scenarios

The Busy Context API will block until all the busy states resolve or a timeout period lapses. There are four primary wait scenarios:

- Components that implement animation effects

- Components that fetch data from a REST endpoint

- Pages that load bootstrap files, such as the Oracle JET libraries loaded with RequireJS

- Customer-defined scenarios that are not limited to Oracle JET, such as blocking conditions associated with app domain logic

Determining the Busy Context’s Scope

The first step for waiting on a busy context is to determine the wait condition. You can scope the granularity of a busy context for the entirety of the page or limit the scope to a specific DOM element. Busy contexts have hierarchical dependencies mirroring the document's DOM structure, with the root being the page context. Depending on your particular scenario, target one of the following busy context scopes:

- Scoped for the page

  Choose the page busy context to represent the page as a whole. Automation developers commonly need to wait until the page is fully loaded before starting automation. Also, automation developers are usually interested in testing the functionality of an app that has multiple Oracle JET components rather than a single component.

  ```javascript
  var busyContext = Context.getPageContext().getBusyContext();
  ```

- Scoped for the nearest DOM element

  Choose a busy context scoped for a DOM node when your app must wait until a specific component’s operation completes. For example, you may want to wait until an ojPopup completes an open or close animation before initiating the next task in the app flow. Use the data-oj-context marker attribute to define a busy context for a DOM subtree.

  ```html
  <div id="mycontext" data-oj-context>
    ...
    <!-- JET content -->
    ...
  </div>
  ```

  ```javascript
  var node = document.querySelector("#mycontext");
  var busyContext = Context.getContext(node).getBusyContext();
  ```

Determining the Ready State

After obtaining a busy context, the next step is to query its busy state. BusyContext has two operations for querying the ready state: isReady() and whenReady(). The isReady() method immediately returns the state of the busy context. The whenReady() method returns a Promise that resolves when the busy states resolve or a timeout period lapses.

The following example shows how you can use isReady() with WebDriver.

```java
import java.time.Duration;

import org.junit.Assert;
import org.openqa.selenium.JavascriptExecutor;
import org.openqa.selenium.TimeoutException;
import org.openqa.selenium.WebDriver;
import org.openqa.selenium.WebDriverException;
import org.openqa.selenium.support.ui.ExpectedConditions;
import org.openqa.selenium.support.ui.WebDriverWait;

// Hypothetical holder class; move these members into your own test utilities.
public final class JetWaitUtils
{
  public static void waitForJetPageReady(WebDriver webDriver, long timeoutInMillis)
  {
    try
    {
      // Selenium 4 constructor. With Selenium 3, pass the timeout in seconds instead:
      // new WebDriverWait(webDriver, timeoutInMillis / 1000)
      final WebDriverWait wait = new WebDriverWait(webDriver, Duration.ofMillis(timeoutInMillis));

      // Eat any WebDriverException
      // "ExpectedConditions.jsReturnsValue" will continue to be called if it doesn't return a value.
      // /ExpectedConditions.java#L1519
      wait.ignoring(WebDriverException.class);
      wait.until(ExpectedConditions.jsReturnsValue(_PAGE_WHEN_READY_SCRIPT));
    }
    catch (TimeoutException toe)
    {
      // Collect the busy states that were still pending when the wait timed out.
      String evalString = "return oj.Context.getPageContext().getBusyContext().getBusyStates().join('\\n');";
      Object busyStatesLog = ((JavascriptExecutor) webDriver).executeScript(evalString);
      String retValue = (busyStatesLog != null) ? busyStatesLog.toString() : "";

      // Fail even if no busy states could be read, so that a timeout is never silently ignored.
      Assert.fail("waitForJetPageReady failed - !oj.Context.getPageContext().getBusyContext().isReady() - busyStates: " +
                  retValue);
    }
  }

  // The assumption with the page when ready script is that it will continue to execute until a value is
  // returned or the timeout period is reached.
  //
  // There are three areas of concern:
  // 1) Has the app opted in to the whenReady wait for bootstrap?
  // 2) If the app has opted in to the JET whenReady strategy for bootstrap "('oj_whenReady' in window)",
  //    wait until JET core is loaded and has a ready state.
  // 3) If the app has not opted in to the JET whenReady bootstrap, make the isReady check if JET core has
  //    loaded. If JET core is not loaded, we assume it is not a JET page.

  // Check to determine if the page is participating in the JET whenReady bootstrap wait period.
  private static final String _BOOTSTRAP_WHEN_READY_EXP = "(('oj_whenReady' in window) && window['oj_whenReady'])";

  // Assumption is we must wait until JET core is loaded and the busy state is ready.
  private static final String _WHEN_READY_WITH_BOOTSTRAP_EXP =
    "(window['oj'] && window['oj']['Context'] && oj.Context.getPageContext().getBusyContext().isReady() ?" +
    " 'ready' : '')";

  // Assumption is the JET libraries have already been loaded. If they have not, it's not a JET page.
  // Return JET missing in action "JetMIA" if JET core is not loaded.
  private static final String _WHEN_READY_NO_BOOTSTRAP_EXP =
    "(window['oj'] && window['oj']['Context'] ? " +
    "(oj.Context.getPageContext().getBusyContext().isReady() ? 'ready' : '') : 'JetMIA')";

  // Complete when ready script
  private static final String _PAGE_WHEN_READY_SCRIPT =
    "return (" + _BOOTSTRAP_WHEN_READY_EXP + " ? " + _WHEN_READY_WITH_BOOTSTRAP_EXP + " : " +
    _WHEN_READY_NO_BOOTSTRAP_EXP + ");";
}
```

The following example shows how you can use whenReady() with QUnit.

```javascript
// Assumes that Context is the ojs/ojcontext module (for example, loaded with
// require(['ojs/ojcontext'], function (Context) { ... })) and that the page
// contains an oj-popup with id "popup1" and a launcher with id "showPopup1".

// Utility function for creating a promise error handler
function getExceptionHandler(assert, done, busyContext)
{
  return function (reason)
  {
    if (reason && reason['busyStates'])
    {
      // whenReady timeout
      assert.ok(false, reason.toString());
    }
    else
    {
      // Unhandled JS exception
      var msg = reason ? reason.toString() : "Unknown Reason";
      if (busyContext)
        msg += "\n" + busyContext;
      assert.ok(false, msg);
    }
    // invoke done callback
    if (done)
      done();
  };
}

QUnit.test("popup open", function (assert)
{
  // default whenReady timeout used when argument is not provided
  Context.setBusyContextDefaultTimeout(18000);
  var done = assert.async();
  assert.expect(1);
  var popup = document.getElementById("popup1");
  // busy context scoped for the popup
  var busyContext = Context.getContext(popup).getBusyContext();
  var errorHandler = getExceptionHandler(assert, done, busyContext);
  popup.open("#showPopup1");
  busyContext.whenReady().then(function ()
  {
    assert.ok(popup.isOpen(), "popup is open");
    popup.close();
    busyContext.whenReady().then(function ()
    {
      done();
    }).catch(errorHandler);
  }).catch(errorHandler);
});
```

Creating Wait Conditions

JET components use the busy context to communicate blocking operations. You can add busy states to any scope of the busy context to block operations such as asynchronous data fetch.

The following high-level steps describe how to add a busy state to a busy context:

- Create a scoped busy context.

- Add a busy state to the busy context. You must add a description that describes the purpose of the busy state. Adding a busy state returns a resolve function that you call when it’s time to remove the busy state.

  Busy context dependency relationships are determined at the point the first busy state is added. If the DOM node is re-parented after a busy context was added, the context will maintain dependencies with any parent DOM contexts.

- Perform the operation that needs to be guarded with a busy state. These are usually asynchronous operations that some other app flow depends on for its completion.

- Resolve the busy state when the operation completes.

The app is responsible for releasing the busy state. The app must manage a reference to the resolve function associated with a busy state, and it must be called to release the busy state. If the DOM node that the busy context is applied to is removed in the document before the busy state is resolved, the busy state will be orphaned and will never resolve.
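Because the app must always release the busy states it adds, one defensive pattern is to wrap the guarded operation so that the resolve function runs in a `finally` block. The sketch below assumes only what the steps above describe: `addBusyState` accepts an options object with a `description` and returns a resolve function. The `withBusyState` helper, the `BusyStateTarget` interface, and the `FakeBusyContext` class are hypothetical; in an app you would pass the busy context returned by `Context.getContext(node).getBusyContext()`.

```typescript
// Minimal structural type for the one busy-context method used here.
// In an app, pass Context.getContext(node).getBusyContext() or the page busy context.
interface BusyStateTarget {
  addBusyState(options: { description: string }): () => void;
}

// Hypothetical helper: adds a busy state, runs the guarded async operation,
// and calls the resolve function in `finally`, so the busy state is released
// even when the operation rejects.
async function withBusyState<T>(
  busyContext: BusyStateTarget,
  description: string,
  operation: () => Promise<T>
): Promise<T> {
  const resolve = busyContext.addBusyState({ description: description });
  try {
    return await operation();
  } finally {
    resolve();
  }
}

// Hypothetical fake for unit tests that run without JET: counts unresolved busy states.
class FakeBusyContext implements BusyStateTarget {
  pending = 0;
  readonly descriptions: string[] = [];

  addBusyState(options: { description: string }): () => void {
    this.pending++;
    this.descriptions.push(options.description);
    let released = false;
    return () => {
      // Ignore repeated calls so the count stays accurate.
      if (!released) {
        released = true;
        this.pending--;
      }
    };
  }
}

// Usage: the busy state is released even though the operation fails.
const fake = new FakeBusyContext();
withBusyState(fake, "#mycontext fetching data", () => Promise.reject(new Error("network down")))
  .catch(() => console.log(fake.pending)); // 0
```

The helper does not prevent the orphaning case described above, in which the DOM node is removed from the document before the busy state is resolved.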

Debug Oracle JET Apps

Because Oracle JET web apps are client-side HTML5 apps written in JavaScript or TypeScript, you can use your favorite browser's debugging facilities.

Debug Web Apps

Use your source code editor and browser's developer tools to debug your Oracle JET app.

Developer tools for widely used browsers like Chrome, Edge, and Firefox provide a range of features that assist you in inspecting and debugging your Oracle JET app as it runs in the browser. Read more about the usage of these developer tools in the documentation for your browser.

By default, the ojet build and ojet serve commands use debug versions of the Oracle JET libraries. If you build or serve your Oracle JET app in release mode (by appending the --release parameter to the ojet build or ojet serve command), your app uses minified versions of the Oracle JET libraries. If you choose to debug an Oracle JET app that you built in release mode, you can use the --optimize=none parameter to make the minified output more readable by preserving line breaks and white space:

```
ojet build --release --optimize=none
ojet serve --release --optimize=none
```

Note that browser developer tools offer the option to "pretty print" minified source files to make them more readable, if you choose not to use the --optimize=none parameter.

You may also be able to install browser extensions that further assist you in debugging your app.

Finally, if you use a source code editor, such as Visual Studio Code, familiarize yourself with the debugging tools that it provides to assist you as you develop and debug your Oracle JET app.