3.1.1.2 Configuring the Runtime Server

For runtime, you can use a Spark Standalone or Hadoop YARN cluster. Only users with Administrator permissions can change the system settings in Oracle GoldenGate Stream Analytics (GGSA).

3.1.1.2.1 Configuring for Standalone Spark Runtime

- Click the user name at the top right corner of the screen.

- Click System Settings.

- Click Environment.

- Select the Runtime Server as Spark Standalone, and enter the following details:

- Spark REST URL: Enter the Spark standalone REST URL. If Spark standalone is HA enabled, you can enter a comma-separated list of active and standby nodes.

- Storage: Select the storage type for pipelines. To submit a GGSA pipeline to Spark, the pipeline must be copied to a storage location that is accessible by all Spark nodes.

- If the storage type is WebHDFS:

- Path: Enter the WebHDFS directory (hostname:port/path) where the generated Spark pipeline is copied to and then submitted from. This location must be accessible by all Spark nodes. The user specified in the authentication section below must have read-write access to this directory.

- HA Namenodes: If the hostname in the above URL refers to a logical HA cluster, specify the actual namenodes here, in the format Hostname1:Port, Hostname2:Port.

- If the storage type is HDFS:

- Path: The path has the form <HostOrIPOfNameNode><HDFS Path>. For example, xxx.xxx.xxx.xxx/user/oracle/ggsapipelines. The Hadoop user must have Write permissions. The folder is created automatically if it does not exist.

- HA Namenodes: If the hostname in the above URL refers to a logical HA cluster, specify the actual namenodes here, in the format Hostname1:Port, Hostname2:Port. This field is applicable only when the storage type is HDFS.

- Hadoop Authentication for WebHDFS and HDFS Storage Types:

- Simple authentication credentials:

- Protection Policy: Select a protection policy from the drop-down list.

- Username: Enter the user account to use for submitting Spark pipelines. This user must have read-write access to the Path specified above.

- Kerberos authentication credentials:

- Protection Policy: Select a protection policy from the drop-down list.

- Kerberos Realm: Enter the domain on which Kerberos authenticates a user, host, or service. This value is in the krb5.conf file.

- Kerberos KDC: Enter the server on which the Key Distribution Center is running. This value is in the krb5.conf file.

- Principal: Enter the GGSA service principal that is used to authenticate the GGSA web application against the Hadoop cluster for application deployment. This user should be the owner of the folder used to deploy the GGSA application in HDFS. You must also create this user on the YARN node manager.

- Keytab: Enter the keytab pertaining to the GGSA service principal.

- Yarn Resource Manager Principal: Enter the yarn principal. When a Hadoop cluster is configured with Kerberos, principals for Hadoop services such as hdfs, https, and yarn are created as well.


- If the storage type is NFS:

- Path: The path could be, for example, /oracle/spark-deploy.

Note:

The /oracle directory must already exist; spark-deploy is created automatically if it does not exist. You need Write permissions on the /oracle directory.


- Spark standalone master console port: Enter the port on which the Spark standalone console runs. The default port is 8080.

Note:

The order of the comma-separated ports should match the order of the comma-separated Spark REST URLs entered in the Spark REST URL field.

- Spark master username: Enter your Spark standalone server username.

- Spark master password: Click Change Password to change your Spark standalone server password.

Note:

You can change your Spark standalone server username and password on this screen. The username and password fields are blank by default.

- Click Save.
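The requirement that console ports follow the same order as the Spark REST URLs can be checked before the values are entered. The following is a minimal Python sketch, not part of GGSA: the function name `pair_rest_urls_with_ports`, the host names, and the port values are hypothetical, and it assumes one console port per REST URL.

```python
def pair_rest_urls_with_ports(rest_urls: str, console_ports: str) -> list[tuple[str, int]]:
    """Pair each comma-separated Spark REST URL with the console port at the same position."""
    urls = [u.strip() for u in rest_urls.split(",") if u.strip()]
    ports = [int(p.strip()) for p in console_ports.split(",") if p.strip()]
    if len(urls) != len(ports):
        # Assumption: every REST URL needs exactly one matching console port.
        raise ValueError(f"{len(urls)} REST URLs but {len(ports)} console ports")
    return list(zip(urls, ports))


# Hypothetical HA setup: active node first, standby node second.
pairs = pair_rest_urls_with_ports(
    "spark-master-1:6066, spark-master-2:6066",
    "8080, 8081",
)
print(pairs)
```

A mismatch in the number of entries raises an error here, which is one way to catch a forgotten port before saving the settings.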

3.1.1.2.2 Configuring for Hadoop Yarn Runtime

- Click the user name at the top right corner of the screen.

- Click System Settings.

- Click Environment.

- Select the Runtime Server as Yarn, and enter the following details:

- YARN Resource Manager URL: Enter the URL where the YARN Resource Manager is configured.

- Storage: Select the storage type for pipelines. To submit a GGSA pipeline to Spark, the pipeline must be copied to a storage location that is accessible by all Spark nodes.

- If the storage type is WebHDFS:

- Path: Enter the WebHDFS directory (hostname:port/path) where the generated Spark pipeline is copied to and then submitted from. This location must be accessible by all Spark nodes. The user specified in the authentication section below must have read-write access to this directory.

- HA Namenodes: Set the HA namenodes. If the hostname in the above URL refers to a logical HA cluster, specify the actual namenodes here, in the format Hostname1:Port, Hostname2:Port.

- If the storage type is HDFS:

- Path: The path has the form <HostOrIPOfNameNode><HDFS Path>. For example, xxx.xxx.xxx.xxx/user/oracle/ggsapipelines. The Hadoop user must have Write permissions. The folder is created automatically if it does not exist.

- HA Namenodes: If the hostname in the above URL refers to a logical HA cluster, specify the actual namenodes here, in the format Hostname1:Port, Hostname2:Port.

- If the storage type is NFS:

- Path: The path could be, for example, /oracle/spark-deploy.

Note:

The /oracle directory must already exist; spark-deploy is created automatically if it does not exist. You need Write permissions on the /oracle directory.


- Hadoop Authentication:

- Simple authentication credentials:

- Protection Policy: Select a protection policy from the drop-down list. This value should match the value on the cluster.

- Username: Enter the user account to use for submitting Spark pipelines. This user must have read-write access to the Path specified above.

- Kerberos authentication credentials:

- Protection Policy: Select a protection policy from the drop-down list. This value should match the value on the cluster.

- Kerberos Realm: Enter the domain on which Kerberos authenticates a user, host, or service. This value is in the krb5.conf file.

- Kerberos KDC: Enter the server on which the Key Distribution Center is running. This value is in the krb5.conf file.

- Principal: Enter the GGSA service principal that is used to authenticate the GGSA web application against the Hadoop cluster for application deployment. This user should be the owner of the folder used to deploy the GGSA application in HDFS. You must also create this user on the YARN node manager.

- Keytab: Enter the keytab pertaining to the GGSA service principal.

- Yarn Resource Manager Principal: Enter the yarn principal. When a Hadoop cluster is configured with Kerberos, principals for Hadoop services such as hdfs, https, and yarn are created as well.


- Yarn master console port: Enter the port on which the Yarn master console runs. The default port is 8088.

- Click Save.
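The Kerberos Realm and Kerberos KDC fields above take values found in krb5.conf. As an illustration of where those values sit in the file, here is a minimal Python sketch that reads them from krb5.conf text. It assumes the common layout with `default_realm` under `[libdefaults]` and `kdc` entries inside the realm's block under `[realms]`; the function name `realm_and_kdcs` and the sample content are hypothetical, and the sketch does not handle include directives or nested braces.

```python
import re


def realm_and_kdcs(krb5_text: str) -> tuple[str, list[str]]:
    """Return the default realm and the KDC hosts listed for it in krb5.conf text."""
    realm_match = re.search(r"^\s*default_realm\s*=\s*(\S+)", krb5_text, re.MULTILINE)
    if realm_match is None:
        raise ValueError("default_realm not found")
    realm = realm_match.group(1)
    # Locate the "<REALM> = { ... }" block and collect its kdc entries.
    block = re.search(
        r"^\s*" + re.escape(realm) + r"\s*=\s*\{(.*?)\}",
        krb5_text,
        re.MULTILINE | re.DOTALL,
    )
    kdcs = re.findall(r"^\s*kdc\s*=\s*(\S+)", block.group(1), re.MULTILINE) if block else []
    return realm, kdcs


# Hypothetical krb5.conf content, for illustration only.
SAMPLE = """
[libdefaults]
    default_realm = EXAMPLE.COM

[realms]
    EXAMPLE.COM = {
        kdc = kdc1.example.com
        admin_server = kdc1.example.com
    }
"""

print(realm_and_kdcs(SAMPLE))
```

The realm returned goes into Kerberos Realm and a listed KDC host goes into Kerberos KDC; which KDC to pick when several are listed is left to the administrator.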

3.1.1.2.3 Configuring for OCI Big Data Service

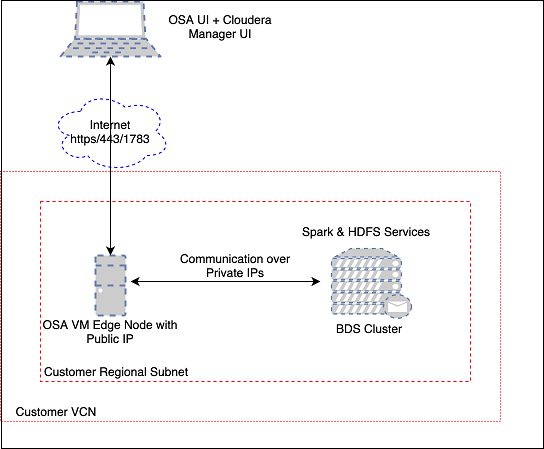

3.1.1.2.3.1 Topology

In this example, the marketplace GGSA instance and the BDS cluster are in the same regional subnet. The GGSA instance is accessed using its public IP address. The GoldenGate Stream Analytics instance doubles as a bastion host for your BDS cluster: after you copy your SSH private key to the GGSA instance and SSH to it, you can SSH from there to all your BDS nodes.

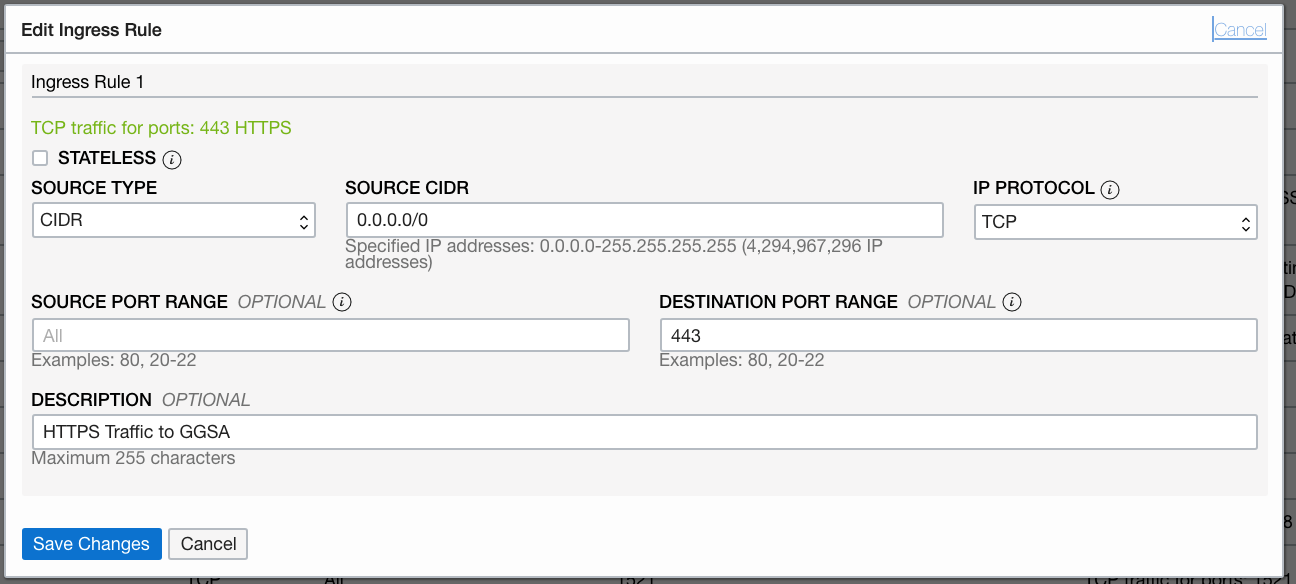

The default security list for the regional subnet must allow bidirectional traffic to the edge/OSA node, so create a stateful rule for destination port 443. Also create a similar Ingress rule for port 7183 to access the BDS Cloudera Manager via the OSA edge node. An example Ingress rule is shown below.

Optionally, you can also access Cloudera Manager using the same public IP address of the GGSA instance. To do so, SSH to the GGSA instance and run the following port-forwarding commands:

sudo firewall-cmd --add-forward-port=port=7183:proto=tcp:toaddr=<IP address of Utility Node running the Cloudera Manager console>

sudo firewall-cmd --runtime-to-permanent

sudo sysctl net.ipv4.ip_forward=1

sudo iptables -t nat -A PREROUTING -p tcp --dport 7183 -j DNAT --to-destination <IP address of Utility Node running the Cloudera Manager console>:7183

sudo iptables -t nat -A POSTROUTING -j MASQUERADE

You should now be able to access the Cloudera Manager using the URL https://<Public IP of GGSA>:7183.
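Before opening the URL in a browser, it can help to confirm that the forwarded port accepts TCP connections. The following is a minimal Python sketch using only the standard library; the function name `port_is_open` is hypothetical. A successful connection only shows that something is listening on the port, not that Cloudera Manager itself is healthy.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


# Example call (replace the placeholder with the public IP of your GGSA instance):
# port_is_open("<Public IP of GGSA>", 7183)
```

If the check returns False, the security list Ingress rule for port 7183 and the forwarding commands above are the first things to revisit.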

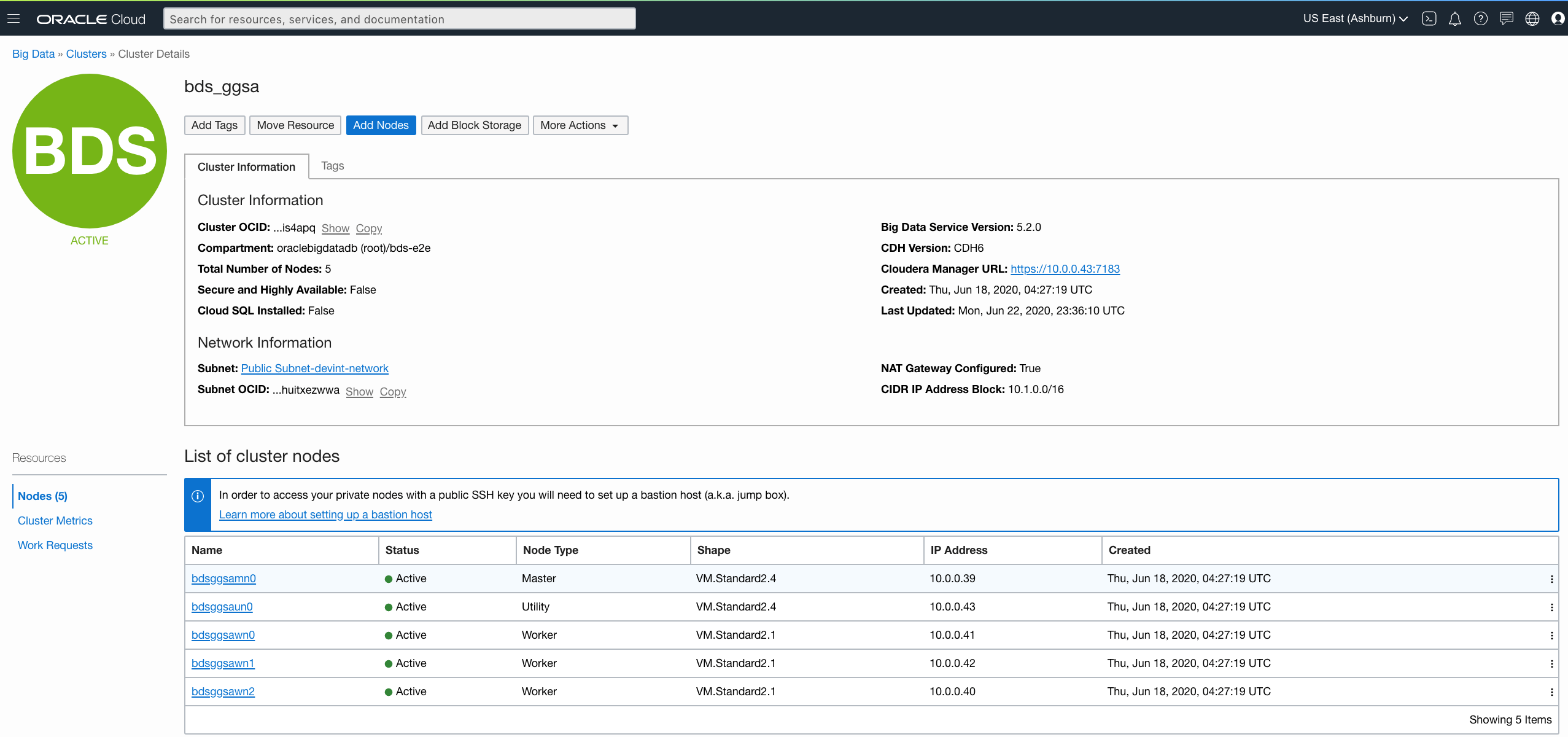

3.1.1.2.3.2 Prerequisites

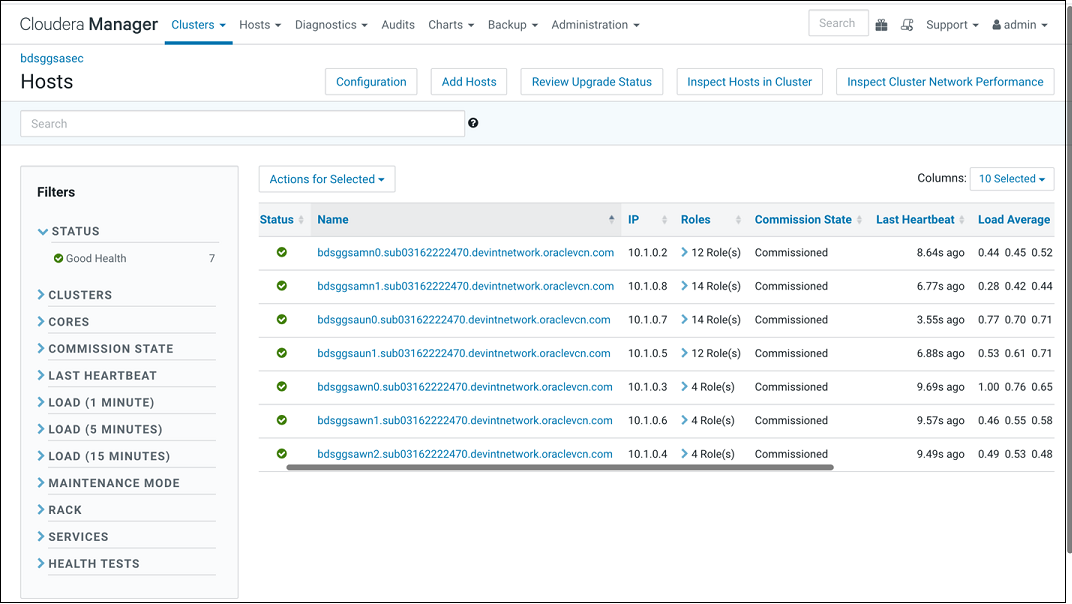

- Retrieve the IP addresses of the BDS cluster nodes from the OCI console as shown in the screenshot below. Alternatively, you can get the FQDNs of the BDS nodes from Cloudera Manager as shown below.

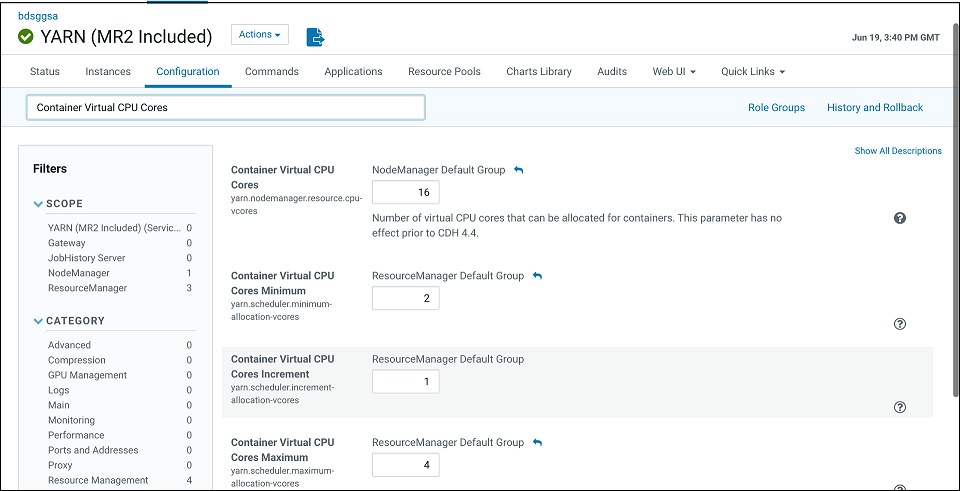

- Reconfigure YARN virtual cores using Cloudera Manager as shown below. This will allow many pipelines to run in the cluster and not be bound by actual physical cores.

- Container Virtual CPU Cores: This is the total number of virtual CPU cores available to the YARN Node Manager for allocation to containers. This is not limited by physical cores, and you can set it to a high number, say 32, even for a VM.Standard2.1 shape.

- Container Virtual CPU Cores Minimum: This is the minimum number of vcores that the YARN scheduler allocates to a container. Set this to 2, since the CQL engine is a long-running task and requires a dedicated vcore.

- Container Virtual CPU Cores Maximum: This is the maximum number of vcores that the YARN scheduler allocates to a container. Set this to a number higher than 2, say 4.

Note:

This change requires a restart of the YARN cluster from Cloudera Manager.
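To see why raising the virtual core count lets more pipelines run, consider a rough upper bound on containers per node. The following Python sketch is illustrative arithmetic only: the function name `max_containers_per_node` is hypothetical, and it assumes the scheduler takes vcores into account and that vcores, not memory, are the limiting resource.

```python
def max_containers_per_node(node_vcores: int, min_vcores_per_container: int) -> int:
    """Upper bound on concurrent containers when vcores are the limiting resource."""
    if min_vcores_per_container <= 0:
        raise ValueError("minimum vcores per container must be positive")
    return node_vcores // min_vcores_per_container


# Values suggested in the text: 32 vcores per Node Manager, minimum of 2 per container.
print(max_containers_per_node(32, 2))  # 16
# Hypothetical comparison with only 2 vcores configured on the node.
print(max_containers_per_node(2, 2))   # 1
```

Memory settings and scheduler queues can lower the real number, so treat the result as a ceiling rather than a prediction.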

3.1.1.2.3.3 Configuring for Kerberized Big Data Service Runtime

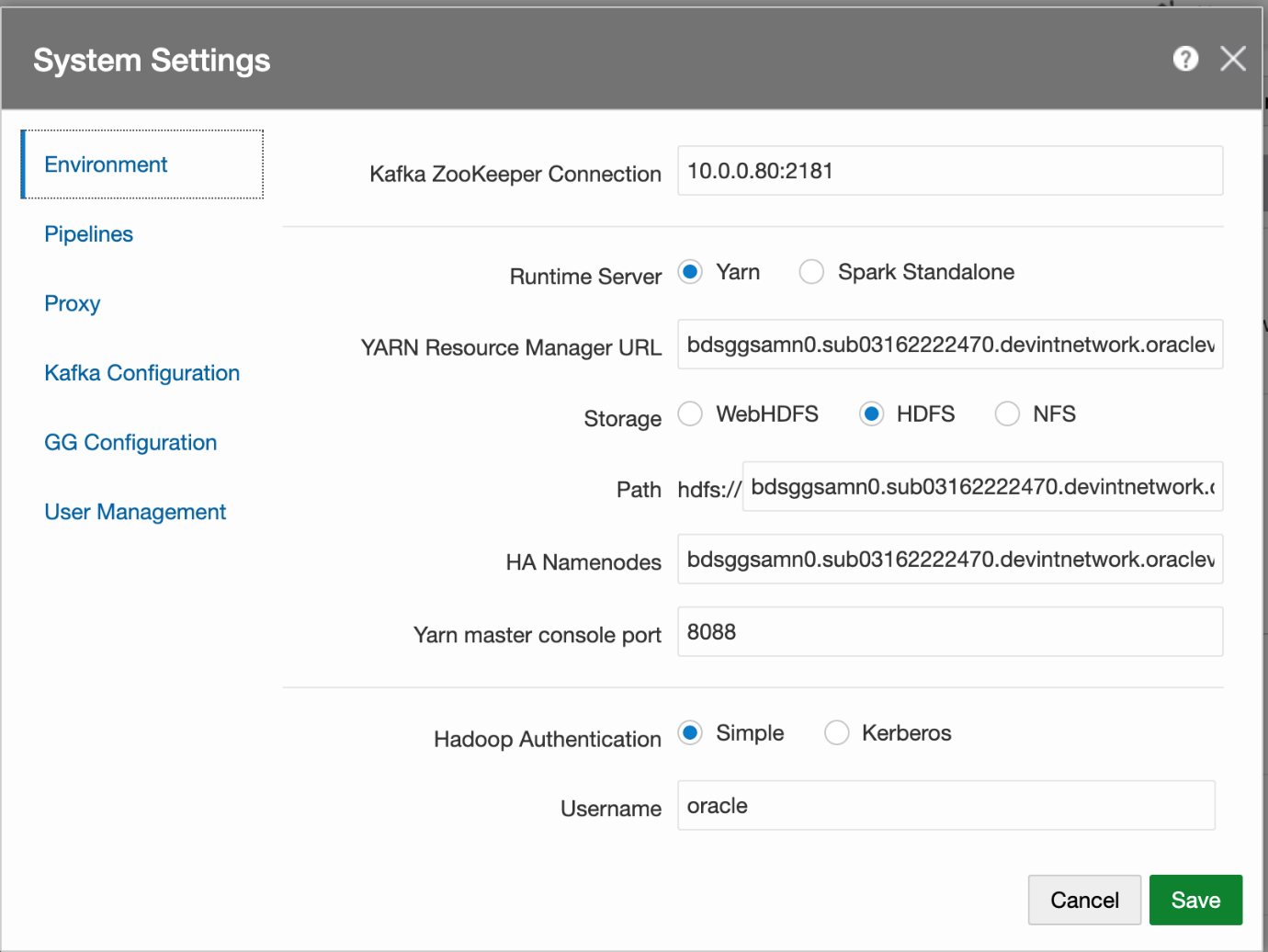

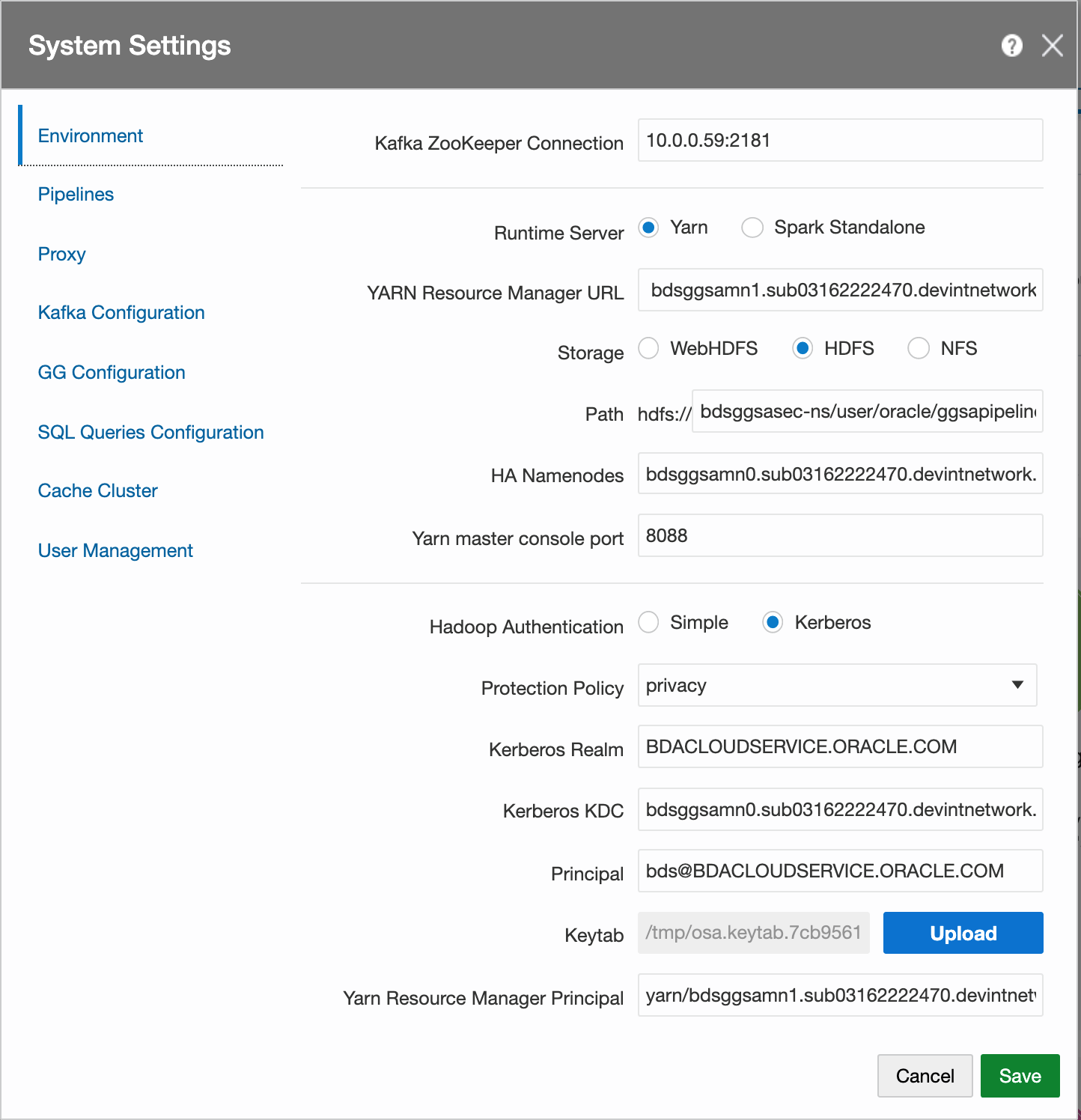

In the GGSA System Settings dialog, configure the following:

- Set Kafka Zookeeper Connection to the private IP of the OSA node or to the list of brokers that have been configured for GGSA’s internal usage. For example, 10.0.0.59:2181.

- Set Runtime Server to Yarn.

- Set Yarn Resource Manager URL to the private IPs of all master nodes, starting with the one running the active Yarn Resource Manager. For example, bdsggsamn1.sub03162222470.devintnetwork.oraclevcn.com,bdsggsamn0.sub03162222470.devintnetwork.oraclevcn.com.

- Set storage type to HDFS.

- Set Path to <NameNode Nameservice><HDFS Path>. For example,

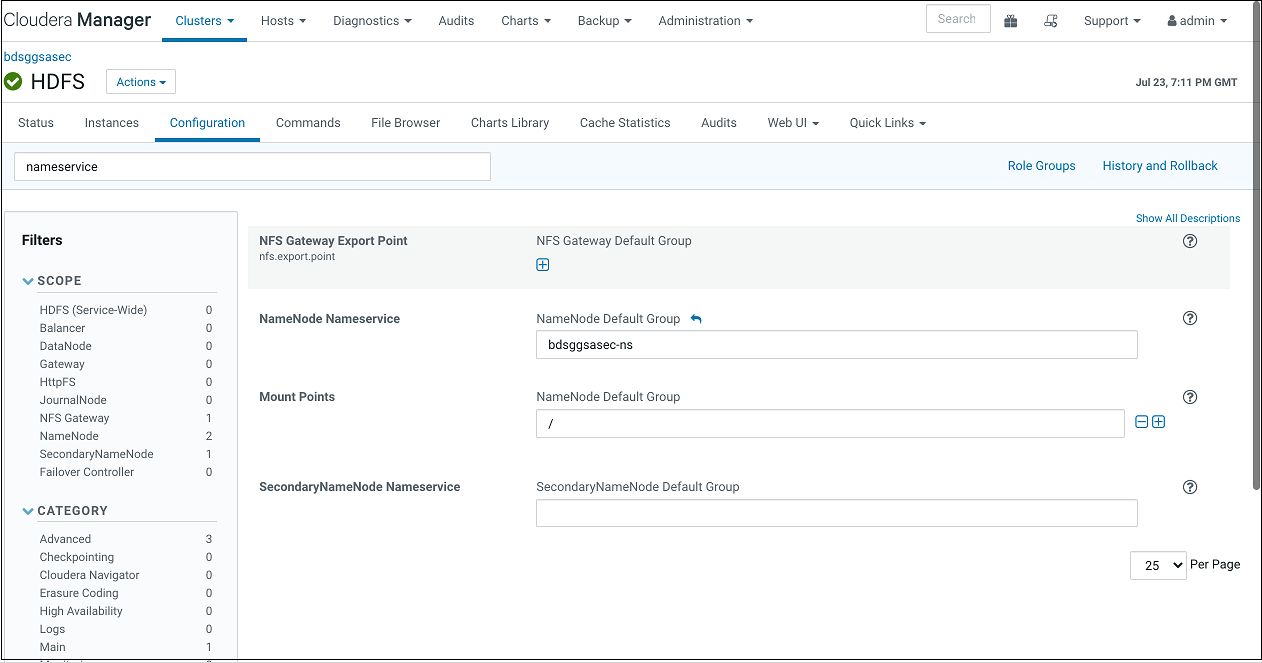

bdsggsasec-ns/user/oracle/ggsapipelines. The path will automatically be created if it does not exist but the Hadoop User must have write permissions. NameNode Nameservice can be obtained from HDFS configuration as shown in the screenshot below. Use search string nameservice in the search field.

- Set HA Namenode to Private IPs or hostnames of all master nodes (comma separated), starting with the one running active NameNode server. In the next version of GGSA, the ordering will not be needed. For example,

bdsggsamn0.sub03162222470.devintnetwork.oraclevcn.com,bdsggsamn1.sub03162222470.devintnetwork.oraclevcn.com. - Set Yarn Master Console port to 8088 or as configured in BDS.

- Set Hadoop authentication to Kerberos.

- Set protection policy to privacy. This should match the value of the HDFS configuration property hadoop.rpc.protection.

- Set Kerberos Realm to BDACLOUDSERVICE.ORACLE.COM.

- Set Kerberos KDC to the private IP or hostname of BDS master node 0. For example, bdsggsamn0.sub03162222470.devintnetwork.oraclevcn.com.

- Set principal to bds@BDACLOUDSERVICE.ORACLE.COM. See this documentation to create a Kerberos principal (for example, bds) and add it to the hadoop admin group, starting with the step Connect to Cluster's First Master Node and continuing through the step Update HDFS Supergroup.

Note:

You can hop/ssh to the master node using your GGSA node as the bastion. Your ssh private key must be available on the GGSA node. Restart your BDS cluster as instructed in the documentation.

[opc@bdsggsa ~]$ ssh -i id_rsa_private_key opc@bdsggsamn0.sub03162222470.devintnetwork.oraclevcn.com

- Make sure the newly created principal is added to the Kerberos keytab file on the master node as shown:

bdsmn0 # sudo kadmin.local

kadmin.local: ktadd -k /etc/krb5.keytab bds@BDACLOUDSERVICE.ORACLE.COM

- Fetch the keytab file using sftp and upload it in the Keytab field in system settings.

- Set the Yarn Resource Manager principal, which should be in the format yarn/<FQDN of BDS MasterNode running Active Resource Manager>@KerberosRealm. For example, yarn/bdsggsamn1.sub03162222470.devintnetwork.oraclevcn.com@BDACLOUDSERVICE.ORACLE.COM.

Sample System Settings Screen:

3.1.1.2.3.4 Configuring for Non Kerberized Big Data Service Runtime

- Set Kafka Zookeeper Connection to the private IP of the OSA node or to the list of brokers that have been configured for GGSA’s internal usage. For example, 10.0.0.59:2181.

- Set Runtime Server to Yarn.

- Set Yarn Resource Manager URL to the private IP or hostname of the BDS master node. For example, bdsggsamn0.sub03162222470.devintnetwork.oraclevcn.com.

- Set storage type to HDFS.

- Set Path to <PrivateIP or Host Of Master><HDFS Path>. For example, 10.x.x.x/user/oracle/ggsapipelines or bdsggsamn0.sub03162222470.devintnetwork.oraclevcn.com/user/oracle/ggsapipelines.

- Set HA Namenode to the private IP or hostname of the BDS master node. For example, bdsggsamn0.sub03162222470.devintnetwork.oraclevcn.com.

- Set Yarn Master Console port to 8088 or as configured in BDS.

- Set Hadoop authentication to Simple and leave Hadoop protection policy at authentication, if available.

- Set username to oracle.

- Click Save.
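The Path and Yarn Resource Manager principal values used in the two Big Data Service procedures above follow fixed formats. The following Python sketch shows how those strings are assembled, using the example host names from this section; the function names `hdfs_pipeline_path` and `yarn_rm_principal` are hypothetical helpers, not GGSA APIs.

```python
def hdfs_pipeline_path(namenode_or_nameservice: str, hdfs_path: str) -> str:
    """Concatenate the NameNode host (or nameservice) with an absolute HDFS path."""
    if not hdfs_path.startswith("/"):
        raise ValueError("HDFS path must start with '/'")
    return namenode_or_nameservice + hdfs_path


def yarn_rm_principal(active_rm_fqdn: str, realm: str) -> str:
    """Format yarn/<FQDN of the active Resource Manager node>@<Kerberos realm>."""
    return f"yarn/{active_rm_fqdn}@{realm}"


# Kerberized example: nameservice plus HDFS path, and the Resource Manager principal.
print(hdfs_pipeline_path("bdsggsasec-ns", "/user/oracle/ggsapipelines"))
print(yarn_rm_principal(
    "bdsggsamn1.sub03162222470.devintnetwork.oraclevcn.com",
    "BDACLOUDSERVICE.ORACLE.COM",
))

# Non-Kerberized example: master node host name plus HDFS path.
print(hdfs_pipeline_path(
    "bdsggsamn0.sub03162222470.devintnetwork.oraclevcn.com",
    "/user/oracle/ggsapipelines",
))
```

Note that the Path has no separator between the host part and the HDFS path other than the leading slash of the path itself.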