5.1 Adding Stages to a Pipeline

5.1.1 Adding a Query Stage

You can apply simple or complex queries to the data stream, without writing any code, to obtain refined results in the output.

- Open a pipeline in the Pipeline Editor.

- Right-click the stage after which you want to add a query stage, click Add a Stage, and then select Query.

- Enter a Name and Description for the Query Stage.

- Click Save.

5.1.2 Adding a Filter to a Query Stage

You can add filters in a pipeline to obtain more accurate streaming data: only the events that meet the filter conditions are passed on.
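Conceptually, a filter is a predicate evaluated against each incoming event. The Python sketch below is only an illustration of that idea, not how Oracle GoldenGate Stream Analytics implements filters. It assumes that all conditions must hold (logical AND), and the event fields `sensor` and `temp` are hypothetical.

```python
from typing import Callable, Iterable, Iterator

Event = dict  # illustrative event: field name -> value


def apply_filter(events: Iterable[Event],
                 conditions: list[Callable[[Event], bool]]) -> Iterator[Event]:
    """Pass on only the events that satisfy every condition (logical AND)."""
    for event in events:
        if all(condition(event) for condition in conditions):
            yield event


# Hypothetical sensor readings.
stream = [
    {"sensor": "s1", "temp": 21.5},
    {"sensor": "s2", "temp": 78.0},
    {"sensor": "s1", "temp": 82.3},
]
conditions = [lambda e: e["temp"] > 75, lambda e: e["sensor"] == "s1"]
print(list(apply_filter(stream, conditions)))  # [{'sensor': 's1', 'temp': 82.3}]
```

With an empty condition list, every event passes through unchanged.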

5.1.3 Adding a Summary to a Query Stage

- Open a pipeline in the Pipeline Editor.

- Select the required query stage and click the Summaries tab.

- Click Add a Summary.

- Select the suitable function and the required column.

- Repeat the above steps to add as many summaries as you want.
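Each summary pairs an aggregation function with a column. The following Python sketch shows what a few summaries compute over a batch of events, such as the events currently in a window. The function names COUNT, SUM, AVG, MIN, and MAX are assumptions for illustration; the functions offered in the Summaries tab may differ, and the output key naming (`AVG_of_price`) is hypothetical.

```python
import statistics
from typing import Callable

# Illustrative set of summary (aggregation) functions.
SUMMARY_FUNCTIONS: dict[str, Callable[[list], float]] = {
    "COUNT": len,
    "SUM": sum,
    "AVG": statistics.fmean,
    "MIN": min,
    "MAX": max,
}


def summarize(events, summaries):
    """Compute each (function, column) summary over a batch of events."""
    result = {}
    for func_name, column in summaries:
        values = [e[column] for e in events]
        result[f"{func_name}_of_{column}"] = SUMMARY_FUNCTIONS[func_name](values)
    return result


events = [{"price": 10.0}, {"price": 20.0}, {"price": 30.0}]
print(summarize(events, [("COUNT", "price"), ("AVG", "price"), ("MAX", "price")]))
# {'COUNT_of_price': 3, 'AVG_of_price': 20.0, 'MAX_of_price': 30.0}
```

Adding several summaries corresponds to passing several (function, column) pairs; each is computed independently over the same events.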

5.1.4 Adding a Summary with Group By

- Open a pipeline in the Pipeline Editor.

- Select the required query stage and click the Summaries tab.

- Click Add a Group By.

- Click Add a Field and select the column by which you want to group.

When you create a group-by, the live output table shows only the group-by column by default. Turn ON Retain All Columns to display all columns in the output table.

You can add multiple group-bys.
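A group-by splits the events into groups that share the same values in the group-by columns and computes the summary separately for each group. The Python sketch below illustrates this with a SUM summary, grouping first by one column and then by two; the column names `region`, `product`, and `qty` are hypothetical, and the sketch is not the product's implementation.

```python
from collections import defaultdict


def summarize_by_group(events, group_by, column):
    """Return the sum of `column` for each distinct combination of group-by values."""
    totals = defaultdict(int)
    for e in events:
        key = tuple(e[field] for field in group_by)  # one key per group
        totals[key] += e[column]
    return dict(totals)


events = [
    {"region": "east", "product": "a", "qty": 2},
    {"region": "east", "product": "b", "qty": 5},
    {"region": "west", "product": "a", "qty": 1},
    {"region": "east", "product": "a", "qty": 3},
]
print(summarize_by_group(events, ["region"], "qty"))
# {('east',): 10, ('west',): 1}
print(summarize_by_group(events, ["region", "product"], "qty"))
# {('east', 'a'): 5, ('east', 'b'): 5, ('west', 'a'): 1}
```

Grouping on more columns produces finer-grained groups, as the second call shows.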

5.1.5 Adding a Query Group Stage

A query group is a combination of summaries (aggregation functions), group-bys, filters, and a range window. Different query groups process your input in parallel, and their results are combined in the query group stage output. You can also define input filters, which process the incoming stream before the query group logic is applied, and result filters, which are applied to the combined output of all query groups.

A query group stage of the stream type applies processing logic to a stream. In essence, it is similar to several query stages running in parallel, grouped together for simplicity.
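The data flow of a stream-type query group stage can be sketched in Python as: input filter, then several independent query groups over the same filtered input, then a result filter over the combined output. This is a conceptual sketch only. The `QueryGroup` class, its fields, the `ts` timestamp field, and the range-window semantics (events with `now - range_seconds < ts <= now`) are assumptions for illustration, and the groups are evaluated one after another here even though they do not interact.

```python
from dataclasses import dataclass
from typing import Callable, Optional

Event = dict  # illustrative event: field name -> value


@dataclass
class QueryGroup:
    """Hypothetical query group: a filter, a range window, and one summary."""
    name: str
    range_seconds: float                   # length of the range window
    column: str                            # column to summarize
    aggregate: Callable[[list], float]     # summary function, e.g. max or sum
    predicate: Callable[[Event], bool] = lambda e: True  # per-group filter

    def evaluate(self, events: list, now: float) -> Optional[float]:
        # Keep events whose timestamp is in (now - range_seconds, now]
        # and that pass this group's own filter.
        window = [e for e in events
                  if now - self.range_seconds < e["ts"] <= now and self.predicate(e)]
        values = [e[self.column] for e in window]
        return self.aggregate(values) if values else None


def run_query_group_stage(events, groups, now, input_filter, result_filter):
    # 1. Input filter: applied once, before any query group logic.
    staged = [e for e in events if input_filter(e)]
    # 2. Every query group sees the same filtered input independently.
    combined = {g.name: g.evaluate(staged, now) for g in groups}
    # 3. Result filter: applied to the combined output of all groups.
    return combined if result_filter(combined) else None


events = [
    {"ts": 1.0, "sensor": "s1", "temp": 20.0},
    {"ts": 5.0, "sensor": "s2", "temp": 90.0},
    {"ts": 8.0, "sensor": "s1", "temp": 30.0},
    {"ts": 9.0, "sensor": "s1", "temp": -999.0},  # bad reading
]
groups = [
    QueryGroup("max_temp_last_5s", 5.0, "temp", max),
    QueryGroup("sum_s1_last_10s", 10.0, "temp", sum, lambda e: e["sensor"] == "s1"),
]
result = run_query_group_stage(
    events, groups, now=10.0,
    input_filter=lambda e: e["temp"] > -100,  # drop bad readings
    result_filter=lambda r: r["max_temp_last_5s"] is not None,
)
print(result)  # {'max_temp_last_5s': 30.0, 'sum_s1_last_10s': 50.0}
```

Because the input filter runs first, the bad reading at `ts` 9.0 never reaches either group.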

A query group stage of the table type can be added to a stream that carries transactional semantics, for example, a change data capture stream produced by the Oracle GoldenGate BigData plugin. A stage of this type recreates the original database table in memory using the transactional semantics contained in the stream. You can then apply query groups to this in-memory table to run real-time analytics on your transactional data without affecting the performance of your database.

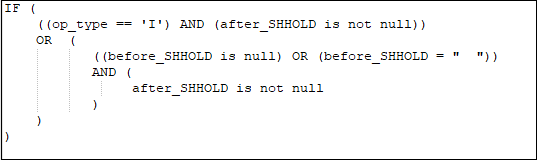
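The idea of recreating a table from a change stream can be sketched in Python: each change record inserts, updates, or deletes a row keyed by its primary key, and analytics then run over the resulting in-memory table. The record layout below (`op` codes `I`/`U`/`D`, `key`, and an `after` row image) is a simplified assumption and is not the actual format emitted by the Oracle GoldenGate BigData plugin.

```python
from typing import Iterable


def apply_change(table: dict, change: dict) -> None:
    """Apply one change record to an in-memory table keyed by primary key."""
    op, key = change["op"], change["key"]
    if op in ("I", "U"):      # insert or update: store the row's after-image
        table[key] = change["after"]
    elif op == "D":           # delete: drop the row if it exists
        table.pop(key, None)
    else:
        raise ValueError(f"unknown operation {op!r}")


def replay(changes: Iterable[dict]) -> dict:
    """Rebuild the current table state from a sequence of change records."""
    table: dict = {}
    for change in changes:
        apply_change(table, change)
    return table


changes = [
    {"op": "I", "key": 1, "after": {"id": 1, "status": "NEW", "amount": 100}},
    {"op": "I", "key": 2, "after": {"id": 2, "status": "NEW", "amount": 250}},
    {"op": "U", "key": 1, "after": {"id": 1, "status": "SHIPPED", "amount": 100}},
    {"op": "D", "key": 2},
]
orders = replay(changes)
print(orders)  # {1: {'id': 1, 'status': 'SHIPPED', 'amount': 100}}
print(sum(row["amount"] for row in orders.values()))  # 100
```

The final `sum` stands in for a query group summary evaluated against the current table state rather than against the raw change events.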

5.1.6 Adding a Rule Stage

Using a rule stage, you can add IF-THEN logic to your pipeline. A rule is a set of conditions and actions applied to a stream. You can add rules in any order.
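A rule can be pictured as a condition paired with an action. The Python sketch below assumes, for illustration only, that an action sets a field of the output event to a fixed value; the `Rule` class and field names are hypothetical and do not reflect the product's rule model.

```python
from dataclasses import dataclass
from typing import Callable

Event = dict  # illustrative event: field name -> value


@dataclass
class Rule:
    """Hypothetical rule: IF condition(event) THEN set target_field to value."""
    name: str
    condition: Callable[[Event], bool]
    target_field: str
    value: object


def apply_rules(event: Event, rules: list) -> Event:
    out = dict(event)  # leave the incoming event unchanged
    for rule in rules:
        # Conditions are checked against the incoming event, not the partially updated one.
        if rule.condition(event):
            out[rule.target_field] = rule.value
    return out


rules = [
    Rule("high_temp", lambda e: e["temp"] > 75, "alert", "HIGH"),
    Rule("needs_service", lambda e: e["hours"] > 1000, "service_due", True),
]
print(apply_rules({"temp": 80, "hours": 1200}, rules))
# {'temp': 80, 'hours': 1200, 'alert': 'HIGH', 'service_due': True}
```

In this sketch, conditions read the incoming event, so when each rule writes a different field, the order in which the rules are listed does not change the result. If two rules wrote the same field, the later one would win.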

5.1.7 Adding a Pattern Stage

A pattern is a template of an Oracle GoldenGate Stream Analytics application with business logic built into it. You can create pattern stages within the pipeline. Patterns are not stand-alone artifacts; they must be embedded within a pipeline.

For detailed information about the various types of patterns, see Transforming and Analyzing Data using Patterns.

To add a pattern stage:

5.1.8 Adding a Scoring Stage

- Open the required pipeline in the Pipeline Editor.

- Right-click the stage after which you want to add a scoring stage, click Add a Stage, and then select Scoring.

- Enter a meaningful name and suitable description for the scoring stage and click Save.

- In the stage editor, enter the following details:

  - Model name: Select the predictive model that you want to use in the scoring stage.

  - Model Version: Select the version of the predictive model.

  - Mapping: Select the model fields that correspond to the stage fields.
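The mapping step can be understood as building the model's input from the stage's fields before the model is called. The Python sketch below illustrates that idea with a made-up linear model standing in for a real predictive model; `score_event`, `toy_model`, the field names, and the `score` output field are all hypothetical.

```python
from typing import Callable

Event = dict  # illustrative event: field name -> value


def score_event(event: Event, mapping: dict[str, str],
                model: Callable[[dict], float], output_field: str = "score") -> Event:
    """Build model input via `mapping` (model field -> stage field), score, attach result."""
    features = {model_field: event[stage_field] for model_field, stage_field in mapping.items()}
    out = dict(event)
    out[output_field] = model(features)
    return out


def toy_model(features: dict) -> float:
    # Hypothetical linear model used only for illustration.
    return 0.5 * features["x1"] + 2.0 * features["x2"]


mapping = {"x1": "speed", "x2": "load"}  # model field -> stage field
print(score_event({"speed": 10.0, "load": 3.0}, mapping, toy_model))
# {'speed': 10.0, 'load': 3.0, 'score': 11.0}
```

If a mapped stage field is missing from an event, this sketch raises `KeyError`, which makes mapping mistakes visible immediately.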

5.1.9 Adding a Target Stage

- Open the required pipeline in the Pipeline Editor.

- Right-click the stage after which you want to add a target stage, click Add a Stage, and then select Target.

- Enter a name and a suitable description for the target.

- Click Save.

For more information on creating different target types, see unresolvable-reference.html#GUID-F8E38E61-6F76-46E9-A405-E4F612171E54.

5.1.10 Adding a Custom CQL Stage

- Open the required pipeline in the Pipeline Editor.

- Right-click the stage after which you want to add a custom stage, click Add a Stage, click Custom, and then select Custom CQL.

- Enter a name and suitable description for the custom stage and click Save.

- Type your custom CQL query in the right pane of the Pipeline Editor.