2.9 GoldenGate Stream Analytics Hardware Requirements for Enterprise Deployment

This chapter provides the hardware requirements for GoldenGate Stream Analytics Design and Data tiers.

Design Tier

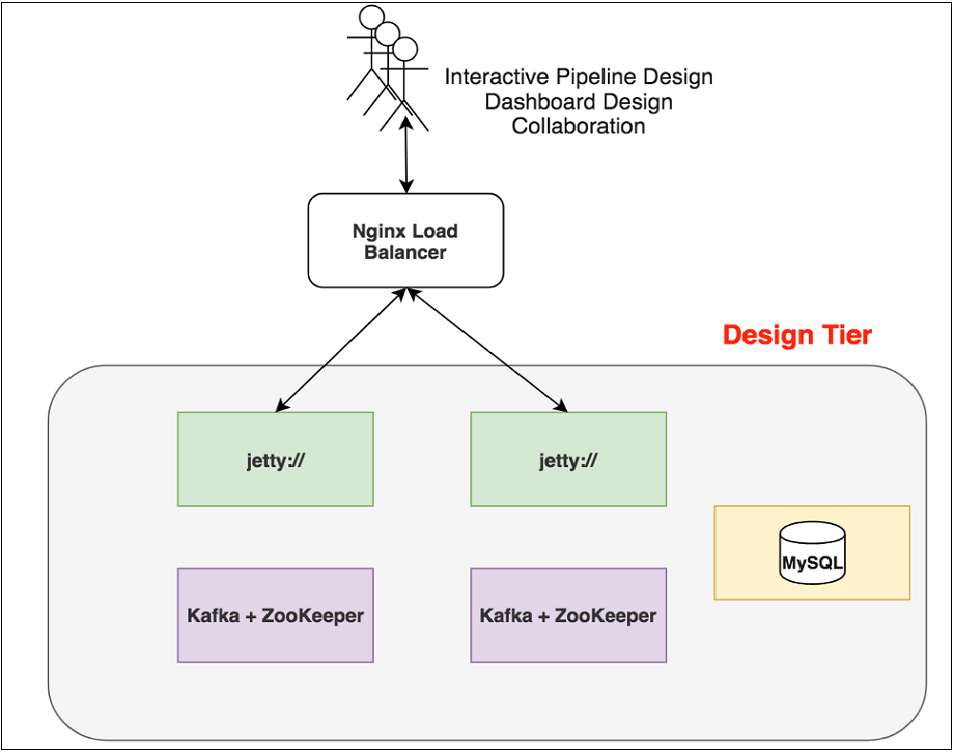

The GoldenGate Stream Analytics (GGSA) Design-tier is a multi-user environment in which users implement and test dataflow pipelines. The Design-tier also serves dashboards for streaming data. Multiple users can build, test, and deploy pipelines simultaneously, subject to the capacity of the Runtime-tier (YARN/Spark cluster).

GGSA uses Jetty as the web server, with support for High Availability (HA). Production deployments of the GGSA Design-tier require at least the hardware configuration listed below:

- Web server – Jetty with High Availability (HA) support

- 2 nodes with 4+ cores and 32+ GB of RAM for running two instances of Jetty.

- 1 node with 4+ cores and 16+ GB of RAM for running MySQL or Oracle meta-store.

- 2 nodes with 4+ cores and 16+ GB of RAM for running two instances of Kafka and three instances of ZooKeeper. Note that this is a separate Kafka cluster for GGSA’s internal use and for interactively designing pipelines. The ZooKeeper endpoint of this Kafka cluster must be specified in GGSA’s system settings UI.

Note:

The two-node Kafka cluster can be omitted if you already have a Kafka cluster in place and are willing to let GGSA use that cluster for its internal purposes.

Based on the above estimates, the Design-tier requires a total of 20 cores (5 nodes with 4 cores each) and approximately 112 GB of RAM. Jetty instances can be scaled independently as the number of users increases. The diagram below illustrates GGSA’s Design-tier topology.

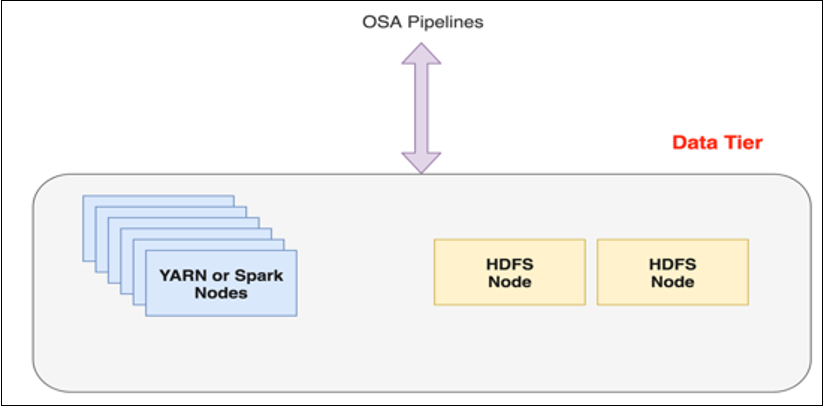
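The totals for the Design-tier follow from summing the per-node minimums in the list above. The following is a minimal Python sketch of that tally; the `NodeGroup` class and the `totals` helper are illustrative names and are not part of GGSA.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeGroup:
    """One line of the hardware list: identical nodes serving one role."""
    role: str
    nodes: int
    cores_per_node: int    # minimum cores per node ("4+" is entered as 4)
    ram_gb_per_node: int   # minimum RAM per node in GB ("32+" is entered as 32)


# Production Design-tier minimums, copied from the list above.
DESIGN_TIER = [
    NodeGroup("Jetty", nodes=2, cores_per_node=4, ram_gb_per_node=32),
    NodeGroup("MySQL or Oracle meta-store", nodes=1, cores_per_node=4, ram_gb_per_node=16),
    NodeGroup("Kafka and ZooKeeper", nodes=2, cores_per_node=4, ram_gb_per_node=16),
]


def totals(groups):
    """Return (total_cores, total_ram_gb) across all node groups."""
    cores = sum(g.nodes * g.cores_per_node for g in groups)
    ram_gb = sum(g.nodes * g.ram_gb_per_node for g in groups)
    return cores, ram_gb


if __name__ == "__main__":
    cores, ram_gb = totals(DESIGN_TIER)
    print(f"Design-tier minimum: {cores} cores, {ram_gb} GB RAM")
```

Removing the Kafka and ZooKeeper entry from `DESIGN_TIER` models the case described in the note, where an existing Kafka cluster is reused.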

Data Tier

The deployed pipelines are run on the YARN or Spark cluster. You can use existing YARN/Spark clusters if you have sufficient spare capacity.

Sizing Guidelines

- 2 nodes with 4+ cores, 16+ GB of RAM, and 500 GB of local disk to run an HDFS cluster with two instances of HDFS name and data nodes.

The capacity required of the YARN/Spark cluster depends on the following factors:

- Number of pipelines that will run simultaneously

- Logic in each pipeline

- Desired degree of parallelism

For each streaming pipeline, the number of cores and the amount of memory are computed from the required degree of parallelism. As an example, consider a pipeline that ingests data from a customer’s Kafka topic T with 3 partitions using direct ingestion, that is, ingestion in which no Spark Receivers are used. In this case, the minimum number of processes needed for optimal performance is 1 Spark Driver process plus 3 Executor processes, one for each Kafka topic partition. Each Executor process needs a minimum of 2 cores.

The number of cores for a pipeline can be computed as

--executor-cores = 1 + Number of Executors * 2

For Receiver-based ingestion, as with JMS, it is computed as

--executor-cores = 2 + Number of Executors * 2

These are rough estimates for environments where fine-grained scheduling is not available. Environments such as Kubernetes allow more fine-grained scheduling.
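The two core formulas above can be sketched as a single Python function. The function name `pipeline_cores` and its parameter names are hypothetical, chosen for illustration only. The sketch assumes 2 cores per Executor, as stated above, plus 1 extra core for direct ingestion or 2 for Receiver-based ingestion.

```python
def pipeline_cores(num_executors: int,
                   receiver_based: bool = False,
                   cores_per_executor: int = 2) -> int:
    """Rough core estimate for one streaming pipeline.

    Direct ingestion:         1 + num_executors * cores_per_executor
    Receiver-based ingestion: 2 + num_executors * cores_per_executor
    """
    if num_executors < 1:
        raise ValueError("a pipeline needs at least one Executor")
    extra_cores = 2 if receiver_based else 1
    return extra_cores + num_executors * cores_per_executor


if __name__ == "__main__":
    # Kafka topic T with 3 partitions, direct ingestion: one Executor per partition.
    print(pipeline_cores(3))                       # 1 + 3 * 2 = 7
    # The same number of Executors with Receiver-based ingestion (for example, JMS).
    print(pipeline_cores(3, receiver_based=True))  # 2 + 3 * 2 = 8
```

For the Kafka example above, this gives 7 cores for the pipeline. Like the formulas, the result is a rough estimate rather than a tuned value.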

The formula for sizing memory is

(Number of Windows * Average Window Range * Event Rate * Event Size) + (Number of Lookup/Reference Objects being cached * Size of Lookup Object).
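As a worked illustration of the memory formula, the Python sketch below evaluates it for one made-up workload. The function and parameter names are hypothetical. The units are assumptions the formula does not state: window range in seconds, event rate in events per second, and sizes in bytes. The sample values are invented for the example and are not measurements.

```python
def pipeline_memory_bytes(num_windows: int,
                          avg_window_range_s: float,
                          event_rate_per_s: float,
                          event_size_bytes: int,
                          num_lookup_objects: int = 0,
                          lookup_object_size_bytes: int = 0) -> float:
    """Estimate pipeline memory as window state plus cached lookup objects."""
    # Events held in windows: windows * range * rate * size.
    window_state = (num_windows * avg_window_range_s
                    * event_rate_per_s * event_size_bytes)
    # Cached lookup/reference data: object count * object size.
    lookup_cache = num_lookup_objects * lookup_object_size_bytes
    return window_state + lookup_cache


if __name__ == "__main__":
    # Hypothetical workload: 2 windows of 60 s each, 1,000 events/s at 1,024 bytes,
    # plus 10,000 cached lookup objects of 512 bytes each.
    estimate = pipeline_memory_bytes(2, 60, 1_000, 1_024, 10_000, 512)
    print(f"{estimate / 1_000_000:.1f} MB")  # 122.88 MB + 5.12 MB = 128.0 MB
```

The estimate covers only window contents and cached lookup data. JVM and Spark overhead are outside the formula and would need to be budgeted separately.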

The diagram below illustrates GGSA’s Data-tier topology.

If you are considering GGSA for proofs of concept (POCs) rather than production, you can use the following configuration:

Design Tier

- An instance of Jetty running on a 4+ core node with 32+ GB of RAM.

- An instance of MySQL/Oracle for the metadata store on a 4+ core node with 16+ GB of RAM.

- A node of the Kafka cluster running on a 4+ core node with 16+ GB of RAM.

Note:

This is a separate Kafka cluster for GGSA’s internal use and for interactively designing pipelines.

Data Tier

- A Hadoop Distributed File System (HDFS) cluster node running on a 4+ core physical node with 16+ GB of RAM.

- 2 nodes of the YARN/Spark cluster, each running on a 4+ core physical node with 16+ GB of RAM.

Development Mode Configurations

Design Tier

- 1 node with 4+ cores and 16+ GB of RAM for 1 instance of Jetty, 1 instance of MySQL DB, and 1 instance of Kafka+ZooKeeper.

Data Tier

- 1 node with 4+ cores and 16+ GB of RAM for 1 instance of HDFS and 1 instance of YARN/Spark.