8 Service Migration

This chapter focuses on migrating failed services. WebLogic Server also supports whole server-level migration, where a migratable server instance and all of its services are migrated to a different physical machine upon failure. For information on failed server migration, see Whole Server Migration.

WebLogic Server also supports replication and failover at the application level. For more information, see Failover and Replication in a Cluster.

Note:

Support for automatic whole server migration on Solaris 10 systems using the Solaris Zones feature can be found in Note 3: Support For Sun Solaris 10 In Multi-Zone Operation at http://www.oracle.com/technetwork/middleware/ias/oracleas-supported-virtualization-089265.html.

This chapter includes the following sections:

- Understanding the Service Migration Framework

In a WebLogic Server cluster, most subsystem services are hosted homogeneously on all server instances in the cluster, enabling transparent failover from one server to another.

- Pre-Migration Requirements

WebLogic Server imposes certain constraints and prerequisites for service configuration to support service migration.

- Roadmap for Configuring Automatic Migration of JMS-Related Services

For automatic migration, the WebLogic administrator can specify a migratable target for JMS-related services, such as JMS servers and SAF agents. The administrator can also configure migratable services that will be automatically migrated from a failed server based on WebLogic Server health monitoring capabilities.

- Best Practices for Configuring JMS Migration

- Roadmap for Configuring Manual Migration of JMS-related Services

WebLogic JMS leverages the migration framework by allowing an administrator to specify a migratable target for JMS-related services. Once properly configured, a JMS service can be manually migrated to another WebLogic Server within a cluster using either the console or a script without setting up automatic migration. This includes both scheduled migrations as well as manual migrations in response to a WebLogic Server failure within the cluster.

- Roadmap for Configuring Automatic Migration of the JTA Transaction Recovery Service

The JTA Transaction Recovery Service is designed to gracefully handle transaction recovery after a crash. You can specify to have the Transaction Recovery Service automatically migrated from an unhealthy server instance to a healthy server instance, with the help of the server health monitoring services. This way, the backup server instance can complete transaction work for the failed server instance.

- Manual Migration of the JTA Transaction Recovery Service

The JTA Transaction Recovery Service is designed to gracefully handle transaction recovery after a crash. You can manually migrate the Transaction Recovery Service from an unhealthy server instance to a healthy server instance with the help of the server health monitoring services. In this manner, the backup server instance can complete transaction work for the failed server instance.

- Automatic Migration of User-Defined Singleton Services

WebLogic Server supports the automatic migration of user-defined singleton services.

Understanding the Service Migration Framework

In a WebLogic Server cluster, most subsystem services are hosted homogeneously on all server instances in the cluster, enabling transparent failover from one server to another.

In contrast, pinned services, such as JMS-related services, the JTA Transaction Recovery Service, and user-defined singleton services, are hosted on individual server instances within a cluster. For these services, the WebLogic Server migration framework supports failure recovery with service migration, as opposed to failover. See Migratable Services.

Service-level migration in WebLogic Server is the process of moving the pinned services from one server instance to a different available server instance within the cluster. Service migration is controlled by a logical migratable target, which serves as a grouping of services that is hosted on only one physical server instance in a cluster. You can select a migratable target in place of a server instance or cluster when targeting certain pinned services. High availability is achieved by migrating a migratable target from one clustered server instance to another when a problem occurs on the original server instance. You can also manually migrate a migratable target for scheduled maintenance, or you can configure the migratable target for automatic migration. See Understanding Migratable Targets In a Cluster.

The migration framework provides tools and infrastructure for configuring and migrating targets, and, in the case of automatic service migration, it leverages the WebLogic Server health monitoring subsystem to monitor the health of services hosted by a migratable target. See Migration Processing Tools and Automatic Service Migration Infrastructure. For definitions of the terms that apply to server and service migration, see Migration Terminology.

- Migratable Services

- Understanding Migratable Targets In a Cluster

- Migration Processing Tools

- Automatic Service Migration Infrastructure

- In-Place Restarting of Failed Migratable Services

- Migrating a Service From an Unavailable Server

- JMS and JTA Automatic Service Migration Interaction

Migratable Services

WebLogic Server supports service-level migration for JMS-related services, the JTA Transaction Recovery Service, and user-defined singleton services. These are referred to as migratable services because you can move them from one server instance to another within a cluster. The following migratable services can be configured for automatic or manual migration.

JMS-related Services

Note:

If you want to enable service migration on a cluster-targeted JMS Server, SAF Agent, Path Service, or Custom Store, see Simplified JMS Cluster and High Availability Configuration in Administering JMS Resources for Oracle WebLogic Server.

These are the migratable JMS services:

- JMS Server: Management containers for the queues and topics in JMS modules that are targeted to them. See JMS Server Configuration in Administering JMS Resources for Oracle WebLogic Server.

- Store-and-Forward (SAF) Service: Stores and forwards messages between local sending and remote receiving endpoints, even when the remote endpoint is not available at the moment the messages are sent. Only sending SAF agents configured for JMS SAF (sending capability only) are migratable. See Administering the Store-and-Forward Service for Oracle WebLogic Server.

- Path Service: A persistent map that can be used to store the mapping of a group of messages in a JMS Message Unit-of-Order to a messaging resource in a cluster. It provides a way to enforce ordering by pinning messages to a member of a cluster hosting servlets, distributed queue members, or Store-and-Forward agents. One path service is configured per cluster. See Using the WebLogic Path Service in Administering JMS Resources for Oracle WebLogic Server.

- Custom Persistent Store: A user-defined, disk-based file store or JDBC-accessible database for storing subsystem data, such as persistent JMS messages or store-and-forward messages. See Administering the WebLogic Persistent Store.

Cluster-targeted JMS services are distributed over the members of the cluster. WebLogic Server can be configured to automatically migrate cluster-targeted services across cluster members dynamically. See Simplified JMS Cluster and High Availability Configuration in Administering JMS Resources for Oracle WebLogic Server.

To ensure that singleton JMS services do not introduce a single point of failure for dependent applications in the cluster, WebLogic Server can be configured to automatically or manually migrate them to any server instance in the migratable target list. See Roadmap for Configuring Automatic Migration of JMS-related Services and Roadmap for Configuring Manual Migration of JMS-related Services.

JTA Transaction Recovery Service

The Transaction Recovery Service automatically attempts to recover transactions on system startup by parsing all transaction log records for incomplete transactions and completing them. See Transaction Recovery After a Server Fails in Developing JTA Applications for Oracle WebLogic Server.

User-defined Singleton Services

Within an application, you can define a singleton service that can be used to perform tasks that you want to be executed on only one member of a cluster at any given time. See Automatic Migration of User-Defined Singleton Services.

Understanding Migratable Targets In a Cluster

Note:

If you want to enable service migration on a cluster targeted JMS Server, SAF Agent, Path Service, or Custom Store, see Simplified JMS Cluster and High Availability Configuration in Administering JMS Resources for Oracle WebLogic Server.

You can also configure JMS and JTA services for high availability by using migratable targets. A migratable target is a special target that can migrate from one server instance in a cluster to another. As such, a migratable target provides a way to group migratable services that should move together. When the migratable target is migrated, all services hosted by that target are migrated.

A migratable target specifies a set of server instances that can host a target, and can optionally specify a user-preferred host for the services and an ordered list of candidate backup servers should the preferred server instance fail. Only one of these server instances can host the migratable target at a time.
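The grouping behavior described above can be pictured with a small Python model. This is only an illustrative sketch, not the WebLogic MBean API: the class `MigratableTarget`, its field names, and the server and service names are all hypothetical. It shows that a target has one host at a time and that the grouped services follow the target when it moves.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MigratableTarget:
    """Toy model of a migratable target (hypothetical, not a WebLogic class)."""
    name: str
    user_preferred_server: str
    candidate_servers: List[str]            # ordered backup servers
    services: List[str] = field(default_factory=list)
    current_host: Optional[str] = None      # at most one host at a time

    def activate_on(self, server: str) -> None:
        allowed = [self.user_preferred_server] + self.candidate_servers
        if server not in allowed:
            raise ValueError(f"{server} is not a candidate for {self.name}")
        # A single attribute holds the host, so only one server can host the target.
        self.current_host = server

    def hosted_services(self, server: str) -> List[str]:
        # Every grouped service lives on whichever server hosts the target.
        return list(self.services) if self.current_host == server else []


mt1 = MigratableTarget("MT1", "ServerA", ["ServerB", "ServerC"],
                       services=["JMSServerA", "CustomStoreA"])
mt1.activate_on("ServerA")
mt1.activate_on("ServerB")  # migration: all grouped services move together
```

After the second call, `mt1.hosted_services("ServerA")` is empty and `mt1.hosted_services("ServerB")` lists both services.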

Once you configure a service to use a migratable target, it is independent of the server member that is currently hosting it. For example, if you configure a JMS server with a deployed JMS queue to use a migratable target, then the queue is independent of when a specific server member is available. In other words, the queue is always available when the migratable target is hosted by any server instance in the cluster.

An administrator can manually migrate pinned migratable services from one server instance to another in the cluster, either in response to a server failure or as part of regularly scheduled maintenance. If you do not configure a migratable target in the cluster, migratable services can be migrated to any WebLogic Server instance in the cluster. See Roadmap for Configuring Manual Migration of JMS-related Services.

- Policies for Manual and Automatic Service Migration

- Options For Attempting to Restart Failed Services Before Migrating

- User-Preferred Servers and Candidate Servers

- Example Migratable Targets In a Cluster

- Targeting Rules for JMS Servers

- Targeting Rules for SAF Agents

- Targeting Rules for Path Service

- Targeting Rules for Custom Stores

- Migratable Targets For the JTA Transaction Recovery Service

Policies for Manual and Automatic Service Migration

A migratable target provides migration policies that define whether the hosted services will be manually migrated (the system default) or automatically migrated from an unhealthy hosting server instance to a healthy active server instance with the help of the health monitoring subsystem. There is one type of manual service migration and two types of automatic service migration policies, as described in the following sections.

Manual Migration

When a migratable target uses the manual policy (the system default), an administrator can manually migrate pinned migratable services from one server instance to another in the cluster, either in response to a server failure or as part of regularly scheduled maintenance.

See Roadmap for Configuring Manual Migration of JMS-related Services.

Exactly-Once

This policy indicates that if at least one Managed Server in the candidate list is running, then the service will be active somewhere in the cluster if server instances fail or shut down (either gracefully or forcibly). It is important to note that this value can lead to target grouping. For example, if you have five exactly-once migratable targets and only start one Managed Server in the cluster, then all five targets will be activated on that server instance.
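Target grouping can be illustrated with a short Python sketch. It assumes, purely for illustration, that each target is placed on the first running server in its candidate list; the function `place_exactly_once` and the server names are hypothetical, and the real selection logic inside WebLogic Server may differ.

```python
from typing import Dict, List, Optional, Set


def place_exactly_once(targets: Dict[str, List[str]],
                       running: Set[str]) -> Dict[str, Optional[str]]:
    """Choose a host for each exactly-once target.

    `targets` maps a target name to its ordered candidate list
    (preferred server first). A target maps to None when no
    candidate is running.
    """
    return {
        name: next((s for s in candidates if s in running), None)
        for name, candidates in targets.items()
    }


servers = ["S1", "S2", "S3", "S4", "S5"]
# Five targets, each preferring a different server but accepting any of them.
targets = {
    f"MT{i + 1}": [servers[i]] + [s for s in servers if s != servers[i]]
    for i in range(5)
}

grouped = place_exactly_once(targets, running={"S3"})      # only one server up
spread = place_exactly_once(targets, running=set(servers))  # all servers up
```

With only `S3` running, every target lands on `S3`; with all five servers running, each target stays on its preferred server.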

Note:

As a best practice, a migratable target hosting a path service should always be set to exactly-once, so if its hosting server member fails or shuts down, the path service will automatically migrate to another server instance and will always be active in the cluster.

Example use-case for JMS servers:

A domain has a cluster of three Managed Servers, with one JMS server deployed on a member server in the cluster. Applications deployed to the cluster send messages to the queues targeted to the JMS server. MDBs in another domain drain the queues associated with the JMS server. The MDBs only want to drain from one set of queues, not from many instances of the same queue. In other words, this environment uses clustering for scalability, load balancing, and failover for its applications, but not for its JMS server. Therefore, this environment would benefit from the automatic migration of the JMS server as an exactly-once service to an available cluster member.

See Roadmap for Configuring Automatic Migration of JMS-related Services.

Failure-Recovery

This policy indicates that the service will only start if its user-preferred server (UPS) is started. If an administrator manually shuts down the UPS, either gracefully or forcibly, then a failure-recovery service will not migrate. However, if the UPS fails due to an internal error, then a failure-recovery service will be migrated to another candidate server instance. If such a candidate server instance is unavailable (due to a manual shutdown or an internal failure), then the migration framework will first attempt to reactivate the service on its UPS server. If the UPS server is not available at that time, then the service will be migrated to another candidate server instance.
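The difference between the three policies can be summarized as a decision function. The following Python sketch is a simplified reading of the policy descriptions in this section, not WebLogic Server code: `StopReason` and `migrates_automatically` are hypothetical names, and the sketch ignores candidate availability and the interaction with whole server migration.

```python
from enum import Enum


class StopReason(Enum):
    GRACEFUL_SHUTDOWN = "graceful-shutdown"   # administrator action
    FORCED_SHUTDOWN = "forced-shutdown"       # administrator action
    INTERNAL_ERROR = "internal-error"         # server failure


def migrates_automatically(policy: str, reason: StopReason) -> bool:
    """Return True if the hosted services would move to another candidate."""
    if policy == "manual":
        return False  # an administrator must migrate by hand
    if policy == "exactly-once":
        return True   # stays active while any candidate is running
    if policy == "failure-recovery":
        # Administrative shutdowns of the UPS do not trigger migration.
        return reason is StopReason.INTERNAL_ERROR
    raise ValueError(f"unknown policy: {policy}")
```

For example, `migrates_automatically("failure-recovery", StopReason.FORCED_SHUTDOWN)` is `False`, while the same call with `StopReason.INTERNAL_ERROR` is `True`.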

For migration of cluster targeted JMS services, you can configure a Store with failure recovery parameters.

Example use-case for JMS servers:

A domain has a cluster of three Managed Servers, with a JMS server on each member server and a distributed queue member on each JMS server. There is also an MDB targeted to the cluster that drains from the distributed queue member on the local server member. In other words, this environment uses clustering for overall scalability, load balancing, and failover. Therefore, this environment would benefit from the automatic migration of a JMS server as a failure-recovery service to a UPS member.

Note:

If a server instance is also configured to use the automatic whole server migration framework, which will shut down the server when its expired lease cannot be renewed, then any failure-recovery services configured on that server instance will not automatically migrate, no matter how the server instance is manually shut down by an administrator (for example, force shutdown versus graceful shutdown). See Automatic Whole Server Migration.

See the Roadmap for Configuring Automatic Migration of JMS-related Services.

Options For Attempting to Restart Failed Services Before Migrating

A migratable target provides options to attempt to deactivate and reactivate a failed service, instead of migrating the service. See In-Place Restarting of Failed Migratable Services.

For more information about the default values for all migratable target options, see MigratableTargetMBean in the MBean Reference for Oracle WebLogic Server.

User-Preferred Servers and Candidate Servers

When deploying a JMS service to the migratable target, you can select the UPS target to host the service. When configuring a migratable target, you can also specify constrained candidate servers (CCS) that can potentially host the service should the user-preferred server fail. If the migratable target does not specify a CCS, the JMS server can be migrated to any available server instance in the cluster.

WebLogic Server enables you to create separate migratable targets for JMS services. This allows you to always keep each service running on a different server instance in the cluster, if necessary. Conversely, you can configure the same selection of server instances as the constrained candidate servers for both JTA and JMS, to ensure that the services remain co-located on the same server instance in the cluster.

Example Migratable Targets In a Cluster

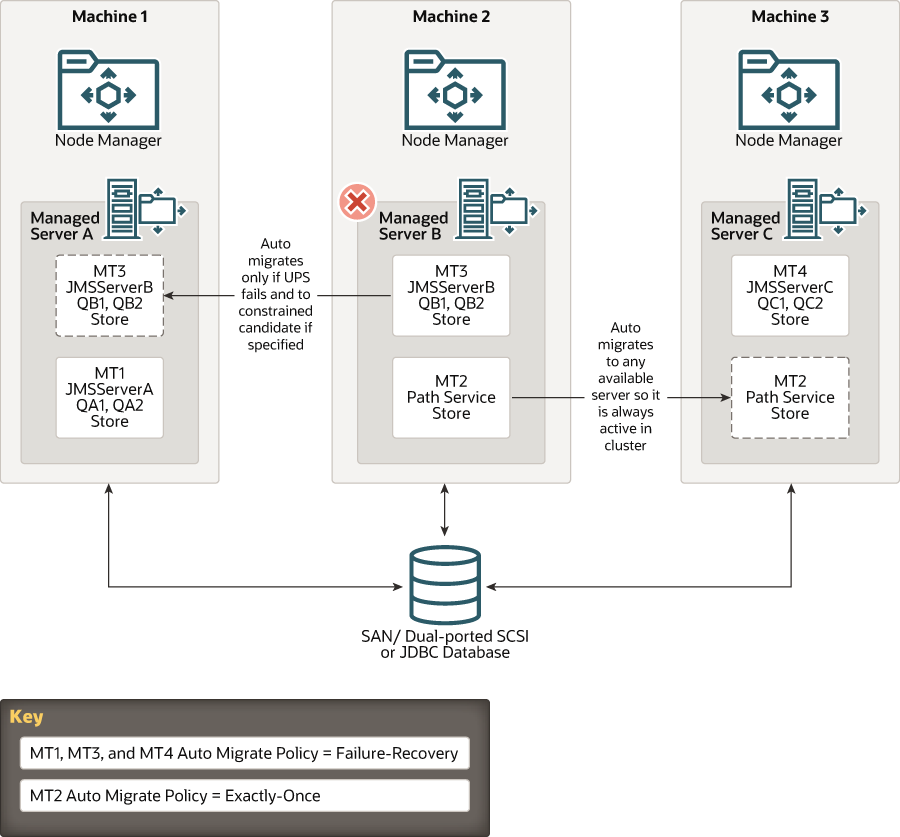

Figure 8-1 shows a cluster of three Managed Servers, all hosting migratable targets. Server A is hosting a migratable target (MT1) for JMS server A (with two queues) and a custom store; Server B is hosting MT2 for a path service and a custom store and is also hosting MT3 for JMS server B (with two queues) and a custom store; Server C is hosting MT4 for JMS Server C (with two queues) and a custom store.

All the migratable targets are configured to migrate automatically with the MT1, MT3, and MT4 targets using the failure-recovery policy, and the MT2 target using the exactly-once policy.

Figure 8-1 Migratable Targets In a Cluster

Description of "Figure 8-1 Migratable Targets In a Cluster"

In the above example, the MT2 exactly-once target will automatically start the path service and store on any running Managed Server in the candidate list. This way, if the hosting server should fail, it guarantees that the services will always be active somewhere in the cluster, even if the target's UPS is shut down gracefully. However, as described in Policies for Manual and Automatic Service Migration, this policy can also lead to target grouping with multiple JMS services being hosted on a single server instance.

By contrast, the MT1, MT3, and MT4 failure-recovery targets automatically start their JMS server and store services only on their UPS. If the UPS is shut down gracefully or forcibly, the pinned services will not migrate anywhere. However, if the UPS shuts down due to an internal error, then the services will be migrated to another candidate server.

Targeting Rules for JMS Servers

When you do not use migratable targets, a JMS server can be targeted to a dynamic cluster or to a specific cluster member and can use a custom store. However, when targeted to a migratable target, a JMS server must use a custom persistent store, and must be targeted to the same migratable target used by the custom store. A JMS server, SAF agent, and custom store can share a migratable target. See Custom Store Availability for JMS Services.
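The two rules for a JMS server on a migratable target can be expressed as a small check. This Python sketch is only an illustration of the rules stated above; the `Store` and `JmsServer` classes and the `targeting_errors` function are hypothetical and do not correspond to WebLogic configuration MBeans.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Store:
    name: str
    target: str


@dataclass
class JmsServer:
    name: str
    target: str
    store: Optional[Store] = None  # None stands for the default store


def targeting_errors(jms: JmsServer, migratable_targets: Set[str]) -> List[str]:
    """List rule violations for a JMS server targeted to a migratable target."""
    if jms.target not in migratable_targets:
        return []  # the rules below apply only to migratable targets
    if jms.store is None:
        return [f"{jms.name} must use a custom persistent store"]
    if jms.store.target != jms.target:
        return [f"{jms.name} and {jms.store.name} must share a migratable target"]
    return []


ok = JmsServer("JMSServerA", "MT1", Store("StoreA", "MT1"))
no_store = JmsServer("JMSServerB", "MT3")
split = JmsServer("JMSServerC", "MT4", Store("StoreC", "MT1"))
```

Only `ok` passes the check; `no_store` and `split` each produce one error message.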

If the JMS system resource target is a cluster, WebLogic Server will create the migratable targets for each server instance in the cluster and then create separate JMS servers that are targeted individually to each migratable target.

Targeting Rules for SAF Agents

When you do not use migratable targets, a SAF agent can be targeted to an entire cluster or a list of multiple server instances in a cluster, with the requirement that the SAF agent and each server instance in the cluster must use the default persistent store. However, when targeted to a migratable target, a SAF agent must use a custom persistent store, and must be targeted to the same migratable target used by the custom store, similar to a JMS server. A SAF agent, JMS server, and custom store can share a migratable target. See Special Considerations When Targeting SAF Agents or Path Service.

WebLogic Server will create the migratable targets for each server in a cluster and then create separate SAF agents that are targeted individually to each migratable target. This handling increases throughput and high availability.

In addition, consider the following topics when targeting SAF agents to migratable targets.

- Re-targeting SAF Agents to Migratable Targets

- Targeting Migratable SAF Agents For Increased Message Throughput

- Targeting SAF Agents For Consistent Quality-of-Service

Re-targeting SAF Agents to Migratable Targets

To preserve SAF message consistency, WebLogic Server prevents you from retargeting an existing SAF agent to a migratable target. Instead, you must delete the existing SAF agent and configure a new SAF agent with the same values and target it to a migratable target.

Targeting Migratable SAF Agents For Increased Message Throughput

When you do not use migratable targets, a SAF agent can be targeted to an entire cluster or multiple server instances in a cluster for increased message throughput. However, when a SAF agent is targeted to a migratable target, it cannot be targeted to any other server instances in the cluster, including an entire cluster. Therefore, if you want to increase throughput by importing a JMS destination to multiple SAF agents on separate server instances in a cluster, then you should create migratable targets for each server instance in the cluster and then create separate SAF agents that are targeted individually to each migratable target.

Targeting SAF Agents For Consistent Quality-of-Service

A WebLogic Server administrator has the freedom to configure and deploy multiple SAF agents in the same cluster or on the same server instance. As such, there could be situations where the same server instance has both migratable SAF agents and non-migratable SAF agents. For such cases, the behavior of a JMS client application may vary depending on which SAF agent handles the messages.

For example, an imported destination can be deployed to multiple SAF agents, and messages sent to the imported destination will be load-balanced among all SAF agents. If the list of the SAF agents contains non-migratable agents, the JMS client application may have a limited sense of high availability. Therefore, a recommended best practice is to deploy an imported destination to one or more SAF agents that provide the same level of high availability functionality. In other words, to ensure consistent forwarding quality and behavior, you should target the imported destination to a set of SAF agents that are all targeted to migratable targets or to non-migratable targets.

Targeting Rules for Path Service

When you do not use migratable targets, a path service is targeted to a single member of a cluster and can use either the default file store or a custom store. However, when targeted to a migratable target, a path service cannot use the default store, so a custom store must be configured and targeted to the same migratable target. As an additional best practice, the path service and its custom store should be the only users of that migratable target. By contrast, a JMS server, SAF agent, and custom store can share a migratable target.

Special Considerations For Targeting a Path Service

As a best practice, when the path service for a cluster is targeted to a migratable target, the path service and its custom store should be the only users of that migratable target.

When a path service is targeted to a migratable target, it provides enhanced storage of message unit-of-order (UOO) information for JMS distributed destinations, since the UOO information will be based on the entire migratable target instead of being based only on the server instance hosting the distributed destination member.

Targeting Rules for Custom Stores

All JMS-related services require a custom persistent store that is targeted to the same migratable targets as the JMS services. When a custom store is targeted to a migratable target, the store <directory> parameter must be configured so that the store directory is accessible from all candidate server members in the migratable target.

If the JMS system resource target is the cluster, WebLogic Server will create the migratable targets for each server instance in a cluster and then create separate JMS servers and file stores that are targeted individually to each migratable target.

For more information, see Custom Store Availability for JMS Services.

Migratable Targets For the JTA Transaction Recovery Service

For JTA, do not configure a migratable target, because a migratable target is automatically defined for JTA at the server level. To enable JTA automatic migration, select the JTA Migration Policy in the Administration Console. The default migration policy for JTA is Manual. For automatic service migration, select either the Failure Recovery or the Shutdown Recovery migration policy. This means that the Transaction Recovery Service will only start if its UPS is started. If an administrator shuts down the UPS, either gracefully or forcibly, this service will not be migrated.

However, if the UPS shuts down due to an internal error, then this service will be migrated to another candidate server instance. If such a candidate server instance is unavailable (due to a manual shutdown or an internal failure), then the migration framework will first attempt to reactivate the service on its UPS server instance. If the UPS server is not available at that time, then the service will be migrated to another candidate server instance.

Migration Processing Tools

The WebLogic Server migration framework provides infrastructure and facilities to perform the manual or automatic migration of JMS-related services and the JTA Transaction Recovery Service. By default, an administrator must manually execute the process to migrate the services from one server instance to another. However, these services can also be configured to migrate automatically in response to a server failure.

Administration Console

An administrator can use the WebLogic Server Administration Console to configure and perform the migration process.

For more information, see the Oracle WebLogic Server Administration Console Online Help.

WebLogic Scripting Tool

An administrator can use the WLST command-line interface utility to manage the life cycle of a server instance, including configuring and performing the migration process.

For more information, see Life Cycle Commands in WLST Command Reference for WebLogic Server.

Automatic Service Migration Infrastructure

The service migration framework depends on the following components to monitor server health issues and automatically migrate the pinned services to a healthy server instance.

- Leasing for Migratable Services

- Node Manager

- Administration Server Not Required When Migrating Services

- Service Health Monitoring

Leasing for Migratable Services

Leasing is the process WebLogic Server uses to manage services that are required to run on only one member of a cluster at a time. Leasing ensures exclusive ownership of a cluster-wide entity. Within a cluster, there is a single owner of a lease. Additionally, leases can fail over in case of server or cluster failure. This helps to avoid having a single point of failure. For more information, see Leasing.

The Automatic Migration option requires setting the cluster Migration Basis policy to either Database or Consensus leasing.
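The idea of exclusive, expiring ownership can be sketched in a few lines of Python. This is a conceptual model only, not how WebLogic Server stores leases: the `LeaseTable` class, the 30-second duration, and the explicit `now` argument (used instead of a real clock to keep the example deterministic) are all assumptions made for illustration.

```python
from typing import Dict, Optional, Tuple


class LeaseTable:
    """Toy lease table: one owner per lease name, with an expiry time."""

    def __init__(self, duration: float) -> None:
        self.duration = duration
        self._leases: Dict[str, Tuple[str, float]] = {}  # name -> (owner, expiry)

    def owner(self, name: str, now: float) -> Optional[str]:
        entry = self._leases.get(name)
        if entry is None or entry[1] <= now:
            return None  # never leased, or the lease has expired
        return entry[0]

    def acquire(self, name: str, server: str, now: float) -> bool:
        """Acquire or renew a lease; fail if another server still holds it."""
        current = self.owner(name, now)
        if current is not None and current != server:
            return False
        self._leases[name] = (server, now + self.duration)
        return True


table = LeaseTable(duration=30.0)
first = table.acquire("MT1", "ServerA", now=0.0)      # ServerA becomes the owner
blocked = table.acquire("MT1", "ServerB", now=10.0)   # refused: lease still valid
takeover = table.acquire("MT1", "ServerB", now=31.0)  # ServerA did not renew in time
```

Because ServerA never renewed, its lease lapses and ServerB can take ownership, which is the failover behavior that leasing makes possible.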

Database Leasing

If you are using a high availability database, such as Oracle RAC, to manage leasing information, configure the database for server migration according to the procedures outlined in High availability Database Leasing.

Setting Migration Basis to Database leasing requires that the Data Source For Automatic Migration option is set with a valid JDBC system resource. This implies that there is a table created on that resource that the Managed Servers will use for leasing. For more information, see Configuring JDBC Data Sources in Administering JDBC Data Sources for Oracle WebLogic Server.

Consensus Leasing

Setting Migration Basis to Consensus leasing means that the member servers maintain leasing information in-memory, which removes the requirement of having a high availability database to use leasing. This version of leasing requires that you use Node Manager to control server instances within the cluster. It also requires that all server instances that are migratable, or which could host a migratable target, have a Node Manager instance associated with them. Node Manager is required for health monitoring information about the member server instances involved. For more information, see Non-database Consensus Leasing.

Note:

As a best practice, Oracle recommends configuring database leasing instead of consensus leasing.

Node Manager

When you use automatic service migration, Node Manager is required for health monitoring information about the member servers, as follows:

- Consensus leasing: Node Manager must be running on every machine hosting Managed Servers within the cluster.

- Database leasing: Node Manager must be running on every machine hosting Managed Servers within the cluster only if pre/post-migration scripts are defined. If pre/post-migration scripts are not defined, then Node Manager is not required.
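The two cases above reduce to a simple rule, sketched here in Python. The function name `node_manager_required` and its string arguments are hypothetical and chosen only for this illustration.

```python
def node_manager_required(migration_basis: str, has_migration_scripts: bool) -> bool:
    """Return True if Node Manager must run on every machine hosting Managed Servers."""
    basis = migration_basis.lower()
    if basis == "consensus":
        return True  # always required with consensus leasing
    if basis == "database":
        # Required only when pre/post-migration scripts are defined.
        return has_migration_scripts
    raise ValueError(f"unknown migration basis: {migration_basis}")
```

For example, `node_manager_required("database", False)` is `False`, whereas any consensus leasing configuration returns `True`.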

For general information about configuring Node Manager, see Using Node Manager to Control Servers in Administering Node Manager for Oracle WebLogic Server.

Administration Server Not Required When Migrating Services

To eliminate a single point of failure during migration, automatic service migration of migratable services is not dependent on the availability of the Administration Server at the time of migration.

Service Health Monitoring

To accommodate service migration requests, the migratable target performs basic health monitoring on migratable services that are deployed on it that implement a health monitoring interface. The advantage of having a migratable target perform this job is that it is guaranteed to be local. In addition, the migratable target has a direct communication channel to the leasing system and can request to release the lease (thus triggering a migration) when bad health is detected.
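The monitoring loop described above can be sketched as follows. This Python example is a conceptual illustration under stated assumptions, not the WebLogic health monitoring interface: the `check_target` function, the probe callables, and the `release_lease` callback are hypothetical.

```python
from typing import Callable, Dict, List

HEALTH_OK = "OK"
HEALTH_FAILED = "FAILED"


def check_target(services: Dict[str, Callable[[], str]],
                 release_lease: Callable[[], None]) -> List[str]:
    """Poll each local service; release the lease if any reports FAILED."""
    failed = [name for name, probe in services.items()
              if probe() == HEALTH_FAILED]
    if failed:
        # Giving up the lease is what allows another server to take over.
        release_lease()
    return failed


released: List[str] = []
failed = check_target(
    {
        "JMSServerA": lambda: HEALTH_OK,
        "CustomStoreA": lambda: HEALTH_FAILED,
    },
    release_lease=lambda: released.append("MT1"),
)
```

Here the failed store causes the target to release its lease once, so `released` contains a single entry for `MT1`.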

- How Health Monitoring of the JTA Transaction Recovery Service Triggers Automatic Migration

- How Health Monitoring of JMS-related Services Triggers Automatic Migration

How Health Monitoring of the JTA Transaction Recovery Service Triggers Automatic Migration

When JTA has automatic migration enabled, the server defaults to shutting down if the JTA subsystem reports itself as unhealthy (FAILED). For example, if any I/O error occurs when accessing the transaction log, then JTA health state will change to FAILED.

When the primary server fails, the migratable service framework automatically migrates the Transaction Recovery Service to a backup server. The automatic service migration framework selects a backup server from the configured candidate servers. If a backup server fails before completing the transaction recovery actions and is restarted, then the Transaction Recovery Service will eventually be migrated to another server instance in the cluster (either the primary server will reclaim it or the migration framework will notice that the backup server instance's lease has expired).

After successful migration, if the backup server is shut down normally and rebooted, then the Transaction Recovery Service will again be activated on the backup server. This is consistent with manual service migration. As with manual service migration, the Transaction Recovery Service cannot be migrated from a running primary server.

How Health Monitoring of JMS-related Services Triggers Automatic Migration

When the JMS-related services have automatic migration enabled:

- JMS Server: Maintains its run-time health state and registers and updates its health to the health monitoring subsystem. When a service the JMS server depends upon, such as its targeted persistent store, reports the FAILED health state, it is detected by the migration framework. The migration process takes place based on the migratable target's configured automatic migration policy. Typically, the migration framework deactivates the JMS server and other users of the migratable target on the current user-preferred server and migrates them onto a healthy available server instance from the constrained candidate server list.

- SAF Service: The health state of the SAF service comes from its configured SAF agents. If the SAF service detects an unhealthy state, the whole SAF agent instance will be reported as unhealthy. The SAF agent has the same health monitoring capabilities as a JMS server. Typically, the migration framework deactivates the SAF agent on the current user-preferred server instance and migrates it onto a healthy available server instance from the constrained candidate server list.

- Path Service: The path service itself will not change its health state, but instead depends on the server instance and its custom store to trigger migration.

- Persistent Store: Registers its health to the health monitoring subsystem. If the I/O layer reports errors such that the persistent store cannot continue with read/write operations and needs to be restarted before it can guarantee data consistency, then the store's health is marked as FAILED and reported as FAILED to the health monitoring subsystem. This is detected by the automatic migration framework and triggers the auto-migration of the store, and of the subsystem services that depend on that store, from the current user-preferred server instance onto a healthy available server instance from the constrained candidate server list.
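The following Python sketch models the common pattern in the list above: a FAILED health report causes the services on a migratable target to move to a healthy server instance from the constrained candidate server list. It is an illustration only, not WebLogic Server code. The function name, the `HEALTHY` label, and the rule of picking the first healthy candidate in list order are assumptions made for this example.

```python
from typing import Dict, List, Optional

FAILED = "FAILED"
HEALTHY = "HEALTHY"  # hypothetical label for any non-failed state


def next_host(service_health: str,
              current_host: str,
              candidates: List[str],
              server_health: Dict[str, str]) -> Optional[str]:
    """Return the server that should host the migratable target next.

    Assumption: the first healthy candidate (in list order) other than
    the current host is chosen. The real selection order may differ.
    """
    if service_health != FAILED:
        return current_host  # no migration is triggered
    for server in candidates:
        if server != current_host and server_health.get(server) == HEALTHY:
            return server
    return None  # no healthy candidate is available


if __name__ == "__main__":
    health = {"Server1": HEALTHY, "Server2": HEALTHY, "Server3": FAILED}
    print(next_host(FAILED, "Server1", ["Server1", "Server3", "Server2"], health))
```

In this model, a return value of `None` means that the service stays inactive until a candidate server instance becomes healthy.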

In-Place Restarting of Failed Migratable Services

Migratable services, such as JMS, can restart automatically after a failure and can recover from a temporary store failure without restarting the whole server. It is sometimes beneficial for the service to be restarted in place, instead of migrated. Therefore, migratable targets provide a Restart In Place option to attempt to deactivate and reactivate a failed service, instead of migrating the service. A custom store targeted to a dynamic cluster also provides a Restart In Place configuration option for cluster-targeted JMS services.

The migration framework only attempts to restart a service if the server health is satisfactory (for example, in a RUNNING state). If the server instance is not healthy, the framework immediately proceeds to the migration stage, skipping all in-place restarts.

The cluster Singleton Monitor checks for the RestartOnFailure value in the service MigratableTargetMBean. If the value is false, the service is migrated automatically. If the value is true, the migration framework attempts to deactivate and activate in place. If the reactivation fails, the migration framework pauses for the user-specified SecondsBetweenRestarts seconds. The process is repeated for the specified NumberOfRestartAttempts attempts. If all restart attempts fail, the service is migrated to a healthy server member.

For more information, see Restart In Place in Administering the WebLogic Persistent Store.
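The restart-in-place sequence described above can be sketched in Python as follows. This is a simplified model under stated assumptions, not the Singleton Monitor implementation. The parameter names mirror the RestartOnFailure, SecondsBetweenRestarts, and NumberOfRestartAttempts attributes named in the text. The `try_restart` callback and the string results are hypothetical, and the sketch pauses after every failed attempt, including the last one.

```python
import time
from typing import Callable


def recover_service(server_running: bool,
                    restart_on_failure: bool,
                    number_of_restart_attempts: int,
                    seconds_between_restarts: float,
                    try_restart: Callable[[], bool],
                    sleep: Callable[[float], None] = time.sleep) -> str:
    """Return "restarted" or "migrate" for a failed migratable service."""
    # An unhealthy server skips all in-place restarts.
    if not server_running:
        return "migrate"
    # RestartOnFailure=false means the service is migrated right away.
    if not restart_on_failure:
        return "migrate"
    for _ in range(number_of_restart_attempts):
        if try_restart():  # deactivate and reactivate in place
            return "restarted"
        sleep(seconds_between_restarts)  # pause before the next attempt
    return "migrate"  # all restart attempts failed


if __name__ == "__main__":
    outcomes = iter([False, True])  # first attempt fails, second succeeds
    print(recover_service(True, True, 3, 0.1, lambda: next(outcomes)))
```

Passing `sleep` as a parameter keeps the sketch testable without real delays.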

Migrating a Service From an Unavailable Server

There are special considerations when you migrate a service from a server instance that has crashed or is unavailable to the Administration Server. If the Administration Server cannot reach the previously active host of the service when you perform the migration, the Managed Server's local configuration information (for example, migratable target) will not be updated to reflect that it is no longer the active host for the service. In this situation, you must purge the unreachable Managed Server's local configuration cache before restarting it. This prevents the previous active host from hosting a service that has been migrated to another Managed Server.

JMS and JTA Automatic Service Migration Interaction

In some automatic service migration cases, the migratable targets for JMS services and the JTA Transaction Recovery Service can be migrated to different candidate servers with uncommitted transactions in progress. However, JMS and JTA service states are independent in time and location; therefore, JMS service availability does not depend on JTA transaction recovery being complete.

However, in-doubt transactions will not resolve until both services are running and can re-establish communication. An in-doubt transaction is an incomplete transaction that involves multiple participating resources (such as a JMS server and a database), where one or more of the resources are waiting for the transaction manager to tell them whether to roll back, commit, or forget their part of the transaction. Transactions can become in-doubt if they are in progress when a transaction manager or participating resource crashes.

JTA continues to attempt to recover transactions when a resource is not available until the recovery abandon time period expires, which defaults to 24 hours.
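As a small illustration of the recovery abandon period, the following Python sketch checks whether recovery attempts for a transaction would have stopped. It is a model only. The function name is hypothetical, and measuring the period from the first recovery attempt is an assumption made for this example, because the text states only the 24-hour default.

```python
from datetime import datetime, timedelta

# Default recovery abandon period stated in the text.
DEFAULT_ABANDON_PERIOD = timedelta(hours=24)


def recovery_abandoned(first_attempt: datetime,
                       now: datetime,
                       abandon_period: timedelta = DEFAULT_ABANDON_PERIOD) -> bool:
    """Return True once the abandon period has elapsed.

    Assumption: the period is measured from the first recovery attempt.
    """
    return now - first_attempt >= abandon_period


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 8, 0)
    print(recovery_abandoned(start, start + timedelta(hours=23)))
    print(recovery_abandoned(start, start + timedelta(hours=25)))
```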

Pre-Migration Requirements

WebLogic Server imposes certain constraints and prerequisites for service configuration to support service migration.

These constraints are service-specific and depend on your enterprise application architecture.

- Custom Store Availability for JMS Services

- Default File Store Availability for JTA

- Server State and Manual Service Migration

Custom Store Availability for JMS Services

Migratable JMS-related services cannot use the default persistent store, so you must configure a custom store and target it to the same migratable target as the JMS server or SAF agent. As a best practice, a path service should use its own custom store and migratable target.

The custom file store or JDBC store must be either:

- Accessible from all candidate server members in the migratable target.

  - If the application uses file-based persistence (file store), the store's <directory> parameter must be configured so that it is accessible from all candidate server members in the migratable target. For highest reliability, use a shared storage solution that is itself highly available (for example, a storage area network (SAN) or a dual-ported SCSI disk).

  - If the application uses JDBC-based persistence (JDBC store), then the JDBC connection information for that database instance, such as data source and connection pool, has to be available from all candidate server members.

- Migrated to a backup server target by pre-migration and post-migration scripts in the ORACLE_HOME/user_projects/domains/mydomain/bin/service_migration directory, where mydomain is a domain-specific directory, with the same name as the domain.

  Note:

  Basic directions for creating pre-migration and post-migration scripts are provided in the readme.txt file in this directory.

  In some cases, scripts may be needed to dismount the disk from the previous server and mount it on the backup server. These scripts are configured on Node Manager, using the PreScript() and PostScript() methods in the MigratableTargetMBean in the MBean Reference for Oracle WebLogic Server, or by using the WebLogic Server Administration Console. In other cases, a script may be needed to move (not copy) a custom file store directory to the backup server instance. Do not leave the old configured file store directory in place for the next time the migratable target is hosted by the old server instance. The WebLogic Server administrator should delete the files or move them to another directory.

Default File Store Availability for JTA

To migrate the JTA Transaction Recovery Service from a failed server instance in a cluster to another server instance (the backup server instance) in the same cluster, the backup server instance must have access to the transaction log (TLOG) records from the failed server. TLOG records are stored in the default persistent store for the server.

If you plan to use service migration in the event of a failure, you must configure the default persistent store so that it stores records in a shared storage system that is accessible to any potential machine to which a failed migratable server might be migrated. For highest reliability, use a shared storage solution that is itself highly available—for example, a SAN or a dual-ported disk. In addition, only JTA and other non-migratable services can share the same default store.

Optionally, you may also want to use pre-migration and post-migration scripts to perform any unmounting and mounting of shared storage, as needed. Basic directions for creating pre-migration and post-migration scripts are provided in a readme.txt file in the ORACLE_HOME/user_projects/domains/mydomain/bin/service_migration directory, where mydomain is a domain-specific directory, with the same name as the domain.

Server State and Manual Service Migration

For automatic migration, when the current (source) server fails, the migration framework will automatically migrate the Transaction Recovery Service to a target backup server.

For manual migration, you cannot migrate the Transaction Recovery Service to a backup server instance from a running server instance. You must stop the server instance before migrating the Transaction Recovery Service.

Table 8-1 shows the support for migration based on the running state.

Table 8-1 Server Running State and Manual Migration Support

| Server State Information for Current Server | Server State Information for Backup Server | Messaging Migration Allowed? | JTA Migration Allowed? |
|---|---|---|---|
| Running | Running | Yes | No |
| Running | Standby | Yes | No |
| Running | Not Running | Yes | No |
| Standby | Running | Yes | No |
| Standby | Standby | Yes | No |
| Standby | Not Running | Yes | No |
| Not Running | Running | Yes | Yes |
| Not Running | Standby | Yes | No |
| Not Running | Not Running | Yes | Yes |

Roadmap for Configuring Automatic Migration of JMS-Related Services

For automatic migration, the WebLogic administrator can specify a migratable target for JMS-related services, such as JMS servers and SAF agents. The administrator can also configure migratable services that will be automatically migrated from a failed server based on WebLogic Server health monitoring capabilities.

Note:

If you want to enable service migration on a cluster targeted JMS Server, SAF Agent, Path Service, or Custom Store, see Simplified JMS Cluster and High Availability Configuration in Administering JMS Resources for Oracle WebLogic Server.

JMS services can be migrated independently of the JTA Transaction Recovery Service. However, since the JTA Transaction Recovery Service provides the transaction control of the other subsystem services, it is usually migrated along with the other subsystem services. This ensures that the transaction integrity is maintained before and after the migration of the subsystem services.

A dynamic or mixed cluster allows simplified configuration for automatic migration of JMS-related services. For more information, see Simplified JMS Cluster and High Availability Configuration in Administering JMS Resources for Oracle WebLogic Server.

To configure automatic JMS service migration on a migratable target within a cluster, perform the following tasks:

- Step 1: Configure Managed Servers and Node Manager

- Step 2: Configure the Migration Leasing Basis

- Step 3: Configure Migratable Targets

- Step 4: Configure and Target Custom Stores

- Step 5: Configure Destinations and Connection Factories

- Step 6: Target the JMS Services

- Step 7: Restart the Administration Server and Managed Servers With Modified Migration Policies

- Step 8: Manually Migrate JMS Services Back to the Original Server

- Step 9: Test JMS Migration

Step 1: Configure Managed Servers and Node Manager

Configure the Managed Servers in the cluster for migration, including assigning Managed Servers to a machine. In certain cases, Node Manager must also be running and configured to allow automatic server migration.

For step-by-step instructions for using the WebLogic Server Administration Console to complete these tasks, refer to the following topics in the Oracle WebLogic Server Administration Console Online Help:

- Note: You must configure a unique Listen Address for each Managed Server instance that will host migratable JMS services. Otherwise, migration may fail.

- Note: In general, machines are required to enable Node Manager and the WebLogic Server Administration Console to start and stop WebLogic Server instances. When not using Node Manager, machines are generally not needed.

- Note: For automatic service migration, Consensus leasing requires that you use Node Manager to control server instances within the cluster and that all migratable servers have a Node Manager instance associated with them. For Database leasing, Node Manager is required only if pre-migration and post-migration scripts are defined. If pre-migration and post-migration scripts are not defined, then Node Manager is not required. For more information, see Database Leasing and Consensus Leasing.

For general information on configuring Node Manager, see Using Node Manager to Control Servers in Administering Node Manager for Oracle WebLogic Server.
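The Node Manager requirement for automatic service migration, as stated in the note above, reduces to a two-input rule. The following Python sketch restates it. The function name and the lowercase leasing labels are hypothetical and chosen only for this example.

```python
def node_manager_required(leasing: str, has_migration_scripts: bool) -> bool:
    """Return True if Node Manager is needed for automatic service migration.

    leasing: "consensus" or "database" (labels assumed for this sketch).
    has_migration_scripts: True if pre-migration and post-migration
    scripts are defined.
    """
    kind = leasing.strip().lower()
    if kind == "consensus":
        return True  # Consensus leasing always relies on Node Manager
    if kind == "database":
        return has_migration_scripts  # needed only to run the scripts
    raise ValueError(f"unknown leasing basis: {leasing!r}")


if __name__ == "__main__":
    print(node_manager_required("database", has_migration_scripts=False))
```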

Step 2: Configure the Migration Leasing Basis

In the WebLogic Server Administration Console, on the Cluster > Configuration > Migration page, configure the cluster Migration Basis according to how your data persistence environment is configured, selecting either Database Leasing or Consensus Leasing. For more information, see Leasing for Migratable Services.

Database Leasing requires that the Data Source For Automatic Migration field be set to a reference to a valid JDBC system resource. It also requires that a leasing table be created on that resource. To create the leasing table in the database to be used for server migration, run the leasing.ddl script located at WL_HOME/server/db/<db_name>/leasing.ddl as the database user configured in the data source.

Note:

As a best practice, Oracle recommends configuring database leasing instead of consensus leasing.

Step 3: Configure Migratable Targets

You should perform this step before targeting any JMS-related services or enabling the JTA Transaction Recovery Service migration.

Configuring a Migratable Server as an Automatically Migratable Target

The Migratable Target Summary table in the WebLogic Server Administration Console displays the system-generated migratable targets of servername (migratable), which are automatically generated for each running server instance in a cluster. However, these are only generic templates and still need to be targeted and configured for automatic migration.

Create a New Migratable Target

When creating a new migratable target, the WebLogic Server Administration Console provides a mechanism for creating, targeting, and selecting a migration policy.

- Select a User Preferred Server

- Select a Service Migration Policy

- Optionally Select Constrained Candidate Servers

- Optionally Specify Pre/Post-Migration Scripts

- Optionally Specify In-Place Restart Options

Select a User Preferred Server

When you create a new migratable target using the WebLogic Server Administration Console, you can initially choose a preferred server instance in the cluster on which to associate the target. The User Preferred Server is the most appropriate server instance for hosting the migratable target.

Note:

An automatically migrated service may not end up being hosted on the specified User Preferred Server. To verify which server is hosting a migrated service, check the Current Hosting Server information on the Migratable Target > Control page in the WebLogic Server Administration Console. For more information, see Migratable Target: Control in Oracle WebLogic Server Administration Console Online Help.

Select a Service Migration Policy

The default migration policy for migratable targets is Manual Service Migration Only, so you must select one of the following auto-migration policies:

- Auto-Migrate Exactly-Once Services: This policy indicates that if at least one Managed Server in the candidate list is running, then the service will be active somewhere in the cluster if server instances should fail or are shut down (either gracefully or forcibly).

  Note:

  This value can lead to target grouping. For example, if you have five exactly-once migratable targets and only start one Managed Server in the cluster, then all five targets will be activated on that server instance.

- Auto-Migrate Failure-Recovery Services: This policy indicates that the service will only start if its User Preferred Server (UPS) is started. If an administrator shuts down the UPS either gracefully or forcibly, this service will not be migrated. However, if the UPS fails due to an internal error, the service will be migrated to another candidate server instance. If such a candidate server instance is unavailable (due to a manual shutdown or an internal failure), then the migration framework will first attempt to reactivate the service on its UPS. If the UPS is not available at that time, then the service will be migrated to another candidate server instance.

For more information, see Policies for Manual and Automatic Service Migration.
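The difference between the migration policies described above can be summarized in a short Python model. The policy and event labels are hypothetical names for this sketch. The model covers only the first-level decision (whether a service is migrated when its User Preferred Server stops) and leaves out the reactivation fallback described for the failure-recovery policy.

```python
MANUAL = "manual"
EXACTLY_ONCE = "exactly-once"
FAILURE_RECOVERY = "failure-recovery"

GRACEFUL_SHUTDOWN = "graceful-shutdown"
FORCED_SHUTDOWN = "forced-shutdown"
FAILURE = "failure"  # the server fails due to an internal error


def migrates_automatically(policy: str, event: str,
                           candidate_running: bool = True) -> bool:
    """Return True if the service is auto-migrated after `event`."""
    if policy not in (MANUAL, EXACTLY_ONCE, FAILURE_RECOVERY):
        raise ValueError(f"unknown policy: {policy!r}")
    if policy == MANUAL or not candidate_running:
        return False  # manual policy, or nowhere to migrate to
    if policy == EXACTLY_ONCE:
        # Stays active somewhere for failures and both shutdown kinds.
        return event in (GRACEFUL_SHUTDOWN, FORCED_SHUTDOWN, FAILURE)
    # Failure-recovery: administrator shutdowns do not trigger migration.
    return event == FAILURE


if __name__ == "__main__":
    print(migrates_automatically(FAILURE_RECOVERY, GRACEFUL_SHUTDOWN))
    print(migrates_automatically(EXACTLY_ONCE, GRACEFUL_SHUTDOWN))
```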

Optionally Select Constrained Candidate Servers

When creating migratable targets that use the exactly-once services migration policy, you may also want to restrict the potential member servers to which JMS servers can be migrated. A recommended best practice is to limit each migratable target's candidate server set to a primary, secondary, and perhaps a tertiary server instance. Then as each server starts, the migratable targets will be restricted to their candidate server instances, rather than being satisfied by the first server instance to come online. Administrators can then manually migrate services to idle server instances.

For the cluster's path service, however, the candidate server instances for the migratable target should be the entire cluster, which is the default setting.

On the migratable target Configuration > Migration page in the WebLogic Server Administration Console, the Constrained Candidate Servers Available box lists all of the Managed Servers that could possibly support the migratable target. The Managed Servers become valid Candidate Servers when you move them into the Chosen box.
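To illustrate why constrained candidate servers help avoid target grouping, the following Python sketch places exactly-once migratable targets on the servers that are currently running. It is a simplified model. The function name and the rule of choosing the first running candidate in list order are assumptions for this example, and the target and server names are placeholders.

```python
from typing import Dict, List, Optional


def place_targets(candidates_by_target: Dict[str, List[str]],
                  running_servers: List[str]) -> Dict[str, Optional[str]]:
    """Map each migratable target to a running candidate server.

    A target with no running candidate maps to None (it stays inactive).
    """
    placement: Dict[str, Optional[str]] = {}
    for target, candidates in candidates_by_target.items():
        placement[target] = next(
            (server for server in candidates if server in running_servers),
            None,
        )
    return placement


if __name__ == "__main__":
    cluster = ["Server1", "Server2", "Server3"]
    # Unconstrained: every target may run anywhere, so all group on Server1.
    unconstrained = {f"MT{i}": cluster for i in range(1, 6)}
    print(place_targets(unconstrained, ["Server1"]))
    # Constrained: MT2 waits until one of its own candidates is running.
    constrained = {"MT1": ["Server1", "Server2"], "MT2": ["Server2", "Server3"]}
    print(place_targets(constrained, ["Server1"]))
```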

Optionally Specify Pre/Post-Migration Scripts

After creating a migratable target, you may also want to specify whether you are providing any pre-migration and post-migration scripts to perform any unmounting and mounting of the shared custom file store, as needed.

- Pre-Migration Script Path: The path to the pre-migration script to run before a migratable target is actually activated.

- Post-Migration Script Path: The path to the post-migration script to run after a migratable target is fully deactivated.

- Post-Migration Script Failure Cancels Automatic Migration: Specifies whether or not a failure during execution of the post-deactivation script is fatal to the migration.

- Allow Post-Migration Script To Run On a Different Machine: Specifies whether or not the post-deactivation script is allowed to run on a different machine.

The pre-migration and post-migration scripts must be located in the ORACLE_HOME/user_projects/domains/mydomain/bin/service_migration directory, where mydomain is a domain-specific directory, with the same name as the domain. For your convenience, sample pre-migration and post-migration scripts are provided in this directory.
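As an illustration of the directory rule above, the following Python sketch builds the expected location of a script from an ORACLE_HOME value and a domain name. The function names, the example ORACLE_HOME value, and the script file name are hypothetical. POSIX-style paths are assumed.

```python
from pathlib import PurePosixPath


def service_migration_dir(oracle_home: str, domain_name: str) -> PurePosixPath:
    """ORACLE_HOME/user_projects/domains/<domain>/bin/service_migration"""
    return (PurePosixPath(oracle_home) / "user_projects" / "domains"
            / domain_name / "bin" / "service_migration")


def script_path(oracle_home: str, domain_name: str,
                script_name: str) -> PurePosixPath:
    """Return the full path of a script placed in the required directory.

    Rejects names that contain directory components, because the script
    must be located directly in the service_migration directory.
    """
    if not script_name or PurePosixPath(script_name).name != script_name:
        raise ValueError(f"not a plain file name: {script_name!r}")
    return service_migration_dir(oracle_home, domain_name) / script_name


if __name__ == "__main__":
    # "/u01/oracle" and "premigrate.sh" are placeholder values.
    print(script_path("/u01/oracle", "mydomain", "premigrate.sh"))
```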

Optionally Specify In-Place Restart Options

Migratable targets provide restart-in-place options to attempt to deactivate and reactivate a failed service, instead of migrating the service. See In-Place Restarting of Failed Migratable Services.

Step 4: Configure and Target Custom Stores

As per Custom Store Availability for JMS Services, JMS-related services require you to configure a custom persistent store that is targeted to a dynamic cluster or to the same migratable targets as the JMS services. Ensure that the store is either:

- Configured such that all the candidate server instances in a migratable target have access to the custom store.

- Migrated by pre-migration and post-migration scripts. For more information, see Optionally Specify Pre/Post-Migration Scripts.

Step 5: Configure Destinations and Connection Factories

- Use JMS system modules rather than deployment modules. The WebLogic Server Administration Console only provides the ability to configure system modules. For more information, see JMS System Module Configuration in Administering JMS Resources for Oracle WebLogic Server.

- Create one system module per anticipated target set, and target the module to a single cluster. For example, if you plan to have one destination that spans a single JMS server and another destination that spans six JMS servers, create two modules and target both of them to the same cluster.

- Configure one subdeployment per module and populate the subdeployment with a homogeneous set of either JMS server or SAF agent targets. Do not include WebLogic Server or cluster names in the subdeployment.

- Target connection factories to clusters for applications running on the same cluster. You can use default targeting to inherit the module target. For applications running remote to the cluster, target connection factories to a subdeployment by using the Advanced Targeting choice on the WebLogic Server Administration Console.

  - Custom connection factories are used to control client behavior, such as load balancing. They are targeted just like any other resource, but in the case of a connection factory, the target set has a special meaning.

  - You can target a connection factory to a cluster, WebLogic Server, or to a JMS server or SAF agent (using a subdeployment). There is a performance advantage to targeting connection factories to the exact JMS servers or SAF agents that the client will use, as the target set for a connection factory determines the candidate set of host server instances for a client connection.

  - Targeting to the exact JMS servers or SAF agents reduces the probability of client connections to server instances that do not have a JMS server or SAF agent, in cases where there is not a SAF agent on every clustered server instance. If no JMS server or SAF agent exists on a connection host, the client request must always double-hop: from the client to the connection host server, and then on to the JMS server or SAF agent.

- For other JMS module resources, such as destinations, target using a subdeployment. Do not use default targeting. Subdeployment targeting is available through the Advanced Targeting choice on the WebLogic Server Administration Console.

- As you add or remove JMS servers or SAF agents, remember to also add or remove JMS servers or SAF agents to your module subdeployment(s).

For more information, see Best Practices for JMS Beginners and Advanced Users in Administering JMS Resources for Oracle WebLogic Server.

Step 6: Target the JMS Services

When using migratable targets, you must target your JMS service to the same migratable target used by the custom persistent store. If no custom store is specified for a JMS service that uses a migratable target, then a validation message is generated, followed by a failed JMS server deployment and a WebLogic Server boot failure. For example, attempting to target a JMS server that is using the default file store to a migratable target generates the following message:

Since the JMS server is targeted to a migratable target, it cannot use the default store.

Similar messages are generated for a SAF agent or path service that is targeted to a migratable target and attempts to use the default store. In addition, if the custom store is not targeted to the same migratable target as the migratable service, then the following validation log message is generated, followed by a failed JMS server deployment and a WebLogic Server start failure.

The JMS server is not targeted to the same target as its persistent store.
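The two targeting checks described in this step can be modeled with a short Python function, for example as a pre-flight check in a configuration review script. This is a sketch, not the WebLogic Server validator. The function name and the convention that `None` stands for the default store are assumptions. The message strings are the two messages quoted above.

```python
from typing import List, Optional

DEFAULT_STORE_MSG = ("Since the JMS server is targeted to a migratable "
                     "target, it cannot use the default store.")
TARGET_MISMATCH_MSG = ("The JMS server is not targeted to the same target "
                       "as its persistent store.")


def validate_jms_server(jms_target: str,
                        store_target: Optional[str]) -> List[str]:
    """Check a JMS server that is targeted to a migratable target.

    jms_target: name of the migratable target of the JMS server.
    store_target: migratable target of its custom store, or None when
    the JMS server uses the default store (convention of this sketch).
    """
    if store_target is None:
        return [DEFAULT_STORE_MSG]
    if store_target != jms_target:
        return [TARGET_MISMATCH_MSG]
    return []  # configuration passes both checks


if __name__ == "__main__":
    for problem in validate_jms_server("MT1", None):
        print(problem)
```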

Special Considerations When Targeting SAF Agents or Path Service

There are some special targeting choices to consider when targeting SAF agents and a path service to migratable targets. See Targeting Rules for SAF Agents and Targeting Rules for Path Service.

Step 7: Restart the Administration Server and Managed Servers With Modified Migration Policies

You must restart the Administration Server after configuring your JMS services for automatic service migration. Also, you must restart any Managed Servers whose migration policies were modified.

Step 8: Manually Migrate JMS Services Back to the Original Server

You may want to migrate a JMS service back to the original primary server instance once it is back online. Unlike the JTA Transaction Recovery Service, JMS services do not automatically migrate back to the primary server instance when it becomes available, so you need to manually migrate these services.

For instructions on manually migrating the JMS-related services using the WebLogic Server Administration Console, see Manually migrate JMS-related services in the Oracle WebLogic Server Administration Console Online Help.

For instructions on manually migrating the JMS-related services using WLST, see WLST Command and Variable Reference in WLST Command Reference for WebLogic Server.

Step 9: Test JMS Migration

To Test if the Automatic JMS Migration is Working

- Configure:

  - A cluster with two servers, Server1 and Server2.

  - Migratable targets MT1 and MT2 with an "Exactly Once" migration policy.

  - File stores on each migratable target, named FStore1 and FStore2.

  - JMS servers on each migratable target, named JMS1 and JMS2.

  - A JMS system module targeted to the cluster.

  - A subdeployment named SUBD in the system module that references JMS1 and JMS2.

  - A Uniform Distributed Queue in the system module with JNDI name "UDQ" that targets subdeployment SUBD (instead of using default targeting).

- Boot Server1:

  - Shut down the cluster.

  - Start only Server1.

  - Check Server1's JNDI tree to verify that there is a UDQ member with JNDI name "JMS1@UDQ" and another with "JMS2@UDQ". You can view a server's JNDI tree on the console by clicking the server's General tab and then clicking the JNDI link at the top of this tab.

- Boot Server2:

  - Start Server2.

  - Note that "JMS1@UDQ" and "JMS2@UDQ" remain on Server1.

  - Manually migrate MT2 back to Server2.

  - Verify that "JMS1@UDQ" and "JMS2@UDQ" are now on Server1 and Server2 respectively (this may take a few seconds).

  Note:

  The cluster targeted HA alternative would automatically migrate JMS2 back to Server2 without a need for manual intervention. For more information, see Simplified JMS Cluster and High Availability Configuration in Administering JMS Resources for Oracle WebLogic Server.
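The expected outcome of the test steps above can be traced with a small Python simulation. It is a toy model of the scenario, not a test harness for a real domain. The class and method names are hypothetical, and the sketch assumes that MT1 prefers Server1 and MT2 prefers Server2.

```python
from typing import Dict, Optional, Set


class ClusterModel:
    """Toy model of exactly-once migratable targets in a cluster."""

    def __init__(self, preferred: Dict[str, str]) -> None:
        self.preferred = dict(preferred)  # target -> user-preferred server
        self.running: Set[str] = set()
        self.host: Dict[str, Optional[str]] = {t: None for t in preferred}

    def start(self, server: str) -> None:
        """Start a server and activate any target that is not yet hosted."""
        self.running.add(server)
        for target, current in self.host.items():
            if current is None:
                ups = self.preferred[target]
                # Exactly-once: use the preferred server when it is up,
                # otherwise activate on the server that just started.
                self.host[target] = ups if ups in self.running else server

    def migrate(self, target: str, server: str) -> None:
        """Manually migrate a target to a running server."""
        if server not in self.running:
            raise ValueError(f"{server} is not running")
        self.host[target] = server


if __name__ == "__main__":
    cluster = ClusterModel({"MT1": "Server1", "MT2": "Server2"})
    cluster.start("Server1")
    print(cluster.host)  # both targets are hosted on Server1
    cluster.start("Server2")
    print(cluster.host)  # nothing fails back automatically
    cluster.migrate("MT2", "Server2")
    print(cluster.host)
```

The second print shows the behavior the test is meant to confirm: an already active target stays where it is until an administrator migrates it.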

To Test if the Manual JMS Migration is Working

Follow the same testing steps as for automatic migration, but configure a Manual migration policy on each migratable target (the default). Then, optionally, move the targets from one server to another using the console or a script.

Best Practices for Configuring JMS Migration

- In most cases, it is sufficient to use the default migratable target for a server instance. There is one default migratable target per server instance. An alternative is to configure one migratable target per server instance. See Step 3: Configure Migratable Targets.

- Configure one custom store per migratable target and target the store to the migratable target. See Step 4: Configure and Target Custom Stores.

- When configuring JMS services (JMS servers and SAF agents) for each migratable target, ensure that the services refer to the corresponding custom store. Then target the services to each migratable target. See Step 6: Target the JMS Services.

- Ensure that all file stores are configured with a directory that references a shared storage location. This ensures that a store that migrates from one server to another can still load its file data. For similar reasons, migratable database stores should be set up to reference the same data source.

- As you add or remove JMS servers or SAF agents, remember to also add or remove JMS servers or SAF agents to your module subdeployment(s).

For more information, see Best Practices for JMS Beginners and Advanced Users in Administering JMS Resources for Oracle WebLogic Server.

Roadmap for Configuring Manual Migration of JMS-related Services

WebLogic JMS leverages the migration framework by allowing an administrator to specify a migratable target for JMS-related services. Once properly configured, a JMS service can be manually migrated to another WebLogic Server within a cluster using either the console or a script without setting up automatic migration. This includes both scheduled migrations as well as manual migrations in response to a WebLogic Server failure within the cluster.

To set up JMS-related services for manual migration on a migratable target within a configured cluster, follow the Roadmap for Configuring Automatic Migration of JMS-Related Services but account for the following differences:

- There is no need to configure Node Manager.

- There is no need to configure a cluster migration basis.

- Leave the Migration Policy attribute on each Migratable Target at the default of Manual.

Roadmap for Configuring Automatic Migration of the JTA Transaction Recovery Service

The JTA Transaction Recovery Service is designed to gracefully handle transaction recovery after a crash. You can specify to have the Transaction Recovery Service automatically migrated from an unhealthy server instance to a healthy server instance, with the help of the server health monitoring services. This way, the backup server instance can complete transaction work for the failed server instance.

To configure automatic migration of the Transaction Recovery Service for a migratable target within a cluster, perform the following tasks:

- Step 1: Configure Managed Servers and Node Manager

- Step 2: Configure the Migration Basis

- Step 3: Enable Automatic JTA Migration

- Step 4: Configure the Default Persistent Store For Transaction Recovery Service Migration

- Step 5: Restart the Administration Server and Managed Servers With Modified Migration Policies

- Step 6: Automatic Failback of the Transaction Recovery Service Back to the Original Server


Step 1: Configure Managed Servers and Node Manager

Configure the Managed Servers in the cluster for migration, including assigning Managed Servers to a machine. Node Manager must also be running and configured to allow automatic server migration. Node Manager is required for health status information about the server instances involved.

For step-by-step instructions on using the WebLogic Server Administration Console to complete these tasks, see Administration Console Online Help.

Note:

- You must set a unique Listen Address value for the Managed Server instance that will host the JTA Transaction Recovery Service. Otherwise, the migration will fail.

- For information on configuring a primary server instance to not start in Managed Server Independence (MSI) mode, which prevents concurrent access to the transaction log with another backup server instance in recovery mode, see Managed Server Independence in Developing JTA Applications for Oracle WebLogic Server.

Note:

In general, machines are required so that Node Manager can start and stop WebLogic Server instances. If you are not using Node Manager, machines are generally not needed.

Note:

For automatic service migration, Consensus leasing requires that you use Node Manager to control server instances within the cluster and that every migratable server has a Node Manager instance associated with it. For Database leasing, Node Manager is required only if pre-migration and post-migration scripts are defined.

For general information on configuring Node Manager, see Node Manager Overview in Administering Node Manager for Oracle WebLogic Server.
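The Node Manager rule stated in the note above can be expressed as a small check. The following is a minimal sketch in Python, not part of any WebLogic API. The function name `node_manager_required` and the basis strings `"consensus"` and `"database"` are assumptions chosen for illustration.

```python
def node_manager_required(migration_basis: str, has_migration_scripts: bool) -> bool:
    """Return True when Node Manager is needed for automatic service migration.

    Hypothetical helper that only restates the documented rule:
    - Consensus leasing always requires Node Manager.
    - Database leasing requires it only when pre-migration or
      post-migration scripts are defined.
    """
    basis = migration_basis.strip().lower()
    if basis == "consensus":
        return True
    if basis == "database":
        return has_migration_scripts
    raise ValueError(f"unknown migration basis: {migration_basis!r}")
```

A planning script could call this helper before deciding whether to provision Node Manager on each machine.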

Step 2: Configure the Migration Basis

In the WebLogic Server Administration Console, on the Cluster > Configuration > Migration page, configure the cluster Migration Basis according to how your data persistence environment is configured, selecting either Database Leasing or Consensus Leasing. See Leasing for Migratable Services.

Database Leasing requires that the Data Source For Automatic Migration field is set to a valid JDBC system resource. It also requires that a leasing table be created on that resource. To create the leasing table in the database to be used for server migration, run the leasing.ddl script located at WL_HOME/server/db/<db_name>/leasing.ddl as the database user configured in the data source.

Note:

As a best practice, Oracle recommends configuring database leasing instead of consensus leasing.
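To locate the leasing script for a given database, the path pattern WL_HOME/server/db/<db_name>/leasing.ddl can be assembled programmatically. This is a minimal sketch. The function name `leasing_ddl_path` is hypothetical, and the values passed for `wl_home` and `db_name` depend on your installation.

```python
from pathlib import PurePosixPath


def leasing_ddl_path(wl_home: str, db_name: str) -> PurePosixPath:
    """Build WL_HOME/server/db/<db_name>/leasing.ddl (hypothetical helper)."""
    # Reject empty names and names containing a separator so the result
    # always stays directly under WL_HOME/server/db.
    if not db_name or "/" in db_name:
        raise ValueError(f"invalid database directory name: {db_name!r}")
    return PurePosixPath(wl_home) / "server" / "db" / db_name / "leasing.ddl"
```

The returned path can then be handed to whichever SQL client you use to run the DDL as the data source user.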

Step 3: Enable Automatic JTA Migration

In the JTA Migration Configuration section on the Server > Configuration > Migration page in the WebLogic Server Administration Console, configure the following options:

Select the Automatic JTA Migration Policy

Select the migration policy for services hosted by the JTA migratable target. Valid options are:

- Manual

- Failure Recovery

- Shutdown Recovery

Note:

The Exactly Once migration policy does not apply to JTA.

See Servers: Configuration: Migration in Oracle WebLogic Server Administration Console Online Help.

Configure the automatic migration of the JTA Transaction Recovery Service by selecting a migration policy for services hosted by the JTA migratable target from the JTA Migration Policy drop-down list.


Optionally Select Candidate Servers

You may also want to restrict the candidate server instances for the Transaction Recovery Service to those that have access to the current server instance's transaction log files (stored in the default WebLogic store). If no candidate server instances are chosen, then any server instance within the cluster can be chosen as a candidate server instance.

From the Candidate Servers Available box, select the Managed Servers that can access the JTA log files. The Managed Servers become valid Candidate Servers when you move them into the Chosen box.

Note:

You must include the original server instance in the list of chosen server instances so that you can manually migrate the Transaction Recovery Service back to the original server instance, if required. The WebLogic Server Administration Console enforces this rule.
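The candidate server rules described above (an empty selection means any server instance in the cluster, and a non-empty selection must include the original server instance) can be sketched as a validation step. This is an illustrative Python model only. The function `effective_candidates` and the server names in the test values are hypothetical and do not correspond to a WebLogic API.

```python
from typing import List, Sequence


def effective_candidates(cluster_servers: Sequence[str],
                         chosen: Sequence[str],
                         original: str) -> List[str]:
    """Return the servers that may host the Transaction Recovery Service."""
    if original not in cluster_servers:
        raise ValueError(f"{original!r} is not a member of the cluster")
    # No explicit selection: every server instance in the cluster is a candidate.
    if not chosen:
        return list(cluster_servers)
    unknown = [s for s in chosen if s not in cluster_servers]
    if unknown:
        raise ValueError(f"not cluster members: {unknown}")
    # The original server must be chosen so that the service can be
    # migrated back to it later.
    if original not in chosen:
        raise ValueError("chosen servers must include the original server")
    return list(chosen)
```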


Optionally Specify Pre/Post-Migration Scripts

You can specify pre-migration and post-migration scripts to unmount and mount the shared storage, as needed.

- Pre-Migration Script Path: The path to the pre-migration script to run before a migratable target is actually activated.

- Post-Migration Script Path: The path to the post-migration script to run after a migratable target is fully deactivated.

- Post-Migration Script Failure Cancels Automatic Migration: Specifies whether a failure during execution of the post-deactivation script is fatal to the migration.

- Allow Post-Migration Script To Run On a Different Machine: Specifies whether the post-deactivation script is allowed to run on a different machine.

The pre-migration and post-migration scripts must be located in the ORACLE_HOME/user_projects/domains/mydomain/bin/service_migration directory, where mydomain is a domain-specific directory, with the same name as the domain. Basic directions for creating pre-migration and post-migration scripts are provided in a readme.txt file in this directory.
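The required script location can be checked before you enter a path in the console. The sketch below assumes that scripts sit directly in the service_migration directory rather than in a subdirectory of it. The function names and the sample paths are hypothetical.

```python
from pathlib import PurePosixPath


def service_migration_dir(oracle_home: str, domain_name: str) -> PurePosixPath:
    """Build ORACLE_HOME/user_projects/domains/<domain>/bin/service_migration."""
    return (PurePosixPath(oracle_home) / "user_projects" / "domains"
            / domain_name / "bin" / "service_migration")


def is_valid_script_location(script_path: str, oracle_home: str,
                             domain_name: str) -> bool:
    """Return True if the script sits directly in the required directory.

    Assumption: subdirectories of service_migration are not accepted.
    """
    expected = service_migration_dir(oracle_home, domain_name)
    return PurePosixPath(script_path).parent == expected
```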


Step 4: Configure the Default Persistent Store For Transaction Recovery Service Migration

As described in Default File Store Availability for JTA, the Transaction Manager uses the default persistent store to store transaction log files. To enable migration of the Transaction Recovery Service, you must configure the default persistent store so that it stores its data files on a persistent storage solution that is available to other server instances in the cluster if the original server instance fails.

Step 5: Restart the Administration Server and Managed Servers With Modified Migration Policies

You must restart the Administration Server after configuring the JTA Transaction Recovery service for automatic service migration.

Also, you must restart any Managed Servers whose migration policies were modified.

Step 6: Automatic Failback of the Transaction Recovery Service Back to the Original Server

After completing transaction recovery for a failed server instance, a backup server instance releases ownership of the Transaction Recovery Service so that the original server instance can reclaim it when the server instance is restarted. If the backup server instance crashes for any reason before it completes transaction recovery, its lease expires. This way, when the primary server instance starts, it can reclaim ownership successfully.

There are two scenarios for automatic failback of the Transaction Recovery Service to the primary server instance:

- Automatic failback after recovery is complete:

  - If the backup server instance finishes recovering the log transactions before the primary server instance is restarted, it will initiate an implicit migration of the Transaction Recovery Service back to the primary server instance.

  - For both manual and automatic migration, the post-deactivation script will be executed automatically.

- Automatic failback before recovery is complete:

  - If the backup server instance is still recovering the log transactions when the primary server instance is started, the primary server instance initiates an implicit migration of the Transaction Recovery Service from the backup server instance during Transaction Recovery Service initialization at startup.
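The two failback scenarios differ only in which event happens first: the backup finishing recovery, or the primary starting. The following minimal Python sketch models that decision. The function name, the timestamp parameters, and the treatment of a tie as "primary" are assumptions made for illustration and are not WebLogic behavior taken from this chapter.

```python
def failback_initiator(recovery_done_at: float, primary_start_at: float) -> str:
    """Return which server instance initiates the implicit failback migration.

    Both arguments are timestamps on the same clock (hypothetical inputs).
    - Recovery finished before the primary started: the backup migrates
      the Transaction Recovery Service back to the primary.
    - Primary started while recovery was still running: the primary pulls
      the service from the backup during its startup.
    """
    if recovery_done_at < primary_start_at:
        return "backup"
    # A tie is treated as "still recovering" (assumption).
    return "primary"
```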

Manual Migration of the JTA Transaction Recovery Service

The JTA Transaction Recovery Service is designed to gracefully handle transaction recovery after a crash. You can manually migrate the Transaction Recovery Service from an unhealthy server instance to a healthy server instance with the help of the server health monitoring services. In this manner, the backup server instance can complete transaction work for the failed server instance.

You can manually migrate the Transaction Recovery Service back to the original server instance by selecting the original server instance as the destination server instance. The backup server instance must not be running when you manually migrate the service back to the original server instance.

Note the following:

- If a backup server instance fails before completing the transaction recovery actions, the primary server instance cannot reclaim ownership of the Transaction Recovery Service and recovery will not be re-attempted on the restarting server instance. Therefore, you must attempt to manually re-migrate the Transaction Recovery Service to another backup server instance.

- If you restart the original server instance while the backup server instance is recovering transactions, the backup server instance will gracefully release ownership of the Transaction Recovery Service. You do not need to stop the backup server instance. For more information, see Recovering Transactions For a Failed Clustered Server in Developing JTA Applications for Oracle WebLogic Server.

- For information on configuring a primary server instance to not start in Managed Server Independence (MSI) mode, which prevents concurrent access to the transaction log with another backup server instance in recovery mode, see Managed Server Independence in Developing JTA Applications for Oracle WebLogic Server.

For instructions on manually migrating the Transaction Recovery Service using the WebLogic Server Administration Console, see Manually migrate the Transaction Recovery Service in Oracle WebLogic Server Administration Console Online Help.


Automatic Migration of User-Defined Singleton Services

WebLogic Server supports the automatic migration of user-defined singleton services.

Automatic singleton service migration allows the automatic health monitoring and migration of singleton services. A singleton service is a service operating within a cluster that is available on only one server instance at any given time. When a migratable service fails or becomes unavailable for any reason (for example, because of a bug in the service code, server failure, or network failure), it is deactivated at its current location and activated on a new server instance. The process of migrating these services to another server instance is handled using the singleton master. See Singleton Master.

Note:

Although the JTA Transaction Recovery Service is also a singleton service that is available on only one node of a cluster at any time, it is configured differently for automatic migration than user-defined singleton services. JMS and JTA services can also be manually migrated. See Understanding the Service Migration Framework.

- Overview of Singleton Service Migration

- Implementing the Singleton Service Interface

- Deploying a Singleton Service and Configuring the Migration Behavior


Overview of Singleton Service Migration

This section provides an overview of how automatic singleton service migration is implemented in WebLogic Server.


Singleton Master

The singleton master is a lightweight singleton service that monitors other services that can be migrated automatically. The server instance that currently hosts the singleton master is responsible for starting and stopping the migration tasks associated with each migratable service.

Note:

Migratable services do not have to be hosted on the same server instance as the singleton master, but they must be hosted within the same cluster.
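As a rough illustration of the deactivate-then-activate sequence that the singleton master drives, the following Python toy model moves a service from its current host to another server instance in the same cluster. It is a conceptual sketch only, not WebLogic code. The class and function names are hypothetical, and choosing the first healthy server as the target is an assumption.

```python
from typing import List, Optional


class SingletonService:
    """Toy service that is active on at most one server at a time."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.host: Optional[str] = None

    def activate(self, server: str) -> None:
        self.host = server

    def deactivate(self) -> None:
        self.host = None


def migrate(service: SingletonService, healthy_servers: List[str]) -> str:
    """Deactivate the service where it runs and activate it elsewhere."""
    current = service.host
    # Never pick the current host as the migration target.
    targets = [s for s in healthy_servers if s != current]
    if not targets:
        raise RuntimeError("no healthy server available for migration")
    service.deactivate()
    service.activate(targets[0])  # assumption: first healthy server wins
    return targets[0]
```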