Design for Resiliency Using Oracle Integration

Use these best practices when designing your resilient integrations.

Design Integrations

Here's a basic inbound integration flow that receives requests from an upstream application through a REST API, parses and validates them, and sends them to a downstream application.

The downstream application may become unresponsive while the upstream application is still sending requests. In that case, the downstream application does not acknowledge those requests. Similar processing challenges arise with batch processing, complex message correlation and flows, and throttling.

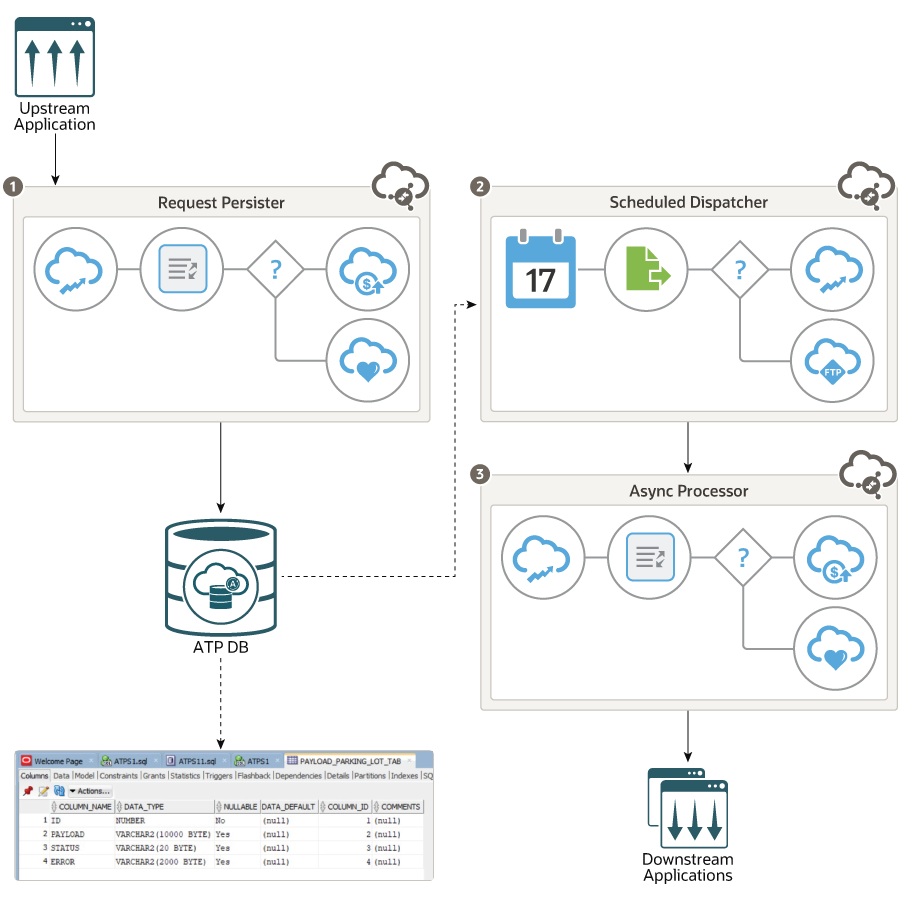

Let's take an example of creating entities on Oracle Financials Cloud using the REST APIs. The requests must be received by an Integration REST endpoint. You should be able to dynamically throttle the requests hitting Financials Cloud, track the status of the requests, and resubmit any failed requests. For this solution, three integrations and an Autonomous Transaction Processing database are shown. The parking lot can be implemented using various storage technologies, such as a database or Coherence. However, we're using an Autonomous Transaction Processing database table for simplicity.

Description of the illustration oic_extended_parkinglot_eh.png

In the image shown, when the upstream application sends a request, the Request Persister integration saves it to the database and acknowledges the upstream application. In the database, the parking lot pattern stores request metadata and status information, and requests are processed in the order in which they arrive. Each message is parked in storage for x minutes (the parking time) so that the integration flow has a chance to throttle the number of messages processed concurrently.

An orchestrated Scheduled Dispatcher integration is triggered by a schedule. You can schedule this integration to fetch requests from the database at a date and time of your choosing, and you can also define how frequently it runs. These requests are handed over to the Asynchronous Processor integration, which processes them and sends them to the downstream application.

Design Components

The high-level design has three integrations and a database. We're taking account creation as an example, but in reality it could be any business object exposed by any Oracle SaaS REST API.

Post Requests

The Request Persister integration exposes a REST trigger endpoint, which can be called by an upstream application (client) to POST the account creation requests.

This persister integration loads account creation requests into the Autonomous Transaction Processing database immediately on receipt from client applications and acknowledges receipt with HTTP 202/Accepted. The Account ID and the entire payload are persisted into the parking lot table for subsequent processing.
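The persist-then-acknowledge behavior can be sketched as follows. This is a minimal illustration, not the integration itself: sqlite3 stands in for the Autonomous Transaction Processing database, and the `parking_lot` table, the `persist_request` function, and the `AccountId` field name are assumptions made for the example.

```python
import json
import sqlite3

# Assumption: an in-memory sqlite3 database stands in for the
# Autonomous Transaction Processing database.
conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE parking_lot (
           account_id TEXT PRIMARY KEY,
           payload    TEXT NOT NULL,
           status     TEXT NOT NULL DEFAULT 'NEW'
       )"""
)


def persist_request(conn, request_body):
    """Park the request and acknowledge it without calling downstream."""
    payload = json.loads(request_body)
    conn.execute(
        "INSERT INTO parking_lot (account_id, payload) VALUES (?, ?)",
        (payload["AccountId"], request_body),  # hypothetical field name
    )
    conn.commit()
    return 202  # HTTP 202 Accepted: received, not yet processed


status_code = persist_request(conn, '{"AccountId": "A-100", "Name": "Acme"}')
```

Because the function returns as soon as the row is committed, the upstream application gets its acknowledgment even if the downstream application is unresponsive.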

Load Requests into Parking Lot Table

The Autonomous Transaction Processing database here holds the parking lot table where all received requests are parked before processing. For simplicity, a simple table is shown to persist the payload and track the request status and any error information.
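A simple parking lot table along these lines might look like the sketch below. The column names are assumptions, and sqlite3 is used only so that the example runs locally; the status values are the ones used in this solution (NEW, PROCESSED, ERRORED, ERROR_RETRY).

```python
import sqlite3

# Hypothetical table layout: payload, request status, and error information.
PARKING_LOT_DDL = """
CREATE TABLE parking_lot (
    id            INTEGER PRIMARY KEY AUTOINCREMENT,
    account_id    TEXT NOT NULL,
    payload       TEXT NOT NULL,
    status        TEXT NOT NULL DEFAULT 'NEW'
                  CHECK (status IN ('NEW', 'PROCESSED', 'ERRORED', 'ERROR_RETRY')),
    error_message TEXT,
    created_at    TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
)
"""

conn = sqlite3.connect(":memory:")  # stand-in for the real database
conn.execute(PARKING_LOT_DDL)

# A newly parked request starts in NEW status with no error information.
conn.execute(
    "INSERT INTO parking_lot (account_id, payload) VALUES (?, ?)",
    ("A-100", '{"Name": "Acme"}'),
)
row = conn.execute("SELECT status, error_message FROM parking_lot").fetchone()
```

The `created_at` column is included so that requests can be read back in arrival order.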

The account creation JSON request payload is stored in its entirety in the parking lot table as a string. There may be use cases for storing it as a CLOB or an encoded string when it is not desirable to have a visible payload in the table. However, storing the payload as JSON provides an opportunity to change the payload during error resubmissions.

One way to obtain the payload as a string is with two stage file actions:

- A stage Write, using the request payload's JSON sample to create the schema file.

- A stage Read Entire File operation, using an opaque schema. This provides the base64-encoded value of the JSON payload string. The built-in function decodebase64(opaqueElement) can then be used in the mapper (or in an assign) to get the JSON string value.

The opaque schema xsd file that is used during the stage read is available on GitHub, which is discussed later in this solution.
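The write, opaque read, and decode steps amount to a base64 round trip. The sketch below reproduces that round trip with Python's standard library only; it does not call any Oracle Integration function, and the sample payload is an assumption.

```python
import base64
import json

payload = {"AccountId": "A-100", "Name": "Acme"}  # hypothetical sample

# Stage write: the JSON payload becomes the contents of the staged file.
staged_bytes = json.dumps(payload).encode("utf-8")

# Stage read with an opaque schema: the contents come back base64 encoded.
opaque_element = base64.b64encode(staged_bytes).decode("ascii")

# Counterpart of decodebase64(opaqueElement): recover the JSON string.
json_string = base64.b64decode(opaque_element).decode("utf-8")
```

The resulting `json_string` is plain text, which is what gets stored in the payload column of the parking lot table.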

Dispatch Requests According to Schedule

The Scheduled Dispatcher integration is scheduled to run at the required frequency. On every run, it fetches a configured number of requests and loops through them, dispatching each request to the Asynchronous Processor integration for processing.

You can configure the number of requests to fetch as a schedule parameter, which lets you throttle or accelerate request processing and change the value dynamically. Requests can also be fetched from the parking lot table based on their status. For example, you can fetch requests in NEW and ERROR_RETRY status and pass them on for processing.

The Scheduled Dispatcher then loops through the fetched requests and hands off each request to the Asynchronous Processor for account creation. Make sure that the scheduler (parent) flow calls a one-way asynchronous integration (child) flow. The Asynchronous Processor does not return any response, so the scheduler thread is freed to go back, loop through the rest of the requests, and dispatch them. This ensures that scheduler threads, which are meant for the special use case of scheduling, are not held up in long-running processing. The business logic of request processing itself is handled by the asynchronous processing resources available in Oracle Integration.
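A status-filtered, size-limited fetch can be sketched as below. The table layout and the `fetch_batch` function are assumptions for illustration, with sqlite3 standing in for the database; `max_requests` plays the role of the configurable fetch size.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the real database
conn.execute(
    "CREATE TABLE parking_lot (id INTEGER PRIMARY KEY, payload TEXT, status TEXT)"
)
conn.executemany(
    "INSERT INTO parking_lot (payload, status) VALUES (?, ?)",
    [
        ("{}", "NEW"),
        ("{}", "PROCESSED"),
        ("{}", "ERROR_RETRY"),
        ("{}", "ERRORED"),
        ("{}", "NEW"),
    ],
)


def fetch_batch(conn, max_requests):
    """Fetch at most max_requests eligible requests, oldest first."""
    return conn.execute(
        "SELECT id, payload FROM parking_lot "
        "WHERE status IN ('NEW', 'ERROR_RETRY') "
        "ORDER BY id LIMIT ?",
        (max_requests,),
    ).fetchall()


batch = fetch_batch(conn, 2)
```

Raising or lowering `max_requests` between runs changes how many requests reach the downstream application per schedule, which is the throttling lever described above.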

- Scheduled orchestrations are meant to serve the particular requirements of scheduling flows, and freeing them up using an asynchronous handoff makes the solution scalable and performant when processing a large number of requests.

- Scheduled orchestrations should not be used as a substitute for App Driven orchestrations.
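The one-way handoff can be illustrated with a queue and a worker thread. This is a sketch of the fire-and-forget idea only, not of how Oracle Integration schedules work internally; the queue, the worker, and the function names are assumptions.

```python
import queue
import threading

work_queue = queue.Queue()
processed = []


def async_processor():
    """Worker that stands in for the one-way Asynchronous Processor."""
    while True:
        request = work_queue.get()
        if request is None:  # sentinel: stop the worker
            work_queue.task_done()
            break
        processed.append(request["id"])  # placeholder for account creation
        work_queue.task_done()


def dispatch(requests):
    """Hand off each request without waiting for a response."""
    for request in requests:
        work_queue.put(request)  # returns immediately
    return len(requests)


worker = threading.Thread(target=async_processor, daemon=True)
worker.start()

dispatched = dispatch([{"id": 1}, {"id": 2}, {"id": 3}])

work_queue.put(None)
work_queue.join()  # only so that the example can inspect the results
```

The dispatcher's loop finishes as soon as the requests are queued, so the time it spends does not depend on how long each account creation takes.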

You can add actions to an orchestrated integration. If you use a for-each action, you can loop over a repeating element and execute one or more actions within the scope of the for-each action. The number of loop iterations is based on a user-selected repeating element. For example, you may have an integration in which you have downloaded a number of files and want to loop over the output of the files. The for-each action enables you to perform this task. Note that Process Items in Parallel can be selected for some for-each loops. This ensures that the activities within the for-each loop are batched by Oracle Integration and executed in parallel. Under certain conditions, Oracle Integration ignores the parallelism setting. In such cases, the degree of parallelism is set to 1 to avoid concurrency issues.
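As a rough analogy, the effect of Process Items in Parallel can be pictured as a loop whose degree of parallelism is either greater than 1 or forced to 1. The `for_each` helper below is hypothetical and uses a thread pool purely for illustration; it says nothing about how Oracle Integration batches the work.

```python
from concurrent.futures import ThreadPoolExecutor


def for_each(items, action, parallel=False, degree=4):
    """Apply action to every item; fall back to one worker when not parallel."""
    workers = degree if parallel else 1
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the order of the input items.
        return list(pool.map(action, items))


files = ["a.csv", "bb.csv", "ccc.csv"]  # hypothetical downloaded files
parallel_results = for_each(files, len, parallel=True)
serial_results = for_each(files, len, parallel=False)
```

Both calls produce the same results in the same order; only the number of items in flight at once differs.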

Create Account

The Asynchronous Processor integration processes the incoming requests from the Scheduled Dispatcher and sends them to the downstream application.

- You can configure these two settings (the POST action and the request payload) in the Configure REST Endpoint wizard.

- On your integration canvas, in the Triggers pane, click REST if the REST adapters are not listed already.

- Drag your integration connection to the plus icon below START on the canvas. This displays the Configure REST Endpoint configuration wizard.

- On the Basic Info page of the wizard, select POST from the drop-down list for What action do you want to perform on the endpoint?

- Select the Configure a request payload for this endpoint option.

Because the asynchronous flow does the actual account creation, it is responsible for updating the request status in the parking lot table. After a successful account creation, the STATUS column in the parking lot table is updated to PROCESSED.
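The success path can be sketched as follows. The `create_account` function is a hypothetical stand-in for the downstream REST call, and sqlite3 again stands in for the database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the real database
conn.execute(
    "CREATE TABLE parking_lot (id INTEGER PRIMARY KEY, payload TEXT, status TEXT)"
)
conn.execute(
    "INSERT INTO parking_lot (payload, status) VALUES (?, ?)",
    ('{"Name": "Acme"}', "NEW"),
)


def create_account(payload):
    """Hypothetical stand-in for the downstream account creation call."""
    return True


def process_request(conn, request_id):
    """Create the account, then record the outcome in the parking lot table."""
    (payload,) = conn.execute(
        "SELECT payload FROM parking_lot WHERE id = ?", (request_id,)
    ).fetchone()
    create_account(payload)
    conn.execute(
        "UPDATE parking_lot SET status = 'PROCESSED' WHERE id = ?", (request_id,)
    )
    conn.commit()


process_request(conn, 1)
final_status = conn.execute(
    "SELECT status FROM parking_lot WHERE id = 1"
).fetchone()[0]
```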

Handle Failed Requests

Fault handlers set failed requests to ERRORED status in the parking lot table. These requests can then be updated in the table to ERROR_RETRY status, and they are picked up for reprocessing in the next scheduled run because they match the selection criteria of the Scheduled Dispatcher's Autonomous Transaction Processing database invoke.

- The update of ERRORED requests to ERROR_RETRY can be performed by an administrator on the database.

- You can even add a Resubmission integration flow that runs daily, or at any desired frequency, and updates all ERRORED records to ERROR_RETRY.

- The Asynchronous Processor integration's fault handler can set the status to ERROR_RETRY directly, so every failure gets resubmitted automatically in the next schedule.
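The fault handler and a bulk resubmission step can be sketched together. All function names are hypothetical, the failure is simulated, and sqlite3 stands in for the database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the real database
conn.execute(
    "CREATE TABLE parking_lot "
    "(id INTEGER PRIMARY KEY, status TEXT, error_message TEXT)"
)
conn.execute("INSERT INTO parking_lot (status) VALUES ('NEW')")


def create_account(request_id):
    """Hypothetical downstream call that fails, to exercise the handler."""
    raise RuntimeError("downstream unavailable")


def process_with_fault_handler(conn, request_id):
    """Record PROCESSED on success, or ERRORED plus the error text on failure."""
    try:
        create_account(request_id)
        new_status, error = "PROCESSED", None
    except Exception as exc:
        new_status, error = "ERRORED", str(exc)
    conn.execute(
        "UPDATE parking_lot SET status = ?, error_message = ? WHERE id = ?",
        (new_status, error, request_id),
    )
    conn.commit()
    return new_status


def resubmit_errored(conn):
    """Mark every ERRORED request as ERROR_RETRY for the next run."""
    cursor = conn.execute(
        "UPDATE parking_lot SET status = 'ERROR_RETRY' WHERE status = 'ERRORED'"
    )
    conn.commit()
    return cursor.rowcount


first_status = process_with_fault_handler(conn, 1)
resubmitted = resubmit_errored(conn)
```

Keeping ERRORED and ERROR_RETRY as separate states leaves room for someone to inspect a failure before it is retried. Setting ERROR_RETRY directly in the handler removes that pause.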

Payload Correction

Storing the account creation payload in the parking lot table gives us a way to correct data errors in the payload prior to resubmission. Update the payload and set the STATUS column to ERROR_RETRY to resubmit a request with the corrected payload.
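A correction followed by resubmission might look like this sketch. The `correct_and_resubmit` function, the table layout, and the sample fields are assumptions; sqlite3 stands in for the database.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the real database
conn.execute(
    "CREATE TABLE parking_lot (id INTEGER PRIMARY KEY, payload TEXT, status TEXT)"
)
conn.execute(
    "INSERT INTO parking_lot (payload, status) VALUES (?, 'ERRORED')",
    (json.dumps({"AccountId": "A-100", "Name": ""}),),  # hypothetical bad data
)


def correct_and_resubmit(conn, request_id, fixes):
    """Apply field-level fixes to the stored payload and mark it for retry."""
    (raw,) = conn.execute(
        "SELECT payload FROM parking_lot WHERE id = ?", (request_id,)
    ).fetchone()
    payload = json.loads(raw)
    payload.update(fixes)
    conn.execute(
        "UPDATE parking_lot SET payload = ?, status = 'ERROR_RETRY' WHERE id = ?",
        (json.dumps(payload), request_id),
    )
    conn.commit()


correct_and_resubmit(conn, 1, {"Name": "Acme"})
raw_payload, status = conn.execute(
    "SELECT payload, status FROM parking_lot WHERE id = 1"
).fetchone()
```

This only works because the payload is stored as readable JSON. An encoded payload would have to be decoded before it could be corrected.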