Data Platform - Data Lakehouse

You can effectively collect and analyze event data and streaming data from internet of things (IoT) and social media sources, but how do you correlate it with the broad range of enterprise data resources to leverage your investment and gain the insights you want?

Leverage a cloud data lakehouse that combines the abilities of a data lake and a data warehouse to process a broad range of enterprise and streaming data for business analysis and machine learning.

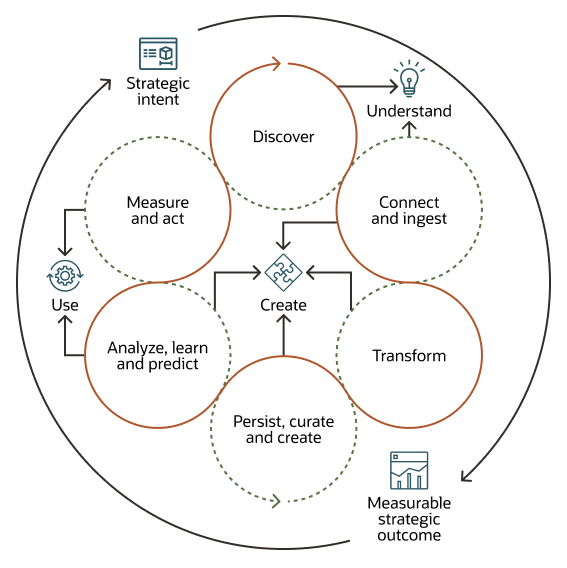

This reference architecture positions the technology solution within the overall business context, where strategic intents drive the creation of measurable strategic outcomes. These outcomes generate new strategic intents, effectively delivering continuous, data-driven business improvements.

A data lake enables an enterprise to store all of its data in a cost-effective, elastic environment while providing the necessary processing, persistence, and analytic services to discover new business insights. A data lake stores and curates structured and unstructured data and provides methods for organizing large volumes of highly diverse data from multiple sources.

With a data warehouse, you perform data transformation and cleansing before you commit the data to the warehouse. With a data lake, you ingest data quickly and prepare it on the fly as people access it. A data lake supports operational reporting and business monitoring that require immediate access to data and flexible analysis to understand what is happening in the business while it is happening.

Functional Architecture

You can combine the abilities of a data lake and a data warehouse to provide a modern data lakehouse platform that processes streaming and other types of data from a broad range of enterprise data resources so that you can leverage the data for business analysis, machine learning, data services, and data products.

A data lakehouse architecture combines the capabilities of both the data lake and the data warehouse to increase operational efficiency and to deliver enhanced capabilities that allow:

- Seamless data and information usage without the need to replicate it across the data lake and data warehouse

- Diverse data type support in an enhanced multimodel and polyglot architecture

- Seamless data ingest from any consumer using real time, streaming, batch, application programming interface (API), and bulk ingestion mechanisms

- Continuous intelligence extraction from data using artificial intelligence (AI), generative AI, and machine learning (ML) services

- The ability to infuse and serve intelligence to any data consumer by using API, user interface, streaming, and integration mechanisms

- Governance and fine-grained data security that leverages a zero-trust security model

- The ability to fully decouple storage and compute resources and to consume only the resources needed at any point in time

- The ability to leverage multiple compute engines, including open source engines, to process the same data for different use cases to achieve maximum data repurposing, liquidity, and usage

- The ability to store data using different open file and table formats in the data lake

- The ability to leverage Oracle Cloud Infrastructure (OCI) native services that are managed by Oracle and that reduce operational overhead

- Better cloud economics with autoscaling that adjusts cloud infrastructure resources to match actual demand

- Modularity so that service use is use-case driven

- Interoperability with any system or cloud that adheres to open standards

- Support for a diverse set of use cases including streaming, analytics, data science, and machine learning

- Support for different architectural approaches, from a centralized lakehouse to a decentralized data mesh

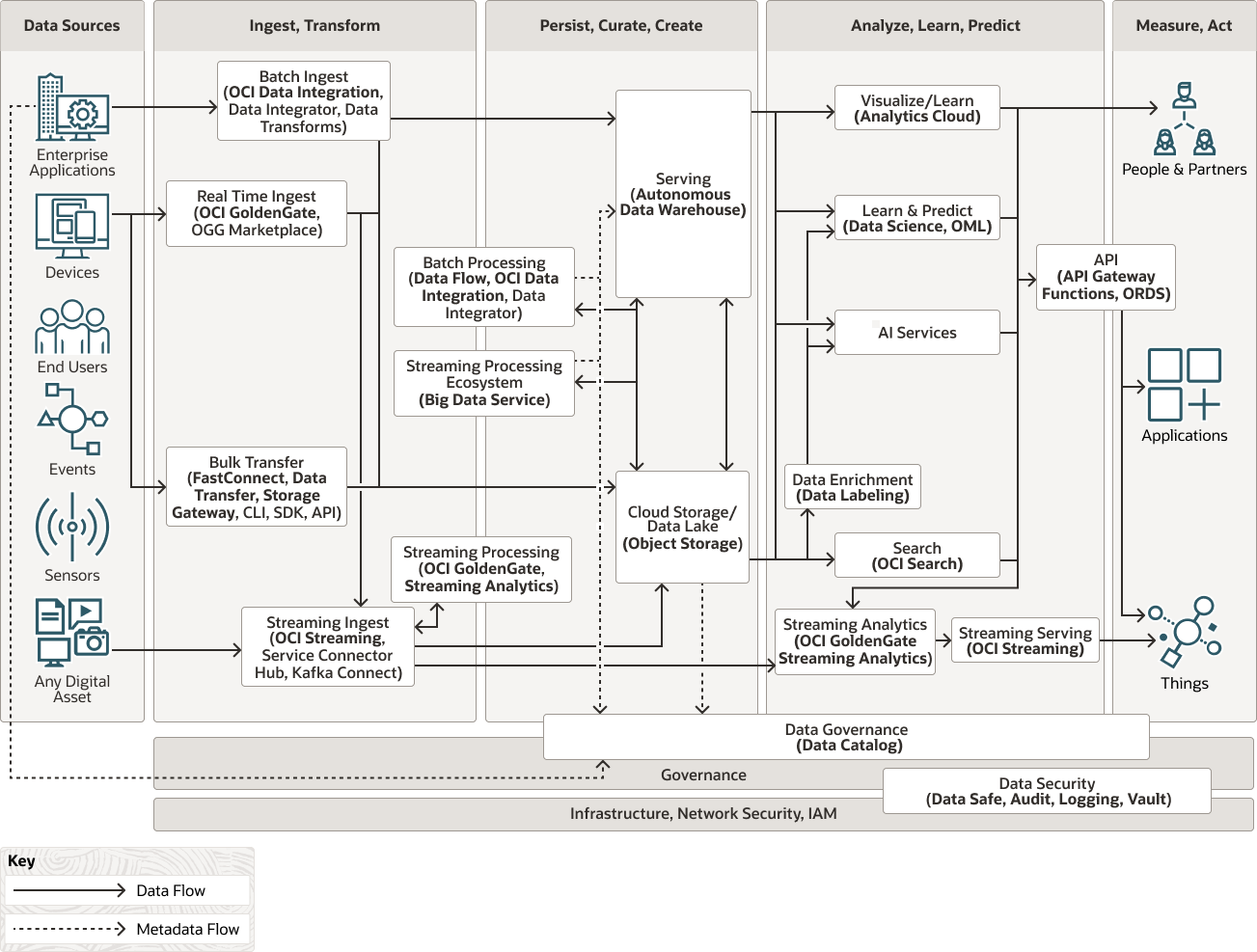

The following diagram illustrates the functional architecture.

The architecture focuses on the following logical divisions:

- Connect, Ingest, Transform

Connects to data sources, then ingests and refines their data for use in each of the data layers in the architecture.

- Persist, Curate, Create

Facilitates access and navigation of the data to show the current business view. For relational technologies, data may be logically or physically structured in simple relational, longitudinal, dimensional or OLAP forms. For non-relational data, this layer contains one or more pools of data, either output from an analytical process or data optimized for a specific analytical task.

- Analyze, Learn, Predict

Abstracts the logical business view of the data for consumers. This abstraction facilitates agile approaches to development, migration to the target architecture, and the provision of a single reporting layer from multiple federated sources.

The architecture has the following functional components:

- Batch ingest

Batch ingest is useful for data that can't be ingested in real time or that is too costly to adapt for real-time ingestion. It is also important for transforming data into reliable and trustworthy information that can be curated and persisted for regular consumption. You can use the following services together or independently to achieve a highly flexible and effective data integration and transformation workflow.

- Oracle Cloud Infrastructure Data Integration is a fully managed, serverless, cloud-native service that extracts, loads, transforms, cleanses, and reshapes data from a variety of data sources into target Oracle Cloud Infrastructure services, such as Autonomous Data Warehouse and Oracle Cloud Infrastructure Object Storage. Users design data integration processes using an intuitive, codeless user interface that optimizes integration flows to generate the most efficient engine and orchestration, automatically allocating and scaling the execution environment.

ETL (extract, transform, load) leverages fully-managed, scale-out processing on Spark, and ELT (extract, load, transform) leverages the full SQL push-down capabilities of Autonomous Data Warehouse to minimize data movement and to improve the time to value for newly ingested data.

Oracle Cloud Infrastructure Data Integration provides interactive exploration and data preparation, and helps data engineers protect against schema drift by defining rules to handle schema changes.

- Oracle Data Integrator provides comprehensive data integration, from high-volume and high-performance batch loads, to event-driven, trickle-feed integration processes, to SOA-enabled data services. A declarative design approach ensures faster, simpler development and maintenance, and provides a unique approach to extract, load, transform (ELT) that helps guarantee the highest level of performance possible for data transformation and validation processes. Oracle Data Transforms uses a web interface to simplify the configuration and execution of ELT and to help users build and schedule data flows and workflows using a declarative design approach.

- Oracle Data Transforms enables ELT for selected supported technologies, simplifying the configuration and execution of data pipelines by using a web user interface that allows users to declaratively build and schedule data flows and workflows. Oracle Data Transforms is available as a fully-managed environment within Oracle Autonomous Data Warehouse (ADW) to load and transform data from several data sources into an ADW instance.

Depending on the use case, these components can be used independently or together to achieve highly flexible and performant data integration and transformation.
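
The difference between the ETL and ELT styles mentioned above can be illustrated with a minimal sketch. It uses Python's built-in `sqlite3` module as a stand-in for the target warehouse; the table names, columns, and sample rows are hypothetical and are not part of any Oracle service API. In the ELT style, raw rows are loaded unchanged and the cleansing is then pushed down to the database engine as SQL.

```python
import sqlite3

# Hypothetical raw records as they might arrive from a source system.
RAW_ORDERS = [
    ("1001", " emea ", "120.50"),
    ("1002", "APAC", "80.00"),
    ("1003", "emea", "not-a-number"),  # dirty row that must be filtered out
]


def run_elt(rows):
    """Load raw rows unchanged, then transform inside the database with SQL."""
    conn = sqlite3.connect(":memory:")  # stand-in for the target warehouse
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, region TEXT, amount TEXT)")
    # Extract + load: no cleansing happens outside the database.
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", rows)
    # Transform: pushed down to the engine as a single SQL statement.
    conn.execute(
        """
        CREATE TABLE curated_orders AS
        SELECT CAST(order_id AS INTEGER) AS order_id,
               UPPER(TRIM(region))       AS region,
               CAST(amount AS REAL)      AS amount
        FROM raw_orders
        WHERE amount GLOB '[0-9]*.[0-9]*'
        """
    )
    result = conn.execute(
        "SELECT region, SUM(amount) FROM curated_orders GROUP BY region ORDER BY region"
    ).fetchall()
    conn.close()
    return result


totals_by_region = run_elt(RAW_ORDERS)
```

Because the transformation runs where the data already lives, only the small aggregated result leaves the database, which is the data-movement benefit that the ELT description refers to.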

- API-Based Ingest

API-based ingest allows applications and systems to push event data by using APIs or webhooks.

- Oracle Integration is a fully managed, preconfigured environment that allows you to integrate cloud and on-premises applications, automate business processes, and develop visual applications. It uses an SFTP-compliant file server to store and retrieve files and allows you to exchange documents with business-to-business trading partners by using a portfolio of hundreds of adapters and recipes to connect with Oracle and third-party applications.

- Oracle Cloud Infrastructure API Gateway enables you to publish APIs with private endpoints that are accessible from within your network, and which you can expose to the public internet if required. The endpoints support API validation, request and response transformation, CORS, authentication and authorization, and request limiting.

OCI API Gateway provides API observability so that you can monitor usage and guarantee SLAs. Usage plans let you monitor and manage API consumers and clients, set up different API access tiers for different customers, and track their data usage. Tiered usage plans are a key feature to support data monetization.

- Oracle Cloud Infrastructure Functions is a fully managed, multitenant, highly scalable, on-demand, Functions-as-a-Service (FaaS) platform. It is powered by the Fn Project open source engine. Functions enable you to deploy your code, and either call it directly or trigger it in response to events. Oracle Functions uses Docker containers hosted in Oracle Cloud Infrastructure Registry.

- Oracle REST Data Services (ORDS) is a Java application that enables any developer with SQL and database skills to develop REST APIs for Oracle Database. Any application developer can use these APIs from any language environment without installing and maintaining client drivers, in the same way that they access other external services using REST, the most widely used API technology.

ORDS is deployed as a fully-managed feature in Oracle Autonomous Data Warehouse and can be used to expose lakehouse information by using APIs to data consumers.
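
The idea of tiered usage plans described for API Gateway above can be sketched in a few lines. This is an illustration only: the `UsagePlan` and `UsageTracker` classes, the client names, and the quotas are hypothetical and do not represent the OCI API Gateway API. The sketch shows how a per-client quota might be enforced and how consumption might be reported per tier.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class UsagePlan:
    """A hypothetical access tier: a name and a request quota per period."""
    name: str
    quota_per_period: int


@dataclass
class UsageTracker:
    """Counts requests per client and enforces the quota of the client's plan."""
    plans: dict                                # client_id -> UsagePlan
    used: dict = field(default_factory=dict)   # client_id -> requests consumed

    def allow(self, client_id: str) -> bool:
        plan = self.plans.get(client_id)
        if plan is None:
            return False  # unknown clients are rejected
        count = self.used.get(client_id, 0)
        if count >= plan.quota_per_period:
            return False  # quota exhausted for this period
        self.used[client_id] = count + 1
        return True

    def usage_report(self) -> dict:
        """Return (plan name, requests consumed) per client, e.g. for billing."""
        return {cid: (self.plans[cid].name, n) for cid, n in self.used.items()}


free, premium = UsagePlan("free", 2), UsagePlan("premium", 1000)
tracker = UsageTracker(plans={"acme": free, "globex": premium})
acme_results = [tracker.allow("acme") for _ in range(3)]  # third call exceeds the quota
globex_result = tracker.allow("globex")
```

Keeping the consumption count per client is what makes the same mechanism usable for both throttling and usage-based billing.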

- Real-time ingest

Oracle Cloud Infrastructure GoldenGate is a fully-managed service that allows data ingestion from sources residing on premises or in any cloud. It leverages GoldenGate change data capture (CDC) technology for non-intrusive and efficient data capture and delivery to Oracle Autonomous Data Warehouse, Oracle Cloud Infrastructure Object Storage, or Oracle Cloud Infrastructure Streaming in real time and at scale to make relevant information available to consumers as quickly as possible.
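
To make the change data capture idea concrete, the following minimal sketch applies a sequence of change events to a target replica. The event format (`op`, `key`, `row`) is a hypothetical simplification chosen for illustration; it is not the GoldenGate trail format or API. The point is that only the changes travel to the target, not full copies of the source tables.

```python
def apply_change(target: dict, event: dict) -> None:
    """Apply one change event to an in-memory replica keyed by primary key."""
    op, key = event["op"], event["key"]
    if op in ("insert", "update"):
        # Merge the changed columns into the existing row, or create the row.
        target[key] = {**target.get(key, {}), **event["row"]}
    elif op == "delete":
        target.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op!r}")


def replicate(events, target=None) -> dict:
    """Apply events in order and return the resulting replica."""
    target = {} if target is None else target
    for event in events:
        apply_change(target, event)
    return target


# Hypothetical changes captured from a source table.
CHANGES = [
    {"op": "insert", "key": 1, "row": {"name": "Ada", "city": "London"}},
    {"op": "insert", "key": 2, "row": {"name": "Lin", "city": "Porto"}},
    {"op": "update", "key": 1, "row": {"city": "Paris"}},
    {"op": "delete", "key": 2},
]
replica = replicate(CHANGES)
```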

- Bulk transfer

Bulk transfer allows you to move large batch volumes of data using different methods. For large-scale data lakehouses, we recommend Oracle Cloud Infrastructure FastConnect and Data Transfer services.

- Oracle Cloud Infrastructure FastConnect provides an easy way to create a dedicated, private connection between your data center and Oracle Cloud Infrastructure. FastConnect provides higher-bandwidth options and a more reliable networking experience when compared with internet-based connections.

- The Oracle Cloud Infrastructure (OCI) command line interface (CLI) allows you to execute and automate the transfer of data from on premises to OCI by leveraging the Oracle Cloud Infrastructure FastConnect private circuit. OCI SDKs allow you to write code in a variety of programming languages, such as Python, Java, or Go, to copy or synchronize data and files from on premises or from other clouds into Oracle Cloud Infrastructure Object Storage. REST APIs allow you to interface with and control OCI services, such as moving data into object storage by using the Object Storage Service API.

- Oracle Cloud Infrastructure Data Transfer is an offline data migration service that lets you securely move petabyte-scale datasets from your data center to Oracle Cloud Infrastructure Object Storage or Archive Storage. Using the public internet to move data to the cloud is not always feasible due to high network costs, unreliable network connectivity, long transfer times, and security concerns. The Data Transfer service overcomes these challenges and can significantly reduce the time that it takes to migrate data to the cloud. Data Transfer is available through either Disk or Appliance. The choice of one over the other is mostly dependent on the amount of data, with Data Transfer Appliance supporting larger data sets for each appliance.
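
A rough back-of-the-envelope calculation can help when deciding between a network transfer and an offline transfer. The sketch below estimates how long a data set takes to move over a link; the function name, the default utilization of 80 percent, and the example sizes are assumptions for illustration, and real throughput depends on many factors that are not modeled here.

```python
def transfer_days(data_terabytes: float, link_gbps: float, utilization: float = 0.8) -> float:
    """Estimate the days needed to move a data set over a network link.

    Uses decimal units: 1 TB = 10**12 bytes and 1 Gbps = 10**9 bits per second.
    `utilization` is the assumed fraction of the link bandwidth actually achieved.
    """
    if link_gbps <= 0 or not 0 < utilization <= 1:
        raise ValueError("link_gbps must be > 0 and utilization must be in (0, 1]")
    bits = data_terabytes * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * utilization)
    return seconds / 86_400


one_pb_over_1gbps = transfer_days(1000, 1, utilization=1.0)
one_pb_over_10gbps = transfer_days(1000, 10)  # default 80 percent utilization
```

Under these assumptions, 1 PB over a fully used 1 Gbps link takes about 92.6 days, and about 11.6 days over a 10 Gbps link at 80 percent utilization, which is why an offline option can be attractive for petabyte-scale data sets.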

- Streaming ingest

Streaming ingest is supported by using OCI-native services that allow real-time ingestion of large-scale data sets from a broad set of data producers. Streaming ingest persists and synchronizes the data in object storage, which is at the heart of the data lakehouse. Syncing data to object storage allows you to hold historical data that can be curated and further transformed to extract valuable insights.

- Oracle Cloud Infrastructure Streaming provides a fully-managed, scalable, and durable storage solution for ingesting continuous, high-volume streams of data that you can consume and process in real time. Streaming can be used for messaging, high-volume application logs, operational telemetry, web click-stream data, or other publish-subscribe messaging model use cases in which data is produced and processed continually and sequentially. Data is synced to Oracle Cloud Infrastructure Object Storage and can be curated and further transformed to extract valuable insights.

- Oracle Cloud Infrastructure Queue is a fully managed serverless service that helps decouple systems and enable asynchronous operations. Queue handles high-volume transactional data that requires independently processed messages without loss or duplication.

- Oracle Cloud Infrastructure Service Connector Hub is a cloud message bus platform that offers a single pane of glass for describing, executing, and monitoring the movement of data between services in Oracle Cloud Infrastructure. In this reference architecture, it moves data from Oracle Cloud Infrastructure Streaming or OCI Queue into Oracle Cloud Infrastructure Object Storage to persist the raw and prepared data in the data lakehouse persistence layer.
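
The pattern of persisting a stream into object storage can be sketched as a micro-batching sink. In this illustration a plain dictionary stands in for an object storage bucket, and the class name, object naming scheme, and message fields are hypothetical; the sketch does not use or represent the Service Connector Hub API. Messages are buffered, written as line-delimited JSON, and named by event hour so that later batch jobs can read one time partition at a time.

```python
import json
from datetime import datetime, timezone


class MicroBatchSink:
    """Buffers stream messages and writes them as line-delimited JSON objects."""

    def __init__(self, bucket: dict, batch_size: int = 3):
        self.bucket = bucket          # stand-in for an object storage bucket
        self.batch_size = batch_size  # flush after this many messages
        self._buffer = []
        self._sequence = 0

    def put(self, message: dict) -> None:
        self._buffer.append(message)
        if len(self._buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        if not self._buffer:
            return
        # Partition object names by the event hour (UTC) of the first buffered message.
        ts = datetime.fromtimestamp(self._buffer[0]["ts"], tz=timezone.utc)
        name = f"raw/{ts:%Y/%m/%d/%H}/part-{self._sequence:05d}.jsonl"
        self.bucket[name] = "\n".join(json.dumps(m, sort_keys=True) for m in self._buffer)
        self._sequence += 1
        self._buffer = []


bucket = {}
sink = MicroBatchSink(bucket, batch_size=2)
for i in range(5):
    sink.put({"ts": 1_700_000_000 + i, "device": "sensor-1", "value": i})
sink.flush()  # write the final partial batch
```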

- Streaming processing

Streaming processing enriches streaming data, detects event patterns, and creates a different set of streams that are persisted in the data lakehouse.

- Oracle Cloud Infrastructure GoldenGate Stream Analytics processes and analyzes large-scale, real-time information by using sophisticated correlation patterns, data enrichment, and machine learning. Users can explore real-time data through live charts, maps, and visualizations, and can graphically build streaming pipelines without any hand coding. These pipelines execute in a fully managed and scalable service to address critical real-time use cases of modern enterprises.

- Oracle Cloud Infrastructure Data Flow is a fully-managed big data service that lets you run Apache Spark and Spark Streaming applications without having to deploy or manage infrastructure. It lets you deliver big data and AI applications faster, because you can focus on your applications without having to manage operations. Data Flow applications are reusable templates that consist of a Spark application and its dependencies, default parameters, and a default run-time resource specification.
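
Event pattern detection over a stream, as described for streaming processing above, can be illustrated with a small sliding-window sketch. It is written in plain Python rather than with a streaming engine; the function name, thresholds, and event tuples are hypothetical. It emits an alert when the same device produces several high readings within a time window.

```python
from collections import defaultdict, deque


def detect_bursts(events, threshold=90.0, count=3, window_seconds=60):
    """Yield an alert when `count` readings above `threshold` from the same
    device fall within `window_seconds`. Events are (timestamp, device, value)
    tuples and are assumed to arrive in timestamp order."""
    recent = defaultdict(deque)  # device -> timestamps of recent high readings
    for ts, device, value in events:
        if value <= threshold:
            continue
        window = recent[device]
        window.append(ts)
        # Evict readings that have fallen out of the sliding window.
        while window and ts - window[0] > window_seconds:
            window.popleft()
        if len(window) >= count:
            yield {"device": device, "start": window[0], "end": ts}
            window.clear()  # avoid re-alerting on the same readings


# Hypothetical sensor readings: (seconds, device, value).
READINGS = [
    (0, "a", 95.0), (10, "b", 99.0), (20, "a", 50.0), (30, "a", 96.0),
    (50, "a", 97.0), (200, "b", 99.0), (210, "b", 98.0),
]
alerts = list(detect_bursts(READINGS))
```

Device `b` never triggers an alert here because its high readings are too far apart to fall in the same window. The alerts themselves form a new, derived stream that could be persisted alongside the raw data.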

- Open source ecosystem

You can use the open source ecosystem:

- For batch and stream processing leveraging several popular open source engines such as Hadoop, Spark, Flink or Trino

- With Oracle Cloud Infrastructure Streaming both as a producer and as a consumer

- With Oracle Cloud Infrastructure Object Storage where it can both persist data and consume data

You can use Oracle Cloud Infrastructure Object Storage as a data lake to persist data sets that you want to share between the different Oracle Cloud Infrastructure services at different times.

Big Data Service provisions fully configured, secure, highly available and dedicated Hadoop, Spark or Flink clusters among other technologies, on demand. Scale the cluster to fit your big data and analytics workloads by using a range of Oracle Cloud Infrastructure compute shapes that support everything from small test and development clusters to large production clusters. Adjust quickly to business demand and optimize costs by leveraging autoscaling configurations whether based on metrics or on schedule. Leverage cluster profiles to create optimal clusters for a specific workload or technology.

- Batch processing

Batch processing transforms large-scale data sets stored in the data lakehouse. It leverages Oracle Cloud Infrastructure native services that integrate seamlessly with Oracle Cloud Infrastructure Object Storage and allows you to create curated data for use cases such as data aggregation and enrichment, data warehouse ingestion, and machine learning and AI data use at scale.

- Oracle Cloud Infrastructure Data Integration, described above, is a fully-managed, serverless, cloud-native service that extracts, loads, transforms, cleanses, and reshapes data from a variety of data sources into target Oracle Cloud Infrastructure services, such as Autonomous Data Warehouse and Oracle Cloud Infrastructure Object Storage.

- Oracle Cloud Infrastructure Data Flow is a fully-managed big data service that lets you run Apache Spark and Spark Streaming applications without having to deploy or manage infrastructure. It lets you deliver big data and AI applications faster, because you can focus on your applications without having to manage operations. Data Flow applications are reusable templates that consist of a Spark application and its dependencies, default parameters, and a default run-time resource specification.

- Oracle Data Transforms enables extract-load-transform (ELT) for selected supported technologies, simplifying the configuration and execution of data pipelines by using a web user interface that allows users to declaratively build and schedule data flows and workflows. Oracle Data Transforms is available as a fully-managed environment within Oracle Autonomous Data Warehouse (ADW) to load and transform data from several data sources into an ADW instance.

Depending on the use case, these components can be used independently or together to achieve highly flexible and performant data processing.

- Serving

Oracle Autonomous Data Warehouse is a self-driving, self-securing, self-repairing database service that is optimized for data warehousing workloads. You do not need to configure or manage any hardware, or install any software. Oracle Cloud Infrastructure handles creating, backing up, patching, upgrading, and tuning the database.

After provisioning, you can scale the number of CPU cores or the storage capacity of the database at any time without impacting availability or performance.

Oracle Autonomous Data Warehouse can also virtualize data that resides in object storage as external and hybrid partitioned tables so that you can join and consume data derived from other sources with the warehouse data. You can also move historical data from the warehouse into object storage and then consume it seamlessly by using hybrid partitioned tables.

Oracle Autonomous Data Warehouse can use previously harvested metadata stored in the Data Catalog to create external tables, and can automatically synchronize metadata updates in the Data Catalog with the external tables definition to maintain consistency, simplify management, and reduce effort.

Autonomous Database supports vectors alongside its other data types, namely relational, JSON, spatial, and graph, because it is a multimodel database. The vector data type allows you to load and store vector embeddings and to create vector indexes that can then be used for retrieval-augmented generation (RAG) applications, all in a single Autonomous Data Warehouse instance. This multimodel capability enables analytics in which all data types can be joined in a single query, which reduces the complexity and risk of maintaining specialized, siloed databases for each data type while increasing security, reliability, scalability, and ease of analysis across all data.
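
The retrieval step of a RAG application can be illustrated with a minimal similarity search over embeddings. This sketch performs an exact, brute-force cosine similarity search in plain Python; it is not how Autonomous Database implements vector search, and a vector index typically trades some exactness for speed. The three-dimensional embeddings and document names are hypothetical, chosen only to keep the example readable.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query, documents, k=2):
    """Return the k (doc_id, score) pairs most similar to the query vector."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in documents.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]


# Hypothetical document embeddings; real embeddings have hundreds of dimensions.
EMBEDDINGS = {
    "invoice-policy": [0.9, 0.1, 0.0],
    "travel-policy": [0.1, 0.9, 0.1],
    "holiday-calendar": [0.0, 0.2, 0.9],
}
hits = top_k([1.0, 0.2, 0.0], EMBEDDINGS, k=2)
```

In a RAG flow, the text of the top-ranked documents would then be added to the prompt that is sent to the language model.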

Select AI, an Autonomous Database feature, allows you to query data using natural language by using LLMs to convert a user's input text into Oracle SQL. Select AI processes the natural language prompt, supplements the prompt with metadata, and then generates and runs a SQL query.

Data Sharing, an Autonomous Database feature, lets you securely provide data and metadata to, and consume them from, other parties that use Autonomous Database or a Delta Sharing-compliant technology. Data Sharing makes it easy to consume data from share providers as views that abstract the underlying shared tables. In addition, live shares, which allow recipients to consume live and fresh data, can be used when both the provider and the recipient use Autonomous Database.

Analytic views, an Autonomous Database feature, provide a fast and efficient way to create analytic queries of data stored in existing database tables and views. Analytic views organize data using a dimensional model. They allow you to easily add aggregations and calculations to data sets and to present data in views that can be queried with relatively simple SQL. This feature allows you to semantically model a star or snowflake schema directly in ADW, using data stored internally and externally, and allows consumption of the model by using SQL and any SQL compliant data consumer.

In addition, Autonomous Data Lake Accelerator, a component of Autonomous Database, can seamlessly consume object storage data, scale processing to deliver fast queries, autoscale the database compute instance when needed, and reduce the impact on the database workload by isolating object storage queries from the database compute instance.

- Cloud storage

Oracle Cloud Infrastructure Object Storage is an internet-scale, high-performance storage platform that offers reliable and cost-efficient data durability. Oracle Cloud Infrastructure Object Storage can store an unlimited amount of unstructured data of any content type, including analytic data. You can safely and securely store or retrieve data directly from the internet or from within the cloud platform. Multiple management interfaces let you easily start small and scale seamlessly, without experiencing any degradation in performance or service reliability.

Oracle Cloud Infrastructure Object Storage can also be used as a cold storage layer for the data warehouse by storing data that is used infrequently and then joining it seamlessly with the most recent data by using hybrid tables in Oracle Autonomous Data Warehouse.
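
The idea of querying recent warehouse data together with colder data held in object storage can be sketched as follows. The sketch uses `sqlite3` as a stand-in for the warehouse and a dictionary of CSV text as a stand-in for object storage; the table names, object name, and rows are hypothetical. Unlike a real hybrid partitioned table, which reads the external data in place, this illustration copies the cold rows into a temporary table so that one SQL statement can span both tiers.

```python
import csv
import io
import sqlite3

# Cold tier: older partitions kept as CSV text, keyed by a hypothetical object name.
COLD_OBJECTS = {
    "sales/year=2022/data.csv": "sale_date,amount\n2022-03-01,100.0\n2022-11-15,250.0\n",
}


def read_cold_rows(objects):
    """Parse every cold CSV object into (sale_date, amount) tuples."""
    for body in objects.values():
        for record in csv.DictReader(io.StringIO(body)):
            yield record["sale_date"], float(record["amount"])


def yearly_totals(conn, objects):
    """Combine hot rows in the database with cold rows from object storage."""
    # Expose cold data to SQL through a temporary table, then query both tiers as one.
    conn.execute("CREATE TEMP TABLE cold_sales (sale_date TEXT, amount REAL)")
    conn.executemany("INSERT INTO cold_sales VALUES (?, ?)", read_cold_rows(objects))
    query = """
        SELECT substr(sale_date, 1, 4) AS year, SUM(amount)
        FROM (SELECT * FROM hot_sales UNION ALL SELECT * FROM cold_sales)
        GROUP BY year ORDER BY year
    """
    return conn.execute(query).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE hot_sales (sale_date TEXT, amount REAL)")
conn.executemany("INSERT INTO hot_sales VALUES (?, ?)",
                 [("2024-01-05", 75.0), ("2024-02-10", 25.0)])
totals = yearly_totals(conn, COLD_OBJECTS)
```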

Granular, object-level access control can be enforced by using IAM policies, which increases data security for direct access to the data lake.

- Visualize and learn

Oracle Analytics Cloud is a scalable and secure public cloud service that provides a full set of capabilities to explore and perform collaborative analytics for you, your workgroup, and your enterprise. It supports citizen data scientists and advanced business analysts in training and executing machine learning (ML) models. Machine learning models can be executed on the analytics service or directly on Oracle Autonomous Data Warehouse as OML-embedded models for large-scale batch predictions that leverage the processing power, scalability, and elasticity of the warehouse and OCI AI services, such as Oracle Cloud Infrastructure Vision.

With Oracle Analytics Cloud you also get flexible service management capabilities, including fast setup, easy scaling and patching, and automated lifecycle management.

- Learn and predict

- Data Science provides infrastructure, open source technologies, libraries, packages, and data science tools for data science teams to build, train, and manage machine learning (ML) models in Oracle Cloud Infrastructure. The collaborative and project-driven workspace provides an end-to-end cohesive user experience and supports the lifecycle of predictive models. Data Science enables data scientists and machine learning engineers to download and install packages directly from the Anaconda Repository at no cost, allowing them to innovate on their projects with a curated data science ecosystem of machine learning libraries.

The Data Science Jobs feature enables data scientists to define and run repeatable machine learning tasks on a fully-managed infrastructure.

The Data Science Model Deployment feature allows data scientists to deploy trained models as fully-managed HTTP endpoints that can provide predictions in real time, infusing intelligence into processes and applications, and allowing the business to react to relevant events as they occur.

- Oracle Machine Learning provides powerful machine learning capabilities tightly integrated in Autonomous Database, with support for Python and AutoML. It supports models using open source and scalable, in-database algorithms that reduce data preparation and movement. AutoML helps data scientists speed up time to value of the company’s machine learning initiatives by using auto algorithm selection, adaptive data sampling, auto feature selection, and auto model tuning. With Oracle Machine Learning services available in Oracle Autonomous Data Warehouse, you can not only manage models but also deploy those models as REST endpoints to democratize real-time predictions within the company, allowing the business to react to relevant events as they occur rather than after the fact.

- AI and Generative AI services

Oracle Cloud Infrastructure AI services provide a set of ready-to-consume AI services that can be used to support a range of use cases from text analysis to predictive maintenance. These services have prebuilt, finely tuned models that you can integrate into data pipelines, analytics, and applications by using APIs.

- Oracle Cloud Infrastructure Language performs sophisticated text analytics and translations at scale. With pretrained and custom models, developers can process unstructured text and extract insights without data science expertise. Perform sentiment analysis, key phrase extraction, text classification, named entity recognition and detect PII data in text. Tailor models for domain-specific tasks and effortlessly translate text across various languages. Oracle Cloud Infrastructure Language also supports document translation and asynchronous jobs for efficiently processing large-volume workloads.

- Oracle Cloud Infrastructure Speech harnesses the power of spoken language by allowing you to easily convert media files containing human speech into highly accurate text transcriptions. OCI Speech can be used to transcribe customer service calls, automate subtitling, and generate metadata for media assets to create a fully searchable archive. OCI Speech supports batch and live transcribe jobs.

- OCI Vision performs image recognition and video analysis tasks such as classifying images, detecting objects and faces, and extracting text. You can either leverage pretrained models or easily create custom vision models for industry- and customer-specific scenarios. OCI Vision is a fully-managed, multitenant, native cloud service that helps with all common computer vision tasks.

- Oracle Cloud Infrastructure Document Understanding performs document classification and document analysis tasks such as extracting text, key values and tables. OCI Document Understanding service is a fully-managed, multitenant, native cloud service that helps with all common document analysis tasks.

- Oracle Cloud Infrastructure Generative AI is a fully managed service that provides a set of state-of-the-art, customizable large language models (LLMs) that cover a wide range of use cases, including chat, text generation, summarization, and creating text embeddings. Use the playground to try out the ready-to-use pretrained models or create and host your own fine-tuned custom models based on your own data on dedicated AI clusters.

- Data Enrichment

Data enrichment can improve the data that is used to train machine learning models to achieve better and more accurate prediction outcomes.

Oracle Cloud Infrastructure Data Labeling allows you to create and browse data sets, view data records (text or images), and apply labels for the purposes of building AI/ML models. The service also provides interactive user interfaces designed to aid in the labeling process. After records are labeled, the data set can be exported as line-delimited JSON for use in AI/ML model development.

- Search

Search capabilities can be used as a complementary function to expose data to end users that require operational analytics data that is preindexed and is therefore served with low latency.

Oracle Cloud Infrastructure Search with OpenSearch is a distributed, fully-managed, maintenance-free, full-text search engine. OpenSearch lets you store, search, and analyze large volumes of data quickly with fast response times. The service supports open source OpenSearch APIs and OpenSearch Dashboards data visualization.

- Streaming analytics

Streaming analytics provides dashboards for real-time analysis of streamed data, contextualized with curated and master data stored in the data lakehouse, to detect patterns of interest that it can then serve to users, applications, and things.

Oracle Cloud Infrastructure GoldenGate Stream Analytics processes and analyzes large-scale, real-time information by using sophisticated correlation patterns, data enrichment, and machine learning. Users can explore real-time data through live charts, maps, and visualizations, and can graphically build streaming pipelines without any hand coding. These pipelines execute in a fully managed and scalable service to address critical real-time use cases of modern enterprises.

- Reverse ETL/Writeback

Reverse ETL, sometimes referred to as writeback, enables data activation in operational systems and devices, allowing you to infuse intelligence derived from data directly into the applications and devices that support business processes.

Data is served to consumers through several mechanisms: streams and queues, which support a large set of consumers concurrently pulling information in near real time and which are decoupled from the streaming analytics system to increase resiliency and scalability; application or data integration, which pushes data through prebuilt adapters; and serverless functions, which can invoke virtually any application or device endpoint.

- Oracle Cloud Infrastructure Streaming service provides a fully-managed, scalable, and durable storage solution for ingesting continuous, high-volume streams of data that you can consume and process in real time. Streaming can be used for messaging, high-volume application logs, operational telemetry, web click-stream data, or other publish-subscribe messaging model use cases in which data is produced and processed continually and sequentially.

- Oracle Cloud Infrastructure Queue is a fully managed serverless service that helps decouple systems and enable asynchronous operations. Queue handles high-volume transactional data that requires independently processed messages without loss or duplication.

- Oracle Integration Cloud is a fully managed, preconfigured environment that allows integrating cloud and on-premises applications, automating business processes, developing visual applications, using an SFTP-compliant file server to store and retrieve files, and exchanging business documents with a B2B trading partner using a portfolio of hundreds of adapters and recipes to connect with Oracle and third-party applications.

- Oracle Data Transforms enables ELT for selected supported technologies, simplifying the configuration and execution of data pipelines by using a web user interface that allows users to declaratively build and schedule data flows and workflows. Oracle Data Transforms is available as a fully-managed environment within Oracle Autonomous Data Warehouse (ADW) to load and transform data from several data sources into an ADW instance.

-

Oracle Cloud Infrastructure Functions is a fully-managed, multitenant, highly-scalable, on-demand, functions-as-a-service platform. It is built on enterprise-grade Oracle Cloud Infrastructure and powered by the Fn Project open source engine.

- API

The API layer allows you to infuse the intelligence derived from Data Science and Oracle Machine Learning into applications, business processes, and things to influence and improve their operation and function. The API layer provides secure consumption of the Data Science-deployed models to Oracle Machine Learning REST endpoints and the ability to govern the system to ensure the availability of run-time environments. You can also leverage functions to perform additional logic as needed.

-

Oracle Cloud Infrastructure API Gateway enables you to publish APIs with private endpoints that are accessible from within your network, and that you can expose with public IP addresses if you want them to accept internet traffic. The endpoints support API validation, request and response transformation, CORS, authentication and authorization, and request limiting. It allows API observability to monitor usage and guarantee SLAs. Usage plans can also be used to monitor and manage the API consumers and API clients that access APIs and to set up different access tiers for different customers in order to track data usage that is consumed by using APIs. Usage plans are a key feature to support data monetization.

-

Oracle Cloud Infrastructure Functions is a fully-managed, multitenant, highly-scalable, on-demand, functions-as-a-service platform. It is built on enterprise-grade Oracle Cloud Infrastructure and powered by the Fn Project open source engine.

-

Oracle REST Data Services (ORDS) is a Java application that enables developers with SQL and database skills to develop REST APIs for Oracle Database. Any application developer can use these APIs from any language environment, without installing and maintaining client drivers, in the same way that they access other external services using REST, the most widely used API technology. ORDS is deployed as a fully-managed feature in ADW and can be used to expose lakehouse information to data consumers through APIs.
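As a minimal sketch of how a data consumer might query a REST-enabled object without a database driver, the following Python helper builds a filtered request URL. The host, schema alias, and object alias are hypothetical, and the `q` and `limit` parameters assume the ORDS query-filter syntax for REST-enabled tables and views; confirm the exact syntax against the ORDS documentation for your version.

```python
import json
from urllib.parse import parse_qs, urlencode, urlsplit


def build_ords_query_url(base_url, schema_alias, object_alias, filters, limit=25):
    """Build a GET URL for a REST-enabled table or view.

    Every name passed in is a placeholder chosen for illustration.
    """
    query = urlencode({
        # The filter travels as a compact JSON document in the `q` parameter.
        "q": json.dumps(filters, separators=(",", ":")),
        "limit": limit,
    })
    return f"{base_url.rstrip('/')}/ords/{schema_alias}/{object_alias}/?{query}"


if __name__ == "__main__":
    url = build_ords_query_url(
        "https://adw.example.com",      # hypothetical host
        "lakehouse",                    # hypothetical schema alias
        "sales_gold",                   # hypothetical view alias
        {"region": {"$eq": "EMEA"}},
    )
    print(url)
    # Decode the filter again to show what the server would receive.
    print(parse_qs(urlsplit(url).query)["q"][0])
```

The helper only builds the URL; authentication and the HTTP call itself are left to whatever client the consumer already uses.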

- Data Governance

Oracle Cloud Infrastructure Data Catalog provides visibility to where technical assets such as metadata and respective attributes reside and offers the ability to maintain a business glossary that is mapped to that technical metadata. Data Catalog can also serve metadata to Oracle Autonomous Data Warehouse to facilitate external table creation in the data warehouse.

- Data Security

Data security is crucial in exploring and using lakehouse data to the fullest extent. By leveraging a zero-trust security model with defense-in-depth and role-based access control (RBAC) capabilities, and by ensuring compliance with the most stringent regulations, data security provides preventive, detective, and corrective security controls that help prevent data exfiltration and breaches.

-

Oracle Data Safe is a fully-integrated Oracle Cloud service focused on data security. It provides a complete and integrated set of features for protecting sensitive and regulated data in Oracle Cloud databases, such as Oracle Autonomous Data Warehouse. Features include security assessment, user assessment, data discovery, data masking, and activity auditing.

-

Oracle Cloud Infrastructure Audit provides visibility into activities related to Oracle Cloud Infrastructure (OCI) resources and tenancies. Audit log events can be used for security audits to track usage of and changes to OCI resources and to help ensure compliance with standards and regulations.

-

Oracle Cloud Infrastructure Logging provides a highly-scalable and fully-managed single interface for all the logs in the tenancy, including audit logs. Use OCI Logging to access logs from all OCI resources so that you can enable, manage, and search them.

-

Oracle Cloud Infrastructure Vault is an encryption management service that stores and manages encryption keys and secrets to securely access resources. It enables customer-managed keys to be used for Oracle Autonomous Data Warehouse and data lake encryption for increased protection of data at rest. It also stores service and user credentials as secrets, which improves your security posture and helps ensure that credentials aren't compromised or used inappropriately.

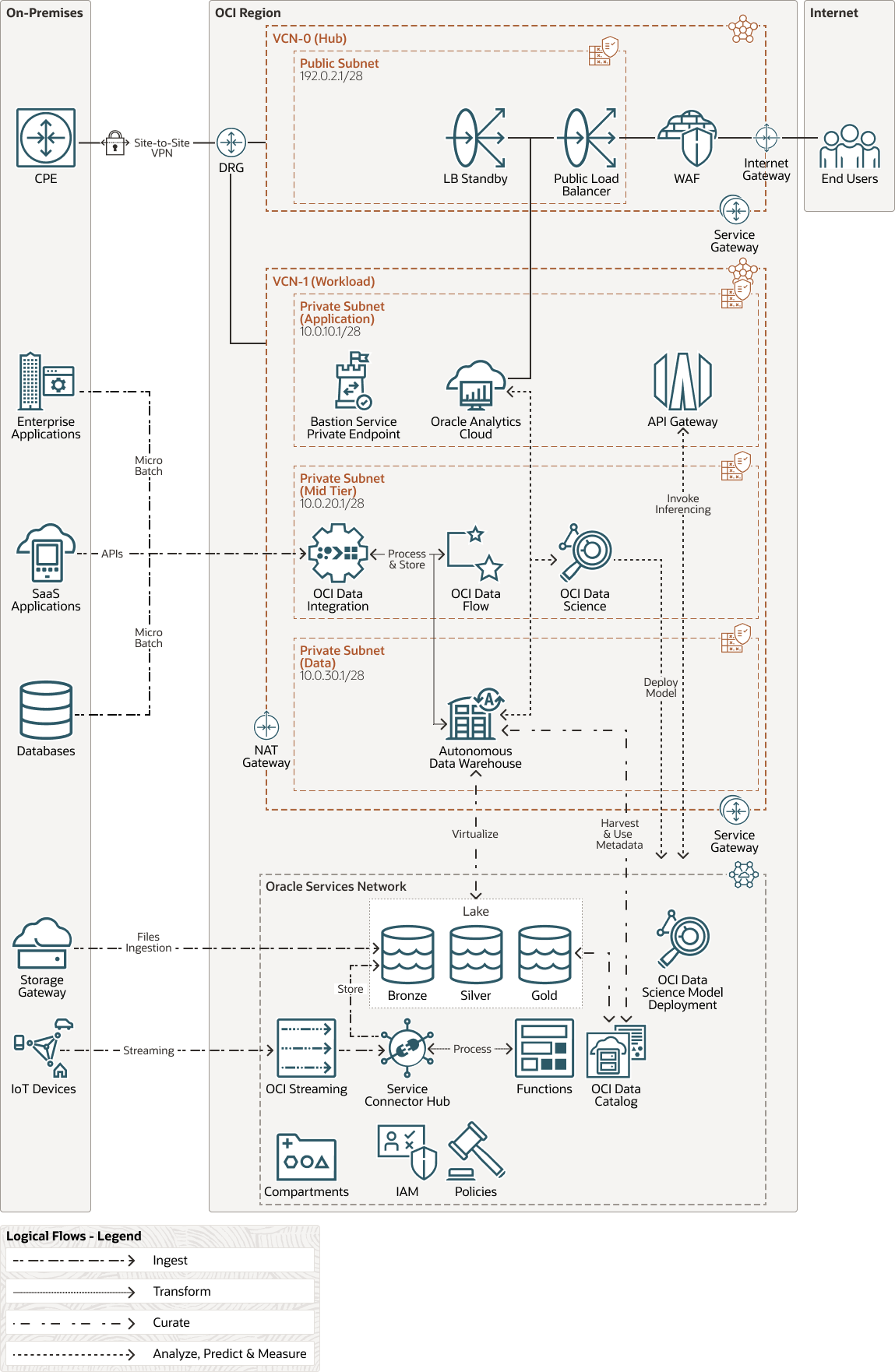

Physical Architecture

The physical architecture for this data lakehouse supports the following:

- Data is ingested securely by using micro batch, streaming, APIs, and files from relational and non-relational data sources

- Data is processed leveraging a combination of Oracle Cloud Infrastructure Data Integration and Oracle Cloud Infrastructure Data Flow

- Data is stored in Oracle Autonomous Data Warehouse and Oracle Cloud Infrastructure Object Storage and is organized according to its quality and value

- Oracle Autonomous Data Warehouse serves warehouse and lake data services securely to consumers

- Oracle Analytics Cloud surfaces data to business users by using visualizations

- Oracle Analytics Cloud is exposed by using Oracle Cloud Infrastructure Load Balancing that is secured by Oracle Cloud Infrastructure Web Application Firewall (WAF) to provide access by using the internet

- Oracle Cloud Infrastructure Data Science is used to build, train, and deploy machine learning (ML) models

- Oracle Cloud Infrastructure API Gateway is leveraged to govern the Data Science ML model deployments

- Oracle Cloud Infrastructure Data Catalog harvests metadata from Oracle Autonomous Data Warehouse and object storage

- Oracle Data Safe evaluates risks to data, implements and monitors security controls, assesses user security, monitors user activity, and addresses data security compliance requirements

- Oracle Cloud Infrastructure Bastion is used by administrators to manage private cloud resources

The following diagram illustrates this reference architecture.

lakehouse-architecture-oracle.zip

The design for the physical architecture:

- Leverages 2 VCNs, one for hub and another for the workload itself

- On-premises connectivity leverages both Oracle Cloud Infrastructure FastConnect and site-to-site VPN for redundancy

- All incoming traffic from on premises and from the internet is first routed into the hub VCN and then into the workload VCN

- All data is secure in transit and at rest

- Services are deployed with private endpoints to increase the security posture

- The VCN is segregated into several private subnets to increase the security posture

- Lake data is segregated into several buckets in object storage leveraging a medallion architecture

Potential design improvements not depicted on this deployment for simplicity's sake include:

- Leveraging a full CIS-compliant landing zone

- Leveraging a network firewall to improve the overall security posture by inspecting all traffic and by enforcing policies

Recommendations

Use the following recommendations as a starting point to process streaming data and a broad range of enterprise data resources for business analysis and machine learning.

Your requirements might differ from the architecture described here.

- Oracle Autonomous Data Warehouse

This architecture uses Oracle Autonomous Data Warehouse on shared infrastructure.

- Enable auto scaling to give the database workloads up to three times the processing power.

- Consider using Oracle Autonomous Data Warehouse on dedicated infrastructure if you want the self-service database capability within a private database cloud environment running on the public cloud.

- Consider using the hybrid partitioned tables feature of Autonomous Data Warehouse to move partitions of data to Oracle Cloud Infrastructure Object Storage and serve them to users and applications transparently. We recommend that you use this feature for data that is not often consumed and for which you don't need the same performance as for data stored within Autonomous Data Warehouse.

- Consider using the external tables feature to consume data stored in Oracle Cloud Infrastructure Object Storage in real time without the need to replicate it to Autonomous Data Warehouse. This feature transparently and seamlessly joins data sets curated outside of Autonomous Data Warehouse, regardless of the format (Parquet, Avro, ORC, JSON, CSV, and so on), with data residing in Autonomous Data Warehouse.

- Consider using the database in-memory feature to significantly improve performance for real-time analytics and mixed workloads. Load into memory the lakehouse data that needs to be served with low latency, whether it resides in ADW internal, hybrid partitioned, or external tables.

- Consider using Autonomous Data Lake Accelerator when consuming object storage data to deliver an improved and faster experience to users consuming and joining data between the data warehouse and the data lake.

- Consider storing vector embeddings in Autonomous Data Warehouse alongside other data types such as relational data or JSON data to simplify data engineering and analytics on all data, and efficiently ground RAG agents using all data.

- Consider using Select AI as an accelerator to create simple and complex SQL that can be used in data engineering, business intelligence, application development or any task that needs SQL to be created.

- Consider using Select AI with low code applications to further simplify the application layer.

- Consider using Analytic Views to semantically model the underlying DW star or snowflake schema directly in ADW. Granular data is then aggregated automatically without the need to preaggregate it. The semantic model is consumed through SQL by any SQL-compliant client, including Oracle Analytics Cloud, so facts and KPIs are served consistently regardless of the client. The semantic model can use all data regardless of whether it is stored in ADW or in Object Storage, which makes this feature a good semantic modeling layer for a lakehouse architecture in which facts and dimensions can span both the DW and the lake.

- Consider using Customer Managed Keys leveraging the Vault service if full control of ADW encryption keys is needed due to company or regulatory policies.

- Consider using Database Vault in ADW to prevent unauthorized privileged users from accessing sensitive data and thus prevent data exfiltration and data breaches.

- Consider using Autonomous Data Guard to support a business continuity plan by keeping data replicated on a standby instance, either in the same region or in another region.

- Consider using dynamic data masking with Data Redaction to serve masked data to users depending on their role. This guarantees appropriate data access without the need for data duplication and static masking.

- Consider using ADW clones to quickly create other transient or non transient environments. Use refreshable clones if the target environment needs to have up-to-date data. Use Oracle Data Safe to statically mask sensitive data in the clones for increased security.

- Consider using Data Sharing as a secure and easy way to consume and provide data, either with other Autonomous Database instances or with any Delta Sharing-compliant technology.

- Consider using live data sharing between Autonomous Database instances to consume and provide data in real time.

- Consider using versioned data sharing to share data with consumers. This avoids the cost of querying the data, as data is processed by consumers and not by the provider.

- Consider using pre-authenticated request URLs for read-only, time-bound data access on ADW to enable sharing non-sensitive data for use cases where the consumer doesn't support Delta Sharing.
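To make the external tables recommendation above more concrete, the following sketch generates the PL/SQL call that would register a set of Parquet, Avro, or ORC files in Object Storage as an external table. It assumes the `DBMS_CLOUD.CREATE_EXTERNAL_TABLE` procedure with its `table_name`, `credential_name`, `file_uri_list`, and `format` parameters; the table name, credential name, and URI in the usage example are hypothetical. The helper only builds text, so it can be reviewed before anything is run against a database.

```python
import json
import re

# Conservative check for simple, unquoted SQL identifiers.
_IDENTIFIER = re.compile(r"^[A-Za-z][A-Za-z0-9_$#]*$")
# Self-describing formats for which the schema can be read from the files.
_SELF_DESCRIBING = {"parquet", "avro", "orc"}


def external_table_plsql(table_name, credential_name, file_uri, file_type="parquet"):
    """Return an anonymous PL/SQL block that creates an external table."""
    for label, value in (("table_name", table_name),
                         ("credential_name", credential_name)):
        if not _IDENTIFIER.match(value):
            raise ValueError(f"{label} is not a simple SQL identifier: {value!r}")
    if "'" in file_uri:
        raise ValueError("file_uri must not contain single quotes")
    if file_type not in _SELF_DESCRIBING:
        raise ValueError(f"unsupported file_type for this sketch: {file_type!r}")
    # "schema": "first" asks the database to derive columns from the first file.
    fmt = json.dumps({"type": file_type, "schema": "first"})
    return (
        "BEGIN\n"
        "  DBMS_CLOUD.CREATE_EXTERNAL_TABLE(\n"
        f"    table_name      => '{table_name}',\n"
        f"    credential_name => '{credential_name}',\n"
        f"    file_uri_list   => '{file_uri}',\n"
        f"    format          => '{fmt}'\n"
        "  );\n"
        "END;"
    )


if __name__ == "__main__":
    print(external_table_plsql(
        "SALES_GOLD_EXT",          # hypothetical table name
        "OBJ_STORE_CRED",          # hypothetical credential name
        "https://objectstorage.example.com/n/ns/b/gold/o/sales/*.parquet",
    ))
```

Once such a table exists, it can be joined with internal ADW tables in ordinary SQL, which is the behavior the recommendation describes.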

- Object Storage/Data Lake

This architecture uses Oracle Cloud Infrastructure Object Storage, a highly scalable and durable cloud storage service, as the lake storage.

- Consider organizing your lake across different sets of buckets leveraging a medallion architecture (bronze, silver, gold) or other partitioning logic to segregate data based on its quality and enrichment, enforce fine-grained security for consumers reading the data, and apply different lifecycle management policies to the different tiers.

- Consider using different object storage tiers and lifecycle policies to optimize costs of storing lake data at scale.

- Consider using Customer Managed Keys leveraging the Vault service if full control of Object Storage encryption keys is needed due to company or regulatory policies.

- Consider using Object Storage replication to support a business continuity plan by setting up bucket replication to another region. Because Object Storage is highly durable and maintains several copies of the same object within a single region, bucket replication is not needed for recovery within the same region.

- Consider using Oracle Cloud Infrastructure Identity and Access Management (IAM) policies for objects, based on object names or patterns, to increase security for direct access to the data lake.

- Consider using private endpoints in Oracle Cloud Infrastructure Object Storage to ensure secure and private access to the data lake from the data platform VCN.

- Consider using network sources, and IAM policies that refer to them, to manage the IP addresses that are authorized to access the data lake buckets and objects.

- Consider using OCIFS, a Python-based utility, to mount Oracle Cloud Infrastructure Object Storage buckets as file systems, enabling support for applications that only work with NFS and need to upload files to object storage.
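The medallion and IAM recommendations above can be combined: each consumer group is granted read access only to the tiers, and optionally the object prefixes, that it needs. The sketch below generates candidate policy statements as text. The group, compartment, and bucket names are hypothetical, and the `target.bucket.name` and `target.object.name` conditions are written as assumed from the IAM policy reference; verify the condition syntax before applying any generated statement.

```python
def tier_read_policies(group, compartment, buckets, object_prefix=None):
    """Return one read-only policy statement per bucket.

    `buckets` is an iterable of bucket names (for example, one per tier).
    `object_prefix`, if given, narrows access to objects under that prefix.
    """
    statements = []
    for bucket in buckets:
        conditions = [f"target.bucket.name='{bucket}'"]
        if object_prefix:
            # Assumed pattern form: a trailing * matches any object name suffix.
            conditions.append(f"target.object.name='{object_prefix}*'")
        if len(conditions) == 1:
            where = conditions[0]
        else:
            where = "all {" + ", ".join(conditions) + "}"
        statements.append(
            f"Allow group {group} to read objects "
            f"in compartment {compartment} where {where}"
        )
    return statements


if __name__ == "__main__":
    # Hypothetical names: analysts may read only curated sales data in gold.
    for line in tier_read_policies("Analysts", "lakehouse", ["lake-gold"], "sales/"):
        print(line)
```

Generating the statements from one function keeps the naming convention for tiers and prefixes in a single place, which helps when many teams are onboarded.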

- Oracle Machine Learning and Oracle Cloud Infrastructure Data Science

This architecture leverages Oracle Machine Learning and Oracle Cloud Infrastructure Data Science to run and deliver predictions in real time to people and applications.

- Consider using AutoML in OCI Data Science or Oracle Machine Learning to speed up ML model development.

- Consider using Open Neural Network Exchange (ONNX) for interoperability. Third-party ONNX models can be deployed either into OML and exposed as a REST endpoint or into OCI Data Science and exposed as an HTTP endpoint.

- Consider saving the model in OCI Data Science as ONNX and importing it into OCI GoldenGate Stream Analytics if you need to run scoring and prediction in a real-time data pipeline, so that more timely predictions can drive real-time business outcomes.

- Consider using OCI Data Science Conda environments for better management and packaging of Python dependencies inside Jupyter notebook sessions. Leverage the Anaconda curated repository of packages within OCI Data Science to use your favorite open-source tools to build, train, and deploy models.

- Consider using Oracle Cloud Infrastructure Data Science AI Quick Actions to deploy, evaluate, and fine-tune foundation models in OCI Data Science. Work with curated, open source LLMs available in the model explorer or bring your own model.

- Consider using Data Science low code AI Operators, available in the Accelerated Data Science Python package, to quickly and efficiently perform forecasts, anomaly detection, or to build recommender functionality.

- Consider using OCI Data Flow within the Data Science Jupyter environment to perform Exploratory Data Analysis, data profiling and data preparation at scale leveraging Spark scale out processing.

- Consider using Data Labeling to label data such as images, text, or documents, and use the labeled data to train ML models built on OCI Data Science or OCI AI Services, thus improving the accuracy of predictions.

- Consider deploying an API Gateway to secure and govern the consumption of the deployed model if real-time predictions are being consumed by partners and external entities.
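As a rough illustration of the last recommendation, an external consumer would call the gateway route rather than the model deployment itself. The sketch below only constructs the HTTP request. The gateway URL, the `{"data": ...}` payload shape, and the bearer-token header are all assumptions made for illustration; the real payload depends on how the model was deployed, and the authentication scheme depends on how the gateway is configured.

```python
import json
import urllib.request


def build_predict_request(gateway_url, features, token):
    """Build (but do not send) a JSON POST request for a scoring route."""
    # Hypothetical payload shape: a list of feature rows under "data".
    body = json.dumps({"data": features}).encode("utf-8")
    return urllib.request.Request(
        gateway_url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumed token-based authentication enforced at the gateway.
            "Authorization": f"Bearer {token}",
        },
    )


if __name__ == "__main__":
    request = build_predict_request(
        "https://gateway.example.com/ml/v1/predict",  # hypothetical route
        [[5.1, 3.5, 1.4, 0.2]],
        "example-token",
    )
    print(request.get_method(), request.full_url)
```

Keeping consumers on the gateway route means the model deployment behind it can be replaced or versioned without changing the URL that partners use.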

- Oracle Cloud Infrastructure Data Integration

This architecture uses Oracle Cloud Infrastructure Data Integration to support declarative and no-code or low-code ETL and data pipeline development.

- Leverage Oracle Cloud Infrastructure Data Integration to coordinate and schedule Oracle Cloud Infrastructure Data Flow application runs so that you can mix and match declarative ETL with custom Spark code logic. Use functions from within Oracle Cloud Infrastructure Data Integration to further extend the capabilities of data pipelines.

- Consider using SQL pushdown for transformations that have ADW as the target, so that you use an ELT approach that is more efficient, performant, and secure than ETL.

- Consider allowing OCI Data Integration to handle schema drift in data sources so that data pipelines are more resilient and future-proof and can withstand schema changes in those sources.
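The idea behind a drift-tolerant pipeline can be sketched outside of any tool: transformations are bound to name patterns rather than to a fixed column list, so a column that appears later is still handled, and unknown columns pass through untouched. This is a conceptual illustration only and does not describe how OCI Data Integration implements the feature; the rule patterns and field names are hypothetical.

```python
import re


def transform_record(record, rules):
    """Apply the first matching rule to each field; pass other fields through.

    `rules` is a list of (compiled_pattern, function) pairs.
    """
    result = {}
    for name, value in record.items():
        for pattern, func in rules:
            if pattern.search(name):
                value = func(value)
                break
        result[name] = value
    return result


# Hypothetical rules: cast any amount_* field to float, trim any *_name field.
RULES = [
    (re.compile(r"^amount_"), float),
    (re.compile(r"_name$"), str.strip),
]

if __name__ == "__main__":
    original = {"id": 7, "amount_net": "10.5", "customer_name": " Ana "}
    # The source later adds amount_tax; no pipeline change is required.
    drifted = dict(original, amount_tax="2")
    print(transform_record(original, RULES))
    print(transform_record(drifted, RULES))
```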

- Oracle Cloud Infrastructure Data Flow

This architecture uses Oracle Cloud Infrastructure Data Flow to support large scale Spark and Spark streaming processing without the need to have and manage permanent clusters.

- Consider using Oracle Cloud Infrastructure Data Catalog as a Hive metastore for Oracle Cloud Infrastructure Data Flow in order to securely store and retrieve schema definitions for objects in unstructured and semi-structured data assets such as Oracle Cloud Infrastructure Object Storage.

- Consider using Delta Lake on OCI Data Flow if ACID transactions and unification of streaming and batch processing are needed for lake data.

- Big Data Service

This architecture leverages Oracle Cloud Infrastructure Big Data Service to deploy highly available and scalable clusters of various open source technologies such as Spark, Hadoop, Trino, or Flink that can process batch and streaming data. Big Data Service persists data in HDFS, persists and reads data from Oracle Cloud Infrastructure Object Storage, and can interchange data sets with other Oracle Cloud Infrastructure services such as Oracle Cloud Infrastructure Data Flow and Oracle Autonomous Data Warehouse.

- Consider using autoscaling to automatically scale the worker nodes horizontally or vertically, based on metrics or a schedule, to continuously optimize costs according to resource demand.

- Consider using the OCI HDFS connector for Object Storage to read and write data to and from Object Storage, thus providing a mechanism to produce and consume data shared with other OCI services without the need to replicate and duplicate it.

- Consider using Delta Lake on OCI BDS if ACID transactions and unification of streaming and batch processing are needed for lake data.

- If you need to use other open source software, consider using Oracle Cloud Infrastructure Registry, container instances, or Oracle Cloud Infrastructure Kubernetes Engine to deploy any open source software that can be containerized.

- Oracle Cloud Infrastructure Streaming

This architecture leverages Oracle Cloud Infrastructure Streaming to consume streaming data from sources as well as to provide streaming data to consumers.

Consider leveraging Oracle Cloud Infrastructure Service Connector Hub to move data from Oracle Cloud Infrastructure Streaming and persist it in Oracle Cloud Infrastructure Object Storage to support further historical data analysis.
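On the producer side, events are published to a stream as key/value messages. The sketch below shows one way to prepare such a batch, assuming that keys and values are sent Base64-encoded inside a `messages` list, as in the Streaming PutMessages request body; verify the payload shape against the Streaming API reference. The event fields are hypothetical, and the choice of device ID as the message key is an illustrative design decision so that events from one device share a key.

```python
import base64
import json


def to_stream_messages(events, key_field):
    """Encode events as a batch of Base64 key/value messages."""
    entries = []
    for event in events:
        key = str(event[key_field]).encode("utf-8")
        # sort_keys keeps the serialized form stable for identical events.
        value = json.dumps(event, sort_keys=True).encode("utf-8")
        entries.append({
            "key": base64.b64encode(key).decode("ascii"),
            "value": base64.b64encode(value).decode("ascii"),
        })
    return {"messages": entries}


def from_stream_message(entry):
    """Decode one message value back into the original event."""
    return json.loads(base64.b64decode(entry["value"]))


if __name__ == "__main__":
    # Hypothetical IoT readings.
    batch = to_stream_messages(
        [{"device_id": "sensor-1", "temp_c": 21.5}], key_field="device_id"
    )
    print(batch)
    print(from_stream_message(batch["messages"][0]))
```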

- Oracle Analytics Cloud

This architecture leverages Oracle Analytics Cloud (OAC) for delivering augmented analytics to end users.

Consider leveraging the prebuilt integrations that OAC has with OCI AI Services (Language and Vision models) and OML (any model) to embed intelligence into the data flows and visualizations that end users consume, thus democratizing AI and ML consumption.

- Oracle Cloud Infrastructure AI services

This architecture can leverage Oracle Cloud Infrastructure AI services, depending on the use cases deployed.

Consider using Data Labeling to label the training data that is used to tune AI services such as Vision, Document Understanding, and Language for more accurate predictions.

- Oracle Cloud Infrastructure Generative AI services

This architecture can leverage Oracle Cloud Infrastructure Generative AI services, depending on the use cases deployed.

- Consider using the on-demand playground and APIs that use pretrained LLMs to address text generation, conversation, data extraction, summarization, classification, style transfer or semantic similarity, and to quickly embed generative AI into your pipelines and processes.

- Consider using dedicated AI clusters to efficiently adapt and fine-tune foundational LLMs to your data, ensuring complete isolation and data security.

- Consider sharing hosting dedicated AI clusters among different teams across the organization for cost efficiency. A single cluster can host several custom models, all of which can be served with independent endpoints and secured with dedicated IAM policies.

- API Gateway

This architecture leverages API Gateway to securely expose data services and real-time inferencing to data consumers.

- Consider using Oracle Cloud Infrastructure Functions to add any run-time logic needed to support specific API processing that is outside the scope of the data processing and the access and interpretation layers.

- Consider using Usage Plans to manage subscriber access to APIs, to monitor and manage API consumption, set up different access tiers for different consumers and support data monetization by tracking usage metrics that can be provided to an external billing system.
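The bookkeeping behind access tiers and usage-based monetization can be sketched in a few lines. This is a conceptual model only, not the API Gateway implementation: the tier names, quotas, and subscriber names are hypothetical, and a real deployment would rely on the usage plan and subscriber features of the gateway itself.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    monthly_quota: int  # number of calls allowed per month


class UsageTracker:
    """Count calls per subscriber and enforce the quota of each tier."""

    def __init__(self, subscriptions):
        self._subscriptions = dict(subscriptions)  # subscriber -> Tier
        self._calls = Counter()

    def record_call(self, subscriber):
        """Return True if the call is within quota, False if it is rejected."""
        tier = self._subscriptions[subscriber]
        if self._calls[subscriber] >= tier.monthly_quota:
            return False
        self._calls[subscriber] += 1
        return True

    def billing_export(self):
        """Return usage rows that an external billing system could consume."""
        return [
            {
                "subscriber": name,
                "tier": self._subscriptions[name].name,
                "calls": self._calls[name],
            }
            for name in sorted(self._subscriptions)
        ]


if __name__ == "__main__":
    tracker = UsageTracker({
        "partner-a": Tier("basic", 2),
        "partner-b": Tier("premium", 100),
    })
    for _ in range(3):
        print("partner-a accepted:", tracker.record_call("partner-a"))
    print(tracker.billing_export())
```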

- Oracle Cloud Infrastructure Data Catalog

To have a complete and holistic end-to-end view of the data stored and flowing on the platform, consider harvesting not only data stores supporting the data persistence layer but also the source data stores. Mapping this harvested technical metadata to the business glossary and enriching it with custom properties allows you to map business concepts and to document and govern security and access definitions.

- To facilitate the creation of Oracle Autonomous Data Warehouse external tables that virtualize data stored on Oracle Cloud Infrastructure Object Storage, leverage the metadata previously harvested by Oracle Cloud Infrastructure Data Catalog. This simplifies the creation of external tables, enforces consistency of metadata across data stores, and is less susceptible to human error.

- Consider using lineage tracking for Oracle Cloud Infrastructure Data Integration and Oracle Cloud Infrastructure Data Flow to have visibility of how data was ingested, transformed and stored. For increased coverage, use API-based ingestion to leverage the OpenLineage open framework to track lineage for any source and system.

- Oracle Cloud Infrastructure Data Transfer service

Use Oracle Cloud Infrastructure Data Transfer service when uploading data using public internet connectivity is not feasible. We recommend that you consider using Data Transfer if uploading data over the public internet takes longer than 1-2 weeks.
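The 1-2 week rule of thumb can be checked with simple arithmetic: upload time is the data volume in bits divided by the effective link throughput. The sketch below assumes decimal units (1 TB = 10^12 bytes) and a hypothetical efficiency factor of 0.8 to account for protocol overhead and link sharing; adjust both to your environment.

```python
def upload_days(size_tb, link_mbps, efficiency=0.8):
    """Estimate days needed to upload `size_tb` terabytes over the network."""
    if size_tb < 0 or link_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("size must be >= 0, bandwidth > 0, efficiency in (0, 1]")
    bits = size_tb * 1e12 * 8                          # terabytes to bits
    seconds = bits / (link_mbps * 1e6 * efficiency)    # effective bits per second
    return seconds / 86_400                            # seconds per day


def should_use_data_transfer(size_tb, link_mbps, threshold_days=14, efficiency=0.8):
    """Apply the rule of thumb: prefer Data Transfer above the threshold."""
    return upload_days(size_tb, link_mbps, efficiency) > threshold_days


if __name__ == "__main__":
    # 100 TB over a 1 Gbps link at 80% efficiency takes about 11.6 days.
    print(round(upload_days(100, 1000), 1))
    print(should_use_data_transfer(100, 1000, threshold_days=7))
```

With these assumptions, 100 TB over a 1 Gbps link falls between the one-week and two-week thresholds, which is the range where the decision depends on how strictly you apply the guidance.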

- Data Safe and Audit

Strengthening the security posture with auditing and alerting capabilities helps prevent data exfiltration and enables forensic analysis if a data breach occurs.

- Consider using Oracle Data Safe to audit activity in the data warehouse and consider using Oracle Cloud Infrastructure Audit to audit the traffic to the lake data.

- Consider using Oracle Data Safe for discovery of sensitive data on ADW and to mask it statically when creating ADW clones for non-production environments, thus avoiding security risks.

- Consider using Oracle Data Safe SQL Firewall with ADW to increase the data security posture, protecting against risks such as SQL injection attacks or compromised accounts.

- Deployment and Automation

This physical architecture is deployed using infrastructure as code (IaC) automation to create the resources needed to deploy a data lakehouse.

Oracle Cloud Infrastructure Resource Manager allows you to create Terraform stacks of deployable cloud resources and to share and manage infrastructure configurations and state files across multiple teams and platforms. Consider using Oracle Cloud Infrastructure Resource Manager to create deployment stacks for creating non-production environments, to onboard new teams that need additional services, and to standardize and embed consistent IAM policies and security guardrails that adhere to the organization's security and governance policies.

- Business Continuity

This architecture describes a deployment in a single region and can be extended to two regions to support disaster recovery and to enable a business continuity plan.

- Oracle Cloud Infrastructure Full Stack Disaster Recovery Service is a disaster recovery orchestration and management service that provides comprehensive disaster recovery capabilities for all layers of an application stack, including infrastructure, middleware, database, and application.

Consider using Full Stack Disaster Recovery to set up switchover and failover plans for the data lakehouse to automate disaster recovery tasks and to reduce manual steps in the event of a planned or unplanned transition to the standby region.

- Cost Optimization

Consider using Oracle Cloud Infrastructure cost and usage tracking as well as cost optimization features to continuously support your financial operations.

- Consider using cost and usage reports to obtain and track cloud resource usage and the respective costs. Leverage the industry-standard FOCUS CSV cost reports to integrate with third-party financial operations solutions.

- Consider using cost analysis to track costs incurred by different teams, projects and environments.

- Consider using cost-tracking tags to tag cloud resources for specific teams, projects or environments.

- Consider using budgets to set soft limits on spending and setting alerts to let you know when you might exceed your budget for project, team, or overall spending.
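To illustrate how cost reports, cost-tracking tags, and budgets fit together, the following sketch aggregates a FOCUS-style CSV by a tag and flags teams that are close to their budget. The `BilledCost`, `ServiceName`, and `Tags` column names follow the FOCUS specification as assumed here; the sample rows, the `team` tag key, the budget amounts, and the 80% alert ratio are all hypothetical.

```python
import csv
import io
import json
from collections import defaultdict
from decimal import Decimal

# Hypothetical sample data; Tags holds a JSON object per row.
SAMPLE_FOCUS_CSV = '''BilledCost,ServiceName,Tags
12.50,Object Storage,"{""team"": ""lake""}"
30.00,Data Flow,"{""team"": ""lake""}"
80.25,Autonomous Data Warehouse,"{""team"": ""dw""}"
5.00,Streaming,{}
'''


def cost_by_tag(csv_text, tag_key):
    """Sum BilledCost per value of `tag_key`; untagged rows are grouped."""
    totals = defaultdict(Decimal)
    for row in csv.DictReader(io.StringIO(csv_text)):
        tags = json.loads(row["Tags"] or "{}")
        totals[tags.get(tag_key, "untagged")] += Decimal(row["BilledCost"])
    return dict(totals)


def near_budget(totals, budgets, alert_ratio=Decimal("0.8")):
    """Return tag values whose spend reached `alert_ratio` of their budget."""
    return sorted(
        name for name, spent in totals.items()
        if name in budgets and spent >= budgets[name] * alert_ratio
    )


if __name__ == "__main__":
    totals = cost_by_tag(SAMPLE_FOCUS_CSV, "team")
    print(totals)
    print(near_budget(totals, {"lake": Decimal("50"), "dw": Decimal("200")}))
```

Grouping untagged spend under its own key makes gaps in tagging visible, which is often the first thing a cost review needs to fix.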

- Interoperability

This architecture makes extensive use of industry standards to interoperate with any organization's wider, heterogeneous IT landscape so that it can consume and serve any data to any application, system, or person.

The architecture supports open file formats such as Parquet or Avro, so data can be stored in the most appropriate format for each use case. It also supports open table formats such as Iceberg and Delta Lake to ensure interoperability between Oracle technologies and third-party technologies.

- Consider using Oracle Autonomous Data Warehouse Iceberg support to read Iceberg tables persisted on the data lake and to serve them to consumers. Iceberg tables can either be served as external tables or loaded into ADW.

- Consider using Data Flow Delta Lake Universal Format support to read, process and persist data in the data lake. Using Delta Lake while generating metadata for other open table formats such as Iceberg and Hudi allows different processing engines to read the same data.

- Organizational Approach

This architecture is flexible and can support different types of organizational approaches ranging from a centralized to a completely decentralized approach and thus can be adopted and used by any organization that wants to extract value out of their data.

This architecture makes extensive use of fine-grained controls for authentication and authorization with OCI Identity and Access Management (IAM).

Consider using IAM to segregate the different lines of business and teams using the lakehouse to decentralize ownership of data products creation and enforce data domains segregation if your organization wants to adopt a decentralized organizational approach.

OCI provides automation and infrastructure as code (IaC) as key capabilities for a successful architecture deployment, leveraging frameworks such as Terraform and Ansible.

If your organization is adopting a decentralized approach and implementing data domains under that approach, consider leveraging prebuilt Terraform templates and OCI Resource Manager to quickly and consistently onboard data domains into the data platform.

Considerations

When collecting, processing, and curating application data for analysis and machine learning, consider the following implementation options.

| Guidance | Recommended | Other Options | Rationale |
|---|---|---|---|
| Data Refinery | | | Oracle Cloud Infrastructure Data Integration provides a cloud native, serverless, fully-managed ETL platform that is scalable and cost efficient. Oracle Cloud Infrastructure GoldenGate provides a cloud native, serverless, fully-managed, non-intrusive data replication platform that is scalable, cost efficient and can be deployed in hybrid environments. |
| Data Persistence | | Oracle Exadata Database Service | Oracle Autonomous Data Warehouse is an easy-to-use, fully autonomous database that scales elastically, delivers fast query performance, and requires no database administration. It also offers direct access to the data from object storage external or hybrid partitioned tables. Oracle Cloud Infrastructure Object Storage stores unlimited data in raw format. |
| Data Processing | | Third-party tools | Oracle Cloud Infrastructure Data Integration provides a cloud native, serverless, fully-managed ETL platform that is scalable and cost effective. Oracle Cloud Infrastructure Data Flow provides a serverless Spark environment to process data at scale with a pay-per-use, extremely elastic model. Oracle Cloud Infrastructure Big Data Service provides enterprise-grade Hadoop-as-a-service with end-to-end security, high performance, and ease of management and upgradeability. |
| Access & Interpretation | | Third-party tools | Oracle Analytics Cloud is fully managed and tightly integrated with the curated data in Oracle Autonomous Data Warehouse. Data Science is a fully-managed, self-service platform for data science teams to build, train, and manage machine learning (ML) models in Oracle Cloud Infrastructure. The Data Science service provides infrastructure and data science tools such as AutoML and model deployment capabilities. Oracle Machine Learning is a fully-managed, self-service platform for data science available with Oracle Autonomous Data Warehouse that leverages the processing power of the warehouse to build, train, test, and deploy ML models at scale without the need to move the data outside of the warehouse. Oracle Cloud Infrastructure AI services are a set of services that provide pre-built models specifically built and trained to perform tasks such as inferencing potential anomalies or detecting sentiment. |

Deploy

- Deploy by using Oracle Cloud Infrastructure Resource Manager:

- Click

If you aren't already signed in, enter the tenancy and user credentials.

- Review and accept the terms and conditions.

- Select the region where you want to deploy the stack.

- Follow the on-screen prompts and instructions to create the stack.

- After creating the stack, click Terraform Actions, and select Plan.

- Wait for the job to be completed, and review the plan.

To make any changes, return to the Stack Details page, click Edit Stack, and make the required changes. Then, run the Plan action again.

- If no further changes are necessary, return to the Stack Details page, click Terraform Actions, and select Apply.

- Click

- Deploy by using the Terraform CLI:

- Go to GitHub.

- Clone or download the repository to your local computer.

- Follow the instructions in the README document.

Explore More

Learn more about the features of this architecture and about related architectures.

Acknowledgments

- Author: José Cruz

- Contributors: Larry Fumagalli, Ionel Panaitescu, Mike Blackmore, Robert Lies

Change Log

This log lists significant changes:

| October 28, 2024 |

|

| June 21, 2023 |

|