Configure the HPC Cluster Stack from Oracle Cloud Marketplace

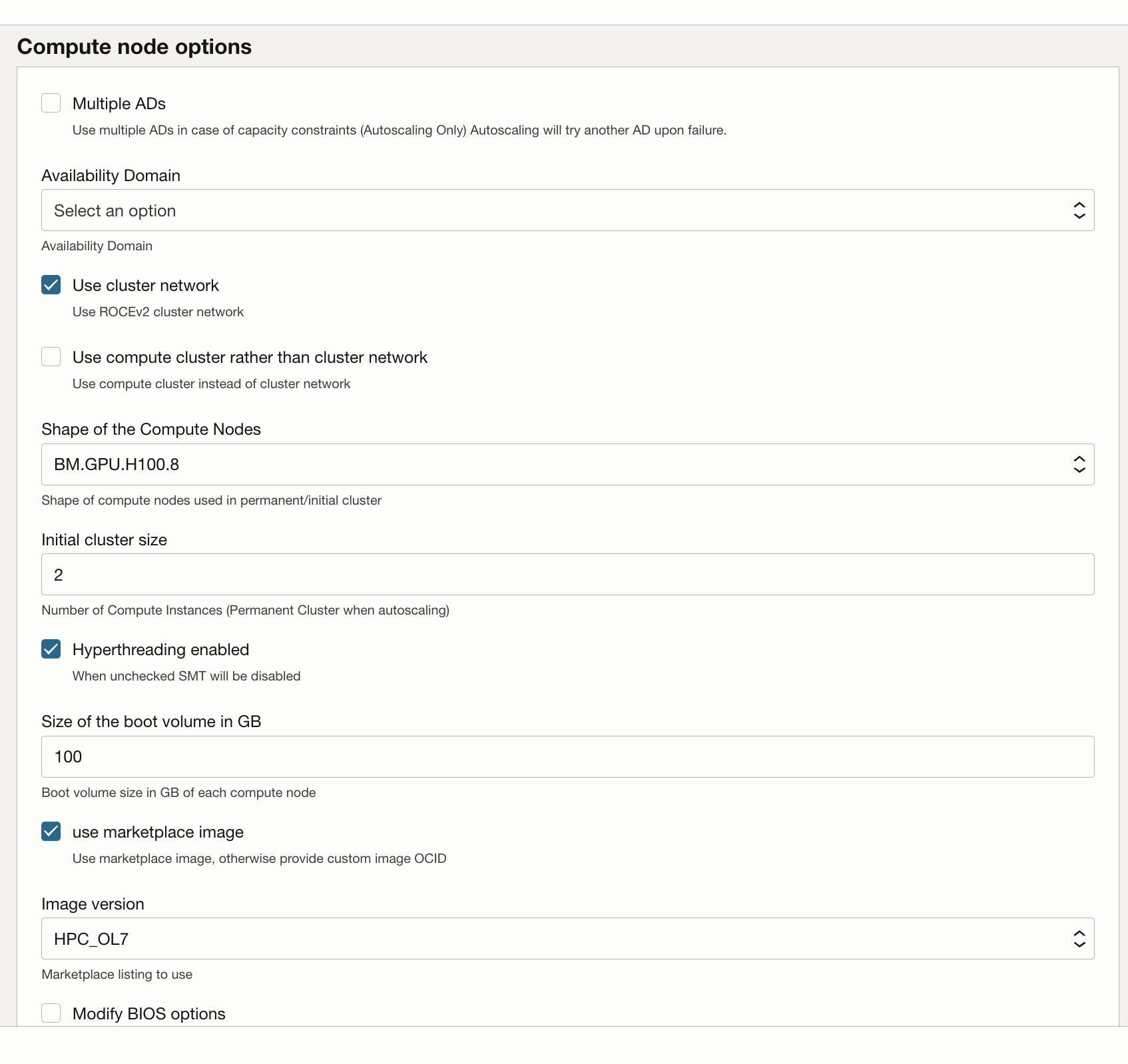

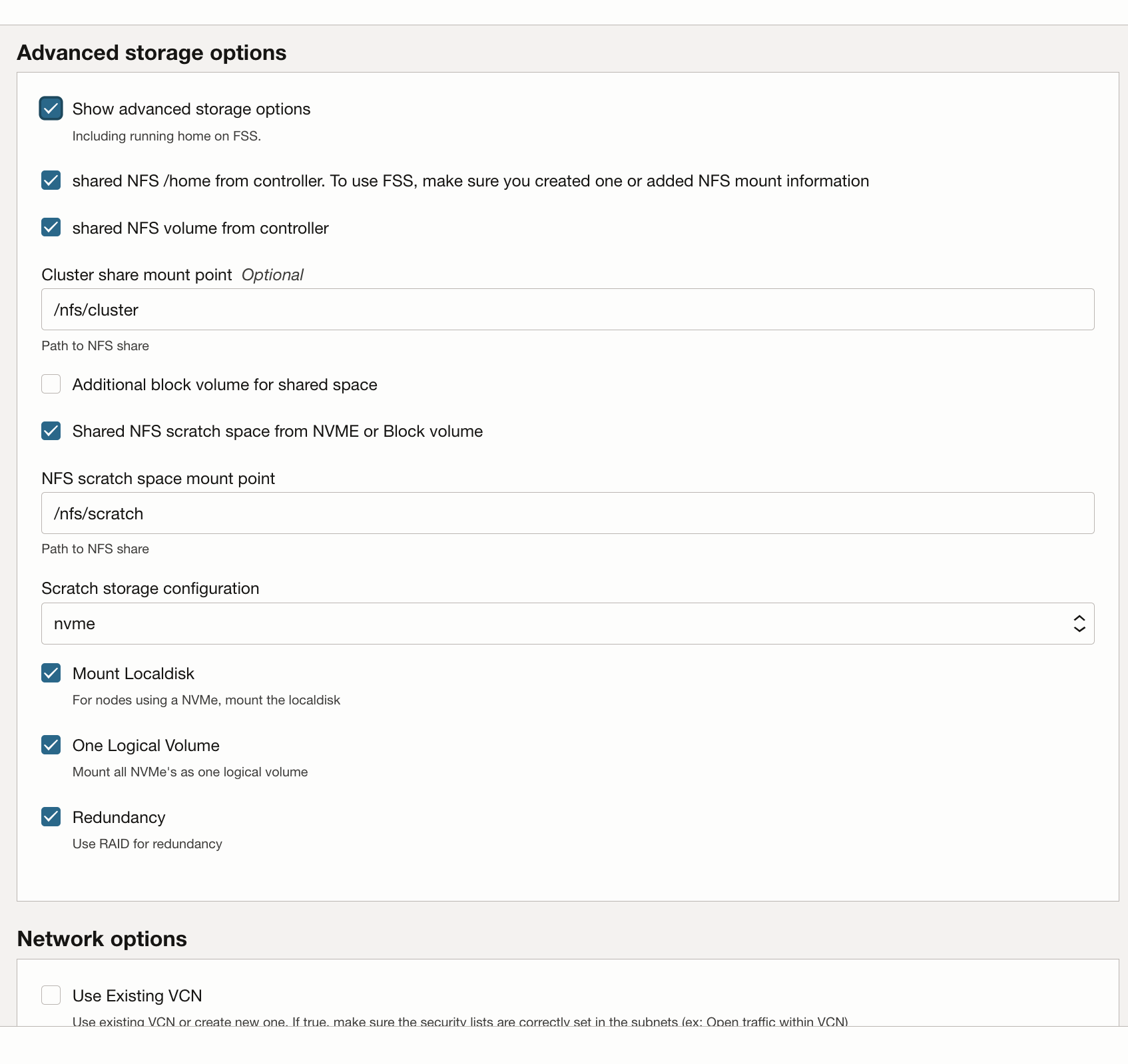

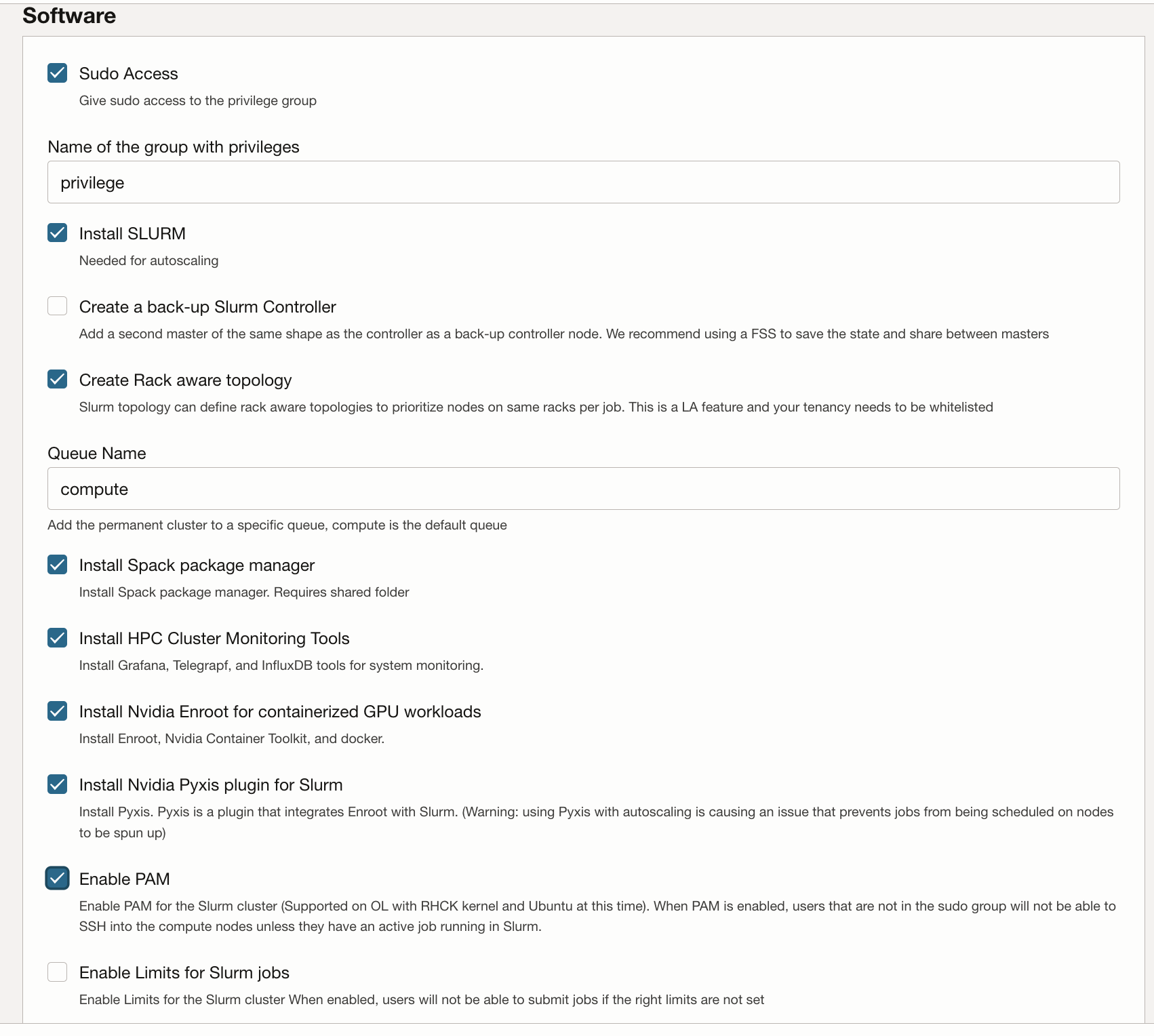

The HPC Cluster stack uses Terraform to deploy Oracle Cloud Infrastructure resources. The stack creates GPU nodes, storage, standard networking, high-performance cluster networking, and a bastion/head node for accessing and managing the cluster.

Deploy the GPU Cluster

Your Oracle Cloud account must be in a group with permission to deploy and manage these resources. See HPC Cluster Usage Instructions for more details on policy requirements.

You can deploy the stack to an existing compartment, but it may be cleaner if you create a compartment specifically for the cluster.

Note:

While there is no cost to use the Marketplace stack to provision an environment, you will be charged for the resources provisioned when the stack is launched.

- Create a compartment for your tenancy and region and verify policies are available.

- Use the HPC Cluster stack to deploy the GPU cluster.
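
The first step above, creating a dedicated compartment, can also be scripted instead of done in the Console. The following is a minimal sketch, assuming the OCI Python SDK (the `oci` package) is installed and an API key profile exists in `~/.oci/config`. The helper names `compartment_request` and `create_compartment` and the compartment name `hpc-gpu-cluster` are hypothetical and are not part of the HPC Cluster stack. The sketch does not create or check policies; those are covered in the HPC Cluster Usage Instructions.

```python
def compartment_request(parent_ocid: str, name: str, description: str) -> dict:
    """Collect the fields needed to create a compartment under parent_ocid."""
    # OCIDs start with "ocid1."; this is only a basic sanity check.
    if not parent_ocid.startswith("ocid1."):
        raise ValueError("parent_ocid should be an OCID, such as the tenancy OCID")
    return {
        "compartment_id": parent_ocid,  # parent compartment (or tenancy root)
        "name": name,
        "description": description,
    }


def create_compartment(request: dict, profile: str = "DEFAULT") -> str:
    """Create the compartment and return its OCID.

    Assumes the `oci` package is installed and the named profile in
    ~/.oci/config has permission to manage compartments.
    """
    import oci  # imported lazily so compartment_request works without the SDK

    config = oci.config.from_file(profile_name=profile)
    identity = oci.identity.IdentityClient(config)
    details = oci.identity.models.CreateCompartmentDetails(**request)
    return identity.create_compartment(details).data.id


# Example (hypothetical values; replace the OCID with your tenancy's):
# request = compartment_request(
#     "ocid1.tenancy.oc1..<your-tenancy-id>",
#     "hpc-gpu-cluster",
#     "Compartment for the HPC GPU cluster",
# )
# print(create_compartment(request))
```

Separating the request from the API call makes it possible to review the compartment name and parent before anything is created. When you later launch the stack, select this compartment as the target.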