Plan

There is a multi-step workflow to implement Data Lakes in OCI using Big Data Service.

- Requirements: List the requirements for new environments in OCI

- Assessment: Assess the required OCI services and tools

- Design: Design your solution architecture and sizing for OCI

- Plan: Create a detailed plan mapping your time and resources

- Provision: Provision and configure the required resources in OCI

- Implement: Implement your data and application workloads

- Automate Pipeline: Orchestrate and schedule workflow pipelines for automation

- Test and Validate: Conduct end-to-end validation, functional, and performance testing for the solution

Determine Requirements

Start by cataloging the system and application requirements.

The following table is an example template that you can adapt to your use case.

| Discovery topic | Current setup | OCI requirements | Notes and comments |
|---|---|---|---|
| Data size | - | - | - |
| Growth rate | - | - | - |
| File formats | - | - | - |
| Data compression formats | - | - | - |
| Datacenter details (for hybrid architectures) | - | - | - |
| Network connectivity details for VPN/FastConnect setup | - | - | - |
| DR (RTO, RPO) | - | - | - |
| HA SLA | - | - | - |
| Backup strategy | - | - | - |
| Infrastructure management and monitoring | - | - | - |
| Notifications and alerting | - | - | - |
| Maintenance and upgrade processes | - | - | - |
| Service Desk/incident management | - | - | - |
| Authentication methods | - | - | - |
| Authorization methods | - | - | - |
| Encryption details (at rest and in motion) | - | - | - |
| Keys and certificates processes | - | - | - |
| Kerberos details | - | - | - |
| Compliance requirements | - | - | - |
| Data sources and ingestion techniques for each source | - | - | - |
| ETL requirements | - | - | - |
| Analytics requirements | - | - | - |
| Data querying requirements | - | - | - |
| BI/visualization, reporting requirements | - | - | - |
| Integrations with other solutions | - | - | - |
| Notebook and data science workload details | - | - | - |
| Workflow, orchestration and scheduling requirements | - | - | - |
| Batch workloads – details of each job and application | - | - | - |
| Interactive workloads – number of users, details of each job and application | - | - | - |
| Streaming workloads – details of each job and application | - | - | - |
| Details of each application integrated with the data lake | - | - | - |
| Team details (sys admins, developers, application owners, end users) | - | - | - |

Assessment

In this phase, analyze all the data and information that you gathered during the requirements phase.

You then use that information to determine which services and tools you need in OCI. At the end of the assessment, you should have a high-level architecture that shows each OCI data service to be used and the functionality to be implemented on it.

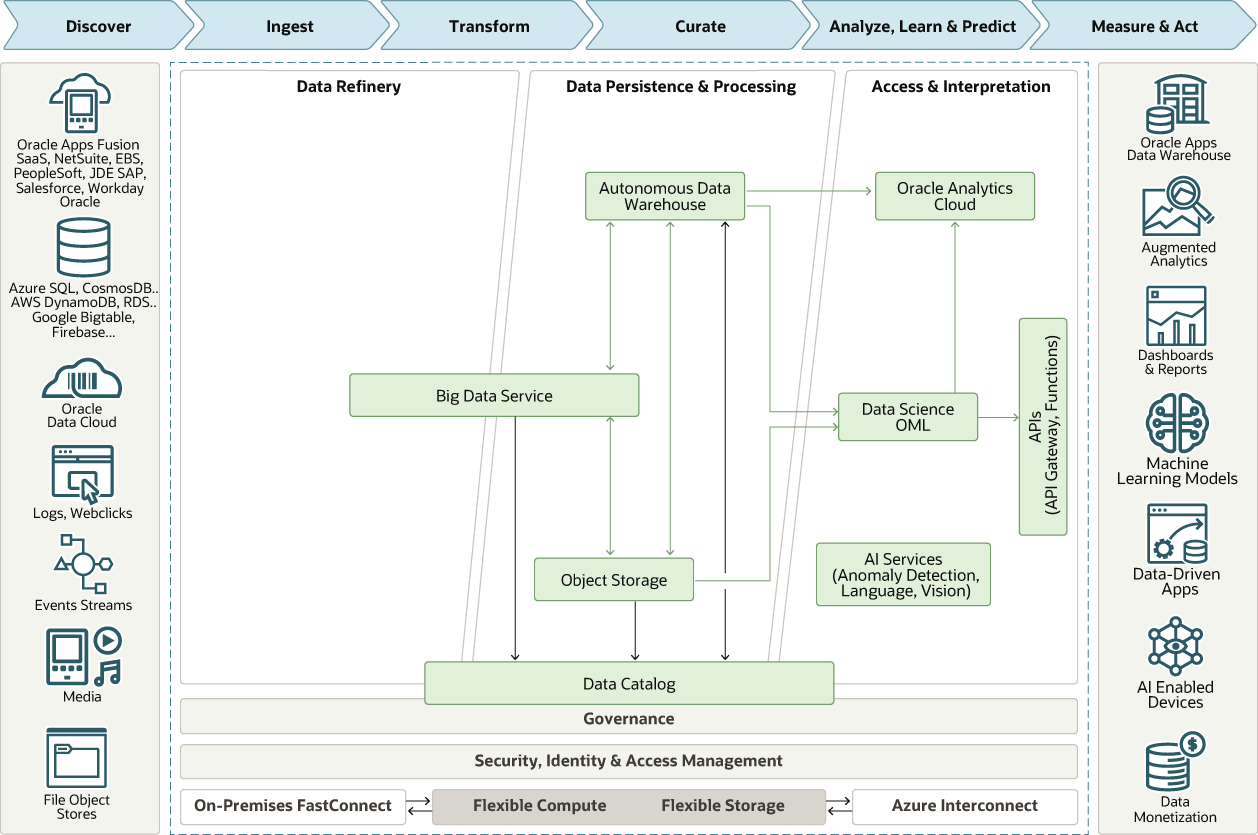

The following diagram is an example of the type of architecture that you create in this phase.

Description of the illustration architecture-hadoop-datalake.png

Design

In this phase, determine the solution architecture and initial sizing for Oracle Cloud Infrastructure (OCI).

Use the reference architecture that you created in the assessment phase as the starting point.

This phase requires a good understanding of the OCI platform and of how to build applications in OCI. You also need to set up networking and IAM policies in OCI.
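For the initial sizing, one possible starting point is to project the data size and growth rate recorded in the requirements table forward over the planning horizon. The sketch below assumes constant compound monthly growth; the function name and the example figures (100 TB, 5% per month, 12 months) are illustrative assumptions, not values from this guide.

```python
def project_data_size_tb(current_tb: float, monthly_growth_rate: float, months: int) -> float:
    """Project the data size, assuming constant compound monthly growth.

    monthly_growth_rate is a fraction, for example 0.05 for 5% per month.
    """
    if current_tb < 0 or months < 0 or monthly_growth_rate <= -1:
        raise ValueError("size and months must be non-negative; growth rate must exceed -1")
    return current_tb * (1 + monthly_growth_rate) ** months


if __name__ == "__main__":
    # Hypothetical inputs: 100 TB today, growing 5% per month, planned over 12 months.
    projected = project_data_size_tb(100.0, 0.05, 12)
    print(f"Projected data size: {projected:.1f} TB")
```

The projected figure can then feed the storage and node-count estimates made later in the planning phase.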

Plan

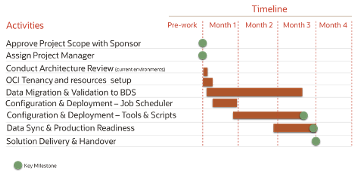

In this phase, create a detailed project plan with time and resource mapping.

For each of the activities, details on tasks, stakeholder RACI and timeline should be determined.

Project Plan

Create a project plan with all the activities, their time lines, and their dependencies.

The following picture shows an example of a high level project plan.

Description of the illustration project-plan.png

Bill of Materials

Based on your assessment and design, create a bill of materials (BOM) for the target environment in OCI.

List each service to be used, along with its sizing and configuration information. The following table is an example of the items you might include in the bill of materials.

| OCI service | Sizing and configuration |
|---|---|
| Big Data Service | - |
| Data Science | - |
| Data Catalog | - |
| Virtual Machines | - |
| Block Storage | - |
| Object Storage | - |
| Autonomous Data Warehouse | - |
| Virtual Cloud Network | - |
| Identity and Access Management | - |

Big Data Service Planning

This section discusses the important choices you need to make to launch a cluster in Big Data Service (BDS).

BDS Hadoop clusters run on OCI compute instances. You need to determine which instance types you want to use. These instances run in Virtual Cloud Network (VCN) subnets, which need to be configured before you launch clusters. You must also determine the storage requirements for the block volumes that are attached to cluster nodes. In addition, IAM policies need to be configured.

There are two types of nodes:

- Master and utility nodes. These nodes include the services required for the operation and management of the cluster. They do not store or process data.

- Worker nodes. These nodes store and process data. The loss of a worker node does not affect the operation of the cluster, although it can affect performance.

Clusters can be deployed in secure and highly available (HA) mode or in minimal (non-HA) mode. You also need to create a plan for the Hadoop components that you want to configure and their sizing. Review the BDS documentation link in the Explore More section to learn more about configuring and sizing clusters.

You can use the following table to help create a plan for BDS clusters.

| Topic | Sizing and configuration |
|---|---|
| Secure and highly available or minimal (non-HA) config | - |
| Number of worker nodes | - |
| Storage per node | - |
| Master node(s) compute instance type and shape | - |
| Worker nodes compute instance type and shape | - |
| Master node 1 Hadoop services configuration | - |
| Master node 2 Hadoop services configuration (if applicable) | - |
| Utility node 1 Hadoop services configuration | - |
| Utility node 2 Hadoop services configuration (if applicable) | - |
| Utility node 3 Hadoop services configuration (if applicable) | - |
| Worker nodes Hadoop services configuration | - |
| Virtual Cloud Network details | - |
| Identity and Access Management policies applied | - |
| Ambari configuration | - |
| HDFS configuration | - |
| Hive configuration | - |
| HBase configuration | - |
| Spark configuration | - |
| Oozie configuration | - |
| Sqoop configuration | - |
| Tez configuration | - |
| Zookeeper configuration | - |

You can use similar tables when planning the composition and size of the other services in your architecture.
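As a rough aid for the "Number of worker nodes" and "Storage per node" rows, the following sketch estimates a worker count from the data size. It assumes the HDFS default replication factor of 3; the 25% headroom for temporary and intermediate data and the minimum of three worker nodes are assumptions made for illustration, so check them against your workload and the current service limits.

```python
import math


def estimate_worker_nodes(
    data_tb: float,
    storage_per_node_tb: float,
    replication_factor: int = 3,   # HDFS default replication
    headroom: float = 0.25,        # assumed extra space for temp/intermediate data
    min_nodes: int = 3,            # assumed minimum; verify against service limits
) -> int:
    """Estimate how many worker nodes are needed to hold data_tb in HDFS."""
    if storage_per_node_tb <= 0:
        raise ValueError("storage_per_node_tb must be positive")
    raw_tb = data_tb * replication_factor * (1 + headroom)
    return max(min_nodes, math.ceil(raw_tb / storage_per_node_tb))


if __name__ == "__main__":
    # Hypothetical inputs: 100 TB of data, 12 TB of usable block storage per worker.
    print(estimate_worker_nodes(data_tb=100, storage_per_node_tb=12))
```

This covers storage only. Compute and memory requirements of the batch, interactive, and streaming workloads may call for more nodes than the storage estimate suggests.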

Provision

Based on the final state architecture design and sizing information in the BOM, provision and configure required resources in OCI according to the tasks listed in the project plan.

Big Data Service Deployment Workflow

Before you can set up a BDS cluster, you must configure permissions in IAM, and then configure the VCN for the cluster.

Configure IAM

Create additional IAM groups with access privileges for the BDS cluster.

You should delegate BDS cluster administration tasks to one or more BDS administrators.

If the group name is bds-admin-group, and the new cluster is in the Cluster compartment, you would create the following policies:

allow group bds-admin-group to manage virtual-network-family in compartment Cluster

allow group bds-admin-group to manage bds-instance in compartment Cluster

Also create a policy with the following policy statement:

allow service bdsprod to
{VNIC_READ, VNIC_ATTACH, VNIC_DETACH, VNIC_CREATE, VNIC_DELETE, VNIC_ATTACHMENT_READ,
SUBNET_READ, VCN_READ, SUBNET_ATTACH, SUBNET_DETACH, INSTANCE_ATTACH_SECONDARY_VNIC,
INSTANCE_DETACH_SECONDARY_VNIC} in compartment Cluster

Configure the VCN

At minimum, you need a single VCN with a single subnet in a single region with access to the public internet.

For a complex production environment, you can have multiple subnets and different security rules. You might want to connect your VCN to an on-premises network, or to other VCNs in other regions. For more details on OCI networking, see the OCI documentation.

Create a BDS Cluster

When you create the cluster, you choose a name for it, a cluster admin password, and sizes for the master, utility, and worker nodes. There is also a check box to select the secure and highly available (HA) cluster configuration. HA gives you four master and utility nodes instead of the two in the minimal non-HA configuration.

Make sure that you create the cluster in the intended compartment and in the intended VCN. Also make sure that the CIDR block for the Cluster Private Network does not overlap with the CIDR block range of the subnet that contains the cluster.
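The overlap condition can be checked before you create the cluster. The following is a minimal sketch using Python's standard `ipaddress` module; the CIDR values in the usage example are hypothetical.

```python
import ipaddress


def cidrs_overlap(cidr_a: str, cidr_b: str) -> bool:
    """Return True if the two CIDR blocks share at least one address."""
    net_a = ipaddress.ip_network(cidr_a, strict=True)
    net_b = ipaddress.ip_network(cidr_b, strict=True)
    return net_a.overlaps(net_b)


if __name__ == "__main__":
    # Hypothetical values: the subnet that contains the cluster and a
    # candidate CIDR block for the Cluster Private Network.
    subnet_cidr = "10.0.0.0/24"
    cluster_private_cidr = "10.1.0.0/16"
    if cidrs_overlap(subnet_cidr, cluster_private_cidr):
        print("Overlap detected: choose a different Cluster Private Network CIDR block.")
    else:
        print("No overlap.")
```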

Access the BDS Cluster

Big Data Service nodes are by default assigned private IP addresses, which aren't accessible from the public internet.

You can make the nodes in the cluster available using one of the following methods:

- You can map the private IP addresses of selected nodes in the cluster to public IP addresses to make them publicly available on the internet.

- You can set up an SSH tunnel using a bastion host. Only the bastion host is exposed to the public internet. A bastion host provides access to the cluster's private network from the public internet.

- You can use VPN Connect which provides a site-to-site Internet Protocol Security (IPSec) VPN between your on-premises network and your VCN. You can also use OCI FastConnect to access services in OCI without going over the public internet. With FastConnect, the traffic goes over a private physical connection.
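For the bastion host option, the tunnel is typically an SSH local port forward. The sketch below only builds the `ssh` command line; the `-i`, `-N`, and `-L` options are standard OpenSSH options, while the `opc` user name, the key path, and the IP addresses are assumptions chosen for illustration.

```python
import shlex
from typing import List


def build_tunnel_command(
    bastion_host: str,
    target_private_ip: str,
    target_port: int,
    local_port: int,
    user: str = "opc",                 # assumed login user on the bastion host
    key_path: str = "~/.ssh/id_rsa",   # assumed private key location
) -> List[str]:
    """Build an ssh command that forwards local_port to a private cluster node."""
    forward = f"{local_port}:{target_private_ip}:{target_port}"
    # -N: do not run a remote command; -L: local port forwarding.
    return ["ssh", "-i", key_path, "-N", "-L", forward, f"{user}@{bastion_host}"]


if __name__ == "__main__":
    # Hypothetical addresses: a public bastion and a private utility node.
    command = build_tunnel_command("203.0.113.10", "10.0.0.5", 7183, 7183)
    print(" ".join(shlex.quote(part) for part in command))
```

With the tunnel running, a service on the private node is reachable through `localhost` on the chosen local port.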

Manage the BDS Cluster

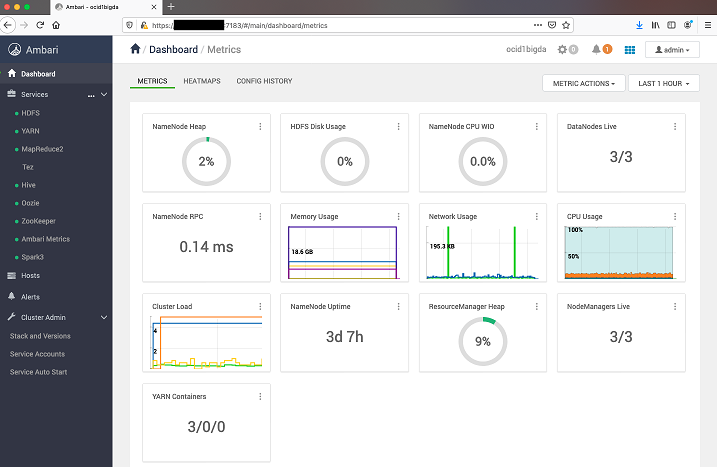

For BDS with Oracle Distribution including Apache Hadoop (ODH), you can use Apache Ambari to manage your cluster.

It runs on the utility node of the cluster. You must open port 7183 on the node by configuring the ingress rules in the network security list.

To access Ambari, open a browser window and enter the URL with IP address of the utility node. for example: https://<ip_address_or_hostname>:7183

Use the cluster admin user (default admin) and password that you entered when creating the cluster.

Description of the illustration ambari-dashboard-metrics.png
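Ambari also provides a REST API under `/api/v1`, which can be useful for scripted checks. The sketch below prepares, but does not send, a request that lists clusters. It assumes the API is served on the same host and port as the web console (7183); the host name and credentials shown are placeholders.

```python
import base64
import urllib.request


def build_ambari_request(host: str, user: str, password: str, port: int = 7183) -> urllib.request.Request:
    """Prepare a GET request for the Ambari cluster list using HTTP basic auth."""
    url = f"https://{host}:{port}/api/v1/clusters"
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(url, method="GET")
    request.add_header("Authorization", f"Basic {token}")
    # Ambari expects this header on modifying calls; it is harmless on GET.
    request.add_header("X-Requested-By", "ambari")
    return request


if __name__ == "__main__":
    # Placeholder host and credentials; replace with your utility node and admin user.
    req = build_ambari_request("utility-node.example.com", "admin", "secret")
    print(req.get_method(), req.full_url)
    # Sending the request is left out here: urllib.request.urlopen(req) would
    # need network access and a certificate that the client trusts.
```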

Implement

Start implementing applications and services for each phase. There are multiple criteria to consider before selecting a particular service.

Note that some services can be used in more than one phase. For example, Big Data Service has components that can be used in the ingest phase, the store phase, and the transform phase.

Ingest

- Data Transfer Appliance: If you are migrating to OCI, you can use the Data Transfer service to migrate data offline to Object Storage.

- Big Data Service: Big Data Service provides popular Hadoop components for data ingestion, including Kafka, Flume, and Sqoop. Users can configure these tools based on their requirements. Kafka can be used for real-time ingestion of events and data. For example, users who have events coming from their apps or servers can ingest them in real time with Kafka and write the data to HDFS or Object Storage. Flume can be used to ingest streaming data into HDFS or Kafka topics. Sqoop is one of the most common Hadoop tools for ingesting data from structured datastores such as relational databases and data warehouses.
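To illustrate the Sqoop case, the sketch below assembles a `sqoop import` command for a single relational table. The options used (`--connect`, `--username`, `--password-file`, `--table`, `--target-dir`, `--num-mappers`) are standard Sqoop import options; the JDBC URL, table name, and paths are hypothetical.

```python
from typing import List, Optional


def build_sqoop_import(
    jdbc_url: str,
    username: str,
    table: str,
    target_dir: str,
    num_mappers: int = 4,
    password_file: Optional[str] = None,
) -> List[str]:
    """Build a sqoop import command that copies one table into HDFS."""
    command = [
        "sqoop", "import",
        "--connect", jdbc_url,
        "--username", username,
        "--table", table,
        "--target-dir", target_dir,
        "--num-mappers", str(num_mappers),
    ]
    if password_file:
        # Reading the password from a protected file avoids exposing it on the command line.
        command += ["--password-file", password_file]
    return command


if __name__ == "__main__":
    # Hypothetical source database and HDFS target directory.
    cmd = build_sqoop_import(
        "jdbc:mysql://db.example.com:3306/sales", "etl_user", "orders", "/data/raw/orders"
    )
    print(" ".join(cmd))
```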

Store

- Big Data Service: BDS provides standard Hadoop components including HDFS and HBase. Data can be written to HDFS from Spark streaming, Spark batch, or any other jobs. HBase provides a non-relational distributed database that runs on top of HDFS. It can be used to store large datasets as key-value pairs. Data can be read from and written to HBase by Spark jobs as part of ingestion or transformation.

- Object Storage: OCI Object Storage service is an internet-scale, high-performance storage platform that offers reliable and cost-efficient data durability. It can store an unlimited amount of data of any content type, including analytic data and rich content like images and videos. In this pattern, Object Storage can be used as a general-purpose blob store. Big Data Service and other services can read data from and write data to Object Storage.
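When Hadoop components address Object Storage through the OCI HDFS connector, locations are typically written as `oci://<bucket>@<namespace>/<path>`. The helper below is a small sketch for building such URIs; the bucket, namespace, and path in the example are hypothetical.

```python
def object_storage_uri(bucket: str, namespace: str, path: str = "") -> str:
    """Build an oci:// URI for an Object Storage location."""
    if not bucket or not namespace:
        raise ValueError("bucket and namespace are required")
    return f"oci://{bucket}@{namespace}/{path.lstrip('/')}"


if __name__ == "__main__":
    # Hypothetical bucket and namespace names.
    print(object_storage_uri("raw-data", "mytenancy", "events/2024/"))
```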

Transform and Serve

- Big Data Service (BDS): BDS offers Hadoop components like Spark and Hive that can be used to process data. Hive and Spark SQL can be used to run SQL queries on HDFS and Object Storage data. Once data is stored in HDFS or Object Storage, tables can be created that point to the data, and any business intelligence (BI) tool or custom application can then connect to these interfaces to run queries against the data. Users can write complex batch jobs in Spark that process large datasets or apply complex, multi-stage transformations. Spark can be used to implement jobs that read from and write to multiple sources, including HDFS, HBase, and Object Storage. Oracle Cloud SQL is an available add-on service that enables you to initiate Oracle SQL queries on data in HDFS, Kafka, and Oracle Object Storage.
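Creating a table that points to existing data usually means defining an external table in Hive or Spark SQL. The sketch below generates such a statement; the table name, columns, file format, and location are hypothetical, and the resulting text would be run in a Hive or Spark SQL session.

```python
from typing import List, Tuple


def external_table_ddl(
    table: str,
    columns: List[Tuple[str, str]],
    location: str,
    file_format: str = "PARQUET",
) -> str:
    """Generate a HiveQL CREATE EXTERNAL TABLE statement over existing files."""
    column_lines = ",\n  ".join(f"`{name}` {col_type}" for name, col_type in columns)
    return (
        f"CREATE EXTERNAL TABLE IF NOT EXISTS {table} (\n"
        f"  {column_lines}\n"
        f")\n"
        f"STORED AS {file_format}\n"
        f"LOCATION '{location}'"
    )


if __name__ == "__main__":
    # Hypothetical schema and HDFS location.
    ddl = external_table_ddl(
        "events",
        [("id", "BIGINT"), ("event_type", "STRING"), ("event_time", "TIMESTAMP")],
        "hdfs:///data/events",
    )
    print(ddl)
```

Because the table is external, dropping it removes only the metadata and leaves the underlying files in place.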

BI, ML, Visualization, and Governance

- Data Catalog: Use the OCI Data Catalog service to harvest metadata from data sources across the Oracle Cloud Infrastructure ecosystem and on-premises to create an inventory of data assets. You can use it to create and manage enterprise glossaries with categories, subcategories, and business terms to build a taxonomy of business concepts, with user-added tags to make search more productive. This helps with governance and makes it easier for data consumers to find the data they need for analytics.

- Data Science: Data Science is a fully managed, serverless platform for data science teams to build, train, deploy, and manage machine learning models in Oracle Cloud Infrastructure. It provides data scientists with a collaborative, project-driven workspace with Jupyter notebooks and Python-centric tools, libraries, and packages developed by the open source community, along with the Oracle Accelerated Data Science Library. It integrates with the rest of the stack, including Data Flow, Autonomous Data Warehouse, and Object Storage.

- Oracle Analytics Cloud (OAC): OAC offers AI-powered self-service analytics capabilities for data preparation, discovery, and visualization; intelligent enterprise and ad hoc reporting together with augmented analysis; and natural language processing/generation.