Deploy an HPC Bare Metal Server Cluster Powered by an NVIDIA Tensor Core GPU

Deploying artificial intelligence (AI), machine learning (ML), or deep learning (DL) models, such as BERT-Large for language modeling, often requires High Performance Computing (HPC).

Oracle Cloud Infrastructure (OCI) enables direct access to a bare metal server cluster powered by NVIDIA Tensor Core GPUs. The HPC bare metal GPU cluster provides the industry's best price-performance for deploying AI, ML, or DL. You can host clusters of 500+ GPUs and easily scale on demand.

Architecture

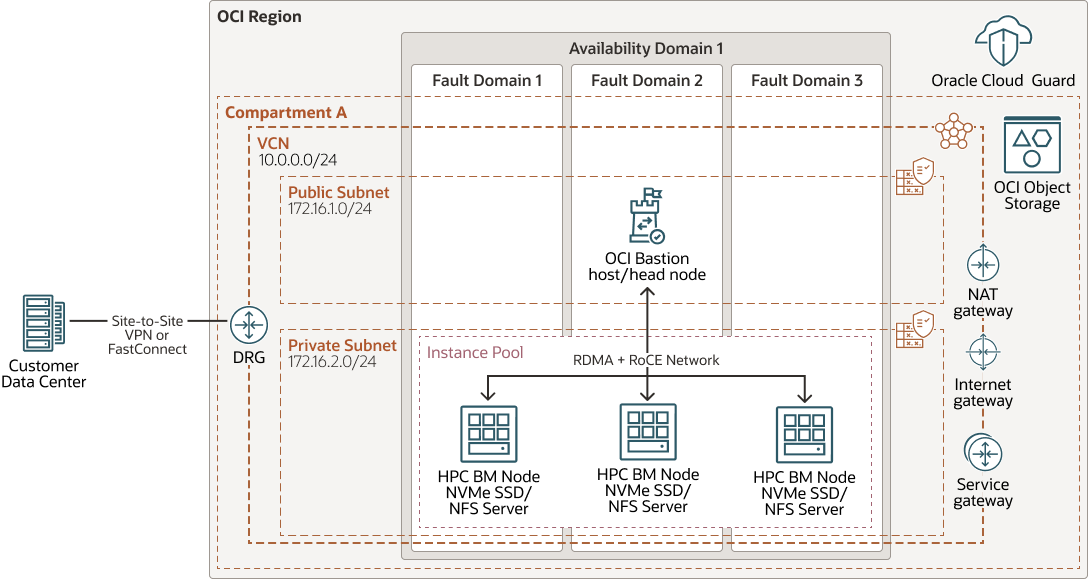

This architecture demonstrates the relationship among the various components in a typical system with an HPC bare metal GPU cluster at its core. It can apply to many AI, ML, or DL applications, such as BERT-Large, GPT2/3, Jasper, MaskRCNN, and GNMT.

BERT is a pre-trained deep learning model that's popular in Natural Language Processing (NLP) tasks. It can be fine-tuned for specific applications or domains. The larger variant, BERT-Large, contains 340M parameters. Without a large-scale distributed cluster of hundreds of identical GPUs, training and inference times are prohibitively long. GPU clusters also require a cluster file system with high I/O throughput and low latency. How much data you can process, how fast, and at what cost is especially critical to real-time AI inference applications.
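To put the 340M parameter count in perspective, the following is a minimal sketch of the memory needed just to hold the weights at two common precisions. Only the parameter count comes from the text; the function name and the precision table are illustrative. The figure covers weights only, since gradients, optimizer state, and activations add to the footprint during training.

```python
# Rough memory needed to hold BERT-Large's weights at different precisions.
# The 340M parameter count comes from the text; the rest is illustrative.

BERT_LARGE_PARAMS = 340_000_000

# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weight_memory_gb(num_params: int, precision: str) -> float:
    """Return the size of the weights in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        size = weight_memory_gb(BERT_LARGE_PARAMS, precision)
        print(f"{precision}: {size:.2f} GB")
```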

Oracle Cloud Infrastructure (OCI) uses Oracle's low-latency cluster networking, based on Remote Direct Memory Access (RDMA) over Converged Ethernet (RoCE), with less than 2 µs latency. RDMA allows for low-latency connections between nodes and access to GPU memory without involving the CPU. OCI HPC enables you to cluster up to 64 bare metal nodes, each with 8 NVIDIA A100 GPUs, for a total of 512 GPUs.

OCI provides multiple high-performance, low-latency storage solutions for HPC workloads, such as local NVMe SSD, network, and parallel file systems. The OCI bare metal server comes with local NVMe SSD storage, which you can use to create a scratch NFS or a scratch parallel file system (BeeOND, Weka) for temporary files. Using the Block Volume multi-attach feature, you can store your entire training dataset on a single volume and attach it to multiple GPU instances. Alternatively, you can use Intel Ice Lake BM or VM instances with balanced performance tier block storage to build high-throughput, low-cost file servers, either NFS based (NFS-HA, FSS) or parallel file systems (Weka.io, Spectrum Scale, BeeGFS, BeeOND). The training results are saved in Oracle Cloud Infrastructure Object Storage for long-term storage.

The following diagram illustrates this reference architecture.


The architecture has the following components:

- Region

An Oracle Cloud Infrastructure region is a localized geographic area that contains one or more data centers, called availability domains. Regions are independent of other regions, and vast distances can separate them (across countries or even continents).

- Cloud Guard

You can use Oracle Cloud Guard to monitor and maintain the security of your resources in Oracle Cloud Infrastructure. Cloud Guard uses detector recipes that you can define to examine your resources for security weaknesses and to monitor operators and users for risky activities. When any misconfiguration or insecure activity is detected, Cloud Guard recommends corrective actions and assists with taking those actions, based on responder recipes that you can define.

- Availability domains

Availability domains are standalone, independent data centers within a region. The physical resources in each availability domain are isolated from the resources in the other availability domains, which provides fault tolerance. Availability domains don’t share infrastructure such as power or cooling, or the internal availability domain network. So, a failure at one availability domain is unlikely to affect the other availability domains in the region.

- Fault domains

A fault domain is a grouping of hardware and infrastructure within an availability domain. Each availability domain has three fault domains with independent power and hardware. When you distribute resources across multiple fault domains, your applications can tolerate physical server failure, system maintenance, and power failures inside a fault domain.

- Compartment

Compartments are cross-region logical partitions within an Oracle Cloud Infrastructure tenancy. Use compartments to organize your resources in Oracle Cloud, control access to the resources, and set usage quotas. To control access to the resources in a given compartment, you define policies that specify who can access the resources and what actions they can perform.

- Virtual cloud network (VCN) and subnets

A VCN is a customizable, software-defined network that you set up in an Oracle Cloud Infrastructure region. Like traditional data center networks, VCNs give you complete control over your network environment. A VCN can have multiple non-overlapping CIDR blocks that you can change after you create the VCN. You can segment a VCN into subnets, which can be scoped to a region or to an availability domain. Each subnet consists of a contiguous range of addresses that don't overlap with the other subnets in the VCN. You can change the size of a subnet after creation. A subnet can be public or private.

- Site-to-Site VPN

Site-to-Site VPN provides IPSec VPN connectivity between your on-premises network and VCNs in Oracle Cloud Infrastructure. The IPSec protocol suite encrypts IP traffic before the packets are transferred from the source to the destination and decrypts the traffic when it arrives.

- FastConnect

Oracle Cloud Infrastructure FastConnect provides an easy way to create a dedicated, private connection between your data center and Oracle Cloud Infrastructure. FastConnect provides higher-bandwidth options and a more reliable networking experience when compared with internet-based connections.

- Dynamic routing gateway (DRG)

The DRG is a virtual router that provides a path for private network traffic between a VCN and a network outside the region, such as a VCN in another Oracle Cloud Infrastructure region, an on-premises network, or a network in another cloud provider.

- Network address translation (NAT) gateway

A NAT gateway enables private resources in a VCN to access hosts on the internet, without exposing those resources to incoming internet connections.

- Internet gateway

The internet gateway allows traffic between the public subnets in a VCN and the public internet.

- Service gateway

The service gateway provides access from a VCN to other services, such as Oracle Cloud Infrastructure Object Storage. The traffic from the VCN to the Oracle service travels over the Oracle network fabric and never traverses the internet.

- Security list

For each subnet, you can create security rules that specify the source, destination, and type of traffic that must be allowed in and out of the subnet.

- Route table

Virtual route tables contain rules to route traffic from subnets to destinations outside a VCN, typically through gateways.

- Bastion host

The bastion host is a compute instance that serves as a secure, controlled entry point to the topology from outside the cloud. The bastion host is provisioned typically in a demilitarized zone (DMZ). It enables you to protect sensitive resources by placing them in private networks that can't be accessed directly from outside the cloud. The topology has a single, known entry point that you can monitor and audit regularly. So, you can avoid exposing the more sensitive components of the topology without compromising access to them.

- Bastion Node (Head Node)

You use a web-based portal to connect to the bastion node (head node) and schedule HPC jobs. The job request comes through Oracle Cloud Infrastructure FastConnect or IPSec VPN to the head node. The head node also sends the customer data set to file storage and can do some preprocessing on the data. The head node can provision HPC node clusters and delete HPC clusters on job completion.

The head node contains the BERT model, runs the scheduler, and can serve as a bastion host for access to the cluster. It has the message passing interface (MPI) and autoscales the bare metal nodes through REST APIs. The HPC cluster provisions bare metal nodes on demand. The model training and inference use the 4 x 6.4TB NVMe SSD local storage attached to the bare metal node. If you're using our solutions to launch the infrastructure, the architecture deploys the head node, and an nfs-share is installed by default on the NVMe SSD storage in /mnt.

- HPC Cluster Node

The head node provisions and terminates these compute nodes, which are RDMA-enabled clusters. They process the data stored in file storage and return the results to file storage.

- NFS Server

The head node promotes one of the HPC nodes to act as an NFS server.

- Instance pool

An instance pool is a group of instances within a region that are created from the same instance configuration and managed as a group.

Instance pools let you create and manage multiple Compute instances within the same region as a group. They also enable integration with other services, such as the Oracle Cloud Infrastructure Load Balancing service and Oracle Cloud Infrastructure Identity and Access Management service.

- Bare Metal DB System

A bare metal (BM) DB system is a single bare metal server running Oracle Linux 7, with locally attached NVMe storage. Use a Bare Metal GPU shape for hardware-accelerated analytics and other computations.

When you launch a bare metal DB system, you select the shape and a single Oracle Database edition that applies to all the databases on that DB system. Each DB system can have multiple database homes, which can be different versions. Each database home can have only one database, which is the same version as the database home.

The shape determines the resources allocated to the DB system. Options, like 2- or 3-way mirroring and the space allocated for data files, affect the amount of usable storage on the system.

- Object storage

Object storage provides quick access to large amounts of structured and unstructured data of any content type, including database backups, analytic data, and rich content such as images and videos. You can safely and securely store and then retrieve data directly from the internet or from within the cloud platform. You can seamlessly scale storage without experiencing any degradation in performance or service reliability. Use standard storage for "hot" storage that you need to access quickly, immediately, and frequently. Use archive storage for "cold" storage that you retain for long periods of time and seldom or rarely access.

Recommendations

- VCN

When you create a VCN, determine the number of CIDR blocks required and the size of each block based on the number of resources that you plan to attach to subnets in the VCN. Use CIDR blocks that are within the standard private IP address space.

Select CIDR blocks that don't overlap with any other network (in Oracle Cloud Infrastructure, your on-premises data center, or another cloud provider) to which you intend to set up private connections.

After you create a VCN, you can change, add, and remove its CIDR blocks.

When you design the subnets, consider your traffic flow and security requirements. Attach all the resources within a specific tier or role to the same subnet, which can serve as a security boundary.

Use regional subnets.
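The CIDR guidance above can be checked before you create anything. The following is a minimal sketch using Python's standard `ipaddress` module. The network names and address ranges are hypothetical, and "standard private IP address space" is assumed here to mean the RFC 1918 ranges, for IPv4 blocks only.

```python
import ipaddress
from itertools import combinations
from typing import Dict, List, Tuple

# Assumption: "standard private IP address space" means the RFC 1918 ranges.
RFC1918_RANGES = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def in_private_space(cidr: str) -> bool:
    """Return True if the IPv4 CIDR block lies entirely inside an RFC 1918 range."""
    network = ipaddress.ip_network(cidr)
    return any(network.subnet_of(private) for private in RFC1918_RANGES)


def find_overlaps(cidrs: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return every pair of network names whose CIDR blocks overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in cidrs.items()}
    return [
        (a, b)
        for a, b in combinations(networks, 2)
        if networks[a].overlaps(networks[b])
    ]


if __name__ == "__main__":
    # Hypothetical networks that you plan to connect privately.
    planned = {
        "hpc-vcn": "10.0.0.0/16",
        "on-prem": "10.0.128.0/20",
        "other-cloud": "172.16.0.0/16",
    }
    print("Overlapping pairs:", find_overlaps(planned))
    print("Outside private space:",
          [name for name, cidr in planned.items() if not in_private_space(cidr)])
```

In this hypothetical plan, the on-premises range falls inside the VCN range, so one of the two would need to change before you set up a private connection.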

- Security

Use Oracle Cloud Guard to monitor and maintain the security of your resources in Oracle Cloud Infrastructure proactively. Cloud Guard uses detector recipes that you can define to examine your resources for security weaknesses and to monitor operators and users for risky activities. When any misconfiguration or insecure activity is detected, Cloud Guard recommends corrective actions and assists with taking those actions, based on responder recipes that you can define.

For resources that require maximum security, Oracle recommends that you use security zones. A security zone is a compartment associated with an Oracle-defined recipe of security policies that are based on best practices. For example, the resources in a security zone must not be accessible from the public internet and they must be encrypted using customer-managed keys. When you create and update resources in a security zone, Oracle Cloud Infrastructure validates the operations against the policies in the security-zone recipe, and denies operations that violate any of the policies.

- Cloud Guard

Clone and customize the default recipes provided by Oracle to create custom detector and responder recipes. These recipes enable you to specify what type of security violations generate a warning and what actions are allowed to be performed on them. For example, you might want to detect Object Storage buckets that have visibility set to public.

Apply Cloud Guard at the tenancy level to cover the broadest scope and to reduce the administrative burden of maintaining multiple configurations.

You can also use the Managed List feature to apply certain configurations to detectors.

- Network security groups (NSGs)

You can use NSGs to define a set of ingress and egress rules that apply to specific VNICs. We recommend using NSGs rather than security lists, because NSGs enable you to separate the VCN's subnet architecture from the security requirements of your application.

- HPC Nodes

Deploy the HPC bare metal shapes to get full performance.

Use BM.HPC4.8 shapes, which provide 8 A100 Tensor Core GPUs with 40 GB memory each, 2 x 32-core AMD processors at 2.9 GHz, 2048 GB DDR4, 8 x 200 Gbps networking, and 4 x 6.4-TB local NVMe SSD storage, with up to 1 PB of block storage per node.

Cluster up to 64 bare metal nodes to deliver 512 GPUs and 4096 CPU cores.
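The cluster totals follow directly from the per-node figures. The following is a minimal sketch of that arithmetic. The per-node values are taken from the shape description above, while the `NodeSpec` class and the `cluster_totals` function are illustrative names, not part of any OCI API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Per-node resources, using the figures quoted in the text."""
    gpus: int = 8
    cpu_sockets: int = 2
    cores_per_socket: int = 32
    nvme_drives: int = 4
    nvme_tb_per_drive: float = 6.4


def cluster_totals(nodes: int, spec: NodeSpec = NodeSpec()) -> dict:
    """Multiply per-node resources by the node count."""
    return {
        "gpus": nodes * spec.gpus,
        "cpu_cores": nodes * spec.cpu_sockets * spec.cores_per_socket,
        "local_nvme_tb": nodes * spec.nvme_drives * spec.nvme_tb_per_drive,
    }


if __name__ == "__main__":
    totals = cluster_totals(64)
    print(f"GPUs: {totals['gpus']}")
    print(f"CPU cores: {totals['cpu_cores']}")
    print(f"Local NVMe: {totals['local_nvme_tb']:.1f} TB")
```

For 64 nodes, this gives 512 GPUs and 4096 CPU cores, matching the figures above, plus roughly 1.6 PB of local NVMe scratch capacity across the cluster.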

Considerations

Consider the following points when deploying this reference architecture.

- Performance

Depending on the size of the workload, determine how many cores you want BERT to run on. This decision helps ensure that the training or inference job is completed in a timely manner.

To get the best performance, choose the correct Compute shape with appropriate bandwidth.

- Availability

Consider using a high-availability option, based on your deployment requirements and region. Options include using multiple availability domains in a region and fault domains.
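As an illustration of spreading resources across fault domains, the following minimal sketch assigns nodes to three fault domains in round-robin order. The node names and fault domain labels are assumptions made for the example. How placement is actually requested depends on your deployment tooling, which this sketch does not model.

```python
from collections import defaultdict
from itertools import cycle
from typing import Dict, Iterable, List, Sequence

# Assumed labels for the three fault domains in one availability domain.
FAULT_DOMAINS = ("FAULT-DOMAIN-1", "FAULT-DOMAIN-2", "FAULT-DOMAIN-3")


def spread_across_fault_domains(
    node_names: Iterable[str],
    fault_domains: Sequence[str] = FAULT_DOMAINS,
) -> Dict[str, List[str]]:
    """Assign nodes to fault domains in round-robin order."""
    placement: Dict[str, List[str]] = defaultdict(list)
    for node, fault_domain in zip(node_names, cycle(fault_domains)):
        placement[fault_domain].append(node)
    return dict(placement)


if __name__ == "__main__":
    # Hypothetical node names.
    nodes = [f"node-{i}" for i in range(7)]
    for fault_domain, members in spread_across_fault_domains(nodes).items():
        print(fault_domain, members)
```

With round-robin assignment, the node counts in any two fault domains differ by at most one, so losing a single fault domain removes about a third of the nodes.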

- Monitoring and Alerts

Set up monitoring and alerts on CPU and memory usage for your nodes, so that you can scale the shape up or down as needed.

- Cost

A bare metal GPU instance provides the necessary compute power, but at a higher cost. Evaluate your requirements to choose the appropriate Compute shape.

You can delete the cluster when there are no jobs running.
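To see why deleting an idle cluster matters, the following minimal sketch compares an always-on cluster with one that exists only while jobs run. The hourly rate is a placeholder chosen for the example, not an OCI price, and the function names and job durations are hypothetical.

```python
from typing import Iterable


def always_on_cost(nodes: int, window_hours: float, rate_per_node_hour: float) -> float:
    """Cost of keeping every node running for the whole time window."""
    return nodes * window_hours * rate_per_node_hour


def on_demand_cost(nodes: int, job_hours: Iterable[float], rate_per_node_hour: float) -> float:
    """Cost when the cluster exists only for the duration of each job."""
    return nodes * sum(job_hours) * rate_per_node_hour


if __name__ == "__main__":
    NODES = 8
    RATE = 10.0        # placeholder rate per node-hour, NOT an actual OCI price
    JOBS = [3.0, 5.5]  # hypothetical job durations within a 24-hour window

    fixed = always_on_cost(NODES, 24, RATE)
    elastic = on_demand_cost(NODES, JOBS, RATE)
    print(f"Always on: {fixed:.2f}")
    print(f"On demand: {elastic:.2f}")
    print(f"Saved:     {fixed - elastic:.2f}")
```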

- Cluster File Systems

There are multiple scenarios:

- Local NVMe SSD storage that comes with the HPC shape.

- Multi-attach Block volumes deliver up to 2,680 MB/s IO throughput or 700k IOPS.

- You can also install your own parallel file system on top of either the NVMe SSD storage or block storage, depending on your performance requirements. OCI provides scratch and permanent NFS-based (NFS-HA, FSS) or parallel file system (Weka.io, Spectrum Scale, BeeGFS, BeeOND, Lustre, Gluster, Quobyte) solutions; see Explore More. Reach out to the HPC Storage Team to design the optimal solution for your needs.
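When comparing these storage options, it can help to translate throughput into the time needed to read a dataset once. The following minimal sketch uses the 2,680 MB/s multi-attach figure quoted above as an upper bound. The dataset size and function name are hypothetical, and real throughput depends on the access pattern and the number of clients.

```python
def read_time_seconds(dataset_gb: float, throughput_mb_per_s: float) -> float:
    """Time to stream a dataset once at a sustained throughput (1 GB = 1000 MB)."""
    if throughput_mb_per_s <= 0:
        raise ValueError("throughput must be positive")
    return dataset_gb * 1000 / throughput_mb_per_s


if __name__ == "__main__":
    MULTI_ATTACH_MB_PER_S = 2680  # upper bound quoted in the text
    DATASET_GB = 1000             # hypothetical 1 TB training dataset

    seconds = read_time_seconds(DATASET_GB, MULTI_ATTACH_MB_PER_S)
    print(f"One full pass: {seconds:.0f} s ({seconds / 60:.1f} min)")
```

If one pass over the data at this rate takes longer than your training step budget allows, that is a signal to consider local NVMe scratch or a parallel file system instead.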

Deploy

The Terraform code for this reference architecture is available as a sample stack in Oracle Cloud Infrastructure Resource Manager. You can also download the code from GitHub, and customize it to your requirements.

- For Oracle Cloud Infrastructure - High Performance Computing with an RDMA cluster network, deploy by using Oracle Cloud Infrastructure Resource Manager:

- Click

If you aren't already signed in, enter the tenancy and user credentials.

- Review and accept the terms and conditions.

- Select the region where you want to deploy the stack.

- Follow the on-screen prompts and instructions to create the stack.

- After creating the stack, click Terraform Actions, and select Plan.

- Wait for the job to be completed, and review the plan.

To make any changes, return to the Stack Details page, click Edit Stack, and make the required changes. Then, run the Plan action again.

- If no further changes are necessary, return to the Stack Details page, click Terraform Actions, and select Apply.

- For Oracle Cloud Infrastructure - High Performance Computing, deploy using the Terraform code in GitHub:

- Go to GitHub.

- Clone or download the repository to your local computer.

- Follow the instructions in the README document.

Explore More

Learn more about high performance computing and NVIDIA-supported learning applications.

- Deploy high-performance computing (HPC) on Oracle Cloud Infrastructure

- Punch Torino: High-performance computing (HPC) cluster deployment on Oracle Cloud

- Deploy virtual desktop infrastructure (VDI) with high-performance computing (HPC)

- High Performance Computing: OpenFOAM on Oracle Cloud Infrastructure

- High Performance Computing: LS-DYNA on Oracle Cloud Infrastructure

- High Performance Computing: Ansys Fluent on Oracle Cloud Infrastructure

- Deploy a scalable, distributed file system using GlusterFS

- Deploy a scalable, distributed file system using Lustre

- Deploy a high-performance storage cluster using IBM Spectrum Scale

- Deploy the BeeGFS parallel file system

Review these additional resources for NVIDIA-supported learning applications: