Configure for Disaster Recovery

Note:

This solution assumes that both Kubernetes clusters, including the control plane and worker nodes, already exist.

Plan the Configuration

kubectl, to run commands against them.

Note:

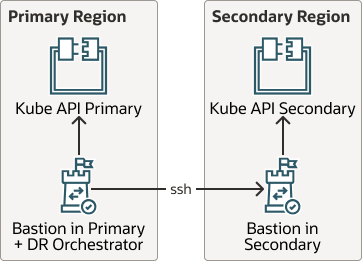

This solution assumes that both Kubernetes clusters, including the control plan and worker nodes, already exist. The recommendations and scripts provided in this playbook do not check resources, control plane, or worker node configuration.The following diagram shows that when configured, you can restore the artifact snapshot in completely different Kubernetes clusters.


Complete the following requirements for Restore when planning your configuration:

Verify

After you run the maak8DR-apply.sh script, verify that all of the artifacts that existed in the primary cluster have been replicated to the secondary cluster. Check the secondary cluster and confirm that the pods in the secondary site are running without errors.
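One way to check the pods in the secondary site is to filter the output of kubectl for pods that are not healthy. The following is a minimal sketch, not part of the playbook scripts: the function name unhealthy_pods and the context name "secondary" are assumptions made for illustration. It expects the column layout printed by kubectl get pods --all-namespaces --no-headers (NAMESPACE, NAME, READY, STATUS, RESTARTS, AGE).

```shell
# Sketch: print every pod that is not healthy, one per line, in the form
#   <namespace>/<name> <status> <ready>
# Input on stdin: output of `kubectl get pods --all-namespaces --no-headers`.
unhealthy_pods() {
  awk '{
    # Pods from finished jobs report Completed and are not errors.
    if ($4 == "Completed") next
    # READY looks like "1/2": containers ready / containers total.
    split($3, ready, "/")
    # Flag pods that are not Running, or Running with containers not ready.
    if ($4 != "Running" || ready[1] != ready[2])
      print $1 "/" $2 " " $4 " " $3
  }'
}

# Example usage (the context name "secondary" is hypothetical):
#   kubectl --context secondary get pods --all-namespaces --no-headers | unhealthy_pods
```

Empty output means that every pod is either Running with all containers ready or Completed. This check covers only pod status. It does not confirm that every artifact from the primary cluster is present in the secondary cluster.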

When you run the maak8DR-apply.sh script, the framework creates the working_dir directory as /tmp/backup.date. When you run the maak8-get-all-artifacts.sh and maak8-push-all-artifacts.sh scripts individually, the working directory is provided in each case as a command-line argument.
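Because the working directory name includes a date suffix, it can be convenient to locate the most recent one before you inspect its contents or pass it to another script. The following is a minimal sketch under stated assumptions: the function name latest_backup_dir is hypothetical, and the function sorts by modification time so that it does not depend on the exact format of the date suffix, which is not specified here.

```shell
# Sketch: print the most recently modified backup.* directory under a base
# directory (default: /tmp). Prints nothing if no such directory exists.
latest_backup_dir() {
  base="${1:-/tmp}"
  # The trailing slash in the pattern restricts matches to directories.
  # ls -t sorts newest first and -d lists the directories themselves;
  # errors are silenced for the case where nothing matches.
  ls -td "$base"/backup.*/ 2>/dev/null | head -n 1
}

# Example usage:
#   WORKING_DIR=$(latest_backup_dir)
#   echo "Most recent working directory: $WORKING_DIR"
```

The printed path ends with a trailing slash. If several runs have left directories under /tmp, confirm that the one returned belongs to the run you intend to use.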