Design a Microservices-Based Application

When you design an application by using the microservices architecture, consider adhering to the microservices best practices and the 12 patterns for success in developing microservices. These patterns incorporate the 12-factor app considerations and build on experience with microservices deployments alongside a converged database.

Learn the Best Practices for Designing Microservices

By following certain best practices when designing microservices, you can ensure that your application is easy to scale, deploy, and maintain. Note that not all of the best practices discussed here might be relevant to your application.

Each microservice must implement a single piece of the application’s functionality. The development teams must define the limits and responsibilities of each microservice. One approach is to define a microservice for each frequently requested task in the application. An alternative approach is to divide the functionality by business tasks and then define a microservice for each area.

Consider the following requirements in your design:

- Responsive microservices: The microservices must return a response to the requesting clients, even when the service fails.

- Backward compatibility: As you add or update the functionality of a microservice, the changes in the API methods and parameters must not affect the clients. The REST API must remain backward-compatible.

- Flexible communication: Each microservice can specify the protocol that must be used for communication between the clients and the API gateway and for communication between the microservices.

- Idempotency: If a client calls a microservice multiple times with the same request, then the outcome must be the same as if the client had called it once.

- Efficient operation: The design must facilitate easy monitoring and troubleshooting. A log system is commonly used to implement this requirement.
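The idempotency requirement above can be sketched as follows. This is a minimal illustration, not a prescribed implementation: the `PaymentService` class, the `charge` method, and the use of a client-supplied idempotency key are all assumptions introduced for the example, and a real service would keep the stored responses in its database rather than in memory.

```python
from dataclasses import dataclass, field


@dataclass
class PaymentService:
    """Hypothetical service that deduplicates requests by idempotency key."""

    balance: int = 100
    # Maps each idempotency key to the response already returned for it.
    _responses: dict = field(default_factory=dict)

    def charge(self, idempotency_key: str, amount: int) -> dict:
        # A repeated key means the client retried the same request:
        # return the stored response instead of charging a second time.
        if idempotency_key in self._responses:
            return self._responses[idempotency_key]

        self.balance -= amount
        response = {"status": "charged", "balance": self.balance}
        self._responses[idempotency_key] = response
        return response


service = PaymentService()
first = service.charge("req-001", 30)
retry = service.charge("req-001", 30)  # same key, so no second charge
```

Because the retry reuses the key `req-001`, both calls return the same response and the balance is reduced only once.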

Understand the 12 Patterns for Success in Microservices

Microservices are hard to implement, and these 12 patterns make it easier to harness the agility and simplicity advantages of a microservices architecture by using a converged database platform alongside containers and Kubernetes.

- Bounded contexts: Design it upfront, or break monoliths into microservices with a data refactoring advisor.

- Loose coupling: Decouple data by isolating schema to the microservice, and using a reliable event mesh.

- CI/CD for microservices: A multi-tenant database is a natural fit for microservices. Add a pluggable database (PDB) for each microservice so that it can be built and deployed independently. Jenkins for the application, and Liquibase, Flyway, and edition-based redefinition (EBR) in the database for the schema, are also good choices.

- Security for microservices: Secure each endpoint from API gateway, to load balancer, to event mesh, to database.

- Unified observability for microservices: Bring metrics, logs, and traces into a single dashboard for tuning and self-healing.

- Transactional outbox: Send a message and perform a data manipulation operation in a single local transaction.

- Reliable event mesh: Use an event mesh for all events, with high-throughput transactional messaging, publish/subscribe, event transformations, and event routing.

- Event aggregation: Events are ephemeral and notify or trigger real-time action; after which they’re aggregated into the database – the database is the ultimate compacted topic.

- Command query responsibility segregation (CQRS): Operational and analytical copies of data are available for microservices.

- Sagas: Transactions across microservices, with support from event mesh and escrow journaling in the database.

- Polyglot programming: Support for microservices and message formats in a variety of languages, using JSON as the payload.

- Back-end as a service (BaaS): A microservices infrastructure for Spring Boot apps, right-sized for test, dev, and production deployments (small to medium) on any cloud or on-premises.
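As an illustration of one of these patterns, the transactional outbox can be sketched with a local database transaction. This is a simplified sketch under stated assumptions: an in-memory SQLite database stands in for the converged database, and the `orders` and `outbox` tables, the `place_order` and `relay_pending` functions, and the `OrderPlaced` event are hypothetical names chosen for the example.

```python
import json
import sqlite3

# In-memory SQLite stands in for the service's private database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, item TEXT)")
conn.execute(
    "CREATE TABLE outbox ("
    "id INTEGER PRIMARY KEY, payload TEXT, published INTEGER DEFAULT 0)"
)


def place_order(conn, item):
    # `with conn` commits both inserts together, or rolls both back
    # if either statement raises an exception.
    with conn:
        cur = conn.execute("INSERT INTO orders (item) VALUES (?)", (item,))
        order_id = cur.lastrowid
        event = {"type": "OrderPlaced", "order_id": order_id, "item": item}
        conn.execute(
            "INSERT INTO outbox (payload) VALUES (?)", (json.dumps(event),)
        )
    return order_id


def relay_pending(conn, publish):
    # A separate relay step reads unpublished rows, hands each one to
    # the messaging layer, and then marks it as published.
    rows = conn.execute(
        "SELECT id, payload FROM outbox WHERE published = 0 ORDER BY id"
    ).fetchall()
    for row_id, payload in rows:
        publish(json.loads(payload))
        with conn:
            conn.execute(
                "UPDATE outbox SET published = 1 WHERE id = ?", (row_id,)
            )
    return len(rows)


sent = []  # stands in for a message broker
order_id = place_order(conn, "book")
relayed = relay_pending(conn, sent.append)
```

Because the relay publishes before it marks a row as published, a failure between the two steps causes the message to be sent again on the next run. Consumers of such a relay therefore benefit from being idempotent.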

Start with a Bounded Context Pattern

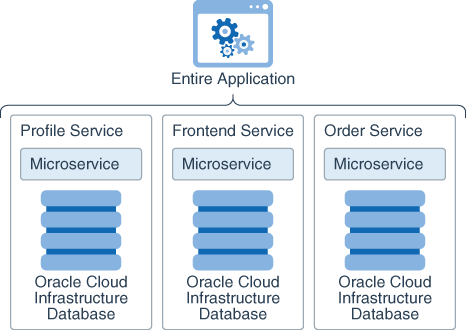

The recommended pattern to implement persistence for a microservice is to use a single pluggable database (PDB) in a container database. For each microservice, keep the persistent data private, and create the pluggable database as a part of the microservice implementation.

In this pattern, the private persistent data is accessible through only the microservice API.

The following illustration shows the persistence design for microservices.


- Private tables: Each service owns a set of tables, or documents.

- Schema: Each service owns a private database schema, or collection.

- Database: Each service owns a pluggable database within a container database, as shown in the illustration.

A persistence anti-pattern for your microservices is to share one database schema across multiple microservices. The advantages of this anti-pattern are that it uses a single, simple database and that you can rely on atomic, consistent, isolated, and durable (ACID) transactions for data consistency. The disadvantages are that the microservices might interfere with each other while accessing the database, and that development cycles may slow down because developers of different microservices need to coordinate schema changes, which also increases inter-service dependencies.

Your microservices can connect to an Oracle Database instance that is running on Oracle Cloud Infrastructure. The Oracle multi-tenant database supports multiple pluggable databases (PDBs) within a container database. This is the best choice for the persistence layer for microservices because it provides bounded-context isolation of data, security, and high availability. In many cases, you can use fewer PDBs and rely on schema-level isolation instead.

Understand the Value of Deploying Microservices in Containers

After you build your microservice, you must containerize it. A microservice running in its own container doesn’t affect the microservices deployed in the other containers.

A container is a standardized unit of software that is used to develop, ship, and deploy applications.

Containers are managed using a container engine, such as Docker. The container engine provides the tools that are necessary to bundle all the application dependencies as a container.

You can use the Docker engine to create, deploy, and run your microservices applications in containers. Microservices running in Docker containers have the following characteristics:

- Standard: The microservices are portable. They can run anywhere.

- Lightweight: Docker shares the operating system (OS) kernel, doesn’t require an OS for each instance, and runs as a lightweight process.

- Secure: Each container runs as an isolated process, which keeps the microservices isolated from one another.

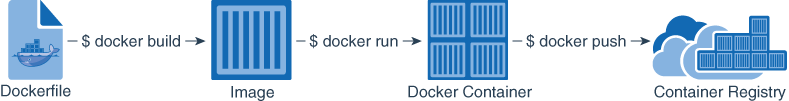

The process of containerizing a microservice involves creating a Dockerfile, creating and building a container image that includes its dependencies and the environmental configuration, deploying the image to a Docker engine, and uploading the image to a container registry for storage and retrieval.
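The build, run, and upload steps described above can be sketched as a sequence of `docker` CLI commands. The following example only assembles and prints the commands; it doesn't run them. It assumes that a Dockerfile already exists in the build context, and the image name `orders-service`, the tag `1.0.0`, and the registry path `registry.example.com/demo` are hypothetical placeholders.

```python
import shlex


def container_commands(image, tag, registry, context="."):
    """Return docker CLI commands for the build, run, tag, and push steps."""
    local_ref = f"{image}:{tag}"
    remote_ref = f"{registry}/{image}:{tag}"
    return [
        # Build the image from the Dockerfile in the build context.
        ["docker", "build", "-t", local_ref, context],
        # Run the image in the local Docker engine, detached.
        ["docker", "run", "-d", local_ref],
        # Give the image a name that includes the registry path.
        ["docker", "tag", local_ref, remote_ref],
        # Upload the image to the container registry.
        ["docker", "push", remote_ref],
    ]


commands = container_commands(
    "orders-service", "1.0.0", "registry.example.com/demo"
)
for cmd in commands:
    print(shlex.join(cmd))
```

To execute the steps rather than print them, you could pass each command list to `subprocess.run(cmd, check=True)` on a machine where the Docker engine is installed.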


Learn About Orchestrating Microservices Using Kubernetes

The microservices that are running in containers must be able to interact and integrate to provide the required application functionality. This integration can be achieved through container orchestration.

Container orchestration enables you to start, stop, and group containers in clusters. It also enables high availability and scaling. Kubernetes is one of the container orchestration platforms that you can use to manage containers.

After you containerize your microservices, you can deploy them to OCI Kubernetes Engine.

Before you deploy your containerized microservices application to the cloud, you must deploy and test it in a local Kubernetes engine, as follows:

- Create your microservices application.

- Build Docker images, to containerize your microservices.

- Run your microservices in your local Docker engine.

- Push your container images to a container registry.

- Deploy and run your microservices in a local Kubernetes engine, such as Minikube.

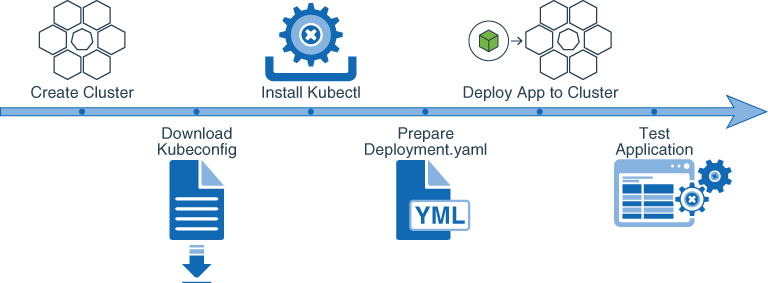

After testing the application in a local Kubernetes engine, deploy it to OCI Kubernetes Engine as follows:

- Create a cluster.

- Download the `kubeconfig` file.

- Install the `kubectl` tool on a local device.

- Prepare the `deployment.yaml` file.

- Deploy the microservice to the cluster.

- Test the microservice.
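As a rough sketch of what the deployment file in the steps above might contain, the following example builds a minimal Kubernetes `apps/v1` Deployment as a dictionary and prints it as JSON, a format that `kubectl apply -f` accepts in addition to YAML. The service name, image reference, replica count, and port are hypothetical values chosen for illustration, and a real manifest typically also needs settings such as resource limits and image pull secrets.

```python
import json


def deployment_manifest(name, image, replicas=2, port=8080):
    """Build a minimal apps/v1 Deployment as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


manifest = deployment_manifest(
    "orders-service", "registry.example.com/demo/orders-service:1.0.0"
)
print(json.dumps(manifest, indent=2))
```

Generating the manifest from code keeps the selector and the pod template labels in sync, which is a common source of errors in hand-written manifests.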

The following diagram shows the process for deploying a containerized microservices application to OCI Kubernetes Engine.


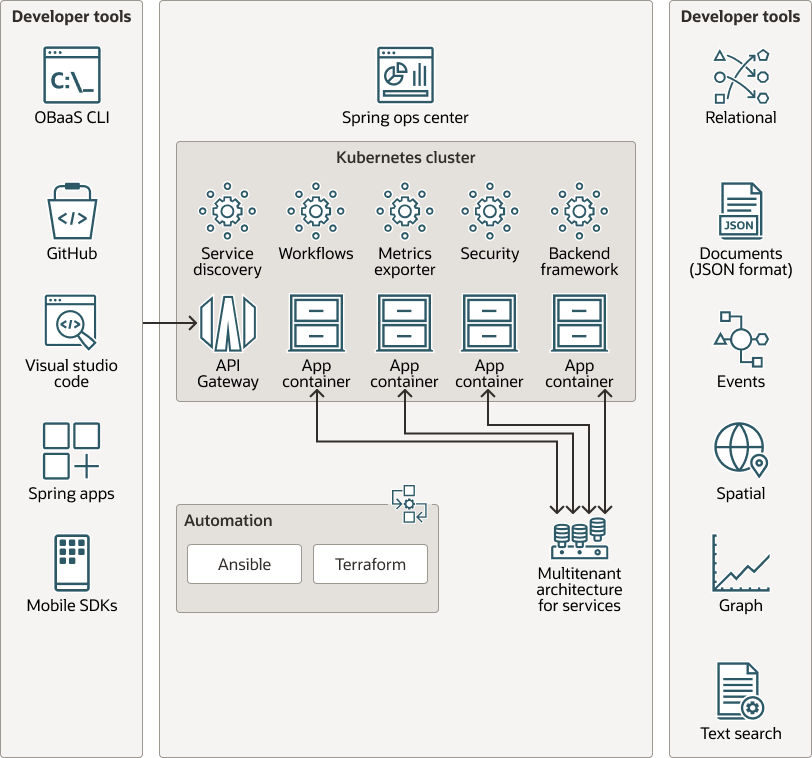

Learn About Oracle Backend for Spring Boot and Microservices

Spring Boot is the most popular framework for building microservices in Java. One of the 12 patterns for success in deploying a microservices architecture is the Backend as a Service (BaaS) pattern. Oracle BaaS is available on the OCI Marketplace, and you can deploy it by using Terraform and Ansible within 30 minutes. Oracle BaaS provides a set of platform services that are required to deploy and operate microservices and that are integral to the Spring Boot environment. Details of the Oracle Backend for Spring Boot and Microservices platform are illustrated in the diagram below.