Replication Technologies

There are multiple technologies to replicate file system contents: storage-level replica technologies, operating system tools, and other product-specific features.

This section covers the following technologies available in OCI for replicating the mid-tier file system: OCI Block Volumes replication and OCI File Storage replication (storage-level replica technologies), rsync (an operating system tool), and Database File System (DBFS), which is an Oracle Database-specific feature.

The RTO and RPO values are different for each technology. The RTO is determined by the time it takes to enable the storage and make it accessible for the applications. The RPO is determined by the frequency of replication allowed by each technology.

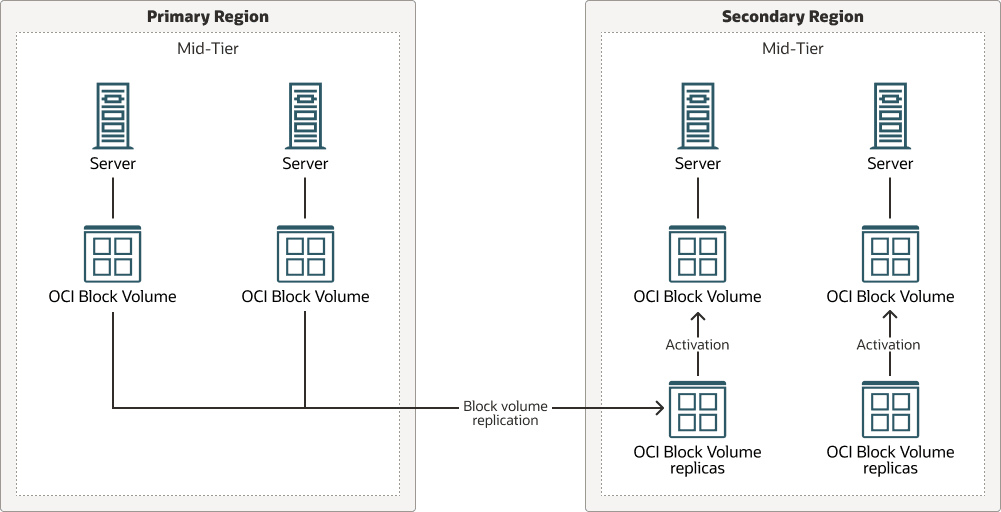

About OCI Block Volumes Replication

When you enable replication for a volume or volume group, the process starts with an initial sync of the data from the source to the replica. After the initial synchronization is complete, replication is continuous. The typical Recovery Point Objective (RPO) target is less than 30 minutes for replication across regions; however, the RPO can vary depending on the change rate of data on the source volume.

The block volume replica artifacts can’t be directly mounted. To mount a replicated block volume, you need to activate its replica (or the volume group replica when it is replicated within a group). The activation process creates a new volume by cloning the replica, which you can mount as a regular block volume. The RTO of this technology is directly related to the time required to perform this operation (usually 5 to 10 minutes, although it can vary depending on the number of nodes and whether you perform the actions in parallel). In failover situations, these steps can cause additional operational overhead and increase the total RTO. In a planned switchover, however, you can perform these operations before stopping the primary system, so they don’t incur downtime or increase the total RTO.
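As a rough illustration of how parallelism affects this part of the RTO, the following Python sketch estimates the total activation time. It assumes that every activation takes the same time and that parallel activations don't slow each other down. The function name and the sample values are hypothetical, not OCI measurements.

```python
import math


def estimated_activation_minutes(replicas: int,
                                 minutes_per_activation: float,
                                 parallel_workers: int = 1) -> float:
    """Estimate the time to activate `replicas` volume replicas.

    Assumption: activations run in batches of `parallel_workers`,
    and each batch takes `minutes_per_activation`.
    """
    if replicas < 0 or parallel_workers < 1:
        raise ValueError("replicas must be >= 0 and parallel_workers >= 1")
    batches = math.ceil(replicas / parallel_workers)
    return batches * minutes_per_activation


if __name__ == "__main__":
    # Hypothetical system with 4 replicas, using the 10-minute upper bound.
    print(estimated_activation_minutes(4, 10, parallel_workers=1))
    print(estimated_activation_minutes(4, 10, parallel_workers=4))
```

Under these assumptions, activating the replicas of all nodes in parallel keeps this step close to the time of a single activation.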

This replication doesn’t require specific connectivity between the source and destination; however, they must be listed in the Source and Destination Region Mappings for Block Volume replication.

Note:

OCI Block Volumes are normally used privately: each compute instance has read-write access on its own block volumes. Although you can attach a volume to more than one compute instance at a time, it requires an additional cluster-aware solution to prevent data corruption from uncontrolled read/write operations with multiple instance volume attachments. Hence, when an application needs to share files between nodes, it uses an OCI File Storage file system instead, which is a network file system.

About OCI File Storage Replication

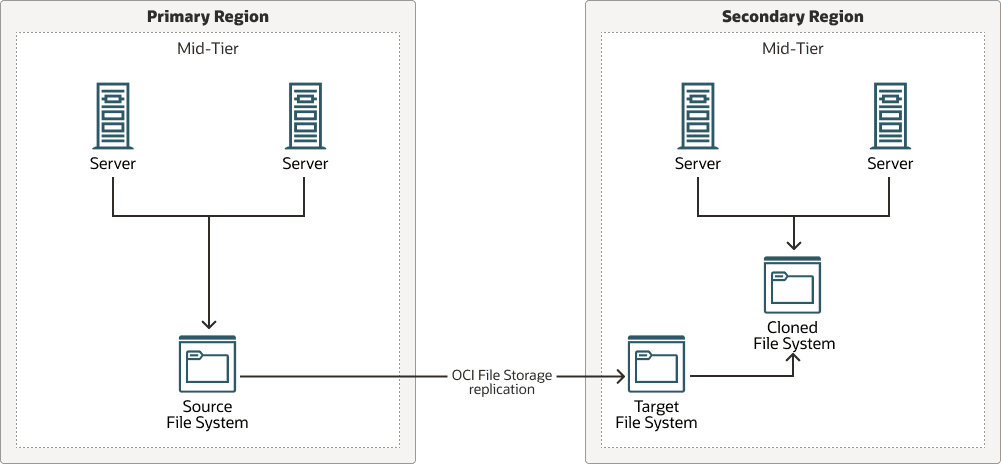

When you enable replication for an OCI File Storage file system, you select a target file system and define how often the data is replicated. The replication feature creates a special replication snapshot in the source file system. Then, it transfers the snapshot to the target, which writes the new data to the target file system. The last completed replication snapshot remains in both the source and target file systems until the next interval. At the next interval, the replication process automatically deletes the old replication snapshots and creates a new one. The replication process continues at the specified interval as long as the replication is in effect. The minimum replication interval is 15 minutes, which defines the minimum RPO for this technology.

The target file system is a file system that has never been exported, so it is marked as “targetable”. While the replication is enabled, the target file system is read-only and updated only by replication. To export and mount a replicated file system, you need to clone it.

Then you can export and mount the cloned file system. The RTO of this technology is directly related to the time required to perform this operation (normally less than 5 minutes to clone, export, and mount a file system, but can vary depending on the number of nodes and if you perform the actions in parallel). In failover situations, these steps can cause additional operational overhead and increase the total RTO. In a planned switchover, however, you can perform these operations before stopping the primary system, so they don’t incur downtime or increase the total RTO.

This replication doesn’t require any specific connectivity between primary and secondary sites; however, they must be in the list of Recommended Target Regions for OCI File Storage (OCI FS) replication.

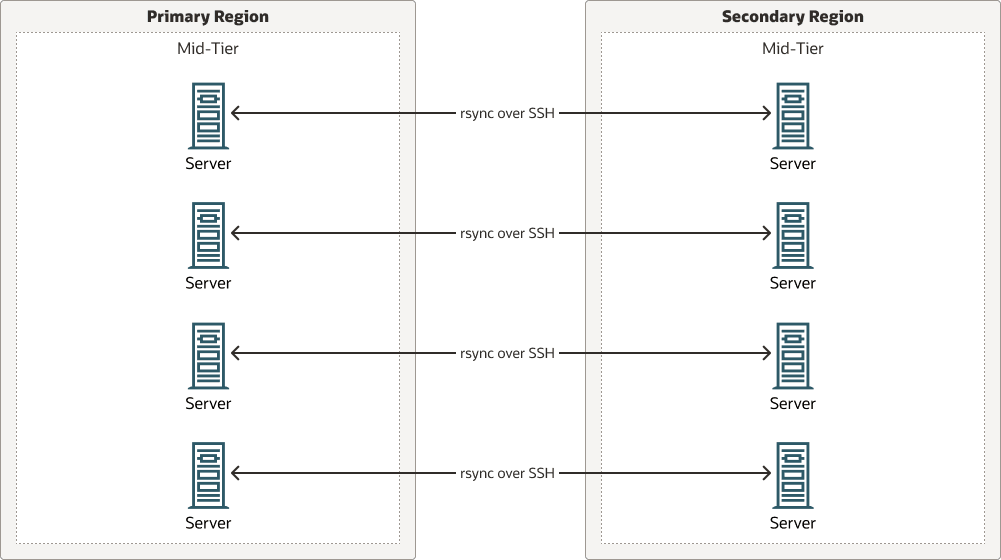

About the Remote Sync (rsync) Utility

The rsync utility enables you to transfer and synchronize files between a host and a storage drive, and across hosts, by comparing the modification times and file sizes. When used with SSH, you can synchronize files and directories between two different systems with minimal network usage.

To use this technology, you're responsible for creating and running the rsync scripts. The scripts must use the appropriate rsync commands to replicate the mid-tier folders, such as the configuration or products folders. The RPO for this technology depends on the frequency of the rsync replica scripts.

When using rsync as replication technology, the storage is already mounted both in primary and secondary, so no time is required during switchover to mount the storage in secondary. This technology does not increase the RTO of the system during switchovers or failovers.

The rsync command provides options that give you control over the copy operation. For example, the --exclude option skips specific files and folders from the copy. The --delete flag keeps the destination an exact copy by deleting the files that no longer exist in the source. The --checksum flag forces a full checksum comparison on every file present on both systems. Since rsync is an operating system command, you can copy files and folders regardless of whether they reside in a Block Volume or an NFS mount, and even if the underlying storage differs between primary and standby.
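The following Python sketch shows one way to assemble an rsync command line that combines these options. The function name, host name, and paths are hypothetical. The rsync flags used (-a, -z, -e, --delete, --checksum, --dry-run, --exclude) are standard rsync options. The script only builds and prints the command; to run it, pass the list to `subprocess.run`.

```python
import shlex
from typing import Iterable, List


def build_rsync_command(source_dir: str,
                        remote_host: str,
                        dest_dir: str,
                        excludes: Iterable[str] = (),
                        delete: bool = True,
                        checksum: bool = False,
                        dry_run: bool = False) -> List[str]:
    """Build an rsync-over-SSH command as an argument list."""
    # -a preserves permissions, times, and links; -z compresses in transit.
    cmd = ["rsync", "-a", "-z", "-e", "ssh"]
    if delete:
        cmd.append("--delete")      # remove files that are gone from the source
    if checksum:
        cmd.append("--checksum")    # compare file contents, not size and mtime
    if dry_run:
        cmd.append("--dry-run")     # report what would change without copying
    for pattern in excludes:
        cmd.append(f"--exclude={pattern}")
    # A trailing slash on the source copies the folder contents,
    # not the folder itself.
    cmd.append(source_dir.rstrip("/") + "/")
    cmd.append(f"{remote_host}:{dest_dir.rstrip('/')}/")
    return cmd


if __name__ == "__main__":
    # Hypothetical mid-tier configuration folder and standby host.
    command = build_rsync_command("/u01/config", "oracle@standby-host",
                                  "/u01/config", excludes=["*.log", "tmp/"])
    print(shlex.join(command))
```

Running the command first with `dry_run=True` is a cautious way to check what --delete would remove at the destination.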

This technology requires network connectivity between primary and secondary regions, more specifically between the host running rsync commands and the remote hosts it connects to. OCI has evolved through the years and provides direct communication between regions with remote peering and Dynamic Routing Gateways. This allows communication using private IP addresses, without routing the traffic over the internet or through your on-premises network. This has made the rsync solution reliable and secure enough to be used as a valid replication approach across regions.

The rsync technology allows flexibility in its implementation because the user is responsible for creating the rsync scripts. You can choose between different approaches:

- Peer-to-peer

In this model, the copy is done directly from each host to its remote peer. Each node has SSH connectivity to its peer and uses rsync commands over SSH to replicate the primary system. This is easy to set up and does not need additional hardware. However, it requires maintenance across many nodes since scripts are not centralized. That is, large clusters add more complexity to the solution.

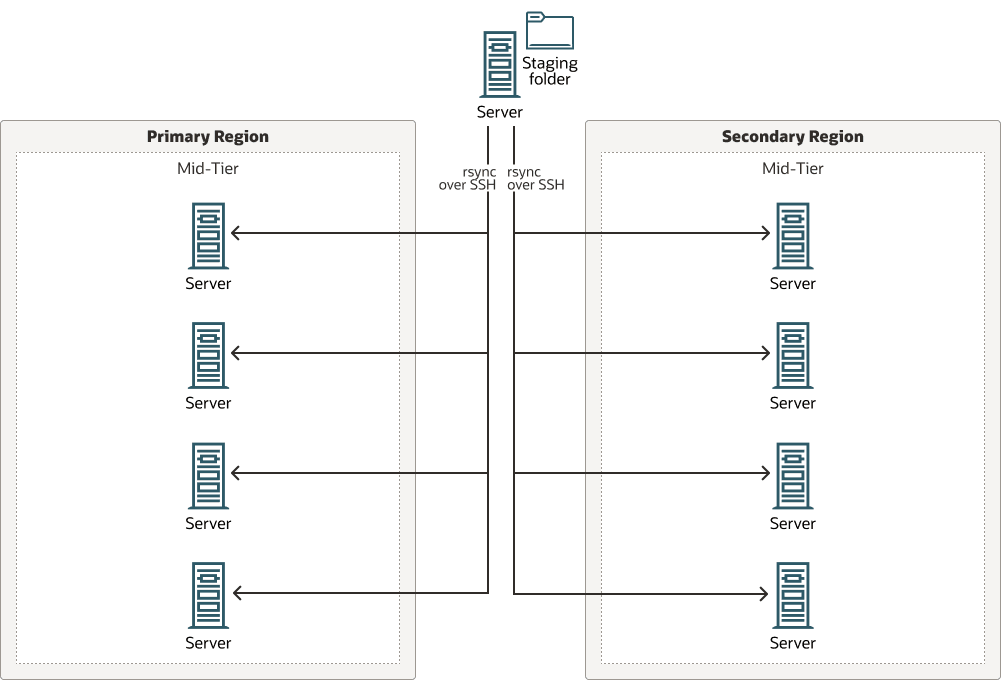

- Central staging location

In this model, a node acts as a coordinator. It connects to each host that needs to be replicated and copies the contents to a common staging location. This node also coordinates the copy from the staging location to the destination hosts. This approach offloads the individual nodes from the overhead of the copies.

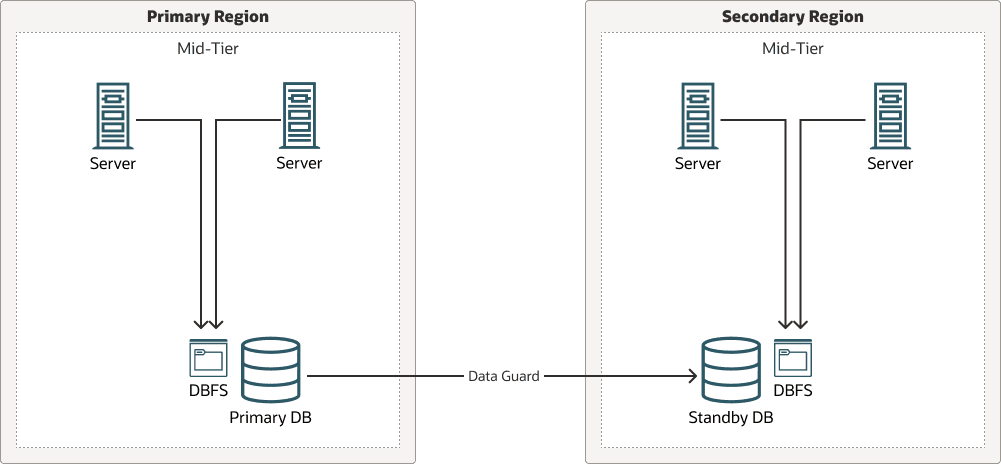
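The difference between the two approaches can be sketched in Python as the list of rsync commands each one implies. This is a minimal sketch with hypothetical function names, host names, and paths. It assumes that the staging folders already exist on the coordinator. The central staging plan uses two commands per host because rsync cannot copy directly between two remote hosts.

```python
from typing import Dict, List

RSYNC = ["rsync", "-a", "--delete", "-e", "ssh"]


def peer_to_peer_plan(peers: Dict[str, str], folder: str) -> Dict[str, List[str]]:
    """Map each primary host to the command that it runs itself."""
    return {
        primary: RSYNC + [f"{folder}/", f"{standby}:{folder}/"]
        for primary, standby in peers.items()
    }


def central_staging_plan(peers: Dict[str, str], folder: str,
                         staging_root: str) -> List[List[str]]:
    """List the commands that the coordinator node runs, in order."""
    plan = []
    for primary, standby in peers.items():
        stage = f"{staging_root}/{primary}{folder}"
        # Step 1: pull from the primary host into the staging location.
        plan.append(RSYNC + [f"{primary}:{folder}/", f"{stage}/"])
        # Step 2: push from the staging location to the standby host.
        plan.append(RSYNC + [f"{stage}/", f"{standby}:{folder}/"])
    return plan


if __name__ == "__main__":
    # Hypothetical primary-to-standby host mapping.
    hosts = {"wls1-primary": "wls1-standby", "wls2-primary": "wls2-standby"}
    for host, cmd in peer_to_peer_plan(hosts, "/u01/config").items():
        print(host, "runs:", " ".join(cmd))
    for cmd in central_staging_plan(hosts, "/u01/config", "/staging"):
        print("coordinator runs:", " ".join(cmd))
```

In the peer-to-peer plan, every host keeps its own script. In the central staging plan, all commands live on the coordinator, at the cost of a second copy step and extra storage for the staging location.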

About Database File System

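Oracle Database File System (DBFS) stores files in database tables and presents them as a regular file system, which hosts mount with the dbfs_client utility.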

Note:

The DBFS feature is not available when the database is an Oracle Autonomous Database.

When Oracle Data Guard is configured for the database, the DBFS contents in the primary are automatically replicated to the standby database. Any folder or file that you place in the DBFS folder is available in the secondary site, and the secondary hosts can mount it if the database is open in read-only mode or if it is converted to the snapshot standby mode.

However, Oracle doesn’t recommend storing the mid-tier artifacts (such as the mid-tier configuration or the products) in a DBFS mount directly. That would make the middle tier dependent on the DBFS infrastructure (database client, database, FUSE libraries, and so on). You can use a DBFS mount as an intermediate staging folder to store a copy of the folder that you want to replicate.

To use this technology, you're responsible for creating and running scripts to copy the mid-tier folders, such as the configuration folders, to and from the DBFS staging folder. The RPO for this technology depends on the frequency of these scripts.
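A minimal Python sketch of such copy scripts follows. It assumes that the DBFS file system is already mounted on the host (on the standby, this requires the database to be open in read-only mode or converted to snapshot standby). The function names and paths are hypothetical, and `shutil.copytree` is used here only as one possible copy mechanism. This simple copy does not remove files that were deleted from the source.

```python
import shutil
from pathlib import Path


def copy_to_staging(local_folder: Path, dbfs_staging: Path) -> Path:
    """Primary side: copy a mid-tier folder into the DBFS staging folder."""
    target = dbfs_staging / local_folder.name
    shutil.copytree(local_folder, target, dirs_exist_ok=True)
    return target


def copy_from_staging(dbfs_staging: Path, local_folder: Path) -> Path:
    """Standby side: copy the staged folder back to local storage."""
    source = dbfs_staging / local_folder.name
    shutil.copytree(source, local_folder, dirs_exist_ok=True)
    return local_folder


if __name__ == "__main__":
    # Hypothetical DBFS mount point and configuration folder.
    staging = Path("/u02/data/dbfs_root/dbfsdir")
    config = Path("/u01/config")
    if staging.is_dir() and config.is_dir():
        print("Staged copy at", copy_to_staging(config, staging))
```

You would schedule the first function on the primary hosts and the second on the standby hosts. The interval between runs then determines the RPO.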

Since the DBFS mount is not directly used to store the mid-tier artifacts, the real storage is already mounted both in primary and standby, so no time is required during switchover to mount the storage in standby. This technology does not increase the RTO of the system during switchovers or failovers.

This technology requires the database client on the mid-tier hosts. Depending on the implementation, this method may also require SQL*Net connectivity between the hosts and the remote databases for database operations such as role conversions.