Learn How OCI AI and ML Enhance the Patient Experience

As with any business, healthcare needs the ability to access, analyze, manipulate, and store large volumes of data. This kind of data processing is central to the technologies behind the Oracle Cloud Infrastructure Lakehouse (data lakehouse), which facilitates artificial intelligence (AI) and machine learning (ML) to enhance patient experience.

The setup of a healthcare system, in terms of patient experience, should consider:

- Getting accurate patient information to clinicians as quickly as possible, with an ease that removes unnecessary burdens, allowing the clinician to spend as much time on the patient’s case as possible.

- Getting the patient from symptoms to recovery as quickly as possible.

- Ensuring the process is as cost-efficient as possible, regardless of whether the cost is taken on by the patient, their medical insurance, or a public health service (e.g., National Health Service in the UK).

For example, a general practitioner (GP) or primary care provider (PCP) believes their patient is exhibiting signs of pneumonia. They refer the patient to a medical center (hospital or imaging center) for a chest x-ray to investigate whether pneumonia or something else is causing the patient’s symptoms. The first step in this process is to set up an appointment with the medical center and share the patient’s electronic medical record (EMR) or electronic health record (EHR). Ideally, this data would be incorporated in the data lakehouse.

The radiology staff at the medical center would take the x-rays, but from that point forward, the data lakehouse and related technologies play an active role in the diagnosis process. The x-ray image is created using the radiology information system (RIS) and stored using either the picture archiving and communication system (PACS) or a newer generation of data storage such as the Vendor Neutral Archive (VNA). The images are likely stored using a medical imaging format such as Digital Imaging and Communications in Medicine (DICOM), which stores a very high-resolution image with its associated metadata, such as details about where and when the image was generated and the type of modality (how the image was captured). This metadata is linked to the correct patient and their records.

Once images are captured, a clinician reviews and interprets each one to determine the clinical needs of the patient. This is a time-consuming process, often resulting in the patient awaiting further information at home.

This playbook focuses on how a data lakehouse participates in solutions that deliver the outcomes we’ve described. It covers the systems involved in one use case at a regional hospital and calls out related areas, but doesn’t go into solution detail.

Architecture

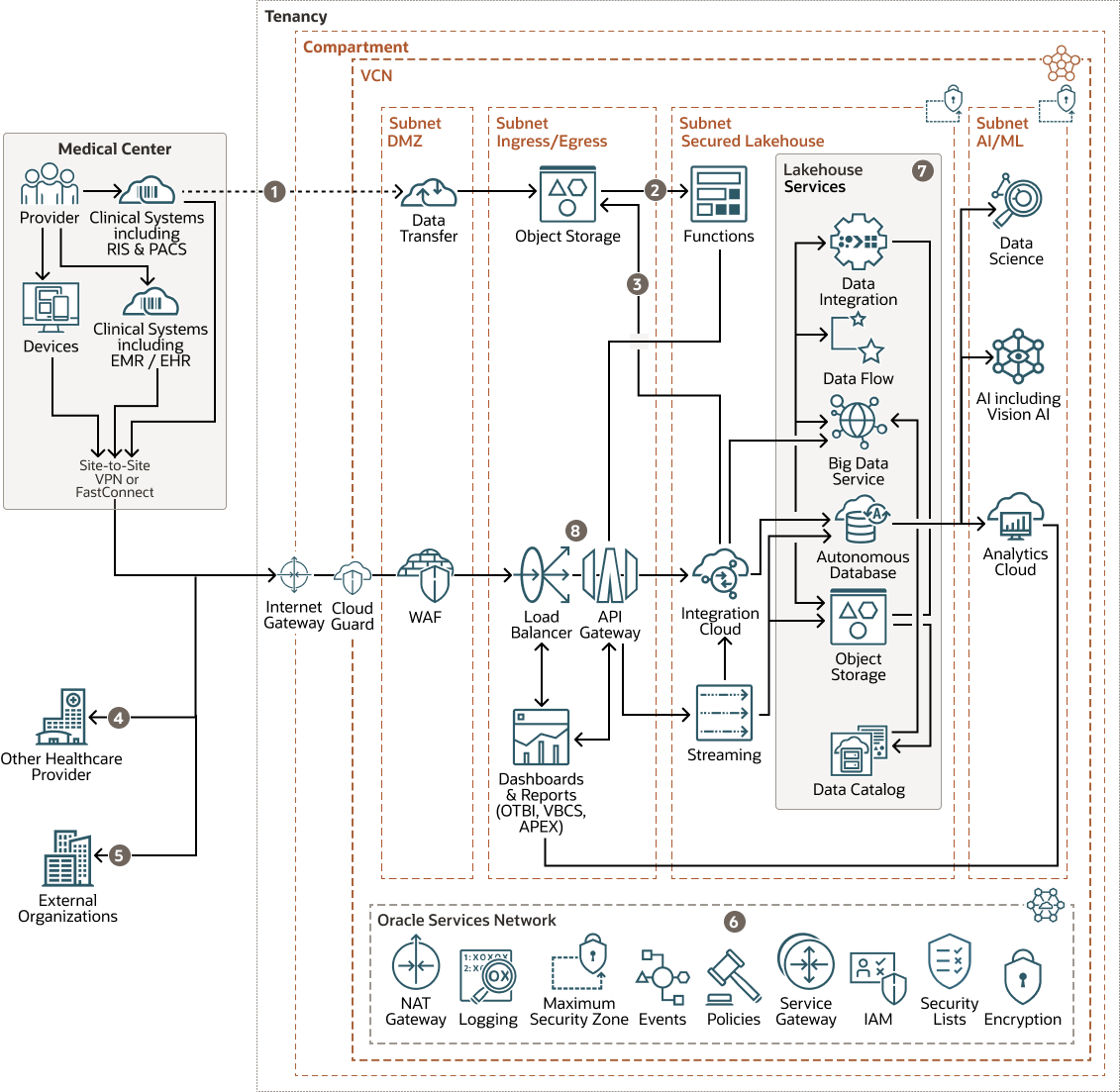

This architecture shows a medical center accessing and using an OCI data lakehouse.

- The initial setup would likely require using the Data Transfer Service as an efficient, cost-effective, and quick way to migrate all the existing data from the PACS solution to Oracle Cloud Infrastructure (OCI) for processing. If connectivity performance for ongoing operations proves problematic, this could be extended to daily transfers. In addition to the PACS data, this may be a means to bulk ship patient records and associated information.

- Additional data sources could include other healthcare providers, such as the patient's GP or PCP, that collaborate on sharing EMRs.

- Functions are provided to perform complex data manipulations, such as extracting the metadata from a DICOM image. These processes are too complex to be handled effectively within the persistence tools and business logic. Keeping them in functions means we can stitch in new logic each time a new complex unstructured data object, such as a new type of image, is introduced.

- Data in and out of the services supports the sharing of patient EHR/EMR.

- As mentioned in the scenario, the more data related to the use cases that can be sourced, such as additional information on patients, the more insights can be achieved. For example, the CDC data set Social Determinants of Health (SDOH) provides APIs for socioeconomic indicators for various health conditions.

- This reflects the number of services used directly or indirectly to support security and platform and solution operability.

- Data lakehouse services are grouped. Depending on the specific use case, not all services may be needed.

- Integration is presumed to be primarily web service-centric, as third parties will conceal how their data is handled. This also reflects the direction of standards development such as FHIR.

As the diagram shows, the data flowing between the medical center and the OCI environment is secured through a VPN and guaranteed in terms of performance using Oracle Cloud Infrastructure FastConnect (FastConnect), despite the high volume of the imaging data moving back and forth.

The PACS or VNA solution can be integrated in several ways depending on the capabilities of the PACS solution, from simply exposing the PACS storage to a restricted FTP server that can be regularly polled (e.g., every 10 to 15 minutes), to API specifications aligned with the DICOM Communication Model for Message Exchange. We can see in the architecture diagram that using OCI capabilities to displace on-premises PACS is also a possibility.
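The simplest integration option above, regular polling of exposed PACS storage, can be sketched as follows. This is a minimal illustration only: it assumes the exposed storage is visible as a local directory, and the function name `find_new_images`, the `.dcm` suffix, and the in-memory `seen` set are all hypothetical choices rather than part of any PACS or OCI API.

```python
from pathlib import Path


def find_new_images(drop_dir: Path, seen: set, suffix: str = ".dcm") -> list:
    """Return image files in drop_dir that were not present on earlier polls.

    `seen` holds the names of files already handed on for processing.
    It is updated in place so that the next poll skips them.
    """
    new_files = []
    # Sort by name so that each poll returns files in a predictable order.
    for path in sorted(drop_dir.glob(f"*{suffix}")):
        if path.name not in seen:
            seen.add(path.name)
            new_files.append(path)
    return new_files
```

A scheduler would call `find_new_images` at the chosen interval (for example, every 10 to 15 minutes) and pass the returned paths to the upload step. A real poller would also need to persist `seen` between runs and guard against files that are still being written.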

Custom functions are provided that can extract image-related metadata within DICOM. The extracted metadata can be stored separately to support rapid searching of content as the images don’t have to be interrogated each time a search is performed.
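To illustrate why storing the extracted metadata separately speeds up searching, here is a minimal sketch that uses an in-memory SQLite table as a stand-in for the structured store. It assumes the metadata has already been extracted from the DICOM header into a dictionary keyed by the standard DICOM attribute keywords `PatientID`, `Modality`, and `StudyDate`. The table name `image_metadata`, the function names, and the object names are hypothetical.

```python
import sqlite3


def create_index(conn: sqlite3.Connection) -> None:
    """Create the searchable metadata table (hypothetical schema)."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS image_metadata (
               object_name TEXT PRIMARY KEY,  -- where the image itself is stored
               patient_id  TEXT NOT NULL,
               modality    TEXT NOT NULL,
               study_date  TEXT NOT NULL      -- DICOM dates are YYYYMMDD strings
           )"""
    )
    conn.execute(
        "CREATE INDEX IF NOT EXISTS idx_patient "
        "ON image_metadata (patient_id, study_date)"
    )


def index_image(conn: sqlite3.Connection, object_name: str, metadata: dict) -> None:
    """Record the metadata of one image against its storage location."""
    conn.execute(
        "INSERT OR REPLACE INTO image_metadata VALUES (?, ?, ?, ?)",
        (object_name, metadata["PatientID"], metadata["Modality"], metadata["StudyDate"]),
    )


def find_images(conn: sqlite3.Connection, patient_id: str, modality: str = None) -> list:
    """Return storage locations for a patient's images, oldest study first."""
    sql = "SELECT object_name FROM image_metadata WHERE patient_id = ?"
    params = [patient_id]
    if modality is not None:
        sql += " AND modality = ?"
        params.append(modality)
    sql += " ORDER BY study_date"
    return [row[0] for row in conn.execute(sql, params)]
```

A search such as `find_images(conn, "P001", modality="DX")` touches only the small metadata table. The large image objects are opened only once the caller knows which ones it needs.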

Although the integration with other data sources can happen using various protocols and technologies, the preferred mechanism is the adoption of FHIR REST APIs. However, the integration tooling available provides a broad range of integration technologies and protocols. Note that the imaging data itself is not exchanged through FHIR, as the standard does not presently support carrying such data.
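As a rough sketch of what a FHIR REST integration involves, the following builds a standard FHIR patient search request (`Patient?identifier=system|value`) and picks the matching resources out of the returned `Bundle`. The server URL, identifier system, and function names are hypothetical, and the sketch deliberately stops short of making the HTTP call, since authentication and transport depend on the integration tooling used.

```python
import json
from urllib.parse import urlencode


def patient_search_url(base_url: str, identifier_system: str, identifier_value: str) -> str:
    """Build a FHIR search URL for a patient by business identifier."""
    # FHIR token search parameters take the form "system|value".
    query = urlencode({"identifier": f"{identifier_system}|{identifier_value}"})
    return f"{base_url.rstrip('/')}/Patient?{query}"


def resources_from_bundle(bundle_json: str, resource_type: str) -> list:
    """Extract resources of one type from a FHIR Bundle returned by a search."""
    bundle = json.loads(bundle_json)
    if bundle.get("resourceType") != "Bundle":
        raise ValueError("expected a FHIR Bundle")
    return [
        entry["resource"]
        for entry in bundle.get("entry", [])
        if entry.get("resource", {}).get("resourceType") == resource_type
    ]
```

The response body from the server would be passed to `resources_from_bundle(body, "Patient")` before the records are loaded into the lakehouse.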

While data is centralized to leverage provisioning of the database, the use of the Oracle Cloud Infrastructure Data Catalog and API gateway allows organizations to develop toward the ideas of a data mesh, in particular the decentralization of data ownership by exposing data in a manner with which the data owners are comfortable. In addition, the API provides a means to achieve efficient access to data while enforcing access control. This means that the same data can be made available to different consumers for different purposes, while remaining unimpacted by the consumers' behavior and needs (e.g., data is made available to both mobile staff and clinicians with patients on medical visualization platforms).

This architecture supports the following data lakehouse-specific services:

- Object storage

Object storage provides quick access to large amounts of structured and unstructured data of any content type, including database backups, analytic data, and rich content such as images and videos. You can safely and securely store and then retrieve data directly from the internet or from within the cloud platform. You can seamlessly scale storage without experiencing any degradation in performance or service reliability. Use standard storage for "hot" storage that you need to access quickly and frequently. Use archive storage for "cold" storage that you retain for long periods of time and rarely access.

Within this context, Object Storage would hold unstructured content such as x-ray images, which would then be referenced by more structured data, including the image metadata, to make the images quickly searchable.

- Data Catalog

Oracle Cloud Infrastructure Data Catalog is a fully managed, self-service data discovery and governance solution for your enterprise data. It provides data engineers, data scientists, data stewards, and chief data officers a single collaborative environment to manage the organization's technical, business, and operational metadata.

The Data Catalog within this context will contribute to understanding the data assets and how they could be handled within the lakehouse to provide additional insights. This would cover internally held data and third-party data resources that can be pulled into the lakehouse through mechanisms such as API invocation and file transfer. Other technologies are available to ingest data, but APIs and files are the most common models.

- Data Integration

Use Oracle Cloud Infrastructure Data Integration for optimal data flow between systems.

It supports declarative and no-code or low-code ETL and data pipeline development. For the lakehouse, this would be one of the primary data manipulation and processing tools.

Data Integration can also be applied to tasks such as transforming data from semi-structured to fully structured, minable data, and to activities such as data cleansing so that dirty or corrupt data can't distort findings.

- Data Flow

Oracle Cloud Infrastructure Data Flow is a fully managed Apache Spark service that performs processing tasks on huge datasets—without the infrastructure to deploy or manage. Developers can also use Spark Streaming to perform cloud ETL on their continuously produced streaming data. This enables rapid application delivery because developers can focus on app development, not infrastructure management.

Data Flow helps process data in a more event-driven or time-series manner. Doing so means more analysis can be performed as things happen rather than waiting until all the data has been received.

- Big Data Service

Oracle Big Data Cloud Service helps data professionals manage, catalog, and process raw data. Oracle offers object storage and Hadoop-based data lakes for persistence.

- Autonomous Database

Oracle Cloud Infrastructure Autonomous Database is a fully managed, preconfigured database environment that you can use for transaction processing and data warehousing workloads. You do not need to configure or manage any hardware, or install any software. Oracle Cloud Infrastructure handles creating the database, as well as backing up, patching, upgrading, and tuning the database.
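The Data Flow description above notes that analysis can be performed as data arrives rather than after everything has been received. The following plain-Python sketch illustrates that idea only: it is not the Spark or Data Flow API. The function name `rolling_alerts`, the window size, and the threshold are hypothetical and have no clinical meaning.

```python
from collections import deque
from statistics import mean


def rolling_alerts(readings, window: int = 5, threshold: float = 1.25):
    """Yield (timestamp, value, baseline) as soon as a reading stands out.

    Each reading is compared with the mean of the previous `window`
    readings, so results are produced while the stream is still arriving.
    """
    recent = deque(maxlen=window)
    for timestamp, value in readings:
        # Only compare once a full window of earlier readings is available.
        if len(recent) == window:
            baseline = mean(recent)
            if value > baseline * threshold:
                yield timestamp, value, baseline
        recent.append(value)
```

Because `rolling_alerts` is a generator, it can consume an unbounded stream of `(timestamp, value)` pairs and emit each result immediately, which is the behavior a streaming job provides at much larger scale.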

Recommendations

- Virtual cloud network (VCN) and subnet

A VCN is a customizable, software-defined network that you set up in an Oracle Cloud Infrastructure region. Like traditional data center networks, VCNs give you complete control over your network environment. A VCN can have multiple non-overlapping CIDR blocks that you can change after you create the VCN. You can segment a VCN into subnets, which can be scoped to a region or to an availability domain. Each subnet consists of a contiguous range of addresses that don't overlap with the other subnets in the VCN. You can change the size of a subnet after creation. A subnet can be public or private.

- Cloud Guard

You can use Oracle Cloud Guard to monitor and maintain the security of your resources in Oracle Cloud Infrastructure. Cloud Guard uses detector recipes that you can define to examine your resources for security weaknesses and to monitor operators and users for risky activities. When any misconfiguration or insecure activity is detected, Cloud Guard recommends corrective actions and assists with taking those actions, based on responder recipes that you can define.

- Security zone

Security zones ensure Oracle's security best practices from the start by enforcing policies such as encrypting data and preventing public access to networks for an entire compartment. A security zone is associated with a compartment of the same name and includes security zone policies or a "recipe" that applies to the compartment and its sub-compartments. You can't add or move a standard compartment to a security zone compartment.

- Network security group (NSG)

A network security group (NSG) acts as a virtual firewall for your cloud resources. With the zero-trust security model of Oracle Cloud Infrastructure, all traffic is denied by default, and you can control the network traffic inside a VCN. An NSG consists of a set of ingress and egress security rules that apply to only a specified set of VNICs in a single VCN.

- Load balancer

The Oracle Cloud Infrastructure Load Balancing service provides automated traffic distribution from a single entry point to multiple servers in the back end.
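The VCN description above requires that each subnet's address range sits inside the VCN and does not overlap with other subnets. When planning an address layout, that rule can be checked ahead of time with Python's standard `ipaddress` module. This is a planning aid under the assumption of IPv4-only CIDR blocks. The function name `validate_subnets` and the example ranges are hypothetical, and nothing here calls an OCI API.

```python
import ipaddress


def validate_subnets(vcn_cidrs: list, subnet_cidrs: list) -> list:
    """Return a list of problems found in a planned subnet layout."""
    vcn_networks = [ipaddress.ip_network(cidr) for cidr in vcn_cidrs]
    subnets = [ipaddress.ip_network(cidr) for cidr in subnet_cidrs]
    problems = []

    # Every subnet must fall entirely within one of the VCN's CIDR blocks.
    for subnet in subnets:
        if not any(subnet.subnet_of(vcn) for vcn in vcn_networks):
            problems.append(f"{subnet} is outside the VCN CIDR blocks")

    # No two subnets may share addresses.
    for i, first in enumerate(subnets):
        for second in subnets[i + 1:]:
            if first.overlaps(second):
                problems.append(f"{first} overlaps {second}")

    return problems
```

An empty result means the layout satisfies both rules. For example, `validate_subnets(["10.0.0.0/16"], ["10.0.1.0/24", "10.0.2.0/24"])` reports no problems.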

Considerations

When deploying this reference architecture, consider these options.

- Data Sources

We're seeing the possibility of IoT solutions continually monitoring and reporting data about a patient, such as heart rate, and apps that allow patients to simply record events, such as dietary habits or medical events such as fits and seizures. This information can provide a great deal of additional insight, be incorporated into medical records, and be analyzed using medical expertise supported by AI/ML. Such data feeds suggest using event-stream technologies such as Kafka, which capture these events quickly and efficiently and can store the data in a natural time-series manner.

When finding patterns and anomalies that can help with diagnosis, generally, the more data about a patient that can be captured and organized into (semi-)structured data that can be easily queried, the better. So sourcing patient data, such as family history and data held by other medical organizations, with the patient's permission, may reveal insights without necessarily revealing specific individual facts.

- Performance

AI training requires processing high-resolution images and will need to recur periodically (for example, to accommodate newer image formats or higher-quality imaging). This model updating must not impact patients undergoing diagnosis or treatment. This is achieved by running model updates in an isolated environment, and then scaling the current environment to a point where both the operational environment and training environment can coexist without resource competition or resource allocation issues.

- Security

In a clinical context, security is paramount, and the requirements are highly regulated. Not only is the data itself valuable, but the potential to disrupt access to and use of the data, through accidental or deliberate actions, is also a critical risk. The services involved with such data therefore need to be secure.

- Availability

Migrating services into cloud environments removes a number of issues around resilience and availability from the medical center. However, the cloud provider cannot guarantee the availability of the networking. As a result, network resilience and fallback options must be actively owned to ensure continued availability in case of a "last mile" fault. Additionally, an organization with critical dependencies on the network should periodically exercise the cut-over and cut-back, so that when the fallback is really needed, it runs like any other regular process.

- Cost

Any particular use case may not require all the lakehouse services identified. These reflect the common technologies associated with the application of a lakehouse. For example, if Oracle Big Data Cloud Service is not needed, it should not be deployed. Object storage could be used intelligently by placing images that are not currently needed into a lower-performance storage configuration; when an event occurs relating to the patient, the content is moved to a higher-performance storage configuration. This is, in many respects, the same principle a database applies to its storage: moving data in and out of cache, rapid access storage (e.g., NVMe), and slower spinning disks.
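The storage tiering idea above can be sketched as a simple planning function. This is a hedged illustration, not an OCI API: the tier labels mirror the standard and archive storage described earlier, while the 90-day window, the record fields, and the function names are assumptions made for the example.

```python
from datetime import date, timedelta

STANDARD = "Standard"  # "hot" storage for images likely to be needed soon
ARCHIVE = "Archive"    # "cold" storage for images that are rarely accessed


def choose_tier(last_patient_event: date, today: date, hot_days: int = 90) -> str:
    """Pick a tier from how recently something happened relating to the patient."""
    if today - last_patient_event <= timedelta(days=hot_days):
        return STANDARD
    return ARCHIVE


def plan_moves(images: list, today: date, hot_days: int = 90) -> list:
    """Return (object_name, target_tier) for each image whose tier should change."""
    moves = []
    for image in images:
        target = choose_tier(image["last_patient_event"], today, hot_days)
        if target != image["current_tier"]:
            moves.append((image["object_name"], target))
    return moves
```

A new appointment or referral would update `last_patient_event`, so the next planning run moves that patient's images back to the faster tier. Because retrieval from cold storage is generally not immediate, such a run would ideally happen ahead of the patient's visit.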