Plan the Data Lakehouse

When planning the data lakehouse, consider the following relevant use cases for banks, brokers, and financial services that have billions of data records:

- Establish an enterprise-wide data hub consisting of a data warehouse for structured data and a data lake for semi-structured and unstructured data. This data lakehouse becomes the single source of truth for your data.

- Integrate relational data sources with other unstructured data sets by using big data processing technologies.

- Use semantic modeling and powerful visualization tools to simplify data analysis.

Understand the Business Use Case

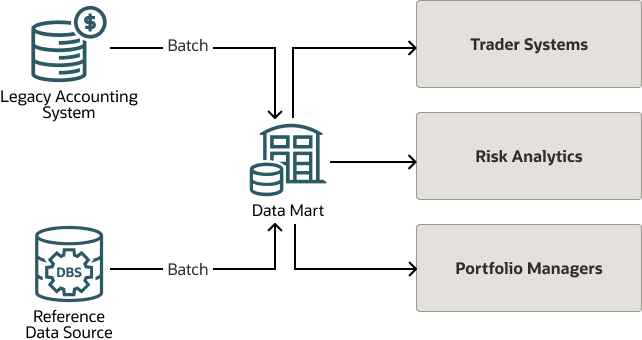

The diagram shows a high-level view of a fund valuation system that uses a legacy accounting system to provide valuation data in batches to a data mart.

The data mart also gets batch reference data from other reference data sources. The data mart pushes the fund valuation data in batches to the downstream systems in the workflow.

Because pricing is done in a static manner, typically at the end of the day, the legacy system is not as responsive as users need it to be, even when all systems are working correctly.

For example, in the second quarter of 2022, when there was extreme volatility in the market, all the user groups were on high alert, and everybody wanted to know the latest price and market value so they could identify their holding positions throughout the day. Capturing the latest price and getting the market value in real time was a big ask for a traditional fund valuation system.

To meet that demand, the legacy accounting system would have to capture the latest price, push the data to the data mart, and repeat the batch workflows multiple times a day, which is neither responsive enough nor sustainable.

Understand the Solution

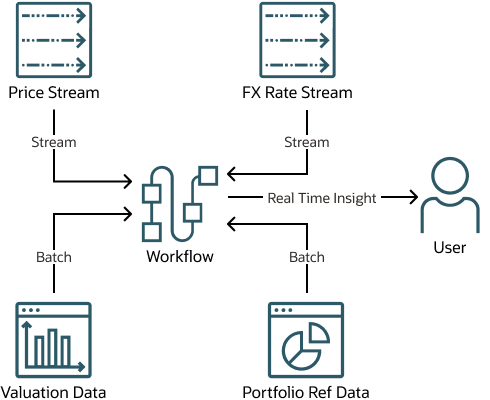

The solution requires no changes to the legacy system, which continues to publish batch valuation data as always.

Real-time prices and exchange rates are captured from their respective streams and applied to the pricing to find the market value in different currencies.

A serverless Oracle Cloud Infrastructure architecture provides support for both batch and real-time data. Batch data includes a snapshot flow for portfolio reference data, and an incremental delta and change data capture (CDC) flow for valuation data. Real-time data includes price and exchange rate streams. The architecture includes a process to collate batch and real-time data to get the real-time price, the market value in the base currency, and the market value in foreign currencies.
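The collation step described above can be sketched in a few lines of Python. This is a minimal illustration, not the implementation used in the architecture: the `Holding` record, the `revalue` function, the field names, and the sample figures are all hypothetical, and the sketch assumes that market value is simply quantity multiplied by the latest price, converted with a direct base-to-foreign exchange rate.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Holding:
    """One batch valuation record (hypothetical shape)."""
    fund_id: str
    instrument: str
    quantity: Decimal
    batch_price: Decimal  # last price published by the batch flow
    base_currency: str


def revalue(holding, latest_prices, fx_rates, target_currencies):
    """Apply the latest streamed price and FX rates to one batch holding."""
    # Fall back to the batch price when no streamed price has arrived yet.
    price = latest_prices.get(holding.instrument, holding.batch_price)
    base_value = holding.quantity * price

    foreign = {}
    for currency in target_currencies:
        rate = fx_rates.get((holding.base_currency, currency))
        if rate is not None:  # skip currencies that have no rate yet
            foreign[currency] = base_value * rate

    return {
        "fund_id": holding.fund_id,
        "instrument": holding.instrument,
        "price": price,
        "market_value_base": base_value,
        "market_value_foreign": foreign,
    }


# Sample data standing in for the batch flow and the two streams.
holding = Holding("FUND-1", "ACME", Decimal("100"), Decimal("10.00"), "USD")
latest_prices = {"ACME": Decimal("10.50")}      # from the price stream
fx_rates = {("USD", "EUR"): Decimal("0.90")}    # from the exchange rate stream

result = revalue(holding, latest_prices, fx_rates, ["EUR", "GBP"])
print(result["market_value_base"])
print(result["market_value_foreign"])
```

The sketch uses `Decimal` rather than floating point so that monetary amounts are not subject to binary rounding. A currency with no streamed rate (GBP in the sample) is omitted from the result rather than guessed.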

Example Architecture

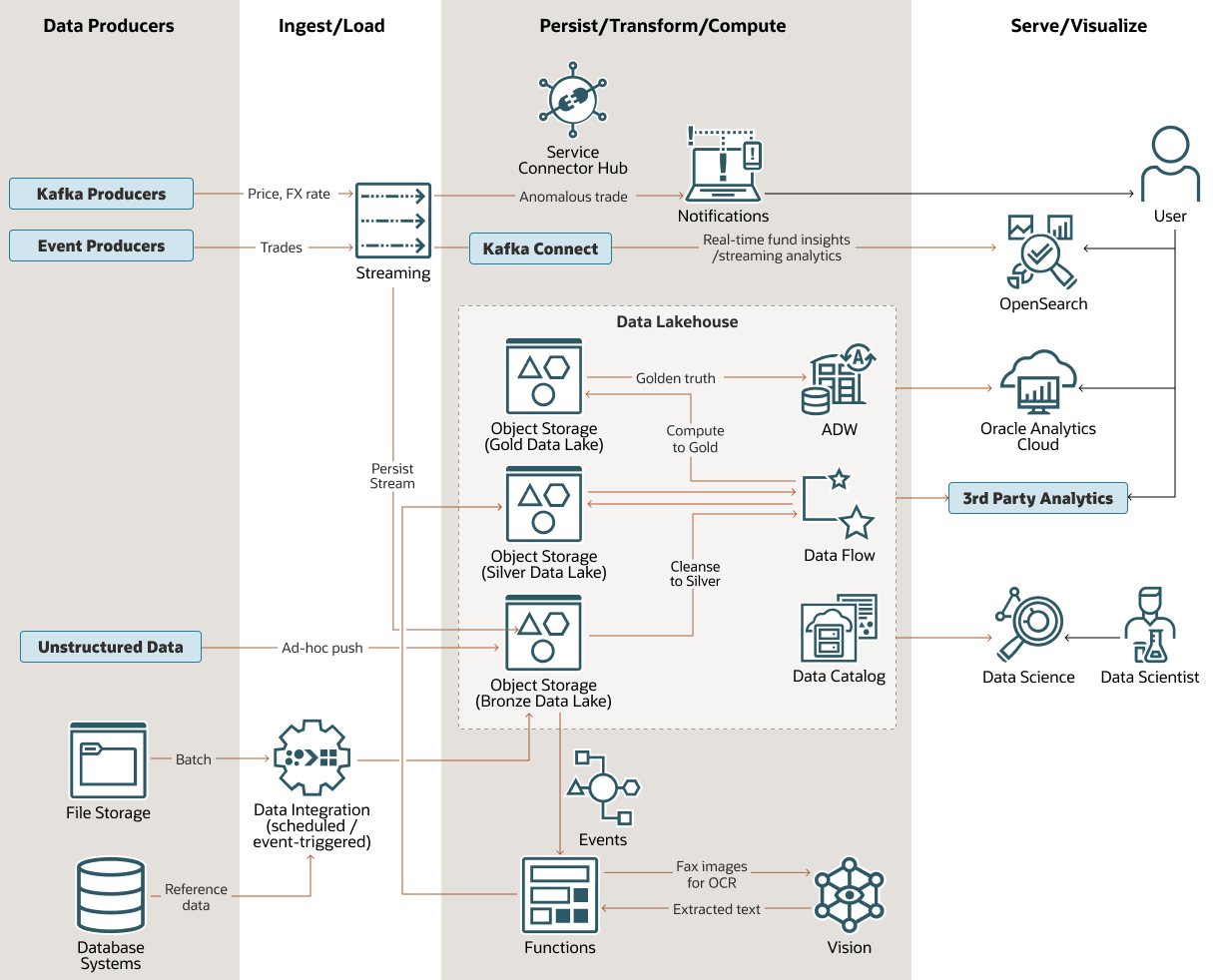

The diagram shows a customer-inspired, modern data platform architecture on Oracle Cloud Infrastructure (OCI).

This architecture can be used for financial use cases such as gaining real-time fund insights, detecting anomalous trades, and general financial data cleansing, aggregation, and visualization.


One of the central features of this architecture is its multi-tiered data lakehouse. It consists of three distinct tiers of data treatment in the data lake (bronze, silver, and gold), Oracle Autonomous Data Warehouse (ADW) for structured warehousing, Oracle Cloud Infrastructure Data Catalog for metadata and governance, and Data Flow for big data processing and transformation using Spark jobs.

The bronze data lake is the first destination for data, in a format that is often raw or close to it. This includes data that resides in OCI and data from third-party platforms. Oracle Data Integration (ODI) is one of the tools used for this integration.

The Data Flow application handles most of the bronze-to-silver data transformation and cleansing. Oracle Cloud Infrastructure Vision extracts text from fax images with optical character recognition (OCR) technology. The Vision output data (text) is sent from the bronze lake to the silver lake with the help of Oracle Functions.

Data Flow performs additional data transformation from the silver lake tier to the gold data lake, where the data is loaded to ADW, which in turn supplies Oracle Analytics Cloud and third-party analytics and visualization tools.
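The tiered flow can be illustrated with a small, self-contained Python sketch. In the architecture these steps run as Spark jobs in Data Flow; plain Python is used here only to show the shape of the work, under stated assumptions. The function names, record fields, and cleansing rules below are hypothetical: bronze holds raw records, silver holds cleansed and typed records, and gold holds an aggregate ready to load into a warehouse table.

```python
from collections import defaultdict


def bronze_to_silver(raw_records):
    """Cleanse raw records: normalize identifiers, cast values, drop bad rows."""
    silver = []
    for record in raw_records:
        try:
            silver.append({
                "fund_id": record["fund_id"].strip().upper(),
                "instrument": record["instrument"].strip().upper(),
                "market_value": float(record["market_value"]),
            })
        except (KeyError, ValueError, AttributeError, TypeError):
            # Incomplete or malformed rows are skipped in this sketch;
            # a real pipeline would more likely quarantine them for review.
            continue
    return silver


def silver_to_gold(silver_records):
    """Aggregate cleansed records into total market value per fund."""
    totals = defaultdict(float)
    for record in silver_records:
        totals[record["fund_id"]] += record["market_value"]
    return dict(totals)


# Hypothetical raw input as it might land in the bronze tier.
bronze = [
    {"fund_id": " fund-1 ", "instrument": "acme", "market_value": "1050.0"},
    {"fund_id": "FUND-1", "instrument": "globex", "market_value": "200"},
    {"fund_id": "fund-2", "instrument": "acme", "market_value": "n/a"},  # bad value
    {"instrument": "acme", "market_value": "10"},  # missing fund_id
]

silver = bronze_to_silver(bronze)
gold = silver_to_gold(silver)
print(gold)
```

Keeping each tier as a separate function mirrors the separation of the bronze-to-silver and silver-to-gold jobs, so that each step can be rerun or validated on its own.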

The architecture includes the following additional features:

- Anomalous trading notifications are provided by using OCI Streaming and OCI Notifications, integrated by using OCI Service Connector Hub.

- Streaming analytics are provided for real-time fund insights by sending OCI Streaming data to OCI Search Service with OpenSearch by using Kafka Connect. OpenSearch Dashboards, an integrated component of the OCI Search Service, can provide direct visualization of OpenSearch data.

- Data scientists can explore the data lakehouse using OCI Data Science, a fully managed and serverless platform that you can use to query ADW, Object Storage, third-party clouds, and properly connected on-premises systems.