Configure IaaS for Private Cloud Appliance

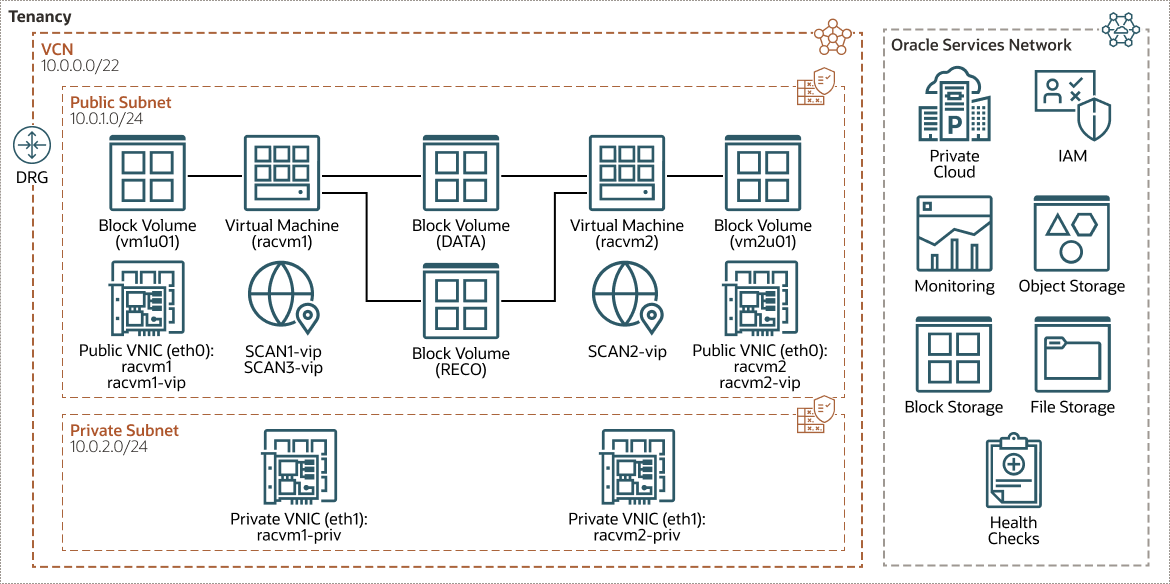

You must configure your network, compute, storage, operating system, and install Oracle Grid Infrastructure and Oracle RAC Database Software before you can create an Oracle RAC Database on Oracle Private Cloud Appliance. The IaaS configuration on Private Cloud Appliance will resemble the following architecture.

Before creating the infrastructure, review these assumptions and considerations:

- All nodes (virtual machines) in an Oracle RAC environment must connect to at least one public network to enable users and applications to access the database.

- In addition to the public network, Oracle RAC requires private network connectivity used exclusively for communication between the nodes and database instances running on those nodes.

- When using Oracle Private Cloud Appliance, you can configure only one private network, commonly referred to as the interconnect. The interconnect is a private network that connects all the servers in the cluster.

- In addition to accessing the Compute Enclave UI (CEUI), you must configure the OCI CLI on a Bastion VM so that you can run the commands with the OCI CLI instead of relying on the CEUI.
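The network requirements in the list above can be expressed as a pre-flight check on a planned topology. The following Python sketch is illustrative only: the `NodeNetworks` class, the `check_rac_networks` function, and the subnet names are hypothetical, and the sketch assumes you have already recorded which subnets each VM's network interfaces attach to. It checks that each node has at least one public network and exactly one private interconnect, and that all nodes share the same interconnect.

```python
from dataclasses import dataclass, field


# Hypothetical model of one RAC node (VM) and the subnets it attaches to.
@dataclass
class NodeNetworks:
    name: str
    public_subnets: list[str] = field(default_factory=list)
    private_subnets: list[str] = field(default_factory=list)


def check_rac_networks(nodes: list[NodeNetworks]) -> list[str]:
    """Return a list of problems; an empty list means the plan passes."""
    problems: list[str] = []
    interconnects: set[str] = set()
    for node in nodes:
        # Every node needs at least one public network for client access.
        if not node.public_subnets:
            problems.append(f"{node.name}: no public network")
        # On Private Cloud Appliance only one private interconnect is allowed.
        if len(node.private_subnets) != 1:
            problems.append(
                f"{node.name}: expected exactly 1 private interconnect, "
                f"found {len(node.private_subnets)}"
            )
        else:
            interconnects.add(node.private_subnets[0])
    # The interconnect must be the same private network on every node.
    if len(interconnects) > 1:
        problems.append(f"nodes use different interconnects: {sorted(interconnects)}")
    return problems


# Example with hypothetical subnet names: a valid two-node plan.
plan = [
    NodeNetworks("racnode1", ["pub-subnet"], ["priv-subnet"]),
    NodeNetworks("racnode2", ["pub-subnet"], ["priv-subnet"]),
]
print(check_rac_networks(plan))  # []
```

Returning a list of messages rather than raising on the first error lets you see every gap in the plan at once before creating any resources.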
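Before running OCI CLI commands from the Bastion VM, it can help to confirm that the CLI configuration file (by default `~/.oci/config`) contains a complete profile. The following Python sketch assumes API-key authentication, where a profile typically holds `user`, `fingerprint`, `key_file`, `tenancy`, and `region` entries; the `missing_profile_keys` function and the sample values are hypothetical, and the sketch does not validate the values themselves.

```python
import configparser

# Entries an API-key-authenticated OCI CLI profile normally contains (assumption).
REQUIRED_KEYS = ("user", "fingerprint", "key_file", "tenancy", "region")


def missing_profile_keys(config_text: str, profile: str = "DEFAULT") -> list[str]:
    """Return the required keys that are absent or empty in `profile`."""
    # interpolation=None so that '%' characters in values are read literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(config_text)
    if profile == "DEFAULT":
        # configparser exposes [DEFAULT] through defaults(), not as a section.
        section = parser.defaults()
    elif parser.has_section(profile):
        section = parser[profile]
    else:
        # Profile not present at all: every key is missing.
        return list(REQUIRED_KEYS)
    return [key for key in REQUIRED_KEYS if not section.get(key)]


# Hypothetical, incomplete profile (placeholder values only).
sample = """
[DEFAULT]
user=ocid1.user.example
tenancy=ocid1.tenancy.example
region=example-region
"""
print(missing_profile_keys(sample))  # ['fingerprint', 'key_file']
```

On the Bastion VM you would pass the text of your real configuration file instead of `sample`.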

The following sections describe how to create compute, network, and storage constructs on a Private Cloud Appliance system.

Configure Storage

Oracle RAC uses Oracle Automatic Storage Management (Oracle ASM) for efficient shared storage access. Oracle ASM acts as the underlying clustered volume manager. It provides the database administrator with a simple storage management interface that is consistent across all server and storage platforms. As a vertically integrated file system and volume manager purpose-built for Oracle Database files, Oracle ASM provides the performance of raw I/O with the ease of management of a file system.
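Because Oracle ASM provides shared storage for the cluster, every disk you intend to place in an ASM disk group must be visible from every node. The following Python sketch is a hypothetical pre-check, assuming you have already collected the set of block device identifiers that each VM can see; the device names and the `shared_candidates` and `local_only` functions are illustrative and are not part of any Oracle tool.

```python
def shared_candidates(visible: dict[str, set[str]]) -> set[str]:
    """Return device IDs that every node can see (candidate shared ASM disks)."""
    if not visible:
        return set()
    # Intersect the per-node device sets: shared storage must reach all nodes.
    return set.intersection(*visible.values())


def local_only(visible: dict[str, set[str]]) -> dict[str, set[str]]:
    """Return, per node, the devices that are NOT visible on every node."""
    shared = shared_candidates(visible)
    return {node: devs - shared for node, devs in visible.items() if devs - shared}


# Hypothetical device visibility gathered from two RAC nodes.
visible = {
    "racnode1": {"vol-a", "vol-b", "boot-1"},
    "racnode2": {"vol-a", "vol-b", "boot-2"},
}
print(sorted(shared_candidates(visible)))  # ['vol-a', 'vol-b']
```

Devices reported by `local_only`, such as each VM's boot volume, should not be offered to ASM as shared disks.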