4 Extract Transform Load Programs

To load data from the source systems to the data mart, Oracle Argus Analytics uses Extract Transform and Load (ETL) programs that

-

Identify and read desired data from different data source systems,

-

Clean and format data uniformly, and

-

Write it to the target data mart.

In Oracle Argus Analytics, Oracle Argus Safety is the source system for which Oracle provides pre-defined ETL.

4.1 ETL Architecture

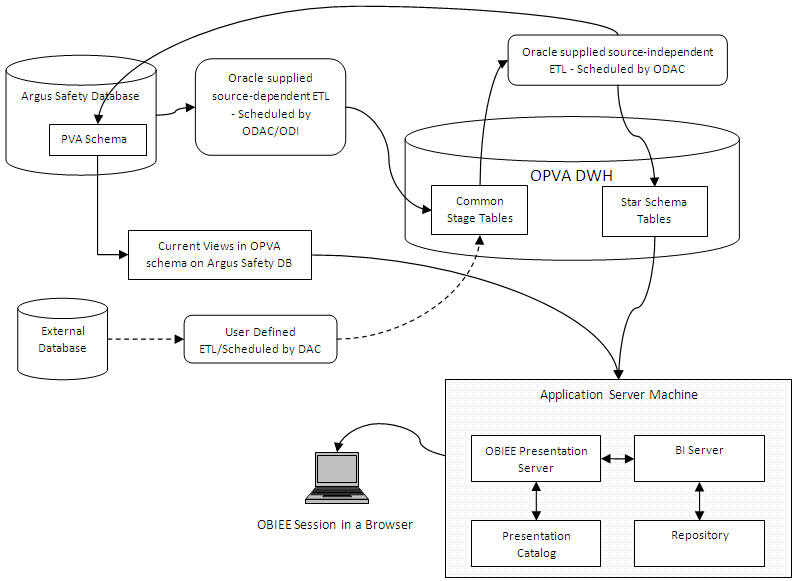

Figure 4-1 displays the ETL process delivered with Oracle Argus Analytics.

Oracle Argus Analytics has ETLs defined in the following two technology flavors:

-

Informatica

-

Oracle Data Integrator (ODI)

Set up as a recurring job in DAC/ODI, the Extraction, Transformation, and Load process (ETL) is designed to periodically capture targeted metrics (dimension and fact data) from multiple Safety databases, transform and organize them for efficient query, and populate the star-schema tables.

While the Oracle Argus Analytics data model supports data extraction from multiple sources, Oracle Argus Analytics includes only source-dependent extract (SDE) mappings (in case of Informatica)/ODI for the Oracle Argus Safety database. However, you can also define SDE mappings from additional external sources that write to the appropriate staging tables. Note that you are responsible for resolving any duplicate records that may be created as a consequence.

The SDE programs map the transactional data to staging tables, in which the data must conform to a standardized format, effectively merging the data from multiple, disparate database sources. This is the architectural feature that accommodates external database sourcing.

The staged data is transformed using the source-independent loads (SILs) to star-schema tables, where such data are organized for efficient query by the Oracle BI Server.

There is one SDE mapping for each target table, which extracts data from the source system and loads it to the staging tables. SDEs have the following features:

-

Incremental Submission Mode: Oracle Argus Analytics-supplied ETL uses timestamps and journal tables in the source transactional system to optimize periodic loads.

-

Bulk and Normal Load (for Informatica ETLs only): Bulk load uses block transfers to expedite loading of large data volume. It is intended for use during initial data mart population. Bulk load is faster, if data volume is sufficiently large. However, if load is interrupted (for example, disk space is exhausted, power failure), load cannot be restarted in the middle; you must restart the load.

Normal load writes one record at a time. It is intended to be used for updates to the data mart, once population has been completed. Normal load is faster, if data volume is sufficiently small. You can also restart load if the load is interrupted.

Setting Bulk or Normal load option should be done at Workflow session in Informatica. Perform the following steps to set the load option:

-

Navigate to Session in a workflow and edit the task properties.

-

Navigate to the Mappings subtab and select 'Bulk/Normal' under Target Load type.

-

Save the workflow.

-

There is one SIL mapping/interface for each target table. The SIL extracts the normalized data from the staging table and inserts it into the data mart star-schema target table. SILs have the following attributes:

-

Concerning changes to dimension values over time, Oracle Argus Analytics overwrites old values with new ones. This strategy is termed as Slowly Changing Dimension approach 1.

-

Oracle Argus Analytics's data model includes aggregate tables and a number of indexes, designed to minimize query time.

-

By default, bulk load is disabled for all SILs for incremental load (in case of Informatica ETLs only).

-

The results of each ETL execution is logged by Informatica/ODI. The logs hold information about errors encountered, during execution.

Informatica provides the following four error tables:

-

PMERR_DATA

-

PMERR_MSG

-

PMERR_SESS

-

PMERR_TRANS

For ODI, the EL$_<TABLE_NAME> will store the erroneous data rows per table. Example: For W_PRODUCT_D ETL the error table is EL$_W_PRODUCT_D.

During ETL execution, records which fail to be inserted in the target table (for example, some records violate a constraint) are placed in the Informatica PowerCenter error tables/ODI error tables, as mentioned above. You can review which records did not make it into the data mart, and decide on appropriate action with respect to them.

-

4.1.1 Oracle Argus Analytics Hierarchy

Figure 4-2 displays the Oracle Argus Analytics hierarchy using DAC:

Oracle Argus Analytics follows the hierarchy using DAC, as listed below:

-

CONTAINER (OPVA_Datawarehouse) - A single container that holds all objects used for Oracle Argus Analytics.

-

EXECUTION PLAN (Oracle Argus Analytics Data Mart Load) - A data transformation plan defined on subject areas that needs to be transformed at certain frequencies of time. An execution plan is defined based on business requirements for when the data mart needs to be loaded. Single Execution Plan to Load Complete Warehouse.

-

SUBJECT AREAS - A logical grouping of tables related to a particular subject or application context. It also includes the tasks that are associated with the tables, as well as the tasks required to load the tables. Subject areas are assigned to execution plans, which can be scheduled for full or incremental loads.

-

TASK GROUPS - This is a group of tasks that should be run in a given order.

-

TASKS - A unit of work for loading one or more tables. A task comprises the following: source and target tables, phase, execution type, truncate properties, and commands for full or incremental loads. A single Informatica workflow.

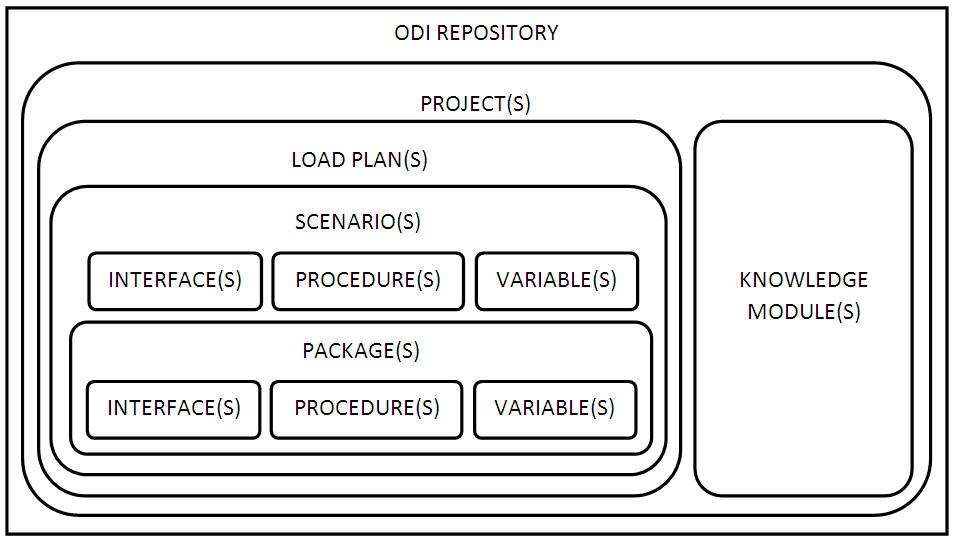

Figure 4-3 displays the Oracle Argus Analytics hierarchy using:

Oracle Argus Analytics follows the hierarchy for ODI, as listed below:

-

ODI REPOSITORY - ODI Master and Work repository, which holds all the ODI objects for Oracle Argus Analytics ETL.

-

PROJECT - A collection of all Argus Analytics-related ODI ETL objects.

-

KNOWLEDGE MODULE - Templates of PLSQL code used in Interfaces/Mappings to achieve a specific ETL flow.

-

LOAD PLAN - ETL execution plan, which holds a pre-defined ordered set of scenarios to load the warehouse appropriately.

-

SCENARIO - The generated executable (and uneditable) code of the objects in ODI like Package, Procedure, Interfaces/Mappings, and Variables. Scenarios can be considered as frozen versions of the code at the time of generation, with subsequent updates of the relevant unit of code not affecting it.

-

PACKAGE - An ordered set of Interfaces/Mappings, Procedures, variables and packages as well to be executed in a defined manner.

-

INTERFACE/MAPPING - Executable ETL code for loading the target tables.

-

PROCEDURE - Modularized executable code for loading the target tables.

-

VARIABLE - Used for making code path decisions based on the user input provided while executing Load Plans.

4.2 Executing the ETL Execution Plans in Informatica

To load data from the source to their target tables in the data mart, run the Execution Plan packaged with Oracle Argus Analytics. Perform the following tasks in DAC:

-

Navigate to the Execute view.

-

Select Oracle Argus Analytics Warehouse execution plan.

-

Set the parameter values under the Parameter tab.

-

Build the execution plan.

-

Click Run.

4.3 Parameters Used by Informatica ETLs

The following parameters are used by Informatica ETLs:

-

Table 4-1 Informatica ETL Parameters

Parameters Description Allowed Values $$p_config_days

Reduces the incremental extract window by the specified number of days. E.g.: Extract all changed rows between LAST_EXTRACT_DATE and (SYSDATE - $$p_config_days)

Integers

Recommended value:

0

$$p_enterprise_id

The specific Enterprise ID to run the ETL for

-1: Runs the Incremental ETL for the entire Warehouse

0: Runs the Incremental ETL for all the enterprises the user ($$p_user_name) has access to.

Integer Value [1,2,3, etc]: Runs the Incremental ETL for the specified Enterprise only.

$$p_etl_proc_id

The unique Identifier for the ETL Process that is run and it takes its value by default from DAC or from ODI

Do not change or specify any other value. Please leave it unmodified.

$$p_include_pseudo_state_flag

The parameter defines whether to include the workflow states present between the Locking record and the Unlocking record of a case in the Case Workflow State Fact table.

Default value is 1.

1: Include the Workflow States between Locking and Unlocking records of the case.

0: Exclude the Workflow States between Locking and Unlocking records of the case.

$$p_last_extract_date

System-defined value for defining the start date of the extract window for Incremental Data or the last time the ETL ran successfully for the enterprise specified

Do not change

$$p_override_last_extract_date

Specify a Date value in the format MM/DD/RRRR in case you want to override the last extract date for the Incremental Data.

Date Values such as 01/01/1999 or 12/23/2007

$$p_rekey_fact

To rekey fact tables in case data in the W_HS_MAPPING_S defined for match and merge has changed

0: Will not rekey the Fact tables

1: Will rekey the Fact tables

$$p_user_name

The user name which the Incremental ETL shall use to set the VPD Context for the specified enterprise in the parameter: $$p_enterprise_id. This value should be passed inside single quotes: such as 'username'.

Default Value: 'admin'

$$START_DATE

The start date of the days to populate from in the W_DAY_D/PVA_DAY table. It should be in the format: MM/DD/RRRR

Default Value: 01/01/1980

$$END_DATE

The end date of the days to populate till in the W_DAY_D/PVA_DAY table. It should be in the format: MM/DD/RRRR

Default Value: 01/01/2020

4.4 Executing the ETL Load Plans in ODI

To load data from the source to their target tables in the data mart, run the Load Plan packaged with Oracle Argus Analytics ODI.

Perform the following tasks in ODI Studio:

-

Navigate to the Operator tab.

-

In the Load Plans and Scenarios sections, right-click the Load Plan: LP_FL_AN for full loads or LP_IL_AN for Incremental Loads option and select Execute/Run.

-

Provide the values for the Load Plan variables, as mentioned in the table below.

Parameters Load Type Description Allowed Values VAR_ALN_PERIOD_FROM_DATE

Full Load

The start date of the days to populate from in the W_DAY_D/PVA_DAY table. It should be in the format: MM/DD/RRRR

Date values such as:

01/01/1980

Recommended value:

01/01/1980

VAR_ALN_PERIOD_TO_DATE

Full Load

The end date of the days to populate till in the W_DAY_D/PVA_DAY table. It should be in the format: MM/DD/RRRR

Date values such as: 12/31/2019

Recommended Value: 12/31/2019

VAR_INT_TRUNCATE_STAGE

Both

This variable is used to decide whether to truncate the stage table or not and is useful in multiple Argus Safety DB support

Valid values:0: Does not truncate Stage table1: Truncate Stage tableShould be specified as 1 always in case of Single Argus Safety Instance as source information

Recommended Value: 1

VAR_INT_COLLECT_STATISTICS

Both

This variable is used to decide whether the statistics of the target tables need to be collected or not.

Default Value: 1

Values Accepted: 0,1

0: Load Plans will not collect statistics

1: Load Plans will collect statistics after loading data

VAR_ALN_ENTERPRISE

Both

The specific Enterprise ID to run the ETL for.

-1: Runs the ETL for the entire Warehouse0: Runs the ETL for all the enterprises the user ($$p_user_name) has access toInteger Value [1,2,3, etc]: Runs the Incremental ETL for the specified Enterprise only.

Note: For Full Load, this value has to be -1.

VAR_ALN_ERROR_REJECT_LIMIT

Both

This variable is used to set the number of rows that will be tracked in the respective error tables prior to aborting the ETL in case of errors.

Valid Values:Positive Integer numbers: (E.g. 0, 100, 1000, etc.)UNLIMITED: All the error records are logged

Recommended Value: UNLIMITED

VAR_ALN_USER_NAME

Both

The user name for which the ETL shall use to set the VPD Context for the specified enterprise in the parameter: VAR_ALN_ENTERPRISE. This value should be passed inside single quotes: such as 'username'.

Default value: 'admin'

VAR_INT_RAISE_ERROR

Both

Setting this variable to 0 or 1 will appropriately either stop a Load Plan/Interfaces/Mappings or continue the same when data errors are encountered during the load.

0: Do not raise data error when encountered during ETLs1: Raise data error when encountered during ETLs

Recommended Value: 1

VAR_INT_CONFIG_DAYS

Incremental Load

Reduces the incremental extract window by the specified number of days. Example: Extract all changed rows between LAST_EXTRACT_DATE and (SYSDATE - $$p_config_days)

Integers

Recommended Value: 0

VAR_INT_REKEY_FACT

Incremental Load

To rekey fact tables in case data in the W_HS_MAPPING_S defined for match and merge has changed

0: Will not rekey the Fact tables 1: Will rekey the Fact tables

Recommended Value: 0

VAR_INT_OVERRIDE_LAST_EXTRACT_DATE

Incremental Load

Specify a Date value in the format MM/DD/RRRR if you want to override the last extract date for the Incremental Data for the specific ETL run. This will cause reload of data in the warehouse that are present in the given incremental extract window.

Date values such as:01/01/1900 or 12/23/2012

Recommended Value: NULL (Change this with discretion)

VAR_INT_INCLUDE_PSEUDO_ST_CODE_FLG

Both

The parameter defines whether to include the workflow states present between the Locking record and the Unlocking record of a case in the Case Workflow State Fact table.

Date values is 1.1: Include the Workflow States between Locking and Unlocking records of the case.0: Exclude the Workflow States between Locking and Unlocking records of the case.

-

Click OK to run the Load Plan.

4.5 Customizing an ETL Execution Plan (Informatica)/Load Plan (ODI)

When you customize an ETL Execution Plan (Informatica)/Load Plan (ODI), it is your responsibility to maintain version control over changes to ETL mappings/interfaces.

Oracle recommends that you carefully track the changes you make to Oracle-supplied ETL so that you can re-apply these changes in subsequent releases.

Note:

The ETL Execution Plans that extract data for the data mart fact tables assume that the dimensions to which each fact is related are up-to-date at the time the fact ETL Execution Plans are executed. This assumption is the basis for certain fact calculations that would provide erroneous results if the assumption were not true.As shipped, Oracle Argus Analytics ETL workflows ensure this interlock by executing the ETL for related dimensions immediately before running the ETL for a fact.

This is standard data mart management practice, but especially important, given the interdependence of the dimensions and the fact. The need to execute dimension ETL immediately before corresponding fact ETL, and the danger of not doing it, is emphasized here because it is possible (though discouraged) to modify these shipped workflows.

4.6 Creating an ETL Execution Plan (Informatica Only)

Though Oracle Argus Analytics includes ETL Execution Plans for extracting data from Oracle Argus Safety to Oracle Argus Analytics data mart, you may want to create your own ETL to extract data from other data sources.

Note:

The value of DATASOURCE_NUM_ID is set to 1 for Oracle Argus Safety and is taken from the view VW_DATASOURCE present in the Argus Analytics Source Schema. Update this view to change the DATASOURCE_NUM_ID number, only if desired.Also, ensure that this change (if needed) is done prior to running the Full Load for Argus Analytics. By default, we advise not to change this value (view).

See Also:

-

Informatica PowerCenter Online Help

To add one or more tables or columns along with the associated ETL Execution Plans to populate data into these tables, perform the following tasks:

-

Create the new source and target table metadata inside Informatica.

-

Work in Informatica PowerCenter and create the ETL components (transformation or workflow) for this program.

-

Create the required workflow for this mapping.

-

Connect to DAC and create a new task for this new mapping.

-

Synchronize the task.

-

Add the task to subject area.

-

Build the Execution Plan (Oracle Argus Analytics Data Mart Load).

4.7 Creating an ETL Load Plan (ODI Only)

Though Oracle Argus Analytics includes ETL Load Plans for extracting data from Oracle Argus Safety to Oracle Argus Analytics data mart, you may want to create your own ETL Interfaces/Mappings to extract data from other data sources.

Note:

The value of DATASOURCE_NUM_ID is set to 1 for Oracle Argus Safety and is taken from the view VW_DATASOURCE present in the Argus Analytics Source Schema (AN_SRC). Update this view to change the DATASOURCE_NUM_ID number, only if desired.Also, ensure that this change (if needed) is done prior to running the Full Load for Argus Analytics. By default, we advise not to change this value (view).

See Also:

-

Oracle ® Fusion Middleware Developer's Guide for Oracle Data Integrator 11g/12c

To add one or more tables or columns along with the associated ETL Load Plans to populate data into these tables, perform the following tasks:

-

Create the new Datastore for source and target table metadata inside the appropriate ODI Models.

-

Work in ODI Studio and create the ETL components (Interface/Mapping and its Scenario) for this program to populate the table or column appropriately.

-

Edit or create an existing Load Plan.

-

Add the scenario generated at the appropriate hierarchical level and position where it needs to be executed, in the Load Plan.

-

Save the Load Plan.

4.8 Modifying an ETL Execution Plan (Informatica Only)

You may also want to modify an existing ETL to meet your reporting requirements.

See Also:

-

Informatica PowerCenter Online Help

To modify an ETL without any changes to the associated tables or columns, perform the following tasks:

-

Identify the Execution Plan that needs to be modified in Informatica repository.

-

Open and Modify the ETLs (transformation and/or workflow).

-

Test and save the changes in repository.

-

Connect to DAC and navigate to the corresponding task.

-

Right-click the task and synchronize it.

-

Navigate to the execution plan and execute ETL to verify the changes.

To modify one or more tables or columns without any changes to the associated ETL programs (typically to widen a column):

-

Change the table/view properties, as required.

-

Save the mapping and refresh the workflow.

-

Connect to DAC and navigate to corresponding task and refresh it.

Note:

If the changes to the tables or columns are not compatible with the table that is installed in the data mart schema, you will get a warning while making the change. For example, if you are reducing the length of a number column from 15 to 10, the change is not compatible with the data existing in the table.4.9 Modifying an ETL Load Plan (ODI Only)

You may also want to modify an existing ETL to meet your reporting requirements.

See Also:

-

Oracle ® Fusion Middleware Developer's Guide for Oracle Data Integrator 11g/12c

To modify an ETL Load Plan without any changes to the associated tables or columns, perform the following tasks:

-

Identify the Load Plan that needs to be modified in Informatica repository.

-

Identify the Interface/Mapping or Package in the Load Plan that you intend to modify.

-

Open and modify the ETLs (Interface/Mapping/Procedure) in ODI Studio under the Project ArgusAnalytics111.

-

Test and save the changes in ODI Studio.

-

Verify that the changes made have been applied successfully.

-

Regenerate the associated scenario with the Interface/Mapping/Procedure.

-

Connect to DAC and navigate to the corresponding task.

To modify one or more tables or columns without any changes to the associated ETL programs (typically to widen a column):

-

Change the table/view properties, as required.

-

Save the Interface/Mapping and regenerate the associated scenario in ODI Studio.

4.10 Scheduling an ETL Execution Plan (Informatica Only)

When you submit an Execution Plan for execution in DAC, you can schedule it execute at regular intervals.

To schedule an Execution Plan, perform the following tasks:

-

Navigate to the Scheduler tab within the Execute view.

-

Create a new schedule plan.

-

Enter the required details and click Save.

4.11 Scheduling an ETL Load Plan (ODI Only)

When you submit a Load Plan for execution in DAC, you can schedule it execute at regular intervals.

To schedule a Load Plan, perform the following tasks:

-

In the ODI Studio, navigate to the Load Plans and Scenarios section in the Operator tab.

-

Expand the Load Plan that you wish to schedule.

-

Right-click on the Scheduling tree node and select New Scheduling.

-

Enter the required details and click Save.

-

Restart the associated Physical Agent with the Load Plan (default value: PA_AN).

4.12 Customizable User Exits in Oracle Argus Analytics ETLs

The VW_CONFIGURABLE_TARGET_DAYS view, and the VW_CONFIGURABLE_DUESOON_DAYS view can be used to customize some of the attribute values used in Oracle Argus Analytics as per organization requirements.

The views VW_CONFIGURABLE_TARGET_DAYS and VW_CONFIGURABLE_DUESOON_DAYS are used in the source code for the views Pending Cases Fact and Pending Reports Fact present in the Current Metrics of Oracle Argus Analytics and in the ETL SDE views.

These objects are available in the AN_SRC schema created on the Argus Safety DB Instance, specified during Oracle Argus Analytics Installation.

4.12.1 VW_CONFIGURABLE_TARGET_DAYS

The VW_CONFIGURABLE_TARGET_DAYS view is similar to this function. This view, by default, returns 10 days for a serious case and 30 days if the case is not serious, as per the enterprise present. It can be modified accordingly, as per organizational requirements.

Table 4-3 Oracle Argus Analytics Presentation Catalog Attributes Affected - Lock Target Days

| ID | Fact | Column |

|---|---|---|

|

M-CVH01 |

Case Version |

Lock Target Days |

|

M-PC04 |

Pending Cases |

Lock Target Days |

Note:

Refer to the Facts and Sources in Oracle Argus Analytics Presentation Catalog for the other attributes that are dependent on the attributes mentioned in the above table.4.12.2 VW_CONFIGURABLE_DUESOON_DAYS

The VW_CONFIGURABLE_DUESOON_DAYS view is similar to this function. This view is used in the Pending Cases Fact and Pending Reports Fact views present in the Current Metrics. It can be modified accordingly, as per organizational requirements.

Table 4-4 Oracle Argus Analytics Presentation Catalog Attributes Affected - Due Soon Days

| ID | Fact | Column |

|---|---|---|

|

M-PC06 |

Lock Due Soon Date |

|

|

M-PC02 |

Pending Cases |

Earliest Exp Report Due Soon Date |

|

M-PC06 |

Pending Cases |

Lock Due Soon Date |

|

M-PC09 |

Pending Cases |

State Due Soon Date - I |

|

M-PC12 |

Pending Cases |

State Due Soon Date - II |

|

M-PC16 |

Pending Cases |

# Unlocked Cases w Expedited Report Due Soon |

|

M-PC19 |

Pending Cases |

# Unlocked Cases Due Soon |

|

M-PC22 |

Pending Cases |

# Unlocked Cases w State Due Soon - I |

|

M-PC25 |

Pending Cases |

# Unlocked Cases w State Due Soon - II |

Note:

Refer to the Facts and Sources in Oracle Argus Analytics Presentation Catalog for the other attributes that are dependent on the attributes mentioned in the above table.