Using JupyterHub with Notebooks

JupyterHub lets multiple users work together by providing an individual Jupyter notebook server for each user. When you create a Big Data Service cluster, JupyterHub is installed and configured on the cluster nodes.

Note

JupyterHub is only available in Big Data Service 3.0.7 and later clusters.

For information specific to Big Data Service 3.0.26 ODH 1.x or earlier clusters, see Using JupyterHub in Big Data Service 3.0.26 or Earlier.

For information specific to Big Data Service 3.0.27 ODH 2.x or later clusters, see Using JupyterHub in Big Data Service 3.0.27 or Later.

Launching Kernels and Running Spark Jobs

- Access JupyterHub.

- Open a notebook server. You're redirected to the Launcher page.

- You can open one of the several kernels available by default, such as Python, PySpark, Spark and SparkR. To launch a notebook, select File > New > Notebook, and then select Select Kernel or select the corresponding icon under Notebook.

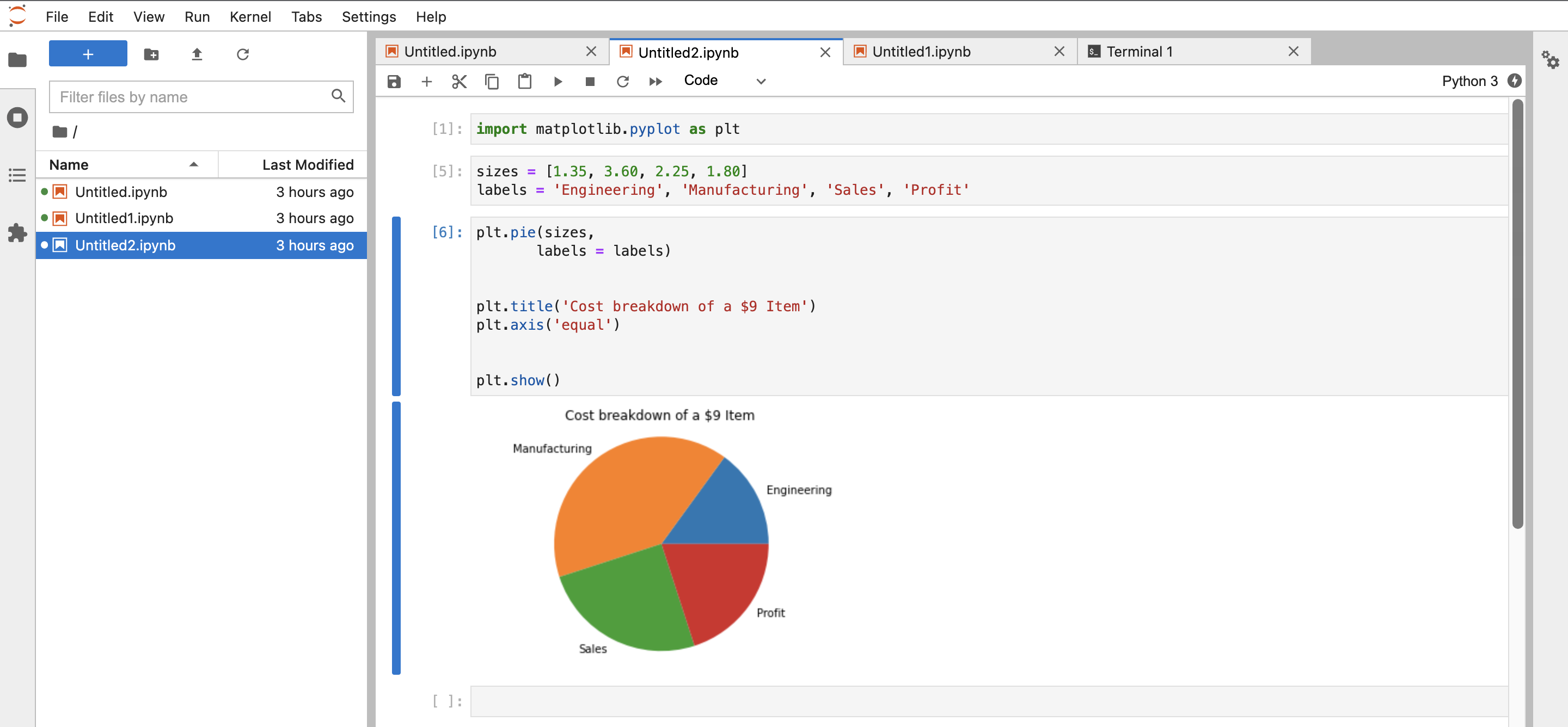

Sample Code for Python Kernel:

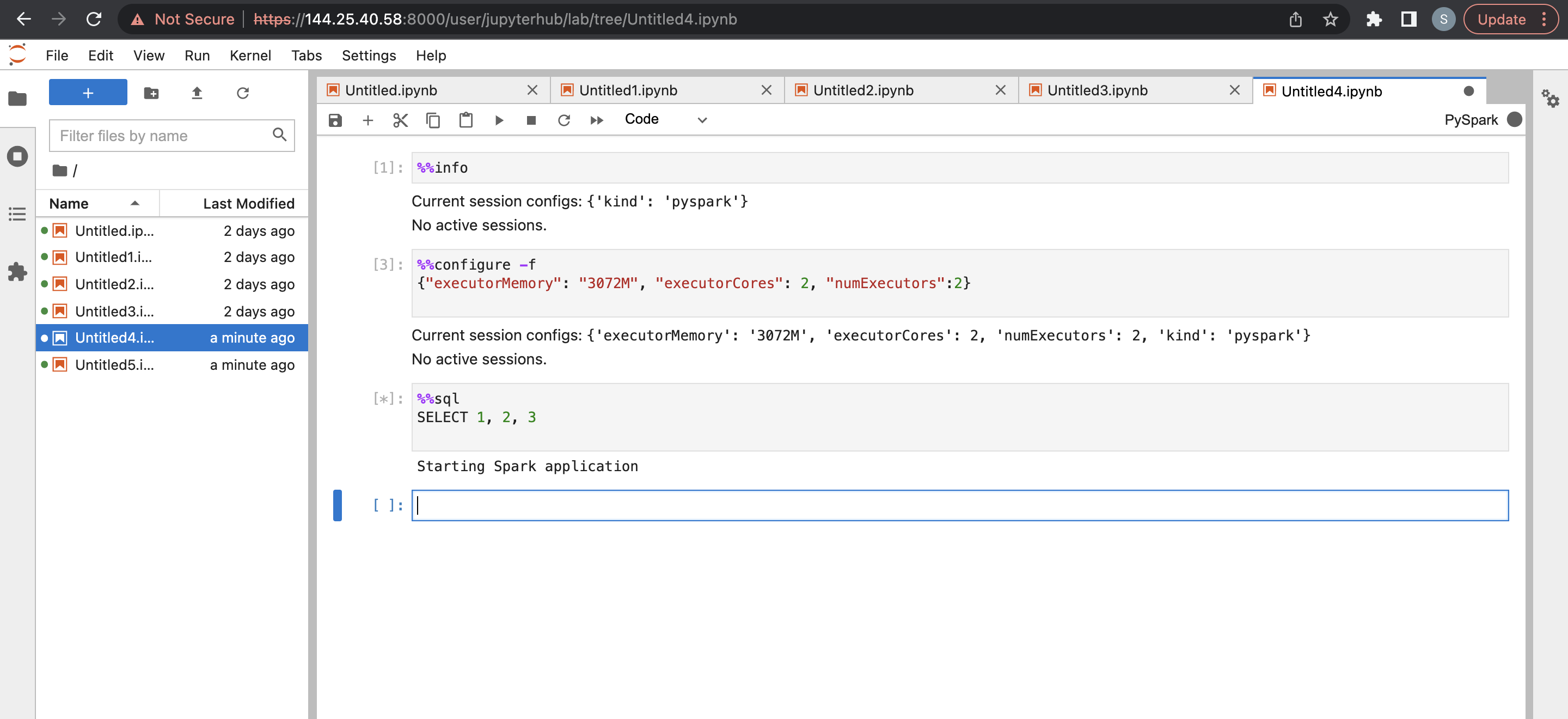

Sample Code For Sparkmagic in PySpark Kernel