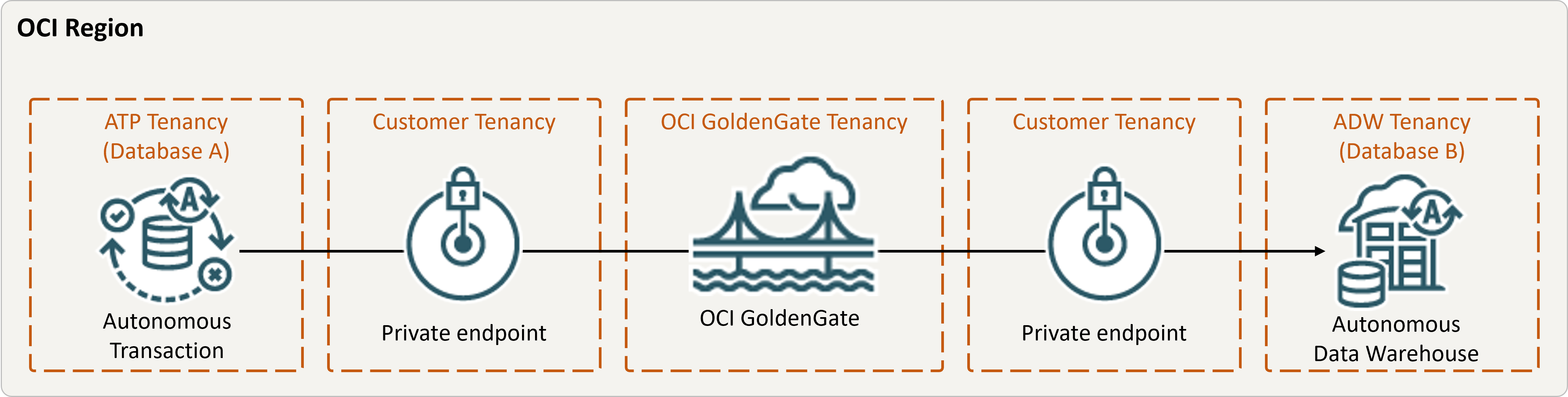

Replicate data between cloud databases in the same region

Learn how to set up Oracle Cloud Infrastructure GoldenGate to replicate data between two Autonomous AI Databases.

Overview

Oracle Cloud Infrastructure GoldenGate lets you to replicate supported databases within the same region. The following steps guide you through how to instantiate a target database using Oracle Data Pump and replicate data from the source to the target.

This quickstart is also available as LiveLab: View the workshop.

Description of the illustration same-region.png

Before you begin

You must have the following in order to proceed:

- An existing source database

- An existing target database

- The source and target database must be in a single tenancy, in the same region

- If you need sample data, download Archive.zip, and then follow the instructions in Lab 1, Task 3: Load the ATP schema.

Task 1: Set up the environment

- Create a Data Replication deployment.

- Create a source Oracle Autonomous AI Transaction Processing (ATP) connection.

- Create a target Autonomous AI Lakehouse (ALK) connection.

- Assign a connection to the deployment.

- Use the SQL tool to enable supplemental logging:

ALTER DATABASE ADD SUPPLEMENTAL LOG DATA - Run the following query in the SQL tool to ensure that

support_mode=FULLfor all tables in the source database:select * from DBA_GOLDENGATE_SUPPORT_MODE where owner = 'SRC_OCIGGLL';

Task 2: Create the Integrated Extract

An Integrated Extract captures ongoing changes to source database.

- On the deployment Details page, click Launch console.

- If required, enter oggadmin for User name and the password you used when creating the deployment, and then click Sign In.

- Add Transaction Data and a Checkpoint Table:

- Open the navigation menu and then click DB Connections.

- Click Connect to database SourceDB.

- In the navigation menu, click Trandata, and then click Add Trandata (plus icon).

- For Schema Name, enter

SRC_OCIGGLL, and then click Submit. - To verify, enter

SRC_OCIGGLLinto the Search field and click Search. - Open the navigation menu and then click DB Connections.

- Click Connect to database TargetDB.

- In the navigation menu, click Checkpoint, and then click Add Checkpoint (plus icon).

- For Checkpoint Table, enter

"SRCMIRROR_OCIGGLL"."CHECKTABLE", and then click Submit.

- Add an Extract.Note

See additional extract parameter options for more information about parameters that you can use to specify source tables.On the Extract Parameters page, append the following lines underEXTTRAIL <trail-name>:-- Capture DDL operations for listed schema tables ddl include mapped -- Add step-by-step history of -- to the report file. Very useful when troubleshooting. ddloptions report -- Write capture stats per table to the report file daily. report at 00:01 -- Rollover the report file weekly. Useful when IE runs -- without being stopped/started for long periods of time to -- keep the report files from becoming too large. reportrollover at 00:01 on Sunday -- Report total operations captured, and operations per second -- every 10 minutes. reportcount every 10 minutes, rate -- Table list for capture table SRC_OCIGGLL.*; - Check for long running transactions. Run the following script on your source

database:

select start_scn, start_time from gv$transaction where start_scn < (select max(start_scn) from dba_capture);If the query returns any rows, then you must locate the transaction's SCN and then either commit or rollback the transaction.

Task 3: Export data using Oracle Data Pump (ExpDP)

Use Oracle Data Pump (ExpDP) to export data from the source database to Oracle Object Store.

- Create an Oracle Object Store

bucket.

Take note of the namespace and bucket name for use with the Export and Import scripts.

- Create an Auth Token, and then copy and paste the token string to a text editor for later use.

- Create a credential in your source database, replacing the

<user-name>and<token>with your Oracle Cloud account username and the token string you created in the previous step:BEGIN DBMS_CLOUD.CREATE_CREDENTIAL( credential_name => 'ADB_OBJECTSTORE', username => '<user-name>', password => '<token>' ); END; - Run the following script in your source database to create the Export Data job.

Ensure that you replace the

<region>,<namespace>, and<bucket-name>in Object Store URI accordingly.SRC_OCIGGLL.dmpis a file that will be created when this script runs.DECLARE ind NUMBER; -- Loop index h1 NUMBER; -- Data Pump job handle percent_done NUMBER; -- Percentage of job complete job_state VARCHAR2(30); -- To keep track of job state le ku$_LogEntry; -- For WIP and error messages js ku$_JobStatus; -- The job status from get_status jd ku$_JobDesc; -- The job description from get_status sts ku$_Status; -- The status object returned by get_status BEGIN -- Create a (user-named) Data Pump job to do a schema export. h1 := DBMS_DATAPUMP.OPEN('EXPORT','SCHEMA',NULL,'SRC_OCIGGLL_EXPORT','LATEST'); -- Specify a single dump file for the job (using the handle just returned -- and a directory object, which must already be defined and accessible -- to the user running this procedure. DBMS_DATAPUMP.ADD_FILE(h1,'https://objectstorage.<region>.oraclecloud.com/n/<namespace>/b/<bucket-name>/o/SRC_OCIGGLL.dmp','ADB_OBJECTSTORE','100MB',DBMS_DATAPUMP.KU$_FILE_TYPE_URIDUMP_FILE,1); -- A metadata filter is used to specify the schema that will be exported. DBMS_DATAPUMP.METADATA_FILTER(h1,'SCHEMA_EXPR','IN (''SRC_OCIGGLL'')'); -- Start the job. An exception will be generated if something is not set up properly. DBMS_DATAPUMP.START_JOB(h1); -- The export job should now be running. In the following loop, the job -- is monitored until it completes. In the meantime, progress information is displayed. percent_done := 0; job_state := 'UNDEFINED'; while (job_state != 'COMPLETED') and (job_state != 'STOPPED') loop dbms_datapump.get_status(h1,dbms_datapump.ku$_status_job_error + dbms_datapump.ku$_status_job_status + dbms_datapump.ku$_status_wip,-1,job_state,sts); js := sts.job_status; -- If the percentage done changed, display the new value. if js.percent_done != percent_done then dbms_output.put_line('*** Job percent done = ' || to_char(js.percent_done)); percent_done := js.percent_done; end if; -- If any work-in-progress (WIP) or error messages were received for the job, display them. if (bitand(sts.mask,dbms_datapump.ku$_status_wip) != 0) then le := sts.wip; else if (bitand(sts.mask,dbms_datapump.ku$_status_job_error) != 0) then le := sts.error; else le := null; end if; end if; if le is not null then ind := le.FIRST; while ind is not null loop dbms_output.put_line(le(ind).LogText); ind := le.NEXT(ind); end loop; end if; end loop; -- Indicate that the job finished and detach from it. dbms_output.put_line('Job has completed'); dbms_output.put_line('Final job state = ' || job_state); dbms_datapump.detach(h1); END;

Task 4: Instantiate the target database using Oracle Data Pump (ImpDP)

Use Oracle Data Pump (ImpDP) to import data into the target database

from the SRC_OCIGGLL.dmp that was exported from the source

database.

- Create a credential in your target database to access Oracle Object Store

(using the same information in the preceding section).

BEGIN DBMS_CLOUD.CREATE_CREDENTIAL( credential_name => 'ADB_OBJECTSTORE', username => '<user-name>', password => '<token>' ); END; - Run the following script in your target database to import data from the

SRC_OCIGGLL.dmp. Ensure that you replace the<region>,<namespace>, and<bucket-name>in Object Store URI accordingly:DECLARE ind NUMBER; -- Loop index h1 NUMBER; -- Data Pump job handle percent_done NUMBER; -- Percentage of job complete job_state VARCHAR2(30); -- To keep track of job state le ku$_LogEntry; -- For WIP and error messages js ku$_JobStatus; -- The job status from get_status jd ku$_JobDesc; -- The job description from get_status sts ku$_Status; -- The status object returned by get_status BEGIN -- Create a (user-named) Data Pump job to do a "full" import (everything -- in the dump file without filtering). h1 := DBMS_DATAPUMP.OPEN('IMPORT','FULL',NULL,'SRCMIRROR_OCIGGLL_IMPORT'); -- Specify the single dump file for the job (using the handle just returned) -- and directory object, which must already be defined and accessible -- to the user running this procedure. This is the dump file created by -- the export operation in the first example. DBMS_DATAPUMP.ADD_FILE(h1,'https://objectstorage.<region>.oraclecloud.com/n/<namespace>/b/<bucket-name>/o/SRC_OCIGGLL.dmp','ADB_OBJECTSTORE',null,DBMS_DATAPUMP.KU$_FILE_TYPE_URIDUMP_FILE); -- A metadata remap will map all schema objects from SRC_OCIGGLL to SRCMIRROR_OCIGGLL. DBMS_DATAPUMP.METADATA_REMAP(h1,'REMAP_SCHEMA','SRC_OCIGGLL','SRCMIRROR_OCIGGLL'); -- If a table already exists in the destination schema, skip it (leave -- the preexisting table alone). This is the default, but it does not hurt -- to specify it explicitly. DBMS_DATAPUMP.SET_PARAMETER(h1,'TABLE_EXISTS_ACTION','SKIP'); -- Start the job. An exception is returned if something is not set up properly. DBMS_DATAPUMP.START_JOB(h1); -- The import job should now be running. In the following loop, the job is -- monitored until it completes. In the meantime, progress information is -- displayed. Note: this is identical to the export example. percent_done := 0; job_state := 'UNDEFINED'; while (job_state != 'COMPLETED') and (job_state != 'STOPPED') loop dbms_datapump.get_status(h1, dbms_datapump.ku$_status_job_error + dbms_datapump.ku$_status_job_status + dbms_datapump.ku$_status_wip,-1,job_state,sts); js := sts.job_status; -- If the percentage done changed, display the new value. if js.percent_done != percent_done then dbms_output.put_line('*** Job percent done = ' || to_char(js.percent_done)); percent_done := js.percent_done; end if; -- If any work-in-progress (WIP) or Error messages were received for the job, display them. if (bitand(sts.mask,dbms_datapump.ku$_status_wip) != 0) then le := sts.wip; else if (bitand(sts.mask,dbms_datapump.ku$_status_job_error) != 0) then le := sts.error; else le := null; end if; end if; if le is not null then ind := le.FIRST; while ind is not null loop dbms_output.put_line(le(ind).LogText); ind := le.NEXT(ind); end loop; end if; end loop; -- Indicate that the job finished and gracefully detach from it. dbms_output.put_line('Job has completed'); dbms_output.put_line('Final job state = ' || job_state); dbms_datapump.detach(h1); END;

Task 5: Add and run a Non-integrated Replicat

- Add and run a Replicat.On the Parameter File screen, replace

MAP *.*, TARGET *.*;with the following script:-- Capture DDL operations for listed schema tables -- ddl include mapped -- -- Add step-by-step history of ddl operations captured -- to the report file. Very useful when troubleshooting. -- ddloptions report -- -- Write capture stats per table to the report file daily. -- report at 00:01 -- -- Rollover the report file weekly. Useful when PR runs -- without being stopped/started for long periods of time to -- keep the report files from becoming too large. -- reportrollover at 00:01 on Sunday -- -- Report total operations captured, and operations per second -- every 10 minutes. -- reportcount every 10 minutes, rate -- -- Table map list for apply -- DBOPTIONS ENABLE_INSTANTIATION_FILTERING; MAP SRC_OCIGGLL.*, TARGET SRCMIRROR_OCIGGLL.*;Note

DBOPTIONS ENABLE_INSTATIATION_FILTERINGenables CSN filtering on tables imported using Oracle Data Pump. For more information, see DBOPTIONS Reference. - Perform Inserts to the source database:

- Return to the Oracle Cloud console and use the navigation menu to navigate back to Oracle AI Database, Autonomous AI Transaction Processing, and then SourceDB.

- On the SourceDB Details page, click Database actions, and then click SQL.

- Enter the following inserts, and then click Run Script:

Insert into SRC_OCIGGLL.SRC_CITY (CITY_ID,CITY,REGION_ID,POPULATION) values (1000,'Houston',20,743113); Insert into SRC_OCIGGLL.SRC_CITY (CITY_ID,CITY,REGION_ID,POPULATION) values (1001,'Dallas',20,822416); Insert into SRC_OCIGGLL.SRC_CITY (CITY_ID,CITY,REGION_ID,POPULATION) values (1002,'San Francisco',21,157574); Insert into SRC_OCIGGLL.SRC_CITY (CITY_ID,CITY,REGION_ID,POPULATION) values (1003,'Los Angeles',21,743878); Insert into SRC_OCIGGLL.SRC_CITY (CITY_ID,CITY,REGION_ID,POPULATION) values (1004,'San Diego',21,840689); Insert into SRC_OCIGGLL.SRC_CITY (CITY_ID,CITY,REGION_ID,POPULATION) values (1005,'Chicago',23,616472); Insert into SRC_OCIGGLL.SRC_CITY (CITY_ID,CITY,REGION_ID,POPULATION) values (1006,'Memphis',23,580075); Insert into SRC_OCIGGLL.SRC_CITY (CITY_ID,CITY,REGION_ID,POPULATION) values (1007,'New York City',22,124434); Insert into SRC_OCIGGLL.SRC_CITY (CITY_ID,CITY,REGION_ID,POPULATION) values (1008,'Boston',22,275581); Insert into SRC_OCIGGLL.SRC_CITY (CITY_ID,CITY,REGION_ID,POPULATION) values (1009,'Washington D.C.',22,688002); - In the OCI GoldenGate Deployment Console, click the Extract name (UAEXT), and then click Statistics. Verify that SRC_OCIGGLL.SRC_CITY is listed with 10 inserts.

- Go back to the Overview screen, click the Replicat name (REP), and then click Statistics. Verify that SRCMIRROR_OCIGGLL.SRC_CITY is listed with 10 inserts