2 Understanding Key Concepts of Enterprise Data Quality

This chapter provides information about key EDQ concepts.


2.1 Understanding EDQ Terms

The most important terms used in EDQ are:

- Project: A group of related processes working on a common set, or sets, of data using shared reference data.

- Data Store: A connection to a store of data, whether the data is stored in a database or in one or more files. A data store may be used as the source of data for a process, as the target to which the written Staged Data results of a process are exported, or both.

- Process: Specifies a set of actions to be performed on some specified data. It comprises a series of processors, each specifying how data is to be handled and the rules that should be applied to it. A process may produce:

  - Staged data: data or metrics produced by processing the input data and choosing to write output data to the results database.

  - Results data: metric information summarizing the results of the process. For example, a simple validation process may record the number of records that failed and the number of records that passed validation.

- Processor: A logical element that performs some operation on the data. Processors can perform statistical analysis, audit checks, transformations, matching, or other operations. Processors are chained together to form processes.

- Published Processors: Additional processors (in addition to those in the Processor Library) that have been installed from an Extension Pack (such as the Customer Data pack) or published onto the server by EDQ users. They are included in the Project Browser so that they can be packaged like other objects. There are three types of Published processors: Template, Reference, and Locked Reference.

- Reference Data: Consists of lists and maps that can be used by a processor to perform checking, matching, transformations and so on. Reference data can be supplied as part of EDQ or by a third party, or can be defined by the user.

- Staged Data: Consists of data snapshots and data written by processes, and is stored within the Results schema.

- Snapshot: A captured copy of external data stored within the EDQ repository.

- Job: A configured and ordered set of tasks that may be instigated either by EDQ or externally. Examples of tasks include executions of file downloads, snapshots, processes, and exports.

- Images: Used to customize the icon associated with a processor.

- Exports: There are two types of exports:

  - A prepared export (Staged Data Export or Results Book Export) that uses a saved configuration.

  - An ad-hoc export of the current results from the Results Browser to an Excel file.

- Web Services: Used to deploy processes (as configured in Director) to provide easy integration with source systems for real-time data auditing, cleansing, and matching (for example, for real-time duplicate prevention).

- Run Profiles: Optional templates that specify configuration settings that you can use to override the default job run settings.

- Issues: Allows users to keep a record of their key findings when analyzing data, and also provides a way for work on a project to be allocated and tracked amongst several users.

- Project Notes: Allows you to use Director as the definitive repository for all information associated with the project. Any information that needs to be made available to all users working on a project can be added as a note throughout the progress of a project.

2.2 What Is Data Capture?

The data capture process begins with retrieving the data to be captured from an external data source. Data can be captured from databases, text files, XML files and so on. For a comprehensive list of possible types of data source, refer to the Data Stores topic in the Concepts section of the Online Help.

Depending on the type of data source, data capture may involve:

- Running a single SQL query on the source system.
- Sequentially processing a delimited or fixed format file.
- Processing an XML file to produce a stream of data.

As the data is retrieved, it is processed by a single thread. This involves:

- Assigning an internal sequence number to each input record. This is usually a monotonically increasing number for each row.
- Batching the rows into work units. Once a work unit is filled, it is passed into the results database work queue.

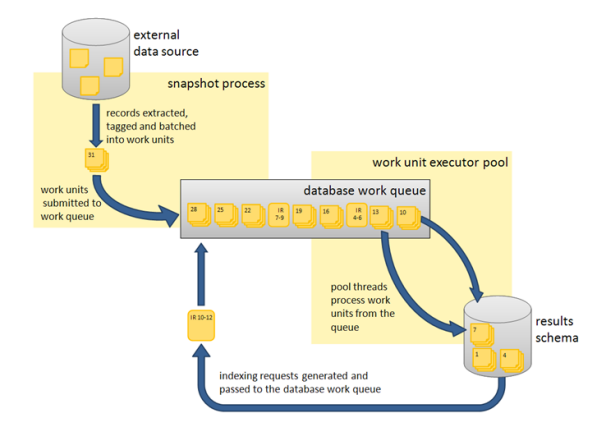

The database work queue is made up of work requests — mostly data insertion or indexing requests — to be executed on the database. The queue is processed by a pool of threads that retrieve work units from the queue, obtain a database connection to the appropriate database, and execute the work. In the case of snapshotting, the work will consist of using the JDBC batch API to load groups of records into a table.

Once all the data has been inserted for a table, the snapshot process creates one or more indexing requests and adds them to the database work queue. At least one indexing request will be created per table to index the unique row identifier. Depending on the volume of data in the snapshot and the configuration of the snapshot process, other columns in the captured data may also be indexed.
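The capture flow described in this section can be sketched roughly as follows. This is an illustration only, not EDQ's implementation: the work unit size, the function names (`capture`, `drain`, `snapshot`), and the in-memory list that stands in for the results database are all assumptions. For simplicity, the reader fills the queue before the workers start, whereas the text describes both running as data arrives.

```python
import queue
import threading
from concurrent.futures import ThreadPoolExecutor

WORK_UNIT_SIZE = 100  # assumed size of a work unit, for illustration only


def capture(rows, work_queue, unit_size=WORK_UNIT_SIZE):
    """Single reader: number each row and batch the rows into work units."""
    unit = []
    for seq, row in enumerate(rows, start=1):  # internal sequence number
        unit.append((seq, row))
        if len(unit) == unit_size:
            work_queue.put(unit)  # a filled work unit joins the work queue
            unit = []
    if unit:  # flush the final, partially filled work unit
        work_queue.put(unit)


def drain(work_queue, table, lock):
    """Worker: take work units off the queue and 'insert' them."""
    while True:
        try:
            unit = work_queue.get_nowait()
        except queue.Empty:
            return
        with lock:  # stands in for a batched insert over a database connection
            table.extend(unit)


def snapshot(rows, workers=4):
    """Capture rows, then let a pool of workers load them into the 'table'."""
    work_queue = queue.Queue()
    table, lock = [], threading.Lock()
    capture(rows, work_queue)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for _ in range(workers):
            pool.submit(drain, work_queue, table, lock)
    return sorted(table)  # order by sequence number for inspection
```

Because each row carries its own sequence number, the order in which workers insert work units does not matter: the original row order can always be recovered.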

2.2.1 Understanding Network Communications and CPU Load

Snapshotting is expected to generate:

- I/O and CPU load on the machine hosting the data source while data is read
- CPU load on the web application server caused by the snapshot process reading data and grouping it for insertion
- I/O and CPU load on the web application server, caused by the database work unit executor threads
- A significant amount of I/O on the machine hosting the EDQ Results database as the data is inserted into a new table
- A significant amount of I/O and some CPU load on the machine hosting the Results database as the data is indexed

For example, a default EDQ installation on a 4-core server taking a snapshot of 10,000 rows of data, 10 columns wide, would generate SQL of the following form:

```sql
DROP TABLE DN_1;
CREATE TABLE DN_1 (record_id, column1, column2, ..., column10);
```

This is followed by 100 bulk insert statements of the form:

```sql
INSERT INTO DN_1 (record_id, column1, column2, ..., column10) VALUES ( ?, ?, ..., ? )
```

each taking a group of 100 sets of parameters (10,000 rows in 100 batches). The bulk inserts would be executed in parallel over four separate database connections, one per CPU core. The table is then analyzed:

```sql
ANALYZE TABLE DN_1 ESTIMATE STATISTICS SAMPLE 10 PERCENT
```

And finally, eleven CREATE INDEX... statements are issued, indexing each of the columns in the new table (the original ten columns, plus the record_id). The CREATE INDEX statements would also be executed in parallel over four database connections.
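To make the arithmetic in this example concrete, the following sketch replays the same sequence of statements against an in-memory SQLite database. It is only an approximation under stated assumptions: SQLite stands in for the Results database, `executemany` stands in for the JDBC batch API, the plain `ANALYZE` statement replaces the Oracle-specific one, the index names are invented, and everything runs on one connection rather than four in parallel.

```python
import sqlite3

COLUMNS = 10  # data columns in the snapshot
BATCH = 100   # rows per bulk insert


def take_snapshot(conn, rows):
    """Load rows into DN_1 in batches, then index every column."""
    cols = ["record_id"] + [f"column{i}" for i in range(1, COLUMNS + 1)]
    placeholders = ", ".join("?" for _ in cols)

    conn.execute("DROP TABLE IF EXISTS DN_1")
    conn.execute(f"CREATE TABLE DN_1 ({', '.join(cols)})")

    insert = f"INSERT INTO DN_1 ({', '.join(cols)}) VALUES ({placeholders})"
    batches = 0
    for start in range(0, len(rows), BATCH):
        conn.executemany(insert, rows[start:start + BATCH])  # one bulk insert
        batches += 1

    conn.execute("ANALYZE")  # SQLite's way of gathering statistics
    for col in cols:  # one index per column, record_id included
        conn.execute(f"CREATE INDEX DN_1_{col}_idx ON DN_1 ({col})")
    conn.commit()
    return batches, len(cols)
```

Called with 10,000 rows of 11 values each, `take_snapshot` issues 100 bulk inserts and creates 11 indexes, which matches the counts given in the example.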

2.3 What Is General Data Processing?

Once the data has been captured, it is ready for processing. The reader processor provides the downstream processors with managed access to the data, and the downstream processors produce results data. If any writer processors are present, they will write the results of processing back to the staged data repository.

Running a process causes the web application server to start a number of process execution threads. The default configuration of EDQ will start as many threads as there are cores on the EDQ application server machine.

2.3.1 What Is Streaming?

Instead of being captured in a snapshot and stored in the results database (other than temporarily during collation), data can be pulled from a source and pushed to targets as a stream.

2.3.2 What Is Work Sharing?

Each process execution thread is assigned a subset of the data to process. When the input data for a process is a data set of known size, such as snapshot or staged data, each thread will execute a query to retrieve a subset of the data, identified by the unique row IDs assigned during snapshotting. So, in the example scenario in Section 2.2.1, "Understanding Network Communications and CPU Load", which describes processing 10,000 records of data on a 4-core machine, four queries will be issued against the Results schema. The queries would be of the form:

```sql
SELECT record_id, column1, column2, ..., column10
FROM DN_1
WHERE record_id > 0 AND record_id <= 2500;
```
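The boundaries of the per-thread queries follow from dividing the record ID range evenly among the threads. A small sketch of that division is shown below; the helper names `partition_ranges` and `where_clause` are hypothetical, and the handling of a remainder (spreading the extra records over the first threads) is an assumption, since the example divides evenly.

```python
def partition_ranges(total_records, threads):
    """Split record IDs 1..total_records into contiguous (lower, upper] ranges."""
    base, extra = divmod(total_records, threads)
    ranges, lower = [], 0
    for i in range(threads):
        upper = lower + base + (1 if i < extra else 0)
        ranges.append((lower, upper))
        lower = upper
    return ranges


def where_clause(lower, upper):
    """Build the filter one thread would use for its share of the records."""
    return f"WHERE record_id > {lower} AND record_id <= {upper}"


for lower, upper in partition_ranges(10_000, 4):
    print(where_clause(lower, upper))
```

For 10,000 records and four threads this yields the ranges (0, 2500], (2500, 5000], (5000, 7500] and (7500, 10000], the first of which corresponds to the query shown above.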

In the case where the process is not run against a data set of known size, such as a job scheduled to run directly against a data source, records are shared among the process execution threads by reading all records into a queue, which is then consumed by the process execution threads.

Each process execution thread is also made aware of the sequence of processors that comprise the process. The process execution threads pass the records through each of the appropriate processors. As the processors work, they accumulate results that need to be stored in the Results schema and, in the case of writer processors, they may also accumulate data that needs to be written to staged data. All this data is accumulated into insertion groups and added into database work units, which are processed as described in Section 2.2, "What Is Data Capture?".

Once an execution thread has processed all its assigned records, it waits for all other process execution threads to complete. The process execution threads then enter a collation phase, during which the summary data from the multiple copies of the process are accumulated and written to the Results database by the database work queue.
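The wait-then-collate behavior can be illustrated with a barrier: each thread accumulates its own results, waits for the others, and only then merges its summary into the shared totals. The sketch below is a simplification under assumptions. The function name `run_process` and the `is_valid` callback are hypothetical, a validation count stands in for real processor results, and records are shared out in strided slices rather than by record ID range to keep the code short.

```python
import threading
from collections import Counter


def run_process(records, is_valid, threads=4):
    """Validate records on several threads, then collate the per-thread counts."""
    partial = [Counter() for _ in range(threads)]  # one result set per thread
    summary = Counter()
    barrier = threading.Barrier(threads)
    lock = threading.Lock()

    def worker(index):
        # Each thread processes only its own share of the records.
        for record in records[index::threads]:
            partial[index]["passed" if is_valid(record) else "failed"] += 1
        barrier.wait()  # wait until every thread has processed its records
        with lock:  # collation: merge this thread's results into the summary
            summary.update(partial[index])

    pool = [threading.Thread(target=worker, args=(i,)) for i in range(threads)]
    for thread in pool:
        thread.start()
    for thread in pool:
        thread.join()
    return dict(summary)
```

For example, `run_process(["a", "", "b"], bool)` treats empty strings as failures and returns a single merged count, regardless of which thread saw which record.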

The following behavior is expected during batch processing:

- Read load on the Results schema as the captured data is read.
- CPU load on the web application server as the data is processed.
- Significant write load on the Results schema as results and staged data are written to the schema.
- Reduced CPU load as the collation phase is entered.
- A small amount of further database work as any outstanding database work units are processed and accumulated results written.
- Further write load on the Results schema at the end of the collation phase, in the form of requests to index the results and staged data tables, as necessary. The size and number of the index requests will vary, depending on data volumes and system configuration.

Processes that are heavily built around cleaning and validation operations will tend to be bound by the I/O capacity of the database. Some processors consume significant CPU resource, but generally the speed of operation is determined by how quickly data can be provided from and written to the Results schema.

2.3.3 What Is Whole Record Set Processing?

There are a number of processors, such as the Record Duplication Profiler and Duplicate Check processors, that require access to the whole record set in order to work. If these processors only had access to a subset of the data, they would be unable to detect duplicate records with any accuracy. These processors use multiple threads to absorb the input records and build them into a temporary table. Once all the records have been examined, they are re-emitted by distributing the records amongst the various process execution threads. There is no guarantee that a record will be emitted on the same process execution thread that absorbed it.
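A short sketch shows why a duplicate check needs the whole record set. The function names are hypothetical, and a simple exact-value count stands in for the real processors: when each thread only inspects its own share of the records, duplicates whose copies land on different threads go unnoticed.

```python
from collections import Counter


def duplicates_in(records):
    """Return the values that occur more than once in the given records."""
    counts = Counter(records)
    return {value for value, n in counts.items() if n > 1}


def per_thread_duplicates(records, threads):
    """Check each thread's share in isolation and combine the findings."""
    found = set()
    for i in range(threads):
        found |= duplicates_in(records[i::threads])
    return found


names = ["ann", "bob", "ann", "cat", "bob", "dan"]
whole_set = duplicates_in(names)              # sees both copies of every name
split_set = per_thread_duplicates(names, 2)   # the copies of "bob" are separated
```

Here the whole-set check finds both "ann" and "bob", while the per-thread check finds only "ann", because the two "bob" records were assigned to different threads.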

2.3.4 What Are Run Labels?

During the design phase of a project, processes and jobs are typically run interactively using Director. When a job is run in Director, results will typically be written for inspection by the user so that the configuration of processes and jobs can be iterated to work optimally with the in-scope data. The amount of data to write can be controlled in Director.

However, when a job is deployed to production, such detailed results are not normally required, and jobs are typically run with Run Labels, either from the Server Console or from the command line. When run with a Run Label, a job will only write the staged data and results views that the user has configured to be staged in the job, which improves performance.

Note:

Jobs may be designed independently of any specific source or target of data. Such jobs will normally be run with a set of command line parameters, or a stored Run Profile that sets the same parameters, that dynamically change key configuration points such as the physical source of the data to read, key processing options, and the physical target of the data to write. Such jobs need to be run with a Run Label so that the written data and results are clearly separated from other runs of the job on different data. Server Console allows users to inspect results by Run Label.

2.4 What Is Match Processing?

EDQ match processors are handled in a significantly different way from the simpler processors. Due to the nature of the work carried out by match processors, multiple passes through the data are required.

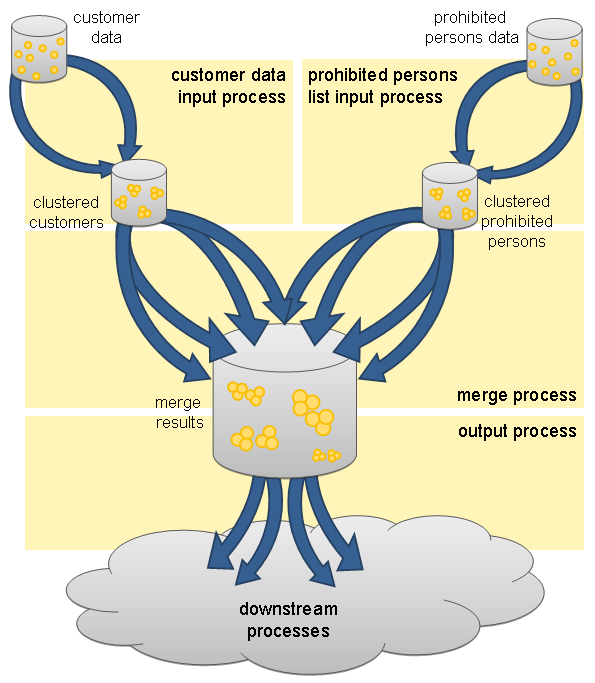

A match processor is executed by treating it as a series of sub-processes. For example, consider a process designed to match a customer data snapshot against a list of prohibited persons. The process contains a match processor that is configured to produce a list of customer reference numbers and related prohibited person identifiers. Each data stream that is input to, or output from, the match processor is considered to be a sub-process of the match processor. Therefore, there are three sub-processes in this example, representing the customer data input stream, the prohibited persons input stream and the output data stream of the match processor. The match processor itself forms a fourth sub-process, which effectively couples the data inputs to its outputs. Each sub-process is assigned the normal quota of process execution threads, so on a 4-core machine, each sub-process would have four process execution threads.

When execution of the match processor begins, the input data sub-processes run first, processing the input data. At this point, there is no work available for the match or match output sub-processes, which remain dormant. The input data sub-processes generate cluster values for the data streams and store the cluster values and incoming records in the Results schema, using the normal database work units mechanism.

Once the input data sub-processes have processed all the available records, they terminate and commence collation of their sub-process results. Meanwhile, the match sub-process will become active. The match sub-process then works through a series of stages, with each process execution thread waiting for all the other process execution threads to complete each stage before they progress to the next. Each time a new stage begins, the work will be subdivided amongst the process execution threads in a manner that is appropriate at that stage. The processing stages are:

| Phase | Description |
|---|---|
| Comparison phase | The customer data and prohibited people data is retrieved, ordered by cluster values. The data is gathered into groups of equal cluster values, queued and passed to the match process threads to compare the records. Where relationships are found between records, the relationship information is written to the Results schema. |
| Provisional grouping phase | The relationship information detected during the comparison phase is retrieved in chunks and provisional groups of related records are formed. The relationship chunks are processed in parallel by the match processor threads. These provisional groups are written back to the Results database. |
| Final grouping phase | The provisional group table is inspected by a single thread to check for groups that have been artificially split by chunk boundaries. If any such cross-chunk groups are found, they are merged into a single group. |
| Merged output phase | Each of the match processor threads retrieves an independent subset of the match groups and forms the merged output, merging multiple records into single output records. |

This completes the match sub-process, and so the match processor execution threads now move into their collation phase.
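The comparison and grouping phases can be sketched as follows. This is an illustrative approximation, not EDQ's matching engine: the cluster key (first letter of a name), the `is_match` callback, the chunk size, and the use of a union-find structure for grouping are all assumptions chosen to keep the example small.

```python
from itertools import combinations


def cluster_key(record):
    """Hypothetical cluster value: the first letter of the name."""
    return record["name"][:1].lower()


def compare(records, is_match):
    """Comparison phase: only records sharing a cluster value are compared."""
    clusters = {}
    for record in records:
        clusters.setdefault(cluster_key(record), []).append(record)
    relationships = []
    for key in sorted(clusters):  # ordered by cluster value
        for a, b in combinations(clusters[key], 2):
            if is_match(a, b):
                relationships.append((a["id"], b["id"]))
    return relationships


def group(pairs):
    """Form groups of related record IDs from relationship pairs (union-find)."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path compression
            x = parent[x]
        return x

    for a, b in pairs:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a
    groups = {}
    for x in parent:
        groups.setdefault(find(x), set()).add(x)
    return sorted(sorted(members) for members in groups.values())


def provisional_groups(pairs, chunk_size):
    """Provisional grouping: each chunk of relationships is grouped on its own."""
    provisional = []
    for start in range(0, len(pairs), chunk_size):
        provisional.extend(group(pairs[start:start + chunk_size]))
    return provisional


def final_groups(provisional):
    """Final grouping: merge provisional groups that share a record."""
    links = [(members[0], member) for members in provisional for member in members]
    return group(links)
```

With the relationships `[(1, 2), (3, 4), (2, 3), (5, 6)]` and a chunk size of 2, the provisional phase yields four groups, two of which are fragments of the same group split by the chunk boundary. The final phase merges them into `[1, 2, 3, 4]` and leaves `[5, 6]` unchanged.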

At this point, the sub-process associated with the output of match data becomes active. The output data is divided amongst the process execution threads for the output sub-process and passed to the processors downstream from the match processor. From this point onwards, the data is processed in the normal batch processing way.

Benchmarks and production experience have shown that the comparison phase of a match processor is one of the few EDQ operations that is likely to become CPU bound. When anything other than very simple comparison operations are performed, the ability of the CPU to handle the comparison load limits the process. The comparison operations scale very well and are perfectly capable of utilizing all CPU cycles available to the EDQ Web Application Server.

Tip:

Oracle recommends that you familiarize yourself with the material contained in the "Advanced Features: Matching Concept Guide" in the Online Help.

2.5 What Is Real-Time Processing?

EDQ is capable of processing messages in real time. Currently, EDQ supports messaging using:

- Web Services
- JMS-enabled messaging software

When configured for real-time message processing, the server starts multiple process execution threads to handle messages as they are received. An incoming message is handed to a free process execution thread, or placed in a queue until a process execution thread becomes free. Once the message has been processed, any staged data will be written to the Results database, and the process execution thread will either pick up the next message from the queue, if one exists, or become available for the next incoming message.
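The message handling described here resembles a standard worker pool fed by a queue. The sketch below shows that pattern under assumptions: the function name `serve` and the `handler` callback are hypothetical, an in-process queue stands in for Web Services or JMS delivery, and a `None` sentinel is used to shut the workers down once the sample messages are exhausted.

```python
import queue
import threading


def serve(messages, handler, threads=4):
    """Process messages on a pool of worker threads fed by a shared queue."""
    inbox = queue.Queue()
    results, lock = [], threading.Lock()

    def worker():
        while True:
            message = inbox.get()  # blocks until a message is available
            if message is None:    # sentinel: no more messages for this worker
                return
            response = handler(message)
            with lock:  # stands in for writing staged data
                results.append(response)

    pool = [threading.Thread(target=worker) for _ in range(threads)]
    for thread in pool:
        thread.start()
    for message in messages:  # incoming messages wait here until a thread is free
        inbox.put(message)
    for _ in pool:
        inbox.put(None)
    for thread in pool:
        thread.join()
    return results
```

The order of the returned results is not guaranteed, because whichever thread is free picks up the next message.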

When processing data in real time, the process may be run in interval mode. Interval mode allows the process to save results at set intervals so that they can be inspected by a user and published to the EDQ Dashboard. The interval can be determined either by the number of records processed or by a time limit. When an interval limit is reached, EDQ starts a new set of process execution threads for the process. Once all the new process execution threads have completed any necessary initialization, any new incoming messages are passed to the new threads. Once the old set of process execution threads has finished processing any outstanding messages, the system directs those threads to enter the collation phase and save any results, after which the old process execution threads are terminated and the data is available for browsing.
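The roll-over at an interval boundary can be sketched in a heavily simplified, single-threaded form. Everything named here is an assumption for illustration: the class `IntervalProcess`, its `handler` callback, and the record-count interval. A fresh `Counter` stands in for the new set of process execution threads, and appending to `published` stands in for collation and saving results.

```python
from collections import Counter


class IntervalProcess:
    """Accumulate results and publish them every `interval_records` messages."""

    def __init__(self, handler, interval_records):
        self.handler = handler
        self.interval_records = interval_records
        self.current = Counter()  # results held by the active thread set
        self.published = []       # collated results, one entry per interval

    def on_message(self, message):
        self.current[self.handler(message)] += 1
        if sum(self.current.values()) >= self.interval_records:
            self._roll_over()

    def _roll_over(self):
        # Swap in a fresh accumulator first, so new messages are never blocked,
        # then collate and publish the results of the finished interval.
        finished, self.current = self.current, Counter()
        self.published.append(dict(finished))


process = IntervalProcess(lambda m: "valid" if m else "invalid", interval_records=3)
for message in ["a", "", "b", "c", "d"]:
    process.on_message(message)
```

After these five messages, one interval has been published (two valid records and one invalid), and the two most recent records are still held in the active accumulator, waiting for the next interval boundary.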