2 Supported MAA Architectures

This chapter describes the supported Maximum Availability Architecture (MAA) solutions that provide continuous availability and protect an Oracle WebLogic Server system against downtime across multiple data centers. It also describes how the Continuous Availability features are used with each architecture.

The supported MAA solutions include:

- Active-Active Application Tier with Active-Passive Database Tier
- Active-Passive Application Tier with Active-Passive Database Tier
- Active-Active Stretch Cluster with Active-Passive Database Tier

Active-Active Application Tier with Active-Passive Database Tier

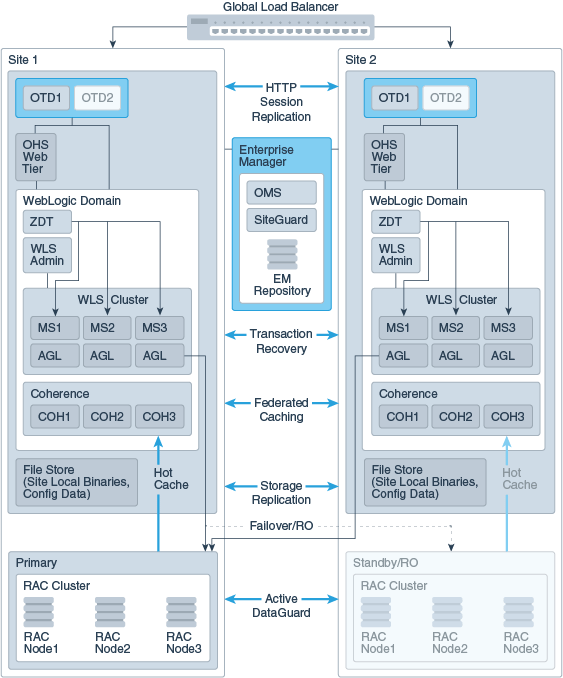

The following figure shows a recommended continuous availability solution using an Active-Active application infrastructure tier with an Active-Passive database tier.

Figure 2-1 Topology for Active-Active Application infrastructure Tier with Active-Passive Database Tier


The key aspects of this sample topology include:

- Two separate WebLogic domains configured in two different data centers, Site 1 and Site 2. The domains at both sites are active. Each domain includes:
  - A collection of Managed Servers (MS1, MS2, and MS3) in a WebLogic Server cluster, managed by the WebLogic Server Admin Server in the domain. In this sample, Active GridLink (AG) connects the Managed Servers to the primary database. (Although a generic data source or a multi data source can be used, Active GridLink is preferable because it offers high availability and improved performance.) The Zero Downtime Patching (ZDT) arrows represent patching the Managed Servers in a rolling fashion.
  - A Coherence cluster (COH1, COH2, and COH3) managed by the WebLogic Server Admin Server in the domain.
- A global load balancer.
- WebLogic Server HTTP session replication across clusters.
- Two instances of Oracle Traffic Director (OTD) at each site, one active and one passive. OTD can balance requests to the web tier or to the WebLogic Server cluster.
- Oracle HTTP Server (OHS) web tier. (Optional component, depending on the type of environment.)
- A file store for configuration data, local binaries, logs, and so on, replicated across the two sites using any replication technology.
- Oracle Site Guard, a component of Oracle Enterprise Manager Cloud Control, which orchestrates failover and switchover of sites.
- Two separate Oracle RAC database clusters in two different data centers. The primary active Oracle RAC database cluster is at Site 1. Site 2 contains an Oracle RAC database cluster in standby (passive) read-only mode. The clusters can contain transaction logs, JMS stores, and application data. Data is replicated using Oracle Active Data Guard. (Oracle recommends Oracle RAC database clusters because they provide the best level of high availability, but they are not required. A single database or multitenant database can also be used.)
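
The rolling patching that the ZDT arrows represent can be illustrated with a short sketch. The following Python code is an illustration only, not the Zero Downtime Patching implementation or its API. The `ManagedServer` class, the `rolling_patch` function, and the version strings are hypothetical names introduced for this example. The sketch assumes that servers are patched one at a time so that the remaining cluster members keep serving requests.

```python
from dataclasses import dataclass


@dataclass
class ManagedServer:
    """Hypothetical stand-in for a Managed Server in a cluster."""
    name: str
    version: str
    running: bool = True


def rolling_patch(servers, new_version, min_running=1):
    """Patch servers one at a time, keeping at least min_running others up.

    Returns the ordered list of actions taken. This models the idea of a
    rolling update only; it does not call any WebLogic Server tooling.
    """
    actions = []
    for server in servers:
        others_up = sum(1 for s in servers if s is not server and s.running)
        if others_up < min_running:
            raise RuntimeError(
                f"cannot stop {server.name}: only {others_up} other server(s) running"
            )
        server.running = False
        actions.append(f"stop {server.name}")
        server.version = new_version
        actions.append(f"patch {server.name}")
        server.running = True
        actions.append(f"start {server.name}")
    return actions


# Example: patch a three-server cluster from a hypothetical 1.0 to 1.1.
cluster = [ManagedServer(name, "1.0") for name in ("MS1", "MS2", "MS3")]
for action in rolling_patch(cluster, "1.1"):
    print(action)
```

Because at most one server is stopped at any time, a cluster of three Managed Servers always has two members available during the update.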

This topology uses the Continuous Availability features as follows:

- Automated cross-domain transaction recovery: Because both domains are active, you can use the full capabilities of this feature as described in "Automated Cross-Domain Transaction Recovery". In this architecture, transactions can be recovered automatically, without manual intervention.
- WebLogic Server Zero Downtime Patching: Because both domains are active, you can orchestrate the rollout of updates separately on each site. See "WebLogic Server Zero Downtime Patching".
- Coherence Federated Caching: You can use the full capabilities of this feature as described in "Coherence Federated Caching". Cached data is always replicated between clusters. Applications in different sites have access to a local cluster instance.
- Coherence GoldenGate HotCache: Updates the Coherence cache in real time whenever changes are made on the active database. See "Coherence GoldenGate HotCache".
- Oracle Traffic Director: Adjusts traffic routing to application servers depending on server availability. You can achieve high availability with Oracle Traffic Director by configuring a pair of instances at each site, either active-active or active-passive. See "Oracle Traffic Director".
- Oracle Site Guard: Because only the database is in standby mode in this architecture, Oracle Site Guard controls database failover only. It does not apply to the application infrastructure tier because both domains are active. For more information, see "Oracle Site Guard".
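
The idea of adjusting traffic routing according to server availability, as described for Oracle Traffic Director in the list above, can be sketched as follows. This is a simplified illustration and does not represent how Oracle Traffic Director is implemented or configured. The `AvailabilityAwareRouter` class and its methods are hypothetical names, and round-robin selection is an assumption made for the example.

```python
class AvailabilityAwareRouter:
    """Round-robin router that skips servers marked as unavailable."""

    def __init__(self, servers):
        self._servers = list(servers)
        self._available = {name: True for name in self._servers}
        self._position = 0

    def mark(self, name, available):
        """Record the result of a (hypothetical) health check."""
        self._available[name] = available

    def route(self):
        """Return the next available server, or raise if none is available."""
        for _ in range(len(self._servers)):
            candidate = self._servers[self._position % len(self._servers)]
            self._position += 1
            if self._available[candidate]:
                return candidate
        raise RuntimeError("no available servers")


router = AvailabilityAwareRouter(["MS1", "MS2", "MS3"])
router.mark("MS2", False)  # MS2 fails its health check
print([router.route() for _ in range(4)])
```

While MS2 is marked unavailable, requests alternate between MS1 and MS3. Marking MS2 available again returns it to the rotation.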

Active-Passive Application Tier with Active-Passive Database Tier

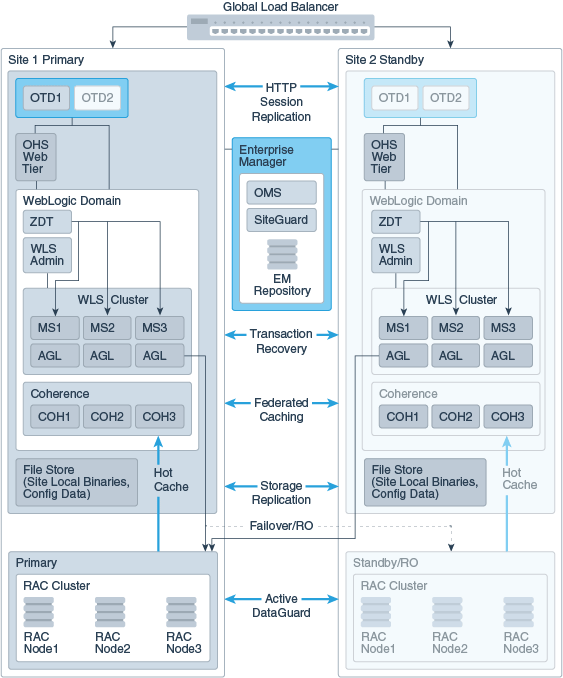

The following figure shows a recommended continuous availability topology using an Active-Passive application infrastructure tier with an Active-Passive database tier.

Figure 2-2 Topology for Active-Passive Application infrastructure Tier with Active-Passive Database Tier


The key aspects of this topology include:

- Two separate WebLogic domains configured in two different data centers, Site 1 and Site 2. The domain at Site 1 is active and the domain at Site 2 is in standby (passive) mode.

  All active-passive domain pairs must be configured with a symmetric topology. They must be identical and use the same domain configuration, such as directory names and paths, port numbers, user accounts, load balancers and virtual server names, and the same versions of the software. Use host names, not static IP addresses, to specify the listen addresses of the Managed Servers.

  Each domain includes:
  - A collection of Managed Servers (MS1, MS2, and MS3) in a WebLogic Server cluster, managed by the WebLogic Server Admin Server in the domain. In this sample, Active GridLink (AG) connects the Managed Servers to the primary database. (Although a generic data source or a multi data source can be used, Active GridLink is preferable because it offers high availability and improved performance.) The Zero Downtime Patching (ZDT) arrows represent patching the Managed Servers in a rolling fashion.
  - A Coherence cluster (COH1, COH2, and COH3) managed by the WebLogic Server Admin Server in the domain.
- A global load balancer.
- WebLogic Server HTTP session replication across clusters.
- Two instances of Oracle Traffic Director (OTD) at each site. OTD can balance requests to the web tier or to the WebLogic Server cluster. At Site 1, one instance is active and one is passive. At Site 2, both instances are on standby. When Site 2 becomes active, the OTD instances at that site start routing requests.
- Oracle HTTP Server (OHS) web tier. (Optional component, depending on the type of environment.)
- A file store for configuration data, local binaries, logs, and so on, replicated across the two sites using any replication technology.
- Oracle Site Guard, a component of Oracle Enterprise Manager Cloud Control, which orchestrates failover and switchover of sites.
- Two separate Oracle RAC database clusters in two different data centers. The primary active Oracle RAC database cluster is at Site 1. Site 2 contains an Oracle RAC database cluster in standby (passive) read-only mode. The clusters can contain transaction logs, JMS stores, and application data. Data is replicated using Oracle Active Data Guard. (Oracle recommends Oracle RAC database clusters because they provide the best level of high availability, but they are not required. A single database or multitenant database can also be used.)
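
The symmetric topology requirement described in the list above lends itself to an automated check. The following Python sketch compares two plain dictionaries that describe the active and standby domains. It is a hypothetical helper, not an Oracle tool: the dictionary keys, the `symmetry_problems` function, and the sample host names are assumptions made for this example. Only the standard `ipaddress` module is used to detect listen addresses that are IP literals rather than host names.

```python
import ipaddress

# Hypothetical keys for the settings that must match between the two domains.
SYMMETRIC_KEYS = (
    "directory_paths",
    "port_numbers",
    "user_accounts",
    "virtual_server_names",
    "software_versions",
)


def is_ip_literal(address):
    """Return True if address parses as an IPv4 or IPv6 address."""
    try:
        ipaddress.ip_address(address)
    except ValueError:
        return False
    return True


def symmetry_problems(active, standby):
    """List the ways in which two domain descriptions break symmetry."""
    problems = []
    for key in SYMMETRIC_KEYS:
        if active.get(key) != standby.get(key):
            problems.append(f"mismatch: {key}")
    for site, config in (("active", active), ("standby", standby)):
        for server, address in sorted(config.get("listen_addresses", {}).items()):
            if is_ip_literal(address):
                problems.append(
                    f"{site} {server}: listen address {address} is an IP address"
                )
    return problems


# Example with illustrative values only.
site1 = {
    "directory_paths": ["/u01/oracle/domains/base_domain"],
    "port_numbers": {"MS1": 7003},
    "user_accounts": ["oracle"],
    "virtual_server_names": ["app.example.com"],
    "software_versions": {"weblogic": "same-on-both-sites"},
    "listen_addresses": {"MS1": "ms1.example.com"},
}
site2 = dict(site1, listen_addresses={"MS1": "10.0.0.5"})
print(symmetry_problems(site1, site2))
```

An empty result means that the two descriptions agree on every compared setting and that all listen addresses are host names.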

This architecture uses the Continuous Availability features as follows:

- Automated cross-domain transaction recovery: Because the domain at Site 2 is in standby mode, you must first start the domain at Site 2 during failover. After the domain is running, you can use the cross-domain transaction recovery features as described in "Automated Cross-Domain Transaction Recovery".
- WebLogic Server Zero Downtime Patching: In this architecture, you can use the Zero Downtime Patching feature on the active domain at Site 1 as described in "WebLogic Server Zero Downtime Patching". Because the servers are not running in the standby (passive) domain at Site 2, you can use OPatch there. When the servers become active, they point to the patched Oracle home. For more information about OPatch, see Patching with OPatch.
- Coherence Federated Caching: In this architecture, the passive site supports read-only operations and off-site backup. See "Coherence Federated Caching".
- Coherence GoldenGate HotCache: In this architecture, updates on the active database at Site 1 update the Coherence cache in real time, and the database updates are replicated to Site 2. When the data is replicated to Site 2, GoldenGate HotCache updates the cache there in real time. See "Coherence GoldenGate HotCache".
- Oracle Traffic Director: In this architecture, OTD is in standby mode on the standby (passive) domain at Site 2. When Site 2 becomes active after failover, Oracle Site Guard activates the OTD instance on the standby site, using a script that is run before or after failover, and the OTD instance starts routing requests to the recently started servers.
- Oracle Site Guard: In this architecture, when Site 1 fails, Oracle Site Guard initiates the failover using scripts that specify what should occur and in what order. For example, it can start WebLogic Server, Coherence, web applications, and other site components in a specific order. You can execute the scripts either pre- or post-failover. Oracle Site Guard integrates with Data Guard Broker to fail over the database. See "Oracle Site Guard".
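
The ordered, script-driven failover described for Oracle Site Guard in the list above can be pictured as a plan of steps that run in sequence and stop at the first failure. The following Python sketch is a conceptual model only. It does not use or represent the Oracle Site Guard API, and the function names, script names, and the placement of the database step between the pre-failover scripts and component startup are assumptions made for this example.

```python
def build_failover_plan(pre_scripts, components, post_scripts):
    """Build an ordered list of (kind, name) failover steps."""
    plan = [("pre-script", name) for name in pre_scripts]
    # Assumption: the standby database is failed over before components start.
    plan.append(("database", "fail over standby database"))
    plan.extend(("start", name) for name in components)
    plan.extend(("post-script", name) for name in post_scripts)
    return plan


def run_plan(plan, execute):
    """Run steps in order and stop at the first step that fails.

    execute is a callable that takes a step and returns True on success.
    Returns (completed_steps, failed_step), where failed_step is None
    when every step succeeded.
    """
    completed = []
    for step in plan:
        if not execute(step):
            return completed, step
        completed.append(step)
    return completed, None


# Hypothetical script names; the component order follows the example in the text.
plan = build_failover_plan(
    pre_scripts=["check_site2_hosts.sh"],
    components=["WebLogic Server", "Coherence", "web applications"],
    post_scripts=["smoke_test.sh"],
)
completed, failed = run_plan(plan, execute=lambda step: True)
for kind, name in completed:
    print(f"{kind}: {name}")
```

Stopping at the first failed step keeps later components from starting against a site that is only partly available, which is one reason to express the failover as an ordered plan.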

Active-Active Stretch Cluster with Active-Passive Database Tier

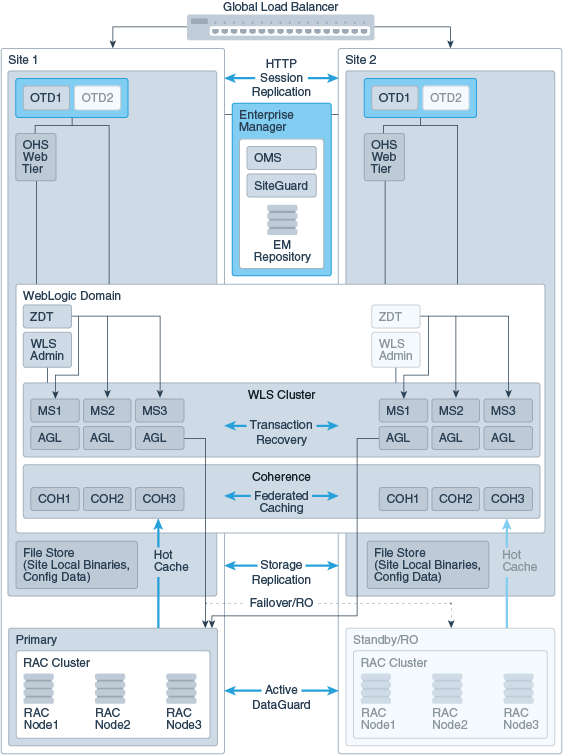

The following figure shows a recommended continuous availability solution using an Active-Active stretch cluster application infrastructure tier with an Active-Passive database tier.

Figure 2-3 Topology for Active-Active Stretch Cluster Application Infrastructure Tier and Active-Passive Database Tier


The key aspects of this topology include:

- WebLogic Server configured as a cluster that stretches across two different data centers, Site 1 and Site 2. All servers in the cluster are active.
- The domain includes:
  - A WebLogic Server cluster that consists of a group of Managed Servers (MS1, MS2, and MS3) at Site 1 and another group of Managed Servers (MS4, MS5, and MS6) at Site 2. The Managed Servers are managed by the WebLogic Server Admin Server at Site 1. In this sample, Active GridLink (AG) connects the Managed Servers to the primary database. (Although a generic data source or a multi data source can be used, Active GridLink is preferable because it offers high availability and improved performance.) The Zero Downtime Patching (ZDT) arrows represent patching the Managed Servers in a rolling fashion.
  - A Coherence cluster that consists of a group of Coherence instances (COH1, COH2, and COH3) at Site 1, and another group (COH4, COH5, and COH6) at Site 2, all managed by the WebLogic Server Admin Server at Site 1.
- A global load balancer.
- WebLogic Server HTTP session replication across clusters.
- Two instances of Oracle Traffic Director (OTD) at each site, one active and one passive. OTD can balance requests to the web tier or to the WebLogic Server cluster.
- Oracle HTTP Server (OHS) web tier. (Optional component, depending on the type of environment.)
- A file store for configuration data, local binaries, logs, and so on, replicated across the two sites using any replication technology.
- Oracle Site Guard, a component of Oracle Enterprise Manager Cloud Control, which orchestrates failover and switchover of sites.
- Two separate Oracle RAC database clusters in two different data centers. The primary active Oracle RAC database cluster is at Site 1. Site 2 contains an Oracle RAC database cluster in standby (passive) read-only mode. The clusters can contain transaction logs, JMS stores, and application data. Data is replicated using Oracle Active Data Guard. (Oracle recommends Oracle RAC database clusters because they provide the best level of high availability, but they are not required. A single database or multitenant database can also be used.)

This architecture uses the Continuous Availability features as follows:

- Automated cross-domain transaction recovery: Because all of the servers are in the same cluster, you can use the existing WebLogic Server high availability features, whole server migration and service migration, to recover transactions.
  - In whole server migration, a migratable server instance, and all of its services, is migrated to a different physical machine upon failure. For more information, see "Whole Server Migration" in Administering Clusters for Oracle WebLogic Server.
  - In service migration, services are moved to a different server instance within the cluster in the event of failure. For more information, see "Service Migration" in Administering Clusters for Oracle WebLogic Server.
- WebLogic Server Zero Downtime Patching: Because all of the servers in the cluster are active, you can use the full capabilities of this feature as described in "WebLogic Server Zero Downtime Patching".
- Coherence Federated Caching: You can use the full capabilities of this feature as described in "Coherence Federated Caching".
- Coherence GoldenGate HotCache: You can use the full capabilities of this feature as described in "Coherence GoldenGate HotCache".
- Oracle Traffic Director: Adjusts traffic routing to application servers depending on server availability. You can use the full capabilities of this feature as described in "Oracle Traffic Director".
- Oracle Site Guard: Because only the database is in standby mode in this architecture, Oracle Site Guard controls database failover only. It does not apply to the application infrastructure tier because all servers in the cluster are active. For more information, see "Oracle Site Guard".
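
The service migration behavior described in the list above, in which services move from a failed server to another server instance in the same cluster, can be sketched as follows. This is a conceptual illustration, not the WebLogic Server migration framework. The `migrate_services` function, the service names, and the round-robin choice of target servers are assumptions made for this example.

```python
def migrate_services(hosting, running_servers, failed_server):
    """Move every service hosted on failed_server to a running server.

    hosting maps a service name to the server that hosts it. Targets are
    chosen round-robin from the servers that are still running (a policy
    chosen for this sketch). Returns a new mapping; the input is unchanged.
    """
    candidates = [s for s in running_servers if s != failed_server]
    if not candidates:
        raise RuntimeError("no running server available as migration target")
    result = dict(hosting)
    moved = 0
    for service, server in hosting.items():
        if server == failed_server:
            result[service] = candidates[moved % len(candidates)]
            moved += 1
    return result


# A stretch cluster: MS1 to MS3 at Site 1 and MS4 to MS6 at Site 2.
hosting = {
    "transaction recovery for MS1": "MS1",
    "JMS server A": "MS1",
    "JMS server B": "MS4",
}
running = ["MS2", "MS3", "MS4", "MS5", "MS6"]  # MS1 has failed
print(migrate_services(hosting, running, failed_server="MS1"))
```

Because the cluster spans both sites, a target server can be at either site, which is why a single stretch cluster can rely on migration within the cluster instead of cross-domain recovery.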