2 How To...

This chapter provides information on how to perform certain key tasks in EDQ. These are most useful when you already understand the basics of the product.

This chapter includes the following sections:

2.1 Execution Options

EDQ can execute the following types of task, either interactively from the GUI (by right-clicking on an object in the Project Browser, and selecting Run), or as part of a scheduled Job.

The tasks have different execution options. Click on the task below for more information:

-

External Tasks (such as File Downloads, or External Executables)

-

Exports (of data)

In addition, when setting up a Job it is possible to set Triggers to run before or after Phase execution.

When setting up a Job, tasks may be divided into several Phases in order to control the order of processing, and to use conditional execution if you want to vary the execution of a job according to the success or failure of tasks within it.

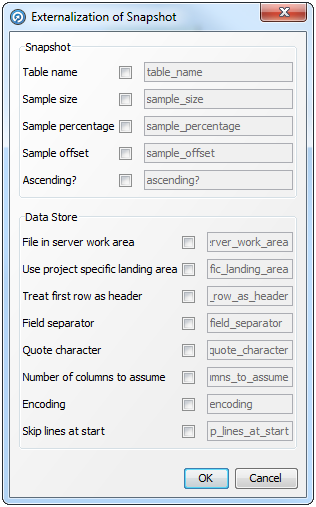

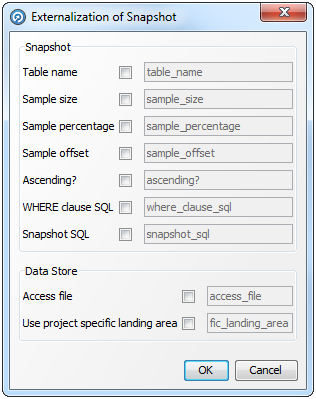

2.1.1 Snapshots

When a Snapshot is configured to run as part of a job, there is a single Enabled? option, which is set by default.

Disabling the option allows you to retain a job definition but to disable the refresh of the snapshot temporarily - for example because the snapshot has already been run and you want to re-run later tasks in the job only.

2.1.2 Processes

There are a variety of different options available when running a process, either as part of a job, or using the Quick Run option and the Process Execution Preferences:

-

Readers (options for which records to process)

-

Process (options for how the process will write its results)

-

Run Modes (options for real time processes)

-

Writers (options for how to write records from the process)

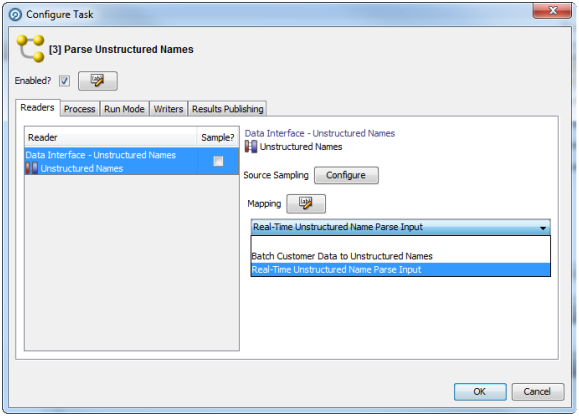

2.1.2.1 Readers

For each Reader in a process, the following option is available:

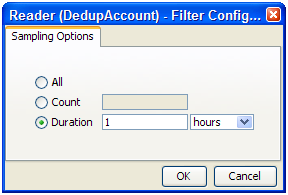

The Sample option allows you to specify job-specific sampling options. For example, you might have a process that normally runs on millions of records, but you might want to set up a specific job where it will only process some specific records that you want to check, such as for testing purposes.

Specify the required sampling using the option under Sampling, and enable it using the Sample option.

The sampling options available will depend on how the Reader is connected.

For Readers that are connected to real time providers, you can limit the process so that it will finish after a specified number of records using the Count option, or you can run the process for a limited period of time using the Duration option. For example, to run a real time monitoring process for a period of 1 hour only:

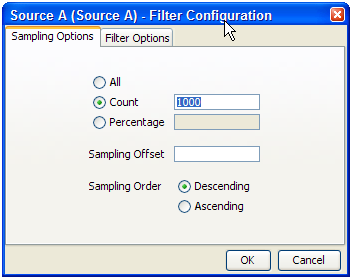

For Readers that are connected to staged data configurations, you can limit the process so that it runs only on a sample of the defined record set, using the same sampling and filtering options that are available when configuring a Snapshot. For example, to run a process so that it only processes the first 1000 records from a data source:

The Sampling Options fields are as follows:

-

All - Sample all records.

-

Count - Sample n records. This will either be the first n records or last n records, depending on the Sampling Order selected.

-

Percentage - Sample n% of the total number of records.

-

Sampling Offset - The number of records after which the sampling should be performed.

-

Sampling Order - Descending (from first record) or Ascending (from last).

Note:

If a Sampling Offset of, for example, 1800 is specified for a record set of 2000, only 200 records can be sampled regardless of the values specified in the Count or Percentage fields.

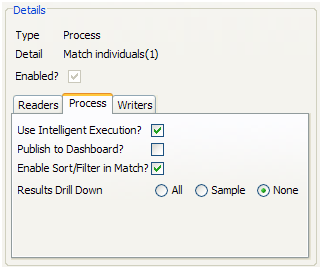

2.1.2.2 Process

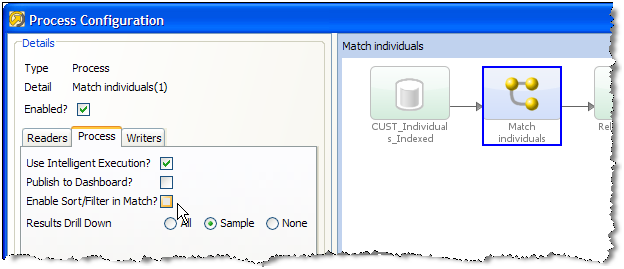

The following options are available when running a process, either as part of the Process Execution Preferences, or when running the process as part of a job.

-

Use Intelligent Execution?

Intelligent Execution means that any processors in the process which have up-to-date results based on the current configuration of the process will not re-generate their results. Processors that do not have up-to-date results are marked with the rerun marker. For more information, see the

Processor Statestopic in Enterprise Data Quality Online Help. Intelligent Execution is selected by default. Note that if you choose to sample or filter records in the Reader in a process, all processors will re-execute regardless of the Intelligent Execution setting, as the process will be running on a different set of records. -

Enable Sort/Filter in Match processors?

This option means that the specified Sort/Filter enablement settings on any match processors in the process (accessed via the Advanced Options on each match processor) will be performed as part of the process execution. The option is selected by default. When matching large volumes of data, running the Sort/Filter enablement task to allow match results to be reviewed may take a long time, so you may want to defer it by de-selecting this option. For example, if you are exporting matching results externally, you may want to begin exporting the data as soon as the matching process is finished, rather than waiting until the Enable Sort/Filter process has run. You may even want to over-ride the setting altogether if you know that the results of the matching process will not need to be reviewed.

-

Results Drill Down

This option allows you to choose the level of Results Drill Down that you require.

-

All means that drilldowns will be available for all records that are read in to the process. This is only recommended when you are processing small volumes of data (up to a few thousand records), when you want to ensure that you can find and check the processing of any of the records read into the process.

-

Sample is the default option. This is recommended for most normal runs of a process. With this option selected, a sample of records will be made available for every drilldown generated by the process. This ensures that you can explore results as you will always see some records when drilling down, but ensures that excessive amounts of data are not written out by the process.

-

None means that the process will still produce metrics, but drilldowns to the data will be unavailable. This is recommended if you want the process to run as quickly as possible from source to target, for example, when running data cleansing processes that have already been designed and tested.

-

-

Publish to Dashboard?

This option sets whether or not to publish results to the Dashboard. Note that in order to publish results, you first have to enable dashboard publication on one or more audit processors in the process.

2.1.2.3 Run Modes

To support the required Execution Types, EDQ provides three different run modes.

If a process has no readers that are connected to real time providers, it always runs in Normal mode.

If a process has at least one reader that is connected to a real time provider, the mode of execution for a process can be selected from one of the following three options:

In Normal mode, a process runs to completion on a batch of records. The batch of records is defined by the Reader configuration, and any further sampling options that have been set in the process execution preferences or job options.

Prepare mode is required when a process needs to provide a real time response, but can only do so where the non real time parts of the process have already run; that is, the process has been prepared.

Prepare mode is most commonly used in real time reference matching. In this case, the same process will be scheduled to run in different modes in different jobs - the first job will prepare the process for real time response execution by running all the non real time parts of the process, such as creating all the cluster keys on the reference data to be matched against. The second job will run the process as a real time response process (probably in Interval mode).

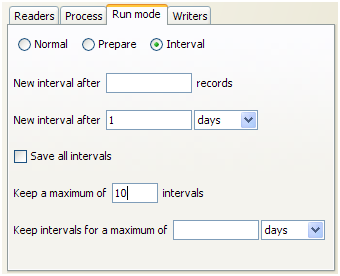

In Interval mode, a process may run for a long period of time, (or even continuously), but will write results from processing in a number of intervals. An interval is completed, and a new one started, when either a record or time threshold is reached. If both a record and a time threshold are specified, then a new interval will be started when either of the thresholds is reached.

As Interval mode processes may run for long periods of time, it is important to be able to configure how many intervals of results to keep. This can be defined either by the number of intervals, or by a period of time.

For example, the following options might be set for a real time response process that runs on a continuous basis, starting a new interval every day:

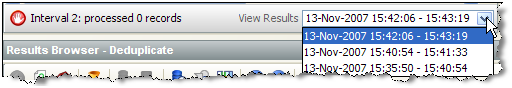

Browsing Results from processing in Interval mode

When a process is running in Interval mode, you can browse the results of the completed intervals (as long as they are not too old according to the specified options for which intervals to keep).

The Results Browser presents a simple drop-down selection box showing the start and end date and time of each interval. By default, the last completed interval is shown. Select the interval, and browse results:

If you have the process open when a new set of results becomes available, you will be notified in the status bar:

You can then select these new results using the drop-down selection box.

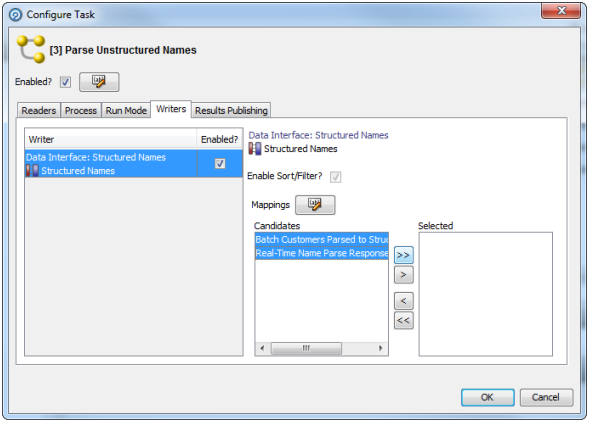

2.1.2.4 Writers

For each Writer in a process, the following options are available:

-

Write Data?

This option sets whether or not the writer will 'run'; that is, for writers that write to stage data, de-selecting the option will mean that no staged data will be written, and for writers that write to real time consumers, de-selecting the option will mean that no real time response will be written.

This is useful in two cases:

-

You want to stream data directly to an export target, rather than stage the written data in the repository, so the writer is used only to select the attributes to write. In this case, you should de-select the Write Data option and add your export task to the job definition after the process.

-

You want to disable the writer temporarily, for example, if you are switching a process from real time execution to batch execution for testing purposes, you might temporarily disable the writer that issues the real time response.

-

-

Enable Sort/Filter?

This option sets whether or not to enable sorting and filtering of the data written out by a Staged Data writer. Typically, the staged data written by a writer will only require sorting and filtering to be enabled if it is to be read in by another process where users might want to sort and filter the results, or if you want to be able to sort and filter the results of the writer itself.

The option has no effect on writers that are connected to real time consumers.

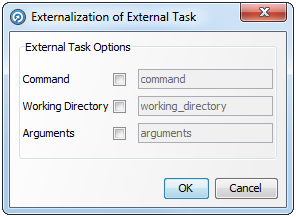

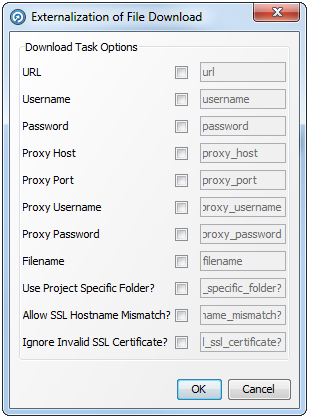

2.1.3 External Tasks

Any External Tasks (File Downloads, or External Executables) that are configured in a project can be added to a Job in the same project.

When an External Task is configured to run as part of a job, there is a single Enabled? option.

Enabling or Disabling the Enable export option allows you to retain a job definition but to enable or disable the export of data temporarily.

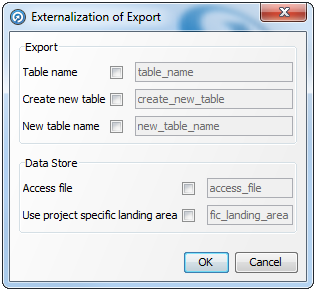

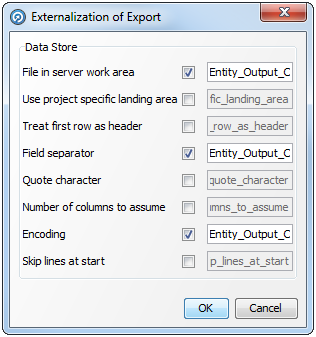

2.1.4 Exports

When an Export is configured to run as part of a job, the export may be enabled or disabled (allowing you to retain a Job definition but to enable or disable the export of data temporarily), and you can specify how you want to write data to the target Data Store, from the following options:

Delete current data and insert (default)

EDQ deletes all the current data in the target table or file and inserts the in-scope data in the export. For example, if it is writing to an external database it will truncate the table and insert the data, or if it is writing to a file it will recreate the file.

EDQ does not delete any data from the target table or file, but adds the in-scope data in the export. When appending to a UTF-16 file, use the UTF-16LE or UTF-16-BE character set to prevent a byte order marker from being written at the start of the new data.

Replace records using primary key

EDQ deletes any records in the target table that also exist in the in-scope data for the export (determined by matching primary keys) and then inserts the in-scope data.

Note:

- When an Export is run as a standalone task in Director (by right-clicking on the Export and selecting Run), it always runs in Delete current data and insert mode.

-

Delete current data and insert and Replace records using primary key modes perform Delete then Insert operations, not Update. It is possible that referential integrity rules in the target database will prevent the deletion of the records, therefore causing the Export task to fail. Therefore, in order to perform an Update operation instead, Oracle recommends the use of a dedicated data integration product, such as Oracle Data Integrator.

2.1.5 Results Book Exports

When a Results Book Export is configured to run as part of a job, there is a single option to enable or disable the export, allowing you to retain the same configuration but temporarily disable the export if required.

2.1.6 Triggers

Triggers are specific configured actions that EDQ can take at certain points in processing.

-

Before Phase execution in a Job

-

After Phase execution in a Job

For more information, see Using Triggers in Administering Oracle Enterprise Data Quality and the Advanced Options For Match Processors topic in Enterprise Data Quality Online Help.

2.2 Creating and Managing Jobs

This topic covers:

Note:

- It is not possible to edit or delete a Job that is currently running. Always check the Job status before attempting to change it.

-

Snapshot and Export Tasks in Jobs must use server-side data stores, not client-side data stores.

2.2.1 Creating a Job

-

Expand the required project in the Project Browser.

-

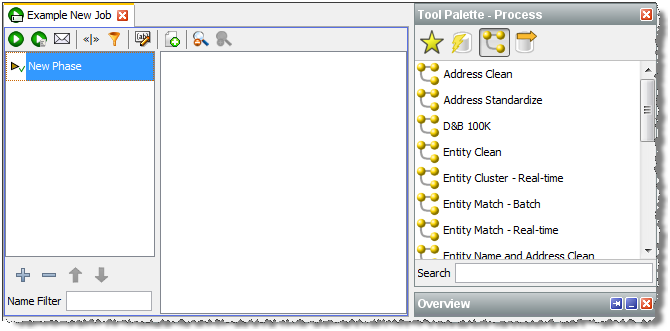

Right-click the Jobs node of the project and select New Job. The New Job dialog is displayed.

-

Enter a Name and (if required) Description, then click Finish. The Job is created and displayed in the Job Canvas:

-

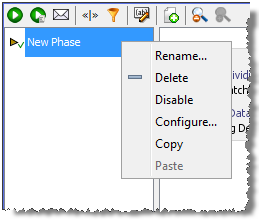

Right-click New Phase in the Phase list, and select Configure.

-

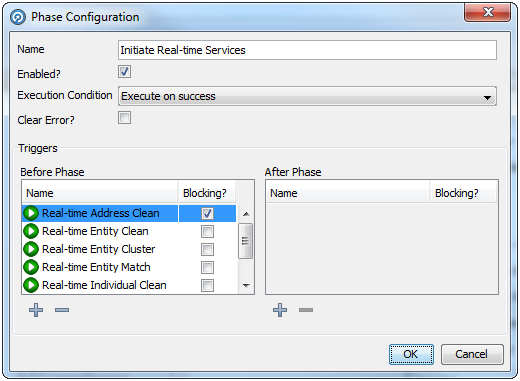

Enter a name for the phase and select other options as required:

Field Type Description Enabled? Checkbox To enable or disable the Phase. Default state is checked (enabled). Note: The status of a Phase can be overridden by a Run Profile or with the 'runopsjob' command on the EDQ Command Line Interface.

Execution Condition Drop-down list To make the execution of the Phase conditional on the success or failure of previous Phases. The options are:

-

Execute on failure: the phase will only execute if the previous phase did not complete successfully.

-

Execute on success (default): the Phase will only execute if all previous Phases have executed successfully.

-

Execute regardless: the Phase will execute regardless of whether previous Phases have succeeded or failed.

Note: If an error occurs in any phase, the error will stop all 'Execute on success' phases unless an 'Execute regardless' or 'Execute on failure' phase runs with the 'Clear Error?' button checked runs first.

Clear Error? Checkbox To clear or leave unchanged an error state in the Job. If a job phase has been in error, an error flag is applied. Subsequent phases set to Execute on success will not run unless the error flag is cleared using this option. The default state is unchecked.

Triggers N/A To configure Triggers to be activated before or after the Phase has run. For more information, see Using Job Triggers. -

-

Click OK to save the settings.

-

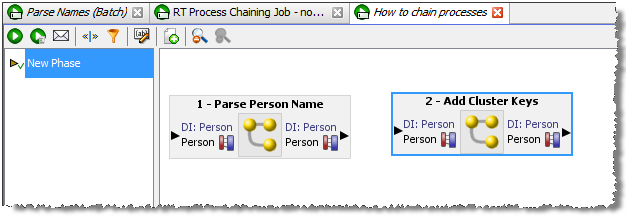

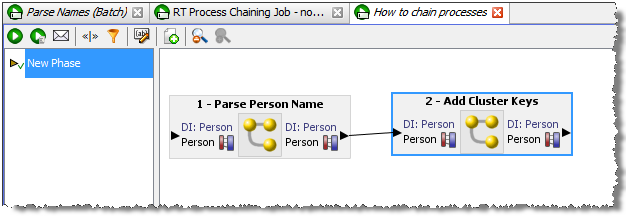

Click and drag Tasks from the Tool Palette, configuring and linking them as required.

-

To add more Phases, click the Add Job Phase button at the bottom of the Phase area. Phase order can be changed by selecting a Phase and moving it up and down the list using the Move Phase buttons. To delete a Phase, click the Delete Phase button.

-

When the Job is configured as required, click File > Save.

2.2.2 Editing a Job

-

To edit a Job, locate it within the Project Browser and either double click it or right-click and select Edit....

-

The Job is displayed in the Job Canvas. Edit the Phases and/or Tasks as required.

-

Click File >Save.

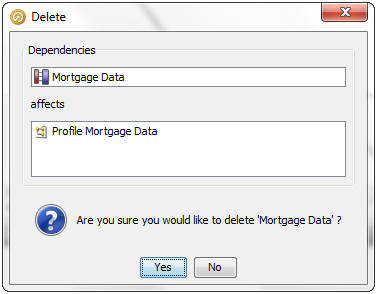

2.2.3 Deleting a Job

Deleting a job does not delete the processes that the Job contained, and nor does it delete any of the results associated with it. However, if any of the processes contained in the Job were last run by the Job, the last set of results for that process will be deleted. This will result in the processors within that process being marked as out of date.

To delete a Job, either:

-

select it in the Project Browser and press the Delete key; or

-

right-click the job and select Delete.

Remember that it is not possible to delete a Job that is currently running.

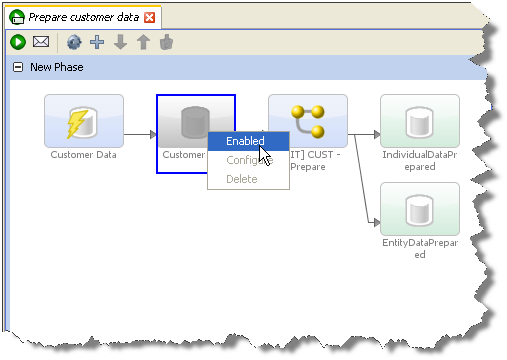

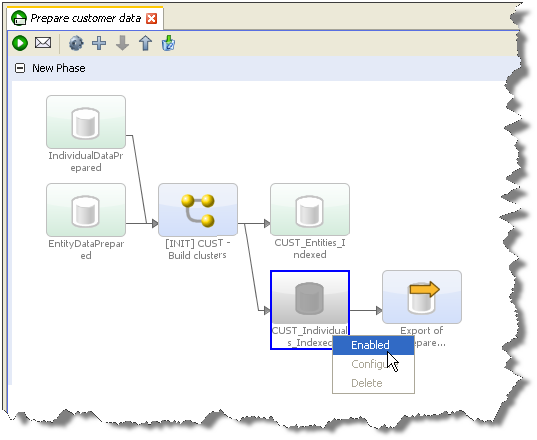

2.2.4 Job Canvas Right-Click Menu

There are further options available when creating or editing a job, accessible via the right-click menu.

Select a task on the canvas and right click to display the menu. The options are as follows:

-

Enabled - If the selected task is enabled, there will be a checkmark next to this option. Select or deselected as required.

-

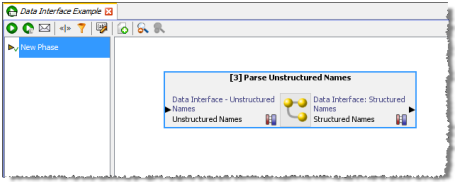

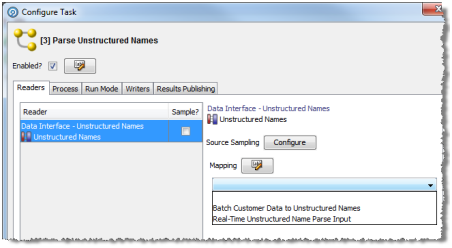

Configure Task... - This option displays the Configure Task dialog. For further details, see the Running Jobs Using Data Interfaces topic.

-

Delete - Deletes the selected task.

-

Open - Opens the selected task in the Process Canvas.

-

Cut, Copy, Paste - These options are simply used to cut, copy and paste tasks as required on the Job Canvas.

2.2.5 Editing and Configuring Job Phases

Phases are controlled using a right-click menu. The menu is used to rename, delete, disable, configure, copy, and paste Phases:

The using the Add, Delete, Up, and Down controls at the bottom of the Phase list:

For more details on editing and configuring phases see Creating a Job above, and also the Using Job Triggers topic.

For more information, see Understanding Enterprise Data Quality and Enterprise Data Quality Online Help at http://docs.oracle.com/middleware/12212/edq/index.html

2.3 Using Job Triggers

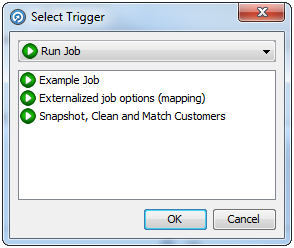

Job Triggers are used to start or interrupt other Jobs. Two types of triggers are available by default:

-

Run Job Triggers: used to start a Job.

-

Shutdown Web Services Triggers: used to shut down real-time processes.

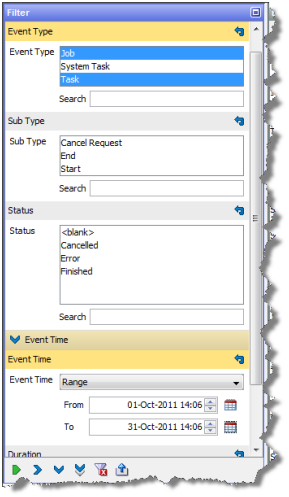

Further Triggers can be configured by an Administrator, such as sending a JMS message or calling a Web Service. They are configured using the Phase Configuration dialog, an example of which is provided below:

Triggers can be set before or after a Phase. A Before Trigger is indicated by a blue arrow above the Phase name, and an After Trigger is indicated by a red arrow below it. For example, the following image shows a Phase with Before and After Triggers:

Triggers can also be specified as Blocking Triggers. A Blocking Trigger prevents the subsequent Trigger or Phase beginning until the task it triggers is complete.

2.3.1 Configuring Triggers

-

Right-click the required Phase and select Configure. The Phase Configuration dialog is displayed.

-

In the Triggers area, click the Add Trigger button under the Before Phase or After Phase list, as required. The Select Trigger dialog is displayed:

-

Select the Trigger type in the drop-down field.

-

Select the specific Trigger in the list area.

-

Click OK.

-

If required, select the Blocking? checkbox next to the Trigger.

-

Set further Triggers as required.

-

When all the Triggers have been set, click OK.

2.3.2 Deleting a Trigger from a Job

-

Right-click the required Phase and select Configure.

-

In the Phase Configuration dialog, find the Trigger selected for deletion and click it.

-

Click the Delete Trigger button under the list of the selected Trigger. The Trigger is deleted.

-

Click OK to save changes. However, if a Trigger is deleted in error, click Cancel instead.

For more information, see Understanding Enterprise Data Quality and Enterprise Data Quality Online Help at http://docs.oracle.com/middleware/12212/edq/index.html

2.4 Job Notifications

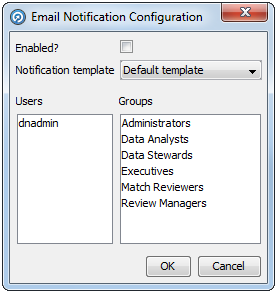

A Job may be configured to send a notification email to a user, a number of specific users, or a whole group of users, each time the Job completes execution. This allows EDQ users to monitor the status of scheduled jobs without having to log on to EDQ.

Emails will also only be sent if valid SMTP server details have been specified in the mail.properties file in the notification/smtp subfolder of the oedq_local_home directory. The same SMTP server details are also used for Issue notifications. For more information, see Administering Enterprise Data Quality Server.

The default notification template - default.txt - is found in the EDQ config/notification/jobs directory. To configure additional templates, copy this file and paste it into the same directory, renaming it and modifying the content as required. The name of the new file will appear in the Notification template field of the Email Notification Configuration dialog.

2.4.1 Configuring a Job Notification

-

Open the Job and click the Configure Notification button on the Job Canvas toolbar. The Email Notification Configuration dialog is displayed.

-

Check the Enabled? box.

-

Select the Notification template from the drop-down list.

-

Click to select the Users and Groups to send the notification to. To select more than one User and/or Group, hold down the CTRL key when clicking.

-

Click OK.

Note:

Only users with valid email addresses will receive emails. For users that are managed internally to EDQ, a valid email address must be configured in User Administration. For users that are managed externally to EDQ, for example in WebLogic or an external LDAP system, a valid 'mail' attribute must be configured.2.4.2 Default Notification Content

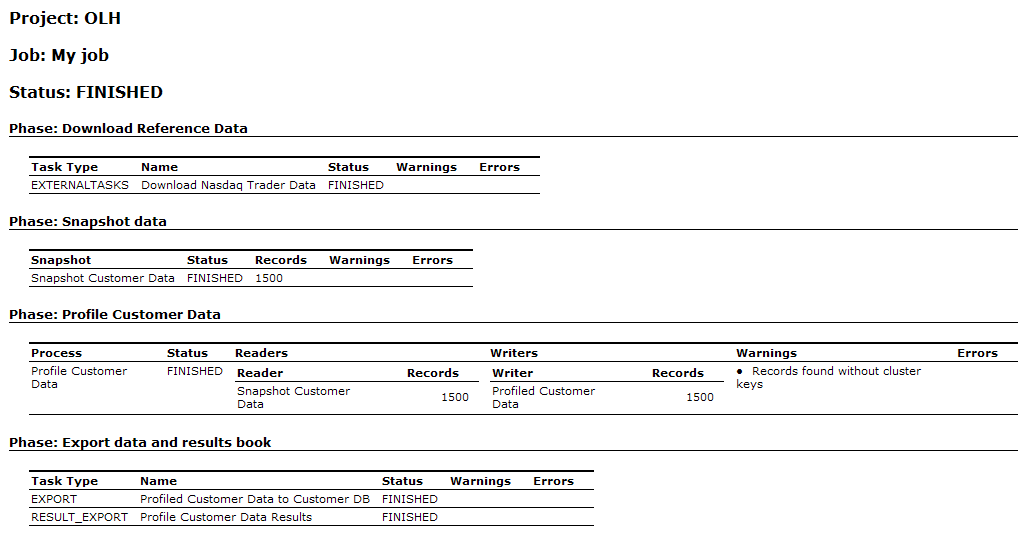

The default notification contains summary information of all tasks performed in each phase of a job, as follows:

The notification displays the status of the snapshot task in the execution of the job. The possible statuses are:

-

STREAMED - the snapshot was optimized for performance by running the data directly into a process and staging as the process ran

-

FINISHED - the snapshot ran to completion as an independent task

-

CANCELLED - the job was canceled by a user during the snapshot task

-

WARNING - the snapshot ran to completion but one or more warnings were generated (for example, the snapshot had to truncate data from the data source)

-

ERROR - the snapshot failed to complete due to an error

Where a snapshot task has a FINISHED status, the number of records snapshotted is displayed.

Details of any warnings and errors encountered during processing are included.

The notification displays the status of the process task in the execution of the job. The possible statuses are:

-

FINISHED - the process ran to completion

-

CANCELLED - the job was canceled by a user during the process task

-

WARNING - the process ran to completion but one or more warnings were generated

-

ERROR - the process failed to complete due to an error

Record counts are included for each Reader and Writer in a process task as a check that the process ran with the correct number of records. Details of any warnings and errors encountered during processing are included. Note that this may include warnings or errors generated by a Generate Warning processor.

The notification displays the status of the export task in the execution of the job. The possible statuses are:

-

STREAMED - the export was optimized for performance by running the data directly out of a process and writing it to the data target

-

FINISHED - the export ran to completion as an independent task

-

CANCELLED - the job was canceled by a user during the export task

-

ERROR - the export failed to complete due to an error

Where an export task has a FINISHED status, the number of records exported is displayed.

Details of any errors encountered during processing are included.

The notification displays the status of the results book export task in the execution of the job. The possible statuses are:

-

FINISHED - the results book export ran to completion

-

CANCELLED - the job was canceled by a user during the results book export task

-

ERROR - the results book export failed to complete due to an error

Details of any errors encountered during processing are included.

The notification displays the status of the external task in the execution of the job. The possible statuses are:

-

FINISHED - the external task ran to completion

-

CANCELLED - the job was canceled by a user during the external task

-

ERROR - the external task failed to complete due to an error

Details of any errors encountered during processing are included.

The screenshot below shows an example notification email using the default email template:

For more information, see Understanding Enterprise Data Quality and Enterprise Data Quality Online Help at http://docs.oracle.com/middleware/12212/edq/index.html

2.5 Optimizing Job Performance

This topic provides a guide to the various performance tuning options in EDQ that can be used to optimize job performance.

2.5.1 General Performance Options

There are four general techniques, applicable to all types of process, that are available to maximize performance in EDQ.

Click on the headings below for more information on each technique:

2.5.1.1 Data streaming

The option to stream data in EDQ allows you to bypass the task of staging data in the EDQ repository database when reading or writing data.

A fully streamed process or job will act as a pipe, reading records directly from a data store and writing records to a data target, without writing any records to the EDQ repository.

When running a process, it may be appropriate to bypass running your snapshot altogether and stream data through the snapshot into the process directly from a data store. For example, when designing a process, you may use a snapshot of the data, but when the process is deployed in production, you may want to avoid the step of copying data into the repository, as you always want to use the latest set of records in your source system, and because you know you will not require users to drill down to results.

To stream data into a process (and therefore bypass the process of staging the data in the EDQ repository), create a job and add both the snapshot and the process as tasks. Then click on the staged data table that sits between the snapshot task and the process and disable it. The process will now stream the data directly from the source system. Note that any selection parameters configured as part of the snapshot will still apply.

Note that any record selection criteria (snapshot filtering or sampling options) will still apply when streaming data. Note also that the streaming option will not be available if the Data Store of the Snapshot is Client-side, as the server cannot access it.

Streaming a snapshot is not always the 'quickest' or best option, however. If you need to run several processes on the same set of data, it may be more efficient to snapshot the data as the first task of a job, and then run the dependent processes. If the source system for the snapshot is live, it is usually best to run the snapshot as a separate task (in its own phase) so that the impact on the source system is minimized.

It is also possible to stream data when writing data to a data store. The performance gain here is less, as when an export of a set of staged data is configured to run in the same job after the process that writes the staged data table, the export will always write records as they are processed (whether or not records are also written to the staged data table in the repository).

However, if you know you do not need to write the data to the repository (you only need to write the data externally), you can bypass this step, and save a little on performance. This may be the case for deployed data cleansing processes, or if you are writing to an external staging database that is shared between applications, for example when running a data quality job as part of a larger ETL process, using an external staging database to pass the data between EDQ and the ETL tool.

To stream an export, create a job and add the process that writes the data as a task, and the export that writes the data externally as another task. Then disable the staged data that sits between the process and the export task. This will mean that the process will write its output data directly to the external target.

Note:

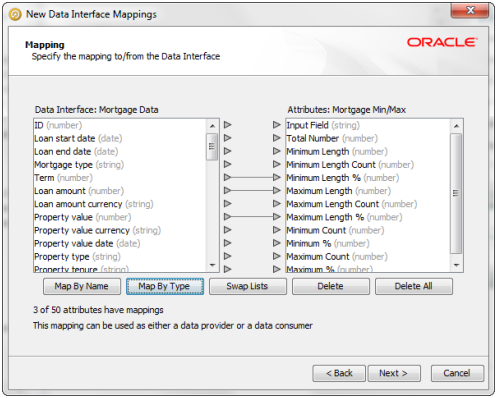

It is also possible to stream data to an export target by configuring a Writer in a process to write to a Data Interface, and configuring an Export Task that maps from the Data Interface to an Export target.

Note that Exports to Client-side data stores are not available as tasks to run as part of a job. They must be run manually from the EDQ Director Client as they use the client to connect to the data store.

2.5.1.2 Minimized results writing

Minimizing results writing is a different type of 'streaming', concerned with the amount of Results Drilldown data that EDQ writes to the repository from processes.

Each process in EDQ runs in one of three Results Drilldown modes:

-

All (all records in the process are written in the drilldowns)

-

Sample (a sample of records are written at each level of drilldown)

-

None (metrics only are written - no drilldowns will be available)

All mode should be used only on small volumes of data, to ensure that all records can be fully tracked in the process at every processing point. This mode is useful when processing small data sets, or when debugging a complex process using a small number of records.

Sample mode is suitable for high volumes of data, ensuring that a limited number of records is written for each drilldown. The System Administrator can set the number of records to write per drilldown; by default this is 1000 records. Sample mode is the default.

None mode should be used to maximize the performance of tested processes that are running in production, and where users will not need to interact with results.

To change the Results Drilldown mode when executing a process, use the Process Execution Preferences screen, or create a Job and click on the process task to configure it.

For example, the following process is changed to write no drilldown results when it is deployed in production:

2.5.1.3 Disabling Sorting and Filtering

When working with large data volumes, it can take a long time to index snapshots and written staged data in order to allow users to sort and filter the data in the Results Browser. In many cases, this sorting and filtering capability will not be needed, or only needed when working with smaller samples of the data.

The system applies intelligent sorting and filtering enablement, where it will enable sorting and filtering when working with smaller data sets, but will disable sorting and filtering for large data sets. However, you can choose to override these settings - for example to achieve maximum throughput when working with a number of small data sets.

When a snapshot is created, the default setting is to 'Use intelligent Sort/Filtering options', so that the system will decide whether or not to enable sorting and filtering based on the size of the snapshot. For more information, see Adding a Snapshot.

However, If you know that no users will need to sort or filter results that are based on a snapshot in the Results Browser, or if you only want to enable sorting or filtering at the point when the user needs to do it, you can disable sorting and filtering on the snapshot when adding or editing it.

To do this, edit the snapshot, and on the third screen (Column Selection), uncheck the option to Use intelligent Sort/Filtering, and leave all columns unchecked in the Sort/Filter column.

Alternatively, if you know that sorting and filtering will only be needed on a sub-selection of the available columns, use the tick boxes to select the relevant columns.

Disabling sorting and filtering means that the total processing time of the snapshot will be less as the additional task to enable sorting and filtering will be skipped.

Note that if a user attempts to sort or filter results based on a column that has not been enabled, the user will be presented with an option to enable it at that point.

Staged Data Sort/Filter options

When staged data is written by a process, the server does not enable sorting or filtering of the data by default. The default setting is therefore maximized for performance.

If you need to enable sorting or filtering on written staged data - for example, because the written staged data is being read by another process which requires interactive data drilldowns - you can enable this by editing the staged data definition, either to apply intelligent sort/filtering options (varying whether or not to enable sorting and filtering based on the size of the staged data table), or to enable it on selected columns by selecting the corresponding Sort/Filter checkboxes.

Match Processor Sort/Filter options

It is possible to set sort/filter enablement options for the outputs of matching. See Matching performance options.

2.5.2 Processor-specific Performance Options

In the case of Parsing and Matching, a large amount of work is performed by an individual processor, as each processor has many stages of processing. In these cases, options are available to optimize performance at the processor level.

Click on the headings below for more information on how to maximize performance when parsing or matching data:

2.5.2.1 Parsing performance options

When maximum performance is required from a Parse processor, it should be run in Parse mode, rather than Parse and Profile mode. This is particularly true for any Parse processors with a complete configuration, where you do not need to investigate the classified and unclassified tokens in the parsing output.The mode of a parser is set in its Advanced Options.

For even better performance where only metrics and data output are required from a Parse processor, the process that includes the parser may be run with no drilldowns - see Minimized results writing above.

When designing a Parse configuration iteratively, where fast drilldowns are required, it is generally best to work with small volumes of data. When working with large volumes of data, an Oracle results repository will greatly improve drilldown performance.

2.5.2.2 Matching performance options

The following techniques may be used to maximize matching performance:

Matching performance may vary greatly depending on the configuration of the match processor, which in turn depends on the characteristics of the data involved in the matching process. The most important aspect of configuration to get right is the configuration of clustering in a match processor.

In general, there is a balance to be struck between ensuring that as many potential matches as possible are found and ensuring that redundant comparisons (between records that are not likely to match) are not performed. Finding the right balance may involve some trial and error - for example, assessment of the difference in match statistics when clusters are widened (perhaps by using fewer characters of an identifier in the cluster key) or narrowed (perhaps by using more characters of an identifier in a cluster key), or when a cluster is added or removed.

The following two general guidelines may be useful:

-

If you are working with data with a large number of well-populated identifiers, such as customer data with address and other contact details such as email addresses and phone numbers, you should aim for clusters with a maximum size of 20 for every million records, and counter sparseness in some identifiers by using multiple clusters rather than widening a single cluster.

-

If you are working with data with a small number of identifiers, for example, where you can only match individuals or entities based on name and approximate location, wider clusters may be inevitable. In this case, you should aim to standardize, enhance and correct the input data in the identifiers you do have as much as possible so that your clusters can be tight using the data available. For large volumes of data, a small number of clusters may be significantly larger. For example, Oracle Watchlist Screening uses a cluster comparison limit of 7m for some of the clustering methods used when screening names against Sanctions List. In this case, you should still aim for clusters with a maximum size of around 500 records if possible (bearing in mind that every record in the cluster will need to be compared with every other record in the cluster - so for a single cluster of 500 records, there will be 500 x 499 = 249500 comparisons performed).

See the Clustering Concept Guide for more information about how clustering works and how to optimize the configuration for your data.

Disabling Sort/Filter options in Match processors

By default, sorting, filtering and searching are enabled on all match results to ensure that they are available for user review. However, with large data sets, the indexing process required to enable sorting, filtering and searching may be very time-consuming, and in some cases, may not be required.

If you do not require the ability to review the results of matching using the Review Application, and you do not need to be able to sort or filter the outputs of matching in the Results Browser, you should disable sorting and filtering to improve performance. For example, the results of matching may be written and reviewed externally, or matching may be fully automated when deployed in production.

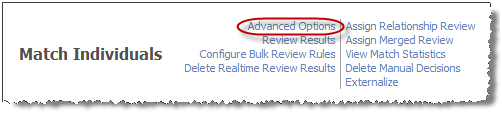

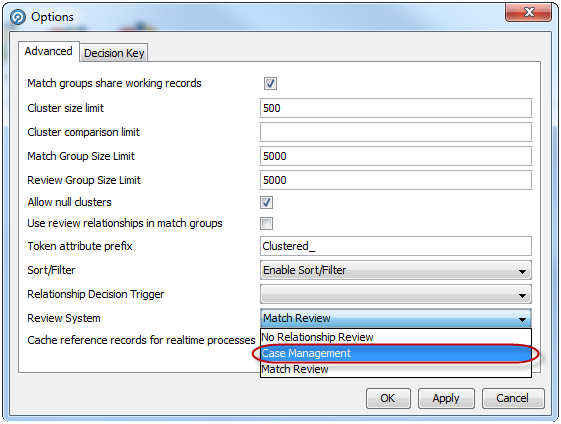

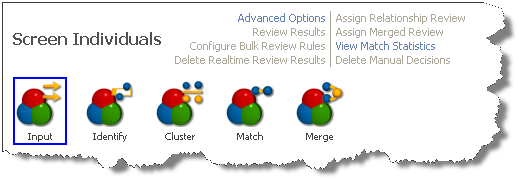

The setting to enable or disable sorting and filtering is available both on the individual match processor level, available from the Advanced Options of the processor (for details, see Sort/Filter options for match processors in the Advanced Options For Match Processors topic in Enterprise Data Quality Online Help), and as a process or job level override.

To override the individual settings on all match processors in a process, and disable the sorting, filtering and review of match results, untick the option to Enable Sort/Filter in Match processors in a job configuration, or process execution preferences:

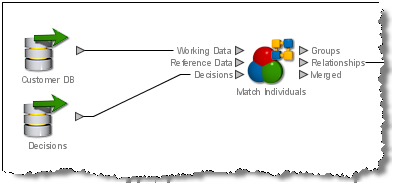

Match processors may write out up to three types of output:

-

Match (or Alert) Groups (records organized into sets of matching records, as determined by the match processor. If the match processor uses Match Review, it will produce Match Groups, whereas if if uses Case Management, it will produce Alert Groups.)

-

Relationships (links between matching records)

-

Merged Output (a merged master record from each set of matching records)

By default, all available output types are written. (Merged Output cannot be written from a Link processor.)

However, not all the available outputs may be needed in your process. For example you should disable Merged Output if you only want to identify sets of matching records.

Note that disabling any of the outputs will not affect the ability of users to review the results of a match processor.

To disable Match (or Alert) Groups output:

-

Open the match processor on the canvas and open the Match sub-processor.

-

Select the Match (or Alert) Groups tab at the top.

-

Uncheck the option to Generate Match Groups report, or to Generate Alert Groups report.

Or, if you know you only want to output the groups of related or unrelated records, use the other tick boxes on the same part of the screen.

To disable Relationships output:

-

Open the match processor on the canvas and open the Match sub-processor.

-

Select the Relationships tab at the top.

-

Uncheck the option to Generate Relationships report.

Or, if you know you only want to output some of the relationships (such as only Review relationships, or only relationships generated by certain rules), use the other tick boxes on the same part of the screen.

To disable Merged Output:

-

Open the match processor on the canvas and open the Merge sub-processor.

-

Uncheck the option to Generate Merged Output.

Or, if you know you only want to output the merged output records from related records, or only the unrelated records, use the other tick boxes on the same part of the screen.

Batch matching processes require a copy of the data in the EDQ repository in order to compare records efficiently.

As data may be transformed between the Reader and the match processor in a process, and in order to preserve the capability to review match results if a snapshot used in a matching process is refreshed, match processors always generate their own snapshots of data (except from real time inputs) to work from. For large data sets, this can take some time.

Where you want to use the latest source data in a matching process, therefore, it may be advisable to stream the snapshot rather than running it first and then feeding the data into a match processor, which will generate its own internal snapshot (effectively copying the data twice). See Streaming a Snapshot above.

Cache Reference Data for Real-Time Match processes

It is possible to configure Match processors to cache Reference Data on the EDQ server, which in some cases should speed up the Matching process. You can enable caching of Reference Data in a real-time match processor in the Advanced Options of the match processor.

For more information, see Understanding Enterprise Data Quality and Enterprise Data Quality Online Help at http://docs.oracle.com/middleware/12212/edq/index.html

2.6 Publishing to the Dashboard

EDQ can publish the results of Audit processors and the Parse processor to a web-based application (Dashboard), so that data owners, or stakeholders in your data quality project, can monitor data quality as it is checked on a periodic basis.

Results are optionally published on process execution. To set up an audit processor to publish its results when this option is used, you must configure the processor to publish its results.

To do this, use the following procedure:

-

Double-click on the processor on the Canvas to bring up its configuration dialog

-

Select the Dashboard tab (Note: In Parse, this is within the Input sub-processor).

-

Select the option to publish the processor's results.

-

Select the name of the metric as it will be displayed on the Dashboard.

-

Choose how to interpret the processor's results for the Dashboard; that is, whether each result should be interpreted as a Pass, a Warning, or a Failure.

Once your process contains one or more processors that are configured to publish their results to the Dashboard, you can run the publication process as part of process execution.

To publish the results of a process to the Dashboard:

-

From the Toolbar, click the Process Execution Preferences button.

-

On the Process tab, select the option to Publish to Dashboard.

-

Click the Save & Run button to run the process.

When process execution is complete, the configured results will be published to the Dashboard, and can be made available for users to view.

For more information, see Understanding Enterprise Data Quality and Enterprise Data Quality Online Help at http://docs.oracle.com/middleware/12212/edq/index.html

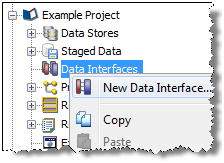

2.7 Packaging

Most objects that are set up in Director can be packaged up into a configuration file, which can be imported into another EDQ server using the Director client application.

This allows you to share work between users on different networks, and provides a way to backup configuration to a file area.

The following objects may be packaged:

-

Whole projects

-

Individual processes

-

Reference Data sets (of all types)

-

Notes

-

Data Stores

-

Staged Data configurations

-

Data Interfaces

-

Export configurations

-

Job Configurations

-

Result Book configurations

-

External Task definitions

-

Web Services

-

Published Processors

Note:

As they are associated with specific server users, issues cannot be exported and imported, nor simply copied between servers using drag-and-drop.2.7.1 Packaging objects

To package an object, select it in the Project Browser, right-click, and select Package...

For example, to package all configuration on a server, select the Server in the tree, or to package all the projects on a server, select the Projects parent node, and select Package in the same way.

You can then save a Director package file (with a .dxi extension) on your file system. The package files are structured files that will contain all of the objects selected for packaging. For example, if you package a project, all its subsidiary objects (data stores, snapshot configurations, data interfaces, processes, reference data, notes, and export configurations) will be contained in the file.

Note:

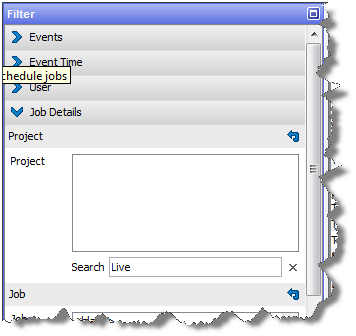

Match Decisions are packaged with the process containing the match processor to which they are related. Similarly, if a whole process is copied and pasted between projects or servers, its related match decisions will be copied across. If an individual match processor is copied and pasted between processes, however, any Match Decisions that were made on the original process are not considered as part of the configuration of the match processor, and so are not copied across.2.7.2 Filtering and Packaging

It is often useful to be able to package a number of objects - for example to package a single process in a large project and all of the Reference Data it requires in order to run.

There are three ways to apply a filter:

-

To filter objects by their names, use the quick keyword NameFilter option at the bottom of the Project Browser

-

To filter the Project Browser to show a single project (hiding all other projects), right-click on the Project, and select Show Selected Project Only.

-

To filter an object (such as a process or job) to show its related objects, right-click on the object, and select Dependency Filter, and either Items used by selected item (to show other objects that are used by the selected object, such as the Reference Data used by a selected Process) or Items using selected item (to show objects that use the selected object, such as any Jobs that use a selected Process).

Whenever a filter has been applied to the Project Browser, a box is shown just above the Task Window to indicate that a filter is active. For example, the below screenshot shows an indicator that a server that has been filtered to show only the objects used by the 'Parsing Example' process:

You can then package the visible objects by right-clicking on the server and selecting Package... This will only package the visible objects.

To clear the filter, click on the x on the indicator box.

In some cases, you may want to specifically exclude some objects from a filtered view before packaging. For example, you may have created a process reading data from a data interface with a mapping to a snapshot containing some sample data. When you package up the process for reuse on other sets of data, you want to publish the process and its data interface, but exclude the snapshot and the data store. To exclude the snapshot and the data store from the filter, right-click on the snapshot and select Exclude From Filter. The data store will also be excluded as its relationship to the process is via the snapshot. As packaging always packages the visible objects only, the snapshot and the data store will not be included in the package.

2.7.3 Opening a package file, and importing its contents

To open a Director package file, either right-click on an empty area of the Project Browser with no other object selected in the browser, and select Open Package File..., or select Open Package File... from the File menu. Then browse to the .dxi file that you want to open.

The package file is then opened and visible in the Director Project Browser in the same way as projects. The objects in the package file cannot be viewed or modified directly from the file, but you can copy them to the EDQ host server by drag-and-drop, or copy and paste, in the Project Browser.

You can choose to import individual objects from the package, or may import multiple objects by selecting a node in the file and dragging it to the appropriate level in your Projects list. This allows you to merge the entire contents of a project within a package into an existing project, or (for example) to merge in all the reference data sets or processes only.

For example, the following screenshot shows an opened package file with a number of projects all exported from a test system. The projects are dragged and dropped into the new server by dragging them from the package file to the server:

Note that when multiple objects are imported from a package file, and there are name conflicts with existing objects in the target location, a conflict resolution screen is shown allowing you to change the name of the object you are importing, ignore the object (and so use the existing object of the same name), or to overwrite the existing object with the one in the package file. You can choose a different action for each object with a name conflict.

If you are importing a single object, and there is a name conflict, you cannot overwrite the existing object and must either cancel the import or change the name of the object you are importing.

Once you have completed copying across all the objects you need from a package file, you can close it, by right-clicking on it, and selecting Close Package File.

Opened package files are automatically disconnected at the end of each client session.

2.7.4 Working with large package files

Some package files may be very large, for example if large volumes of Reference Data are included in the package. When working with large package files, it is quicker to copy the file to the server's landingarea for files and open the DXI file from the server. Copying objects from the package file will then be considerably quicker.

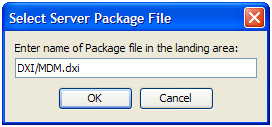

To open a package file in this way, first copy the DXI file to the server landing area. Then, using the Director client, right-click on the server in the Project Browser and select Open Server Package File...

You then need to type in the name of the file into the dialog. If the file is stored in a subfolder of the landingarea, you will need to include this in the name. For example, to open a file called MDM.dxi that is held within a DXI subfolder of the landingarea:

2.7.5 Copying between servers

If you want to copy objects between EDQ servers on the same network, you can do this without packaging objects to file.

To copy objects (such as projects) between two connected EDQ servers, connect to each server, and drag-and-drop the objects from one server to the other:

Note:

To connect to another server, select File menu, New Server... The default port to connect to EDQ using the client is 9002.For more information, see Enterprise Data Quality Online Help. at http://docs.oracle.com/middleware/12212/edq/index.html

2.8 Purging Results

EDQ uses a repository database to store the results and data it generates during processing. All Results data is temporary, in the sense that it can be regenerated using the stored configuration, which is held in a separate database internally.

In order to manage the size of the Results repository database, the results for a given set of staged data (either a snapshot or written staged data), a given process, a given job, or all results for a given project can be purged.

For example, it is possible to purge the results of old projects from the server, while keeping the configuration of the project stored so that its processes can be run again in the future.

Note that the results for a project, process, job, or set of staged data are automatically purged if that project, process, job, or staged data set is deleted. If required, this means that project configurations can be packaged, the package import tested, and then deleted from the server. The configurations can then be restored from the archive at a later date if required.

To purge results for a given snapshot, set of written staged data, process, job, or project:

-

Right-click on the object in the Project Browser. The purge options are displayed.

-

Select the appropriate Purge option.

If there is a lot of data to purge, the task details may be visible in the Task Window.

Note:

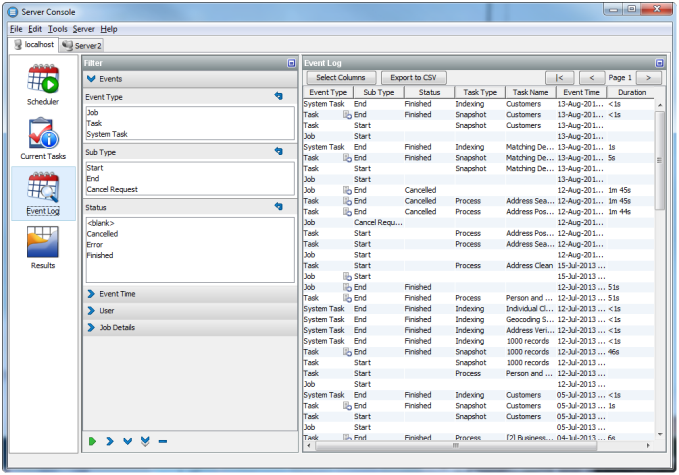

- Purging data from Director will not purge the data in the Results window in Server Console. Similarly, purging data in Server Console will not affect the Director Results window. Therefore, if freeing up disc space it may be necessary to purge data from both.

-

The EDQ Purge Results commands only apply to jobs run without a run label (that is, those run from Director or from the Command Line using the runjobs command.

-

The Server Console Purge Results rules only apply to jobs run with a run label (that is, those run in Server Console with an assigned run label, or from the Command Line using the runopsjob command)

-

It is possible to configure rules in Server Console to purge data after a set period of time. See the

Result Purge Rulestopic in Enterprise Data Quality Online Help for further details. These purge rules only apply to Server Console results.

2.8.1 Purging Match Decision Data

Match Decision data is not purged along with the rest of the results data. Match Decisions are preserved as part of the audit trail which documents the way in which the output of matching was handled.

If it is necessary to delete Match Decision data, for example, during the development process, the following method should be used:

-

Open the relevant Match processor.

-

Click Delete Manual Decisions.

-

Click OK to permanently delete all the Match Decision data, or Cancel to return to the main screen.

Note:

The Match Decisions purge takes place immediately. However, it will not be visible in the Match Results until the Match process is re-run. This final stage of the process updates the relationships to reflect the fact that there are no longer any decisions stored against them.For more information, see Understanding Enterprise Data Quality and Enterprise Data Quality Online Help at http://docs.oracle.com/middleware/12212/edq/index.html

2.9 Creating Processors

In addition to the range of data quality processors available in the Processor Library, EDQ allows you to create and share your own processors for specific data quality functions.

There are two ways to create processors:

-

Using an external development environment to write a new processor - see the

Extending EDQtopic in Enterprise Data Quality Online Help for more details -

Using EDQ to create processors - read on in this topic for more details

2.9.1 Creating a processor from a sequence of configured processors

EDQ allows you to create a single processor for a single function using a combination of a number of base (or 'member') processors used in sequence.

Note that the following processors may not be included in a new created processor:

-

Parse

-

Match

-

Group and Merge

-

Merge Data Sets

A single configured processor instance of the above processors may still be published, however, in order to reuse the configuration.

To take a simple example, you may want to construct a reusable Add Gender processor that derives a Gender value for individuals based on Title and Forename attributes. To do this, you have to use a number of member processors. However, when other users use the processor, you only want them to configure a single processor, input Title and Forename attributes (however they are named in the data set), and select two Reference Data sets - one to map Title values to Gender values, and one to map Forename values to Gender values. Finally, you want three output attributes (TitleGender, NameGender and BestGender) from the processor.

To do this, you need to start by configuring the member processors you need (or you may have an existing process from which to create a processor). For example, the screenshot below shows the use of 5 processors to add a Gender attribute, as follows:

-

Derive Gender from Title (Enhance from Map).

-

Split Forename (Make Array from String).

-

Get first Forename (Select Array Element).

-

Derive Gender from Forename (Enhance from Map).

-

Merge to create best Gender (Merge Attributes).

To make these into a processor, select them all on the Canvas, right-click, and select Make Processor.

This immediately creates a single processor on the Canvas and takes you into a processor design view, where you can set up how the single processor will behave.

From the processor design view, you can set up the following aspects of the processor (though note that in many cases you will be able to use the default settings):

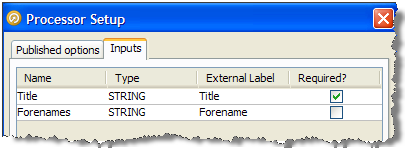

2.9.2 Setting Inputs

The inputs required by the processor are calculated automatically from the configuration of the base processors. Note that where many of the base processors use the same configured input attribute(s), only one input attribute will be created for the new processor.

However, if required you can change or rename the inputs required by the processor in the processor design view, or make an input optional. To do this, click on the Processor Setup icon at the top of the Canvas, then select the Inputs tab.

In the case above, two input attributes are created - Title and Forenames, as these were the names of the distinct attributes used in the configuration of the base processors.

The user chooses to change the External Label of one of these attributes from Forenames to Forename to make the label more generic, and chooses to make the Forename input optional:

Note that if an input attribute is optional, and the user of the processor does not map an attribute to it, the attribute value will be treated as Null in the logic of the processor.

Note:

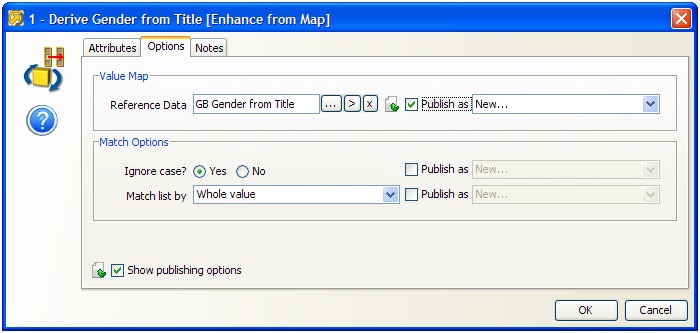

It is also possible to change the Name of each of the input attributes in this screen, which means their names will be changed within the design of the processor only (without breaking the processor if the actual input attributes from the source data set in current use are different). This is available so that the configuration of the member processors matches up with the configuration of the new processor, but will make no difference to the behavior of the created processor.2.9.3 Setting Options

The processor design page allows you to choose the options on each of the member processors that you want to expose (or "publish") for the processor you are creating. In our example, above, we want the user to be able to select their own Reference Data sets for mapping Title and Forename values to Gender values (as for example the processor may be used on data for a new country, meaning the provided Forename to Gender map would not be suitable).

To publish an option, open the member processor in the processor design page, select the Options tab, and tick the Show publishing options box at the bottom of the window.

You can then choose which options to publish. If you do not publish an option, it will be set to its configured value and the user of the new processor will not be able to change it (unless the user has permission to edit the processor definition).

There are two ways to publish options:

-

Publish as New - this exposes the option as a new option on the processor you are creating.

-

Use an existing published option (if any) - this allows a single published option to be shared by many member processors. For example, the user of the processor can specify a single option to Ignore Case which will apply to several member processors.

Note:

If you do not publish an option that uses Reference Data, the Reference Data will be internally packaged as part of the configuration of the new processor. This is useful where you do not want end users of the processor to change the Reference Data set.In our example, we open up the first member processor (Derive Gender from Title) and choose to publish (as new) the option specifying the Reference Data set used for mapping Title values to Gender values:

Note above that the Match Options are not published as exposed options, meaning the user of the processor will not be able to change these.

We then follow the same process to publish the option specifying the Reference Data set used for mapping Forename values to Gender values on the fourth processor (Derive Gender from Forename).

Once we have selected the options that we want to publish, we can choose how these will be labeled on the new processor.

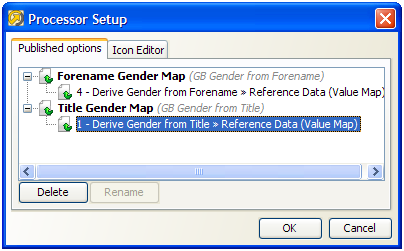

To do this, click on Processor Setup button at the top of the canvas and rename the options. For example, we might label the two options published above Title Gender Map and Forename Gender Map:

2.9.4 Setting Output Attributes

The Output Attributes of the new processor are set to the output attributes of any one (but only one) of the member processors.

By default, the final member processor in the sequence is used for the Output Attributes of the created processor. To use a different member processor for the output attributes, click on it, and select the Outputs icon on the toolbar:

The member processor used for Outputs is marked with a green shading on its output side:

Note:

Attributes that appear in Results Views are always exposed as output attributes of the new processor. You may need to add a member processor to profile or check the output attributes that you want to expose, and set it as the Results Processor (see below) to ensure that you see only the output attributes that you require in the new processor (and not for example input attributes to a transformation processor). Alternatively, if you do not require a Results View, you can unset it and the exposed output attributes will always be those of the Outputs processor only.2.9.5 Setting Results Views

The Results Views of the new processor are set to those of any one (but only one) of the member processors.

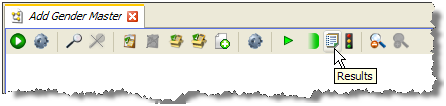

By default, the final member processor in the sequence is used for the Results of the created processor. To use a different member processor for the results views, click on it, and select the Results icon on the toolbar:

The member processor used for Results is now marked with an overlay icon:

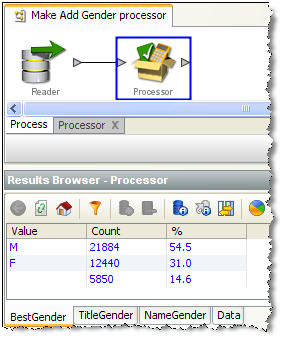

Note that in some cases, you may want to add a member processor specifically for the purpose of providing Results Views. In our example, we may want to add a Frequency Profiler of the three output attributes (TitleGender, ForenameGender and BestGender) so that the user of a new processor can see a breakdown of what the Add Gender processor has done. To do this, we add a Frequency Profiler in the processor design view, select the three attributes as inputs, select it as our Results Processor and run it.

If we exit the processor designer view, we can see that the results of the Frequency Profiler are used as the results of the new processor:

2.9.6 Setting Output Filters

The Output Filters of the new processor are set to those of any one (and only one) of the member processors.

By default, the final member processor in the sequence is used for the Output Filters of the created processor. To use a different member processor, click on it, and select the Filter button on the toolbar:

The selected Output Filters are colored green in the processor design view to indicate that they will be exposed on the new processor:

2.9.7 Setting Dashboard Publication Options

The Dashboard Publication Options of the new processor are set to those of any one (and only one) of the member processors.

If you require results from your new processor to be published to the Dashboard, you need to have an Audit processor as one of your member processors.

To select a member processor as the Dashboard processor, click on it and select the Dashboard icon on the toolbar:

The processor is then marked with a traffic light icon to indicate that it is the Dashboard Processor:

Note:

In most cases, it is advisable to use the same member processor for Results Views, Output Filters, and Dashboard Publication options for consistent results when using the new processor. This is particularly true when designing a processor designed to check data.2.9.8 Setting a Custom Icon

You may want to add a custom icon to the new processor before publishing it for others to use. This can be done for any processor simply by double-clicking on the processor (outside of the processor design view) and selecting the Icon & Group tab.

See the Customizing Processor Icons for more details.

Once you have finished designing and testing your new processor, the next step is to publish it for others to use.

For more information, see Enterprise Data Quality Online Help at http://docs.oracle.com/middleware/12212/edq/index.html

2.10 Publishing Processors

Configured single processors can be published to the Tool Palette for other users to use on data quality projects.

It is particularly useful to publish the following types of processor, as their configuration can easily be used on other data sets:

-

Match processors (where all configuration is based on Identifiers)

-

Parse processors (where all configuration is based on mapped attributes)

-

Processors that have been created in EDQ (where configuration is based on configured inputs)

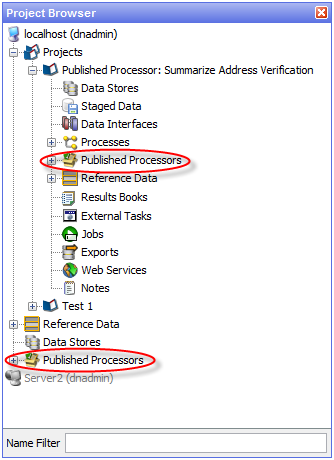

Published processors appear both in the Tool Palette, for use in processes, and in the Project Browser, so that they can be packaged for import onto other EDQ instances.

Note:

The icon of the processor may be customized before publication. This also allows you to publish processors into new families in the Tool Palette.To publish a configured processor, use the following procedure:

-

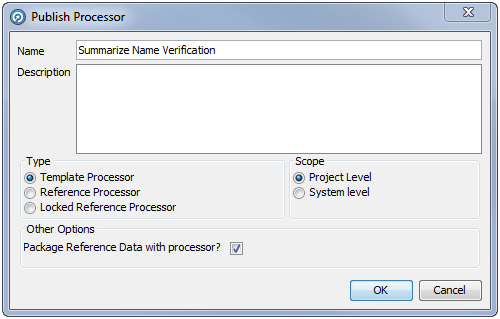

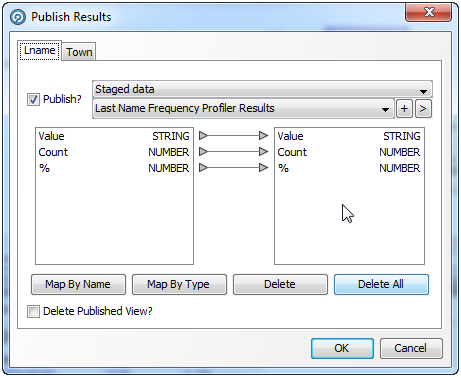

Right-click on the processor, and select Publish Processor. The following dialog is displayed:

-

In the Name field, enter a name for the processor as it will appear on the Tool Palette.

-

If necessary, enter further details in the Description field.

-

Select the Published processor Type: Template, Reference, or Locked Reference.

-

Select the Scope: Project (the processor is available in the current project only) or System (the processor is available for use in all projects on the system).

-

If you want to package the associated Reference Data with this published processor, select the Package Reference Data with processor checkbox.

Note:

Options that externalized on the published processor always require Reference Data to be made available (either in the project or at system level. Options that are not externalized on the published processor can either have their Reference Data supplied with the published processor (the default behavior with this option selected) or can still require Reference Data to be made available. For example, to use a standard system-level Reference Data set.2.10.1 Editing a Published Processor

Published processors can be edited in the same way as a normal processor, although they must be republished once any changes have been made.

If a Template Published processor is edited and published, only subsequent instances of that processor will be affected, as there is no actual link between the original and any instances.

If a Reference or Locked Reference Published processor is reconfigured, all instances of the process will be modified accordingly. However, if an instance of the processor is in use when the original is republished, the following dialog is displayed:

2.10.2 Attaching Help to published processors

It is possible to attach Online Help before publishing a processor, so that users of it can understand what the processor is intended to do.

The Online Help must be attached as a zip file containing an file named index.htm (or index.html), which will act as the main help page for the published processors. Other html pages, as well as images, may be included in the zip file and embedded in, or linked from, the main help page. This is designed so that a help page can be designed using any HTML editor, saved as an HTML file called index.htm and zipped up with any dependent files.

To do this, right-click the published processor and select Attach Help. This will open a file browsing dialog which is used to locate and select the file.

Note:

The Set Help Location option is used to specify a path to a help file or files, rather than attaching them to a processor. This option is intended for Solutions Development use only.If a processor has help attached to it, the help can be accessed by the user by selecting the processor and pressing F1. Note that help files for published processors are not integrated with the standard EDQ Online Help that is shipped with the product, so are not listed in its index and cannot be found by search.

2.10.3 Publishing processors into families

It is possible to publish a collection of published processors with a similar purpose into a family on the Tool Palette. For example, you may create a number of processors for working with a particular type of data and publish them all into their own family.

To do this, you must customize the family icon of each processor before publication, and select the same icon for all the processors you want to publish into the same family. When the processor is published, the family icon is displayed in the Tool Palette, and all processors that have been published and which use that family icon will appear in that family. The family will have the same name as the name given to the family icon.

For more information, see Understanding Enterprise Data Quality and Enterprise Data Quality Online Help at http://docs.oracle.com/middleware/12212/edq/index.html

2.11 Using Published Processors

Published processors are located in either the individual project or system level, as shown below:

There are three types of Published processor:

-

Template - These processors can be reconfigured as required.

-

Reference - These processors inherit their configuration from the original processor. They can only be reconfigured by users with the appropriate permission to do so. They are identified by a green box in the top-left corner of the processor icon.

-

Locked Reference - These processors also inherit the configuration from the original processor, but unlike standard Reference processors, this link cannot be removed. They are identified by a red box in the top-left corner of the processor icon.

These processors can be used in the same way as any other processor; either added from the Project Browser or Tool Palette by clicking and dragging it to the Project Canvas.

For further information, see the Published Processors topic in Enterprise Data Quality Online Help.

2.11.1 Permissions

The creation and use of published processors are controlled by the following permissions:

-

Published Processor: Add - The user can publish a processor.

-

Published Processor: Modify - This permission, in combination with the Published Processor: Add permission - allows the user to overwrite an existing published processor.

-

Published Processor: Delete - The user can delete a published processor.

-

Remove Link to Reference Processor - The user can unlock a Reference published processor. See the following section for further details.

2.11.2 Unlocking a Reference Published Processor

If a user has the Remove Link to Reference Processor permission, they can unlock a Reference Published processor. To do this, use the following procedure:

-

Right click on the processor.

-

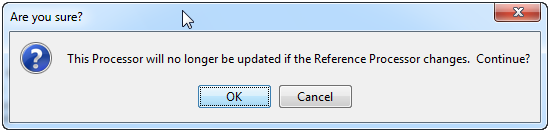

Select Remove link to Reference Processor. The following dialog is displayed:

-

Click OK to confirm. The instance of the processor is now disconnected from the Reference processor, and therefore will not be updated when the original processor is.

For more information, see Understanding Enterprise Data Quality and Enterprise Data Quality Online Help.

2.12 Investigating a Process

During the creation of complex processes or when revisiting existing processes, it is often desirable to investigate how the process was set up and investigate errors if they exist.

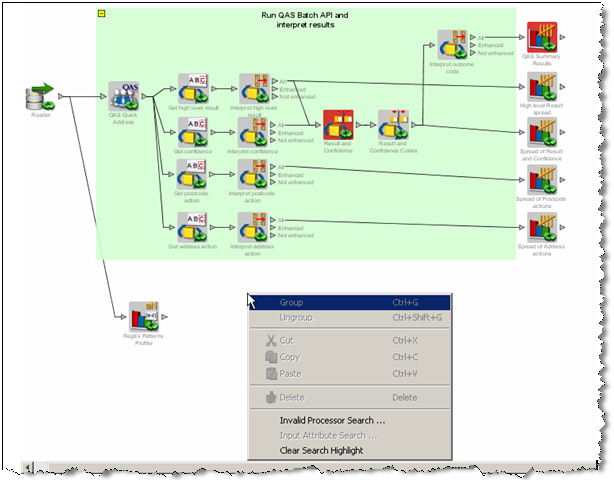

2.12.1 Invalid Processor Search

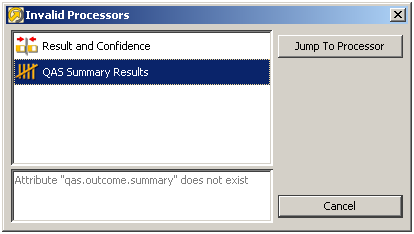

Where many processors exist on the canvas, you can be assisted in discovering problems by using the Invalid Processor Search (right click on the canvas). This option is only available where one or more processors have errors on that canvas.

This brings up a dialog showing all the invalid processors connected on the canvas. Details of the errors are listed for each processor, as they are selected. The Jump To Processor option takes the user to the selected invalid processor on the canvas, so the problem can be investigated and corrected from the processor configuration.

2.12.2 Input Attribute Search

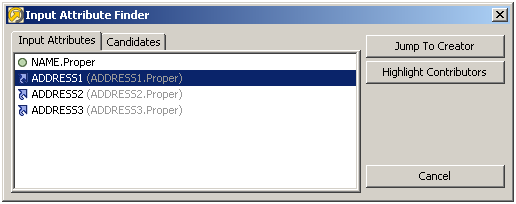

You may list the attributes which are used as inputs to a processor by right clicking on that processor, and selecting Input Attribute Search.

The icons used indicate whether the attribute is the latest version of an attribute or a defined attribute.

There are 2 options available:

-

Jump to Creator - this takes you to the processor which created the selected attribute.

-

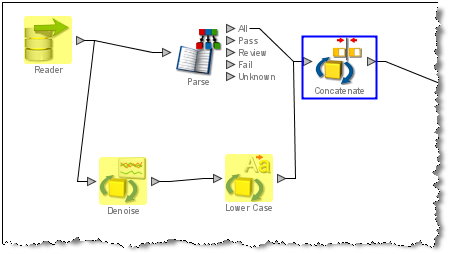

Highlight Contributors - this highlights all the processors (including the Reader, if relevant) which had an influence on the value of the selected input attribute. The contributory processors are highlighted in yellow. In the example below, the Concatenate processor had one of its input attributes searched, in order to determine which path contributed to the creation of that attribute.

The candidates tab in the Input Attributes Search enables the same functionality to take place on any of the attributes which exist in the processor configuration. The attributes do not necessarily have to be used as inputs in the processor configuration.

2.12.3 Clear Search Highlight

This action will clear all the highlights made by the Highlight Contributors option.

For more information, see Understanding Enterprise Data Quality and Enterprise Data Quality Online Help at http://docs.oracle.com/middleware/12212/edq/index.html

2.13 Previewing Published Results Views

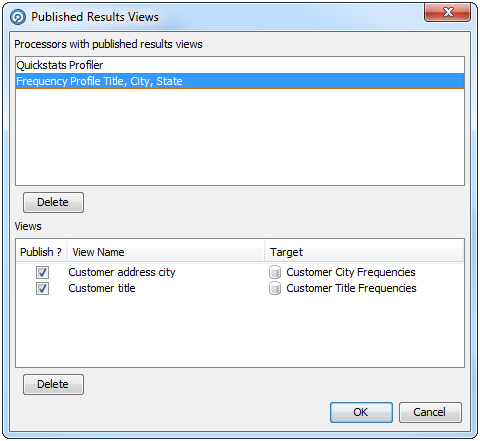

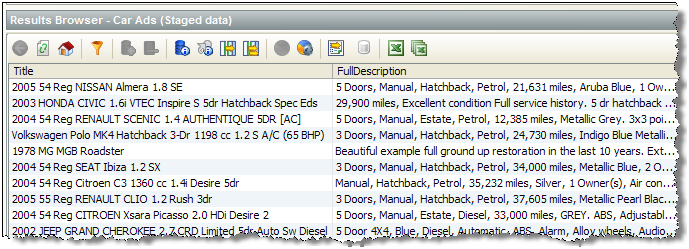

It is possible to preview the results of running a process before it is run, or to disable or delete specific results views. This is done with the Published Results Views dialog:

To open the dialog, either:

-

click the Published Results Views button on the Process toolbar:

-

or right-click on a processor in the Process and select Published Results Views in the menu:

This dialog is divided into two areas:

-

Processors with published results views - Lists all the processors in the process that have published views.

-

Views - Lists the views of the processor currently selected in the Processors with published results views area.

Published views can be selected or deselected by checking or unchecking the Publish? checkbox. Alternatively, they can be deleted by selecting the view and clicking Delete.

If a view is deleted by accident, click Cancel on the bottom-right corner of the dialog to restore it.

For more information, see Understanding Enterprise Data Quality and Enterprise Data Quality Online Help at http://docs.oracle.com/middleware/12212/edq/index.html

2.14 Using the Results Browser

The EDQ Results Browser is designed to be easy-to-use. In general, you can click on any processor in an EDQ process to display its summary view or views in the Results Browser.

The Results Browser has various straight-forward options available as buttons at the top - just hover over the button to see what it does.

However, there are a few additional features of the Results Browser that are less immediately obvious:

2.14.1 Show Results in New Window

It is often useful to open a new window with the results from a given processor so that you can refer to the results even if you change which processor you are looking at in EDQ. For example, you might want to compare two sets of results by opening two Results Browser windows and viewing them side-by-side.

To open a new Results Browser, right-click on a processor in a process and select Show results in new window.

The same option exists for viewing Staged Data (either snapshots or data written from processes), from the right-click menu in the Project Browser.

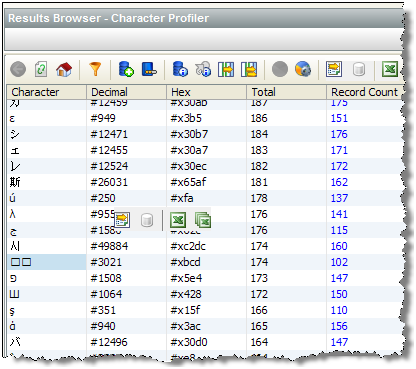

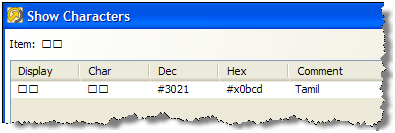

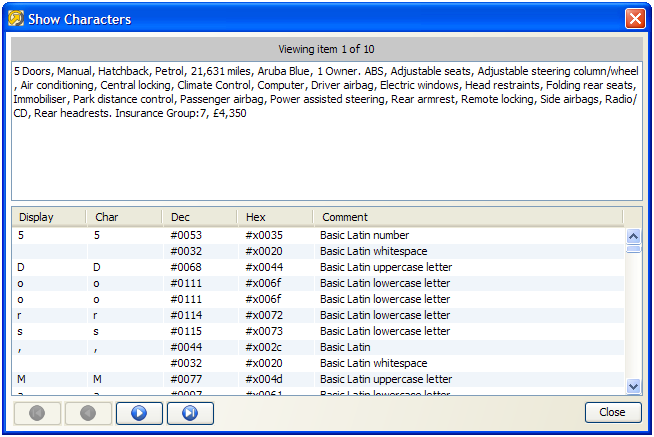

2.14.2 Show Characters

On occasion, you might see unusual characters in the Results Browser, or you might encounter very long fields that are difficult to see in their entirety.