Handling JavaFX Events

5 Working with Events from Touch-Enabled Devices

This topic describes the events generated by the touches and gestures that touch-enabled devices recognize, such as touch events, zoom events, rotate events, and swipe events, and shows you how to work with these events in your JavaFX application.

Starting with JavaFX 2.2, users can interact with your JavaFX applications using touches and gestures on touch-enabled devices. Touches and gestures can involve a single point or multiple points of contact. The type of event that is generated is determined by the touch or type of gesture that the user makes.

Touch and gesture events are processed the same way that other events are processed. See Processing Events for a description of this process. Convenience methods for registering event handlers for touch and gesture events are available. See Working with Convenience Methods for more information.

Gesture and Touch Events

JavaFX applications generate gesture events when an application is running on a device with a touch screen or a trackpad that recognizes gestures. For platforms that recognize gestures, the native recognition is used to identify the gesture performed. Table 5-1 describes the gestures that are supported and the corresponding event types that are generated.

Table 5-1 Supported Gestures and Generated Event Types

| Gesture | Description | Events Generated |
|---|---|---|
| Rotate | Two-finger turning movement where one finger moves clockwise around the other finger to rotate an object clockwise and one finger moves counterclockwise around the other finger to rotate an object counterclockwise. | ROTATION_STARTED, ROTATE, ROTATION_FINISHED |
| Scroll | Sliding movement, up or down for vertical scrolling, left or right for horizontal scrolling. | SCROLL_STARTED, SCROLL, SCROLL_FINISHED. If a mouse wheel is used for scrolling, only events of type SCROLL are generated. |
| Swipe | Sweeping movement across the screen or trackpad to the right, left, up, or down. Diagonal movement is not recognized as a swipe. | SWIPE_LEFT, SWIPE_RIGHT, SWIPE_UP, SWIPE_DOWN. A single swipe event is generated for each swiping gesture. |
| Zoom | Two-finger pinching motion where fingers are brought together to zoom out and fingers are moved apart to zoom in. | ZOOM_STARTED, ZOOM, ZOOM_FINISHED |

Touch events are generated when the application is running on a device with a touch screen and the user touches one or more fingers to the screen. These events can be used to provide lower level tracking for the individual touch points that are part of a touch or gesture. For more information on touch events, see Working with Touch Events.

Targets of Gestures

The target of most gestures is the node at the center point of all touches at the beginning of the gesture. The target of a swipe gesture is the node at the center of the entire path of all of the fingers.

If more than one node is located at the target point, the topmost node is considered the target. All of the events generated from a single, continuous gesture, including inertia from the gesture, are delivered to the node that was selected when the gesture started. For more information on targets of events, see Target Selection.

Other Events Generated

Gestures and touches can generate other types of events in addition to the events for the gesture or touch performed. A swipe gesture generates scroll events in addition to the swipe event. Depending on the length of the swipe, it is possible that the swipe and scroll events have different targets. The target of a scroll event is the node at the point where the gesture started. The target of a swipe event is the node at the center of the entire path of the gesture.

Touches on a touch screen also generate corresponding mouse events. For example, touching a point on the screen generates TOUCH_PRESSED and MOUSE_PRESSED events. Moving a single point on the screen generates scroll events and drag events. Even if your application does not handle touch or gesture events directly, it can run on a touch-enabled device with minimal changes by responding to the mouse events that are generated in response to touches.

If your application handles touches, gestures, and mouse events, make sure that you do not handle a single action multiple times. For example, if a gesture generates scroll events and drag events and you provide the same processing for handlers of both types of events, the movement on the screen could be twice the amount expected. You can use the isSynthesized() method for mouse events to determine if the event was generated by mouse movement or movement on a touch screen and only handle the event once.
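The double-handling problem can be modeled without any JavaFX classes. The following is a minimal sketch, not code from the Gesture Events example: the `Mover` class and its `onScroll` and `onMouseDragged` methods are hypothetical, and the boolean `synthesized` parameter stands in for the value that `MouseEvent.isSynthesized()` would return in a real handler.

```java
// Hypothetical model of a node that is moved by both scroll and drag handlers.
// The 'synthesized' flag stands in for MouseEvent.isSynthesized().
final class Mover {
    private double translateX;

    // Scroll handler: always applies the movement.
    void onScroll(double deltaX) {
        translateX += deltaX;
    }

    // Drag handler: skips mouse events that were synthesized from a touch,
    // because the scroll handler has already applied that movement.
    void onMouseDragged(double deltaX, boolean synthesized) {
        if (synthesized) {
            return;
        }
        translateX += deltaX;
    }

    double getTranslateX() {
        return translateX;
    }

    public static void main(String[] args) {
        // One finger moves 10 pixels on a touch screen: a scroll event and a
        // synthesized drag event both arrive for the same movement.
        Mover touch = new Mover();
        touch.onScroll(10);
        touch.onMouseDragged(10, true);
        System.out.println(touch.getTranslateX()); // prints 10.0

        // A drag with a real mouse produces only the drag event.
        Mover mouse = new Mover();
        mouse.onMouseDragged(10, false);
        System.out.println(mouse.getTranslateX()); // prints 10.0
    }
}
```

Without the `synthesized` check, the touch case would move the object 20 pixels instead of 10.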

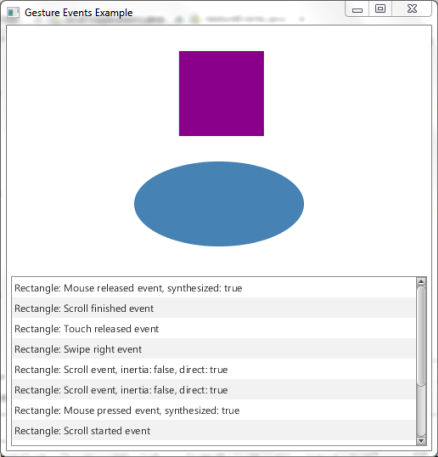

Gesture Events Example

The Gesture Events example shows a rectangle, an ellipse, and an event log. Figure 5-1 shows the example.

The log contains a record of the events that were handled. This example enables you to try different gestures and see what events are generated for each.

The Gesture Events example is available in the GestureEventsExample.zip file. Extract the NetBeans project and open it in the NetBeans IDE.

To generate gesture events, you must run the example on a device with a touch screen or a trackpad that supports gestures. To generate touch events, you must run the example on a device with a touch screen.

Creating the Shapes

The Gesture Events example shows a rectangle and an ellipse. Example 5-1 shows the code used to create each shape and the layout pane that contains the shapes.

Example 5-1 Set Up the Shapes

    // Create the shapes that respond to gestures and use a VBox to
    // organize them
    VBox shapes = new VBox();
    shapes.setAlignment(Pos.CENTER);
    shapes.setPadding(new Insets(15.0));
    shapes.setSpacing(30.0);
    shapes.setPrefWidth(500);
    shapes.getChildren().addAll(createRectangle(), createEllipse());
    ...
    private Rectangle createRectangle() {
        final Rectangle rect = new Rectangle(100, 100, 100, 100);
        rect.setFill(Color.DARKMAGENTA);
        ...
        return rect;
    }

    private Ellipse createEllipse() {
        final Ellipse oval = new Ellipse(100, 50);
        oval.setFill(Color.STEELBLUE);
        ...
        return oval;
    }

You can use gestures to move, rotate, and zoom in and out of these objects.

Handling the Events

In general, event handlers for the shape objects in the Gesture Events example perform similar operations for each type of event that is handled. For all types of events, an entry is posted to the log of events.

On platforms that support inertia for gestures, additional events might be generated after the event-type_FINISHED event. For example, SCROLL events might be generated after the SCROLL_FINISHED event if there is any inertia associated with the scroll gesture. Use the isInertia() method to identify the events that are generated based on the inertia of the gesture. If the method returns true, the event was generated after the gesture was completed.

Events are generated by gestures on a touch screen or on a trackpad. SCROLL events are also generated by the mouse wheel. Use the isDirect() method to identify the source of the event. If the method returns true, the event was generated by a gesture on a touch screen. Otherwise, the method returns false. You can use this information to provide different behavior based on the source of the event.

Touches on a touch screen also generate corresponding mouse events. For example, touching an object generates both TOUCH_PRESSED and MOUSE_PRESSED events. Use the isSynthesized() method to determine the source of the mouse event. If the method returns true, the event was generated by a touch instead of by a mouse.

The inc() and dec() methods in the Gesture Events example are used to provide a visual cue that an object is the target of a gesture. The number of gestures in progress is tracked, and the appearance of the target object is changed when the number of active gestures changes from 0 to 1 or drops to 0.
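The counting logic behind `inc()` and `dec()` can be sketched as follows. This is an illustrative reconstruction, not the code from GestureEvents.java: the `GestureCounter` class and its two `Runnable` callbacks are hypothetical, and ignoring an unmatched `dec()` call is an assumption made here for safety.

```java
// Hypothetical sketch of tracking how many gestures are in progress on a node.
final class GestureCounter {
    private int active;
    private final Runnable onFirstGesture; // for example, highlight the shape
    private final Runnable onLastGesture;  // for example, remove the highlight

    GestureCounter(Runnable onFirstGesture, Runnable onLastGesture) {
        this.onFirstGesture = onFirstGesture;
        this.onLastGesture = onLastGesture;
    }

    // Call from the *_STARTED handlers.
    void inc() {
        if (active == 0) {
            onFirstGesture.run(); // count changes from 0 to 1
        }
        active++;
    }

    // Call from the *_FINISHED handlers.
    void dec() {
        if (active == 0) {
            return; // assumption: ignore a FINISHED without a matching STARTED
        }
        active--;
        if (active == 0) {
            onLastGesture.run(); // count drops back to 0
        }
    }

    int getActive() {
        return active;
    }

    public static void main(String[] args) {
        GestureCounter counter = new GestureCounter(
                () -> System.out.println("highlight on"),
                () -> System.out.println("highlight off"));
        counter.inc(); // scroll started: prints "highlight on"
        counter.inc(); // zoom started while scrolling: prints nothing
        counter.dec(); // scroll finished: prints nothing
        counter.dec(); // zoom finished: prints "highlight off"
    }
}
```

Counting rather than using a single flag keeps the visual cue on while overlapping gestures, such as a zoom that starts during a scroll, are still in progress.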

In the Gesture Events example, the handlers for the rectangle and ellipse are similar. Therefore, the code examples in the following sections show the handlers for the rectangle. See GestureEvents.java for the handlers for the ellipse.

Handling Scroll Events

When a scroll gesture is performed, SCROLL_STARTED, SCROLL, and SCROLL_FINISHED events are generated. When a mouse wheel is moved, only a SCROLL event is generated. Example 5-2 shows the rectangle's handlers for scroll events in the Gesture Events example. Handlers for the ellipse are similar.

Example 5-2 Define the Handlers for Scroll Events

    rect.setOnScroll(new EventHandler<ScrollEvent>() {
        @Override public void handle(ScrollEvent event) {
            if (!event.isInertia()) {
                rect.setTranslateX(rect.getTranslateX() + event.getDeltaX());
                rect.setTranslateY(rect.getTranslateY() + event.getDeltaY());
            }
            log("Rectangle: Scroll event" +
                ", inertia: " + event.isInertia() +
                ", direct: " + event.isDirect());
            event.consume();
        }
    });

    rect.setOnScrollStarted(new EventHandler<ScrollEvent>() {
        @Override public void handle(ScrollEvent event) {
            inc(rect);
            log("Rectangle: Scroll started event");
            event.consume();
        }
    });

    rect.setOnScrollFinished(new EventHandler<ScrollEvent>() {
        @Override public void handle(ScrollEvent event) {
            dec(rect);
            log("Rectangle: Scroll finished event");
            event.consume();
        }
    });

In addition to the common handling described in Handling the Events, SCROLL events are handled by moving the object in the direction of the scroll gesture. If the scroll gesture ends outside of the window, the shape is moved out of the window. SCROLL events that are generated based on inertia are ignored by the handler for the rectangle. The handler for the ellipse continues to move the ellipse in response to SCROLL events that are generated from inertia and could result in the ellipse moving out of the window even if the gesture ends within the window.
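The difference between the two scroll handlers can be illustrated with a small model that does not depend on JavaFX. This is a sketch with made-up numbers: the `ScrollModel` class is hypothetical, and the boolean `inertia` parameter stands in for the value of `ScrollEvent.isInertia()`.

```java
// Hypothetical model of a scroll handler that optionally ignores inertia events.
final class ScrollModel {
    private final boolean ignoreInertia;
    private double translateY;

    ScrollModel(boolean ignoreInertia) {
        this.ignoreInertia = ignoreInertia;
    }

    // 'inertia' stands in for ScrollEvent.isInertia().
    void onScroll(double deltaY, boolean inertia) {
        if (inertia && ignoreInertia) {
            return; // rectangle-style handling: skip movement caused by inertia
        }
        translateY += deltaY;
    }

    double getTranslateY() {
        return translateY;
    }

    public static void main(String[] args) {
        ScrollModel rect = new ScrollModel(true);  // ignores inertia
        ScrollModel oval = new ScrollModel(false); // follows inertia

        // Made-up event sequence: one event from the gesture itself,
        // followed by two events generated by inertia.
        double[] deltas = {10, 5, 3};
        boolean[] inertia = {false, true, true};
        for (int i = 0; i < deltas.length; i++) {
            rect.onScroll(deltas[i], inertia[i]);
            oval.onScroll(deltas[i], inertia[i]);
        }
        System.out.println(rect.getTranslateY()); // prints 10.0
        System.out.println(oval.getTranslateY()); // prints 18.0
    }
}
```

The model that follows inertia keeps moving after the gesture itself has ended, which is why the ellipse can leave the window even when the gesture ends inside it.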

Handling Zoom Events

When a zoom gesture is performed, ZOOM_STARTED, ZOOM, and ZOOM_FINISHED events are generated. Example 5-3 shows the rectangle's handlers for zoom events in the Gesture Events example. Handlers for the ellipse are similar.

Example 5-3 Define the Handlers for Zoom Events

    rect.setOnZoom(new EventHandler<ZoomEvent>() {
        @Override public void handle(ZoomEvent event) {
            rect.setScaleX(rect.getScaleX() * event.getZoomFactor());
            rect.setScaleY(rect.getScaleY() * event.getZoomFactor());
            log("Rectangle: Zoom event" +
                ", inertia: " + event.isInertia() +
                ", direct: " + event.isDirect());
            event.consume();
        }
    });

    rect.setOnZoomStarted(new EventHandler<ZoomEvent>() {
        @Override public void handle(ZoomEvent event) {
            inc(rect);
            log("Rectangle: Zoom event started");
            event.consume();
        }
    });

    rect.setOnZoomFinished(new EventHandler<ZoomEvent>() {
        @Override public void handle(ZoomEvent event) {
            dec(rect);
            log("Rectangle: Zoom event finished");
            event.consume();
        }
    });

In addition to the common handling described in Handling the Events, ZOOM events are handled by scaling the object according to the movement of the gesture. The handlers for the rectangle and ellipse handle all ZOOM events the same, regardless of inertia or the source of the event.
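Because each ZOOM event multiplies the current scale by its zoom factor, the scale at the end of a gesture is the starting scale times the product of all of the factors delivered. The sketch below works through that arithmetic with made-up factors. The `ZoomArithmetic` class and its `applyZoom` helper are hypothetical and are not part of the JavaFX API.

```java
// Hypothetical worked example of how per-event zoom factors accumulate.
final class ZoomArithmetic {
    // Mirrors rect.setScaleX(rect.getScaleX() * event.getZoomFactor()).
    static double applyZoom(double currentScale, double zoomFactor) {
        return currentScale * zoomFactor;
    }

    public static void main(String[] args) {
        double scale = 1.0;                 // unscaled shape
        double[] factors = {2.0, 1.5, 0.5}; // made-up zoom factors
        for (double factor : factors) {
            scale = applyZoom(scale, factor);
        }
        // 1.0 * 2.0 * 1.5 * 0.5 = 1.5
        System.out.println(scale); // prints 1.5
    }
}
```

A factor greater than 1 enlarges the shape and a factor less than 1 shrinks it, so a pinch that reverses direction partly undoes the earlier scaling.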

Handling Rotate Events

When a rotate gesture is performed, ROTATION_STARTED, ROTATE, and ROTATION_FINISHED events are generated. Example 5-4 shows the rectangle's handlers for rotate events in the Gesture Events example. Handlers for the ellipse are similar.

Example 5-4 Define the Handlers for Rotate Events

    rect.setOnRotate(new EventHandler<RotateEvent>() {
        @Override public void handle(RotateEvent event) {
            rect.setRotate(rect.getRotate() + event.getAngle());
            log("Rectangle: Rotate event" +
                ", inertia: " + event.isInertia() +
                ", direct: " + event.isDirect());
            event.consume();
        }
    });

    rect.setOnRotationStarted(new EventHandler<RotateEvent>() {
        @Override public void handle(RotateEvent event) {
            inc(rect);
            log("Rectangle: Rotate event started");
            event.consume();
        }
    });

    rect.setOnRotationFinished(new EventHandler<RotateEvent>() {
        @Override public void handle(RotateEvent event) {
            dec(rect);
            log("Rectangle: Rotate event finished");
            event.consume();
        }
    });

In addition to the common handling described in Handling the Events, ROTATE events are handled by rotating the object according to the movement of the gesture. The handlers for the rectangle and ellipse handle all ROTATE events the same, regardless of inertia or the source of the event.

Handling Swipe Events

When a swipe gesture is performed, either a SWIPE_DOWN, SWIPE_LEFT, SWIPE_RIGHT, or SWIPE_UP event is generated, depending on the direction of the swipe. Example 5-5 shows the rectangle's handlers for SWIPE_RIGHT and SWIPE_LEFT events in the Gesture Events example. The ellipse does not handle swipe events.

Example 5-5 Define the Handlers for Swipe Events

    rect.setOnSwipeRight(new EventHandler<SwipeEvent>() {
        @Override public void handle(SwipeEvent event) {
            log("Rectangle: Swipe right event");
            event.consume();
        }
    });

    rect.setOnSwipeLeft(new EventHandler<SwipeEvent>() {
        @Override public void handle(SwipeEvent event) {
            log("Rectangle: Swipe left event");
            event.consume();
        }
    });

The only action performed for swipe events is to record the event in the log. However, swipe gestures also generate scroll events. The target of the swipe event is the topmost node at the center of the path of the gesture. This target could be different from the target of the scroll events, which is the topmost node at the point where the gesture started. The rectangle and ellipse respond to scroll events that are generated by a swipe gesture when they are the target of the scroll events.

Handling Touch Events

When a touch screen is touched, a TOUCH_MOVED, TOUCH_PRESSED, TOUCH_RELEASED, or TOUCH_STATIONARY event is generated for each touch point. The touch event contains information for every touch point that is part of the touch action. Example 5-6 shows the rectangle's handlers for touch pressed and touch released events in the Gesture Events example. The ellipse does not handle touch events.

Example 5-6 Define the Handlers for Touch Events

    rect.setOnTouchPressed(new EventHandler<TouchEvent>() {
        @Override public void handle(TouchEvent event) {
            log("Rectangle: Touch pressed event");
            event.consume();
        }
    });

    rect.setOnTouchReleased(new EventHandler<TouchEvent>() {
        @Override public void handle(TouchEvent event) {
            log("Rectangle: Touch released event");
            event.consume();
        }
    });

The only action performed for touch events is to record the event in the log. Touch events can be used to provide lower level tracking for the individual touch points that are part of a touch or gesture. See Working with Touch Events for more information and an example.

Handling Mouse Events

Mouse events are generated by actions with the mouse and by touches on a touch screen. Example 5-7 shows the ellipse's handlers for MOUSE_PRESSED and MOUSE_RELEASED events in the Gesture Events example.

Example 5-7 Define Handlers for Mouse Events

    oval.setOnMousePressed(new EventHandler<MouseEvent>() {
        @Override public void handle(MouseEvent event) {
            if (event.isSynthesized()) {
                log("Ellipse: Mouse pressed event from touch" +
                    ", synthesized: " + event.isSynthesized());
            }
            event.consume();
        }
    });

    oval.setOnMouseReleased(new EventHandler<MouseEvent>() {
        @Override public void handle(MouseEvent event) {
            if (event.isSynthesized()) {
                log("Ellipse: Mouse released event from touch" +
                    ", synthesized: " + event.isSynthesized());
            }
            event.consume();
        }
    });

Mouse pressed and mouse released events are handled by the ellipse only when the events are generated by touches on a touch screen. Handlers for the rectangle record all mouse pressed and mouse released events in the log.

Managing the Log

The Gesture Events example shows a log of the events that were handled by the shapes on the screen. An ObservableList object is used to record the events for each shape, and a ListView object is used to display the list of events. The log is limited to 50 entries. The newest entry is added to the top of the list and the oldest entry is removed from the bottom. See GestureEvents.java for the code that manages the log.
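The bounded, newest-first behavior of the log can be sketched with a plain `java.util.List`. This is not the code from GestureEvents.java: the `EventLog` class and its member names are hypothetical. The actual example uses an `ObservableList`, which is also a `List`, so the same two list operations would apply to it.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of a log that keeps only the 50 newest entries.
final class EventLog {
    static final int MAX_ENTRIES = 50;
    private final List<String> entries = new ArrayList<>();

    void log(String message) {
        entries.add(0, message); // newest entry goes to the top
        if (entries.size() > MAX_ENTRIES) {
            entries.remove(entries.size() - 1); // drop the oldest, at the bottom
        }
    }

    List<String> getEntries() {
        return entries;
    }

    public static void main(String[] args) {
        EventLog log = new EventLog();
        for (int i = 1; i <= 60; i++) {
            log.log("event " + i);
        }
        System.out.println(log.getEntries().size());  // prints 50
        System.out.println(log.getEntries().get(0));  // prints event 60
        System.out.println(log.getEntries().get(49)); // prints event 11
    }
}
```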

Work with the shapes in the application and notice what events are generated for each gesture that you perform.

Additional Resources

See the JavaFX API documentation for more information on gesture events, touch events, and touch points.

Joni develops documentation for JavaFX. She has been a technical writer for over 10 years and has a background in enterprise application development.
