7 Preparing Storage for an Enterprise Deployment

This chapter describes how to prepare storage for an Oracle Identity and Access Management enterprise deployment.

The storage model described in this guide was chosen for maximum availability, best isolation of components, symmetry in the configuration, and facilitation of backup and disaster recovery. The rest of the guide uses a directory structure and directory terminology based on this storage model. Other directory layouts are possible and supported.

This chapter contains the following topics:

- Section 7.1, "Overview of Preparing Storage for Enterprise Deployment"
- Section 7.2, "Terminology for Directories and Directory Variables"
- Section 7.4, "About Recommended Locations for the Different Directories"
- Section 7.5, "Configuring Exalogic Storage for Oracle Identity Management"

7.1 Overview of Preparing Storage for Enterprise Deployment

It is important to set up your storage in a way that makes the enterprise deployment easier to understand, configure, and manage. Oracle recommends setting up your storage according to information in this chapter. The terminology defined in this chapter is used in diagrams and procedures throughout the guide.

Use this chapter as a reference to help understand the directory variables used in the installation and configuration procedures. Other directory layouts are possible and supported, but the model adopted in this guide is chosen for maximum availability, providing both the best isolation of components and symmetry in the configuration and facilitating backup and disaster recovery. The rest of the document uses this directory structure and directory terminology.

7.2 Terminology for Directories and Directory Variables

This section describes the directory variables used throughout this guide for configuring the Oracle Identity and Access Management enterprise deployment. You are not required to set these as environment variables. The following directory variables are used to describe the directories installed and configured in the guide:

- ORACLE_BASE: This environment variable and related directory path refer to the base directory under which Oracle products are installed.

- MW_HOME: This variable and related directory path refer to the location where Oracle Fusion Middleware resides. A MW_HOME has a WL_HOME, an ORACLE_COMMON_HOME, and one or more ORACLE_HOMEs. There is a different MW_HOME for each product suite. In this guide, this value might be preceded by a product suite abbreviation, for example: DIR_MW_HOME, IAD_MW_HOME, IGD_MW_HOME, and WEB_MW_HOME.

- WL_HOME: This variable and related directory path contain the installed files necessary to host a WebLogic Server. The WL_HOME directory is a peer of the Oracle home directory and resides within the MW_HOME.

- ORACLE_HOME: This variable points to the location where an Oracle Fusion Middleware product, such as Oracle HTTP Server or Oracle SOA Suite, is installed and whose binaries are being used in the current procedure. In this guide, this value might be preceded by a product suite abbreviation, for example: IAD_ORACLE_HOME, IGD_ORACLE_HOME, WEB_ORACLE_HOME, WEBGATE_ORACLE_HOME, SOA_ORACLE_HOME, and OUD_ORACLE_HOME.

- ORACLE_COMMON_HOME: This variable and related directory path refer to the location where the Oracle Fusion Middleware Common Java Required Files (JRF) Libraries and Oracle Fusion Middleware Enterprise Manager Libraries are installed. An example is: MW_HOME/oracle_common

- ORACLE_INSTANCE: An Oracle instance contains one or more system components, such as Oracle Web Cache or Oracle HTTP Server. An Oracle instance directory contains updatable files, such as configuration files, log files, and temporary files. In this guide, this value might be preceded by a product suite abbreviation, such as WEB_ORACLE_INSTANCE.

- JAVA_HOME: This is the location where Oracle JRockit is installed.

- ASERVER_HOME: This path refers to the file system location where the Oracle WebLogic domain information (configuration artifacts) is stored. There is a different ASERVER_HOME for each domain used, specifically: IGD_ASERVER_HOME and IAD_ASERVER_HOME.

- MSERVER_HOME: This path refers to the local file system location where the Oracle WebLogic domain information (configuration artifacts) is stored. This directory is generated by the pack/unpack utilities and is a subset of the ASERVER_HOME. It is used to start and stop managed servers. The Administration Server is still started from the ASERVER_HOME directory. There is a different MSERVER_HOME for each domain used. Optionally, it can be used to start and stop managed servers.

- LCM_HOME: This is the location of the life cycle management tools and software repository.

For more information about, and examples of these variables, see Section 7.4.4, "Recommended Directory Locations."

7.3 About File Systems

After you create the partitions on your storage, you must place file systems on the partitions so that you can store the Oracle files. For local or direct attached shared storage, the file system type is most likely the default type for your operating system, for example: EXT3 for Linux.

If your shared storage is on network attached storage (NAS), which is accessed by two or more hosts either exclusively or concurrently, then you must use a supported clustered file system such as NFS version 3 or 4. Such file systems provide conflict resolution and locking capabilities.
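As an illustration of the NAS case, the following shell sketch builds an /etc/fstab line for an NFS version 3 mount. The filer name nas01, the export path, and the mount options are assumptions chosen for the example and are not values from this guide; check the recommendations of your storage vendor and operating system before using them.

```shell
# Build an /etc/fstab entry for an NFS share (sketch).
# Arguments: NFS server, exported path, local mount point, NFS version.
# All values passed in the example call below are hypothetical.
nfs_fstab_entry() {
  local server="$1" export_path="$2" mount_point="$3" version="${4:-3}"
  # "hard" makes clients retry instead of returning I/O errors
  # to the application while the NFS server is unreachable.
  printf '%s:%s %s nfs rw,hard,vers=%s 0 0\n' \
    "$server" "$export_path" "$mount_point" "$version"
}

# Print the line for review; add it to /etc/fstab manually if it is suitable.
nfs_fstab_entry nas01 /export/oracle /u01/oracle 3
```

Printing the entry rather than editing /etc/fstab directly keeps the sketch free of side effects.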

7.4 About Recommended Locations for the Different Directories

This section contains the following topics:

- Section 7.4.1, "Recommendations for Binary (Middleware Home) Directories"
- Section 7.4.2, "Recommendations for Domain Configuration Files"
- Section 7.4.3, "Shared Storage Recommendations for JMS File Stores and Transaction Logs"

7.4.1 Recommendations for Binary (Middleware Home) Directories

The following sections describe guidelines for using shared storage for your Oracle Fusion Middleware middleware home directories:

- Section 7.4.1.1, "About the Binary (Middleware Home) Directories"
- Section 7.4.1.3, "About Using Redundant Binary (Middleware Home) Directories"

7.4.1.1 About the Binary (Middleware Home) Directories

When you install any Oracle Fusion Middleware product, you install the product binaries into a Middleware home. The binary files installed in the Middleware home are read-only and remain unchanged unless the Middleware home is patched or upgraded to a newer version.

In a typical production environment, the Middleware home files are saved in a separate location from the domain configuration files, which you create using the Oracle Fusion Middleware Configuration Wizard.

The Middleware home for an Oracle Fusion Middleware installation contains the binaries for Oracle WebLogic Server, the Oracle Fusion Middleware infrastructure files, and any Oracle Fusion Middleware product-specific directories.

If you have your LDAPHOSTs in a different zone from your application hosts, it may be desirable not to share the Binary installation location across zones. If you are adopting this model and want to have a separate location for LDAP binaries, create two shares for the binaries on your SAN: one for the Application Tier binaries and one for the directory binaries. The first share is mounted on the application tier servers and the second share mounted on the directory tier servers. While the shares are different they will be mounted on the servers using the same mount point. For example: /u01/oracle/products

The Web tier binaries are not shared. These are placed onto local storage so that SAN storage does not have to be mounted in the DMZ.

For more information about the structure and content of an Oracle Fusion Middleware home, see Oracle Fusion Middleware Installation Guide for Oracle Enterprise Content Management Suite.

7.4.1.2 About Sharing a Single Middleware Home

Oracle Fusion Middleware enables you to configure multiple Oracle WebLogic Server domains from a single Middleware home. This allows you to install the Middleware home in a single location on a shared volume and reuse the Middleware home for multiple host installations.

When a Middleware home is shared by multiple servers on different hosts, there are some best practices to keep in mind. In particular, be sure that the Oracle Inventory on each host is updated for consistency and for the application of patches.

To update the oraInventory for a host and attach a Middleware home on shared storage, use the following command:

ORACLE_HOME/oui/bin/attachHome.sh

For more information about the Oracle inventory, see "Oracle Universal Installer Inventory" in the Oracle Universal Installer Concepts Guide.
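The attach step has to be repeated on every host that mounts the shared Middleware home. A minimal sketch of a wrapper follows; it only checks that the script is present before running it. The function name attach_home and the example path in the comment are assumptions for illustration, and any arguments your installation requires are not shown.

```shell
# Sketch: attach a shared Oracle home to the local Oracle inventory.
# The argument is the ORACLE_HOME on shared storage.
attach_home() {
  local oracle_home="$1"
  local script="$oracle_home/oui/bin/attachHome.sh"
  if [ ! -x "$script" ]; then
    echo "attachHome.sh not found or not executable under $oracle_home" >&2
    return 1
  fi
  "$script"
}

# Run once on each host that mounts the shared home, for example:
#   attach_home /u01/oracle/products/access/iam
```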

7.4.1.3 About Using Redundant Binary (Middleware Home) Directories

For maximum availability, Oracle recommends using redundant binary installations on shared storage.

In this model, you install two identical Middleware homes for your Oracle Fusion Middleware software on two different shared volumes. You then mount one of the Middleware homes to one set of servers, and the other Middleware home to the remaining servers. Each Middleware home has the same mount point, so the Middleware home always has the same path, regardless of which Middleware home the server is using.

Should one Middleware home become corrupted or unavailable, only half your servers are affected. For additional protection, Oracle recommends that you disk mirror these volumes.

If separate volumes are not available on shared storage, Oracle recommends simulating separate volumes using different directories within the same volume and mounting these to the same mount location on the host side. Although this does not guarantee the protection that multiple volumes provide, it does allow protection from user deletions and individual file corruption.

This is normally achieved post deployment by performing the following steps:

- Create a new shared volume for binaries.
- Leave the original volume mounted on odd-numbered servers, for example: OAMHOST1, OIMHOST1.
- Mount the new volume in the same location on even-numbered servers, for example: OAMHOST2, OIMHOST2.
- Copy the files on volume1 to volume2 by copying from an odd-numbered host to an even-numbered host.
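The odd and even split can be expressed as a small helper that picks a volume from the last digit of the host name. This is only a sketch: the names volume1 and volume2 stand in for whatever your storage administrator calls the two binary volumes, and the function name is hypothetical.

```shell
# Sketch: choose the binary volume for a host from the parity of the
# trailing digit in its name. Odd hosts keep the original volume,
# even hosts use the new one.
binary_volume_for_host() {
  case "$1" in
    *[13579]) echo volume1 ;;
    *[02468]) echo volume2 ;;
    *) echo "host name '$1' has no trailing number" >&2; return 1 ;;
  esac
}

for host in OAMHOST1 OAMHOST2 OIMHOST1 OIMHOST2; do
  printf '%s -> %s\n' "$host" "$(binary_volume_for_host "$host")"
done
```

Only the last digit matters for parity, so the helper also works for host numbers with more than one digit.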

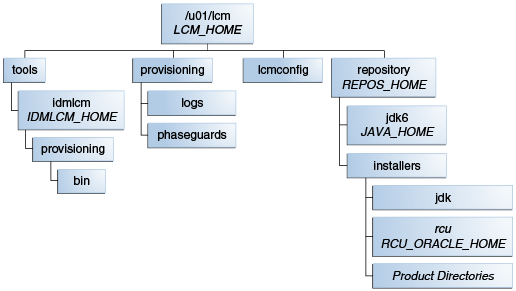

7.4.1.4 About the Lifecycle Repository

The lifecycle repository contains the lifecycle management tools, such as the deployment and patching tools. It also contains a software repository which includes the software to be installed as well as any patches to be applied.

It is recommended that the Lifecycle repository be mounted onto every host in the topology for the duration of provisioning. This allows the deployment process to place files into this location ready for use by other process steps that might be running on different hosts. Having a centralized repository saves you from having to manually copy files around during the provisioning process.

Having a centralized repository is also important for patching. The repository is only required when provisioning or patching is occurring. At other times, this disk share can be unmounted from any or all hosts, ensuring security across zones is maintained.

The advantages of having a shared lifecycle repository are:

- Single location for software.
- Simplified deployment provisioning.
- Simplified patching.

Some organizations may prohibit the mounting of file systems across zones, even if it is only for the duration of initial provisioning or for patching. In this case, when you undertake deployment provisioning, you must duplicate the software repository and perform a number of manual file copies during the deployment process.

For simplicity, this guide recommends using a single shared lifecycle repository. However, the guide also includes the extra manual steps required when this is not possible.

7.4.2 Recommendations for Domain Configuration Files

The following sections describe guidelines for using shared storage for the Oracle WebLogic Server domain configuration files you create when you configure your Oracle Fusion Middleware products in an enterprise deployment:

- Section 7.4.2.2, "Shared Storage Requirements for Administration Server Domain Configuration Files"
- Section 7.4.2.3, "Local Storage Requirements for Managed Server Domain Configuration Files"

7.4.2.1 About Oracle WebLogic Server Administration and Managed Server Domain Configuration Files

When you configure an Oracle Fusion Middleware product, you create or extend an Oracle WebLogic Server domain. Each Oracle WebLogic Server domain consists of a single Administration Server and one or more managed servers.

For more information about Oracle WebLogic Server domains, see Oracle Fusion Middleware Understanding Domain Configuration for Oracle WebLogic Server.

In an enterprise deployment, it is important to understand that the managed servers in a domain can be configured for active-active high availability. However, the Administration server cannot. The Administration Server is a singleton service. That is, it can be active on only one host at any given time.

ASERVER_HOME is the primary location of the domain configuration. MSERVER_HOME is a copy of the domain configuration that is used to start and stop managed servers. The WebLogic Administration Server automatically copies configuration changes applied to the ASERVER_HOME domain configuration to all those MSERVER_HOME configuration directories that have been registered to be part of the domain. However, the MSERVER_HOME directories also contain deployments and data specific to the managed servers. For that reason, when performing backups, you must include both ASERVER_HOME and MSERVER_HOME.
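Because a backup has to cover both directories, it can help to archive them together. The following is a minimal sketch that assumes both paths are absolute and that a plain tar archive is acceptable for your backup policy; the function name, the archive naming scheme, and the /backup target in the comment are hypothetical.

```shell
# Sketch: archive ASERVER_HOME and MSERVER_HOME into one dated tar file.
# Arguments: ASERVER_HOME, MSERVER_HOME (both absolute paths), backup directory.
backup_domain_config() {
  local aserver_home="$1" mserver_home="$2" backup_dir="$3" archive
  archive="$backup_dir/domain-config-$(date +%Y%m%d-%H%M%S).tar.gz"
  # Archive relative to / so that member names carry no leading slash.
  tar -czf "$archive" -C / "${aserver_home#/}" "${mserver_home#/}" || return 1
  echo "$archive"
}

# Example, run on a host that has both directories available:
#   backup_domain_config /u01/oracle/config/domains/IAMAccessDomain \
#     /u02/private/oracle/config/domains/IAMAccessDomain /backup
```

Each managed server host has its own MSERVER_HOME, so a sketch like this would need to run on every such host.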

7.4.2.2 Shared Storage Requirements for Administration Server Domain Configuration Files

Administration Server configuration files must reside on shared storage. This allows the Administration Server to be started on a different host should the primary host become unavailable. The directory where the Administration Server files are located is known as the ASERVER_HOME directory. This directory is located on shared storage and mounted to each host in the application tier.

Managed server configuration files should reside on local storage to prevent performance issues associated with contention. The directory where the managed server configuration files are located is known as the MSERVER_HOME directory. It is highly recommended that managed server domain configuration files be placed onto local storage.

7.4.2.3 Local Storage Requirements for Managed Server Domain Configuration Files

If you must use shared storage, it is recommended that you create a storage partition for each node and mount that storage exclusively to that node.

The configuration steps provided for this enterprise deployment topology assume that a local domain directory for each node is used for each managed server.

7.4.3 Shared Storage Recommendations for JMS File Stores and Transaction Logs

JMS file stores and JTA transaction logs must be placed on shared storage in order to ensure that they are available from multiple hosts for recovery in the case of a server failure or migration.

For more information about saving JMS and JTA information in a file store, see "Using the WebLogic Persistent Store" in Oracle Fusion Middleware Configuring Server Environments for Oracle WebLogic Server.

7.4.4 Recommended Directory Locations

This section describes the recommended use of shared and local storage.


7.4.4.1 Lifecycle Management and Deployment Repository

You need a separate share to hold the Lifecycle Management Tools and Deployment Repository. This share is only required during deployment and any subsequent patching. Once deployment is complete, you can unmount this share from each host.

Note:

If you have patches that you want to deploy using the patch management tool, you must remount this share while you are applying the patches.

Ideally, you should mount this share on all hosts for the duration of provisioning. Doing so makes the provisioning process simpler, as you do not need to manually copy any files, such as the keystores required by the Web Tier. If your organization prohibits sharing the LCM_HOME to the Web tier hosts (even for the duration of deployment), you must create a local copy of the contents of this share on the DMZ hosts and make manual file copies during the deployment phases.

7.4.4.2 Shared Storage

In an enterprise deployment, the following shared storage is required. This shared storage must be on shared disk. The mount point must be /u01/oracle.

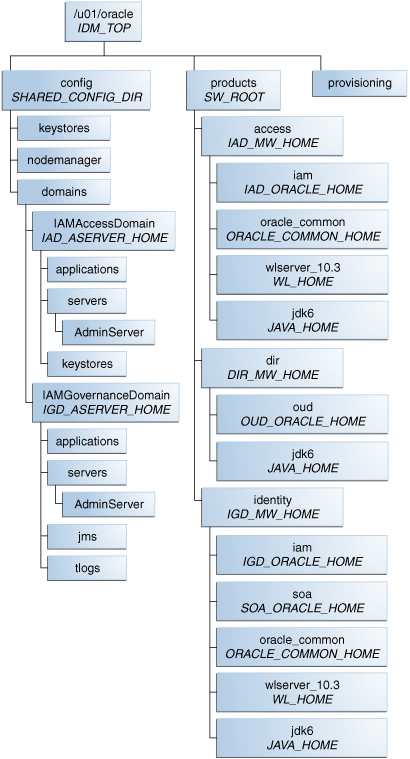

The recommended layout is described in Table 7-1 and Table 7-2 and shown in Figure 7-2.

Note:

Even though it is not shared, the IDM_TOP location must be writable.

Table 7-1 Volumes on Shared Storage–Distributed Topology

| Environment Variable | Volume Name | Mount Point | Mounted on Hosts | Exclusive |
|---|---|---|---|---|
| SW_ROOT | Binaries | | OAMHOST1 OAMHOST2 OIMHOST1 OIMHOST2 LDAPHOST1 LDAPHOST2 (Footnote 1) | No |
| SHARED_CONFIG_DIR | sharedConfig | | OAMHOST1 OAMHOST2 OIMHOST1 OIMHOST2 | No |
| DIR_MW_HOME (Footnote 2) | dirBinaries | | LDAPHOST1 LDAPHOST2 | No |

Footnote 1 Only mount to LDAPHOST1 and LDAPHOST2 when the directory is in the Application Zone.

Footnote 2 Only required when the directory is being placed into a Directory/Database Zone.

Table 7-2 Volumes on Shared Storage–Consolidated Topology

| Environment Variable | Volume Name | Mount Point | Mounted on Hosts | Exclusive |
|---|---|---|---|---|
| SW_ROOT | Binaries | | IAMHOST1 IAMHOST2 LDAPHOST1 LDAPHOST2 (Footnote 1) | No |
| SHARED_CONFIG_DIR | sharedConfig | | IAMHOST1 IAMHOST2 | No |
| DIR_MW_HOME (Footnote 2) | dirBinaries | | LDAPHOST1 LDAPHOST2 | No |

Footnote 1 Only mount to LDAPHOST1 and LDAPHOST2 when the directory is in the Application Zone.

Footnote 2 Only required when the directory is being placed into a Directory/Database Zone.

The figure shows the shared storage directory hierarchy. Under the mount point, /u01/oracle (SW_ROOT) are the directories config and products.

If you plan to deploy your directory into a different zone from the application tier and you do not want to mount your storage across zones, then you can create shared storage dedicated to the directory tier for the purposes of holding DIR_MW_HOME. Note that this will still have the same mount point as the shared storage in the application tier, for example: /u01/oracle.

The directory config contains domains, which contains:

- IAMAccessDomain (IAD_ASERVER_HOME). IAMAccessDomain has three subdirectories: applications, servers, and keystores. The servers directory has a subdirectory, AdminServer.
- IAMGovernanceDomain (IGD_ASERVER_HOME). IAMGovernanceDomain has five subdirectories: applications, servers, keystores, jms, and tlogs. The servers directory has a subdirectory, AdminServer.

The directory products contains the directories access, dir, and identity.

The directory access (IAD_MW_HOME) has four subdirectories: iam (IAD_ORACLE_HOME), oracle_common (ORACLE_COMMON_HOME), wlserver_10.3 (WL_HOME), and jdk6 (JAVA_HOME).

The directory dir (DIR_MW_HOME) has two subdirectories: oud (OUD_ORACLE_HOME) and jdk6 (JAVA_HOME).

The directory identity (IGD_MW_HOME) has five subdirectories: iam (IGD_ORACLE_HOME), soa (SOA_ORACLE_HOME), oracle_common (ORACLE_COMMON_HOME), wlserver_10.3 (WL_HOME), and jdk6 (JAVA_HOME).

The directory provisioning is used by the Identity and Access Deployment Wizard and contains information relating to the deployment plan.

If you have a dedicated directory tier, the share for SW_ROOT differs depending on whether you are on an LDAPHOST or an IAMHOST.
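To make the hierarchy concrete, the following sketch creates the config and products skeleton described in this section under a root directory that you pass in. It is an illustration only: in practice the installers and configuration tools create most of these directories, and the function name is hypothetical. Passing a scratch directory lets you preview the layout without touching /u01/oracle.

```shell
# Sketch: create the shared-storage directory skeleton under a given root.
create_shared_layout() {
  local root="$1" domain product
  for domain in IAMAccessDomain IAMGovernanceDomain; do
    mkdir -p "$root/config/domains/$domain/applications" \
             "$root/config/domains/$domain/servers/AdminServer" \
             "$root/config/domains/$domain/keystores" || return 1
  done
  # Only IAMGovernanceDomain has the jms and tlogs subdirectories.
  mkdir -p "$root/config/domains/IAMGovernanceDomain/jms" \
           "$root/config/domains/IAMGovernanceDomain/tlogs" || return 1
  for product in access dir identity; do
    mkdir -p "$root/products/$product" || return 1
  done
}

# Example: preview the layout in a scratch directory.
#   create_shared_layout /tmp/sw_root_preview && find /tmp/sw_root_preview -type d
```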

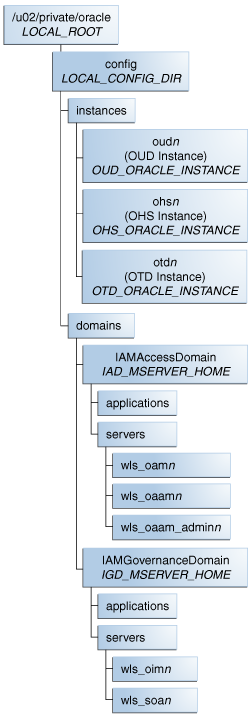

7.4.4.3 Private Storage

In an Enterprise Deployment it is recommended that the following directories be created on local storage or on shared storage mounted exclusively to a given host:

Table 7-3 Private Storage Directories

| Tier | Environment Variable | Directory | Hosts |
|---|---|---|---|
| Web Tier | WEB_MW_HOME | | WEBHOST1 WEBHOST2 |
| | WEB_ORACLE_INSTANCE | | WEBHOST1 WEBHOST2 |
| | OTD_ORACLE_INSTANCE | /u02/private/oracle/config/instances/otdn | WEBHOST1 WEBHOST2 |
| | OTD_ORACLE_HOME | /u01/oracle/products/web/otd | WEBHOST1/2 |
| | OHS_ORACLE_HOME | /u01/oracle/products/web/ohs | WEBHOST1/2 |
| Application Tier | OUD_ORACLE_INSTANCE | | LDAPHOST1 LDAPHOST2 |
| | IAD_MSERVER_HOME | | OAMHOST1 OAMHOST2 |
| | IGD_MSERVER_HOME | | OIMHOST1 OIMHOST2 |

The figure shows the local storage directory hierarchy. The top level directory, /u02/private/oracle (LOCAL_ROOT), has a subdirectory, config.

The directory config has a subdirectory for each product that has an instance, that is, Web Server and LDAP (in this case, Oracle HTTP Server and Oracle Unified Directory). The appropriate directory appears only on the relevant host; for example, the WEB_ORACLE_INSTANCE directory appears only on the WEBHOSTs.

The domains directory contains one subdirectory for each domain in the topology, that is, IAMAccessDomain and IAMGovernanceDomain.

IAMAccessDomain (IAD_MSERVER_HOME) contains applications and servers. The servers directory contains wls_oamn, where n is the Access Manager instance. If OAAM is configured, this directory also contains wls_oaamn and wls_oaam_adminn.

IAMGovernanceDomain (IGD_MSERVER_HOME) contains applications and servers. The servers directory contains wls_oimn and wls_soan, where n is the Oracle Identity Manager and SOA instance, respectively.

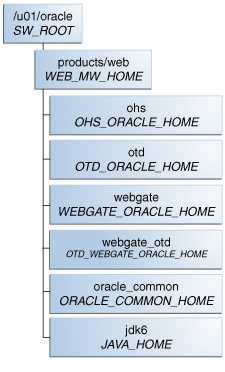

Figure 7-4 shows the local binary storage directory hierarchy. The top level directory, /u01/oracle (), has a subdirectory, products.

The products directory contains the web directory (WEB_MW_HOME), which has four subdirectories: web (WEB_ORACLE_HOME), webgate (WEBGATE_ORACLE_HOME), oracle_common (ORACLE_COMMON_HOME), and jdk6 (JAVA_HOME).

Note:

While it is recommended that you put WEB_ORACLE_INSTANCE directories onto local storage, you can use shared storage. If you use shared storage, you must ensure that the HTTP lock file is placed in discrete locations.

7.5 Configuring Exalogic Storage for Oracle Identity Management

The following sections describe how to configure the Sun ZFS Storage 7320 appliance for an enterprise deployment:

7.5.1 Summary of the Storage Appliance Directories and Corresponding Mount Points for Physical Exalogic

For the Oracle Identity Management enterprise topology, you install all software products on the Sun ZFS Storage 7320 appliance, which is a standard hardware storage appliance available with every Exalogic machine. No software is installed on the local storage available for each compute node.

To organize the enterprise deployment software on the appliance, you create a new project, called IAM. The shares (/products and /config) are created within this project on the appliance, so you can later mount the shares to each compute node.

To separate the product binaries from the files specific to each compute node, you create a separate share for each compute node. Subdirectories for the host names are created under the config and products directories. Each private directory is identified by the logical host name; for example, IAMHOST1 and IAMHOST2.

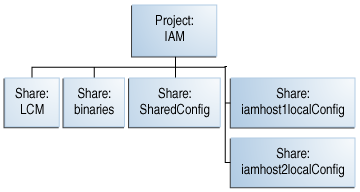

Figure 7-5 shows the recommended physical directory structure on the Sun ZFS Storage 7320 appliance.

Table 7-4 shows how the shares on the appliance map to the mount points you will create on the compute nodes.

Figure 7-5 Physical Structure of the Shares on the Sun ZFS Storage Appliance for Physical Exalogic Deployments

Figure 7-5 illustrates the physical structure of the shares on the Sun ZFS storage appliance.

Table 7-4 Mapping the Shares on the Appliance to Mount Points on Each Compute Node

| Project | Share | Mount Point | Host | Mounted On | Privileges to Assign to User, Group, and Other |
|---|---|---|---|---|---|
| IAM | | | IAMHOST1 IAMHOST2 | | R and W (Read and Write) |
| IAM | LCM | | ALL Hosts | | R and W (Read and Write) |
| IAM | | | IAMHOST1 IAMHOST2 | | R and W (Read and Write) |
| IAM | iamhost1localConfig | | IAMHOST1 | | R and W (Read and Write) |
| IAM | iamhost2localConfig | | IAMHOST2 | | R and W (Read and Write) |

7.5.2 Summary of the Storage Appliance Directories and Corresponding Mount Points for Virtual Exalogic

For the Oracle Identity Management enterprise topology, you install all software products on the Sun ZFS Storage 7320 appliance, which is a standard hardware storage appliance available with every Exalogic machine. No software is installed on the local storage available for each compute node.

To organize the enterprise deployment software on the appliance, you create a new project, called IAM. The shares (/products and /config) are created within this project on the appliance, so you can later mount the shares to each compute node.

To separate the product binaries from the files specific to each compute node, you create a separate share for each compute node. Subdirectories for the host names are created under the config and products directories. Each private directory is identified by the logical host name; for example, IAMHOST1 and IAMHOST2.

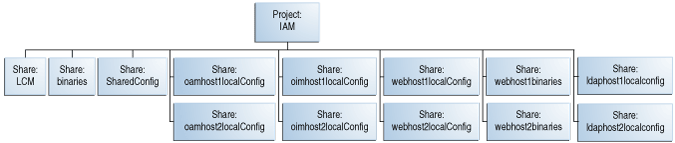

Figure 7-6 shows the recommended physical directory structure on the Sun ZFS Storage 7320 appliance.

Table 7-5 shows how the shares on the appliance map to the mount points you create on the vServers that host the enterprise deployment software.

Figure 7-6 Physical Structure of the Shares on the Sun ZFS Storage Appliance for Virtual Exalogic Deployments

Figure 7-6 illustrates the physical structure of the shares on the Sun ZFS storage appliance.

Table 7-5 Mapping the Shares on the Appliance to Mount Points on Each vServer

| Project | Share | Mount Point | Host | Mounted On | Privileges to Assign to User, Group, and Other |
|---|---|---|---|---|---|
| IAM | | | OAMHOST1 OAMHOST2 OIMHOST1 OIMHOST2 | | R and W (Read and Write) |
| IAM | LCM | | ALL Hosts | | R and W (Read and Write) |
| IAM | | | OAMHOST1 OAMHOST2 OIMHOST1 OIMHOST2 | | R and W (Read and Write) |
| IAM | oamhost1localConfig | | OAMHOST1 | | R and W (Read and Write) |
| IAM | oamhost2localConfig | | OAMHOST2 | | R and W (Read and Write) |
| IAM | oimhost1localConfig | | OIMHOST1 | | R and W (Read and Write) |
| IAM | oimhost2localConfig | | OIMHOST2 | | R and W (Read and Write) |
| IAM | webhost1localConfig | | WEBHOST1 | | R and W (Read and Write) |
| IAM | webhost2localConfig | | WEBHOST2 | | R and W (Read and Write) |
| IAM | | | WEBHOST1 | | R and W (Read and Write) |
| IAM | | | WEBHOST2 | | R and W (Read and Write) |

Note:

The binary directories can be changed to read-only after the configuration is complete, if desired.

7.5.3 Preparing Storage for Exalogic Deployment

Prepare storage for the physical Exalogic deployment as described in the following subsections:

- Section 7.5.3.1, "Prerequisite Storage Appliance Configuration Tasks"
- Section 7.5.3.3, "Creating the IAM Project Using the Storage Appliance Browser User Interface (BUI)"
- Section 7.5.3.4, "Creating the Shares in the IAM Project Using the BUI"

7.5.3.1 Prerequisite Storage Appliance Configuration Tasks

The instructions in this guide assume that the Sun ZFS Storage 7320 appliance is already set up and initially configured. Specifically, it is assumed you have reviewed the following sections in the Oracle Exalogic Elastic Cloud Machine Owner's Guide:

7.5.3.2 Creating Users and Groups in NIS

This step is optional. If you want to use the onboard NIS servers, you can create users and groups using the steps in this section.

First, determine the name of your NIS server by logging into the Storage BUI. For example:

- Log in to the ZFS Storage Appliance using the following URL:

  https://exalogicsn01-priv:215

- Log in to the BUI using the storage administrator's user name (root) and password.

- Navigate to Configuration, and then Services.

- Locate NIS in the list of services. There is a green dot next to it if it is running. If it is not running and you wish to configure NIS, see the Oracle Exalogic Elastic Cloud Machine Owner's Guide.

- Click NIS. You will see the named NIS servers. Make a note of one of the NIS servers.

Now that you have the name of the NIS server, open a terminal window on the NIS server as root and perform the following actions:

- Create users as described in Section 9.11, "Configuring Users and Groups."

- Add users to yp by performing the following steps:

  - Navigate to the following directory:

    /var/yp

  - Run the following command:

    make -C /var/yp

- If required, restart the services using the following commands:

  service ypserv start
  service yppasswdd start
  service rpcidmapd start
  service ypbind start

- Validate that the users and groups appear in NIS by issuing the commands:

  ypcat passwd

  and

  ypcat group
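The validation step can be narrowed to a specific user or group by filtering the ypcat output, which uses the colon-separated passwd and group record format. The helper below is a sketch; the function name is hypothetical, and the oracle and oinstall names are taken from the project defaults used later in this chapter.

```shell
# Sketch: succeed if a name appears as the first colon-separated field of the
# passwd- or group-style records read from standard input.
has_nis_entry() {
  awk -F: -v name="$1" '$1 == name { found = 1 } END { exit !found }'
}

# Example (requires a working NIS client on the host):
#   ypcat passwd | has_nis_entry oracle   && echo "user oracle is in NIS"
#   ypcat group  | has_nis_entry oinstall && echo "group oinstall is in NIS"
```

Matching on the first field avoids false positives from names that only appear inside a home directory path or comment field.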

7.5.3.3 Creating the IAM Project Using the Storage Appliance Browser User Interface (BUI)

To configure the appliance for the recommended directory structure, you create a custom project, called IAM, using the Sun ZFS Storage 7320 appliance Browser User Interface (BUI).

After you set up and configure the Sun ZFS Storage 7320 appliance, the appliance has a set of default projects and shares. For more information, see "Default Storage Configuration" in the Oracle Exalogic Elastic Cloud Machine Owner's Guide.

The instructions in this section describe the specific steps for creating a new "IAM" project for the enterprise deployment. For more general information about creating a custom project using the BUI, see "Creating Custom Projects" in the Oracle Exalogic Elastic Cloud Machine Owner's Guide.

To create a new custom project called IAM on the Sun ZFS Storage 7320 appliance:

- Log in to the ZFS Storage Appliance using the URL:

  https://exalogicsn01-priv:215

- Log in to the BUI using the storage administrator's user name (root) and password.

- Navigate to the Projects page by clicking the Shares tab, then the Projects sub-tab.

  The BUI displays the Project Panel.

- Click Add next to the Projects title to display the Create Project window.

  Enter Name: IAM

  Click Apply.

- Click Edit Entry next to the newly created IAM project.

- Click the General tab on the project page to set project properties.

- Update the following values:

  - Mountpoint: Set to /export/IAM

  - Under the Default Settings Filesystems section:

    User: oracle

    Group: oinstall

    Permissions: RWX RWX R_X

- For the purposes of the enterprise deployment, you can accept the defaults for the remaining project properties.

  For more information about the properties you can set here, see the "Project Settings" table in the Oracle Exalogic Elastic Cloud Machine Owner's Guide.

- Click Apply on the General tab to create the IAM project.

7.5.3.4 Creating the Shares in the IAM Project Using the BUI

After you have created the IAM project, the next step is to create the required shares within the project.

The instructions in this section describe the specific steps for creating the shares required for an Oracle Identity Management enterprise deployment. For more general information about creating custom shares using the BUI, see "Creating Custom Shares" in the Oracle Exalogic Elastic Cloud Machine Owner's Guide.

Table 7-5 lists the shares required for all the topologies described in this guide. The table also indicates what privileges are required for each share.

Create two additional shares for each of the compute nodes hosting Oracle Traffic Director, as shown in Table 7-5.

To create each share, use the following instructions, replacing the name and privileges, as described in Table 7-5:

- Log in to the storage system BUI, using the following URL:

  https://ipaddress:215

  For example:

  https://exalogicsn01-priv:215

- Navigate to the Projects page by clicking the Shares tab, and then the Projects sub-tab.

- On the Project Panel, click IAM.

- Click the plus (+) button next to Filesystems to add a file system.

  The Create Filesystems screen is displayed.

- In the Create Filesystems screen, choose IAM from the Project pull-down menu.

- In the Name field, enter the name for the share.

  Refer to Table 7-5 for the name of each share.

- From the Data migration source pull-down menu, choose None.

- Select the Permissions option and set the permissions for each share.

  Refer to Table 7-5 for the permissions to assign each share.

- Select the Inherit Mountpoint option.

- To enforce UTF-8 encoding for all files and directories in the file system, select the Reject non UTF-8 option.

- From the Case sensitivity pull-down menu, select Mixed.

- From the Normalization pull-down menu, select None.

- Click Apply to create the share.

Repeat the procedure for each share listed in Table 7-5.
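Because each share inherits the project mount point, the export path of a per-host share can be derived from the host name. The sketch below assumes the project mount point /export/IAM set earlier and the hostlocalConfig naming pattern used in Table 7-5; confirm the actual export path in the BUI before mounting anything. The function name is hypothetical.

```shell
# Sketch: derive the NFS export path of the private configuration share
# for a host, assuming shares inherit the /export/IAM project mount point.
local_config_export() {
  local host_lower
  host_lower=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  printf '/export/IAM/%slocalConfig\n' "$host_lower"
}

for host in OAMHOST1 OAMHOST2 OIMHOST1 OIMHOST2; do
  printf '%s uses %s\n' "$host" "$(local_config_export "$host")"
done
```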

7.5.3.5 Allowing Local Root Access to Shares

If you want to run commands or traverse directories on the share as the root user, you must add an NFS exception to allow you to do so. You can create exceptions at either the individual share level or the project level.

To keep things simple, in this example you create the exception at the project level.

To create an exception for NFS at the project level:

- In the Browser User Interface (BUI), access the Projects user interface by clicking Configuration, STORAGE, Shares, and then Projects.

  The Project Panel appears.

- On the Project Panel, click Edit next to the project IAM.

- Select the Protocols tab.

- Click the + sign next to NFS exceptions.

- Select Type: network.

- In the Entity field, enter the IP address of the compute node as it appears on the Storage Network (bond0) in CIDR format. For example:

  192.168.10.3/19

- Set Access Mode to Read/Write and check Root Access.

- Click Apply.

- Repeat for each compute node that accesses the ZFS appliance.
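Before typing an address into the Entity field, you can check that it has the expected CIDR shape. The following sketch is a convenience check only and is not part of the appliance tooling; it verifies the a.b.c.d/prefix form and a prefix length of 0 to 32, but does not range-check the individual octets.

```shell
# Sketch: succeed if the argument looks like IPv4 CIDR notation.
# Checks the a.b.c.d/prefix shape and a prefix length between 0 and 32.
is_ipv4_cidr() {
  printf '%s\n' "$1" |
    grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}/([0-9]|[12][0-9]|3[0-2])$'
}

# Example:
#   is_ipv4_cidr 192.168.10.3/19 && echo "looks like CIDR notation"
```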