Use Case: Configuring Oracle Solaris ZFS Snapshot Replication With One Partner Cluster as Zone Cluster

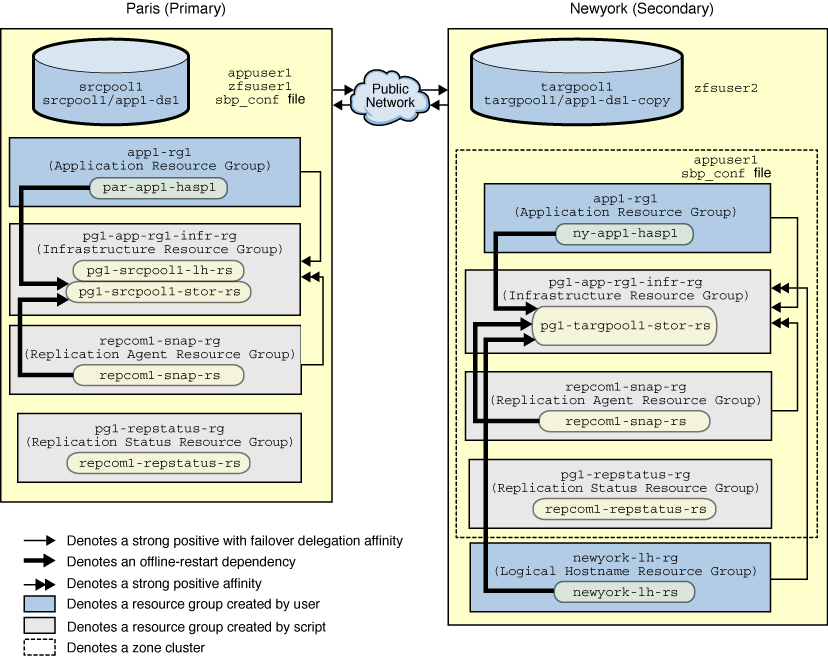

This example shows how to set up a protection group that uses Oracle Solaris ZFS snapshot replication to protect and manage an application and its ZFS datasets when one partner cluster is a zone cluster. It assumes that the application resource group and the application user are already set up, that the primary cluster paris is a global cluster, and that the secondary cluster newyork is a zone cluster.

When a partner cluster is a zone cluster, run the commands that set up the logical hostname, the private strings, and the ZFS permissions in the global zone of the nodes that host the zone cluster, but run all Geographic Edition commands from within the zone cluster. If either partner cluster is a zone cluster, you must create the private strings that store the SSH passphrases on both partners. You must also create the logical hostname resource and resource group in the global zone of the zone cluster partner. The following figure shows the resource group and resource setup for Oracle Solaris ZFS snapshot replication with one partner cluster as a zone cluster.

Figure 3 Example Resource Group and Resource Setup With One Partner Cluster as Global Zone and Other Partner Cluster as Zone Cluster

Perform the following actions to configure the Oracle Solaris ZFS snapshot replication:

- Decide which hostname to use on each cluster as the replication logical hostname. In this example, the logical hostname on the paris cluster is paris-lh and the logical hostname on the newyork cluster is newyork-lh.

Add an entry for paris-lh to the /etc/hosts file on both nodes of the paris cluster. Similarly, add an entry for newyork-lh to the /etc/hosts file in the global zone of both nodes of the newyork cluster.
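Adding the same entry on several nodes by hand is easy to get wrong, so the following is a minimal sketch of an idempotent helper. The function name add_host_entry and the address 192.0.2.10 (a documentation-range placeholder) are hypothetical; substitute the real IP address you assigned to each logical hostname. The hosts file path is a parameter so you can try the helper on a scratch copy before touching /etc/hosts.

```shell
#!/bin/sh
# add_host_entry HOSTS_FILE IP HOSTNAME   (hypothetical helper)
# Appends "IP<TAB>HOSTNAME" to HOSTS_FILE unless HOSTNAME already appears
# as a name field on a non-comment line, so re-running it is harmless.
add_host_entry() {
    hosts_file=$1
    ip=$2      # placeholder address in the example below; use your real one
    name=$3    # e.g. paris-lh or newyork-lh
    if [ -f "$hosts_file" ] && awk -v n="$name" '
            /^[ \t]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$hosts_file"; then
        echo "$name already present in $hosts_file"
        return 0
    fi
    printf '%s\t%s\n' "$ip" "$name" >> "$hosts_file"
    echo "added $name to $hosts_file"
}

# Example (as root on each paris node; hypothetical address):
#   add_host_entry /etc/hosts 192.0.2.10 paris-lh
```

On the newyork side, run the same call in the global zone of each node with newyork-lh and its address.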

- Create the replication user and set up SSH. For details, see Use Case: Setting Up Replication User and SSH.

- Grant ZFS permissions to the replication user on both clusters. The ZFS storage pool must be imported before you can grant permissions. On each cluster, log in as the root user on the cluster node where the zpool is imported: if the application's HAStoragePlus resource is online, use the node where it is online; if the ZFS storage pool is not imported on any cluster node, import it on any node and run the ZFS commands on that node.

Type the following commands in the global zone of the paris cluster node where srcpool1 is imported:

    # /sbin/zfs allow zfsuser1 create,destroy,hold,mount,receive,release,send,rollback,snapshot \
    srcpool1/app1-ds1
    # /sbin/zfs allow srcpool1/app1-ds1
    ---- Permissions on srcpool1/app1-ds1 ---------------------------------
    Local+Descendent permissions:
            user zfsuser1 create,destroy,hold,mount,receive,release,rollback,send,snapshot
    #

Type the following commands in the global zone of the newyork cluster node where the targpool1 zpool is imported:

    # /sbin/zfs allow zfsuser2 create,destroy,hold,mount,receive,release,send,rollback,snapshot \
    targpool1/app1-ds1-copy
    # /sbin/zfs allow targpool1/app1-ds1-copy
    ---- Permissions on targpool1/app1-ds1-copy ---------------------------------
    Local+Descendent permissions:
            user zfsuser2 create,destroy,hold,mount,receive,release,rollback,send,snapshot
    #

The replication user on each cluster must have the Local+Descendent ZFS permissions shown above on the ZFS dataset used on that cluster.
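To confirm the grants without reading the listing by eye, the following sketch checks captured `zfs allow DATASET` output for every permission this procedure grants. The function has_zfs_perms is hypothetical; it assumes the output layout shown above (a "Local+Descendent permissions:" header followed by an indented "user NAME perm,perm,..." line) and only parses text, so it does not account for permissions inherited through groups or "everyone".

```shell
#!/bin/sh
# Permissions this procedure grants to the replication user.
REQUIRED_PERMS="create destroy hold mount receive release rollback send snapshot"

# has_zfs_perms USER   (hypothetical; reads `zfs allow DATASET` output on stdin)
# Succeeds only if USER has every permission in REQUIRED_PERMS in the
# "Local+Descendent permissions:" section; prints any missing ones to stderr.
has_zfs_perms() {
    user=$1
    # Join the permission lists of matching "user USER ..." lines with commas.
    granted=$(awk -v u="$user" '
        /^Local[+]Descendent permissions:/ { in_ld = 1; next }
        /^[^ \t]/                          { in_ld = 0 }
        in_ld && $1 == "user" && $2 == u   { printf "%s,", $3 }')
    missing=""
    for p in $REQUIRED_PERMS; do
        case ",$granted," in
            *",$p,"*) ;;                 # permission present
            *) missing="$missing $p" ;;  # permission absent
        esac
    done
    if [ -n "$missing" ]; then
        echo "$user is missing:$missing" >&2
        return 1
    fi
    return 0
}

# Example, on the paris node where srcpool1 is imported:
#   /sbin/zfs allow srcpool1/app1-ds1 | has_zfs_perms zfsuser1
```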

- Because newyork is a zone cluster, create an Oracle Solaris Cluster private string in the global zone of each partner to store the SSH passphrase of that partner's replication user. The private string object name must use the format zonename:replication-component:local_service_passphrase.

Because the paris cluster is not a zone cluster, you must use global as the zonename. Type the following command on any one node of the paris cluster to create the private string for zfsuser1, and enter the SSH passphrase for zfsuser1 at the prompt:

    # /usr/cluster/bin/clpstring create -b global:repcom1:local_service_passphrase \
    global:repcom1:local_service_passphrase
    Enter string value:
    Enter string value again:

In this example, the zone cluster is named newyork. Because a zone cluster's name is always the same as its zone name, you must use newyork as the zonename. Type the following command in the global zone of any one node that hosts the newyork zone cluster to create the private string for zfsuser2, and enter the SSH passphrase for zfsuser2 at the prompt:

    # /usr/cluster/bin/clpstring create -b newyork:repcom1:local_service_passphrase \
    newyork:repcom1:local_service_passphrase
    Enter string value:
    Enter string value again:
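The only difference between the two commands above is the zonename field. The following sketch builds the object name from the documented format so the global-versus-zone-cluster choice is explicit. The function pstring_name is hypothetical, and its refusal of names containing ":" is this sketch's own guard, based only on ":" being the field separator.

```shell
#!/bin/sh
# pstring_name ZONENAME REPCOMP   (hypothetical helper)
# Prints ZONENAME:REPCOMP:local_service_passphrase.
# ZONENAME is "global" for a global cluster partner, or the zone cluster
# name (which equals its zone name) for a zone cluster partner.
pstring_name() {
    if [ $# -ne 2 ] || [ -z "$1" ] || [ -z "$2" ]; then
        echo "usage: pstring_name ZONENAME REPCOMP" >&2
        return 2
    fi
    case "$1$2" in
        *:*) echo "names must not contain ':'" >&2; return 2 ;;
    esac
    printf '%s:%s:local_service_passphrase\n' "$1" "$2"
}

# Example: print (not run) the command for the newyork partner.
#   name=$(pstring_name newyork repcom1)
#   echo /usr/cluster/bin/clpstring create -b "$name" "$name"
```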

- This example uses the file /var/tmp/geo/zfs_snapshot/sbp_conf on both clusters as the script-based plugin configuration file, which specifies the node list and evaluation rule for each replication component. Add the following entry to /var/tmp/geo/zfs_snapshot/sbp_conf on each node of the paris cluster:

    repcom1|any|paris-node-1,paris-node-2

Add the following entry to /var/tmp/geo/zfs_snapshot/sbp_conf in each zone of the newyork zone cluster:

    repcom1|any|newyork-node-1,newyork-node-2
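Because the entry must be identical on every node of a cluster, a small helper that writes it the same way each time can help. This is a minimal sketch: set_sbp_entry is a hypothetical name, and it assumes only the three-field layout shown above (component, evaluation rule, comma-separated node list). It replaces any existing line for the same component instead of appending a duplicate.

```shell
#!/bin/sh
# set_sbp_entry FILE REPCOMP RULE NODE...   (hypothetical helper)
# Writes one "REPCOMP|RULE|node1,node2,..." line to FILE, replacing any
# existing line whose first field is REPCOMP.
set_sbp_entry() {
    if [ $# -lt 4 ]; then
        echo "usage: set_sbp_entry FILE REPCOMP RULE NODE..." >&2
        return 2
    fi
    file=$1; comp=$2; rule=$3
    shift 3

    # Join the remaining arguments (node names) with commas.
    nodes=$1; shift
    for n in "$@"; do nodes="$nodes,$n"; done

    mkdir -p "$(dirname "$file")" || return 1
    tmp="$file.tmp.$$"
    if [ -f "$file" ]; then
        # Keep every line that belongs to a different component.
        awk -F'|' -v c="$comp" '$1 != c' "$file" > "$tmp" || return 1
    else
        : > "$tmp"
    fi
    printf '%s|%s|%s\n' "$comp" "$rule" "$nodes" >> "$tmp"
    mv "$tmp" "$file"
}

# Example, in each zone of the newyork zone cluster:
#   set_sbp_entry /var/tmp/geo/zfs_snapshot/sbp_conf repcom1 any newyork-node-1 newyork-node-2
```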

- On one node of the primary cluster paris, copy /opt/ORCLscgrepzfssnap/etc/zfs_snap_geo_config to create the parameters file /var/tmp/geo/zfs_snapshot/repcom1_conf for the replication component. Then set the configuration parameters in that file:

    PS=paris-newyork
    PG=pg1
    REPCOMP=repcom1
    REPRS=repcom1-repstatus-rs
    REPRG=pg1-repstatus-rg
    DESC="Protect app1-rg1 using ZFS snapshot replication"
    APPRG=app1-rg1
    CONFIGFILE=/var/tmp/geo/zfs_snapshot/sbp_conf
    LOCAL_REP_USER=zfsuser1
    REMOTE_REP_USER=zfsuser2
    LOCAL_PRIV_KEY_FILE=
    REMOTE_PRIV_KEY_FILE=/export/home/zfsuser2/.ssh/zfsrep1
    LOCAL_ZPOOL_RS=par-app1-hasp1
    REMOTE_ZPOOL_RS=ny-app1-hasp1
    LOCAL_LH=paris-lh
    REMOTE_LH=newyork-lh
    LOCAL_DATASET=srcpool1/app1-ds1
    REMOTE_DATASET=targpool1/app1-ds1-copy
    REPLICATION_INTERVAL=120
    NUM_OF_SNAPSHOTS_TO_STORE=2
    REPLICATION_STREAM_PACKAGE=false
    SEND_PROPERTIES=true
    INTERMEDIARY_SNAPSHOTS=false
    RECURSIVE=true
    MODIFY_PASSPHRASE=false
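Before running the registration script, it can be worth checking that the parameters you intended to set are actually present and non-empty. The following is a minimal sketch: check_rep_params is hypothetical, and the key list in the example is an assumption chosen from the values above, not a list of keys the product requires. LOCAL_PRIV_KEY_FILE is deliberately left out because it is empty in this example.

```shell
#!/bin/sh
# check_rep_params FILE KEY...   (hypothetical helper)
# Reports each KEY that is missing from FILE or has an empty value.
# Lines are expected in the KEY=value form used by the parameters file.
check_rep_params() {
    file=$1; shift
    [ -r "$file" ] || { echo "cannot read $file" >&2; return 2; }
    rc=0
    for key in "$@"; do
        # Use the last KEY= assignment; commented-out lines do not match.
        line=$(grep "^[[:space:]]*$key=" "$file" | tail -n 1)
        value=${line#*=}
        # Strip one pair of surrounding double quotes, as in DESC="...".
        value=${value#\"}; value=${value%\"}
        if [ -z "$line" ]; then
            echo "missing: $key" >&2; rc=1
        elif [ -z "$value" ]; then
            echo "empty:   $key" >&2; rc=1
        fi
    done
    return $rc
}

# Example (assumed key list):
#   check_rep_params /var/tmp/geo/zfs_snapshot/repcom1_conf \
#       PS PG REPCOMP APPRG CONFIGFILE LOCAL_REP_USER REMOTE_REP_USER \
#       LOCAL_LH REMOTE_LH LOCAL_DATASET REMOTE_DATASET
```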

- On the same node of the primary cluster paris that you used in the previous step, run the zfs_snap_geo_register script, passing the replication configuration file as its parameter:

    $ /opt/ORCLscgrepzfssnap/util/zfs_snap_geo_register -f /var/tmp/geo/zfs_snapshot/repcom1_conf

The zfs_snap_geo_register script creates the following components on the primary cluster, as shown in Figure 3, Example Resource Group and Resource Setup With One Partner Cluster as Global Zone and Other Partner Cluster as Zone Cluster:

  - Protection group pg1
  - Replication component repcom1
  - Infrastructure resource group pg1-app1-rg1-infr-rg and its resources
  - Replication resource group repcom1-snap-rg, which contains the resource repcom1-snap-rs
  - Replication status resource group pg1-repstatus-rg and replication status resource repcom1-repstatus-rs

- On any node of the paris cluster, verify that the protection group and replication component were created successfully:

    # /usr/cluster/bin/geopg show pg1
    # /usr/cluster/bin/geopg status pg1

- In any one zone of the newyork zone cluster, retrieve the protection group that was created on the primary cluster. You must type the following command in the zone cluster:

    # /usr/cluster/bin/geopg get -s paris-newyork pg1

This command creates the resource groups and resources on the secondary cluster, the newyork zone cluster, as shown in Figure 3, Example Resource Group and Resource Setup With One Partner Cluster as Global Zone and Other Partner Cluster as Zone Cluster.

- Configure a logical hostname resource and resource group in the global zone of the zone cluster partner newyork to host the replication hostname.

In this example, the zone cluster partner is newyork and its replication hostname is newyork-lh. The infrastructure resource group created automatically in the newyork zone cluster is pg1-app1-rg1-infr-rg, and the storage resource in that infrastructure resource group is pg1-targpool1-stor-rs. Type the following commands in the global zone of any one node that hosts the newyork zone cluster:

    # clrg create newyork-lh-rg
    # clrslh create -g newyork-lh-rg -h newyork-lh newyork-lh-rs
    # clrg manage newyork-lh-rg
    # clrg set -p RG_affinities=++newyork:pg1-app1-rg1-infr-rg newyork-lh-rg
    (C538594) WARNING: resource group global:newyork-lh-rg has a strong positive affinity on resource group newyork:pg1-app1-rg1-infr-rg with Auto_start_on_new_cluster=FALSE; global:newyork-lh-rg will be forced to remain offline until its strong affinities are satisfied.
    # clrs set -p Resource_dependencies_offline_restart=newyork:pg1-targpool1-stor-rs newyork-lh-rs
    # clrg show -p Auto_start_on_new_cluster newyork-lh-rg

    === Resource Groups and Resources ===

    Resource Group:                   newyork-lh-rg
      Auto_start_on_new_cluster:        True

If the Auto_start_on_new_cluster property is not set to True, type the following command:

    # clrg set -p Auto_start_on_new_cluster=True newyork-lh-rg
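The commands in this step follow a fixed pattern: only the zone cluster name, the logical hostname, the infrastructure resource group, and the storage resource vary. The following sketch prints (it does not run) that command sequence for review, and parses `clrg show -p Auto_start_on_new_cluster` output to decide whether the follow-up `clrg set` is needed. The function names print_lh_setup and autostart_is_true are hypothetical, and deriving the resource group and resource names as LH-rg and LH-rs only mirrors this example's naming.

```shell
#!/bin/sh
# print_lh_setup ZC_NAME LH_NAME INFR_RG STOR_RS   (hypothetical helper)
# Prints the global-zone commands used in this step; review them, then run
# them yourself. Names LH_NAME-rg / LH_NAME-rs follow this example only.
print_lh_setup() {
    zc=$1; lh=$2; infr_rg=$3; stor_rs=$4
    rg="$lh-rg"; rs="$lh-rs"
    echo "clrg create $rg"
    echo "clrslh create -g $rg -h $lh $rs"
    echo "clrg manage $rg"
    # "++" requests a strong positive affinity on the zone cluster's
    # infrastructure resource group (zone-cluster:rg-name reference).
    echo "clrg set -p RG_affinities=++$zc:$infr_rg $rg"
    # Offline-restart dependency on the zone cluster's storage resource.
    echo "clrs set -p Resource_dependencies_offline_restart=$zc:$stor_rs $rs"
}

# autostart_is_true   (hypothetical; reads `clrg show -p ...` output on stdin)
# Succeeds if the Auto_start_on_new_cluster value is True (any case).
autostart_is_true() {
    awk '$1 == "Auto_start_on_new_cluster:" { v = tolower($2) }
         END { exit !(v == "true") }'
}

# Example (set INFR_RG and STOR_RS to the names in your zone cluster):
#   print_lh_setup newyork newyork-lh "$INFR_RG" "$STOR_RS"
#   clrg show -p Auto_start_on_new_cluster newyork-lh-rg | autostart_is_true ||
#       clrg set -p Auto_start_on_new_cluster=True newyork-lh-rg
```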

- From any zone of the newyork zone cluster, verify that the protection group and replication components are available, and that the protection group synchronization status between the paris and newyork clusters is OK. You must type the following commands in the zone cluster:

    $ /usr/cluster/bin/geopg show pg1
    $ /usr/cluster/bin/geopg status pg1

Similarly, check the status from any one node of the paris cluster:

    $ /usr/cluster/bin/geopg show pg1
    $ /usr/cluster/bin/geopg status pg1

- From any node of the paris cluster or any zone of the newyork zone cluster, activate the protection group to start Oracle Solaris ZFS snapshot replication:

    $ /usr/cluster/bin/geopg start -e global pg1

- To confirm that the protection group started on both clusters, type the following command from one node of either partner cluster:

    # geopg status pg1