2.3 Underflow

Underflow occurs, roughly speaking, when the result of an arithmetic operation is so small that it cannot be stored in its intended destination format without suffering a rounding error that is larger than usual.

2.3.1 Underflow Thresholds

Table 11 shows the underflow thresholds for single, double, and double-extended precision.


The positive subnormal numbers are those numbers between the smallest normal number and zero. Subtracting two (positive) tiny numbers that are near the smallest normal number might produce a subnormal number. Likewise, dividing the smallest positive normal number by two produces a subnormal result.
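The thresholds and the halving example above can be checked on a given platform with a short C sketch. It assumes a C99 compiler with IEEE 754 `double` arithmetic; the helper names `is_subnormal` and `print_underflow_thresholds` are invented for illustration, and the value printed for `long double` depends on the platform's extended format.

```c
#include <float.h>
#include <math.h>
#include <stdio.h>

/* Returns 1 if v is a subnormal double, 0 otherwise (hypothetical helper). */
int is_subnormal(double v)
{
    return fpclassify(v) == FP_SUBNORMAL;
}

/* Prints the smallest positive normal number of each format, then
   classifies the result of halving the smallest normal double. */
void print_underflow_thresholds(void)
{
    printf("single:      %.8e\n", (double)FLT_MIN);
    printf("double:      %.16e\n", DBL_MIN);
    printf("long double: %.20Le\n", LDBL_MIN);
    printf("DBL_MIN / 2 is %s\n",
           is_subnormal(DBL_MIN / 2.0) ? "subnormal" : "not subnormal");
}
```

Calling `print_underflow_thresholds()` from `main` lists the thresholds; `is_subnormal` can be applied to any intermediate result to see whether it fell below the smallest normal number.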

The presence of subnormal numbers provides greater precision to floating-point calculations that involve small numbers, although the subnormal numbers themselves have fewer bits of precision than normal numbers. Producing subnormal numbers (rather than returning the answer zero) when the mathematically correct result has magnitude less than the smallest positive normal number is known as gradual underflow.

There are several other ways to deal with such underflow results. One way, common in the past, was to flush those results to zero. This method is known as abrupt underflow and was the default on most mainframes before the advent of the IEEE Standard.

The mathematicians and computer designers who drafted IEEE Standard 754 considered several alternatives while balancing the desire for a mathematically robust solution with the need to create a standard that could be implemented efficiently.

2.3.2 How Does IEEE Arithmetic Treat Underflow?

IEEE Standard 754 chooses gradual underflow as the preferred method for dealing with underflow results. This method amounts to defining two representations for stored values, normal and subnormal.

Recall that the IEEE format for a normal floating-point number is:

$(-1)^s \times 2^{(e - \text{bias})} \times 1.f$

where s is the sign bit, e is the biased exponent, and f is the fraction. Only s, e, and f need to be stored to fully specify the number. Because the implicit leading bit of the significand is defined to be 1 for normal numbers, it need not be stored.

The smallest positive normal number that can be stored, then, has the negative exponent of greatest magnitude and a fraction of all zeros. Even smaller numbers can be accommodated by considering the leading bit to be zero rather than one. In the double-precision format, this effectively extends the smallest representable magnitude from about $10^{-308}$ to about $10^{-324}$, because the fraction part is 52 bits long (roughly 16 decimal digits). These are the subnormal numbers; returning a subnormal number, rather than flushing an underflowed result to zero, is gradual underflow.

Clearly, the smaller a subnormal number, the fewer nonzero bits in its fraction; computations producing subnormal results do not enjoy the same bounds on relative round-off error as computations on normal operands. However, the key fact about gradual underflow is that its use implies the following:

Underflowed results need never suffer a loss of accuracy any greater than that which results from ordinary round-off error.

Addition, subtraction, comparison, and remainder are always exact when the result is very small.

Recall that the IEEE format for a subnormal floating-point number is:

$(-1)^s \times 2^{(-\text{bias} + 1)} \times 0.f$

where s is the sign bit, the biased exponent e is zero, and f is the fraction. Note that the implicit power-of-two exponent, –bias + 1, is one greater than the value e – bias that the normal format would give for e = 0, so it equals the exponent of the smallest normal number. The implicit leading bit of the significand is zero.
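The two formulas can be exercised together by unpacking the stored fields of a double and rebuilding its value. The following is a minimal sketch, assuming the 64-bit IEEE double layout (1 sign bit, 11 exponent bits, 52 fraction bits, bias 1023) and a finite input. The function name `rebuild_from_fields` is hypothetical.

```c
#include <math.h>
#include <stdint.h>
#include <string.h>

/* Rebuilds a finite double from its stored fields s, e, and f.
   Assumes the 64-bit IEEE double layout; infinities and NaNs
   (e == 2047) are not handled. */
double rebuild_from_fields(double x)
{
    uint64_t bits;
    memcpy(&bits, &x, sizeof bits);              /* well-defined way to read the bits */

    int s = (int)(bits >> 63);                   /* sign bit */
    int e = (int)((bits >> 52) & 0x7FF);         /* biased exponent, 11 bits */
    uint64_t f = bits & 0xFFFFFFFFFFFFFULL;      /* fraction, 52 bits */
    const int bias = 1023;

    double frac = (double)f / 0x1p52;            /* the value 0.f */
    double mag = (e == 0)
        ? ldexp(frac, 1 - bias)                  /* subnormal: 2^(-bias+1) * 0.f */
        : ldexp(1.0 + frac, e - bias);           /* normal:    2^(e-bias)  * 1.f */
    return s ? -mag : mag;
}
```

For any finite double, the rebuilt value should compare equal to the input, which confirms that the subnormal branch uses the same power of two as the smallest normal number.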

Gradual underflow allows you to extend the lower range of representable numbers. It is not smallness that renders a value questionable, but its associated error. Algorithms that exploit subnormal numbers have smaller error bounds than the same algorithms on systems without them. The next section provides some mathematical justification for gradual underflow.

2.3.3 Why Gradual Underflow?

The purpose of subnormal numbers is not to avoid underflow/overflow entirely, as some other arithmetic models do. Rather, subnormal numbers eliminate underflow as a cause for concern for a variety of computations, typically, multiply followed by add. For a more detailed discussion, see "Underflow and the Reliability of Numerical Software" by James Demmel and "Combating the Effects of Underflow and Overflow in Determining Real Roots of Polynomials" by S. Linnainmaa.

The presence of subnormal numbers in the arithmetic means that untrapped underflow, which implies loss of accuracy, cannot occur on addition or subtraction. If x and y are within a factor of two of each other, then x – y is error-free. This property is critical to a number of algorithms that effectively increase the working precision at key places.
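The exact-subtraction property can be probed with a small C sketch. It assumes IEEE double arithmetic with gradual underflow enabled (the default on most current platforms) and positive operands. Both function names are hypothetical. Adding y back to the difference is a consistency check rather than a proof: if x – y is exact, the sum must reproduce x.

```c
/* Returns 1 when positive x and y are within a factor of two of each other. */
int within_factor_of_two(double x, double y)
{
    return x <= 2.0 * y && y <= 2.0 * x;
}

/* Computes d = x - y, then checks that d + y gives back x.
   The volatile qualifiers force each result to be rounded to double. */
int subtraction_round_trips(double x, double y)
{
    volatile double d = x - y;
    volatile double back = d + y;
    return back == x;
}
```

For example, with x equal to 1.5 times the smallest normal double and y equal to the smallest normal double, the difference is a subnormal number and the round trip still succeeds.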

In addition, gradual underflow means that errors due to underflow are no worse than usual round-off error. This is a much stronger statement than can be made about any other method of handling underflow, and this fact is one of the best justifications for gradual underflow.

2.3.4 Error Properties of Gradual Underflow

Most of the time, floating-point results are rounded:

computed result = true result + round-off

One convenient measure of how large the round-off can be is called a unit in the last place, abbreviated ulp. The least significant bit of the fraction of a floating-point number in its standard representation is its last place. The value represented by this bit (that is, the absolute difference between the two numbers whose representations are identical except for this bit) is a unit in the last place of that number. If the computed result is obtained by rounding the true result to the nearest representable number, then clearly the round-off error is no larger than half a unit in the last place of the computed result. In other words, in IEEE arithmetic with the rounding mode set to round-to-nearest, the round-off in the computed result satisfies the following:

0 ≤ |round-off| ≤ ½ ulp

Note that an ulp is a relative quantity. An ulp of a very large number is itself very large, while an ulp of a tiny number is itself tiny. This relationship can be made explicit by expressing an ulp as a function: ulp(x) denotes a unit in the last place of the floating-point number x.

Moreover, an ulp of a floating-point number depends on the precision to which that number is represented. For example, Table 12 shows the values of ulp(1) in each of the four floating-point formats described above:

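The value of ulp(1) for a given format can also be estimated directly. The sketch below assumes round-to-nearest mode and that `float` and `double` expressions are evaluated in their own precision (as on typical x86-64 and SPARC compilers). It halves a candidate until adding half of it to 1 no longer changes 1. The function names are hypothetical.

```c
/* Estimates ulp(1) in double precision. */
double ulp_of_one_double(void)
{
    double eps = 1.0;
    for (;;) {
        volatile double next = 1.0 + eps / 2.0;  /* volatile forces rounding to double */
        if (next == 1.0)
            break;                               /* eps / 2 is lost in rounding */
        eps /= 2.0;
    }
    return eps;
}

/* Estimates ulp(1) in single precision. */
float ulp_of_one_float(void)
{
    float eps = 1.0f;
    for (;;) {
        volatile float next = 1.0f + eps / 2.0f; /* volatile forces rounding to float */
        if (next == 1.0f)
            break;
        eps /= 2.0f;
    }
    return eps;
}
```

On an IEEE system the results should agree with `DBL_EPSILON` and `FLT_EPSILON` from `<float.h>`, which are defined as the gap between 1 and the next larger representable number.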

Recall that only a finite set of numbers can be exactly represented in any computer arithmetic. As the magnitudes of numbers get smaller and approach zero, the gap between neighboring representable numbers narrows. Conversely, as the magnitude of numbers gets larger, the gap between neighboring representable numbers widens.

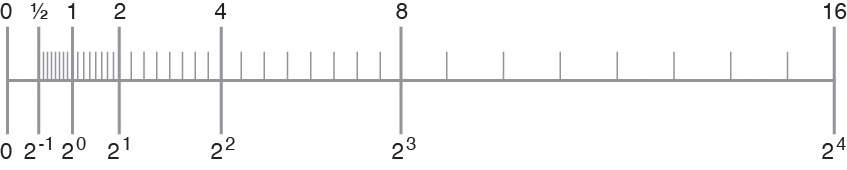

For example, imagine you are using a binary arithmetic that has only 3 bits of precision. Then, between any two powers of 2, there are 23 = 8 representable numbers, as shown in the following figure.

Figure 6 Number Line

The number line shows how the gap between numbers doubles from one exponent to the next.

In the IEEE single format, the difference in magnitude between the two smallest positive subnormal numbers is approximately $10^{-45}$, whereas the difference in magnitude between the two largest finite numbers is approximately $10^{31}$!

In Table 13, nextafter(x,+∞) denotes the next representable number after x as you move along the number line towards +∞.


Any conventional set of representable floating-point numbers has the property that the worst effect of one inexact result is to introduce an error no worse than the distance to one of the representable neighbors of the computed result. When subnormal numbers are added to the representable set and gradual underflow is implemented, the worst effect of one inexact or underflowed result is to introduce an error no greater than the distance to one of the representable neighbors of the computed result.

In particular, in the region between zero and the smallest normal number, the distance between any two neighboring numbers equals the distance between zero and the smallest subnormal number. The presence of subnormal numbers eliminates the possibility of introducing a round-off error that is greater than the distance to the nearest representable number.

Because no calculation incurs round-off error greater than the distance to any of the representable neighbors of the computed result, many important properties of a robust arithmetic environment hold, including these three:

x ≠ y if and only if x – y ≠ 0

(x – y) + y ≈ x, to within a rounding error in the larger of x and y

1/(1/x) ≈ x, when x is a normalized number, implying 1/x ≠ 0 even for the largest normalized x

An alternative underflow scheme is abrupt underflow, which flushes underflow results to zero. Abrupt underflow violates the first and second properties whenever x – y underflows. Also, abrupt underflow violates the third property whenever 1/x underflows.
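The violation of the first two properties can be illustrated by simulating abrupt underflow in software. The sketch below assumes IEEE double arithmetic with gradual underflow as the hardware default. The helper `flush_to_zero` is hypothetical and only imitates a flush-to-zero mode by replacing subnormal results with zero; real hardware modes may differ in detail.

```c
#include <math.h>

/* Simulated abrupt underflow: a subnormal result is replaced by zero.
   Hypothetical helper, not a hardware mode. */
static double flush_to_zero(double v)
{
    return (fpclassify(v) == FP_SUBNORMAL) ? 0.0 : v;
}

/* x - y with gradual underflow (the IEEE default). */
double diff_gradual(double x, double y)
{
    return x - y;
}

/* x - y with simulated abrupt underflow. */
double diff_abrupt(double x, double y)
{
    return flush_to_zero(x - y);
}
```

With x equal to 1.25 times the smallest normal double and y equal to the smallest normal double, `diff_gradual` returns a nonzero subnormal number and adding y back recovers x. `diff_abrupt` returns zero even though x ≠ y, and adding y back gives y instead of x.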

Let λ represent the smallest positive normalized number, which is also known as the underflow threshold. Then the error properties of gradual underflow and abrupt underflow can be compared in terms of λ.

gradual underflow: |error| < ½ ulp in λ

abrupt underflow: |error| ≈ λ

There is a significant difference between half a unit in the last place of λ, and λ itself.

2.3.5 Two Examples of Gradual Underflow Versus Abrupt Underflow

The following are two well-known mathematical examples. The first example is code that computes an inner product.

```c
sum = 0;
for (i = 0; i < n; i++) {
    sum = sum + a[i] * y[i];
}
return sum;
```

With gradual underflow, the result is as accurate as round-off allows. With abrupt underflow, a small but nonzero sum could be delivered that looks plausible but is far less accurate. It must be admitted, however, that to avoid just these sorts of problems, clever programmers scale their calculations if they are able to anticipate where minuteness might degrade accuracy.
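The difference between the two behaviors can be made concrete with a small experiment. The following sketch assumes IEEE double arithmetic and simulates abrupt underflow in software with a hypothetical `flush_to_zero` helper. The function names, the `abrupt` flag, and the sample data in `demo_sum` are all invented for illustration.

```c
#include <math.h>
#include <stddef.h>

/* Simulated abrupt underflow: a subnormal value is replaced by zero.
   Hypothetical helper, not a hardware mode. */
static double flush_to_zero(double v)
{
    return (fpclassify(v) == FP_SUBNORMAL) ? 0.0 : v;
}

/* Inner product of a and y. When abrupt is nonzero, every product
   and every partial sum is flushed as abrupt underflow would do. */
double inner_product(const double *a, const double *y, size_t n, int abrupt)
{
    double sum = 0.0;
    for (size_t i = 0; i < n; i++) {
        double term = a[i] * y[i];
        if (abrupt)
            term = flush_to_zero(term);
        sum = sum + term;
        if (abrupt)
            sum = flush_to_zero(sum);
    }
    return sum;
}

/* Sample data: the first product equals the smallest normal double,
   and each of the other four equals 0.75 times that value. */
double demo_sum(int abrupt)
{
    const double a[5] = { 0x1p-511, 0x1p-511, 0x1p-511, 0x1p-511, 0x1p-511 };
    const double y[5] = { 0x1p-511, 0x1.8p-512, 0x1.8p-512, 0x1.8p-512, 0x1.8p-512 };
    return inner_product(a, y, 5, abrupt);
}
```

With this data the exact sum is four times the smallest normal number, which `demo_sum(0)` returns. `demo_sum(1)` returns only the smallest normal number: a nonzero, plausible-looking value that is off by a factor of four.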

The second example, deriving a complex quotient, is not amenable to scaling:

$$a + i \cdot b = \frac{p + i \cdot q}{r + i \cdot s}, \quad \text{assuming } |r / s| \le 1$$

$$= \frac{(p \cdot (r / s) + q) + i\,(q \cdot (r / s) - p)}{s + r \cdot (r / s)}$$

It can be shown that, despite round-off, the computed complex result differs from the exact result by no more than what would have been the exact result if p + i • q and r + i • s each had been perturbed by no more than a few ulps. This error analysis holds in the face of underflows, except that when both a and b underflow, the error is bounded by a few ulps of |a + i • b|. Neither conclusion is true when underflows are flushed to zero.

This algorithm for computing a complex quotient is robust, and amenable to error analysis, in the presence of gradual underflow. A similarly robust, easily analyzed, and efficient algorithm for computing the complex quotient in the face of abrupt underflow does not exist. In abrupt underflow, the burden of worrying about low-level, complicated details shifts from the implementer of the floating-point environment to its users.
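The quotient formula can be transcribed into C as follows. This is a sketch, not a library routine. The type `cplx` and the function name `complex_quotient` are hypothetical, and the second branch (for |s / r| < 1) is an assumed mirror image of the formula in the text, obtained by dividing numerator and denominator by r instead of s.

```c
#include <math.h>

/* Hypothetical helper type for a complex value re + i*im. */
typedef struct { double re, im; } cplx;

/* Computes (p + i*q) / (r + i*s) by scaling with the ratio of the
   smaller-magnitude divisor component to the larger one.
   If r and s are both zero, the result contains NaNs or infinities. */
cplx complex_quotient(double p, double q, double r, double s)
{
    cplx z;
    if (fabs(r) <= fabs(s)) {
        /* Case given in the text: |r / s| <= 1 */
        double t = r / s;
        double d = s + r * t;
        z.re = (p * t + q) / d;
        z.im = (q * t - p) / d;
    } else {
        /* Assumed mirror case: |s / r| < 1 */
        double t = s / r;
        double d = r + s * t;
        z.re = (p + q * t) / d;
        z.im = (q - p * t) / d;
    }
    return z;
}
```

For instance, `complex_quotient(1, 2, 3, 4)` takes the first branch and should give approximately 0.44 + 0.08i, while `complex_quotient(1, 2, 4, 3)` takes the second branch and should give approximately 0.4 + 0.2i.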

The class of problems that succeed in the presence of gradual underflow, but fail with abrupt underflow, is larger than the users of abrupt underflow might realize. Many of the frequently used numerical techniques fall in this class, such as the following:

Linear equation solving

Polynomial equation solving

Numerical integration

Convergence acceleration

Complex division

2.3.6 Does Underflow Matter?

Despite these examples, it can be argued that underflow rarely matters, and so, why bother? However, this argument turns upon itself.

In the absence of gradual underflow, user programs need to be sensitive to the implicit inaccuracy threshold. For example, in single precision, if underflow occurs in some parts of a calculation, and abrupt underflow is used to replace underflowed results with 0, then accuracy can be guaranteed only to around $10^{-31}$, not $10^{-38}$, the usual lower range for single-precision exponents.
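One way to arrive at a figure of this size, offered here as an assumption about the reasoning rather than as a derivation taken from the standard: with abrupt underflow, a flushed result can be wrong by as much as λ ≈ 1.2 × 10⁻³⁸, while ordinary round-off in a single-precision value x is on the order of ulp(1) · x. The flush error therefore dominates ordinary round-off once |x| falls below

$$\frac{\lambda}{\mathrm{ulp}(1)} = \frac{2^{-126}}{2^{-23}} = 2^{-103} \approx 9.9 \times 10^{-32} \approx 10^{-31}$$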

This means that programmers need to implement their own method of detecting when they are approaching this inaccuracy threshold, or else abandon the quest for a robust, stable implementation of their algorithm.

Some algorithms can be scaled so that computations don't take place in the constricted area near zero. However, scaling the algorithm and detecting the inaccuracy threshold can be difficult and time-consuming, even if it is not necessary for most data.