6 Working with Touch Events

This topic describes the touch events that enable users to interact with your JavaFX application using a touch screen. Touch points identify each point of contact for a touch. This topic shows you how to identify the touch points and handle touch events to provide sophisticated responses to touch actions.

A touch action consists of one or more points of contact on a touch screen. The action can be a simple press and release, or a more complicated series of holds and moves between the press and release. A series of events is generated for each point of contact for the duration of the action. In addition to the touch events, mouse events and gesture events are generated. If your JavaFX application does not require a complex response to a touch action, you might prefer to handle the mouse or gesture event instead of the touch event. For more information about handling gesture events, see Working with Events from Touch-Enabled Devices.

Touch events require a touch screen and the Windows 7 operating system.

Overview of Touch Actions

The term touch action refers to the entire scope of a user's touch from the time that contact is made with the touch screen to the time that the touch screen is released by all points of contact. The types of touch events that are generated during a touch action are TOUCH_PRESSED, TOUCH_MOVED, TOUCH_STATIONARY, and TOUCH_RELEASED.

Each point of contact with the screen is considered a touch point. For each touch point, a touch event is generated. When a touch action contains multiple points of contact, a set of events, one for each touch point, is generated for each state in the touch action.

See the sections Touch Points, Touch Events, and Event Sets for more information about these elements. See Touch Events Example for an example of how touch events can be used in a JavaFX application.

Touch Points

When a user touches a touch screen, a touch point is created for each individual point of contact. A touch point is represented by an instance of the TouchPoint class, and contains information about the location, the state, and the target of the point of contact. The states of a touch point are pressed, moved, stationary, and released.

Tip:

The number of touch points that are generated might be limited by the touch screen. For example, if the touch screen supports only two points of contact and the user touches the screen with three fingers, only two touch points are generated. For the purposes of this article, it is assumed that the touch screen recognizes all points of contact.

Each touch point has an ID, which is assigned sequentially as touch points are added to the touch action. The ID of a touch point remains the same from the time that contact is made with the touch screen to the time that contact is released. When a point of contact is released, the associated touch point is no longer part of the touch action. For example, if the touch screen is touched with two fingers, the ID assigned to the first touch point is 1 and the ID assigned to the second touch point is 2. If the second finger is removed from the touch screen, only touch point 1 remains as part of the touch action. If another finger is then added to the touch action, the ID assigned to the new touch point is 3, and the touch action has touch points 1 and 3.
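The ID assignment described above can be sketched in plain Java. This is only a model of the behavior stated in this section: the `TouchIdModel` class and its methods are hypothetical names and are not part of the JavaFX API. The sketch assumes that IDs are never reused within a single touch action.

```java
import java.util.Set;
import java.util.TreeSet;

// Hypothetical model of sequential touch point IDs; not JavaFX API.
class TouchIdModel {
    private int nextId = 1;                          // IDs are assigned sequentially
    private final Set<Integer> active = new TreeSet<>();

    /** A new point of contact joins the touch action and receives the next ID. */
    int press() {
        int id = nextId++;
        active.add(id);
        return id;
    }

    /** A point of contact is released; its ID is not reused in this touch action. */
    void release(int id) {
        active.remove(id);
    }

    /** IDs of the touch points that are currently part of the touch action. */
    Set<Integer> activeIds() {
        return new TreeSet<>(active);
    }

    public static void main(String[] args) {
        TouchIdModel action = new TouchIdModel();
        action.press();                 // first finger, ID 1
        int second = action.press();    // second finger, ID 2
        action.release(second);         // second finger is removed
        action.press();                 // another finger is added, ID 3
        System.out.println(action.activeIds()); // prints [1, 3]
    }
}
```

The model does not cover what happens to the counter after the touch action ends.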

Touch Events

Touch events are generated to track the actions of touch points. A touch event is represented by an instance of the TouchEvent class. Touch events are generated only from touches on a touch screen. Touch events are not generated from a trackpad.

Like other events, touch events have a source, a target, and an event type that further defines the action that occurs. The types of touch events are TOUCH_PRESSED, TOUCH_MOVED, TOUCH_STATIONARY, and TOUCH_RELEASED. Multiple TOUCH_MOVED and TOUCH_STATIONARY events can be generated for a touch point, depending on the distance moved and the time that a touch point is held in place. See Processing Events for basic information about events and how events are processed.

Touch events also have the following items:

- Touch point: Main touch point that is associated with this event
- Touch count: The number of touch points currently associated with the touch action
- List of touch points: The set of the touch points currently associated with the touch action
- Event set ID: ID of the event set that contains this event

Event Sets

When a touch action has a single point of contact, a single touch event is generated for each state of the action. When a touch action has multiple points of contact, a set of touch events is generated for each state of the action. Each touch event in the set is associated with a different one of the touch points.

Each set of events has an event set ID. The event set ID increments by one for each set that is generated in response to the touch action. The events in the set can have different event types, depending on the state of the touch point with which it is associated. As points of contact are added or removed during the touch action, the number of events in the event set changes. For example, Table 6-1 describes the event sets that are generated when a user touches the touch screen with two fingers, moves both fingers, touches the touch screen with a third finger, moves all fingers, and then removes all fingers from the screen.

Table 6-1 Event Sets for a Single Touch Action

| Event Set ID | Number of Touch Events | Event Type for Each Event |
|---|---|---|
| 1 | 1 | |
| 2 | 2 | |
| 3 | 2 | |
| 4 | 3 | |
| 5 | 3 | |
| 6 | 3 | |
| 7 | 3 | |
| 8 | 3 | |
| 9 | 2 | |
| 10 | 1 | |
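The way event sets grow and shrink during a touch action can be sketched with a small plain-Java model. `EventSetModel` and its methods are hypothetical and are not JavaFX API. The model assumes that each press, move, or release produces exactly one event set, that a point that did not change in a set reports TOUCH_STATIONARY, and that the "moves all fingers" step produces three sets. Under those assumptions, it reproduces the event counts in Table 6-1.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of event sets for one touch action; not JavaFX API.
class EventSetModel {
    private final List<Integer> activePoints = new ArrayList<>(); // touch point IDs
    private int nextPointId = 1;
    private int eventSetId = 0;
    final List<Integer> setSizes = new ArrayList<>();  // number of events per set
    final List<String> log = new ArrayList<>();        // "setId: [event types]"

    /** A new finger touches the screen. */
    void press() {
        int pressed = nextPointId++;
        activePoints.add(pressed);
        emit(pressed, "TOUCH_PRESSED", "TOUCH_STATIONARY");
    }

    /** All fingers on the screen move. */
    void moveAll() {
        emit(-1, "TOUCH_MOVED", "TOUCH_MOVED");
    }

    /** The finger with the given touch point ID is lifted. */
    void release(int id) {
        emit(id, "TOUCH_RELEASED", "TOUCH_STATIONARY");
        activePoints.remove(Integer.valueOf(id)); // gone after its release event
    }

    // One event set: one event for each touch point that is currently active.
    private void emit(int changedId, String changedType, String otherType) {
        eventSetId++;
        List<String> types = new ArrayList<>();
        for (int id : activePoints) {
            types.add(id == changedId ? changedType : otherType);
        }
        setSizes.add(types.size());
        log.add(eventSetId + ": " + types);
    }

    public static void main(String[] args) {
        EventSetModel action = new EventSetModel();
        action.press();
        action.press();
        action.moveAll();
        action.press();
        action.moveAll();
        action.moveAll();
        action.moveAll();
        action.release(1);
        action.release(2);
        action.release(3);
        System.out.println(action.setSizes); // prints [1, 2, 2, 3, 3, 3, 3, 3, 2, 1]
    }
}
```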

Touch Point Targets and Touch Event Targets

The target of a touch event is the target of the touch point that is associated with the event. The initial target of the touch point is the topmost node at the initial point of contact with the touch screen. If a touch action has multiple points of contact, it is possible for each touch point, and therefore each touch event, to have a different target. This feature enables you to handle each touch point independently of the other touch points. See Handling Concurrent Touch Points Independently for an example.

Typically, all of the events for one touch point are delivered to the same target. However, you can alter the target of subsequent events using the grab() and ungrab() methods for the touch point.

The grab() method enables the node that is currently processing the event to make itself the target of the touch point. The grab(target) method enables another node to be made the target of the touch point. Because events in the event set have access to all of the touch points for the set, it is possible to use the grab() method to direct all subsequent events for the touch action to the same node. The grab() method can also be used to reset the target of a touch point, as shown in Changing the Target of a Touch Point.

The ungrab() method is used to release the touch point from the current target. Subsequent events for the touch action are then sent to the topmost node at the current location of the touch point.
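The effect of grabbing and ungrabbing on event delivery can be sketched as a small plain-Java model. `TouchTargetModel` is a hypothetical class that represents nodes by name; it is not JavaFX API and only restates the rules described in this section.

```java
// Hypothetical model of how the target of a touch point is resolved; not JavaFX API.
class TouchTargetModel {
    private String grabbedBy;   // name of the node that receives events, or null

    TouchTargetModel(String topmostNodeAtPress) {
        // The initial target is the topmost node at the initial point of contact.
        this.grabbedBy = topmostNodeAtPress;
    }

    /** Makes the given node the target of subsequent events. */
    void grab(String node) {
        grabbedBy = node;
    }

    /** Releases the touch point from its current target. */
    void ungrab() {
        grabbedBy = null;
    }

    /** Target of the next event, given the topmost node at the current location. */
    String targetOfNextEvent(String topmostNodeNow) {
        return grabbedBy != null ? grabbedBy : topmostNodeNow;
    }

    public static void main(String[] args) {
        TouchTargetModel point = new TouchTargetModel("rectangle");
        System.out.println(point.targetOfNextEvent("circle"));    // prints rectangle
        point.grab("circle");
        System.out.println(point.targetOfNextEvent("rectangle")); // prints circle
        point.ungrab();
        System.out.println(point.targetOfNextEvent("folder"));    // prints folder
    }
}
```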

Additional Events Generated from Touches

When a user touches a touch screen, other types of events are generated in addition to touch events:

- Mouse events: Simulated mouse events enable an application to run on a device with a touch screen even if touch events are not handled by the application. Use the isSynthesized() method to determine if the mouse event is from a touch action. See Handling Mouse Events for an example.
- Gesture events: Gesture events are generated for the commonly recognized touch actions of scrolling, swiping, rotating, and zooming. If these are the only types of touch actions that your application must handle, you can handle these gesture events instead of the touch events. See Working with Events from Touch-Enabled Devices for information on gesture events.

Touch Events Example

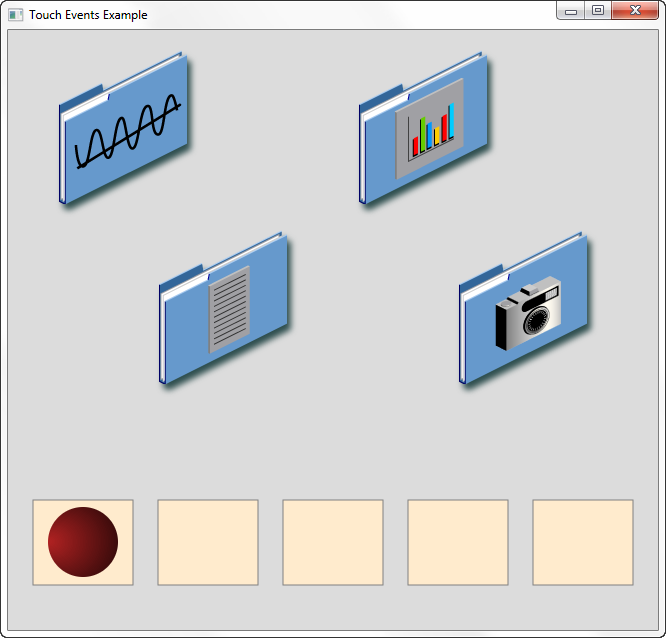

The Touch Events example uses four folders to demonstrate the ability to independently handle each touch point in a set. The example also shows how the grab() method can be used to repeatedly jump the circle from one rectangle to another. Figure 6-1 shows the user interface for the example.

The Touch Events example is available in the TouchEventsExample.zip file. Extract the NetBeans project and open it in the NetBeans IDE. To generate touch events, you must run the example on a device with a touch screen.

Handling Concurrent Touch Points Independently

In a typical gesture, the target is the node at the center of all of the points of contact, and only one node is affected by the response to the gesture. By handling each touch point separately, you can affect all of the nodes that are touched.

In the Touch Events example, you can move each folder by touching the folder and moving your finger. You can move multiple folders at once by touching each folder with a separate finger and moving all fingers.

Each folder is an instance of the TouchImage class. The TouchImage class creates an image view and adds event handlers for TOUCH_PRESSED, TOUCH_RELEASED, and TOUCH_MOVED events. Example 6-1 shows the definition of this class.

Example 6-1 TouchImage Class Definition

    public static class TouchImage extends ImageView {
        private long touchId = -1;
        double touchx, touchy;

        public TouchImage(int x, int y, Image img) {
            super(img);
            setTranslateX(x);
            setTranslateY(y);
            setEffect(new DropShadow(8.0, 4.5, 6.5, Color.DARKSLATEGRAY));

            setOnTouchPressed(new EventHandler<TouchEvent>() {
                @Override public void handle(TouchEvent event) {
                    if (touchId == -1) {
                        touchId = event.getTouchPoint().getId();
                        touchx = event.getTouchPoint().getSceneX() - getTranslateX();
                        touchy = event.getTouchPoint().getSceneY() - getTranslateY();
                    }
                    event.consume();
                }
            });

            setOnTouchReleased(new EventHandler<TouchEvent>() {
                @Override public void handle(TouchEvent event) {
                    if (event.getTouchPoint().getId() == touchId) {
                        touchId = -1;
                    }
                    event.consume();
                }
            });

            setOnTouchMoved(new EventHandler<TouchEvent>() {
                @Override public void handle(TouchEvent event) {
                    if (event.getTouchPoint().getId() == touchId) {
                        setTranslateX(event.getTouchPoint().getSceneX() - touchx);
                        setTranslateY(event.getTouchPoint().getSceneY() - touchy);
                    }
                    event.consume();
                }
            });
        }
    }

When a folder is touched, a touch point is created for each point of contact and touch events are sent to the folder. The touch ID is used to ensure that a folder responds only once when multiple points of contact are on the folder.

When a TOUCH_PRESSED event is received, the touch ID is checked to determine if it is a new touch for this folder. If so, the touch ID is set to the ID of the touch point and the location of the touch point is saved.

When a TOUCH_RELEASED event is received, the touch ID is checked to ensure that it matches the touch point that is being processed. If so, the touch ID is reset to indicate that processing is complete.

When a TOUCH_MOVED event is received, the touch ID is checked to ensure that it matches the touch point that is being processed. If so, the folder is moved to the new location for the touch point. If the touch ID does not match the touch point, then more than one point of contact is likely on the folder. To avoid responding to multiple movements of the same folder, the event is ignored.
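The bookkeeping in Example 6-1 can be tried without a touch screen by restating it in plain Java. `DragModel` is a hypothetical stand-in for the folder. It keeps the same three rules: remember the first touch ID and its offset, follow only that ID, and forget it on release. The coordinates used in `main` are made up for illustration.

```java
// Hypothetical, JavaFX-free restatement of the logic in Example 6-1.
class DragModel {
    private long touchId = -1;          // -1 means no touch point is being tracked
    private double offsetX, offsetY;    // touch location relative to the folder
    double translateX, translateY;      // current folder position

    DragModel(double x, double y) {
        translateX = x;
        translateY = y;
    }

    void pressed(long id, double sceneX, double sceneY) {
        if (touchId == -1) {            // respond only to the first touch on the folder
            touchId = id;
            offsetX = sceneX - translateX;
            offsetY = sceneY - translateY;
        }
    }

    void moved(long id, double sceneX, double sceneY) {
        if (id == touchId) {            // ignore any other finger on the same folder
            translateX = sceneX - offsetX;
            translateY = sceneY - offsetY;
        }
    }

    void released(long id) {
        if (id == touchId) {
            touchId = -1;               // ready for a new touch
        }
    }

    public static void main(String[] args) {
        DragModel folder = new DragModel(100, 50);
        folder.pressed(1, 110, 70);     // first finger, offset (10, 20)
        folder.pressed(2, 130, 80);     // second finger on the same folder, ignored
        folder.moved(2, 300, 300);      // ignored, ID does not match
        folder.moved(1, 210, 170);      // folder follows the first finger
        System.out.println(folder.translateX + ", " + folder.translateY); // prints 200.0, 150.0
    }
}
```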

Changing the Target of a Touch Point

The target of a touch point is typically the same node for the duration of the touch action. However, in some situations, you might want to change the target of a touch point during the touch action.

In the Touch Events example, you move the circle from one rectangle to another by touching the circle with one finger and a rectangle with a second finger. While the second finger remains on the circle after the jump, lift the first finger and touch a different rectangle to cause the circle to jump again. This action is possible only if you change the target of the second touch point.

The circle is an instance of the Ball class. The Ball class creates a circle and adds event handlers for the TOUCH_PRESSED, TOUCH_RELEASED, TOUCH_MOVED, and TOUCH_STATIONARY events. The same handler is used for the TOUCH_MOVED and TOUCH_STATIONARY events. Example 6-2 shows the definition of this class.

Example 6-2 Ball Class Definition

    private static class Ball extends Circle {
        double touchx, touchy;

        public Ball(int x, int y) {
            super(35);

            RadialGradient gradient = new RadialGradient(0.8, -0.5, 0.5, 0.5, 1,
                true, CycleMethod.NO_CYCLE, new Stop[] {
                    new Stop(0, Color.FIREBRICK),
                    new Stop(1, Color.BLACK)
                });

            setFill(gradient);
            setTranslateX(x);
            setTranslateY(y);

            setOnTouchPressed(new EventHandler<TouchEvent>() {
                @Override public void handle(TouchEvent event) {
                    if (event.getTouchCount() == 1) {
                        touchx = event.getTouchPoint().getSceneX() - getTranslateX();
                        touchy = event.getTouchPoint().getSceneY() - getTranslateY();
                        setEffect(new Lighting());
                    }
                    event.consume();
                }
            });

            setOnTouchReleased(new EventHandler<TouchEvent>() {
                @Override public void handle(TouchEvent event) {
                    setEffect(null);
                    event.consume();
                }
            });

            // Jump if the first finger touched the ball and is either
            // moving or still, and the second finger touches a rectangle
            EventHandler<TouchEvent> jumpHandler = new EventHandler<TouchEvent>() {
                @Override public void handle(TouchEvent event) {
                    if (event.getTouchCount() != 2) {
                        // Ignore if this is not a two-finger touch
                        return;
                    }

                    TouchPoint main = event.getTouchPoint();
                    TouchPoint other = event.getTouchPoints().get(1);

                    if (other.getId() == main.getId()) {
                        // Ignore if the second finger is in the ball and
                        // the first finger is anywhere else
                        return;
                    }

                    if (other.getState() != TouchPoint.State.PRESSED ||
                        other.belongsTo(Ball.this) ||
                        !(other.getTarget() instanceof Rectangle)) {
                        // Jump only if the second finger was just
                        // pressed in a rectangle
                        return;
                    }

                    // Jump now
                    setTranslateX(other.getSceneX() - touchx);
                    setTranslateY(other.getSceneY() - touchy);

                    // Grab the destination touch point, which is now inside
                    // the ball, so that jumping can continue without
                    // releasing the finger
                    other.grab();

                    // The original touch point is no longer of interest so
                    // call ungrab() to release the target
                    main.ungrab();

                    event.consume();
                }
            };

            setOnTouchStationary(jumpHandler);
            setOnTouchMoved(jumpHandler);
        }
    }

When a TOUCH_PRESSED event is received, the number of touch points is checked to ensure that only the instance of the Ball class is being touched. If so, the location of the touch point is saved, and a lighting effect is added to show that the circle is selected.

When a TOUCH_RELEASED event is received, the lighting effect is removed to show that the circle is no longer selected.

When a TOUCH_MOVED or TOUCH_STATIONARY event is received, the following conditions that are required for a jump are checked:

- The touch count must be two. The touch point that is associated with this event is considered the start point of the jump. The event has access to all of the touch points for the touch action. The second touch point in the set of touch points is considered the end point of the jump.
- The state of the second touch point is PRESSED. The circle is moved only when the second point of contact is made. Any other state for the second touch point is ignored.
- The target of the second touch point is a rectangle. The circle can jump only from rectangle to rectangle, or within a rectangle. If the target of the second touch point is anything else, the circle is not moved.

If the conditions for a jump are met, the circle jumps to the location of the second touch point. To jump again, the user releases the first point of contact and touches a third location, expecting the circle to jump there. However, when the first point of contact is released, the touch point whose target was the circle goes away, and the circle no longer receives touch events. Without further handling, a second jump is not possible without lifting both fingers and starting a new jump.

To make a second jump possible while keeping the second finger on the circle and touching a new location, the grab() method is used to make the circle the target of the second touch point. After the grab, events for the second touch point are sent to the circle instead of the rectangle that was the original target. The circle can then watch for a new touch point and jump again.
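The jump conditions checked by the handler in Example 6-2 can be restated as a single predicate in plain Java. `JumpRule` and its nested `Point` type are hypothetical stand-ins for the touch point data that the handler inspects. The `onBall` flag plays the role of `belongsTo(Ball.this)`, and `targetIsRectangle` plays the role of the `instanceof Rectangle` check.

```java
// Hypothetical, JavaFX-free restatement of the jump checks in Example 6-2.
class JumpRule {
    enum State { PRESSED, MOVED, STATIONARY, RELEASED }

    /** Minimal stand-in for the touch point data that the handler reads. */
    static final class Point {
        final int id;
        final State state;
        final boolean onBall;             // touch point belongs to the circle
        final boolean targetIsRectangle;  // target of the touch point is a rectangle

        Point(int id, State state, boolean onBall, boolean targetIsRectangle) {
            this.id = id;
            this.state = state;
            this.onBall = onBall;
            this.targetIsRectangle = targetIsRectangle;
        }
    }

    /** Returns true only if every condition for a jump holds. */
    static boolean shouldJump(int touchCount, Point main, Point other) {
        if (touchCount != 2) {
            return false;                 // not a two-finger touch
        }
        if (other.id == main.id) {
            return false;                 // both references are the same touch point
        }
        return other.state == State.PRESSED
                && !other.onBall
                && other.targetIsRectangle;
    }

    public static void main(String[] args) {
        Point onCircle = new Point(1, State.STATIONARY, true, false);
        Point newPress = new Point(2, State.PRESSED, false, true);
        Point dragging = new Point(2, State.MOVED, false, true);
        System.out.println(shouldJump(2, onCircle, newPress)); // prints true
        System.out.println(shouldJump(2, onCircle, dragging)); // prints false
    }
}
```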

Additional Resources

See the JavaFX API documentation for more information on touch events and touch points.