51 Developing for A/B Testing

With the Oracle WebCenter Sites: A/B Testing feature, marketers can test variants of website pages (both design and content variations) to determine which page variants produce the best results.

For information on how marketers create and run A/B tests, see Working With A/B Testing in Using Oracle WebCenter Sites.


51.1 A/B Testing Prerequisites

To use the A/B Testing feature, include the A/B Testing code element in your templates, enable the A/B Testing property in the wcs_properties.json file, and obtain Google Analytics IDs.

Note:

A/B test functionality for this Oracle WebCenter Sites product is provided through an integration with Google Analytics. To use the A/B test functionality for this Oracle product, you must first register with Google for a Google Analytics account that will allow you to measure the performance of an “A/B” test. In addition, you acknowledge that as part of an “A/B” test, anonymous end user information (that is, information of end users who access your website for the test) will be delivered to and may be used by Google under Google’s terms that govern that information.

- Include the A/B code element in templates on which A/B tests will be run. See Scripting Templates for A/B Testing.

- Set the property abtest.delivery.enabled to true on the delivery instance, that is, any instance that will deliver the A/B test variants to site visitors. Do not set the property on instances that are used only to create A/B tests, because this will give false results. The abtest.delivery.enabled property is in the ABTest category of the wcs_properties.json file. See A/B Test Properties in the Property Files Reference for Oracle WebCenter Sites.

- Obtain all Google Analytics IDs and add them to the configuration.

Other actions must be taken by users and administrators as prerequisites for A/B testing. For a complete list, see Before You Begin A/B Testing in Using Oracle WebCenter Sites.

51.2 Scripting Templates for A/B Testing

You need to add a single line of code, a call element, to allow A/B Testing to operate. The element code you include will generate JavaScript code on the page. When the tested page loads in the browser, it will call back to the server to see if there are any A/B tests on this page.

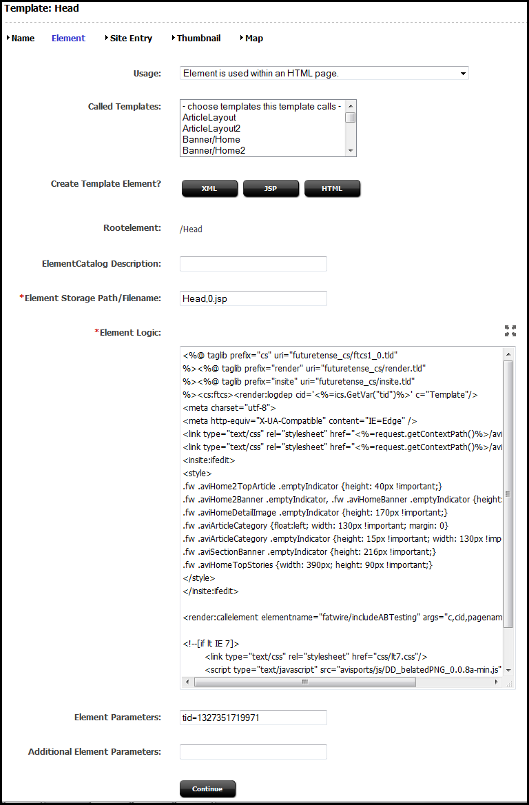

This line must be added to the templates of all pages that will be used in A/B Testing. Many sites use a design in which a shared template is included on all pages. If your site uses this design, you need to add the call element only to that single template. In the avisports sample website, a template named Head includes the call element.

To edit the template, follow these steps for each site that will use A/B Testing:

From here, you need to add ab to the Cache Criteria for the page templates used on the site.

51.3 Updating Cache Criteria for A/B Testing

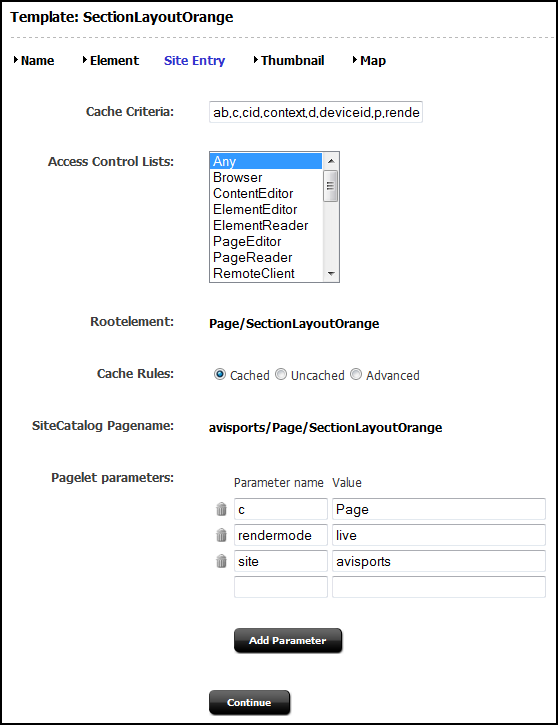

After you have added the call element code to templates to enable A/B Testing, you need to add ab to the Cache Criteria for the page templates used on the tested pages.

In the avisports sample website, the two page templates used are SectionLayoutGreen and SectionLayoutOrange. On your own site, make this change on every page template that is used in A/B Testing.

Note:

Creating a new template adds ab to the cache criteria by default.

51.4 Viewing A/B Test Details as JSON

Each A/B Testing asset, used through the WCS_ABTest asset type, contains a field that specifically lists the changes made between the original web page (the A page) and the test page (the B page). These changes are referred to as the differential data, and are stored in JSON format in the Variations (JSON) field.

To view the differential data:

If needed, you can edit the differential data stored in the JSON directly, although this is not recommended for contributors. Copy the JSON into a dedicated JSON editor, make the necessary changes, and then paste the result back into the Variations (JSON) field.
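Because a malformed edit is easy to introduce when changing JSON by hand, it can help to check that the edited text still parses before pasting it back. The following is a minimal sketch of such a check using Python's standard json module. The function name check_json and the sample strings are hypothetical; the sketch does not model the actual structure of the differential data, which is not described here.

```python
import json


def check_json(edited_text):
    """Return (True, "") if the text parses as JSON, else (False, reason).

    This only checks JSON syntax. It does not know the schema of the
    Variations (JSON) field, so it cannot tell whether the edit is meaningful.
    """
    try:
        json.loads(edited_text)
    except json.JSONDecodeError as err:
        return False, f"line {err.lineno}, column {err.colno}: {err.msg}"
    return True, ""


if __name__ == "__main__":
    # Hypothetical placeholder content, not real differential data.
    print(check_json('{"placeholder": "value"}'))
    # A trailing comma is a common hand-editing mistake and is invalid JSON.
    print(check_json('{"placeholder": "value",}'))
```

A check like this only confirms that the text is syntactically valid JSON; it says nothing about whether the A/B test will accept the edited content.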

51.5 Understanding Confidence Algorithms

When marketers create A/B tests, they can select a confidence level for their results. This number determines confidence in the significance of the test results, specifically that conversion differences are caused by variant differences themselves rather than random visitor variations.

See Selecting the Confidence Level of an A/B Test in Using Oracle WebCenter Sites. This section provides details on how conversion confidence is calculated.

The conversion rate is the conversion event count divided by the view count. This is typically represented by p. The percent change of the conversion rate is determined by subtracting the p of page A from the p of page B and then dividing by the p of page A. Conversions are counted per user. For example, a user who converts 1,000,000 times is counted only once. The algorithm used to determine confidence is the Z-Score.
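The arithmetic described above can be sketched in a few lines of Python. This is an illustration only, not the product's implementation: the function names, the use of visitor IDs to deduplicate conversions, and the sample numbers are all assumptions made for the example.

```python
def conversion_rate(conversion_events, view_count):
    """Conversion rate p = converting users / views.

    conversion_events is a list of visitor IDs, one entry per conversion
    event (assumed input shape). Each visitor is counted at most once,
    however many times they convert.
    """
    if view_count <= 0:
        raise ValueError("view_count must be positive")
    unique_converters = set(conversion_events)
    return len(unique_converters) / view_count


def percent_change(p_a, p_b):
    """Relative change of B's conversion rate against A's: (p_B - p_A) / p_A."""
    if p_a == 0:
        raise ValueError("p_a must be non-zero")
    return (p_b - p_a) / p_a


if __name__ == "__main__":
    # Visitor "u1" converts three times but is counted once.
    p_a = conversion_rate(["u1", "u1", "u1", "u2"], view_count=20)
    p_b = conversion_rate(["u3", "u4", "u5"], view_count=20)
    print(p_a, p_b, percent_change(p_a, p_b))
```

In this example page A has a rate of 2/20 and page B a rate of 3/20, so the percent change is roughly 0.5, that is, a 50% improvement for B.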

The word "confidence" in this context refers to a statistical computation that determines how confident we are that the difference between the results for A and the results for B is actually caused by variations on the pages, and not just by random variations between visitors.

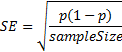

The confidence interval is calculated using the Wald method for a binomial distribution.

Figure 51-3 Wald Method of Determining Confidence Intervals for Binomial Distributions


The larger the sample, the greater the confidence in the results. A common choice in A/B Testing is a confidence interval of plus or minus 3% around the final score. This is only a convention, and any plus/minus range can be used. The confidence interval for the conversion rate (p) is then determined by multiplying the standard error by the corresponding percentile of the standard normal distribution.
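As an illustration of the Wald method, the sketch below computes the interval as p plus or minus z times the standard error, where the standard error of a binomial proportion is sqrt(p(1 - p) / n). The function name wald_interval, its parameters, and the sample counts are assumptions for the example; the sketch uses only the Python standard library.

```python
import math
from statistics import NormalDist


def wald_interval(conversions, views, confidence=0.95):
    """Wald confidence interval for a binomial proportion.

    Returns (lower, upper) for the conversion rate p = conversions / views.
    """
    if views <= 0:
        raise ValueError("views must be positive")
    p = conversions / views
    # Standard error of a binomial proportion.
    standard_error = math.sqrt(p * (1 - p) / views)
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z * standard_error
    return p - margin, p + margin


if __name__ == "__main__":
    lower, upper = wald_interval(conversions=100, views=1000)
    print(f"p = 0.100, interval = [{lower:.4f}, {upper:.4f}]")
```

Because the standard error shrinks with the square root of the view count, quadrupling the sample roughly halves the width of the interval, which is why larger samples give greater confidence.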

At this point, the results must be shown to be significant; that is, that the difference in conversion rates is not the result of random variation. The Z-Score is calculated in this way:

The Z-Score is the number of positive standard deviation values between the control and the test mean values. Using the previous standard confidence interval, a statistical significance of 95% is determined when the view event count is greater than 1000 and the Z-Score probability is either greater than 95% or less than 5%.
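The significance check can be sketched as follows. This example assumes a common unpooled two-proportion form of the Z-Score, (p_B - p_A) / sqrt(SE_A^2 + SE_B^2), which may differ in detail from the formula the product uses. It also assumes that the 1000-view threshold applies to each variant separately. The function names and sample counts are hypothetical.

```python
import math
from statistics import NormalDist


def z_score(conv_a, views_a, conv_b, views_b):
    """Unpooled two-proportion Z-Score (assumed form) of B against control A."""
    p_a = conv_a / views_a
    p_b = conv_b / views_b
    # Squared standard errors of each conversion rate.
    se_a_sq = p_a * (1 - p_a) / views_a
    se_b_sq = p_b * (1 - p_b) / views_b
    denominator = math.sqrt(se_a_sq + se_b_sq)
    if denominator == 0:
        raise ValueError("standard error is zero; Z-Score is undefined")
    return (p_b - p_a) / denominator


def is_significant(conv_a, views_a, conv_b, views_b,
                   level=0.95, min_views=1000):
    """Apply the rule from the text: enough views, and the Z-Score
    probability above `level` or below 1 - `level`."""
    if min(views_a, views_b) <= min_views:
        return False
    probability = NormalDist().cdf(z_score(conv_a, views_a, conv_b, views_b))
    return probability > level or probability < 1 - level


if __name__ == "__main__":
    print(z_score(100, 2000, 140, 2000))
    print(is_significant(100, 2000, 140, 2000))
    print(is_significant(25, 500, 35, 500))
```

In the first call, page B converts at 7% against 5% for page A with 2000 views each, which gives a Z-Score of about 2.67 and passes the 95% check. The last call uses the same rates but only 500 views per variant, so it fails the view-count condition regardless of the Z-Score.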