5 Optimizing MapReduce Jobs Using Perfect Balance

This chapter describes how you can shorten the run time of some MapReduce jobs by using Perfect Balance.

Change Notice:

As of Big Data Connectors 4.8, the option to run Perfect Balance via automatic invocation is no longer supported. Use the Perfect Balance API as described in this document.

5.1 What is Perfect Balance?

The Perfect Balance feature of Oracle Big Data Appliance distributes the reducer load in a MapReduce application so that each reduce task does approximately the same amount of work. While the default Hadoop method of distributing the reduce load is appropriate for many jobs, it does not distribute the load evenly for jobs with significant data skew.

Data skew is an imbalance in the load assigned to different reduce tasks. The load is a function of:

-

The number of keys assigned to a reducer.

-

The number of records and the number of bytes in the values per key.

The total run time for a job is extended, to varying degrees, by the time that the reducer with the greatest load takes to finish. In jobs with a skewed load, some reducers complete the job quickly, while others take much longer. Perfect Balance can significantly shorten the total run time by distributing the load evenly, enabling all reducers to finish at about the same time.
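The effect of skew on run time can be sketched with a few lines of Java. This is a minimal illustration, not part of Perfect Balance: the class name, method names, and load figures are hypothetical, and the load is treated as an abstract number of work units per reducer.

```java
import java.util.Arrays;

/** Illustrative only: the reduce phase finishes when the most loaded reducer finishes. */
final class ReducerSkewSketch {

    /** Finish time when all reducers run in parallel: the largest single load. */
    static long slowestReducer(long[] loads) {
        return Arrays.stream(loads).max().orElse(0L);
    }

    /** Finish time if the same total load were spread evenly (rounded up). */
    static long evenlyBalanced(long[] loads) {
        long total = Arrays.stream(loads).sum();
        return loads.length == 0 ? 0L : (total + loads.length - 1) / loads.length;
    }

    public static void main(String[] args) {
        long[] skewed = {5, 5, 5, 5, 80}; // hypothetical work units per reducer
        System.out.println("skewed finish time:   " + slowestReducer(skewed)); // 80
        System.out.println("balanced finish time: " + evenlyBalanced(skewed)); // 20
    }
}
```

With the same total load of 100 units, the skewed job waits for the reducer holding 80 units, while an even distribution would let every reducer finish after about 20.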

Your MapReduce job can be written using either the mapred or mapreduce APIs; Perfect Balance supports both of them.

5.1.1 About Balancing Jobs Across Map and Reduce Tasks

A typical Hadoop job has map and reduce tasks. Hadoop distributes the mapper workload uniformly across Hadoop Distributed File System (HDFS) and across map tasks, while preserving the data locality. In this way, it reduces skew in the mappers.

Hadoop also hashes the map-output keys uniformly across all reducers. This strategy works well when there are many more keys than reducers, and each key represents a very small portion of the workload. However, it is not effective when the mapper output is concentrated into a small number of keys. Hashing these keys results in skew and does not work in applications like sorting, which require range partitioning.
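The following sketch shows why hashing cannot fix a load that is concentrated in a few keys. It applies the modulo-of-hash rule that Hadoop's default hash partitioner uses, but with `String.hashCode()` standing in for a Writable key's hash; the class name and the record counts are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Illustrative only: a heavy key lands on one reducer no matter how well keys are hashed. */
final class HashPartitionSkewSketch {

    /** Hash of the key, made non-negative, modulo the number of reducers. */
    static int partition(String key, int numReducers) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReducers;
    }

    /** Adds the record count of each key to the reducer that its hash selects. */
    static long[] reducerLoads(Map<String, Long> recordsPerKey, int numReducers) {
        long[] loads = new long[numReducers];
        for (Map.Entry<String, Long> e : recordsPerKey.entrySet()) {
            loads[partition(e.getKey(), numReducers)] += e.getValue();
        }
        return loads;
    }

    public static void main(String[] args) {
        Map<String, Long> recordsPerKey = new LinkedHashMap<>();
        recordsPerKey.put("the", 900_000L); // one heavy key (hypothetical counts)
        recordsPerKey.put("zebra", 10L);
        recordsPerKey.put("quark", 12L);
        recordsPerKey.put("ibis", 8L);

        long[] loads = reducerLoads(recordsPerKey, 4);
        for (int r = 0; r < loads.length; r++) {
            System.out.println("reducer " + r + ": " + loads[r] + " records");
        }
    }
}
```

Whichever reducer receives the heavy key processes at least 900,000 records, while the others process a few dozen at most.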

Perfect Balance distributes the load evenly across reducers by first sampling the data, optionally chopping large keys into two or more smaller keys, and using a load-aware partitioning strategy to assign keys to reduce tasks.
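As a rough illustration of chopping followed by load-aware assignment, the sketch below splits a large key into fixed-size pieces and then gives each piece to the least loaded reducer, largest pieces first. This greedy heuristic is an assumption chosen for illustration; the source does not describe the algorithm that Perfect Balance actually uses, and all names and numbers here are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

/** Illustrative only: chop large keys, then assign loads greedily to the emptiest reducer. */
final class LoadAwareAssignmentSketch {

    /** Splits one key load into pieces no larger than maxPiece. */
    static List<Long> chop(long load, long maxPiece) {
        List<Long> pieces = new ArrayList<>();
        while (load > maxPiece) {
            pieces.add(maxPiece);
            load -= maxPiece;
        }
        if (load > 0) pieces.add(load);
        return pieces;
    }

    /** Assigns loads, largest first, to whichever reducer currently has the least work. */
    static long[] assign(List<Long> keyLoads, int numReducers) {
        List<Long> sorted = new ArrayList<>(keyLoads);
        sorted.sort(Comparator.reverseOrder());
        long[] reducerLoad = new long[numReducers];
        for (long load : sorted) {
            int target = 0;
            for (int r = 1; r < numReducers; r++) {
                if (reducerLoad[r] < reducerLoad[target]) target = r;
            }
            reducerLoad[target] += load;
        }
        return reducerLoad;
    }

    public static void main(String[] args) {
        List<Long> raw = List.of(60L, 10L, 10L, 10L, 10L); // hypothetical key loads
        System.out.println("no chopping:   " + Arrays.toString(assign(raw, 3)));

        List<Long> chopped = new ArrayList<>(chop(60L, 20L)); // chop the large key
        chopped.addAll(List.of(10L, 10L, 10L, 10L));
        System.out.println("with chopping: " + Arrays.toString(assign(chopped, 3)));
    }
}
```

Without chopping, one reducer must take the whole 60-unit key; with chopping, the largest reducer load in this example drops to 40.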

5.1.2 Perfect Balance Components

Perfect Balance has these components:

-

Job Analyzer: Gathers and reports statistics about the MapReduce job so that you can determine whether to use Perfect Balance.

-

Counting Reducer: Provides additional statistics to the Job Analyzer to help gauge the effectiveness of Perfect Balance.

-

Load Balancer: Runs before the MapReduce job to generate a static partition plan, and reconfigures the job to use the plan. The balancer includes a user-configurable, progressive sampler that stops sampling the data as soon as it can generate a good partitioning plan.

5.2 Application Requirements

To use Perfect Balance successfully, your application must meet the following requirements:

-

The job is distributive, so that splitting a group of records associated with a reduce key does not produce incorrect results for the application.

To balance a load, Perfect Balance subpartitions the values of large reduce keys and sends each subpartition to a different reducer. This distribution contrasts with the standard Hadoop practice of sending all values for a single reduce key to the same reducer. Your application must be able to handle output from the reducers that is not fully aggregated, so that it does not produce incorrect results.

This partitioning of values is called chopping. Applications that support chopping have distributive reduce functions. See "About Chopping".

If your application is not distributive, then you can still run Perfect Balance after disabling the key-splitting feature. The job still benefits from using Perfect Balance, but the load is not as evenly balanced as it is when key splitting is in effect. See the oracle.hadoop.balancer.keyLoad.minChopBytes configuration property to disable key splitting.

-

This release does not support combiners. Perfect Balance detects the presence of combiners and does not balance when they are present.
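The distributive requirement above can be checked on paper before enabling chopping. The sketch below is a hypothetical illustration, not Perfect Balance code: it splits the values of one key into two subpartitions and compares a reduce function that tolerates the split (sum) with one that does not (mean).

```java
import java.util.List;

/** Illustrative only: sum survives chopping, a naive mean of partial means does not. */
final class DistributiveReduceSketch {

    static long sum(List<Long> values) {
        long total = 0;
        for (long v : values) total += v;
        return total;
    }

    static double mean(List<Long> values) {
        return (double) sum(values) / values.size();
    }

    public static void main(String[] args) {
        List<Long> all = List.of(1L, 2L, 3L, 10L);
        List<Long> chopA = List.of(1L);          // subpartition sent to one reducer
        List<Long> chopB = List.of(2L, 3L, 10L); // subpartition sent to another reducer

        // Sum is distributive: adding the partial results reproduces the full result.
        System.out.println(sum(all) == sum(chopA) + sum(chopB)); // true

        // Averaging the two partial means does not reproduce the full mean.
        System.out.println(mean(all) == (mean(chopA) + mean(chopB)) / 2); // false
    }
}
```

An application whose reduce function behaves like the mean in this sketch would need either to disable chopping or to emit partial results (for example, a sum and a count) that a later step can combine correctly.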

5.4 Analyzing a Job's Reducer Load

Job Analyzer is a component of Perfect Balance that identifies imbalances in a load and reports how effective Perfect Balance is in correcting the imbalance when the job actually runs. This section contains the following topics:

5.4.1 About Job Analyzer

You can use Job Analyzer to decide whether a job is a candidate for load balancing with Perfect Balance. Job Analyzer uses the output logs of a MapReduce job to generate a simple report with statistics like the elapsed time and the load for each reduce task. By default, it uses the standard Hadoop counters displayed by the JobTracker user interface, but organizes the data to emphasize the relative performance and load of the reduce tasks, so that you can more easily interpret the results.

If the report shows that the data is skewed (that is, the reducers processed very different loads and the run times varied widely), then the application is a good candidate for Perfect Balance.

5.4.1.1 Methods of Running Job Analyzer

You can choose between two methods of running Job Analyzer:

-

As a standalone utility: Job Analyzer runs against existing job output logs. This is a good choice when you want to analyze a job that previously ran.

-

While using Perfect Balance: Job Analyzer runs against the output logs for the current job running with Perfect Balance. This is a good choice when you want to analyze the current job.

5.4.2 Running Job Analyzer as a Standalone Utility

As a standalone utility, Job Analyzer provides a quick way to analyze the reduce load of a previously run job.

To run Job Analyzer as a standalone utility:

-

Log in to the server where you will run Job Analyzer.

-

Locate the output logs from the job to analyze:

Set oracle.hadoop.balancer.application_id to the job ID of the job you want to analyze.

You can obtain the job ID from the YARN Resource Manager web interface. Click the application ID of a job, and then click Tracking URL. The job ID typically begins with "job_".

Alternatively, if you already ran Perfect Balance or Job Analyzer on this job, you can read the job ID from the application_id file generated by Perfect Balance in its report directory (outdir/_balancer by default).

-

Run the Job Analyzer utility as described in "Job Analyzer Utility Syntax."

-

View the Job Analyzer report in a browser.

5.4.2.1 Job Analyzer Utility Example

The following example runs a script that sets the required variables, uses the MapReduce job logs for a job with an application ID of job_1396563311211_0947, and creates the report in the default location. It then copies the HTML version of the report from HDFS to the /home/jdoe local directory and opens the report in a browser.

To run this example on a YARN cluster, replace the application ID with the application ID of the job. The application ID of the job looks like this example: job_1396563311211_0947.

Example 5-1 Running the Job Analyzer Utility

$ cat runja.sh
BALANCER_HOME=/opt/oracle/orabalancer-<version>-h2
export HADOOP_CLASSPATH=${BALANCER_HOME}/jlib/orabalancer-<version>.jar:${BALANCER_HOME}/jlib/commons-math-2.2.jar:$HADOOP_CLASSPATH

# Command on YARN cluster
hadoop jar orabalancer-<version>.jar oracle.hadoop.balancer.tools.JobAnalyzer \
    -D oracle.hadoop.balancer.application_id=job_1396563311211_0947

$ sh ./runja.sh
$
$ hadoop fs -get jdoe_nobal_outdir/_balancer/jobanalyzer-report.html /home/jdoe
$ cd /home/jdoe
$ firefox jobanalyzer-report.html
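If you only have the YARN application ID at hand, the job ID can usually be derived from it. In the job submission log shown in Example 5-2, the job ID and the application ID differ only in their prefix (job_ versus application_). The helper below is a hypothetical sketch that relies on that observation; it is not part of the Perfect Balance API.

```java
/** Illustrative only: derive a MapReduce job ID from a YARN application ID. */
final class JobIdSketch {

    private static final String APP_PREFIX = "application_";

    /** Swaps the application_ prefix for job_, keeping the timestamp and sequence number. */
    static String toJobId(String applicationId) {
        if (!applicationId.startsWith(APP_PREFIX)) {
            throw new IllegalArgumentException("Not a YARN application ID: " + applicationId);
        }
        return "job_" + applicationId.substring(APP_PREFIX.length());
    }

    public static void main(String[] args) {
        System.out.println(toJobId("application_1397066986369_3478"));
    }
}
```

The resulting string is the value to pass in oracle.hadoop.balancer.application_id.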

5.4.3 Running Job Analyzer Using Perfect Balance

This section explains how you can modify your job to generate Job Analyzer reports using the Perfect Balance API.

See Also:

For details about the Perfect Balance API, see the following:

5.4.3.1 Running Job Analyzer Using the Perfect Balance API

This section first explains how to prepare your code using the API and then how to run Job Analyzer.

Before You Start:

Before running Job Analyzer, invoke Balancer in your application code. Make the following updates to your code and recompile.

-

Import the Balancer class.

-

After the job finishes, you can also call Balancer.save(). If Balancer ran, this optional method saves the partition file report into the _balancer subdirectory of the job output directory. It also writes a JobAnalyzer report.

For example:

...
import oracle.hadoop.balancer.Balancer;
...
<Configure your job>
...
job.waitForCompletion(true);
Balancer.save(job);
...

After compiling the modified application, follow these steps to generate the Job Analyzer report:

- Log in to the server where you will submit the job that uses Perfect Balance.

- Set up Perfect Balance by taking the steps in "Getting Started with Perfect Balance."

- Run the job.

The example below runs a script that does the following:

-

Sets the required variables

-

Uses Perfect Balance to run a job with Job Analyzer (and without load balancing).

-

Creates the report in the default location.

-

Copies the HTML version of the report from HDFS to the /home/jdoe local directory.

-

Opens the report in a browser

The output includes warnings, which you can ignore.

Example 5-2 Running Job Analyzer with Perfect Balance

$ cat ja_nobalance.sh

# set up perfect balance
BALANCER_HOME=/opt/oracle/orabalancer-<version>-h2
export HADOOP_CLASSPATH=${BALANCER_HOME}/jlib/orabalancer-<version>.jar:${BALANCER_HOME}/jlib/commons-math-2.2.jar:${HADOOP_CLASSPATH}

# run the job
hadoop jar application_jarfile.jar ApplicationClass \
    -D application_config_property \
    -D mapreduce.input.fileinputformat.inputdir=jdoe_application/input \
    -D mapreduce.output.fileoutputformat.outputdir=jdoe_nobal_outdir \
    -D mapreduce.job.name=nobal \
    -D mapreduce.job.reduces=10 \
    -conf application_config_file.xml

$ sh ja_nobalance.sh
14/04/14 14:52:42 INFO input.FileInputFormat: Total input paths to process : 5
14/04/14 14:52:42 INFO mapreduce.JobSubmitter: number of splits:5
14/04/14 14:52:42 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1397066986369_3478
14/04/14 14:52:43 INFO impl.YarnClientImpl: Submitted application application_1397066986369_3478
.
.
.
File Input Format Counters
Bytes Read=112652976
File Output Format Counters
Bytes Written=384974202

$ hadoop fs -get jdoe_nobal_outdir/_balancer/jobanalyzer-report.html /home/jdoe
$ cd /home/jdoe
$ firefox jobanalyzer-report.html

5.4.3.2 Collecting Additional Metrics

The Job Analyzer report includes the load metrics for each key if you call the oracle.hadoop.balancer.Balancer.configureCountingReducer() method before job submission.

This additional information provides a more detailed picture of the load for each reducer, with metrics that are not available in the standard Hadoop counters.

The Job Analyzer report also compares its predicted load with the actual load. The difference between these values measures how effective Perfect Balance was in balancing the job.

Job Analyzer might recommend key load coefficients for the Perfect Balance key load model, based on its analysis of the job load. To use these recommended coefficients when running a job with Perfect Balance, set the oracle.hadoop.balancer.linearKeyLoad.feedbackDir property to the directory containing the Job Analyzer report of a previously analyzed run of the job.

If the report contains recommended coefficients, then Perfect Balance automatically uses them. If Job Analyzer encounters an error while collecting the additional metrics, then the report does not contain the additional metrics.

Use the feedbackDir property when you do not know the values of the load model coefficients for a job, but you have the Job Analyzer output from a previous run of the job. Then you can set the value of feedbackDir to the directory where that output is stored. The values recommended from those files typically perform better than the Perfect Balance default values, because the recommended values are based on an analysis of your job's load.

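Alternatively, if you already know good values of the load model coefficients for your job, you can set the load model properties:

-

oracle.hadoop.balancer.linearKeyLoad.byteWeight

-

oracle.hadoop.balancer.linearKeyLoad.keyWeight

-

oracle.hadoop.balancer.linearKeyLoad.rowWeight

Running the job with these coefficients results in a more balanced job.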
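To see how the coefficients interact, it can help to evaluate a linear load model by hand. The sketch below assumes the simplest linear form, a weighted sum of key count, row count, and byte count; this form is an assumption for illustration, because this chapter names the keyWeight, rowWeight, and byteWeight coefficients without giving the formula. The class and method names are hypothetical, and the weights are the ones used in Example 5-4.

```java
/** Illustrative only: an assumed linear key load model with three coefficients. */
final class LinearKeyLoadSketch {

    /** Assumed form: keyWeight * keys + rowWeight * rows + byteWeight * bytes. */
    static double load(double keyWeight, double rowWeight, double byteWeight,
                       long keys, long rows, long bytes) {
        return keyWeight * keys + rowWeight * rows + byteWeight * bytes;
    }

    public static void main(String[] args) {
        double keyWeight = 93.981394; // values taken from Example 5-4
        double rowWeight = 0.001126;
        double byteWeight = 0.0;

        // One key with a million rows compared with one key with a hundred rows.
        System.out.println(load(keyWeight, rowWeight, byteWeight, 1, 1_000_000, 50_000_000));
        System.out.println(load(keyWeight, rowWeight, byteWeight, 1, 100, 5_000));
    }
}
```

Under this assumed form and these weights, the byte count contributes nothing, and the fixed per-key cost dominates until a key holds tens of thousands of rows.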

5.4.4 Reading the Job Analyzer Report

Job Analyzer writes its report in two formats: HTML for you, and XML for Perfect Balance. You can open the report in a browser, either directly in HDFS or after copying it to the local file system.

To open a Job Analyzer report in HDFS in a browser:

-

Open the HDFS web interface on port 50070 of a NameNode node (node01 or node02), using a URL like the following:

http://bda1node01.example.com:50070

-

From the Utilities menu, choose Browse the File System.

-

Navigate to the job_output_dir/_balancer directory.

To open a Job Analyzer report in the local file system in a browser:

-

Copy the report from HDFS to the local file system:

$ hadoop fs -get job_output_dir/_balancer/jobanalyzer-report.html /home/jdoe

-

Switch to the local directory:

$ cd /home/jdoe

-

Open the file in a browser:

$ firefox jobanalyzer-report.html

When inspecting the Job Analyzer report, look for indicators of skew such as:

-

The execution time of some reducers is longer than others.

-

Some reducers process more records or bytes than others.

-

Some map output keys have more records than others.

-

Some map output records have more bytes than others.

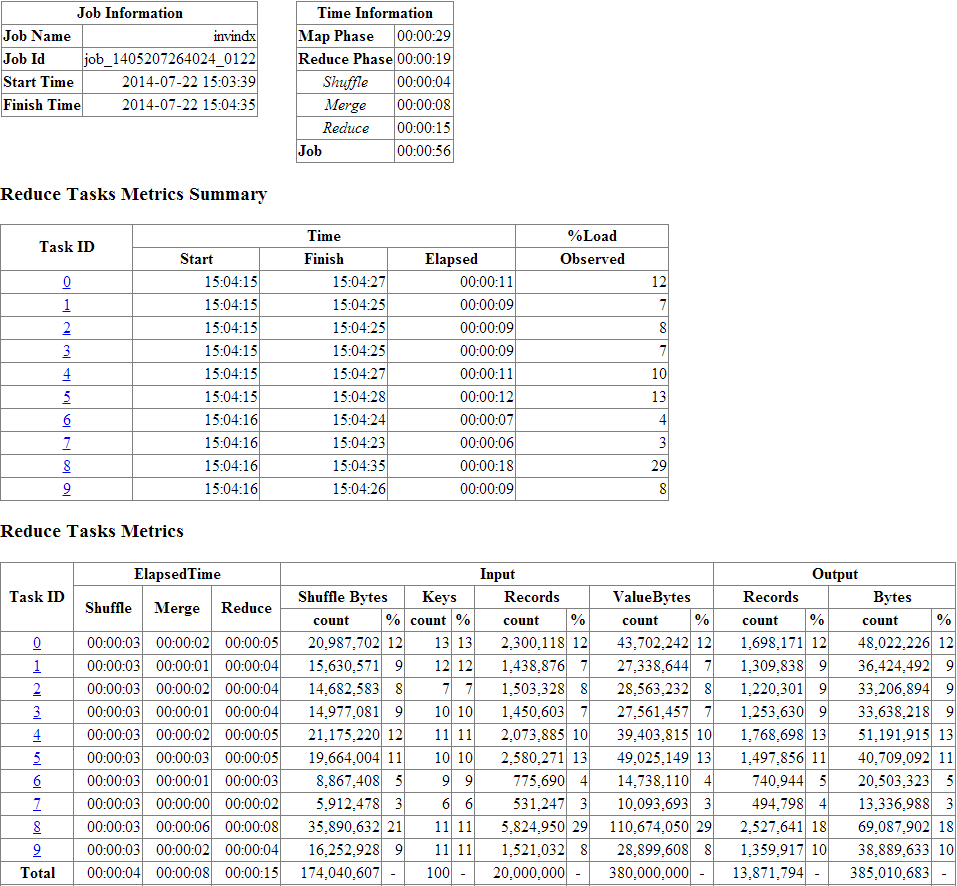

The following figure shows the beginning of the analyzer report for the inverted index (invindx) example. It displays the key load coefficient recommendations, because this job ran with the appropriate configuration settings. See "Collecting Additional Metrics."

The task IDs are links to tables that show the analysis of specific tasks, enabling you to drill down for more details from the first, summary table.

This example uses an extremely small data set, but notice the differences between tasks 7 and 8: The input records range from 3% to 29%, and their corresponding elapsed times range from 5 to 15 seconds. This variation indicates skew.

Figure 5-1 Job Analyzer Report for Unbalanced Inverted Index Job


5.5 About Configuring Perfect Balance

Perfect Balance uses the standard Hadoop methods of specifying configuration properties in the command line. You can use the -conf option to identify a configuration file, or the -D option to specify individual properties. All Perfect Balance configuration properties have default values, and so setting them is optional.

"Perfect Balance Configuration Property Reference" lists the configuration properties in alphabetical order with a full description. The following are functional groups of properties.

Job Analyzer Properties

Key Chopping Properties

Load Balancing Properties

Load Model Properties

MapReduce-Related Properties

Partition Report Properties

5.6 Running a Balanced MapReduce Job Using Perfect Balance

The oracle.hadoop.balancer.Balancer class contains methods for creating a partitioning plan, saving the plan to a file, and running the MapReduce job using the plan. You only need to add the code to the application's job driver Java class, not redesign the application. When you run a shell script to run the application, you can include Perfect Balance configuration settings.

5.6.1 Modifying Your Java Code to Use Perfect Balance

The Perfect Balance installation directory contains a complete example, including input data, of a Java MapReduce program that uses the Perfect Balance API.

For a description of the inverted index example and execution instructions, see orabalancer-<version>-h2/examples/invindx/README.txt.

To explore the modified Java code, see orabalancer-<version>-h2/examples/jsrc/oracle/hadoop/balancer/examples/invindx/InvertedIndexMapred.java or InvertedIndexMapreduce.java.

The modifications to run Perfect Balance include the following:

-

The createBalancer method validates the configuration properties and returns a Balancer instance.

-

The waitForCompletion method samples the data and creates a partitioning plan.

-

The addBalancingPlan method adds the partitioning plan to the job configuration settings.

-

The configureCountingReducer method collects additional load statistics.

-

The save method saves the partition report and generates the Job Analyzer report.

Example 5-3 shows fragments from the inverted index Java code.

Example 5-3 Running Perfect Balance in a MapReduce Job

.
.
.
import oracle.hadoop.balancer.Balancer;
.
.
.
    ///// BEGIN: CODE TO INVOKE BALANCER (PART-1, before job submission) //////
    Configuration conf = job.getConfiguration();

    Balancer balancer = null;
    boolean useBalancer =
        conf.getBoolean("oracle.hadoop.balancer.driver.balance", true);
    if(useBalancer)
    {
      balancer = Balancer.createBalancer(conf);
      balancer.waitForCompletion();
      balancer.addBalancingPlan(conf);
    }

    if(conf.getBoolean("oracle.hadoop.balancer.tools.useCountingReducer", true))
    {
      Balancer.configureCountingReducer(conf);
    }
    ////////////// END: CODE TO INVOKE BALANCER (PART-1) //////////////////////

    boolean isSuccess = job.waitForCompletion(true);

    ///////////////////////////////////////////////////////////////////////////
    // BEGIN: CODE TO INVOKE BALANCER (PART-2, after job completion, optional)
    // If balancer ran, this saves the partition file report into the _balancer
    // sub-directory of the job output directory. It also writes a JobAnalyzer
    // report.
    Balancer.save(job);
    ////////////// END: CODE TO INVOKE BALANCER (PART-2) //////////////////////
.
.
.
}

5.6.2 Running Your Modified Java Code with Perfect Balance

When you run your modified Java code, you can set the Perfect Balance properties by using the standard hadoop command syntax:

bin/hadoop jar application_jarfile.jar ApplicationClass \
    -conf application_config.xml \
    -conf perfect_balance_config.xml \
    -D application_config_property \
    -D perfect_balance_config_property \
    -libjars application_jar_path.jar...

Example 5-4 runs a script named pb_balanceapi.sh, which runs the InvertedIndexMapreduce class example packaged in the Perfect Balance JAR file. The key load metric properties are set to the values recommended in the Job Analyzer report shown in Figure 5-1.

To run the InvertedIndexMapreduce class example, see "About the Perfect Balance Examples."

Example 5-4 Running the InvertedIndexMapreduce Class

$ cat pb_balanceapi.sh
BALANCER_HOME=/opt/oracle/orabalancer-<version>-h2
APP_JAR_FILE=/opt/oracle/orabalancer-<version>-h2/jlib/orabalancer-<version>.jar
export HADOOP_CLASSPATH=${BALANCER_HOME}/jlib/orabalancer-<version>.jar:${BALANCER_HOME}/jlib/commons-math-2.2.jar:$HADOOP_CLASSPATH

hadoop jar ${APP_JAR_FILE} oracle.hadoop.balancer.examples.invindx.InvertedIndexMapreduce \
    -D mapreduce.input.fileinputformat.inputdir=invindx/input \
    -D mapreduce.output.fileoutputformat.outputdir=jdoe_outdir_api \
    -D mapreduce.job.name=jdoe_invindx_api \
    -D mapreduce.job.reduces=10 \
    -D oracle.hadoop.balancer.linearKeyLoad.keyWeight=93.981394 \
    -D oracle.hadoop.balancer.linearKeyLoad.rowWeight=0.001126 \
    -D oracle.hadoop.balancer.linearKeyLoad.byteWeight=0.0

$ sh ./pb_balanceapi.sh
14/04/14 15:03:51 INFO balancer.Balancer: Creating balancer
14/04/14 15:03:51 INFO balancer.Balancer: Starting Balancer
14/04/14 15:03:51 INFO input.FileInputFormat: Total input paths to process : 5
14/04/14 15:03:54 INFO balancer.Balancer: Balancer completed
14/04/14 15:03:55 INFO input.FileInputFormat: Total input paths to process : 5
14/04/14 15:03:55 INFO mapreduce.JobSubmitter: number of splits:5
14/04/14 15:03:55 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1397066986369_3510
14/04/14 15:03:55 INFO impl.YarnClientImpl: Submitted application application_1397066986369_3510
.
.
.
File Input Format Counters
Bytes Read=112652976
File Output Format Counters
Bytes Written=384974202

5.7 About Perfect Balance Reports

Perfect Balance generates these reports when it runs a job:

-

Job Analyzer report: Contains various indicators about the distribution of the load in a job. The report is saved in HTML for you, and XML for Perfect Balance to use. The reports are always named jobanalyzer-report.html and jobanalyzer-report.xml. See "Reading the Job Analyzer Report."

-

Partition report: Identifies the keys that are assigned to the various reducers. This report is saved in JSON for Perfect Balance to use; it does not contain information of use to you. The report is named ${job_output_dir}/_balancer/orabalancer_report.json. It is only generated for balanced jobs.

-

Reduce key metric reports: Perfect Balance generates a report for each file partition, when the appropriate configuration properties are set. The reports are saved in XML for Perfect Balance to use; they do not contain information of use to you. They are named ${job_output_dir}/_balancer/ReduceKeyMetricList-attempt_jobid_taskid_task_attemptid.xml. They are generated only when the counting reducer is used (that is, when Balancer.configureCountingReducer is invoked before job submission).

The reports are stored by default in the job output directory (${mapreduce.output.fileoutputformat.outputdir} in YARN). Following is the structure of that directory:

job_output_directory
   /_SUCCESS
   /_balancer
        ReduceKeyMetricList-attempt_201305031125_0016_r_000000_0.xml
        ReduceKeyMetricList-attempt_201305031125_0016_r_000001_0.xml
        .
        .
        .
        jobanalyzer-report.html
        jobanalyzer-report.xml
        orabalancer_report.json
   /part-r-00000
   /part-r-00001
   .
   .
   .

5.8 About Chopping

To balance a load, Perfect Balance might subpartition the values of a single reduce key and send each subpartition to a different reducer. This partitioning of values is called chopping.

5.8.1 Selecting a Chopping Method

You can configure how Perfect Balance chops the values by setting the oracle.hadoop.balancer.choppingStrategy configuration property:

-

Chopping by hash partitioning: Set choppingStrategy=hash when sorting is not required. This is the default chopping strategy.

-

Chopping by round robin: Set choppingStrategy=roundRobin as an alternative strategy when total-order chopping is not required. If the load for a hash-chopped key is unbalanced among reducers, try this chopping strategy.

-

Chopping by total-order partitioning: Set choppingStrategy=range to sort the values in each subpartition and order them across all subpartitions. In any parallel sort job, each task sorts the rows within the task. The job must ensure that the values in reduce task 2 are greater than the values in reduce task 1, the values in reduce task 3 are greater than the values in reduce task 2, and so on. The job generates multiple files containing data in sorted order, instead of one large file with sorted data.

For example, if a key is chopped into three subpartitions, and the subpartitions are sent to reducers 5, 8 and 9, then the values for that key in reducer 9 are greater than all values for that key in reducer 8, and the values for that key in reducer 8 are greater than all values for that key in reducer 5. When choppingStrategy=range, Perfect Balance ensures this ordering across reduce tasks.

If an application requires that the data is aggregated across files, then you can disable chopping by setting oracle.hadoop.balancer.keyLoad.minChopBytes=-1. Perfect Balance still offers performance gains by combining smaller reduce keys, a technique called bin packing.
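The difference between the three chopping strategies is easiest to see on a small list of values for a single key. The sketch below is a hypothetical illustration of the general techniques (hash, round robin, and range partitioning); it does not reproduce how Perfect Balance implements them, and the class and method names are invented for this example.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Illustrative only: three ways to split one key's values into k subpartitions. */
final class ChoppingStrategySketch {

    private static List<List<Integer>> emptyParts(int k) {
        List<List<Integer>> parts = new ArrayList<>();
        for (int i = 0; i < k; i++) parts.add(new ArrayList<>());
        return parts;
    }

    /** hash: the hash of each value picks its subpartition; sizes and order are not controlled. */
    static List<List<Integer>> byHash(List<Integer> values, int k) {
        List<List<Integer>> parts = emptyParts(k);
        for (Integer v : values) parts.get((v.hashCode() & Integer.MAX_VALUE) % k).add(v);
        return parts;
    }

    /** roundRobin: values are dealt out in turn, so sizes differ by at most one. */
    static List<List<Integer>> byRoundRobin(List<Integer> values, int k) {
        List<List<Integer>> parts = emptyParts(k);
        for (int i = 0; i < values.size(); i++) parts.get(i % k).add(values.get(i));
        return parts;
    }

    /** range: sorted values are cut into contiguous runs, giving a total order across parts. */
    static List<List<Integer>> byRange(List<Integer> values, int k) {
        List<Integer> sorted = new ArrayList<>(values);
        Collections.sort(sorted);
        List<List<Integer>> parts = emptyParts(k);
        int n = sorted.size();
        for (int i = 0; i < n; i++) parts.get((int) ((long) i * k / n)).add(sorted.get(i));
        return parts;
    }

    public static void main(String[] args) {
        List<Integer> values = List.of(7, 3, 9, 1, 5, 8);
        System.out.println("hash:       " + byHash(values, 3));
        System.out.println("roundRobin: " + byRoundRobin(values, 3));
        System.out.println("range:      " + byRange(values, 3));
    }
}
```

Only the range variant guarantees that every value in one subpartition is less than or equal to every value in the next, which is the property a total-order sort depends on.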

5.8.2 How Chopping Impacts Applications

If a MapReduce job aggregates the data by reduce key, then each reduce task aggregates the values for each key within that task. However, when chopping is enabled in Perfect Balance, the rows associated with a reduce key might be in different reduce tasks, leading to partial aggregation. Thus, values for a reduce key are aggregated within a reduce task, but not across reduce tasks. (The values for a reduce key across reduce tasks can be sorted, as discussed in "Selecting a Chopping Method".)

When complete aggregation is required, you can disable chopping. Alternatively, you can examine the application that consumes the output of your MapReduce job. The application might work well with partial aggregation.

For example, a search engine might read in parallel the output from a MapReduce job that creates an inverted index. The output of a reduce task is a list of words, and for each word, a list of documents in which the word occurs. The word is the key, and the list of documents is the value. With partial aggregation, some words have multiple document lists instead of one aggregated list. Multiple lists are convenient for the search engine to consume in parallel. A parallel search engine might even require document lists to be split instead of aggregated into one list. See "About the Perfect Balance Examples" for a Hadoop job that creates an inverted index from a document collection.
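If a consumer does need one list per word, it can merge the partial lists itself. The sketch below shows one way to do that, assuming each reducer's output has already been read into a map from word to document list; the class name, method name, and sample data are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Illustrative only: merge partially aggregated inverted-index output from several reducers. */
final class PartialAggregationSketch {

    /** Concatenates the document lists of each word across all reducer outputs. */
    static Map<String, List<String>> merge(List<Map<String, List<String>>> reducerOutputs) {
        Map<String, List<String>> merged = new TreeMap<>();
        for (Map<String, List<String>> output : reducerOutputs) {
            for (Map.Entry<String, List<String>> e : output.entrySet()) {
                merged.computeIfAbsent(e.getKey(), k -> new ArrayList<>()).addAll(e.getValue());
            }
        }
        return merged;
    }

    public static void main(String[] args) {
        // The word "hadoop" was chopped, so two reducers each hold part of its document list.
        Map<String, List<String>> reducerA = Map.of("hadoop", List.of("doc1", "doc2"));
        Map<String, List<String>> reducerB = Map.of("hadoop", List.of("doc7"),
                                                    "oracle", List.of("doc3"));
        System.out.println(merge(List.of(reducerA, reducerB)));
    }
}
```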

As another example, Oracle Loader for Hadoop loads data from multiple files to the correct partition of a target table. The load step is faster when there are multiple files for a reduce key, because they enable a higher degree of parallelism than loading from one file for a reduce key.

5.9 Troubleshooting Jobs Running with Perfect Balance

If you get Java "out of heap space" or "GC overhead limit exceeded" errors on the client node while running the Perfect Balance sampler, then increase the client JVM heap size for the job.

Use the JVM -Xmx option. You can specify client JVM options before running the Hadoop job by setting the HADOOP_CLIENT_OPTS variable:

$ export HADOOP_CLIENT_OPTS="-Xmx1024M $HADOOP_CLIENT_OPTS"

Setting HADOOP_CLIENT_OPTS changes the JVM options only on the client node. It does not change JVM options in the map and reduce tasks. See the invindx script for an example of setting this variable.

Setting HADOOP_CLIENT_OPTS is sufficient to increase the heap size for the sampler, regardless of whether oracle.hadoop.balancer.runMode is set to local or distributed. When runMode=local, the sampler runs on the client node, and HADOOP_CLIENT_OPTS sets the heap size on the client node. When runMode=distributed, Perfect Balance automatically sets the heap size for the sampler Hadoop job based on the -Xmx setting you provide in HADOOP_CLIENT_OPTS. Perfect Balance never changes the heap size for the map and reduce tasks of your job, only for its sampler job.
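To confirm that the client JVM actually picked up the larger heap, you could log the maximum heap size from the job driver before invoking the sampler. The sketch below uses only the standard `Runtime` API; the class and method names are hypothetical, and the value the JVM reports can be somewhat lower than the -Xmx setting.

```java
/** Illustrative only: report the maximum heap available to the current (client) JVM. */
final class ClientHeapCheck {

    /** Maximum heap the JVM will attempt to use, in mebibytes. */
    static long maxHeapMiB() {
        return Runtime.getRuntime().maxMemory() / (1024L * 1024L);
    }

    public static void main(String[] args) {
        System.out.println("Client JVM max heap: " + maxHeapMiB() + " MiB");
    }
}
```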

5.10 About the Perfect Balance Examples

The Perfect Balance installation files include a full set of examples that you can run immediately. The InvertedIndex example is a MapReduce application that creates an inverted index on an input set of text files. The inverted index maps words to the location of the words in the text files. The input data is included.

5.10.1 About the Examples in This Chapter

The InvertedIndex example provides the basis for all examples in this chapter. They use the same data set and run the same MapReduce application. The modifications to the InvertedIndex example simply highlight the steps you must perform in running your own applications with Perfect Balance.

If you want to run the examples in this chapter, or use them as the basis for running your own jobs, then make the following changes:

-

If you are modifying the examples to run your own application, then add your application JAR files to HADOOP_CLASSPATH and -libjars.

-

Ensure that the value of mapreduce.input.fileinputformat.inputdir identifies the location of your data.

The invindx/input directory contains the sample data for the InvertedIndex example. To use this data, you must first set it up. See "Extracting the Example Data Set."

-

Replace jdoe with your Hadoop user name.

-

Review the configuration settings and the shell script to ensure that they are appropriate for the job.

-

You can run the browser from your laptop or connect to Oracle Big Data Appliance using a client that supports graphical interfaces, such as VNC.

5.10.2 Extracting the Example Data Set

To run the InvertedIndex examples or any of the examples in this chapter, you must first set up the data files.

To extract the InvertedIndex data files:

-

Log in to a server where Perfect Balance is installed.

-

Change to the examples/invindx subdirectory:

cd /opt/oracle/orabalancer-<version>-h2/examples/invindx

-

Unzip the data and copy it to the HDFS invindx/input directory:

./invindx -setup

For complete instructions for running the InvertedIndex example, see /opt/oracle/orabalancer-<version>-h2/examples/invindx/README.txt.

5.11 Perfect Balance Configuration Property Reference

This section describes the Perfect Balance configuration properties and a few generic Hadoop MapReduce properties that Perfect Balance reads from the job configuration.

See "About Configuring Perfect Balance" for a list of the properties organized into functional categories.

Note:

CDH5 deprecates many MapReduce properties and replaces them with new properties. Perfect Balance continues to work with the old property names, but Oracle recommends that you use the new names. For the new MapReduce property names, see the Cloudera website at:

MapReduce Configuration Properties

| Property | Type, Default Value, Description |
|---|---|
| mapreduce.input.fileinputformat.inputdir | Type: String Default Value: Not defined Description: A comma-separated list of input directories. |
| mapreduce.inputformat.class | Type: String Default Value: Description: The full name of the |
| mapreduce.map.class | Type: String Default Value: Description: The full name of the mapper class. |
| mapreduce.output.fileoutputformat.outputdir | Type: String Default Value: Not defined Description: The job output directory. |
| mapreduce.partitioner.class | Type: String Default Value: Description: The full name of the partitioner class. |
| mapreduce.reduce.class | Type: String Default Value: Description: The full name of the reducer class. |

Job Analyzer Configuration Properties

| Property | Type, Default Value, Description |
|---|---|
| oracle.hadoop.balancer.application_id | Type: String Default Value: Not defined Description: The job identifier of the job you want to analyze with Job Analyzer. This property is a parameter to the Job Analyzer utility in standalone mode on YARN clusters; it does not apply to MRv1 clusters. See "Running Job Analyzer as a Standalone Utility". |
| oracle.hadoop.balancer.tools.writeKeyBytes | Type: Boolean Default Value: Description: Controls whether the counting reducer collects the byte representations of the reduce keys for the Job Analyzer. Set this property to |

Perfect Balance Configuration Properties

| Property | Type, Default Value, Description |
|---|---|
| oracle.hadoop.balancer.choppingStrategy Note that the choppingStrategy property takes precedence over the deprecated property | Type: String Default Value: Description: This property controls the behavior of sampler when it needs to chop a key. The following values are valid: See also the deprecated property: oracle.hadoop.balancer.enableSorting |
| oracle.hadoop.balancer.confidence | Type: Float Default Value: Description: The statistical confidence indicator for the load factor specified by the This property accepts values greater than or equal to 0.5 and less than 1.0 (0.5 <= value < 1.0). A value less than 0.5 resets the property to its default value. Oracle recommends a value greater than or equal to 0.9. Typical values are 0.95 and 0.99. |
| oracle.hadoop.balancer.enableSorting | Type: Boolean Default Value: Description: This property is deprecated. To use the map output key sorting comparator as a total-order partitioning function, set When this property is false, map output keys will be chopped using a hash function. When this property is true, map output keys will be chopped using the map output key sorting comparator as a total-order partitioning function. When this property is true, balancer will preserve a total order over the values of a chopped key. See also: oracle.hadoop.balancer.choppingStrategy |
| oracle.hadoop.balancer.inputFormat.mapred.map.tasks | Type: Integer Default Value: Description: Sets the Hadoop Set this property to a value greater than or equal to one (1). A value less than 1 disables the property. Some input formats, such as You can increase the value for larger data sets, that is, more than a million rows of about 100 bytes per row. However, extremely large values can cause the input format's |
| oracle.hadoop.balancer.inputFormat.mapred.max.split.size | Type: Long Default Value: Description: Sets the Hadoop Set this property to a value greater than or equal to one (1). A value less than 1 disables the property. The optimal split size is a trade-off between obtaining a good sample (smaller splits) and efficient I/O performance (larger splits). Some input formats, such as You can increase the value for larger data sets (tens of terabytes) or if the input format's |
| oracle.hadoop.balancer.keyLoad.minChopBytes | Type: Long Default Value: Description: Controls whether Perfect Balance chops large map output keys into medium keys: |
| oracle.hadoop.balancer.linearKeyLoad.byteWeight | Type: Float Default Value: Description: Weights the number of bytes per key in the linear key load model specified by the |

|

oracle.hadoop.balancer.linearKeyLoad.feedbackDir |

Type: String Default Value: Not defined Description: The path to a directory that contains the Job Analyzer report for a job that it previously analyzed. The sampler reads this report for feedback to use to optimize the current balancing plan. You can set this property to the Job Analyzer report directory of a job that is the same or similar to the current job, so that the feedback is directly applicable. If the feedback directory contains a Job Analyzer report with recommended values for the Perfect Balance linear key load model coefficients, then Perfect Balance automatically reads and uses them. The recommended values take precedence over user-specified values in these configuration parameters: Job Analyzer attempts to recommend good values for these coefficients. However, Perfect Balance reads the load model coefficients from this list of configuration properties under the following circumstances:

|

|

oracle.hadoop.balancer.linearKeyLoad.keyWeight |

Type: Float Default Value: 50.0 Description: Weights the number of medium keys per large key in the linear key load model specified by the |

|

oracle.hadoop.balancer.linearKeyLoad.rowWeight |

Type: Float Default Value: 0.05 Description: Weights the number of rows per key in the linear key load model specified by the |

|
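To make the three linearKeyLoad weights concrete, the following sketch combines them as a plain weighted sum. The exact formula that Perfect Balance uses is not given in this table, so the weighted-sum form, the function name `linear_key_load`, and its parameter names are assumptions. The `key_weight` and `row_weight` defaults are the values listed in the rows above; `byte_weight` has no default here because its value is not shown.

```python
def linear_key_load(num_bytes, num_rows, num_medium_keys,
                    byte_weight, row_weight=0.05, key_weight=50.0):
    """Estimate the load of one key as a weighted sum (assumed form).

    byte_weight -> oracle.hadoop.balancer.linearKeyLoad.byteWeight
    row_weight  -> oracle.hadoop.balancer.linearKeyLoad.rowWeight
    key_weight  -> oracle.hadoop.balancer.linearKeyLoad.keyWeight
    """
    return (byte_weight * num_bytes
            + row_weight * num_rows
            + key_weight * num_medium_keys)


# A key with 1,000 bytes in 100 rows, chopped into 2 medium keys.
# byte_weight=0.0 is chosen only to keep the arithmetic easy to follow.
print(linear_key_load(1000, 100, 2, byte_weight=0.0))
```

With these inputs the row term contributes 0.05 × 100 and the key term contributes 50.0 × 2, which shows how strongly the key weight dominates under the listed values.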

oracle.hadoop.balancer.maxLoadFactor |

Type: Float Default Value: 0.05 Description: The target reducer load factor that you want the balancer's partition plan to achieve. The load factor is the relative deviation from an estimated value. For example, if The values of these two properties determine the sampler's stopping condition. The balancer samples until it can generate a plan that guarantees the specified load factor at the specified confidence level. This guarantee may not hold if the sampler stops early because of other stopping conditions, such as when the number of samples exceeds |

|
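The definition of the load factor as a relative deviation from an estimated value can be illustrated with a short calculation. This is a minimal sketch under stated assumptions: the helper names `load_factor` and `meets_target` are hypothetical, the deviation is taken as an absolute relative difference, and the sketch ignores the statistical confidence level that the balancer also applies.

```python
def load_factor(actual_load, estimated_load):
    """Relative deviation of a reducer's actual load from its estimate."""
    return abs(actual_load - estimated_load) / estimated_load


def meets_target(reducer_loads, estimated_load, max_load_factor=0.05):
    """Check every reducer against the target load factor.

    max_load_factor defaults to 0.05, the value listed for
    oracle.hadoop.balancer.maxLoadFactor.
    """
    return all(load_factor(load, estimated_load) <= max_load_factor
               for load in reducer_loads)


# Loads within 4% of an estimate of 100 satisfy a 0.05 target;
# a reducer that is 10% over the estimate does not.
print(meets_target([100, 104, 97], estimated_load=100))
print(meets_target([100, 110], estimated_load=100))
```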

oracle.hadoop.balancer.maxSamplesPct |

Type: Float Default Value: Description: Limits the number of samples that Perfect Balance can collect to a fraction of the total input records. A value less than zero disables the property (no limit). You may need to increase the value for Hadoop applications with very unbalanced reducer partitions or densely clustered map-output keys. The sampler needs to sample more data to achieve a good partitioning plan in these cases. |

|

oracle.hadoop.balancer.minSplits |

Type: Integer Default Value: Description: Sets the minimum number of splits that the sampler reads. If the total number of splits is less than this value, then the sampler reads all splits. Set this property to a value greater than or equal to one ( |

|

oracle.hadoop.balancer.numThreads |

Type: Integer Default Value: Description: Number of sampler threads. Set this value based on the processor and memory resources available on the node where the job is initiated. A higher number of sampler threads implies higher concurrency in sampling. Set this property to one ( |

|

oracle.hadoop.balancer.report.overwrite |

Type: Boolean Default Value: Description: Controls whether Perfect Balance overwrites files in the location specified by the |

|

oracle.hadoop.balancer.reportPath |

Type: String Default Value: Description: The path where Perfect Balance writes the partition report before the Hadoop job output directory is available, that is, before the MapReduce job finishes running. At the end of the job, Perfect Balance moves the file to |

|

oracle.hadoop.balancer.runMode |

Type: String Default Value: Description: Specifies how to run the Perfect Balance sampler. The following values are valid:

If this property is set to an invalid string, Perfect Balance resets it to |

|

oracle.hadoop.balancer.tmpDir |

Type: String Default Value: Description: The path to a staging directory in the file system of the job output directory (HDFS or local). Perfect Balance creates the directory if it does not exist, and copies the partition report to it for loading into the Hadoop distributed cache. |

|

oracle.hadoop.balancer.useClusterStats |

Type: Boolean Default Value: Description: Enables the sampler to use cluster sampling statistics. These statistics improve the accuracy of sampled estimates, such as the number of records in a map-output key, when the map-output keys are distributed in clusters across input splits, instead of being distributed independently across all input splits. Set this property to |

|

oracle.hadoop.balancer.useMapreduceApi |

Type: Boolean Default Value: Description: Identifies the MapReduce API used in the Hadoop job:

|