2 Administering Oracle Big Data Appliance

This chapter provides information about the software and services installed on Oracle Big Data Appliance.

2.1 Monitoring Multiple Clusters Using Oracle Enterprise Manager

An Oracle Enterprise Manager plug-in enables you to use the same system monitoring tool for Oracle Big Data Appliance as you use for Oracle Exadata Database Machine or any other Oracle Database installation. With the plug-in, you can view the status of the installed software components in tabular or graphic presentations, and start and stop these software services. You can also monitor the health of the network and the rack components.

Oracle Enterprise Manager enables you to monitor all Oracle Big Data Appliance racks on the same InfiniBand fabric. It provides summary views of both the rack hardware and the software layout of the logical clusters.

Note:

Before you start, contact Oracle Support for up-to-date information about Enterprise Manager plug-in functionality.

2.1.1 Using the Enterprise Manager Web Interface

After opening the Oracle Enterprise Manager web interface, logging in, and selecting a target cluster, you can drill down into these primary areas:

- InfiniBand network: Network topology and status for InfiniBand switches and ports. See Figure 2-1.
- Hadoop cluster: Software services for HDFS, MapReduce, and ZooKeeper.
- Oracle Big Data Appliance rack: Hardware status including server hosts, Oracle Integrated Lights Out Manager (Oracle ILOM) servers, power distribution units (PDUs), and the Ethernet switch.

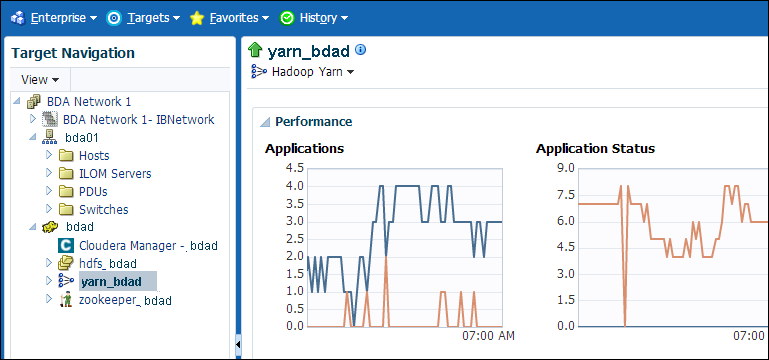

Figure 2-1 shows a small section of the cluster home page.

Figure 2-1 YARN Page in Oracle Enterprise Manager


To monitor Oracle Big Data Appliance using Oracle Enterprise Manager:

- Download and install the plug-in. See Oracle Enterprise Manager System Monitoring Plug-in Installation Guide for Oracle Big Data Appliance.
- Log in to Oracle Enterprise Manager as a privileged user.
- From the Targets menu, choose Big Data Appliance to view the Big Data page. You can see the overall status of the targets already discovered by Oracle Enterprise Manager.
- Select a target cluster to view its detail pages.
- Expand the target navigation tree to display the components. Information is available at all levels.
- Select a component in the tree to display its home page.
- To change the display, choose an item from the drop-down menu at the top left of the main display area.

2.1.2 Using the Enterprise Manager Command-Line Interface

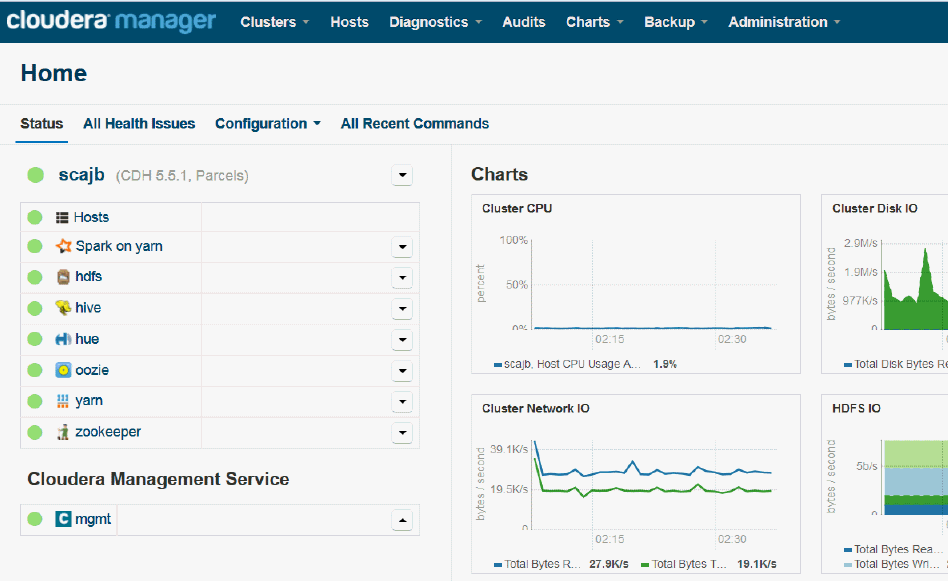

2.2 Managing Operations Using Cloudera Manager

Cloudera Manager is installed on Oracle Big Data Appliance to help you with Cloudera's Distribution including Apache Hadoop (CDH) operations. Cloudera Manager provides a single administrative interface to all Oracle Big Data Appliance servers configured as part of the Hadoop cluster.

Cloudera Manager simplifies the performance of these administrative tasks:

- Monitor jobs and services
- Start and stop services
- Manage security and Kerberos credentials
- Monitor user activity
- Monitor the health of the system
- Monitor performance metrics
- Track hardware use (disk, CPU, and RAM)

Cloudera Manager runs on the ResourceManager node (node03) and is available on port 7180.

To use Cloudera Manager:

- Open a browser and enter a URL like the following:

  http://bda1node03.example.com:7180

  In this example, bda1 is the name of the appliance, node03 is the name of the server, example.com is the domain, and 7180 is the default port number for Cloudera Manager.

- Log in with a user name and password for Cloudera Manager. Only a user with administrative privileges can change the settings. Other Cloudera Manager users can view the status of Oracle Big Data Appliance.

See Also:

Cloudera Manager Monitoring and Diagnostics Guide, or click Help on the Cloudera Manager Support menu.

2.2.1 Monitoring the Status of Oracle Big Data Appliance

In Cloudera Manager, you can choose any of the following pages from the menu bar across the top of the display:

- Home: Provides a graphic overview of activities and links to all services controlled by Cloudera Manager. See Figure 2-2.
- Clusters: Accesses the services on multiple clusters.
- Hosts: Monitors the health, disk usage, load, physical memory, swap space, and other statistics for all servers in the cluster.
- Diagnostics: Accesses events and logs. Cloudera Manager collects historical information about the systems and services. You can search for a particular phrase for a selected server, service, and time period. You can also select the minimum severity level of the logged messages included in the search: TRACE, DEBUG, INFO, WARN, ERROR, or FATAL.
- Audits: Displays the audit history log for a selected time range. You can filter the results by user name, service, or other criteria, and download the log as a CSV file.
- Charts: Enables you to view metrics from the Cloudera Manager time-series data store in a variety of chart types, such as line and bar.
- Backup: Accesses snapshot policies and scheduled replications.
- Administration: Provides a variety of administrative options, including Settings, Alerts, Users, and Kerberos.
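The minimum-severity selection on the Diagnostics page can be pictured with a small sketch. The severity order is the one listed above; the `(severity, text)` message shape and the function name are assumptions made for illustration and do not reflect the Cloudera Manager log format or API.

```python
# Severity levels in ascending order, as listed for the Diagnostics page.
SEVERITIES = ["TRACE", "DEBUG", "INFO", "WARN", "ERROR", "FATAL"]


def filter_by_min_severity(messages, minimum):
    """Return the messages whose severity is at or above `minimum`.

    `messages` is a list of (severity, text) tuples. This shape is
    hypothetical and chosen only to keep the example short.
    """
    threshold = SEVERITIES.index(minimum)
    return [(sev, text) for sev, text in messages
            if SEVERITIES.index(sev) >= threshold]


sample = [
    ("DEBUG", "heartbeat sent"),
    ("WARN", "disk usage high"),
    ("ERROR", "service stopped"),
]
selected = filter_by_min_severity(sample, "WARN")
```

Selecting WARN as the minimum keeps the WARN and ERROR entries and drops the DEBUG entry.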

Figure 2-2 shows the Cloudera Manager home page.

2.2.2 Performing Administrative Tasks

As a Cloudera Manager administrator, you can change various properties for monitoring the health and use of Oracle Big Data Appliance, add users, and set up Kerberos security.

To access Cloudera Manager Administration:

- Log in to Cloudera Manager with administrative privileges.
- Click Administration, and select a task from the menu.

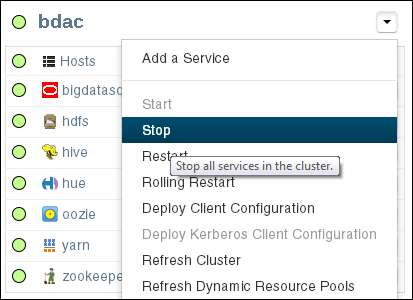

2.2.3 Managing CDH Services With Cloudera Manager

Cloudera Manager provides the interface for managing these services:

- HDFS
- Hive
- Hue
- Oozie
- YARN
- ZooKeeper

You can use Cloudera Manager to change the configuration of these services and to stop and restart them. Additional services are also available, which require configuration before you can use them. See "Unconfigured Software."

Note:

Manual edits to Linux service scripts or Hadoop configuration files do not affect these services. You must manage and configure them using Cloudera Manager.

2.3 Using Hadoop Monitoring Utilities

You also have the option of using the native Hadoop utilities. These utilities are read-only and do not require authentication.

Cloudera Manager provides an easy way to obtain the correct URLs for these utilities. On the YARN service page, expand the Web UI submenu.

2.3.1 Monitoring MapReduce Jobs

You can monitor MapReduce jobs using the resource manager interface.

To monitor MapReduce jobs:

- Open a browser and enter a URL like the following:

  http://bda1node03.example.com:8088

  In this example, bda1 is the name of the rack, node03 is the name of the server where the YARN resource manager runs, and 8088 is the default port number for the user interface.

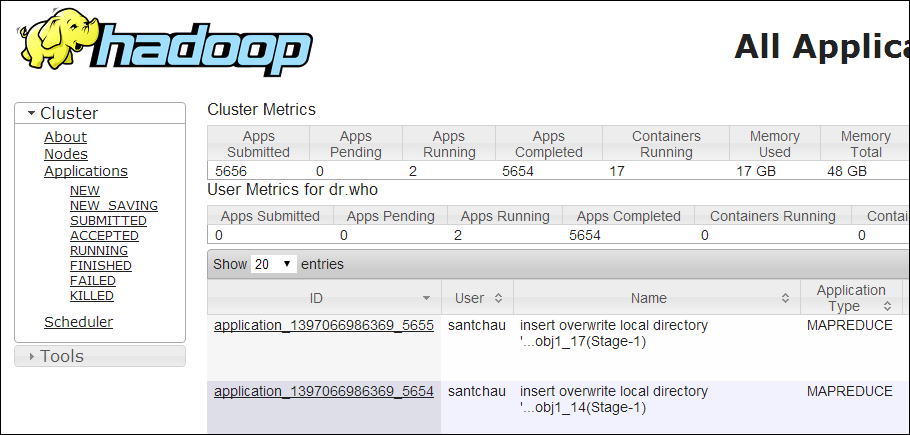

Figure 2-3 shows the resource manager interface.

Figure 2-3 YARN Resource Manager Interface


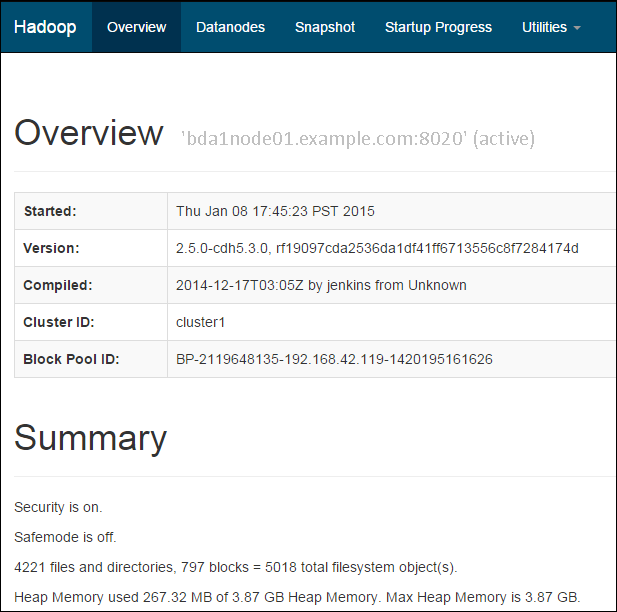

2.3.2 Monitoring the Health of HDFS

You can monitor the health of the Hadoop file system by using the DFS health utility on the first two nodes of a cluster.

To monitor HDFS:

- Open a browser and enter a URL like the following:

  http://bda1node01.example.com:50070

  In this example, bda1 is the name of the rack, node01 is the name of the server where the dfshealth utility runs, and 50070 is the default port number for the user interface.

Figure 2-4 shows the DFS health utility interface.

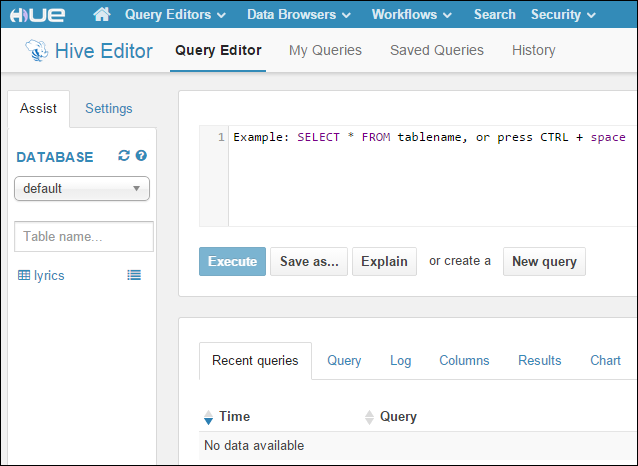
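The monitoring URLs in this chapter share one pattern: the rack name and node name form the host name, followed by the domain and the default port. The sketch below assembles them for a given rack. The function and dictionary names are hypothetical, the node assignments are the defaults described in this chapter, and plain `http` is assumed; your cluster may place the services elsewhere.

```python
def utility_url(rack, node, domain, port):
    """Build a URL such as http://bda1node03.example.com:8088."""
    return f"http://{rack}{node}.{domain}:{port}"


# Default node and port for each interface, as described in this chapter.
DEFAULT_ENDPOINTS = {
    "Cloudera Manager": ("node03", 7180),
    "YARN resource manager": ("node03", 8088),
    "DFS health utility": ("node01", 50070),
}


def monitoring_urls(rack, domain):
    """Return a name-to-URL mapping for one rack (illustrative only)."""
    return {
        name: utility_url(rack, node, domain, port)
        for name, (node, port) in DEFAULT_ENDPOINTS.items()
    }


urls = monitoring_urls("bda1", "example.com")
```

In practice, the Web UI submenu in Cloudera Manager remains the reliable source for the correct URLs, because service locations vary with the cluster configuration.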

2.4 Using Cloudera Hue to Interact With Hadoop

Hue runs in a browser and provides an easy-to-use interface to several applications to support interaction with Hadoop and HDFS. You can use Hue to perform any of the following tasks:

- Query Hive data stores
- Create, load, and delete Hive tables
- Work with HDFS files and directories
- Create, submit, and monitor MapReduce jobs
- Monitor MapReduce jobs
- Create, edit, and submit workflows using the Oozie dashboard
- Manage users and groups

Hue is automatically installed and configured on Oracle Big Data Appliance. It runs on port 8888 of the ResourceManager node. See the tables in About the CDH Software Services for Hue’s location within different cluster configurations.

To use Hue:

- Log in to Cloudera Manager and click the hue service on the Home page.

- On the hue page under Quick Links, click Hue Web UI.

- Bookmark the Hue URL, so that you can open Hue directly in your browser. The following URL is an example:

  http://bda1node03.example.com:8888

- Log in with your Hue credentials.

  If Hue accounts have not been created yet, log in to the default Hue administrator account by using the following credentials:

  - Username: admin
  - Password: cm-admin-password

  where cm-admin-password is the password specified for the Cloudera Manager admin user when the cluster was activated. You can then create other user and administrator accounts.

Figure 2-5 shows the Hive Query Editor.

2.5 About the Oracle Big Data Appliance Software

The following sections identify the software installed on Oracle Big Data Appliance. Some components operate with Oracle Database 11.2.0.2 and later releases.


2.5.1 Software Components

These software components are installed on all servers in the cluster. Oracle Linux, required drivers, firmware, and hardware verification utilities are factory installed. All other software is installed on site. The optional software components may not be configured in your installation.

Note:

You do not need to install additional software on Oracle Big Data Appliance. Doing so may result in a loss of warranty and support. See the Oracle Big Data Appliance Owner's Guide.

Base image software:

- Oracle Linux 6.8 with Oracle Unbreakable Enterprise Kernel version 2 (UEK2). Oracle Linux 5 Big Data Appliance upgrades stay at 5.11.
- Oracle R Distribution 3.2.0
- MySQL Enterprise Server – Advanced Edition version 5.6
- Puppet, firmware, Oracle Big Data Appliance utilities
- Oracle InfiniBand software

Mammoth installation:

- Cloudera's Distribution including Apache Hadoop Release 5.8, including:
  - Apache Hive
  - Apache HBase
  - Apache Spark
- Cloudera Manager Release 5.8.1, including Cloudera Navigator
- Oracle Big Data SQL 3.0.1 (optional)
- Oracle NoSQL Database Community Edition or Enterprise Edition 4.0.9 (optional)
- Oracle Big Data Connectors 4.6 (optional)
- Oracle Big Data Discovery 1.2.2 or 1.3 (optional)

See Also:

Oracle Big Data Appliance Owner's Guide for information about the Mammoth utility

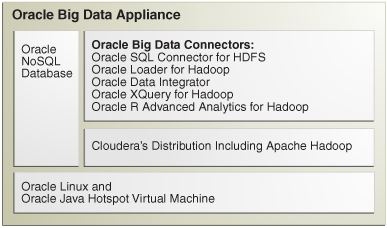

Figure 2-6 shows the relationships among the major components.

Figure 2-6 Major Software Components of Oracle Big Data Appliance


2.5.2 Unconfigured Software

Your Oracle Big Data Appliance license includes all components in Cloudera Enterprise Data Hub Edition. All CDH components are installed automatically by the Mammoth utility. Do not download them from the Cloudera website.

However, you must use Cloudera Manager to add some services before you can use them.

To add a service:

- Log in to Cloudera Manager as the admin user.
- On the Home page, expand the cluster menu in the left panel and choose Add a Service to open the Add Service wizard. The first page lists the services you can add.
- Follow the steps of the wizard.

See Also:

- For a list of key CDH components:

  http://www.cloudera.com/content/www/en-us/products/apache-hadoop/key-cdh-components.html

- CDH5 Installation and Configuration Guide for configuration procedures

2.5.3 Allocating Resources Among Services

You can allocate resources to each service (HDFS, YARN, Oracle Big Data SQL, Hive, and so forth) as a percentage of the total resource pool. Cloudera Manager automatically calculates the recommended resource management settings based on these percentages. The static service pools isolate services on the cluster, so that a high load on one service has a limited impact on the other services.

To allocate resources among services:

- Log in as admin to Cloudera Manager.
- Open the Clusters menu at the top of the page, then select Static Service Pools under Resource Management.
- Select Configuration.
- Follow the steps of the wizard, or click Change Settings Directly to edit the current settings.
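As a rough illustration of percentage-based allocation, the sketch below splits a resource total among services in proportion to their assigned percentages. It is not the calculation that Cloudera Manager performs, which this chapter does not describe; the function name, the 256 GB total, and the percentages are all assumed values.

```python
def split_by_percentage(total, percentages):
    """Divide `total` among services according to whole-number percentages.

    Raises ValueError unless the percentages add up to exactly 100,
    so that the whole resource pool is accounted for.
    """
    if sum(percentages.values()) != 100:
        raise ValueError("percentages must add up to 100")
    return {service: total * pct / 100
            for service, pct in percentages.items()}


# Hypothetical example: 256 GB of memory shared by four services.
shares = split_by_percentage(
    256,
    {"HDFS": 20, "YARN": 50, "Oracle Big Data SQL": 20, "Hive": 10},
)
```

With these assumed figures, YARN receives half of the pool (128 GB), and the remaining services share the other half according to their percentages.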

2.6 About the CDH Software Services

All services are installed on all nodes in a CDH cluster, but individual services run only on designated nodes. There are slight variations in the location of the services depending on the configuration of the cluster.

This section describes the services in a default YARN configuration.


2.6.1 Where Do the Services Run on a Three-Node, Development Cluster?

Oracle Big Data Appliance now enables the use of three-node clusters for development purposes.

Caution:

Three-node clusters are generally not suitable for production environments because all of the nodes are master nodes. This puts constraints on high availability. The minimum recommended cluster size for a production environment is five nodes.

Table 2-1 Service Locations for a Three-Node Development Cluster

| Node1 | Node2 | Node3 |
|---|---|---|
| NameNode | NameNode/Failover | - |
| Failover Controller | Failover Controller | - |
| DataNode | DataNode | DataNode |
| NodeManager | NodeManager | NodeManager |
| JournalNode | JournalNode | JournalNode |
| Sentry | HttpFS | Cloudera Manager and CM roles |
| - | MySQL Backup | MySQL Primary |
| ResourceManager | Hive | ResourceManager |
| - | Hive Metastore | JobHistory |
| - | ODI | Spark History |
| - | Oozie | - |
| - | Hue | - |
| - | WebHCat | - |
| ZooKeeper | ZooKeeper | ZooKeeper |
| Active Navigator Key Trustee Server (if HDFS Transparent Encryption is enabled) | Passive Navigator Key Trustee Server (if HDFS Transparent Encryption is enabled) | - |
| Kerberos Master KDC (Only if MIT Kerberos is enabled and on-BDA KDCs are being used.) | Kerberos Slave KDC (Only if MIT Kerberos is enabled and on-BDA KDCs are being used.) | - |

2.6.2 Where Do the Services Run on a Single-Rack CDH Cluster?

Table 2-2 identifies the services in CDH clusters configured within a single rack, including starter racks and clusters with five or more nodes. Node01 is the first server in the cluster (server 1, 7, or 10), and nodenn is the last server in the cluster (server 6, 9, 12, or 18). Multirack clusters have different services layouts, as do three-node clusters for development purposes. Both are described separately in this chapter.

Table 2-2 Service Locations for One or More CDH Clusters in a Single Rack

| Node01 | Node02 | Node03 | Node04 | Node05 to nn |
|---|---|---|---|---|
| Balancer | - | Cloudera Manager Server | - | - |
| Cloudera Manager Agent | Cloudera Manager Agent | Cloudera Manager Agent | Cloudera Manager Agent | Cloudera Manager Agent |
| DataNode | DataNode | DataNode | DataNode | DataNode |
| Failover Controller | Failover Controller | - | Hive, Hue, Oozie | - |
| JournalNode | JournalNode | JournalNode | - | - |
| - | MySQL Backup | MySQL Primary | - | - |
| NameNode | NameNode | Navigator Audit Server and Navigator Metadata Server | - | - |
| NodeManager (in clusters of eight nodes or less) | NodeManager (in clusters of eight nodes or less) | NodeManager | NodeManager | NodeManager |
| - | - | SparkHistoryServer | Oracle Data Integrator Agent | - |
| - | - | ResourceManager | ResourceManager | - |
| ZooKeeper | ZooKeeper | ZooKeeper | - | - |
| Big Data SQL (if enabled) | Big Data SQL (if enabled) | Big Data SQL (if enabled) | Big Data SQL (if enabled) | Big Data SQL (if enabled) |
| Kerberos KDC (if MIT Kerberos is enabled and on-BDA KDCs are being used) | Kerberos KDC (if MIT Kerberos is enabled and on-BDA KDCs are being used) | JobHistory | - | - |
| Sentry Server (if enabled) | - | - | - | - |
| Active Navigator Key Trustee Server (if HDFS Transparent Encryption is enabled) | Passive Navigator Key Trustee Server (if HDFS Transparent Encryption is enabled) | - | - | - |
| - | HttpFS | - | - | - |

Note:

If Oracle Big Data Discovery is installed, the NodeManager and DataNode on Node05 of the primary cluster are decommissioned.

If Oozie high availability is enabled, then Oozie servers are hosted on Node04 and another node (preferably a ResourceNode) selected by the customer.

2.6.3 Where Do the Services Run on a Multirack CDH Cluster?

When multiple racks are configured as a single CDH cluster, some critical services are installed on the second rack. There can be variations in the distribution of services between the first and second racks in different multirack clusters. The two scenarios that account for these differences are:

- The cluster spanned multiple racks in its original configuration.

  The resulting service locations across the first and second rack are described in Table 2-3 and Table 2-4. In this case, the JournalNode, MySQL Primary, ResourceManager, and ZooKeeper are installed on node 2 of the first rack.

- The cluster was single-rack in the original configuration and was extended later.

  The resulting service locations across the first and second rack are described in Table 2-5 and Table 2-6. In this case, the JournalNode, MySQL Primary, ResourceManager, and ZooKeeper are installed on node 3 of the first rack.

Note:

If Oracle Big Data Discovery is installed, the NodeManager and DataNode on Node05 in the first rack of the primary cluster are decommissioned.

There is one variant that is determined specifically by cluster size: for clusters of eight nodes or less, nodes that run NameNode also run NodeManager. This is not true for clusters larger than eight nodes.

Table 2-3 First Rack Service Locations (When the Cluster Started as Multirack Cluster)

| Node01 | Node02 | Node03 | Node04 | Node05 to nn(1) |
|---|---|---|---|---|
| Cloudera Manager Agent | Cloudera Manager Agent | Cloudera Manager Agent | Cloudera Manager Agent | Cloudera Manager Agent |
| - | Cloudera Manager Server | - | - | - |
| DataNode | DataNode | DataNode | DataNode | DataNode |
| Failover Controller | - | - | - | - |
| JournalNode | JournalNode | Navigator Audit Server and Navigator Metadata Server | - | - |
| NameNode | MySQL Primary | SparkHistoryServer | - | - |
| NodeManager (in clusters of eight nodes or less) | NodeManager | NodeManager | NodeManager | NodeManager |
| - | ResourceManager | - | - | - |
| ZooKeeper | ZooKeeper | - | - | - |
| Kerberos KDC (Only if MIT Kerberos is enabled and on-BDA KDCs are being used.) | Kerberos KDC (Only if MIT Kerberos is enabled and on-BDA KDCs are being used.) | - | - | - |
| Sentry Server (Only if Sentry is enabled.) | - | - | - | - |
| Big Data SQL (if enabled) | Big Data SQL (if enabled) | Big Data SQL (if enabled) | Big Data SQL (if enabled) | Big Data SQL (if enabled) |
| Active Navigator Key Trustee Server (if HDFS Transparent Encryption is enabled) | - | - | - | - |

Footnote 1 nn includes the servers in additional racks.

Table 2-4 shows the service locations in the second rack of a cluster that was originally configured to span multiple racks.

Table 2-4 Second Rack Service Locations (When the Cluster Started as Multirack Cluster)

| Node01 | Node02 | Node03 | Node04 | Node05 to nn |
|---|---|---|---|---|
| Balancer | - | - | - | - |
| Cloudera Manager Agent | Cloudera Manager Agent | Cloudera Manager Agent | Cloudera Manager Agent | Cloudera Manager Agent |
| DataNode | DataNode | DataNode | DataNode | DataNode |
| Failover Controller | - | - | - | - |
| JournalNode | Hive, Hue, Oozie | - | - | - |
| MySQL Backup | - | - | - | - |
| NameNode | - | - | - | - |
| NodeManager (in clusters of eight nodes or less) | NodeManager | NodeManager | NodeManager | NodeManager |
| HttpFS | Oracle Data Integrator Agent | - | - | - |
| - | ResourceManager | - | - | - |
| ZooKeeper | - | - | - | - |
| Big Data SQL (if enabled) | Big Data SQL (if enabled) | Big Data SQL (if enabled) | Big Data SQL (if enabled) | Big Data SQL (if enabled) |
| Passive Navigator Key Trustee Server (if HDFS Transparent Encryption is enabled) | - | - | - | - |

Table 2-5 shows the service locations in the first rack of a cluster that was originally configured as a single-rack cluster and subsequently extended.

Table 2-5 First Rack Service Locations (When a Single-Rack Cluster is Extended)

| Node01 | Node02 | Node03 | Node04 | Node05 to nn(2) |
|---|---|---|---|---|
| Cloudera Manager Agent | Cloudera Manager Agent | Cloudera Manager Agent | Cloudera Manager Agent | Cloudera Manager Agent |
| - | - | Cloudera Manager Server | - | - |
| DataNode | DataNode | DataNode | DataNode | DataNode |
| Failover Controller | - | Navigator Audit Server and Navigator Metadata Server | - | - |
| JournalNode | - | JournalNode | - | - |
| NameNode | - | MySQL Primary | - | - |
| NodeManager (in clusters of eight nodes or less) | NodeManager | NodeManager | NodeManager | NodeManager |
| - | - | ResourceManager | - | - |
| ZooKeeper | - | ZooKeeper | - | - |
| Kerberos KDC (Only if MIT Kerberos is enabled and on-BDA KDCs are being used.) | Kerberos KDC (Only if MIT Kerberos is enabled and on-BDA KDCs are being used.) | SparkHistoryServer | - | - |
| Sentry Server (Only if Sentry is enabled.) | - | - | - | - |
| Big Data SQL (if enabled) | Big Data SQL (if enabled) | Big Data SQL (if enabled) | Big Data SQL (if enabled) | Big Data SQL (if enabled) |
| Active Navigator Key Trustee Server (if HDFS Transparent Encryption is enabled) | - | - | - | - |

Footnote 2 nn includes the servers in additional racks.

Table 2-6 shows the service locations in the second rack of a cluster originally configured as a single-rack cluster and subsequently extended.

Table 2-6 Second Rack Service Locations (When a Single-Rack Cluster is Extended)

| Node01 | Node02 | Node03 | Node04 | Node05 to nn |
|---|---|---|---|---|
| Balancer | - | - | - | - |
| Cloudera Manager Agent | Cloudera Manager Agent | Cloudera Manager Agent | Cloudera Manager Agent | Cloudera Manager Agent |
| DataNode | DataNode | DataNode | DataNode | DataNode |
| Failover Controller | - | - | - | - |
| JournalNode | Hive, Hue, Oozie, Solr | - | - | - |
| MySQL Backup | - | - | - | - |
| NameNode | - | - | - | - |
| NodeManager (in clusters of eight nodes or less) | NodeManager | NodeManager | NodeManager | NodeManager |
| HttpFS | Oracle Data Integrator Agent | - | - | - |
| - | ResourceManager | - | - | - |
| ZooKeeper | - | - | - | - |
| Big Data SQL (if enabled) | Big Data SQL (if enabled) | Big Data SQL (if enabled) | Big Data SQL (if enabled) | Big Data SQL (if enabled) |
| Passive Navigator Key Trustee Server (if HDFS Transparent Encryption is enabled) | - | - | - | - |

Note:

When expanding a cluster from one to two racks, Mammoth moves all critical services from nodes 2 and 4 of the first rack to nodes 1 and 2 of the second rack. Nodes 2 and 4 of the first rack become noncritical nodes.

2.6.4 About MapReduce

Yet Another Resource Negotiator (YARN) is the version of MapReduce that runs on Oracle Big Data Appliance, beginning with version 3.0. MapReduce applications developed using MapReduce 1 (MRv1) may require recompilation to run under YARN.

The ResourceManager performs all resource management tasks. An MRAppMaster performs the job management tasks. Each job has its own MRAppMaster. The NodeManager has containers that can run a map task, a reduce task, or an MRAppMaster. The NodeManager can dynamically allocate containers using the available memory. This architecture results in improved scalability and better use of the cluster than MRv1.

YARN also manages resources for Spark and Impala.

See Also:

"Running Existing Applications on Hadoop 2 YARN" at

http://hortonworks.com/blog/running-existing-applications-on-hadoop-2-yarn/

2.6.5 Automatic Failover of the NameNode

The NameNode is the most critical process because it keeps track of the location of all data. Without a healthy NameNode, the entire cluster fails. Apache Hadoop v0.20.2 and earlier are vulnerable to failure because they have a single NameNode.

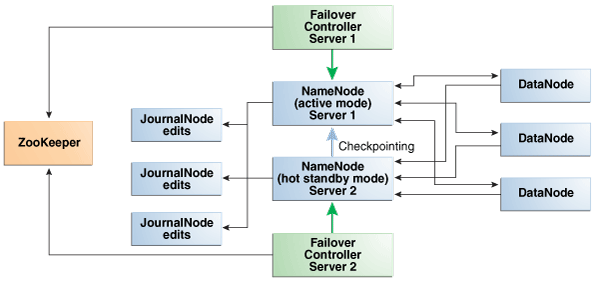

Cloudera's Distribution including Apache Hadoop Version 5 (CDH5) reduces this vulnerability by maintaining redundant NameNodes. The data is replicated during normal operation as follows:

- CDH maintains redundant NameNodes on the first two nodes of a cluster. One of the NameNodes is in active mode, and the other NameNode is in hot standby mode. If the active NameNode fails, then the role of active NameNode automatically fails over to the standby NameNode.
- The NameNode data is written to a mirrored partition so that the loss of a single disk can be tolerated. This mirroring is done at the factory as part of the operating system installation.
- The active NameNode records all changes to the file system metadata in at least two JournalNode processes, which the standby NameNode reads. There are three JournalNodes, which run on the first three nodes of each cluster.
- The changes recorded in the journals are periodically consolidated into a single fsimage file in a process called checkpointing.
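The "at least two of three JournalNodes" rule is a majority quorum. The following minimal sketch shows the check; the function name is hypothetical, and generalizing from the three JournalNodes described above to an arbitrary count is an assumption made for illustration.

```python
def is_edit_durable(acks, journal_nodes=3):
    """Return True when a majority of JournalNodes acknowledged an edit.

    With the three JournalNodes described above, the majority is two.
    """
    majority = journal_nodes // 2 + 1
    return acks >= majority


one_ack = is_edit_durable(1)   # a single JournalNode is not enough
two_acks = is_edit_durable(2)  # two of three form a majority
```

Because two acknowledgments suffice, the cluster can keep recording metadata changes while one of the three JournalNodes is unavailable.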

On Oracle Big Data Appliance, the default log level of the NameNode is DEBUG, to support the Oracle Audit Vault and Database Firewall plugin. If this option is not configured, then you can reset the log level to INFO.

Note:

Oracle Big Data Appliance 2.0 and later releases do not support the use of an external NFS filer for backups and do not use NameNode federation.

Figure 2-7 shows the relationships among the processes that support automatic failover of the NameNode.

Figure 2-7 Automatic Failover of the NameNode on Oracle Big Data Appliance


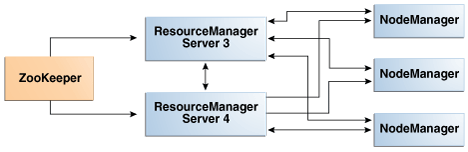

2.6.6 Automatic Failover of the ResourceManager

The ResourceManager allocates resources for application tasks and application masters across the cluster. Like the NameNode, the ResourceManager is a critical point of failure for the cluster. If all ResourceManagers fail, then all jobs stop running. Oracle Big Data Appliance supports ResourceManager High Availability in Cloudera 5 to reduce this vulnerability.

CDH maintains redundant ResourceManager services on node03 and node04. One of the services is in active mode, and the other service is in hot standby mode. If the active service fails, then the role of active ResourceManager automatically fails over to the standby service. No failover controllers are required.

Figure 2-8 shows the relationships among the processes that support automatic failover of the ResourceManager.

Figure 2-8 Automatic Failover of the ResourceManager on Oracle Big Data Appliance


2.6.7 Map and Reduce Resource Allocation

Oracle Big Data Appliance dynamically allocates memory to YARN. The allocation depends upon the total memory on the node and whether the node is one of the four critical nodes.

If you add memory, update the NodeManager container memory by increasing it by 80% of the memory added. Leave the remaining 20% for overhead.
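The 80% guideline is simple arithmetic, sketched here with a hypothetical helper. The starting value and the amount of added memory are assumed figures, not defaults of the appliance.

```python
def updated_container_memory_gb(current_gb, added_gb):
    """Raise the NodeManager container memory by 80% of the added memory.

    The remaining 20% of the added memory is left for overhead.
    """
    return current_gb + 0.8 * added_gb


# Hypothetical example: a node with a 100 GB container setting gains 64 GB of RAM.
new_setting = updated_container_memory_gb(100, 64)
increase = new_setting - 100
```

In this example the container memory grows by 51.2 GB, and the other 12.8 GB of the added memory stays available for overhead.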

2.7 Effects of Hardware on Software Availability

The effects of a server failure vary depending on the server's function within the CDH cluster. Oracle Big Data Appliance servers are more robust than commodity hardware, so you should experience fewer hardware failures. This section highlights the most important services that run on the various servers of the primary rack. For a full list, see "Where Do the Services Run on a Single-Rack CDH Cluster?"

Note:

In a multirack cluster, some critical services run on the first server of the second rack. See "Where Do the Services Run on a Multirack CDH Cluster?"

2.7.1 Logical Disk Layout

Each server has 12 disks. Table 2-7 describes how the disks are partitioned.

The operating system is installed on disks 1 and 2. These two disks are mirrored. They include the Linux operating system, all installed software, NameNode data, and MySQL Database data. The NameNode and MySQL Database data are replicated on two servers for a total of four copies. As shown in the table, when disks 1 and 2 are 4 TB drives, each includes a 3440 GB HDFS data partition. When disks 1 and 2 are 8 TB drives, each includes a 7314 GB HDFS data partition.

Drives 3 through 12 each contain a single HDFS or Oracle NoSQL Database data partition.

Table 2-7 Oracle Big Data Appliance Server Disk Partitioning

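Disks 1 and 2 (OS), 8 TB drives:

| Number | Start | End | Size | File system | Name | Flags |
|---|---|---|---|---|---|---|
| 1 | 1049kB | 500MB | 499MB | ext4 | primary | boot |
| 2 | 500MB | 501GB | 500GB | | primary | raid |
| 3 | 501GB | 550GB | 50.0GB | linux-swap(v1) | primary | |
| 4 | 550GB | 7864GB | 7314GB | ext4 | primary | |

Disks 3 – 12 (Data), 8 TB drives:

| Number | Start | End | Size | File system | Name | Flags |
|---|---|---|---|---|---|---|
| 1 | 1049kB | 7864GB | 7864GB | ext4 | primary | |

Disks 1 and 2 (OS), 4 TB drives:

| Number | Start | End | Size | File system | Name | Flags |
|---|---|---|---|---|---|---|
| 1 | 1049kB | 500MB | 499MB | ext4 | primary | boot |
| 2 | 500MB | 501GB | 500GB | | primary | raid |
| 3 | 501GB | 560GB | 59.5GB | linux-swap(v1) | primary | |
| 4 | 560GB | 4000GB | 3440GB | ext4 | primary | |

Disks 3 – 12 (Data), 4 TB drives:

| Number | Start | End | Size | File system | Name | Flags |
|---|---|---|---|---|---|---|
| 1 | 1049kB | 4000GB | 4000GB | ext4 | primary | |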
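The partition sizes in Table 2-7 give a quick estimate of the raw data space on one server. The sketch below adds the data partitions on the two operating system disks to the ten data disks. It assumes that disks 3 through 12 are all used for HDFS rather than Oracle NoSQL Database, and it ignores file system overhead and HDFS replication; the names are hypothetical.

```python
# Data partition sizes in GB, taken from Table 2-7.
DATA_PARTITION_GB = {
    "8TB": {"os_disk": 7314, "data_disk": 7864},
    "4TB": {"os_disk": 3440, "data_disk": 4000},
}
OS_DISKS = 2     # disks 1 and 2
DATA_DISKS = 10  # disks 3 through 12


def raw_data_capacity_gb(drive_size):
    """Sum the data partitions on all 12 disks of one server."""
    sizes = DATA_PARTITION_GB[drive_size]
    return OS_DISKS * sizes["os_disk"] + DATA_DISKS * sizes["data_disk"]


capacity_8tb = raw_data_capacity_gb("8TB")  # 2 * 7314 + 10 * 7864
capacity_4tb = raw_data_capacity_gb("4TB")  # 2 * 3440 + 10 * 4000
```

Under these assumptions a server with 8 TB drives offers 93268 GB of raw data partitions, and a server with 4 TB drives offers 46880 GB.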

2.7.2 Critical and Noncritical CDH Nodes

Critical nodes are required for the cluster to operate normally and provide all services to users. In contrast, the cluster continues to operate with no loss of service when a noncritical node fails.

On single-rack clusters, the critical services are installed initially on the first four nodes of the cluster. The remaining nodes (node05 up to node18) only run noncritical services. If a hardware failure occurs on one of the critical nodes, then the services can be moved to another, noncritical server. For example, if node02 fails, then you might move its critical services to node05. Table 2-2 identifies the initial location of services for clusters that are configured on a single rack.

In a multirack cluster, some critical services run on the first server of the second rack. See "Where Do the Services Run on a Multirack CDH Cluster?"

2.7.2.1 High Availability or Single Points of Failure?

Some services have high availability and automatic failover. Other services have a single point of failure. The following list summarizes the critical services:

- NameNodes: High availability with automatic failover
- ResourceManagers: High availability with automatic failover
- MySQL Database: Primary and backup databases are configured with replication of the primary database to the backup database. There is no automatic failover. If the primary database fails, the functionality of the cluster is diminished, but no data is lost.
- Cloudera Manager: The Cloudera Manager server runs on one node. If it fails, then Cloudera Manager functionality is unavailable.
- Oozie server, Hive server, Hue server, and Oracle Data Integrator agent: These services have no redundancy. If the node fails, then the services are unavailable.

2.7.2.2 Where Do the Critical Services Run?

Table 2-8 identifies where the critical services run in a CDH cluster. These four nodes are described in more detail in the topics that follow.

Table 2-8 Critical Service Locations on a Single Rack

| Node Name | Critical Functions |
|---|---|
| First NameNode | Balancer, Failover Controller, JournalNode, NameNode, Puppet Master, ZooKeeper |
| Second NameNode | Failover Controller, JournalNode, MySQL Backup Database, NameNode, ZooKeeper |
| First ResourceManager Node | Cloudera Manager Server, JobHistory, JournalNode, MySQL Primary Database, ResourceManager, ZooKeeper |
| Second ResourceManager Node | Hive, Hue, Oozie, Solr, Oracle Data Integrator Agent, ResourceManager |

In a single-rack cluster, the four critical nodes are created initially on the first four nodes. See "Where Do the Services Run on a Single-Rack CDH Cluster?"

In a multirack cluster, the Second NameNode and the Second ResourceManager nodes are moved to the first two nodes of the second rack. See "Where Do the Services Run on a Multirack CDH Cluster?".
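The placement in Table 2-8 and the availability list in "High Availability or Single Points of Failure?" can be combined to estimate what the loss of one critical node means. The following is a minimal sketch, not an Oracle tool: the dictionary names, the category labels, and the `failure_impact` function are illustrative assumptions, and any service that the list does not classify is reported as unclassified rather than guessed.

```python
# Critical service placement, copied from Table 2-8.
CRITICAL_SERVICES = {
    "First NameNode": [
        "Balancer", "Failover Controller", "JournalNode",
        "NameNode", "Puppet Master", "ZooKeeper",
    ],
    "Second NameNode": [
        "Failover Controller", "JournalNode", "MySQL Backup Database",
        "NameNode", "ZooKeeper",
    ],
    "First ResourceManager Node": [
        "Cloudera Manager Server", "JobHistory", "JournalNode",
        "MySQL Primary Database", "ResourceManager", "ZooKeeper",
    ],
    "Second ResourceManager Node": [
        "Hive", "Hue", "Oozie", "Solr",
        "Oracle Data Integrator Agent", "ResourceManager",
    ],
}

# Availability classes taken from the summary list of critical services.
AUTOMATIC_FAILOVER = {"NameNode", "ResourceManager"}
NO_AUTOMATIC_FAILOVER = {"MySQL Primary Database"}
SINGLE_POINT_OF_FAILURE = {
    "Cloudera Manager Server", "Oozie", "Hive", "Hue",
    "Oracle Data Integrator Agent",
}


def failure_impact(node_role):
    """Group the services on a failed critical node by documented behavior."""
    impact = {
        "fails_over": [],
        "no_automatic_failover": [],
        "unavailable": [],
        "unclassified": [],  # not covered by the summary list
    }
    for service in CRITICAL_SERVICES[node_role]:
        if service in AUTOMATIC_FAILOVER:
            impact["fails_over"].append(service)
        elif service in NO_AUTOMATIC_FAILOVER:
            impact["no_automatic_failover"].append(service)
        elif service in SINGLE_POINT_OF_FAILURE:
            impact["unavailable"].append(service)
        else:
            impact["unclassified"].append(service)
    return impact
```

For example, `failure_impact("Second ResourceManager Node")` lists Hive, Hue, Oozie, and the Oracle Data Integrator agent as unavailable, which matches the description of that node later in this chapter.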

2.7.3 First NameNode Node

If the first NameNode fails or goes offline (such as a restart), then the second NameNode automatically takes over to maintain the normal activities of the cluster.

Alternatively, if the second NameNode is already active, it continues without a backup. With only one NameNode, the cluster is vulnerable to failure. The cluster has lost the redundancy needed for automatic failover.

The puppet master also runs on this node. Because the Mammoth utility uses Puppet, you cannot install or reinstall the software while this node is down. This matters if, for example, you must replace a disk drive elsewhere in the rack.

2.7.4 Second NameNode Node

If the second NameNode fails, then the function of the NameNode either fails over to the first NameNode (node01) or continues there without a backup. However, the cluster has lost the redundancy needed for automatic failover if the first NameNode also fails.

The MySQL backup database also runs on this node. MySQL Database continues to run, although there is no backup of the master database.
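The failover behavior described for the two NameNodes (and, later, for the two ResourceManagers) can be pictured as a small state model. The sketch below is a toy illustration only, assuming a hypothetical `HAPair` class. It does not reflect how HDFS or YARN implement failover internally.

```python
class HAPair:
    """Toy model of two redundant service instances with automatic failover."""

    def __init__(self, first, second):
        self.healthy = {first, second}
        self.active = first

    def fail(self, instance):
        """Mark an instance as failed and fail over if it was the active one."""
        self.healthy.discard(instance)
        if instance == self.active:
            # The surviving instance, if any, takes over automatically.
            self.active = next(iter(self.healthy), None)

    @property
    def has_backup(self):
        """True only while a second healthy instance can still take over."""
        return self.active is not None and len(self.healthy) > 1


pair = HAPair("first NameNode", "second NameNode")
pair.fail("first NameNode")
print(pair.active, pair.has_backup)
```

After either instance fails, the service keeps running on the survivor, but `has_backup` is false. This is the state that the text describes as having lost the redundancy needed for automatic failover.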

2.7.5 First ResourceManager Node

If the first ResourceManager node fails or goes offline (such as in a restart of the server where the node is running), then the second ResourceManager automatically takes over the distribution of MapReduce tasks to specific nodes across the cluster.

If the second ResourceManager is already active when the first ResourceManager becomes inaccessible, then it continues as ResourceManager, but without a backup. With only one ResourceManager, the cluster is vulnerable because it has lost the redundancy needed for automatic failover.

These services are also disrupted:

-

Cloudera Manager: This tool provides central management for the entire CDH cluster. Without this tool, you can still monitor activities using the utilities described in "Using Hadoop Monitoring Utilities".

-

MySQL Database: Cloudera Manager, Oracle Data Integrator, Hive, and Oozie use MySQL Database. The data is replicated automatically, but you cannot access it when the master database server is down.

2.7.6 Second ResourceManager Node

If the second ResourceManager node fails, then the function of the ResourceManager either fails over to the first ResourceManager or continues there without a backup. However, the cluster has lost the redundancy needed for automatic failover if the first ResourceManager also fails.

These services are also disrupted:

-

Oracle Data Integrator Agent: This service supports Oracle Data Integrator, which is one of the Oracle Big Data Connectors. You cannot use Oracle Data Integrator when the ResourceManager node is down.

-

Hive: Hive provides a SQL-like interface to data that is stored in HDFS. Oracle Big Data SQL and most of the Oracle Big Data Connectors can access Hive tables, which are not available if this node fails.

-

Hue: This administrative tool is not available when the ResourceManager node is down.

-

Oozie: This workflow and coordination service runs on the ResourceManager node, and is unavailable when the node is down.

2.7.7 Noncritical CDH Nodes

The noncritical nodes are optional in that Oracle Big Data Appliance continues to operate with no loss of service if a failure occurs. The NameNode automatically replicates the lost data to always maintain three copies. MapReduce jobs execute on copies of the data stored elsewhere in the cluster. The only loss is in computational power, because there are fewer servers on which to distribute the work.

2.8 Managing a Hardware Failure

If a server starts failing, you must take steps to maintain the services of the cluster with as little interruption as possible. You can manage a failing server easily using the bdacli utility, as described in the following procedures. One of the management steps is called decommissioning. Decommissioning stops all roles for all services, thereby preventing data loss. Cloudera Manager requires that you decommission a CDH node before retiring it.

When a noncritical node fails, there is no loss of service. However, when a critical node fails in a CDH cluster, services with a single point of failure are unavailable, as described in "Effects of Hardware on Software Availability". You must decide between these alternatives:

-

Wait for repairs to be made, and endure the loss of service until they are complete.

-

Move the critical services to another node. This choice may require that some clients are reconfigured with the address of the new node. For example, if the first ResourceManager node (typically node03) fails, then users must redirect their browsers to the new node to access Cloudera Manager.

You must weigh the loss of services against the inconvenience of reconfiguring the clients.

2.8.1 About Oracle NoSQL Database Clusters

Oracle NoSQL Database clusters do not have critical nodes. The storage nodes are replicated, and users can choose from three administrative processes on different nodes. There is no loss of services.

If the node hosting Mammoth fails (the first node of the cluster), then follow the procedure for reinstalling it in "Prerequisites for Managing a Failing Node".

To repair or replace any failing NoSQL node, follow the procedure in "Managing a Failing Noncritical Node".

2.8.2 Prerequisites for Managing a Failing Node

Ensure that you do the following before managing a failing or failed server, whether it is configured as a CDH node or an Oracle NoSQL Database node:

-

Try restarting the services or rebooting the server.

-

Determine whether the failing node is critical or noncritical.

-

If the failing node is where Mammoth is installed:

-

For a CDH node, select a noncritical node in the same cluster as the failing node.

For a NoSQL node, repair or replace the failed server first, and use it for these steps.

-

Upload the Mammoth bundle to that node and unzip it.

-

Extract all files from BDAMammoth-version.run, using a command like the following:

    # ./BDAMammoth-ol6-4.0.0.run

Afterward, you must run all Mammoth operations from this node.

See Oracle Big Data Appliance Owner's Guide for information about the Mammoth utility.

-

Follow the appropriate procedure in this section for managing a failing node.

Mammoth is installed on the first node of the cluster, unless its services were migrated previously.

2.8.3 Managing a Failing CDH Critical Node

Only CDH clusters have critical nodes.

To manage a failing critical node:

-

Log in as root to the node where Mammoth is installed.

-

Migrate the services to a noncritical node. Replace node_name with the name of the failing node, such as bda1node02.

    bdacli admin_cluster migrate node_name

When the command finishes, node_name is decommissioned and its services are running on a previously noncritical node.

-

Announce the change to the user community, so that they can redirect their clients to the new critical node as required.

-

Repair or replace the failed server.

-

From the Mammoth node as root, reprovision the repaired or replaced server as a noncritical node. Use the same name as the migrated node for node_name, such as bda1node02:

    bdacli admin_cluster reprovision node_name

-

If the failed node supported services like HBase or Impala, which Mammoth installs but does not configure, then use Cloudera Manager to reconfigure them on the new node.

2.8.4 Managing a Failing Noncritical Node

Use the following procedure to replace a failing node in either a CDH or a NoSQL cluster.

To manage a failing noncritical node:

-

Log in as root to the node where Mammoth is installed (typically node01).

-

Decommission the failing node. Replace node_name with the name of the failing node, such as bda1node07.

    bdacli admin_cluster decommission node_name

-

Repair or replace the failed server.

-

From the Mammoth node as root, recommission the repaired or replaced server. Use the same name as the decommissioned node for node_name, such as bda1node07:

    bdacli admin_cluster recommission node_name

See Also:

Oracle Big Data Appliance Owner's Guide for the complete bdacli syntax
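The two procedures above differ only in which pair of bdacli subcommands brackets the hardware repair. As a hedged illustration, the following sketch builds the command strings for either case. The `recovery_commands` function is a hypothetical helper, not part of bdacli, and it only formats the commands shown in this section. It does not run them.

```python
def recovery_commands(node_name, critical):
    """Return (command before repair, command after repair) for a failing node.

    Critical CDH nodes are migrated and later reprovisioned; noncritical
    nodes are decommissioned and later recommissioned.
    """
    if critical:
        before, after = "migrate", "reprovision"
    else:
        before, after = "decommission", "recommission"
    return (
        f"bdacli admin_cluster {before} {node_name}",
        f"bdacli admin_cluster {after} {node_name}",
    )


# Example node names taken from the procedures above.
print(recovery_commands("bda1node02", critical=True))
print(recovery_commands("bda1node07", critical=False))
```

Both commands in each pair are run as root from the node where Mammoth is installed, with the server repair or replacement taking place between them.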

2.9 Stopping and Starting Oracle Big Data Appliance

This section describes how to shut down Oracle Big Data Appliance gracefully and restart it.

2.9.1 Prerequisites

You must have root access. Passwordless SSH must be set up on the cluster, so that you can use the dcli utility.

To ensure that passwordless SSH is set up:

-

Log in to the first node of the cluster as root.

-

Use a dcli command to verify it is working. This command should return the IP address and host name of every node in the cluster:

    # dcli -C hostname
    192.0.2.1: bda1node01.example.com
    192.0.2.2: bda1node02.example.com
    .
    .
    .

-

If you do not get these results, then set up dcli on the cluster:

    # setup-root-ssh -C

See Also:

Oracle Big Data Appliance Owner's Guide for details about these commands.
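On a large cluster it can be easier to check the dcli output programmatically than by eye. The sketch below assumes that the output has the "IP address: host name" shape shown in the verification step. The function names and the expected-host list are hypothetical, and the code parses captured text rather than invoking dcli itself.

```python
def parse_dcli_hostnames(output):
    """Map IP address to host name from lines like '192.0.2.1: bda1node01.example.com'."""
    result = {}
    for line in output.splitlines():
        ip, sep, host = line.partition(":")
        # Skip blank lines and anything without an 'ip: host' shape.
        if sep and ip.strip() and host.strip():
            result[ip.strip()] = host.strip()
    return result


def missing_nodes(output, expected_hosts):
    """Return the expected host names that did not appear in the dcli output."""
    seen = set(parse_dcli_hostnames(output).values())
    return sorted(set(expected_hosts) - seen)


sample = """192.0.2.1: bda1node01.example.com
192.0.2.2: bda1node02.example.com
"""
expected = [
    "bda1node01.example.com",
    "bda1node02.example.com",
    "bda1node03.example.com",
]
print(missing_nodes(sample, expected))
```

A non-empty result suggests that passwordless SSH is not working for the listed nodes, in which case the setup-root-ssh step applies.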

2.9.2 Stopping Oracle Big Data Appliance

Follow these procedures to shut down all Oracle Big Data Appliance software and hardware components.

Note:

The following services stop automatically when the system shuts down. You do not need to take any action:

-

Oracle Enterprise Manager agent

-

Auto Service Request agents

2.9.2.1 Stopping All Managed Services

Use Cloudera Manager to stop the services it manages, including flume, hbase, hdfs, hive, hue, mapreduce, oozie, and zookeeper.

2.9.2.2 Stopping Cloudera Manager Server

Follow this procedure to stop Cloudera Manager Server.

After stopping Cloudera Manager, you cannot access it using the web console.

2.9.2.4 Dismounting NFS Directories

All nodes share an NFS directory on node03, and additional directories may also exist. If a server with the NFS directory (/opt/exportdir) is unavailable, then the other servers hang when attempting to shut down. Thus, you must dismount the NFS directories first.

2.9.2.5 Stopping the Servers

The Linux shutdown -h command powers down individual servers. You can use the dcli -g command to stop multiple servers.

2.9.2.6 Stopping the InfiniBand and Cisco Switches

The network switches do not have power buttons. They shut down only when power is removed.

To stop the switches, turn off all breakers in the two PDUs, or turn off the corresponding breakers in the data center.

2.9.3 Starting Oracle Big Data Appliance

Follow these procedures to power up the hardware and start all services on Oracle Big Data Appliance.

2.9.3.1 Powering Up Oracle Big Data Appliance

- Switch on all 12 breakers on both PDUs.

- Allow 4 to 5 minutes for Oracle ILOM and the Linux operating system to start on the servers.

If the servers do not start automatically, then you can start them locally by pressing the power button on the front of the servers, or remotely by using Oracle ILOM. Oracle ILOM has several interfaces, including a command-line interface (CLI) and a web console. Use whichever interface you prefer.

For example, you can log in to the web interface as root and start the server from the Remote Power Control page. The URL for Oracle ILOM is the same as for the host, except that it typically has a -c or -ilom extension. This URL connects to Oracle ILOM for bda1node04:

http://bda1node04-ilom.example.com
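The naming rule above (host name plus a -c or -ilom extension) is easy to apply mechanically. The following sketch shows one way to derive the Oracle ILOM URL from a server's host name. The `ilom_url` function is a hypothetical helper, and the suffix actually in use at a site is an assumption that should be confirmed against the local network configuration.

```python
def ilom_url(host_fqdn, suffix="-ilom"):
    """Insert the ILOM suffix after the short host name and return an http URL."""
    short_name, dot, domain = host_fqdn.partition(".")
    return f"http://{short_name}{suffix}{dot}{domain}"


print(ilom_url("bda1node04.example.com"))
print(ilom_url("bda1node04.example.com", suffix="-c"))
```

With the default suffix, the first call produces the same URL as the example above.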

2.9.3.2 Starting the HDFS Software Services

Use Cloudera Manager to start all the HDFS services that it controls.

2.10 Managing Oracle Big Data SQL

Oracle Big Data SQL is registered with Cloudera Manager as an add-on service. You can use Cloudera Manager to start, stop, and restart the Oracle Big Data SQL service or individual role instances, the same way as a CDH service.

Cloudera Manager also monitors the health of the Oracle Big Data SQL service, reports service outages, and sends alerts if the service is not healthy.

2.10.1 Adding and Removing the Oracle Big Data SQL Service

Oracle Big Data SQL is an optional service on Oracle Big Data Appliance. It may be installed with the other client software during the initial software installation or an upgrade. Use Cloudera Manager to determine whether it is installed. A separate license is required; Oracle Big Data SQL is not included with the Oracle Big Data Appliance license.

You cannot use Cloudera Manager to add or remove the Oracle Big Data SQL service from a CDH cluster on Oracle Big Data Appliance. Instead, log in to the server where Mammoth is installed (usually the first node of the cluster) and use the following commands in the bdacli utility:

-

To enable Oracle Big Data SQL:

bdacli enable big_data_sql

-

To disable Oracle Big Data SQL:

bdacli disable big_data_sql

See Also:

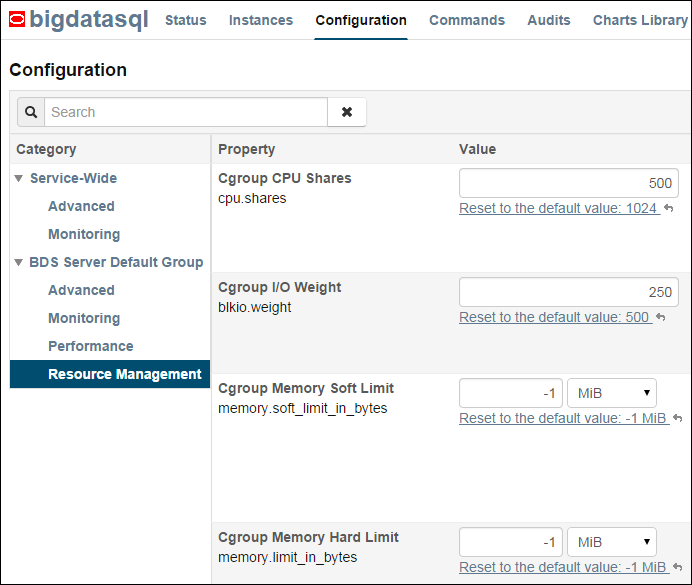

2.10.2 Allocating Resources to Oracle Big Data SQL

You can modify the property values in a Linux kernel Control Group (Cgroup) to reserve resources for Oracle Big Data SQL.

To modify the resource management configuration settings:

-

Log in as admin to Cloudera Manager.

-

On the Home page, click bigdatasql from the list of services.

-

On the bigdatasql page, click Configuration.

-

Under Category, expand BDS Server Default Group and click Resource Management.

-

Modify the values of the following properties as required:

-

Cgroup CPU Shares

-

Cgroup I/O Weight

-

Cgroup Memory Soft Limit

-

Cgroup Memory Hard Limit

See the Description column on the page for guidelines.

-

Click Save Changes.

-

From the Actions menu, click Restart.

Figure 2-10 shows the bigdatasql service configuration page.

Figure 2-10 Modifying the Cgroup Settings for Oracle Big Data SQL

2.11 Security on Oracle Big Data Appliance

You can take precautions to prevent unauthorized use of the software and data on Oracle Big Data Appliance.

2.11.1 About Predefined Users and Groups

Every open-source package installed on Oracle Big Data Appliance creates one or more users and groups. Most of these users do not have login privileges, shells, or home directories. They are used by daemons and are not intended as an interface for individual users. For example, Hadoop operates as the hdfs user, MapReduce operates as mapred, and Hive operates as hive.

You can use the oracle identity to run Hadoop and Hive jobs immediately after the Oracle Big Data Appliance software is installed. This user account has login privileges, a shell, and a home directory.

Oracle NoSQL Database and Oracle Data Integrator run as the oracle user. Its primary group is oinstall.

Note:

Do not delete, re-create, or modify the users that are created during installation, because they are required for the software to operate.

Table 2-9 identifies the operating system users and groups that are created automatically during installation of Oracle Big Data Appliance software for use by CDH components and other software packages.

Table 2-9 Operating System Users and Groups

| User Name | Group | Used By | Login Rights |
|---|---|---|---|
|  |  | Apache Flume parent and nodes | No |
|  |  | Apache HBase processes | No |
|  |  |  | No |
|  |  |  | No |
|  |  | Hue processes | No |
|  |  | ResourceManager, NodeManager, Hive Thrift daemon | Yes |
|  |  |  | Yes |
|  |  | Oozie server | No |
|  |  | Oracle NoSQL Database, Oracle Loader for Hadoop, Oracle Data Integrator, and the Oracle DBA | Yes |
|  |  | Puppet parent (puppet nodes run as root) | No |
|  |  | Apache Sqoop metastore | No |
|  |  | Auto Service Request | No |
|  |  | ZooKeeper processes | No |

2.11.2 About User Authentication

Oracle Big Data Appliance supports Kerberos security as a software installation option. See Supporting User Access to Oracle Big Data Appliance for details about setting up clients and users to access a Kerberos-protected cluster.

2.11.3 About Fine-Grained Authorization

The typical authorization model on Hadoop is at the HDFS file level, such that users either have access to all of the data in the file or none. In contrast, Apache Sentry integrates with the Hive and Impala SQL-query engines to provide fine-grained authorization to data and metadata stored in Hadoop.

Oracle Big Data Appliance automatically configures Sentry during software installation, beginning with Mammoth utility version 2.5.

See Also:

-

Cloudera Manager Help

-

Managing Clusters with Cloudera Manager at

2.11.4 About HDFS Transparent Encryption

HDFS Transparent Encryption protects Hadoop data that is at rest on disk. After HDFS Transparent Encryption is enabled for a cluster on Oracle Big Data Appliance, data writes and reads to encrypted zones (HDFS directories) on the disk are automatically encrypted and decrypted. This process is “transparent” because it is invisible to the application working with the data.

HDFS Transparent Encryption does not affect user access to Hadoop data, although it can have a minor impact on performance.

HDFS Transparent Encryption is an option that you can select during the initial installation of the software by the Mammoth utility. You can also enable or disable HDFS Transparent Encryption at any time by using the bdacli utility. Note that HDFS Transparent Encryption can be installed only on a Kerberos-secured cluster.

Oracle recommends that you set up the Navigator Key Trustee (the service that manages keys and certificates) on a separate server, external to the Oracle Big Data Appliance.

See the following MOS documents at My Oracle Support for instructions on installing and enabling HDFS Transparent Encryption.

| Title | MOS Doc ID |
|---|---|
| How to Setup Highly Available Active and Passive Key Trustee Servers on BDA V4.4 Using 5.5 Parcels | 2112644.1 |
| How to Enable/Disable HDFS Transparent Encryption on Oracle Big Data Appliance V4.4 with bdacli | 2111343.1 |
| How to Create Encryption Zones on HDFS on Oracle Big Data Appliance V4.4 | 2111829.1 |

For Doc ID 2112644.1, installing using parcels as described in the MOS document is recommended over package-based installation. See Cloudera’s comments on Parcels.

Note:

If either HDFS Transparent Encryption or Kerberos is disabled, data stored in the HDFS Transparent Encryption zones in the cluster will remain encrypted and therefore inaccessible. To restore access to the data, re-enable HDFS Transparent Encryption using the same key provider.

See Also:

Cloudera documentation about HDFS at-rest encryption at http://www.cloudera.com for more information about managing files in encrypted zones.

2.11.5 About HTTPS/Network Encryption

HTTPS/Network Encryption on Oracle Big Data Appliance has two components:

-

Web Interface Encryption

Configures HTTPS for the following web interfaces: Cloudera Manager, Oozie, and Hue. This encryption is enabled automatically in new Mammoth installations. For existing installations, it can be enabled using the bdacli utility. This feature does not require that Kerberos is enabled.

-

Encryption for Data in Transit and Services

There are two subcomponents to this feature. Both are options that can be enabled in the Configuration Utility at installation time or enabled/disabled using the bdacli utility at any time. Both require that Kerberos is enabled.

-

Encrypt Hadoop Services

This includes SSL encryption for HDFS, MapReduce, and YARN web interfaces, as well as encrypted shuffle for MapReduce and YARN. It also enables authentication for access to the web consoles for the MapReduce and YARN roles.

-

Encrypt HDFS Data Transport

This option will enable encryption of data transferred between DataNodes and clients, and among DataNodes.

HTTPS/Network Encryption is enabled and disabled on a per-cluster basis. The Configuration Utility, described in the Oracle Big Data Appliance Owner’s Guide, includes settings for enabling encryption for Hadoop Services and HDFS Data Transport when a cluster is created. The bdacli utility reference pages (also in the Oracle Big Data Appliance Owner’s Guide) provide HTTPS/Network Encryption command-line options.

See Also:

Supporting User Access to Oracle Big Data Appliance for an overview of how Kerberos is used to secure CDH clusters.

About HDFS Transparent Encryption for information about Oracle Big Data Appliance security for Hadoop data at-rest.

Cloudera documentation at http://www.cloudera.com for more information about HTTPS communication in Cloudera Manager and network-level encryption in CDH.

2.11.5.1 Configuring Web Browsers to use Kerberos Authentication

If web interface encryption is enabled, each web browser accessing an HDFS, MapReduce, or YARN-encrypted web interface must be configured to authenticate with Kerberos. Note that this is not necessary for the Cloudera Manager, Oozie, and Hue web interfaces, which do not require Kerberos.

The following are the steps to configure Mozilla Firefox (Footnote 3), Microsoft Internet Explorer (Footnote 4), and Google Chrome (Footnote 5) for Kerberos authentication.

To configure Mozilla Firefox:

-

Enter about:config in the Location Bar.

-

In the Search box on the about:config page, enter:

    network.negotiate-auth.trusted-uris

-

Under Preference Name, double-click network.negotiate-auth.trusted-uris.

-

In the Enter string value dialog, enter the hostname or the domain name of the web server that is protected by Kerberos. Separate multiple domains and hostnames with a comma.
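The value entered in the last Firefox step is a single comma-separated string. When many hosts are involved, it can help to generate that string rather than type it. The following is a small sketch under that assumption. The `trusted_uris_value` function and the host names are hypothetical.

```python
def trusted_uris_value(hosts):
    """Build the comma-separated string for network.negotiate-auth.trusted-uris."""
    cleaned = []
    for host in hosts:
        host = host.strip()
        # Drop empty entries and duplicates while preserving order.
        if host and host not in cleaned:
            cleaned.append(host)
    return ",".join(cleaned)


print(trusted_uris_value(["bda1node03.example.com", "example.com"]))
```

The resulting string can be pasted into the Enter string value dialog.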

To configure Microsoft Internet Explorer:

-

Configure the Local Intranet Domain:

-

Open Microsoft Internet Explorer and click the Settings "gear" icon in the top-right corner. Select Internet options.

-

Select the Security tab.

-

Select the Local intranet zone and click Sites.

-

Make sure that the first two options, Include all local (intranet) sites not listed in other zones and Include all sites that bypass the proxy server, are checked.

-

Click Advanced on the Local intranet dialog box and, one at a time, add the names of the Kerberos-protected domains to the list of websites.

-

Click Close.

-

Click OK to save your configuration changes, then click OK again to exit the Internet Options panel.

-

Configure Intranet Authentication for Microsoft Internet Explorer:

-

Click the Settings "gear" icon in the top-right corner. Select Internet Options.

-

Select the Security tab.

-

Select the Local Intranet zone and click the Custom level... button to open the Security Settings - Local Intranet Zone dialog box.

-

Scroll down to the User Authentication options and select Automatic logon only in Intranet zone.

-

Click OK to save your changes.

To configure Google Chrome:

If you are using Microsoft Windows, use the Control Panel to navigate to the Internet Options dialog box. The required configuration changes are the same as those described above for Microsoft Internet Explorer.

On Mac OS (Footnote 6) or on Linux, add the --auth-server-whitelist parameter to the google-chrome command. For example, to run Chrome from a Linux prompt, run the google-chrome command as follows:

    google-chrome --auth-server-whitelist="hostname/domain"

Note:

On Microsoft Windows, the Windows user must be a user in the Kerberos realm and must possess a valid ticket. If these requirements are not met, an HTTP 403 is returned to the browser when it attempts to access a Kerberos-secured web interface.

2.11.6 Port Numbers Used on Oracle Big Data Appliance

Table 2-10 identifies the port numbers that might be used in addition to those used by CDH.

To view the ports used on a particular server:

-

In Cloudera Manager, click the Hosts tab at the top of the page to display the Hosts page.

-

In the Name column, click a server link to see its detail page.

-

Scroll down to the Ports section.

See Also:

For the full list of CDH port numbers, go to the Cloudera website at

Table 2-10 Oracle Big Data Appliance Port Numbers

| Service | Port |
|---|---|
|  | 30920 |
| HBase master service (node01) | 60010 |
|  | 3306 |
|  | 20910 |
| Oracle NoSQL Database administration | 5001 |
|  | 5010 to 5020 |
| Oracle NoSQL Database registration | 5000 |
|  | 111 |
| Puppet master service | 8140 |
| Puppet node service | 8139 |
|  | 668 |
|  | 22 |
|  | 6481 |

2.11.7 About Puppet Security

The puppet node service (puppetd) runs continuously as root on all servers. It listens on port 8139 for "kick" requests, which trigger it to request updates from the puppet master. It does not receive updates on this port.

The puppet master service (puppetmasterd) runs continuously as the puppet user on the first server of the primary Oracle Big Data Appliance rack. It listens on port 8140 for requests to push updates to puppet nodes.

The puppet nodes generate and send certificates to the puppet master to register initially during installation of the software. For updates to the software, the puppet master signals ("kicks") the puppet nodes, which then request all configuration changes from the puppet master node that they are registered with.

The puppet master sends updates only to puppet nodes that have known, valid certificates. Puppet nodes only accept updates from the puppet master host name they initially registered with. Because Oracle Big Data Appliance uses an internal network for communication within the rack, the puppet master host name resolves using /etc/hosts to an internal, private IP address.
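When troubleshooting, it can be useful to confirm that the Puppet ports named above are reachable from another server on the internal network. The sketch below uses a plain TCP connection attempt. It is an illustration only: the `is_port_open` function and the host name in the usage example are assumptions, and a successful connection shows only that something is listening, not that it is Puppet.

```python
import socket

PUPPET_NODE_PORT = 8139    # puppet node service listens here for "kick" requests
PUPPET_MASTER_PORT = 8140  # puppet master service listens here


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False
```

For example, `is_port_open("bda1node01", PUPPET_MASTER_PORT)` could be run from any node that resolves the puppet master host name. Here bda1node01 is a placeholder for the first server of the primary rack.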

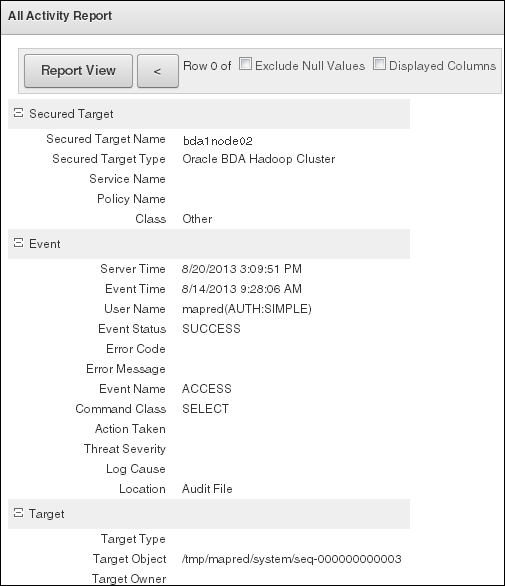

2.12 Auditing Oracle Big Data Appliance

You can use Oracle Audit Vault and Database Firewall to create and monitor the audit trails for HDFS and MapReduce on Oracle Big Data Appliance.

This section describes the Oracle Big Data Appliance plug-in:

2.12.1 About Oracle Audit Vault and Database Firewall

Oracle Audit Vault and Database Firewall secures databases and other critical components of IT infrastructure in these key ways:

-

Provides an integrated auditing platform for your enterprise.

-

Captures activity on Oracle Database, Oracle Big Data Appliance, operating systems, directories, file systems, and so forth.

-

Makes the auditing information available in a single reporting framework so that you can understand the activities across the enterprise. You do not need to monitor each system individually; you can view your computer infrastructure as a whole.

Audit Vault Server provides a web-based, graphical user interface for both administrators and auditors.

You can configure CDH/Hadoop clusters on Oracle Big Data Appliance as secured targets. The Audit Vault plug-in on Oracle Big Data Appliance collects audit and logging data from these services:

-

HDFS: Who makes changes to the file system.

-

Hive DDL: Who makes Hive database changes.

-

MapReduce: Who runs MapReduce jobs that correspond to file access.

-

Oozie workflows: Who runs workflow activities.

The Audit Vault plug-in is an installation option. The Mammoth utility automatically configures monitoring on Oracle Big Data Appliance as part of the software installation process.

See Also:

For more information about Oracle Audit Vault and Database Firewall:

2.12.2 Setting Up the Oracle Big Data Appliance Plug-in

The Mammoth utility on Oracle Big Data Appliance performs all the steps needed to set up the plug-in, using information that you provide.

To set up the Audit Vault plug-in for Oracle Big Data Appliance:

-

Ensure that Oracle Audit Vault and Database Firewall Server Release 12.1.1 is up and running on the same network as Oracle Big Data Appliance.

-

Complete the Audit Vault Plug-in section of Oracle Big Data Appliance Configuration Generation Utility.

-

Install the Oracle Big Data Appliance software using the Mammoth utility. An Oracle representative typically performs this step.

You can also add the plug-in at a later time using bdacli. See Oracle Big Data Appliance Owner's Guide.

When the software installation is complete, the Audit Vault plug-in is installed on Oracle Big Data Appliance, and Oracle Audit Vault and Database Firewall is collecting its audit information. You do not need to perform any other installation steps.

See Also:

Oracle Big Data Appliance Owner's Guide for using Oracle Big Data Appliance Configuration Generation Utility

2.12.3 Monitoring Oracle Big Data Appliance

After installing the plug-in, you can monitor Oracle Big Data Appliance the same as any other secured target. Audit Vault Server collects activity reports automatically.

The following procedure describes one type of monitoring activity.

To view an Oracle Big Data Appliance activity report:

-

Log in to Audit Vault Server as an auditor.

-

Click the Reports tab.

-

Under Built-in Reports, click Audit Reports.

-

To browse all activities, in the Activity Reports list, click the Browse report data icon for All Activity.

-

Add or remove the filters to list the events.

Event names include ACCESS, CREATE, DELETE, and OPEN.

-

Click the Single row view icon in the first column to see a detailed report.

Figure 2-11 shows the beginning of an activity report, which records access to a Hadoop sequence file.

Figure 2-11 Activity Report in Audit Vault Server

2.13 Collecting Diagnostic Information for Oracle Customer Support

If you need help from Oracle Support to troubleshoot CDH issues, then you should first collect diagnostic information using the bdadiag utility with the cm option.

To collect diagnostic information:

-

Log in to an Oracle Big Data Appliance server as root.

-

Run bdadiag with at least the cm option. You can include additional options on the command line as appropriate. See the Oracle Big Data Appliance Owner's Guide for a complete description of the bdadiag syntax.

    # bdadiag cm

The command output identifies the name and the location of the diagnostic file.

-

Go to My Oracle Support at http://support.oracle.com.

-

Open a Service Request (SR) if you have not already done so.

-

Upload the bz2 file into the SR. If the file is too large, then upload it to sftp.oracle.com, as described in the next procedure.

To upload the diagnostics to sftp.oracle.com:

-

Open an SFTP client and connect to sftp.oracle.com. Specify port 2021 and remote directory /support/incoming/target, where target is the folder name given to you by Oracle Support.

-

Log in with your Oracle Single Sign-on account and password.

-

Upload the diagnostic file to the new directory.

-

Update the SR with the full path and the file name.
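For a command-line client, the upload steps above can be expressed as a short plan. The following sketch assumes the OpenSSH sftp client, whose -P option sets the port. The `sftp_upload_plan` function, the account name, the target folder, and the file name are all hypothetical placeholders. The code only assembles the command and paths and does not connect to any server.

```python
import posixpath

SFTP_HOST = "sftp.oracle.com"
SFTP_PORT = 2021


def sftp_upload_plan(sso_user, target, diag_file):
    """Return (client command, sftp session commands, path to record in the SR)."""
    remote_dir = f"/support/incoming/{target}"
    command = ["sftp", "-P", str(SFTP_PORT), f"{sso_user}@{SFTP_HOST}"]
    session = [f"cd {remote_dir}", f"put {diag_file}"]
    sr_path = posixpath.join(remote_dir, posixpath.basename(diag_file))
    return command, session, sr_path


# All three arguments below are made-up examples.
command, session, sr_path = sftp_upload_plan(
    "user@example.com", "sr_folder", "/tmp/bdadiag_example.tar.bz2"
)
print(" ".join(command))
print(session)
print(sr_path)
```

The last value is the full path and file name to add to the SR in the final step.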

Footnote Legend

Footnote 3: Mozilla Firefox is a registered trademark of the Mozilla Foundation.

Footnote 4: Microsoft Internet Explorer is a registered trademark of Microsoft Corporation.

Footnote 5: Google Chrome is a registered trademark of Google Inc.

Footnote 6: Mac OS is a registered trademark of Apple, Inc.