Capacity Planning

Examining Results from the Baseline Applications

The following sections provide information about baseline applications:

- MedRec Benchmark Overview

- Changes to MedRec and Transactions Used for Benchmarking

- Overview of the Baseline Applications

- Configuration for MedRec Baseline Applications (Light and Heavy)

- Measured TPS for Light MedRec Application on UNIX

- Measured TPS for Heavy MedRec Application on UNIX

- Measured TPS for Light MedRec Application on Windows 2000

- Measured TPS for Heavy MedRec Application on Windows 2000

- Next Steps

MedRec Benchmark Overview

The MedRec sample application was used for both the light-weight and heavy-weight application tests. The two tests differ in the communication protocol used between client and server. The light-weight test uses HTTP 1.1. The heavy-weight test uses HTTPS (HTTP 1.1 over SSL), which encrypts the data exchanged between client and server.

Avitek Medical Records (or MedRec) is a WebLogic Server sample application suite that concisely demonstrates all aspects of the J2EE platform. MedRec is designed as an educational tool for all levels of J2EE developers; it showcases the use of each J2EE component, and illustrates best practice design patterns for component interaction and client development.

The MedRec application provides a framework for patients, doctors, and administrators to manage patient data using a variety of clients. Patient data includes:

- Patient profile information—A patient's name, address, social security number, and login information.

- Patient medical records—Details about a patient's visit with a physician such as the patient's vital signs and symptoms as well as the physician's diagnosis and prescriptions.

The MedRec application suite consists of two main J2EE applications. Each application supports one or more user scenarios for MedRec:

- medrecEar—Patients log in to the patient Web Application (patientWebApp) to edit their profile information, or request that their profile be added to the system. Patients can also view prior medical records of visits with their physician. Administrators use the administration Web Application (adminWebApp) to approve or deny new patient profile requests. medrecEar also provides all of the controller and business logic used by the MedRec application suite, as well as the Web Service used by different clients.

- physicianEar—Physicians and nurses log in to the physician Web Application (physicianWebApp) to search and access patient profiles, create and review patient medical records, and prescribe medicine to patients. The physician application is designed to communicate using the Web Service provided in medrecEar.

The medrecEar application is deployed to a single WebLogic Server instance called MedRecServer. The physicianEar application is deployed to a separate server, PhysicianServer, which communicates with the controller components of medrecEar by using Web services.

Changes to MedRec and Transactions Used for Benchmarking

For capacity planning benchmarks, the WebLogic Server 8.1 MedRec application was optimized. The patient registration process was made synchronous, and two-phase commit was removed. The client machine running the LoadRunner benchmarking software generates the load using the following sequence of transactions:

Each action in the sequence above is treated as a transaction for computing the throughput. For each client, a unique patient ID is generated for every iteration.
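The transaction accounting described above can be sketched as a simple closed-loop client. This is a hypothetical illustration, not LoadRunner's scripting API. The names `patient_id`, `run_client`, and `throughput_tps` are invented, and the `patient-<client>-<iteration>` ID format is an assumption. Each action is a stand-in callable for one request, such as a search, select, insert, or update.

```python
from typing import Callable, Sequence

# Hypothetical stand-in for one MedRec request; it receives the patient ID
# for the current iteration. Not part of LoadRunner or MedRec.
Action = Callable[[str], None]


def patient_id(client_id: int, iteration: int) -> str:
    """Assumed ID scheme: unique for every (client, iteration) pair."""
    return f"patient-{client_id}-{iteration}"


def run_client(client_id: int, iterations: int, actions: Sequence[Action]) -> int:
    """Run the action sequence `iterations` times and return the transaction count."""
    completed = 0
    for iteration in range(iterations):
        pid = patient_id(client_id, iteration)  # fresh patient ID per iteration
        for action in actions:
            action(pid)     # each action in the sequence counts as one transaction
            completed += 1  # no think time: the next action starts immediately
    return completed


def throughput_tps(transactions: int, elapsed_seconds: float) -> float:
    """Throughput in transactions per second over a measurement window."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return transactions / elapsed_seconds
```

As a hypothetical example, a client that runs a four-action sequence for 10 iterations completes 40 transactions. If those transactions take 8 seconds, `throughput_tps(40, 8.0)` returns 5.0 TPS.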

Overview of the Baseline Applications

BEA produced baseline capacity planning results as a starting point. These baseline numbers were generated using two applications:

- Light application software—MedRec application consisting of search, select, insert, and update transactions using the HTTP protocol.

- Heavy application software—MedRec application consisting of search, select, insert, and update transactions using the HTTPS (secure) protocol.

You can use the metrics provided for each of these applications to set expectations for WebLogic Server throughput, response time, number of concurrent users, and overall performance on your choice of hardware and configuration.

All measurements were captured with no think time added to the transaction mix. Each client issued its next transaction as soon as the previous one completed.
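With no think time, each client issues its next transaction as soon as the previous one returns. Under that closed-loop model, the standard interactive response time law, X = N / (R + Z), relates throughput X to the number of clients N, the average response time R, and the think time Z. With Z = 0 the law reduces to X = N / R. The sketch below applies the law in both directions. It assumes steady-state measurements, and the example values are illustrative only, not taken from this chapter's tables.

```python
def closed_loop_tps(clients: int, avg_response_s: float, think_time_s: float = 0.0) -> float:
    """Interactive response time law: X = N / (R + Z)."""
    cycle_s = avg_response_s + think_time_s  # time for one client to finish one cycle
    if clients < 0 or cycle_s <= 0:
        raise ValueError("need clients >= 0 and a positive cycle time")
    return clients / cycle_s


def implied_response_s(clients: int, tps: float, think_time_s: float = 0.0) -> float:
    """Rearranged law: R = N / X - Z (average response time implied by a measured TPS)."""
    if tps <= 0:
        raise ValueError("tps must be positive")
    return clients / tps - think_time_s


# Illustrative values only: 50 clients, 0.25 s average response time.
print(closed_loop_tps(50, 0.25))       # 200.0 TPS with zero think time
print(closed_loop_tps(50, 0.25, 1.0))  # 40.0 TPS if a 1 s think time is added
print(implied_response_s(50, 200.0))   # 0.25
```

If response time is held constant, the second call shows why zero-think-time results overstate the transaction rate that the same number of real users would generate.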

Note: For a capacity planning profile using the WebAuction application on standard hardware, see Chapter 15, "Analysis of Capacity Planning," in J2EE Applications and BEA WebLogic Server, Prentice Hall, 2001, ISBN 0-13-091111-9 at http://www.phptr.com.

Configuration for MedRec Baseline Applications (Light and Heavy)

For all measurements, the client applications and the Oracle database were kept on a 4 x 400 MHz Solaris system. A WebLogic Server instance was started on each processor/configuration combination listed. Baseline numbers from the capacity measurement runs for each application are listed in tabular form after the description of that application.

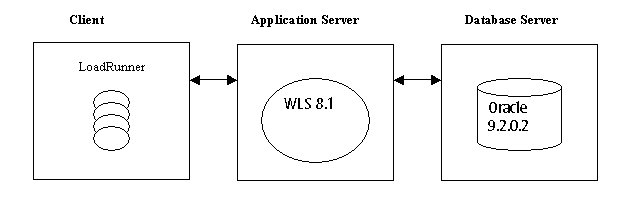

The baseline numbers were collected using a three-tier configuration. This configuration separates the client applications (running the LoadRunner benchmarking software), the WebLogic Server instance hosting the MedRec baseline application, and the Oracle database server. The database in the systems under study is an Oracle 8.1.7 database. All measurements were taken on a 1 Gbps network.

Figure 2-1 Three-Tier Configuration

WebLogic Server Configurations

The baseline numbers were collected using the following product versions and settings:

- BEA WebLogic Server 8.1 GA product.

- Sun JDK141_02 (JDK 1.4.1_02) on the Solaris platform, which reports the following version information:

  Java(TM) 2 Runtime Environment, Standard Edition (build 1.4.1_02-ea-b01)
  Java HotSpot(TM) Client VM (build 1.4.1_02-ea-b01, mixed mode)

- BEA JRockit on the Windows platform, which reports the following version information:

  Java(TM) 2 Runtime Environment, Standard Edition (build 1.4.1_02)
  BEA WebLogic JRockit(R) Virtual Machine (build 8.1-1.4.1_02-win32-CROSIS-20030317-1550, Native Threads, Generational Concurrent Garbage Collector)

- Default WebLogic Server configuration, except for the following:

  - JVM configuration—Initial and maximum heap size set to 1 GB.

  - Thread count—Set to 50, the optimal value found.

  - Oracle 9.2.0.2 Thin driver used for JDBC.

  - JDBC connection pool size set to 50 (initial and maximum), the optimal value found.

  - Cluster configuration—One Managed Server on each node, with the Administration Server running on the first node.
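The tuned settings listed above can be captured in a small configuration object for reuse in test scripts. The `ServerTuning` class and its field names are hypothetical and do not correspond to WebLogic Server configuration attributes. The `-Xms` and `-Xmx` options are the standard heap-size options accepted by both the Sun JVM and JRockit. The consistency check encodes a common rule of thumb that this study does not state: the JDBC pool should hold at least as many connections as there are execute threads, so that no busy thread waits for a connection.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ServerTuning:
    """Hypothetical record of the non-default settings used in the baseline runs."""
    heap_mb: int = 1024      # initial and maximum heap: 1 GB
    thread_count: int = 50   # execute threads
    pool_initial: int = 50   # JDBC connection pool, initial capacity
    pool_max: int = 50       # JDBC connection pool, maximum capacity

    def jvm_args(self) -> List[str]:
        # Equal -Xms and -Xmx: the heap is fully sized at startup and never resized.
        return [f"-Xms{self.heap_mb}m", f"-Xmx{self.heap_mb}m"]

    def problems(self) -> List[str]:
        """Return warnings; an empty list means the settings are consistent."""
        issues = []
        if self.pool_initial > self.pool_max:
            issues.append("initial pool size exceeds maximum pool size")
        if self.pool_max < self.thread_count:
            issues.append("fewer JDBC connections than execute threads")
        return issues
```

For the baseline values, `ServerTuning().jvm_args()` returns `['-Xms1024m', '-Xmx1024m']` and `ServerTuning().problems()` returns an empty list.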

Measured Configurations

The following configurations were measured:

- WebLogic Server 8.1, Sun JDK141_02: 1-, 2-, 4-, and 8-processor configurations with 750 MHz processors, a 1 GB memory setting, and one WebLogic Server instance with BEA's best tuning.

- WebLogic Server 8.1, Sun JDK141_02: 1-, 2-, 3-, and 4-node configurations with 400 MHz processors, a 1 GB memory setting, and one WebLogic Server instance per node with BEA's best tuning. The LoadRunner clients performed the load balancing.

- WebLogic Server 8.1, JRockit version 141_02: 1-, 2-, 3-, and 4-node configurations with 700 MHz processors, a 1 GB memory setting, and one WebLogic Server instance per node with BEA's best tuning. The LoadRunner clients performed the load balancing.
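In the multi-node configurations, the LoadRunner clients spread work across the WebLogic Server instances, rather than a proxy or hardware load balancer. The exact policy is not described here. The following is therefore only a sketch of one plausible approach: round-robin assignment of virtual clients to nodes. The function names and node addresses are hypothetical.

```python
import itertools
from collections import Counter
from typing import Dict, List


def assign_clients(num_clients: int, nodes: List[str]) -> Dict[int, str]:
    """Pin each virtual client to a node in round-robin order."""
    if not nodes:
        raise ValueError("at least one node is required")
    picker = itertools.cycle(nodes)  # node1, node2, ..., nodeN, node1, ...
    return {client: next(picker) for client in range(num_clients)}


def clients_per_node(assignment: Dict[int, str]) -> Counter:
    """Count how many clients each node receives."""
    return Counter(assignment.values())


# Hypothetical node addresses for a 3-node run.
nodes = ["http://node1:7001", "http://node2:7001", "http://node3:7001"]
# With 10 clients, node1 receives 4 clients and node2 and node3 receive 3 each.
print(clients_per_node(assign_clients(10, nodes)))
```

Pinning each client to a single node keeps that client's HTTP session on one server. Rotating nodes on every request would generally require session replication across the cluster.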

Database Configurations

Oracle 9.2.0.2.0 ran on an eight-way 750 MHz Sun E6800. To improve performance, the Oracle logs (8 GB) were placed on one RAID array of four striped disks. All other database files were placed on a second RAID array of four striped disks.

Database configurations were as follows:

- Host—Solaris 9

- CPU—8 x 750 MHz UltraSPARC III (Sun E6800)

- Memory—8 GB

- Disk—2 x 18 GB + 4 x 18 GB RAID array

- Software—Oracle9i Enterprise Edition Release 9.2.0.2.0

Client Configurations

Client configurations were as follows:

- Host—Windows 2000 Advanced Server

- CPU—4 x 700 MHz PowerEdge 6400

- Memory—4 GB

- Disk—2 x 18 GB

- Software—LoadRunner 7.02

Network Configuration

A 1-gigabit (1 Gbps) network was used.

LoadRunner Configurations

LoadRunner 7.02 generated the client load from a 4 x 700 MHz Windows 2000 machine with 4 GB of memory. No think time was used in the tests. HTTP/HTTPS version 1.1 was used.

About Baseline Numbers

The baseline numbers produced by the benchmarks used in this study should not be used to compare WebLogic Server with other application servers or hardware running similar benchmarks. The benchmark methodology and tuning used in this study are unique.

A number of benchmarks show how a well-designed application can perform on WebLogic Server. These benchmarks are available from BEA Systems. For more information, contact your BEA Systems sales representative.

Measured TPS for Light MedRec Application on UNIX

The measurements for the light MedRec application on UNIX were obtained using Solaris hardware and the HTTP protocol. In Table 2-1, the first column lists the number of clients. Each remaining column lists the transactions per second measured for one hardware configuration. For this application on UNIX, each configuration is designated light medrec UNIX n (lmUn), where n is the configuration number.
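The configuration designations follow a fixed pattern. Each code has a workload prefix (lm for light MedRec, hm for heavy MedRec), a platform letter (U for UNIX, W for Windows 2000), and the configuration number n. The following is a small hypothetical helper that builds and parses these codes, for example when loading the tables into a script. The names `designation` and `parse_designation` are invented for illustration.

```python
import re
from typing import Tuple

WORKLOADS = {"light": "lm", "heavy": "hm"}
PLATFORMS = {"UNIX": "U", "Windows 2000": "W"}
_PATTERN = re.compile(r"^(lm|hm)([UW])(\d+)$")


def designation(workload: str, platform: str, n: int) -> str:
    """Build a code such as lmU1 from its parts."""
    return f"{WORKLOADS[workload]}{PLATFORMS[platform]}{n}"


def parse_designation(code: str) -> Tuple[str, str, int]:
    """Split a code such as hmW3 back into (workload, platform, n)."""
    match = _PATTERN.match(code)
    if match is None:
        raise ValueError(f"not a MedRec configuration code: {code!r}")
    prefix, letter, number = match.groups()
    # Reverse lookups: map the prefix and letter back to their descriptive names.
    workload = next(k for k, v in WORKLOADS.items() if v == prefix)
    platform = next(k for k, v in PLATFORMS.items() if v == letter)
    return workload, platform, int(number)
```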

(TPS = Transactions Per Second)

Table 2-1 Number of Clients x TPS for Light MedRec Application on UNIX

Table 2-2 summarizes the TPS achieved for each processor type/configuration combination measured. It also lists the number of concurrent clients needed to achieve that TPS result.
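Deriving a summary like Table 2-2 from the per-client-count data in Table 2-1 is mechanical. The sketch below assumes that the summary reports, for each configuration, the highest TPS observed and the smallest client count that reached it. That selection rule is an assumption, not a documented BEA procedure. The table is modeled as a dictionary that maps each client count to per-configuration TPS values, and the `summarize_peak` name is hypothetical.

```python
from typing import Dict, Tuple

# clients -> {configuration code -> measured TPS}; a configuration may be
# missing from some rows if it was not measured at that client count.
Table = Dict[int, Dict[str, float]]


def summarize_peak(table: Table) -> Dict[str, Tuple[float, int]]:
    """Return {configuration: (peak TPS, clients at peak)}."""
    best: Dict[str, Tuple[float, int]] = {}
    for clients in sorted(table):  # ascending client counts
        for config, tps in table[clients].items():
            # Strict '>' keeps the smallest client count when TPS values tie.
            if config not in best or tps > best[config][0]:
                best[config] = (tps, clients)
    return best
```

The same function applies unchanged to the Windows 2000 data in Tables 2-4 and 2-5.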

Table 2-2 Measured TPS for Light MedRec Application on UNIX

Measured TPS for Heavy MedRec Application on UNIX

The measurements for the heavy MedRec application on UNIX were obtained using Solaris hardware and the HTTPS protocol. In Table 2-3, the first column lists the number of clients. Each remaining column lists the transactions per second measured for one hardware configuration. For this application on UNIX, each configuration is designated heavy medrec UNIX n (hmUn), where n is the configuration number.

(TPS = Transactions Per Second)

Table 2-3 Number of Clients x TPS for Heavy MedRec Application on UNIX

Measured TPS for Light MedRec Application on Windows 2000

The measurements for the light MedRec application on Windows 2000 were obtained using the HTTP protocol. In Table 2-4, the first column lists the number of concurrently running clients. Each remaining column lists the transactions per second measured for one hardware configuration. For this application on Windows 2000, each configuration is designated light medrec Windows n (lmWn), where n is the configuration number.

Table 2-4 Number of Clients x TPS for Light MedRec Application on Windows 2000

Table 2-5 summarizes the measured TPS achieved for each processor type/configuration combination. It also lists the number of concurrent clients needed to achieve that TPS result.

Table 2-5 Measured TPS for Light MedRec on Windows 2000

Measured TPS for Heavy MedRec Application on Windows 2000

The following are measurements for the heavy MedRec application on Windows 2000. All heavy MedRec tests, on every operating system, use the HTTPS (secure) protocol. The JVM used for the heavy MedRec application is the Sun Microsystems JDK.

In Table 2-6, the first column lists the number of clients. Each remaining column lists the transactions per second measured for one hardware configuration. Configurations are designated heavy medrec U n or heavy medrec W n (hmUn or hmWn), where n is the configuration number.

Table 2-6 Number of Clients x TPS for Heavy MedRec Application on Windows 2000

Next Steps

After examining the characteristics and baseline results of the sample applications, compare your application to one or more of the baselines. Then proceed to Determining Hardware Capacity Requirements. These steps can help you generate capacity planning requirements for your application.