16 Near Cache

The objective of a Near Cache is to provide the best of both worlds between the extreme performance of the Replicated Cache Service and the extreme scalability of the Partitioned Cache Service by providing fast read access to Most Recently Used (MRU) and Most Frequently Used (MFU) data. To achieve this, the Near Cache is an implementation that wraps two caches: a "front cache" and a "back cache" that automatically and transparently communicate with each other by using a read-through/write-through approach.

The "front cache" provides local cache access. It is assumed to be inexpensive, in that it is fast to access, and it is limited in size. The "back cache" can be a centralized or multi-tiered cache that can load on demand when the local cache misses. The "back cache" is assumed to be complete and correct: it has much higher capacity, but it is more expensive to access. The use of a Near Cache is not confined to Coherence*Extend; it also works with TCMP.

This design allows Near Caches to have configurable levels of cache coherency, from the most basic expiry-based caches and invalidation-based caches, up to advanced data-versioning caches that can provide guaranteed coherency. The result is a tunable balance between the preservation of local memory resources and the performance benefits of truly local caches.

The typical deployment uses a Local Cache for the "front cache". A Local Cache is a reasonable choice because it is thread safe, highly concurrent, size-limited and/or auto-expiring and stores the data in object form. For the "back cache", a remote, partitioned cache is used.
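The front/back arrangement can be illustrated with a deliberately simplified, single-process Java sketch. It is not Coherence's Near Cache implementation: the class `TwoTierCache` is hypothetical, a size-limited `LinkedHashMap` in access (LRU) order stands in for the Local Cache, and a plain `HashMap` stands in for the partitioned back cache. Only the read-through/write-through flow is modeled; there is no expiry, invalidation, or networking.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical model of a two-tier cache; NOT the Coherence Near Cache API.
class TwoTierCache<K, V> {
    // Front tier: small and fast; stands in for a size-limited Local Cache.
    private final Map<K, V> front;
    // Back tier: large and authoritative; stands in for the partitioned cache.
    private final Map<K, V> back = new HashMap<>();

    TwoTierCache(int frontCapacity) {
        // accessOrder = true keeps entries in least-recently-used order, so
        // removeEldestEntry evicts the LRU entry once the front is over capacity.
        this.front = new LinkedHashMap<K, V>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                return size() > frontCapacity;
            }
        };
    }

    // Write-through: update the back tier, then keep a local copy.
    synchronized void put(K key, V value) {
        back.put(key, value);
        front.put(key, value);
    }

    // Read-through: a front hit is served locally; a miss loads from the back
    // tier, stores the value in the front, and then returns it.
    synchronized V get(K key) {
        V value = front.get(key);
        if (value == null) {
            value = back.get(key);
            if (value != null) {
                front.put(key, value);
            }
        }
        return value;
    }

    // containsKey does not count as an access, so it leaves the LRU order alone.
    synchronized boolean isInFront(K key) {
        return front.containsKey(key);
    }

    public static void main(String[] args) {
        TwoTierCache<String, String> cache = new TwoTierCache<>(2);
        cache.put("A", "a");
        cache.put("B", "b");
        cache.put("D", "d");                      // front is full, so "A" is evicted locally
        System.out.println(cache.isInFront("A")); // false
        System.out.println(cache.get("A"));       // a (read through from the back tier)
        System.out.println(cache.isInFront("A")); // true
    }
}
```

Evicting "A" from the small front tier loses nothing, because the back tier remains complete; the next `get()` simply pays the cost of a back-tier read.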

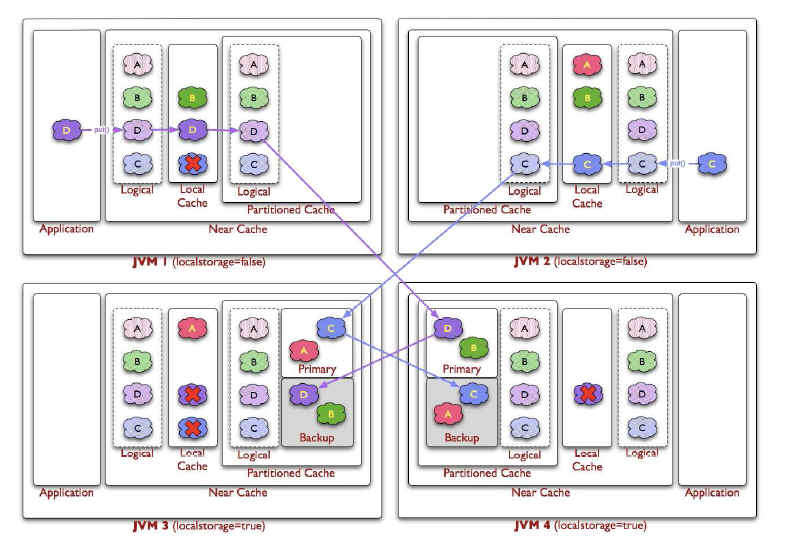

The following figure illustrates the data flow in a Near Cache. If the client writes an object D into the grid, the object is placed in the local cache inside the local JVM and in the partitioned cache which is backing it (including a backup copy). If the client requests the object, it can be obtained from the local, or "front cache", in object form with no latency.

Figure 16-1 Put Operations in a Near Cache Environment


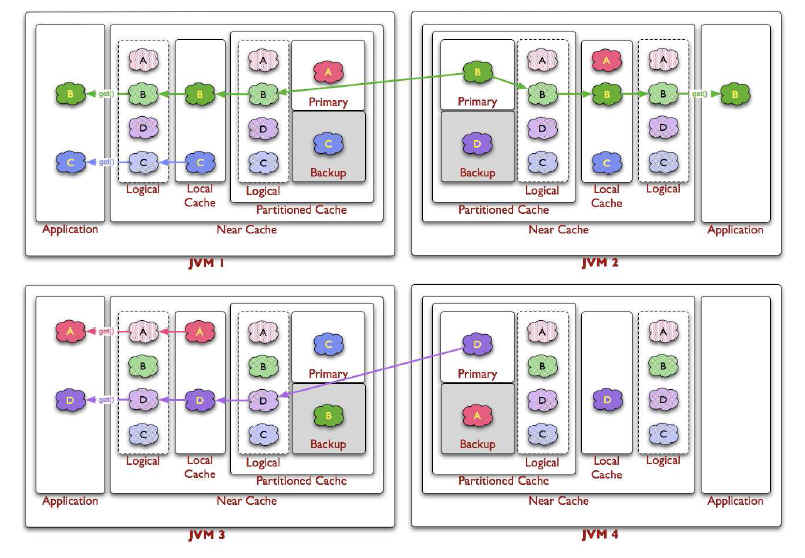

If the client requests an object that has been expired or invalidated from the "front cache", then Coherence will automatically retrieve the object from the partitioned cache. The updated object will be written to the "front cache" and then delivered to the client.

Figure 16-2 Get Operations in a Near Cache Environment

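The get path of Figure 16-2 can be sketched in the same spirit. The following hypothetical Java class, `ExpiringNearCache`, is not Coherence code; its injected clock and fixed `ttlMillis` are assumptions made so the example is deterministic. It treats an expired front entry as a miss, reads the value through from the back map, stores it in the front, and only then returns it. No invalidation events are modeled, so a change in the back tier becomes visible only after the front entry expires.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongSupplier;

// Hypothetical model of the get path in Figure 16-2 (not Coherence code).
// Front entries carry an expiry time; an expired entry counts as a miss.
class ExpiringNearCache<K, V> {
    private static final class Timed<T> {
        final T value;
        final long expiresAt;

        Timed(T value, long expiresAt) {
            this.value = value;
            this.expiresAt = expiresAt;
        }
    }

    private final Map<K, Timed<V>> front = new HashMap<>();
    private final Map<K, V> back;     // stands in for the partitioned back cache
    private final long ttlMillis;     // assumed front-cache expiry setting
    private final LongSupplier clock; // injected so the example is deterministic
    int backReads = 0;                // how often get() had to go to the back tier

    ExpiringNearCache(Map<K, V> back, long ttlMillis, LongSupplier clock) {
        this.back = back;
        this.ttlMillis = ttlMillis;
        this.clock = clock;
    }

    synchronized V get(K key) {
        long now = clock.getAsLong();
        Timed<V> cached = front.get(key);
        if (cached != null && now < cached.expiresAt) {
            return cached.value;                                 // fresh local hit
        }
        backReads++;
        V value = back.get(key);                                 // expired or missing: read through
        if (value != null) {
            front.put(key, new Timed<>(value, now + ttlMillis)); // write to the front first...
        } else {
            front.remove(key);
        }
        return value;                                            // ...then deliver to the caller
    }

    public static void main(String[] args) {
        Map<String, String> back = new HashMap<>();
        back.put("D", "v1");
        long[] now = {0};
        ExpiringNearCache<String, String> cache = new ExpiringNearCache<>(back, 100, () -> now[0]);

        System.out.println(cache.get("D"));  // v1 (miss, loaded from the back tier)
        back.put("D", "v2");                 // back tier changes; nothing notifies the front
        now[0] = 50;
        System.out.println(cache.get("D"));  // v1 (front entry still valid, so stale)
        now[0] = 100;
        System.out.println(cache.get("D"));  // v2 (front entry expired, read through)
        System.out.println(cache.backReads); // 2
    }
}
```

The run shows the trade-off of relying on expiry alone: until the front entry expires, a caller can see the old value.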

16.1 Near Cache Invalidation Strategies

An invalidation strategy keeps the "front cache" of the Near Cache synchronized with the "back cache". The Near Cache can be configured to listen to certain events in the back cache and automatically update or invalidate entries in the front cache. Depending on the interface that the back cache implements, the Near Cache provides four different strategies for invalidating front cache entries that have been changed by other processes in the back cache.

Table 16-1 describes the invalidation strategies. You can find more information on the Listen* invalidation strategies and the read-through/write-through approach in Chapter 9, "Read-Through, Write-Through, Write-Behind Caching and Refresh-Ahead".

Table 16-1 Near Cache Invalidation Strategies

| Strategy Name | Description |
|---|---|
| None | This strategy instructs the cache not to listen for invalidation events at all. This is the best choice for raw performance and scalability when business requirements permit the use of data that might not be absolutely current. Freshness of data can be bounded by using a sufficiently brief eviction policy for the front cache. |
| Present | This strategy instructs the Near Cache to listen to the back cache events related only to the items currently present in the front cache. This strategy works best when each instance of a front cache contains a distinct subset of data relative to the other front cache instances (for example, sticky data access patterns). |
| All | This strategy instructs the Near Cache to listen to all back cache events. This strategy is optimal for read-heavy tiered access patterns where there is significant overlap between the different instances of front caches. |
| Auto | This strategy instructs the Near Cache to switch automatically between the Present and All strategies based on the cache statistics. |
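To make the difference between None, Present, and All concrete, here is a single-process Java sketch. It is not how Coherence implements these strategies: `SketchNearCache`, its nested `Strategy` enum, and `EventingBackCache` are hypothetical, events are delivered synchronously, and Auto is omitted. Each near cache reads key "a"; another writer then updates "a" and "b" in the back cache.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.Consumer;

// Hypothetical near cache (not Coherence code) whose front is kept in step
// with the back cache according to one of the strategies in Table 16-1.
class SketchNearCache {
    enum Strategy { NONE, PRESENT, ALL }   // Auto is left out of this sketch

    private final Map<String, String> front = new HashMap<>();
    private final EventingBackCache back;
    private final Strategy strategy;
    private final Consumer<String> invalidator; // one instance, so re-registering is a no-op
    int eventsReceived = 0;

    SketchNearCache(EventingBackCache back, Strategy strategy) {
        this.back = back;
        this.strategy = strategy;
        this.invalidator = this::invalidate;
        if (strategy == Strategy.ALL) {
            back.addListener(invalidator);     // All: hear about every key
        }
    }

    // Invalidation drops the local copy so that the next get() reads through.
    private void invalidate(String key) {
        eventsReceived++;
        front.remove(key);
    }

    String get(String key) {
        String value = front.get(key);
        if (value == null) {
            value = back.get(key);
            if (value != null) {
                front.put(key, value);
                if (strategy == Strategy.PRESENT) {
                    back.addKeyListener(key, invalidator); // Present: only keys held locally
                }
            }
        }
        return value;
    }

    public static void main(String[] args) {
        EventingBackCache back = new EventingBackCache();
        back.put("a", "a1");
        back.put("b", "b1");
        SketchNearCache none = new SketchNearCache(back, Strategy.NONE);
        SketchNearCache present = new SketchNearCache(back, Strategy.PRESENT);
        SketchNearCache all = new SketchNearCache(back, Strategy.ALL);
        for (SketchNearCache c : List.of(none, present, all)) {
            c.get("a");                       // every front now holds "a"
        }

        back.put("a", "a2");                  // another process updates the back cache
        back.put("b", "b2");

        System.out.println(none.get("a"));    // a1: None never hears about the update
        System.out.println(present.get("a")); // a2
        System.out.println(all.get("a"));     // a2
        System.out.println(present.eventsReceived + " " + all.eventsReceived); // 1 2
    }
}

// Hypothetical stand-in for the back cache: stores data and publishes "key changed" events.
class EventingBackCache {
    private final Map<String, String> data = new HashMap<>();
    private final List<Consumer<String>> allKeyListeners = new ArrayList<>();
    private final Map<String, Set<Consumer<String>>> keyListeners = new HashMap<>();

    String get(String key) { return data.get(key); }

    void put(String key, String value) {
        data.put(key, value);
        for (Consumer<String> l : allKeyListeners) l.accept(key);
        for (Consumer<String> l : keyListeners.getOrDefault(key, Set.of())) l.accept(key);
    }

    void addListener(Consumer<String> l) { allKeyListeners.add(l); }

    void addKeyListener(String key, Consumer<String> l) {
        keyListeners.computeIfAbsent(key, k -> new HashSet<>()).add(l);
    }
}
```

Present receives only the event for "a", the one key it holds, while All also receives the event for "b". That is why Present suits front caches holding distinct subsets, while All pays for events on keys it may not hold. A fuller implementation would also deregister a per-key listener once its entry leaves the front cache.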

16.2 Configuring the Near Cache

A Near Cache is configured by using the <near-scheme> element in the coherence-cache-config file. This element has two required sub-elements: <front-scheme> for configuring a local (front-tier) cache and <back-scheme> for defining a remote (back-tier) cache. While a local cache (<local-scheme>) is a typical choice for the front tier, you can also use caches that are not based on the JVM heap (<external-scheme> or <paged-external-scheme>) or schemes based on Java objects (<class-scheme>).

The remote or back-tier cache is described by the <back-scheme> element. A back-tier cache can be either a distributed cache (<distributed-scheme>) or a remote cache (<remote-cache-scheme>). The <remote-cache-scheme> element enables you to use a clustered cache from outside the current cluster.

Optional sub-elements of <near-scheme> include <invalidation-strategy>, which specifies how the front-tier and back-tier objects are kept synchronized, and <listener>, which specifies a listener that is notified of events occurring on the cache.

For an example configuration, see "Sample Near Cache Configuration". The elements in the file are described in the <near-scheme> topic.

16.3 Obtaining a Near Cache Reference

Coherence provides methods in the com.tangosol.net.CacheFactory class to obtain a reference to a configured Near Cache by name. For example:

Example 16-1 Obtaining a Near Cache Reference

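```java
import com.tangosol.net.CacheFactory;
import com.tangosol.net.NamedCache;

// The cache name is resolved through <caching-scheme-mapping> to the configured near scheme.
NamedCache cache = CacheFactory.getCache("example-near-cache");
```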

The current release of Coherence also enables you to configure a Near Cache for Java, C++, or for .NET clients.

16.4 Cleaning Up Resources Associated with a Near Cache

Instances of all NamedCache implementations, including NearCache, should be explicitly released by calling the NamedCache.release() method when they are no longer needed. This frees any resources they might hold.

If the particular NamedCache is used for the duration of the application, then the resources will be cleaned up when the application is shut down or otherwise stops. However, if it is only used for a period, the application should call its release() method when finished using it.
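The release discipline can be sketched in plain Java. Everything here is hypothetical: `ReleasableNearCache` and `BackCacheStub` are invented names, and for illustration the sketch assumes that the resource a near cache holds is an invalidation listener registered on the back cache. The point is the try/finally shape: a cache used only for a period is released as soon as the application is finished with it, even if an exception is thrown. With a real cache, the `finally` block would call `NamedCache.release()`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical near-cache handle (not the Coherence API). For illustration we assume
// the resource it holds is an invalidation listener registered on the back cache.
class ReleasableNearCache {
    private final BackCacheStub back;
    private final Consumer<String> invalidator = key -> { /* would drop the local copy */ };
    private boolean released = false;

    ReleasableNearCache(BackCacheStub back) {
        this.back = back;
        back.listeners.add(invalidator);
    }

    // Mirrors the intent of NamedCache.release(): give back what this instance holds.
    // Safe to call more than once.
    void release() {
        if (!released) {
            back.listeners.remove(invalidator);
            released = true;
        }
    }

    public static void main(String[] args) {
        BackCacheStub back = new BackCacheStub();
        ReleasableNearCache cache = new ReleasableNearCache(back); // used only for a period
        try {
            System.out.println(back.listeners.size()); // 1
        } finally {
            cache.release();                           // released even if the work above throws
        }
        System.out.println(back.listeners.size());     // 0
    }
}

// Hypothetical stand-in for the back cache: only tracks registered listeners.
class BackCacheStub {
    final List<Consumer<String>> listeners = new ArrayList<>();
}
```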

16.5 Sample Near Cache Configuration

The following sample code illustrates the configuration of a Near Cache. Sub-elements of <near-scheme> define the Near Cache. Note the use of the <front-scheme> element for configuring a local (front) cache and a <back-scheme> element for defining a remote (back) cache. See the <near-scheme> topic for a description of the Near Cache elements.

Example 16-2 Sample Near Cache Configuration

```xml
<?xml version="1.0"?>
<cache-config>
  <caching-scheme-mapping>
    <!-- Maps the name passed to CacheFactory.getCache() to the near scheme -->
    <cache-mapping>
      <cache-name>example-near-cache</cache-name>
      <scheme-name>example-near</scheme-name>
    </cache-mapping>
  </caching-scheme-mapping>

  <caching-schemes>
    <local-scheme>
      <scheme-name>example-local</scheme-name>
    </local-scheme>

    <near-scheme>
      <scheme-name>example-near</scheme-name>
      <!-- Front tier: a local cache that reuses the example-local scheme -->
      <front-scheme>
        <local-scheme>
          <scheme-ref>example-local</scheme-ref>
        </local-scheme>
      </front-scheme>
      <!-- Back tier: a remote cache that reuses the example-remote scheme -->
      <back-scheme>
        <remote-cache-scheme>
          <scheme-ref>example-remote</scheme-ref>
        </remote-cache-scheme>
      </back-scheme>
    </near-scheme>

    <!-- Coherence*Extend client connection to a proxy at localhost:9099 -->
    <remote-cache-scheme>
      <scheme-name>example-remote</scheme-name>
      <service-name>ExtendTcpCacheService</service-name>
      <initiator-config>
        <tcp-initiator>
          <remote-addresses>
            <socket-address>
              <address>localhost</address>
              <port>9099</port>
            </socket-address>
          </remote-addresses>
          <connect-timeout>5s</connect-timeout>
        </tcp-initiator>
        <outgoing-message-handler>
          <request-timeout>30s</request-timeout>
        </outgoing-message-handler>
      </initiator-config>
    </remote-cache-scheme>
  </caching-schemes>
</cache-config>
```
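The sample is wired together by names: `example-near-cache` maps to `example-near`, whose front tier refers to `example-local` and whose back tier refers to `example-remote`. To make that chain explicit, the following Java sketch follows those references using the JDK's DOM parser. It is a hypothetical helper (`SchemeResolver` and its methods are invented for illustration), not how Coherence itself loads its configuration, and it embeds only a condensed copy of the relevant elements from Example 16-2.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

// Hypothetical helper (not Coherence's configuration loader): follows the name
// references that tie a cache name to its near scheme and its two tiers.
class SchemeResolver {
    // Condensed copy of the parts of Example 16-2 that carry the references.
    static final String SAMPLE = "<cache-config>"
        + "<caching-scheme-mapping><cache-mapping>"
        + "<cache-name>example-near-cache</cache-name><scheme-name>example-near</scheme-name>"
        + "</cache-mapping></caching-scheme-mapping>"
        + "<caching-schemes><near-scheme><scheme-name>example-near</scheme-name>"
        + "<front-scheme><local-scheme><scheme-ref>example-local</scheme-ref></local-scheme></front-scheme>"
        + "<back-scheme><remote-cache-scheme><scheme-ref>example-remote</scheme-ref>"
        + "</remote-cache-scheme></back-scheme></near-scheme></caching-schemes></cache-config>";

    private final Document doc;

    SchemeResolver(String xml) {
        try {
            doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)));
        } catch (Exception e) {
            throw new IllegalArgumentException("cannot parse configuration", e);
        }
    }

    // <cache-mapping>: cache name -> scheme name (null if the cache is not mapped).
    String schemeForCache(String cacheName) {
        NodeList mappings = doc.getElementsByTagName("cache-mapping");
        for (int i = 0; i < mappings.getLength(); i++) {
            Element m = (Element) mappings.item(i);
            if (cacheName.equals(text(m, "cache-name"))) {
                return text(m, "scheme-name");
            }
        }
        return null;
    }

    // In the named <near-scheme>, the <scheme-ref> used by "front-scheme" or "back-scheme".
    String tierRef(String nearSchemeName, String tier) {
        NodeList nears = doc.getElementsByTagName("near-scheme");
        for (int i = 0; i < nears.getLength(); i++) {
            Element near = (Element) nears.item(i);
            // In this sample the near scheme's own <scheme-name> precedes its tiers, so it is found first.
            if (nearSchemeName.equals(text(near, "scheme-name"))) {
                NodeList tiers = near.getElementsByTagName(tier);
                return tiers.getLength() == 0 ? null : text((Element) tiers.item(0), "scheme-ref");
            }
        }
        return null;
    }

    // Trimmed text of the first descendant with the given tag, or null if there is none.
    private static String text(Element parent, String tag) {
        NodeList found = parent.getElementsByTagName(tag);
        return found.getLength() == 0 ? null : found.item(0).getTextContent().trim();
    }

    public static void main(String[] args) {
        SchemeResolver r = new SchemeResolver(SAMPLE);
        String near = r.schemeForCache("example-near-cache");
        System.out.println(near);                            // example-near
        System.out.println(r.tierRef(near, "front-scheme")); // example-local
        System.out.println(r.tierRef(near, "back-scheme"));  // example-remote
    }
}
```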