Logical Domains (LDoms) 1.1 Administration Guide

CHAPTER 6

A virtual disk contains two components: the virtual disk itself as it appears in a guest domain, and the virtual disk backend, which is where data is stored and where virtual I/O ends up. The virtual disk backend is exported from a service domain by the virtual disk server (vds) driver. The vds driver communicates with the virtual disk client (vdc) driver in the guest domain through the hypervisor using a logical domain channel (LDC). In the guest domain, the virtual disk appears as /dev/[r]dsk/cXdYsZ devices.

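The virtual disk backend can be physical or logical. Physical devices can include a physical disk or disk logical unit number (LUN), or a physical disk slice.

Logical devices can include a file, or a volume such as a ZFS, SVM, or VxVM volume.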

This section describes adding a virtual disk to a guest domain, changing virtual disk and timeout options, and removing a virtual disk from a guest domain. See Virtual Disk Backend Options for a description of virtual disk options. See Virtual Disk Timeout for a description of the virtual disk timeout.

Export the virtual disk backend from a service domain.

```
# ldm add-vdsdev [options={ro,slice,excl}] [mpgroup=mpgroup] backend volume_name@service_name
```

Assign the backend to a guest domain.

```
# ldm add-vdisk [timeout=seconds] disk_name volume_name@service_name ldom
```

Note - A backend is actually exported from the service domain and assigned to the guest domain when the guest domain (ldom) is bound.

A virtual disk backend can be exported multiple times either through the same or different virtual disk servers. Each exported instance of the virtual disk backend can then be assigned to either the same or different guest domains.

When a virtual disk backend is exported multiple times, it should not be exported with the exclusive (excl) option. Specifying the excl option allows the backend to be exported only once. The backend can be safely exported multiple times as a read-only device with the ro option.

The following example describes how to add the same virtual disk to two different guest domains through the same virtual disk service.

Export the virtual disk backend two times from a service domain by using the following commands.

```
# ldm add-vdsdev [options={ro,slice}] backend volume1@service_name
# ldm add-vdsdev [options={ro,slice}] backend volume2@service_name
```

The add-vdsdev subcommand displays the following warning to indicate that the backend is being exported more than once.

```
Warning: “backend” is already in use by one or more servers in guest “ldom”
```

Assign the exported backend to each guest domain by using the following commands.

The disk_name can be different for ldom1 and ldom2.

```
# ldm add-vdisk [timeout=seconds] disk_name volume1@service_name ldom1
# ldm add-vdisk [timeout=seconds] disk_name volume2@service_name ldom2
```
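The export commands in this example follow a fixed pattern, so they can be generated. As a minimal sketch, assuming a POSIX shell, the following hypothetical helper, multi_export_cmds, only prints (it does not run) the ldm add-vdsdev commands that export one backend a given number of times through one virtual disk service, naming the exports volume1, volume2, and so on as above. It refuses to continue when the options list contains excl, because that option allows only one export. The function name and its argument order are assumptions for illustration.

```shell
# Hypothetical dry-run helper (not part of LDoms): prints, without running
# them, the ldm add-vdsdev commands that export one backend COUNT times
# through one virtual disk service, using volume1, volume2, ... as names.
#   $1 options list (for example "ro"; may be empty)
#   $2 backend path   $3 virtual disk service   $4 number of exports
multi_export_cmds() {
    opts=$1 backend=$2 service=$3 count=$4
    # excl allows the backend to be exported only once, so reject it here.
    case ",$opts," in
        *,excl,*)
            echo "error: excl allows exporting the backend only once" >&2
            return 1 ;;
    esac
    i=1
    while [ "$i" -le "$count" ]; do
        if [ -n "$opts" ]; then
            echo "ldm add-vdsdev options=$opts $backend volume$i@$service"
        else
            echo "ldm add-vdsdev $backend volume$i@$service"
        fi
        i=$((i + 1))
    done
}
```

For example, multi_export_cmds ro /ldoms/shared.img primary-vds0 2 prints two add-vdsdev lines ending in volume1@primary-vds0 and volume2@primary-vds0 (the file /ldoms/shared.img is a hypothetical backend). Printing rather than executing keeps the sketch safe to try on a system without LDoms.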

When a backend is exported as a virtual disk, it can appear in the guest domain either as a full disk or as a single slice disk. The way it appears depends on the type of the backend and on the options used to export it.

When a backend is exported to a domain as a full disk, it appears in that domain as a regular disk with 8 slices (s0 to s7). Such a disk is visible with the format(1M) command. The disk’s partition table can be changed using either the fmthard(1M) or format(1M) command.

A full disk is also visible to the OS installation software and can be selected as a disk onto which the OS can be installed.

Any backend can be exported as a full disk except physical disk slices, which can only be exported as single slice disks.

When a backend is exported to a domain as a single slice disk, it appears in that domain as a regular disk with 8 slices (s0 to s7). However, only the first slice (s0) is usable. Such a disk is visible with the format(1M) command, but the disk’s partition table cannot be changed.

A single slice disk is also visible from the OS installation software and can be selected as a disk onto which you can install the OS. In that case, if you install the OS using the UNIX File System (UFS), then only the root partition (/) must be defined, and this partition must use all the disk space.

Any backend can be exported as a single slice disk except physical disks, which can only be exported as full disks.

Different options can be specified when exporting a virtual disk backend. These options are indicated in the options= argument of the ldm add-vdsdev command as a comma-separated list. The valid options are ro, slice, and excl.
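The rule for the options= argument can be expressed as a small check. The following is a minimal sketch, assuming a POSIX shell; valid_vdsdev_opts is a hypothetical name, and ldm performs its own validation, so this only mirrors the list of options given above.

```shell
# Hypothetical helper (not part of LDoms): returns 0 when every entry of a
# comma-separated options= list is one of the values this guide documents
# for ldm add-vdsdev (ro, slice, excl), and 1 otherwise.
valid_vdsdev_opts() {
    rest=$1
    [ -n "$rest" ] || return 1          # an empty list is rejected
    while [ -n "$rest" ]; do
        opt=${rest%%,*}                 # first entry of the remaining list
        case $rest in
            *,*) rest=${rest#*,} ;;     # drop that entry and its comma
            *)   rest= ;;               # it was the last entry
        esac
        case $opt in
            ro|slice|excl) ;;
            *) return 1 ;;              # unknown or empty entry
        esac
    done
    return 0
}
```

For example, valid_vdsdev_opts ro,slice succeeds, while valid_vdsdev_opts ro,exclusive fails because exclusive is not one of the documented option names.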

The read-only (ro) option specifies that the backend is to be exported as a read-only device. In that case, the virtual disk assigned to the guest domain can only be accessed for read operations, and any write operation to the virtual disk will fail.

The exclusive (excl) option specifies that the backend in the service domain has to be opened exclusively by the virtual disk server when it is exported as a virtual disk to another domain. When a backend is opened exclusively, it is not accessible by other applications in the service domain. This prevents the applications running in the service domain from inadvertently using a backend that is also being used by a guest domain.

Because the excl option prevents applications running in the service domain from accessing a backend exported to a guest domain, do not set the excl option in the following situations:

When guest domains are running, if you want to be able to use commands such as format(1M) or luxadm(1M) to manage physical disks, then do not export these disks with the excl option.

When you export an SVM volume, such as a RAID or a mirrored volume, do not set the excl option. Otherwise, this can prevent SVM from starting some recovery operations if a component of the RAID or mirrored volume fails. See Using Virtual Disks on Top of SVM for more information.

If the Veritas Volume Manager (VxVM) is installed in the service domain and Veritas Dynamic Multipathing (VxDMP) is enabled for physical disks, then physical disks have to be exported without the (non-default) excl option. Otherwise, the export fails, because the virtual disk server (vds) is unable to open the physical disk device. See Using Virtual Disks When VxVM Is Installed for more information.

If you are exporting the same virtual disk backend multiple times from the same virtual disk service, see Export a Virtual Disk Backend Multiple Times for more information.

By default, the backend is opened non-exclusively. That way, the backend can still be used by applications running in the service domain while it is exported to another domain. Note that this is a new behavior starting with the Solaris 10 5/08 OS release. Before the Solaris 10 5/08 OS release, disk backends were always opened exclusively, and it was not possible to have a backend opened non-exclusively.

A backend is normally exported either as a full disk or as a single slice disk depending on its type. If the slice option is specified, then the backend is forcibly exported as a single slice disk.

This option is useful when you want to export the raw content of a backend. For example, if you have a ZFS or SVM volume where you have already stored data and you want your guest domain to access this data, then you should export the ZFS or SVM volume using the slice option.

For more information about this option, see Virtual Disk Backend.

The virtual disk backend is the location where the data of a virtual disk is stored. The backend can be a disk, a disk slice, a file, or a volume, such as ZFS, SVM, or VxVM. A backend appears in a guest domain either as a full disk or as a single slice disk, depending on whether the slice option is set when the backend is exported from the service domain. By default, a virtual disk backend is exported non-exclusively as a readable-writable full disk.

A physical disk or disk LUN is always exported as a full disk. In that case, virtual disk drivers (vds and vdc) forward I/O from the virtual disk and act as a pass-through to the physical disk or disk LUN.

A physical disk or disk LUN is exported from a service domain by exporting the device that corresponds to slice 2 (s2) of that disk without setting the slice option. If you export slice 2 of a disk with the slice option, only this slice is exported and not the entire disk.

For example, to export the physical disk c1t48d0 as a virtual disk, you must export slice 2 of that disk (c1t48d0s2) from the service domain as follows.

```
service# ldm add-vdsdev /dev/dsk/c1t48d0s2 c1t48d0@primary-vds0
```

From the service domain, assign the disk (pdisk) to guest domain ldg1, for example.

```
service# ldm add-vdisk pdisk c1t48d0@primary-vds0 ldg1
```

After the guest domain is started and running the Solaris OS, you can list the disk (c0d1, for example) and see that the disk is accessible and is a full disk; that is, a regular disk with 8 slices.

```
ldg1# ls -1 /dev/dsk/c0d1s*
/dev/dsk/c0d1s0
/dev/dsk/c0d1s1
/dev/dsk/c0d1s2
/dev/dsk/c0d1s3
/dev/dsk/c0d1s4
/dev/dsk/c0d1s5
/dev/dsk/c0d1s6
/dev/dsk/c0d1s7
```

A physical disk slice is always exported as a single slice disk. In that case, virtual disk drivers (vds and vdc) forward I/O from the virtual disk and act as a pass-through to the physical disk slice.

A physical disk slice is exported from a service domain by exporting the corresponding slice device. If the device is not slice 2, it is automatically exported as a single slice disk whether or not you specify the slice option. If the device is slice 2 of the disk, you must set the slice option to export only slice 2 as a single slice disk; otherwise, the entire disk is exported as a full disk.

For example, to export slice 0 of the physical disk c1t57d0 as a virtual disk, you must export the device corresponding to that slice (c1t57d0s0) from the service domain as follows.

```
service# ldm add-vdsdev /dev/dsk/c1t57d0s0 c1t57d0s0@primary-vds0
```

You do not need to specify the slice option, because a slice is always exported as a single slice disk.

From the service domain, assign the disk (pslice) to guest domain ldg1, for example.

```
service# ldm add-vdisk pslice c1t57d0s0@primary-vds0 ldg1
```

After the guest domain is started and running the Solaris OS, you can list the disk (c0d13, for example) and see that the disk is accessible.

```
ldg1# ls -1 /dev/dsk/c0d13s*
/dev/dsk/c0d13s0
/dev/dsk/c0d13s1
/dev/dsk/c0d13s2
/dev/dsk/c0d13s3
/dev/dsk/c0d13s4
/dev/dsk/c0d13s5
/dev/dsk/c0d13s6
/dev/dsk/c0d13s7
```

Although there are 8 devices, because the disk is a single slice disk, only the first slice (s0) is usable.

A file or volume (for example, from ZFS or SVM) is exported either as a full disk or as a single slice disk depending on whether or not the slice option is set.

If you do not set the slice option, a file or volume is exported as a full disk. In that case, virtual disk drivers (vds and vdc) forward I/O from the virtual disk and manage the partitioning of the virtual disk. The file or volume eventually becomes a disk image containing data from all slices of the virtual disk and the metadata used to manage the partitioning and disk structure.

When a blank file or volume is exported as a full disk, it appears in the guest domain as an unformatted disk; that is, a disk with no partitions. You then need to run the format(1M) command in the guest domain to define usable partitions and to write a valid disk label. Any I/O to the virtual disk fails while the disk is unformatted.

From the service domain, create a file (fdisk0 for example) to use as the virtual disk.

```
service# mkfile 100m /ldoms/domain/test/fdisk0
```

The size of the file defines the size of the virtual disk. This example creates a 100-megabyte blank file to get a 100-megabyte virtual disk.

From the service domain, export the file as a virtual disk.

```
service# ldm add-vdsdev /ldoms/domain/test/fdisk0 fdisk0@primary-vds0
```

In this example, the slice option is not set, so the file is exported as a full disk.

From the service domain, assign the disk (fdisk) to guest domain ldg1, for example.

```
service# ldm add-vdisk fdisk fdisk0@primary-vds0 ldg1
```

After the guest domain is started and running the Solaris OS, you can list the disk (c0d5, for example) and see that the disk is accessible and is a full disk; that is, a regular disk with 8 slices.

```
ldg1# ls -1 /dev/dsk/c0d5s*
/dev/dsk/c0d5s0
/dev/dsk/c0d5s1
/dev/dsk/c0d5s2
/dev/dsk/c0d5s3
/dev/dsk/c0d5s4
/dev/dsk/c0d5s5
/dev/dsk/c0d5s6
/dev/dsk/c0d5s7
```

If the slice option is set, then the file or volume is exported as a single slice disk. In that case, the virtual disk has only one partition (s0), which is directly mapped to the file or volume backend. The file or volume only contains data written to the virtual disk with no extra data like partitioning information or disk structure.

When a file or volume is exported as a single slice disk, the system simulates a disk partitioning that makes the file or volume appear as a disk slice. Because the disk partitioning is simulated, you do not create partitions for that disk.

From the service domain, create a ZFS volume (zdisk0 for example) to use as a single slice disk.

```
service# zfs create -V 100m ldoms/domain/test/zdisk0
```

The size of the volume defines the size of the virtual disk. This example creates a 100-megabyte volume to get a 100-megabyte virtual disk.

From the service domain, export the device corresponding to that ZFS volume, and set the slice option so that the volume is exported as a single slice disk.

```
service# ldm add-vdsdev options=slice /dev/zvol/dsk/ldoms/domain/test/zdisk0 zdisk0@primary-vds0
```

From the service domain, assign the volume (zdisk0) to guest domain ldg1, for example.

```
service# ldm add-vdisk zdisk0 zdisk0@primary-vds0 ldg1
```

After the guest domain is started and running the Solaris OS, you can list the disk (c0d9, for example) and see that the disk is accessible and is a single slice disk (s0).

```
ldg1# ls -1 /dev/dsk/c0d9s*
/dev/dsk/c0d9s0
/dev/dsk/c0d9s1
/dev/dsk/c0d9s2
/dev/dsk/c0d9s3
/dev/dsk/c0d9s4
/dev/dsk/c0d9s5
/dev/dsk/c0d9s6
/dev/dsk/c0d9s7
```

Before the Solaris 10 5/08 OS release, the slice option did not exist, and volumes were exported as single slice disks. If you have a configuration exporting volumes as virtual disks and if you upgrade the system to the Solaris 10 5/08 OS, volumes are now exported as full disks instead of single slice disks. To preserve the old behavior and to have your volumes exported as single slice disks, you need to do either of the following:

Use the ldm set-vdsdev command in LDoms 1.1 software, and set the slice option for all volumes you want to export as single slice disks. Refer to the ldm man page or the Logical Domains (LDoms) Manager 1.1 Man Page Guide for more information about this command.

Add the following line to the /etc/system file on the service domain.

```
set vds:vd_volume_force_slice = 1
```

Note - Setting this tunable forces the export of all volumes as single slice disks, and you cannot export any volume as a full disk.

| Backend | No Slice Option | Slice Option Set |
|---|---|---|
| Disk (disk slice 2) | Full disk (the entire disk is exported) | Single slice disk (only slice 2 is exported) |
| Disk slice (not slice 2) | Single slice disk (a slice is always exported as a single slice disk) | Single slice disk |
| File | Full disk | Single slice disk |
| Volume, including ZFS, SVM, or VxVM | Full disk | Single slice disk |
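The table, together with the vds:vd_volume_force_slice tunable described earlier, can be read as a simple lookup. The following is a sketch only, assuming a POSIX shell; the function name vdisk_appearance and the backend-kind keywords disk-s2, disk-slice, file, and volume are hypothetical labels chosen for illustration.

```shell
# Hypothetical helper (not part of LDoms) encoding the table above: prints
# how a backend appears in the guest domain.
#   $1 backend kind: disk-s2 | disk-slice | file | volume
#   $2 "yes" if exported with options=slice, anything else otherwise
#   $3 (optional) value of vds:vd_volume_force_slice, default 0
vdisk_appearance() {
    kind=$1 slice=$2 force=${3:-0}
    case $kind in
        disk-slice)
            # A slice other than slice 2 is always a single slice disk.
            echo "single slice disk" ;;
        disk-s2|file)
            if [ "$slice" = yes ]; then
                echo "single slice disk"
            else
                echo "full disk"
            fi ;;
        volume)
            # The /etc/system tunable forces every volume to a single slice.
            if [ "$slice" = yes ] || [ "$force" = 1 ]; then
                echo "single slice disk"
            else
                echo "full disk"
            fi ;;
        *)
            echo "unknown backend kind: $kind" >&2
            return 1 ;;
    esac
}
```

For example, vdisk_appearance disk-s2 no prints full disk, and vdisk_appearance volume no 1 prints single slice disk because the tunable overrides the missing slice option.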

It is possible to use the loopback file (lofi) driver to export a file as a virtual disk. However, doing this adds an extra driver layer and impacts performance of the virtual disk. Instead, you can directly export a file as a full disk or as a single slice disk. See File and Volume.

To export a slice as a virtual disk either directly or indirectly (for example, through an SVM volume), first use the prtvtoc(1M) command to ensure that the slice does not start on the first block (block 0) of the physical disk.

If you directly or indirectly export a disk slice that starts on the first block of a physical disk, you might overwrite the partition table of the physical disk and make all partitions of that disk inaccessible.
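One way to apply the prtvtoc(1M) check is to filter its output for slices whose First Sector is 0. The following sketch assumes the usual prtvtoc layout, in which comment lines start with * and each slice row lists Partition, Tag, Flags, First Sector, Sector Count, and Last Sector; slices_at_block0 is a hypothetical name. Slice 2 usually covers the whole disk, so it normally appears in the output as well.

```shell
# Hypothetical helper: reads prtvtoc(1M) output on standard input and
# prints the number of each slice whose First Sector (fourth column) is 0.
# Comment lines, which start with "*", and blank lines are skipped.
slices_at_block0() {
    awk '$1 !~ /^\*/ && NF >= 6 && $4 == 0 { print $1 }'
}
```

For example, prtvtoc /dev/rdsk/c1t57d0s2 | slices_at_block0 lists the slices of c1t57d0 that start on block 0 and therefore should not be exported as slices.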

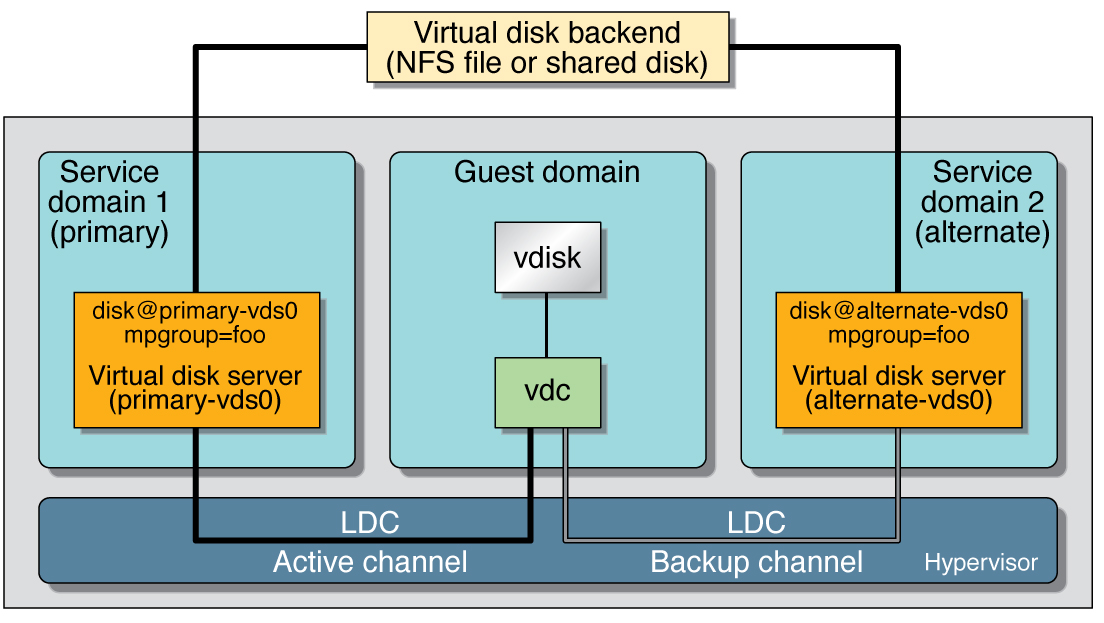

If a virtual disk backend is accessible through different service domains, then you can configure virtual disk multipathing so that the virtual disk in a guest domain remains accessible if a service domain goes down. An example of a virtual disk backend accessible through different service domains is a file on a network file system (NFS) server or a shared physical disk connected to several service domains.

To enable virtual disk multipathing, you must export a virtual disk backend from the different service domains and add it to the same multipathing group (mpgroup). The mpgroup is identified by a name and is configured when the virtual disk backend is exported.

Figure 5-2 illustrates how to configure virtual disk multipathing. In this example, a multipathing group named foo is used to create a virtual disk whose backend is accessible from two service domains: primary and alternate.

Export the virtual backend from the primary service domain.

```
# ldm add-vdsdev mpgroup=foo backend_path1 volume@primary-vds0
```

Where backend_path1 is the path to the virtual disk backend from the primary domain.

Export the same virtual backend from the alternate service domain.

```
# ldm add-vdsdev mpgroup=foo backend_path2 volume@alternate-vds0
```

Where backend_path2 is the path to the virtual disk backend from the alternate domain.

Export the virtual disk to the guest domain.

```
# ldm add-vdisk disk_name volume@primary-vds0 ldom
```
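The three steps above differ only in the service domain and the backend path. As a hypothetical dry-run sketch, assuming a POSIX shell (the helper name mpgroup_cmds and its service:path argument format are assumptions), the following function prints the ldm commands for any number of service domains, adds every export to the same mpgroup, and assigns the disk to the guest through the first service listed.

```shell
# Hypothetical dry-run helper (not part of LDoms): prints, without running
# them, the commands for virtual disk multipathing.
#   $1 mpgroup name   $2 volume name   $3 guest domain   $4 disk name
#   remaining args: one service:backend_path pair per service domain
mpgroup_cmds() {
    mpgroup=$1 volume=$2 guest=$3 disk=$4
    shift 4
    first=
    for pair in "$@"; do
        service=${pair%%:*}      # text before the first colon
        bpath=${pair#*:}         # everything after the first colon
        echo "ldm add-vdsdev mpgroup=$mpgroup $bpath $volume@$service"
        [ -n "$first" ] || first=$service
    done
    # At least one service domain is required.
    [ -n "$first" ] || return 1
    echo "ldm add-vdisk $disk $volume@$first $guest"
}
```

For example, mpgroup_cmds foo volume ldom disk_name primary-vds0:backend_path1 alternate-vds0:backend_path2 prints the same three commands as the procedure above.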

You can export a compact disc (CD) or digital versatile disc (DVD) the same way you export any regular disk. To export a CD or DVD to a guest domain, export slice 2 of the CD or DVD device as a full disk; that is, without the slice option.

Note - You cannot export the CD or DVD drive itself; you can only export the CD or DVD that is inside the CD or DVD drive. Therefore, a CD or DVD must be present inside the drive before you can export it. Also, to be able to export a CD or DVD, that CD or DVD cannot be in use in the service domain. In particular, the Volume Management file system service, volfs(7FS), must not use the CD or DVD. See Export a CD or DVD From the Service Domain to the Guest Domain for instructions on how to remove the device from use by volfs.

If you have an International Organization for Standardization (ISO) image of a CD or DVD stored in a file or on a volume, and you export that file or volume as a full disk, then it appears as a CD or DVD in the guest domain.

When you export a CD, DVD, or an ISO image, it automatically appears as a read-only device in the guest domain. However, you cannot perform any CD control operations from the guest domain; that is, you cannot start, stop, or eject the CD from the guest domain. If the exported CD, DVD, or ISO image is bootable, the guest domain can be booted on the corresponding virtual disk.

For example, if you export a Solaris OS installation DVD, you can boot the guest domain on the virtual disk corresponding to that DVD and install the guest domain from that DVD. To do so, when the guest domain reaches the ok prompt, use the following command.

```
ok boot /virtual-devices@100/channel-devices@200/disk@n:f
```

Where n is the index of the virtual disk representing the exported DVD.


From the service domain, check whether the volume management daemon, vold(1M), is running and online.

```
service# svcs volfs
STATE          STIME    FMRI
online         12:28:12 svc:/system/filesystem/volfs:default
```

If the volume management daemon is not running or online, go to step 5.

If the volume management daemon is running and online, as in the example in Step 2, do the following:

From the service domain, find the disk path for the CD-ROM device.

```
service# cdrw -l
Looking for CD devices...
    Node                   Connected Device                Device type
----------------------+--------------------------------+-----------------
 /dev/rdsk/c1t0d0s2    | MATSHITA CD-RW  CW-8124   DZ13 | CD Reader/Writer
```

From the service domain, export the CD or DVD disk device as a full disk.

```
service# ldm add-vdsdev /dev/dsk/c1t0d0s2 cdrom@primary-vds0
```

From the service domain, assign the exported CD or DVD to the guest domain (ldg1 in this example).

```
service# ldm add-vdisk cdrom cdrom@primary-vds0 ldg1
```
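The cdrw -l command reports the raw device path (/dev/rdsk/...), while the add-vdsdev command in this procedure uses the corresponding block device path (/dev/dsk/...). The following sketch, with the hypothetical name cd_block_devices, extracts the device paths from cdrw -l output and rewrites them; it assumes the output layout shown above, where the node name is the first field of each device row.

```shell
# Hypothetical helper: reads "cdrw -l" output on standard input and prints
# the block device path (/dev/dsk/...) for each raw path (/dev/rdsk/...)
# found in the first column.
cd_block_devices() {
    awk '$1 ~ /^\/dev\/rdsk\// {
        sub(/^\/dev\/rdsk\//, "/dev/dsk/", $1)
        print $1
    }'
}
```

For example, cdrw -l | cd_block_devices prints /dev/dsk/c1t0d0s2 for the drive shown above, which is the path passed to ldm add-vdsdev.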

By default, if the service domain providing access to a virtual disk backend is down, all I/O from the guest domain to the corresponding virtual disk is blocked. The I/O is automatically resumed when the service domain is operational and is servicing I/O requests to the virtual disk backend.

However, there are some cases when file systems or applications might prefer an I/O operation to fail and report an error, rather than block, if the service domain is down for too long. It is now possible to set a connection timeout period for each virtual disk, which applies to establishing a connection between the virtual disk client on a guest domain and the virtual disk server on the service domain. When that timeout period is reached, any pending I/O and any new I/O fails as long as the service domain is down and the connection between the virtual disk client and server is not reestablished.

This timeout can be set by doing one of the following:

Using the ldm add-vdisk command.

```
ldm add-vdisk timeout=seconds disk_name volume_name@service_name ldom
```

Using the ldm set-vdisk command.

```
ldm set-vdisk timeout=seconds disk_name ldom
```

Specify the timeout in seconds. If the timeout is set to 0, the timeout is disabled and I/O is blocked while the service domain is down (this is the default setting and behavior).

Alternatively, the timeout can be set by adding the following line to the /etc/system file on the guest domain.

```
set vdc:vdc_timeout = seconds
```

Note - If this tunable is set, it overrides any timeout setting made using the ldm CLI. Also, the tunable sets the timeout for all virtual disks in the guest domain.
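The precedence between the two settings can be summarized in a few lines. This is a sketch of the rules stated above, assuming a POSIX shell, not LDoms code; effective_vdisk_timeout is a hypothetical name, and an empty argument stands for a setting that was never made.

```shell
# Hypothetical helper (not part of LDoms) modelling the rules above.
#   $1 timeout in seconds set with ldm add-vdisk or ldm set-vdisk
#      (empty if it was never set)
#   $2 value of vdc:vdc_timeout in the guest's /etc/system (empty if unset)
# Prints the effective timeout; 0 means no timeout, so I/O blocks while
# the service domain is down.
effective_vdisk_timeout() {
    if [ -n "$2" ]; then
        echo "$2"    # the /etc/system tunable overrides the ldm setting
    elif [ -n "$1" ]; then
        echo "$1"    # per-disk value set with the ldm CLI
    else
        echo 0       # default: timeout disabled, I/O blocks
    fi
}
```

For example, effective_vdisk_timeout 30 60 prints 60, because the tunable in /etc/system takes precedence over the 30 seconds set with ldm.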

If a physical SCSI disk or LUN is exported as a full disk, the corresponding virtual disk supports the user SCSI command interface, uscsi(7D), and multihost disk control operations, mhd(7I). Other virtual disks, such as virtual disks having a file or a volume as a backend, do not support these interfaces.

As a consequence, applications or product features using SCSI commands (such as SVM metaset, or Solaris Cluster shared devices) can be used in guest domains only with virtual disks having a physical SCSI disk as a backend.

The format(1M) command works in a guest domain with virtual disks exported as full disks. Single slice disks are not seen by the format(1M) command, and it is not possible to change the partitioning of such disks.

Virtual disks whose backends are SCSI disks support all format(1M) subcommands. Virtual disks whose backends are not SCSI disks do not support some format(1M) subcommands, such as repair and defect. In that case, the behavior of format(1M) is similar to the behavior of Integrated Drive Electronics (IDE) disks.

This section describes using the Zettabyte File System (ZFS) to store virtual disk backends exported to guest domains. ZFS provides a convenient and powerful solution to create and manage virtual disk backends. ZFS enables you to store disk images in ZFS volumes or ZFS files, to create snapshots of disk images, and to use clones to duplicate disk images and provision additional domains.

Refer to the Solaris ZFS Administration Guide in the Solaris 10 System Administrator Collection for more information about using ZFS.

In the following descriptions and examples, the primary domain is also the service domain where disk images are stored.

To store the disk images, first create a ZFS storage pool in the service domain. For example, this command creates the ZFS storage pool ldmpool containing the disk c1t50d0 in the primary domain.

```
primary# zpool create ldmpool c1t50d0
```

This example creates a disk image for guest domain ldg1. To do so, a ZFS file system is created for this guest domain, and all disk images of this guest domain are stored on that file system.

```
primary# zfs create ldmpool/ldg1
```

Disk images can be stored on ZFS volumes or ZFS files. Creating a ZFS volume, whatever its size, is quick using the zfs create -V command. On the other hand, ZFS files have to be created using the mkfile command. The command can take some time to complete, especially if the file to create is quite large, which is often the case when creating a disk image.

Both ZFS volumes and ZFS files can take advantage of ZFS features such as snapshot and clone, but a ZFS volume is a pseudo device while a ZFS file is a regular file.

If the disk image is to be used as a virtual disk onto which the Solaris OS is to be installed, then it should be large enough to contain:

Therefore, the size of a disk image to install the entire Solaris OS should be at least 8 gigabytes.

Export the ZFS volume or file as a virtual disk. The syntax to export a ZFS volume or file is the same, but the path to the backend is different.

When the guest domain is started, the ZFS volume or file appears as a virtual disk on which the Solaris OS can be installed.
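The difference between the two backend paths can be illustrated with a hypothetical helper, zfs_backend_path. The sketch assumes a POSIX shell, that a volume's block device is under /dev/zvol/dsk (as in the ZFS volume example earlier in this chapter), and that the ZFS file system is mounted at its default mount point, /<dataset>; the dataset and file names in the usage example are also assumptions.

```shell
# Hypothetical helper: prints the backend path to pass to ldm add-vdsdev
# for a disk image stored in ZFS.
#   $1 volume | file
#   $2 dataset (the volume itself, or the file system holding the file)
#   $3 file name (file case only)
# Assumes ZFS file systems use their default mount point, /<dataset>.
zfs_backend_path() {
    case $1 in
        volume) echo "/dev/zvol/dsk/$2" ;;
        file)   [ -n "$3" ] || return 1
                echo "/$2/$3" ;;
        *)      return 1 ;;
    esac
}
```

For example, zfs_backend_path volume ldmpool/ldg1/disk0 prints /dev/zvol/dsk/ldmpool/ldg1/disk0, while zfs_backend_path file ldmpool/ldg1 disk0 prints /ldmpool/ldg1/disk0; either path is then given to ldm add-vdsdev with the same syntax.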

When your disk image is stored on a ZFS volume or on a ZFS file, you can create snapshots of this disk image by using the ZFS snapshot command.

Before you create a snapshot of the disk image, ensure that the disk is not currently in use in the guest domain, so that the data currently stored on the disk image is coherent. There are several ways to ensure that a disk is not in use in a guest domain. You can either:

Stop and unbind the guest domain. This is the safest solution, and this is the only solution available if you want to create a snapshot of a disk image used as the boot disk of a guest domain.

Alternatively, you can unmount any slices of the disk you want to snapshot that are mounted in the guest domain, and ensure that no slice is in use in the guest domain.

In this example, because of the ZFS layout, the command to create a snapshot of the disk image is the same whether the disk image is stored on a ZFS volume or on a ZFS file.

Once you have created a snapshot of a disk image, you can duplicate this disk image by using the ZFS clone command. Then the cloned image can be assigned to another domain. Cloning a boot disk image quickly creates a boot disk for a new guest domain without having to perform the entire Solaris OS installation process.

For example, if disk0 was the boot disk of domain ldg1, do the following to clone that disk to create a boot disk for domain ldg2.

```
primary# zfs create ldmpool/ldg2
primary# zfs clone ldmpool/ldg1/disk0@version_1 ldmpool/ldg2/disk0
```

Then ldmpool/ldg2/disk0 can be exported as a virtual disk and assigned to the new ldg2 domain. The domain ldg2 can boot directly from that virtual disk without having to go through the OS installation process.

When a boot disk image is cloned, the new image is exactly the same as the original boot disk, and it contains any information that has been stored on the boot disk before the image was cloned, such as the host name, the IP address, the mounted file system table, or any system configuration or tuning.

Because the mounted file system table is the same on the original boot disk image and on the cloned disk image, the cloned disk image has to be assigned to the new domain in the same order as it was on the original domain. For example, if the boot disk image was assigned as the first disk of the original domain, then the cloned disk image has to be assigned as the first disk of the new domain. Otherwise, the new domain is unable to boot.
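The clone-and-assign sequence can be sketched as a dry run. The helper below, clone_boot_disk_cmds, is hypothetical and only prints commands. It assumes a POSIX shell, that the boot disk image is a ZFS volume named disk0 under <pool>/<domain>, that primary-vds0 is the virtual disk service, that the snapshot already exists, and that the new domain has no other disks yet, so the clone is the first disk added to it, matching the original domain's layout. The volume name <domain>-disk0 is likewise an assumption.

```shell
# Hypothetical dry-run helper (not part of LDoms or ZFS): prints, without
# running them, the commands that clone a domain's boot disk snapshot for
# a new domain and assign the clone to it as its first disk.
# Assumes a ZFS volume named disk0 under <pool>/<domain> and the virtual
# disk service primary-vds0.
#   $1 pool   $2 source domain   $3 snapshot name   $4 new domain
clone_boot_disk_cmds() {
    pool=$1 src=$2 snap=$3 dst=$4
    echo "zfs create $pool/$dst"
    echo "zfs clone $pool/$src/disk0@$snap $pool/$dst/disk0"
    echo "ldm add-vdsdev /dev/zvol/dsk/$pool/$dst/disk0 $dst-disk0@primary-vds0"
    echo "ldm add-vdisk disk0 $dst-disk0@primary-vds0 $dst"
}
```

For example, clone_boot_disk_cmds ldmpool ldg1 version_1 ldg2 prints the two zfs commands of the previous example followed by the export and assignment for ldg2.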

If the original domain was configured with a static IP address, then a new domain using the cloned image starts with the same IP address. In that case, you can change the network configuration of the new domain by using the sys-unconfig(1M) command. To avoid this problem, you can also create a snapshot of a disk image of an unconfigured system.

After the sys-unconfig(1M) command completes, the domain halts.

Take a snapshot of the domain boot disk image, for example.

```
primary# zfs snapshot ldmpool/ldg1/disk0@unconfigured
```

At this point you have the snapshot of the boot disk image of an unconfigured system. You can clone this image to create a new domain which, when first booted, asks for the configuration of the system.

If the original domain was configured with the Dynamic Host Configuration Protocol (DHCP), then a new domain using the cloned image also uses DHCP. In that case, you do not need to change the network configuration of the new domain because it automatically receives an IP address and its network configuration as it boots.

This section describes using volume managers in a Logical Domains environment.

Any Zettabyte File System (ZFS), Solaris Volume Manager (SVM), or Veritas Volume Manager (VxVM) volume can be exported from a service domain to a guest domain as a virtual disk. A volume can be exported either as a single slice disk (if the slice option is specified with the ldm add-vdsdev command) or as a full disk.

Note - The remainder of this section uses an SVM volume as an example. However, the discussion also applies to ZFS and VxVM volumes.

The following example shows how to export a volume as a single slice disk. For example, if a service domain exports the SVM volume /dev/md/dsk/d0 to domain1 as a single slice disk, and domain1 sees that virtual disk as /dev/dsk/c0d2*, then domain1 only has an s0 device; that is, /dev/dsk/c0d2s0.

The virtual disk in the guest domain (for example, /dev/dsk/c0d2s0) is directly mapped to the associated volume (for example, /dev/md/dsk/d0), and data stored onto the virtual disk from the guest domain are directly stored onto the associated volume with no extra metadata. So data stored on the virtual disk from the guest domain can also be directly accessed from the service domain through the associated volume.

If the SVM volume d0 is exported from the primary domain to domain1, then the configuration of domain1 requires some extra steps.

```
primary# metainit d0 3 1 c2t70d0s6 1 c2t80d0s6 1 c2t90d0s6
primary# ldm add-vdsdev options=slice /dev/md/dsk/d0 vol3@primary-vds0
primary# ldm add-vdisk vdisk3 vol3@primary-vds0 domain1
```

After domain1 has been bound and started, the exported volume appears as /dev/dsk/c0d2s0, for example, and you can use it.

```
domain1# newfs /dev/rdsk/c0d2s0
domain1# mount /dev/dsk/c0d2s0 /mnt
domain1# echo test-domain1 > /mnt/file
```

After domain1 has been stopped and unbound, data stored on the virtual disk from domain1 can be directly accessed from the primary domain through SVM volume d0.

```
primary# mount /dev/md/dsk/d0 /mnt
primary# cat /mnt/file
test-domain1
```

Note - A single slice disk cannot be seen by the format(1M) command, cannot be partitioned, and cannot be used as an installation disk for the Solaris OS. See Virtual Disk Appearance for more information about this topic.

When a RAID or mirror SVM volume is used as a virtual disk by another domain, then it has to be exported without setting the exclusive (excl) option. Otherwise, if there is a failure on one of the components of the SVM volume, then the recovery of the SVM volume using the metareplace command or using a hot spare does not start. The metastat command sees the volume as resynchronizing, but the resynchronization does not progress.

For example, /dev/md/dsk/d0 is a RAID SVM volume exported as a virtual disk with the excl option to another domain, and d0 is configured with some hot-spare devices. If a component of d0 fails, SVM replaces the failing component with a hot spare and resynchronizes the SVM volume. However, the resynchronization does not start. The volume is reported as resynchronizing, but the resynchronization does not progress.

```
# metastat d0
d0: RAID
    State: Resyncing
    Hot spare pool: hsp000
    Interlace: 32 blocks
    Size: 20097600 blocks (9.6 GB)
Original device:
    Size: 20100992 blocks (9.6 GB)
        Device                                             Start Block  Dbase  State      Reloc
        c2t2d0s1                                           330          No     Okay       Yes
        c4t12d0s1                                          330          No     Okay       Yes
        /dev/dsk/c10t600C0FF0000000000015153295A4B100d0s1  330          No     Resyncing  Yes
```

In such a situation, the domain using the SVM volume as a virtual disk has to be stopped and unbound to complete the resynchronization. Then the SVM volume can be resynchronized using the metasync command.

```
# metasync d0
```
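To spot the symptom described above from a script, the component rows of the metastat output can be filtered on their State column. This sketch assumes a POSIX shell and the RAID layout shown above, in which component rows start with a device name (cXtYdZsN or a /dev/ path) and State is the fourth field; resyncing_components is a hypothetical name, and other volume types may use a layout that this filter does not handle.

```shell
# Hypothetical helper: reads metastat output for a RAID volume on standard
# input and prints each component whose State column reads "Resyncing".
# Component rows start with cXtYdZsN or a /dev/ path; State is field 4.
resyncing_components() {
    awk '($1 ~ /^c[0-9]/ || $1 ~ /^\/dev\//) && $4 == "Resyncing" { print $1 }'
}
```

For example, metastat d0 | resyncing_components prints /dev/dsk/c10t600C0FF0000000000015153295A4B100d0s1 for the output shown above. If the same component keeps appearing while the volume is exported with excl, stop and unbind the domain and run metasync as described.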

When the Veritas Volume Manager (VxVM) is installed on your system, and if Veritas Dynamic Multipathing (DMP) is enabled on a physical disk or partition you want to export as virtual disk, then you have to export that disk or partition without setting the (non-default) excl option. Otherwise, you receive an error in /var/adm/messages while binding a domain that uses such a disk.

```
vd_setup_vd(): ldi_open_by_name(/dev/dsk/c4t12d0s2) = errno 16
vds_add_vd(): Failed to add vdisk ID 0
```

You can check if Veritas DMP is enabled by checking multipathing information in the output of the command vxdisk list; for example:

```
# vxdisk list Disk_3
Device:    Disk_3
devicetag: Disk_3
type:      auto
info:      format=none
flags:     online ready private autoconfig invalid
pubpaths:  block=/dev/vx/dmp/Disk_3s2 char=/dev/vx/rdmp/Disk_3s2
guid:      -
udid:      SEAGATE%5FST336753LSUN36G%5FDISKS%5F3032333948303144304E0000
site:      -
Multipathing information:
numpaths:   1
c4t12d0s2       state=enabled
```

Alternatively, if Veritas DMP is enabled on a disk or a slice that you want to export as a virtual disk with the excl option set, then you can disable DMP using the vxdmpadm command. For example:

```
# vxdmpadm -f disable path=/dev/dsk/c4t12d0s2
```
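The check on the vxdisk list output can be scripted by looking for a path marked state=enabled after the Multipathing information: line. This is a minimal sketch, assuming a POSIX shell and the output layout shown above; dmp_enabled is a hypothetical name.

```shell
# Hypothetical helper: reads "vxdisk list <disk>" output on standard input
# and returns 0 if any path listed after the "Multipathing information:"
# line is marked state=enabled, and 1 otherwise.
dmp_enabled() {
    awk '/Multipathing information:/ { in_mp = 1; next }
         in_mp && /state=enabled/    { found = 1 }
         END { exit !found }'
}
```

For example, vxdisk list Disk_3 | dmp_enabled succeeds for the disk shown above, which means that disk must be exported without the excl option or have DMP disabled first.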

This section describes using volume managers on top of virtual disks.

Any virtual disk can be used with ZFS. A ZFS storage pool (zpool) can be imported in any domain that sees all the storage devices that are part of this zpool, regardless of whether the domain sees all these devices as virtual devices or real devices.

Any virtual disk can be used in the SVM local disk set. For example, a virtual disk can be used for storing the SVM metadevice state database, metadb(1M), of the local disk set or for creating SVM volumes in the local disk set.

Any virtual disk whose backend is a SCSI disk can be used in an SVM shared disk set, metaset(1M). Virtual disks whose backends are not SCSI disks cannot be added into an SVM shared disk set. Trying to add a virtual disk whose backend is not a SCSI disk into an SVM shared disk set fails with an error similar to the following.

```
# metaset -s test -a c2d2
metaset: domain1: test: failed to reserve any drives
```

Copyright © 2008, Sun Microsystems, Inc. All rights reserved.