APPENDIX A

RAID Basics

This appendix provides background information about RAID, including an overview of RAID terminology and RAID levels.

Redundant array of independent disks (RAID) is a storage technology used to improve the processing capability of storage systems. This technology is designed to provide reliability in disk array systems and to take advantage of the performance gains offered by an array of multiple disks over single-disk storage.

RAID’s two primary underlying concepts are:

In the event of a disk failure, disk access continues normally and the failure is transparent to the host system.

A logical drive is an array of independent physical drives. Increased availability, capacity, and performance are achieved by creating logical drives. The logical drive appears to the host the same as a local hard disk drive does.

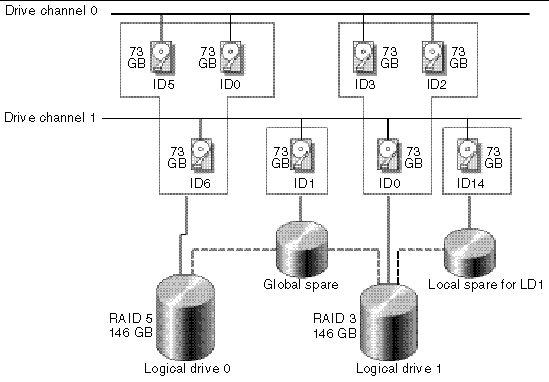

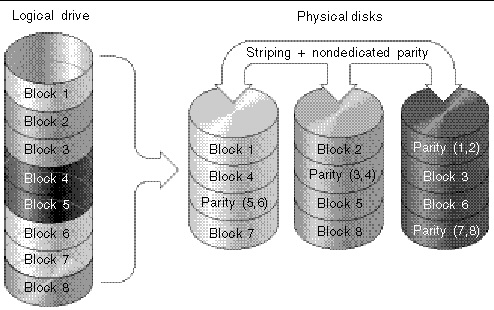

FIGURE A-1 Logical Drive Including Multiple Physical Drives

A logical volume is composed of two or more logical drives. The logical volume can be divided into a maximum of 32 partitions for Fibre Channel. During operation, the host sees a nonpartitioned logical volume or a partition of a logical volume as one single physical drive.

A local spare drive is a standby drive assigned to serve one specified logical drive. When a member drive of this specified logical drive fails, the local spare drive becomes a member drive and automatically starts to rebuild.

A global spare drive is not restricted to serving one specified logical drive. When a member drive from any of the logical drives fails, the global spare drive joins that logical drive and automatically starts to rebuild.

You can connect up to 15 devices (excluding the controller itself) to a SCSI channel when the Wide function is enabled (16-bit SCSI). You can connect up to 125 devices to an FC channel in loop mode. Each device has a unique ID that identifies the device on the SCSI bus or FC loop.

A logical drive consists of a group of SCSI drives, Fibre Channel drives, or SATA drives. Physical drives in one logical drive do not have to come from the same SCSI channel. Also, each logical drive can be configured for a different RAID level.

A drive can be assigned as the local spare drive to one specified logical drive, or as a global spare drive. A spare is not available for logical drives that have no data redundancy (RAID 0).

FIGURE A-2 Allocation of Drives in Logical Drive Configurations

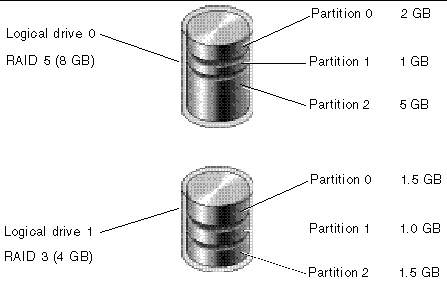

You can divide a logical drive or logical volume into several partitions or use the entire logical drive as a single partition.

FIGURE A-3 Partitions in Logical Drive Configurations

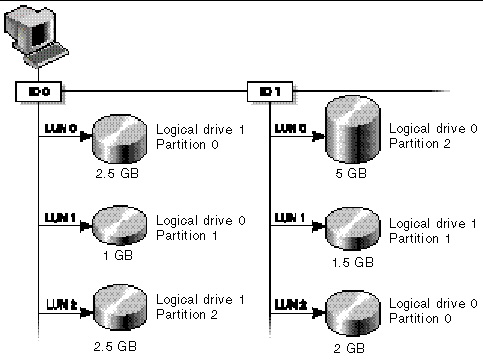

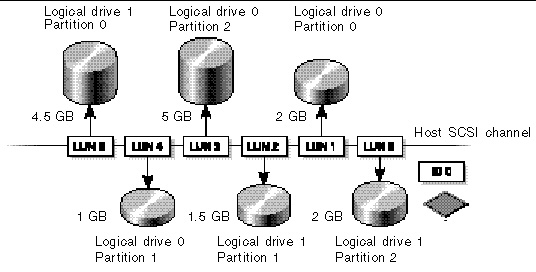

Each partition is mapped to LUNs under host SCSI IDs or IDs on host channels. Each SCSI ID/LUN acts as one individual hard drive to the host computer.

FIGURE A-4 Mapping Partitions to Host ID/LUNs

FIGURE A-5 Mapping Partitions to LUNs Under an ID
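The mapping of partitions to host ID/LUN pairs described above can be pictured as a lookup table. The following is a minimal sketch only. The function name `map_partition`, the partition labels, and the rule that one ID/LUN pair holds at most one partition are assumptions made for illustration and are not taken from the controller firmware.

```python
from typing import Dict, Tuple

# Key: (host ID, LUN). Value: a label for the mapped partition.
LunMap = Dict[Tuple[int, int], str]


def map_partition(lun_map: LunMap, host_id: int, lun: int, partition: str) -> None:
    """Map a partition to a LUN under a host ID (hypothetical helper)."""
    key = (host_id, lun)
    if key in lun_map:
        # Assumption: an ID/LUN pair can present only one partition.
        raise ValueError(f"ID {host_id} LUN {lun} is already mapped")
    lun_map[key] = partition


lun_map: LunMap = {}
map_partition(lun_map, 0, 0, "logical drive 0, partition 0")
map_partition(lun_map, 0, 1, "logical drive 0, partition 1")
map_partition(lun_map, 1, 0, "logical drive 1, partition 0")
```

In this sketch, each key corresponds to what the host sees as one individual hard drive.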

There are several ways to implement a RAID array, using a combination of mirroring, striping, duplexing, and parity technologies. These various techniques are referred to as RAID levels. Each level offers a mix of performance, reliability, and cost. Each level uses a distinct algorithm to implement fault tolerance.

There are several RAID level choices: RAID 0, 1, 3, 5, 1+0, 3+0 (30), and 5+0 (50). RAID levels 1, 3, and 5 are the most commonly used.

The following table provides a brief overview of the RAID levels.

Capacity refers to the total number (N) of physical drives available for data storage. For example, if the capacity is N-1 and the logical drive consists of six 36-Mbyte drives, the disk space available for storage is equal to five disk drives (5 x 36 Mbyte, or 180 Mbyte). The -1 refers to the space consumed by the redundancy data that is striped across the six drives, which is equal to the size of one of the disk drives.

For RAID 3+0 (30) and 5+0 (50), capacity refers to the total number of physical drives (N) minus one physical drive (#) for each logical drive in the volume. For example, if the total number of disk drives in the logical volume is twenty 36-Mbyte drives and the total number of logical drives is 2, the disk space available for storage is equal to 18 disk drives (18 x 36 Mbyte, or 648 Mbyte).
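The capacity arithmetic above can be checked with a short calculation. The following is a minimal sketch. The function names are hypothetical, and the N/2 rule for mirrored levels is an assumption generalized from the two-drive mirroring case rather than a value quoted from the overview table.

```python
def usable_drives(level: str, total_drives: int, logical_drives: int = 1) -> int:
    """Return how many drives' worth of space is available for data."""
    if level == "0":
        return total_drives                   # N: no redundancy
    if level in ("1", "1+0"):
        return total_drives // 2              # assumption: N/2, every block is mirrored
    if level in ("3", "5"):
        return total_drives - 1               # N-1: one drive's worth of parity
    if level in ("3+0", "5+0"):
        return total_drives - logical_drives  # N-#: one drive per logical drive
    raise ValueError(f"unsupported RAID level: {level}")


def usable_capacity(level: str, total_drives: int, drive_size: int,
                    logical_drives: int = 1) -> int:
    """Return usable capacity in the same unit as drive_size."""
    return usable_drives(level, total_drives, logical_drives) * drive_size


# The two worked examples from the text, with sizes in Mbyte:
print(usable_capacity("5", 6, 36))                       # 180
print(usable_capacity("5+0", 20, 36, logical_drives=2))  # 648
```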

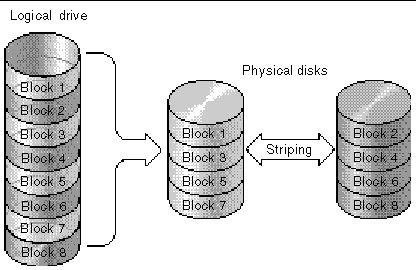

RAID 0 implements block striping, where data is broken into logical blocks and is striped across several drives. Unlike other RAID levels, there is no facility for redundancy. In the event of a disk failure, data is lost.

In block striping, the total disk capacity is equivalent to the sum of the capacities of all drives in the array. This combination of drives appears to the system as a single logical drive.

RAID 0 provides the highest performance. It is fast because data can be simultaneously transferred to or from every disk in the array. Furthermore, reads and writes to separate drives can be processed concurrently.
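Block striping can be illustrated by mapping each logical block to a drive and to a position on that drive. The following is a minimal sketch that assumes a stripe unit of one block and simple round-robin placement. The function name `locate_block` is hypothetical, and the actual stripe size used by a controller is configurable and not specified here.

```python
from typing import Tuple


def locate_block(logical_block: int, num_drives: int) -> Tuple[int, int]:
    """Map a logical block number to (drive index, block offset on that drive)."""
    if num_drives < 1:
        raise ValueError("a RAID 0 array needs at least one drive")
    drive = logical_block % num_drives    # consecutive blocks go to consecutive drives
    offset = logical_block // num_drives  # each full pass over the drives adds one row
    return drive, offset


# Blocks 0 through 5 on a three-drive array land on drives 0, 1, 2, 0, 1, 2.
placement = [locate_block(block, 3) for block in range(6)]
```

Because consecutive blocks land on different drives, a large transfer can keep every drive in the array busy at the same time.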

FIGURE A-6 RAID 0 Configuration

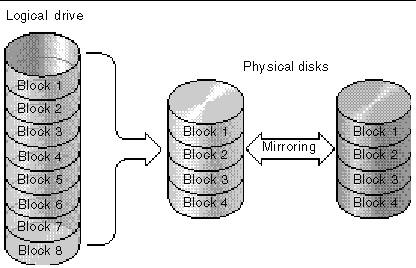

RAID 1 implements disk mirroring, where a copy of the same data is recorded onto two drives. By keeping two copies of data on separate disks, data is protected against a disk failure. If, at any time, a disk in the RAID 1 array fails, the remaining good disk (copy) can provide all of the data needed, thus preventing downtime.

In disk mirroring, the total usable capacity is equivalent to the capacity of one drive in the RAID 1 array. Thus, combining two 1-Gbyte drives, for example, creates a single logical drive with a total usable capacity of 1 Gbyte. This combination of drives appears to the system as a single logical drive.

| Note - RAID 1 does not allow expansion. RAID levels 3 and 5 permit expansion by adding drives to an existing array. |

FIGURE A-7 RAID 1 Configuration

In addition to the data protection that RAID 1 provides, this RAID level also improves performance. When multiple concurrent I/O operations are occurring, they can be distributed between the disk copies, thus reducing total effective data access time.

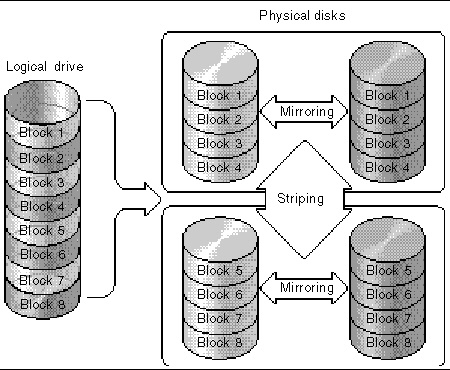

RAID 1+0 combines RAID 0 and RAID 1 to offer mirroring and disk striping. Using RAID 1+0 is a time-saving feature that enables you to configure a large number of disks for mirroring in one step. It is not a standard RAID level option that you can select; it does not appear in the list of RAID level options supported by the controller. If four or more disk drives are chosen for a RAID 1 logical drive, RAID 1+0 is performed automatically.
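The automatic selection rule and the combination of mirroring with striping can be sketched as follows. This is an illustration only. The function names are hypothetical, and the pairing of adjacent drives into mirrors and the requirement of an even drive count are assumptions made for the sketch, not documented controller behavior.

```python
from typing import Tuple


def effective_raid_level(requested_level: str, drive_count: int) -> str:
    """Return the level actually used, following the rule described above."""
    if requested_level == "1" and drive_count >= 4:
        return "1+0"
    return requested_level


def mirrored_pair_for_block(logical_block: int, drive_count: int) -> Tuple[int, int]:
    """Return the two drives holding a block when striping across mirrored pairs."""
    if drive_count < 4 or drive_count % 2 != 0:
        # Assumption: the sketch handles only an even number of drives.
        raise ValueError("this sketch expects an even number of drives, four or more")
    pair = logical_block % (drive_count // 2)  # stripe across the mirrored pairs
    return 2 * pair, 2 * pair + 1              # assumption: adjacent drives form a pair
```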

FIGURE A-8 RAID 1+0 Configuration

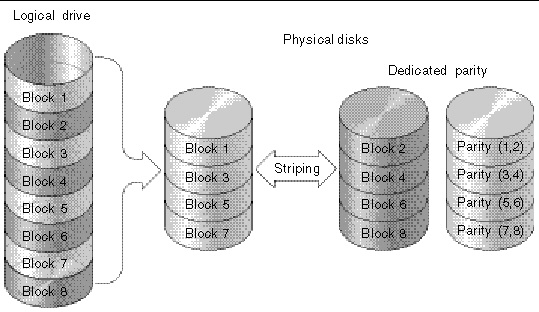

RAID 3 implements block striping with dedicated parity. This RAID level breaks data into logical blocks, the size of a disk block, and then stripes these blocks across several drives. One drive is dedicated to parity. In the event that a disk fails, the original data can be reconstructed using the parity information and the information on the remaining disks.

In RAID 3, the total disk capacity is equivalent to the sum of the capacities of all drives in the combination, excluding the parity drive. Thus, combining four 1-Gbyte drives, for example, creates a single logical drive with a total usable capacity of 3 Gbyte. This combination appears to the system as a single logical drive.

RAID 3 provides increased data transfer rates when data is being read in large chunks or sequentially. However, in write operations that do not span every drive, performance is reduced because the information stored in the parity drive needs to be recalculated and rewritten every time new data is written, limiting simultaneous I/O.
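Reconstruction from parity can be demonstrated in a few lines. The following is a minimal sketch that assumes the parity block is the byte-wise XOR of the data blocks in a stripe, which is the usual choice for parity RAID. The text above does not name the parity function, and the helper name `xor_blocks` is hypothetical.

```python
from functools import reduce
from typing import Sequence


def xor_blocks(blocks: Sequence[bytes]) -> bytes:
    """Return the byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe across three data drives, plus the dedicated parity drive.
data = [b"RAID", b"disk", b"data"]
parity = xor_blocks(data)

# The drive holding data[1] fails. XOR of the surviving blocks and the
# parity block yields the missing block.
rebuilt = xor_blocks([data[0], data[2], parity])
```

The same calculation explains the write penalty: changing any one data block requires the parity block for that stripe to be recalculated and rewritten.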

FIGURE A-9 RAID 3 Configuration

RAID 5 implements multiple-block striping with distributed parity. This RAID level offers redundancy with the parity information distributed across all disks in the array. Data and its parity are never stored on the same disk. In the event that a disk fails, original data can be reconstructed using the parity information and the information on the remaining disks.

FIGURE A-10 RAID 5 Configuration

RAID 5 offers increased data transfer rates when data is accessed in large chunks or randomly, and reduced data access time during many simultaneous I/O cycles.
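Distributed parity can be visualized by listing which drive holds the parity block in each stripe. The following is a minimal sketch that assumes the parity position moves by one drive per stripe. The rotation order used by the controller is not stated in this appendix, and the function name `raid5_stripe_layout` is hypothetical.

```python
from typing import List


def raid5_stripe_layout(stripe: int, num_drives: int) -> List[str]:
    """Return one label per drive for a stripe: 'P' for parity, 'Dn' for data."""
    if num_drives < 3:
        raise ValueError("RAID 5 needs at least three drives")
    parity_drive = (num_drives - 1 - stripe) % num_drives  # assumed rotation order
    data_index = stripe * (num_drives - 1)                 # first data block in this stripe
    layout = []
    for drive in range(num_drives):
        if drive == parity_drive:
            layout.append("P")
        else:
            layout.append(f"D{data_index}")
            data_index += 1
    return layout


for stripe in range(4):
    print(raid5_stripe_layout(stripe, 4))
# ['D0', 'D1', 'D2', 'P']
# ['D3', 'D4', 'P', 'D5']
# ['D6', 'P', 'D7', 'D8']
# ['P', 'D9', 'D10', 'D11']
```

Because the parity block sits on a different drive in each stripe, parity updates are spread across the array instead of queuing on one dedicated drive.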

Advanced RAID levels require the use of the array’s built-in volume manager. These combination RAID levels provide the protection benefits of RAID 1, 3, or 5 with the performance of RAID 0. To use advanced RAID, first create two or more RAID 1, 3, or 5 arrays, and then join them. The following table provides a description of the advanced RAID levels.

The external RAID controllers provide both local spare drive and global spare drive functions. The local spare drive is used only for one specified logical drive; the global spare drive can be used for any logical drive on the array.

The local spare drive always has higher priority than the global spare drive. Therefore, if a drive fails and both types of spares are available at the same time, the local spare is used. The local spare is also used when the failed drive needs a replacement of greater size than the global spare provides.

If there is a failed drive in the RAID 5 logical drive, replace the failed drive with a new drive to keep the logical drive working. To identify a failed drive, refer to the Sun StorEdge 3000 Family RAID Firmware User’s Guide for your array.

| Caution - If, when trying to remove a failed drive, you mistakenly remove the wrong drive, you can no longer access the logical drive because you have incorrectly failed another drive. |

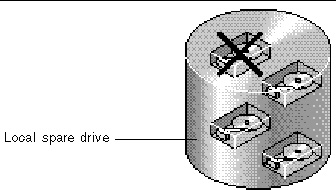

A local spare drive is a standby drive assigned to serve one specified logical drive. When a member drive of this specified logical drive fails, the local spare drive becomes a member drive and automatically starts to rebuild.

A local spare drive always has higher priority than a global spare drive; that is, if a drive fails and there is a local spare and a global spare drive available, the local spare drive is used.

FIGURE A-11 Local (Dedicated) Spare

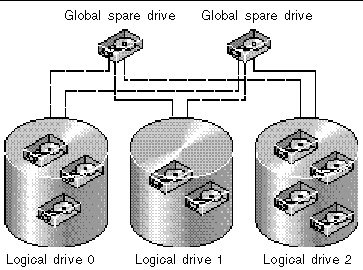

A global spare drive is available for all logical drives rather than serving only one logical drive (see FIGURE A-12). When a member drive from any of the logical drives fails, the global spare drive joins that logical drive and automatically starts to rebuild.


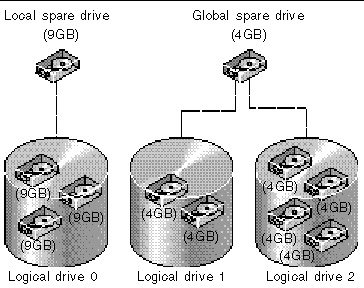

In FIGURE A-13, the member drives in logical drive 0 are 9-Gbyte drives, and the members in logical drives 1 and 2 are all 4-Gbyte drives.

FIGURE A-13 Mixing Local and Global Spares

A local spare drive always has higher priority than a global spare drive; that is, if a drive fails and both a local spare and a global spare drive are available, the local spare drive is used.

In FIGURE A-13, the 4-Gbyte global spare drive cannot join logical drive 0 because its capacity is insufficient. The 9-Gbyte local spare drive takes over in logical drive 0 when a drive in this logical drive fails. If the failed drive is in logical drive 1 or 2, the 4-Gbyte global spare drive immediately joins that logical drive.
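The spare selection rules in this section can be summarized in a short sketch. The `Spare` class, the `pick_spare` function, and the drive names are hypothetical and serve only to illustrate two rules: a spare must be at least as large as the failed drive, and a local spare is preferred over a global spare.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Spare:
    name: str
    size_gb: int
    logical_drive: Optional[int] = None  # None marks a global spare


def pick_spare(spares: List[Spare], failed_logical_drive: int,
               failed_drive_size_gb: int) -> Optional[Spare]:
    """Return the spare that would join the logical drive, or None."""
    candidates = [
        s for s in spares
        if s.size_gb >= failed_drive_size_gb                   # must be large enough
        and s.logical_drive in (None, failed_logical_drive)    # global, or local to this drive
    ]
    # False sorts before True, so local spares come before global spares.
    candidates.sort(key=lambda s: s.logical_drive is None)
    return candidates[0] if candidates else None


# The configuration described for FIGURE A-13.
spares = [
    Spare("global spare", size_gb=4),
    Spare("local spare", size_gb=9, logical_drive=0),
]
print(pick_spare(spares, failed_logical_drive=0, failed_drive_size_gb=9).name)  # local spare
print(pick_spare(spares, failed_logical_drive=1, failed_drive_size_gb=4).name)  # global spare
```

If the local spare were absent, a failure in logical drive 0 would leave no usable spare in this sketch, because the 4-Gbyte global spare is too small.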

Copyright © 2009 Sun Microsystems, Inc. All rights reserved.