Oracle Solaris Cluster 3.3 With Sun StorEdge 3510 or 3511 FC RAID Array Manual

1. Installing and Maintaining Sun StorEdge 3510 and 3511 Fibre Channel RAID Arrays

Storage Array Cabling Configurations

How to Install a Storage Array

Adding a Storage Array to a Running Cluster

How to Perform Initial Configuration Tasks on the Storage Array

How to Connect the Storage Array to FC Switches

How to Connect the Node to the FC Switches or the Storage Array

Configuring Storage Arrays in a Running Cluster

StorEdge 3510 and 3511 FC RAID Array FRUs

How to Remove a Storage Array From a Running Cluster

How to Upgrade Storage Array Firmware

Replacing a Node-to-Switch Component

How to Replace a Node-to-Switch Component in a Cluster That Uses Multipathing

How to Replace a Node-to-Switch Component in a Cluster Without Multipathing

This section contains the procedures listed in Table 1-1.

Table 1-1 Task Map: Installing Storage Arrays

You can install the StorEdge 3510 and 3511 FC RAID arrays in several different configurations. Use the Sun StorEdge 3000 Family Best Practices Manual to help evaluate your needs and determine which configuration is best for your situation. See your Oracle service provider for currently supported Oracle Solaris Cluster configurations.

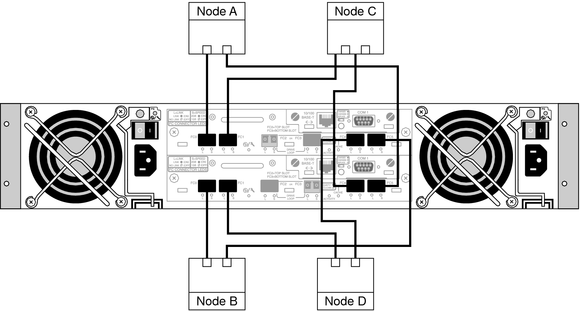

The following figures provide examples of configurations with multipathing solutions. In direct-attached storage (DAS) configurations with multipathing, you map each LUN to each host channel, so all nodes can see all 256 LUNs.

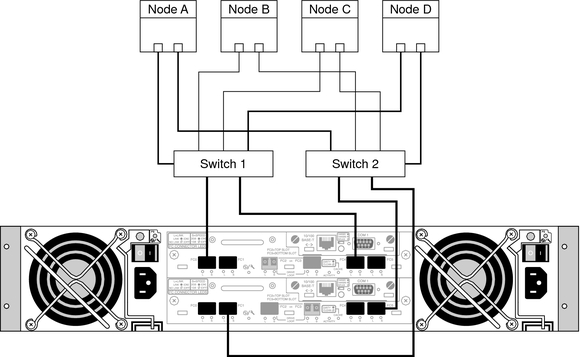

Figure 1-1 Sun StorEdge 3510 DAS Configuration With Multipathing and Two Controllers

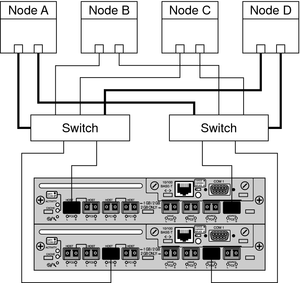

Figure 1-2 Sun StorEdge 3511 DAS Configuration With Multipathing and Two Controllers

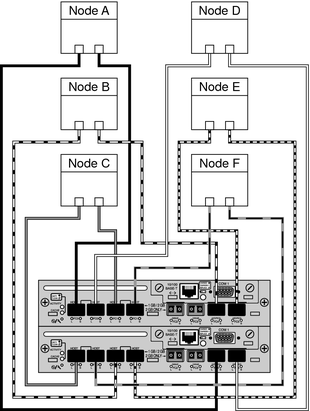

The two-controller SAN configurations allow 32 LUNs to be mapped to each pair of host channels. Since these configurations use multipathing, each node sees a total of 64 LUNs.
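The LUN total quoted above follows from simple multiplication. As a minimal sketch for a POSIX shell (the function name `total_luns` is hypothetical, and the figure of two host-channel pairs is an assumption inferred from the 32 and 64 totals in the text, not stated there):

```shell
#!/bin/sh
# Illustrative arithmetic only.
# total_luns LUNS_PER_PAIR PAIRS
#   LUNS_PER_PAIR: LUNs mapped to each pair of host channels (32 in the text)
#   PAIRS:         number of host-channel pairs (assumed to be 2)
total_luns() {
    echo $(( $1 * $2 ))
}
```

Under that assumption, `total_luns 32 2` prints 64, which matches the per-node total given for the two-controller SAN configurations.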

Figure 1-3 Sun StorEdge 3510 SAN Configuration With Multipathing and Two Controllers

Figure 1-4 Sun StorEdge 3511 SAN Configuration With Multipathing and Two Controllers

Use this procedure to install and configure storage arrays before installing the Oracle Solaris Operating System and Oracle Solaris Cluster software on your cluster nodes. If you need to add a storage array to an existing cluster, use the procedure in Adding a Storage Array to a Running Cluster.

Before You Begin

This procedure assumes that the hardware is not connected.

Note - If you plan to attach a StorEdge 3510 or 3511 FC expansion storage array to a StorEdge 3510 or 3511 FC RAID storage array, attach the expansion storage array before connecting the RAID storage array to the cluster nodes. See the Sun StorEdge 3000 Family Installation, Operation, and Service Manual for more information.

For the procedure on installing host adapters, see the documentation that shipped with your host adapters and nodes.

For the procedure on installing an FC switch, see the documentation that shipped with your switch hardware.

Note - You must use FC switches when installing storage arrays in a SAN configuration.

For the procedures on installing a GBIC or an SFP to an FC switch, see the documentation that shipped with your FC switch hardware.

For the procedures on connecting your FC storage array, see the Sun StorEdge 3000 Family Installation, Operation, and Service Manual.

If you plan to create a storage area network (SAN), connect the storage array to the FC switches using fiber-optic cables.

If you plan to have a DAS configuration, connect the storage array to the nodes.

Verify that all components are powered on and functional.

For the procedure on powering up the storage arrays and checking LEDs, see the Sun StorEdge 3000 Family Installation, Operation, and Service Manual.

For procedures on setting up logical drives and LUNs, see the Sun StorEdge 3000 Family Installation, Operation, and Service Manual or the Sun StorEdge 3000 Family RAID Firmware 3.27 User's Guide.

For the procedure on configuring the storage array, see the Sun StorEdge 3000 Family Installation, Operation, and Service Manual.

For the procedure on installing the Solaris operating environment, see How to Install Solaris Software in the Oracle Solaris Cluster Software Installation Guide.

Oracle Solaris Cluster software requires patch version 113723-03 or later for each Sun StorEdge 3510 array in the cluster.
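The "or later" requirement amounts to comparing the revision suffix of a patch ID. The following is a minimal sketch for a POSIX shell, not a supported tool: the function name `patch_meets_minimum` is hypothetical, and how you obtain the installed patch ID for an array is outside its scope.

```shell
#!/bin/sh
# patch_meets_minimum INSTALLED BASE MIN_REV
#   INSTALLED: patch ID in BASE-REV form, for example 113723-05
#   BASE:      required patch number, for example 113723
#   MIN_REV:   lowest acceptable revision, for example 3
# Returns 0 if INSTALLED is the same patch at revision MIN_REV or later.
patch_meets_minimum() {
    installed=$1
    base=$2
    min_rev=$3

    # The patch number must match exactly.
    case $installed in
        "$base"-*) ;;
        *) return 1 ;;
    esac

    # Take the revision after the hyphen and reject non-numeric values.
    rev=${installed#"$base"-}
    case $rev in
        ''|*[!0-9]*) return 1 ;;
    esac

    # Strip leading zeros so that "03" compares as 3.
    rev=$(printf '%s' "$rev" | sed 's/^0*//')
    [ "${rev:-0}" -ge "$min_rev" ]
}
```

For example, `patch_meets_minimum 113723-05 113723 3` succeeds, while the same call with `113723-02` fails.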

See the Oracle Solaris Cluster release notes documentation for information about accessing Oracle's Sun EarlyNotifier web pages. The EarlyNotifier web pages list information about any required patches or firmware levels that are available for download.

When using these arrays, Oracle Solaris Cluster software requires Sun StorEdge SAN Foundation software.

# devfsadm -C

# luxadm probe

# format
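The three commands above can be chained into one step that you run on each node. The following is a minimal sketch rather than part of the documented procedure: the `run` helper, the `DRY_RUN` variable, and the function name `refresh_fc_devices` are hypothetical, and feeding `format` from /dev/null so that it lists the disks and exits without prompting is an assumption about running it non-interactively.

```shell
#!/bin/sh
# Dry-run is the default: commands are printed, not executed.
DRY_RUN=${DRY_RUN:-1}

# run CMD...: print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

# Rebuild device links, probe for FC devices, then list the visible disks.
refresh_fc_devices() {
    run devfsadm -C || return 1   # clean up stale /dev links
    run luxadm probe || return 1  # discover FC-attached devices
    # Assumption: with no input, format prints the disk list and exits.
    run format </dev/null
}
```

Call `refresh_fc_devices` to preview the sequence, and set `DRY_RUN=0` as superuser to execute it. Stopping at the first failure keeps a failed probe from being mistaken for an empty disk list.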

For software installation procedures, see the Oracle Solaris Cluster software installation documentation.

See Also

To continue with Oracle Solaris Cluster software installation tasks, see the Oracle Solaris Cluster software installation documentation.

Use this procedure to add a new storage array to a running cluster. To install a storage array in a new Oracle Solaris Cluster configuration that is not running, use the procedure in How to Install a Storage Array.

If you need to add a storage array to more than two nodes, repeat the steps for each additional node that connects to the storage array.

Note - This procedure assumes that your nodes are not configured with dynamic reconfiguration functionality.

If your nodes are configured for dynamic reconfiguration, see the Oracle Solaris Cluster system administration documentation and skip steps that instruct you to shut down the node.

For the procedures on configuring the storage array, see the Sun StorEdge 3000 Family Installation, Operation, and Service Manual.

Oracle Solaris Cluster software requires patch version 113723-03 or later for each Sun StorEdge 3510 array in the cluster.

See the Oracle Solaris Cluster release notes documentation for information about accessing Oracle's EarlyNotifier web pages. The EarlyNotifier web pages list information about any required patches or firmware levels that are available for download. For the procedure on applying any host adapter's firmware patch, see the firmware patch README file.

For the procedures on setting up logical drives and LUNs, see the Sun StorEdge 3000 Family Installation, Operation, and Service Manual or the Sun StorEdge 3000 Family RAID Firmware 3.27 User's Guide.

Use this procedure if you plan to add a storage array to a SAN environment. If you do not plan to add the storage array to a SAN environment, go to How to Connect the Node to the FC Switches or the Storage Array.

For the procedure on installing an SFP, see the Sun StorEdge 3000 Family Installation, Operation, and Service Manual.

For the procedure on installing a GBIC or an SFP to an FC switch, see the documentation that shipped with your FC switch hardware.

For the procedure on installing a fiber-optic cable, see the Sun StorEdge 3000 Family Installation, Operation, and Service Manual.

Use this procedure when you add a storage array to a SAN or DAS configuration. In SAN configurations, you connect the node to the FC switches. In DAS configurations, you connect the node directly to the storage array.

Before You Begin

This procedure provides the long forms of the Oracle Solaris Cluster commands. Most commands also have short forms. Except for the forms of the command names, the commands are identical.

To perform this procedure, become superuser or assume a role that provides solaris.cluster.read and solaris.cluster.modify role-based access control (RBAC) authorization.

Record this information because you will use it in Step 12 and Step 13 of this procedure to return resource groups and device groups to these nodes.

# clresourcegroup status +

# cldevicegroup status +

# clnode evacuate nodename
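Because the recorded status is needed again when you return the groups to the node, it can help to save it to files before evacuating. The following is a minimal sketch under stated assumptions: the helpers `run` and `capture`, the `DRY_RUN` variable, the function name `record_and_evacuate`, and the output file names are all hypothetical.

```shell
#!/bin/sh
# Dry-run is the default: commands are printed, not executed.
DRY_RUN=${DRY_RUN:-1}

# run CMD...: print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

# capture FILE CMD...: like run, but save the command's output in FILE.
capture() {
    file=$1
    shift
    if [ "$DRY_RUN" = 1 ]; then
        echo "would run: $* > $file"
    else
        "$@" > "$file"
    fi
}

# record_and_evacuate NODE [OUTDIR]
# Save resource group and device group status, then evacuate NODE.
record_and_evacuate() {
    node=$1
    outdir=${2:-/var/tmp}
    capture "$outdir/rg-status.$node" clresourcegroup status + || return 1
    capture "$outdir/dg-status.$node" cldevicegroup status + || return 1
    run clnode evacuate "$node"
}
```

The evacuation runs only after both status files are written, so the record of where each group was running is not lost if a status command fails.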

For the procedure on installing a GBIC or an SFP to an FC switch, see the documentation that shipped with your FC switch hardware.

For the procedure on installing a GBIC or an SFP to a storage array, see the Sun StorEdge 3000 Family Installation, Operation, and Service Manual.

For the procedure on installing a fiber-optic cable, see the Sun StorEdge 3000 Family Installation, Operation, and Service Manual.

See the Oracle Solaris Cluster release notes documentation for information about accessing Oracle's EarlyNotifier web pages. The EarlyNotifier web pages list information about any required patches or firmware levels that are available for download. For the procedure on applying any host adapter's firmware patch, see the firmware patch README file.

# devfsadm -C

# cldevice populate

# format

# cldevice list -v
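One way to check the result of the commands above is to confirm that each node has a path to the new devices. The following is a minimal sketch: the function name `dids_for_node` is hypothetical, and it assumes that each data row of `cldevice list -v` output has the DID device name in the first column and a `nodename:/dev/rdsk/...` path in the second.

```shell
#!/bin/sh
# dids_for_node NODE
# Read `cldevice list -v` output on standard input and print, one per line,
# each DID device name that has a path on NODE.
dids_for_node() {
    while read -r did path rest; do
        # Assumed row layout: <DID name> <node>:<device path>
        case $path in
            "$1":*) echo "$did" ;;
        esac
    done | sort -u
}
```

A possible use is `cldevice list -v | dids_for_node nodename`, run once for each node that connects to the storage array. Header and separator lines are ignored because their second column does not start with the node name and a colon.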

Perform the following step for each device group you want to return to the original node.

# cldevicegroup switch -n nodename devicegroup1[ devicegroup2 …]

-n nodename: The node to which you are restoring device groups.

devicegroup1[ devicegroup2 …]: The device group or groups that you are restoring to the node.

Perform the following step for each resource group you want to return to the original node.

# clresourcegroup switch -n nodename resourcegroup1[ resourcegroup2 …]

-n nodename: For failover resource groups, the node to which the groups are returned. For scalable resource groups, the node list to which the groups are returned.

resourcegroup1[ resourcegroup2 …]: The resource group or groups that you are returning to the node or nodes.
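The two switch commands above can be driven from the lists of groups that you recorded earlier in the procedure. The following is a minimal sketch: the `run` helper, the `DRY_RUN` variable, and the function name `restore_groups` are hypothetical, and the group names in the usage note are placeholders.

```shell
#!/bin/sh
# Dry-run is the default: commands are printed, not executed.
DRY_RUN=${DRY_RUN:-1}

# run CMD...: print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

# restore_groups NODE "DG1 DG2 ..." "RG1 RG2 ..."
# Return device groups first, then resource groups, to NODE.
restore_groups() {
    node=$1
    device_groups=$2
    resource_groups=$3
    if [ -n "$device_groups" ]; then
        # Left unquoted on purpose so each group name is a separate argument.
        run cldevicegroup switch -n "$node" $device_groups || return 1
    fi
    if [ -n "$resource_groups" ]; then
        run clresourcegroup switch -n "$node" $resource_groups || return 1
    fi
}
```

For example, `restore_groups nodename "dg1 dg2" "rg1"` previews both commands, and `DRY_RUN=0` executes them. For scalable resource groups, the first argument would be a node list rather than a single node, as described above.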

For more information, see your Veritas Volume Manager documentation.