Using Sun QFS and Sun Storage Archive Manager With Oracle Solaris Cluster Sun QFS and Sun Storage Archive Manager 5.3 Information Library

1. Using SAM-QFS With Oracle Solaris Cluster

2. Requirements for Using SAM-QFS With Oracle Solaris Cluster

3. Configuring Sun QFS Local Failover File Systems With Oracle Solaris Cluster

4. Configuring Sun QFS Shared File Systems With Oracle Solaris Cluster

Task Map: Configuring Clustered File Systems With Oracle Solaris Cluster

Editing mcf Files for a Clustered File System

How to Edit mcf Files for a Clustered File System

Creating the Shared Hosts File on the Metadata Server

How Metadata Server Addresses Are Obtained

How to Enable a Shared File System as a SUNW.qfs Resource

5. Configuring SAM-QFS Archiving in an Oracle Solaris Cluster Environment (HA-SAM)

The shared hosts configuration file must reside in the following location on the metadata server:

/etc/opt/SUNWsamfs/hosts.fsname

Comments are permitted in the hosts configuration file. Comment lines must begin with a pound character (#). Characters to the right of the pound character are ignored.
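The comment rule can be illustrated with a short Python sketch. The function names `strip_comment` and `significant_lines` are hypothetical and are not part of the SAM-QFS software. The sketch also assumes that a pound character anywhere on a line starts a comment, which is one reading of the rule above.

```python
def strip_comment(line):
    """Return the part of a hosts-file line that precedes any '#' character."""
    # Everything from the first pound character to the end of the line is ignored.
    return line.split("#", 1)[0].rstrip()


def significant_lines(text):
    """Drop comments and blank lines, keeping only lines that carry host data."""
    stripped = (strip_comment(line) for line in text.splitlines())
    return [line for line in stripped if line.strip()]
```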

The following table shows the fields in the hosts configuration file.

In a shared file system, each client host obtains the list of metadata server IP addresses from the metadata server host.

The metadata server and the client hosts use both the /etc/opt/SUNWsamfs/hosts.fsname file on the metadata server and the hosts.fsname.local file on each client host (if it exists) to determine the host interface to use when accessing the file system.

The information in this section might be useful when you are debugging.

In a shared file system, each client host obtains the list of metadata server IP addresses from the shared hosts file.

The metadata server and the client hosts use the shared hosts file on the metadata server and the hosts.fsname.local file on each client host (if it exists) to determine the host interface to use when accessing the metadata server.

Note - The term client, as in network client, is used to refer to both client hosts and the metadata server host.

This process is as follows:

1. The client obtains the list of metadata server host IP interfaces from the file system's on-disk shared hosts file. To examine this file, issue the samsharefs command from the metadata server or from a potential metadata server.

2. The client searches for an /etc/opt/SUNWsamfs/hosts.fsname.local file. Depending on the outcome of the search, one of the following occurs:

   - If a hosts.fsname.local file does not exist, the client attempts to connect, in turn, to each address in the servers line in the shared hosts file until it succeeds in connecting.

   - If the hosts.fsname.local file exists, the client performs the following tasks:

     1. It compares the list of addresses for the metadata server from both the shared hosts file on the file system and the hosts.fsname.local file.

     2. It builds a list of addresses that are present in both places, and then it attempts to connect to each of these addresses, in turn, until it succeeds in connecting to the server. If the order of the addresses differs in these files, the client uses the ordering in the hosts.fsname.local file.
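The selection process described above can be sketched in Python as follows. This is only an illustration of the documented behavior, not the actual client implementation. The function names, the `try_connect` callback, and the `titan.example.com` address are hypothetical.

```python
from typing import Callable, Iterable, List, Optional


def candidate_addresses(shared: List[str], local: Optional[List[str]]) -> List[str]:
    """Return the metadata server addresses to try, in connection order.

    shared: addresses listed for the server in the on-disk shared hosts file.
    local:  addresses listed in hosts.fsname.local, or None if the file is absent.
    """
    if local is None:
        # No local file: try every shared address in the order it is listed.
        return list(shared)
    # Local file present: keep only addresses found in both places,
    # ordered as they appear in the local file.
    allowed = set(shared)
    return [addr for addr in local if addr in allowed]


def connect_first(addresses: Iterable[str],
                  try_connect: Callable[[str], bool]) -> Optional[str]:
    """Try each address in turn and return the first one that connects."""
    for addr in addresses:
        if try_connect(addr):
            return addr
    return None


# Hypothetical addresses, chosen only to show the ordering rule.
shared_hosts = ["172.16.0.129", "titan.example.com"]
local_hosts = ["titan.example.com", "172.16.0.129"]

print(candidate_addresses(shared_hosts, None))
print(candidate_addresses(shared_hosts, local_hosts))
```

When debugging, a result that is an empty list would suggest that the two files share no address for the server, so the client has nothing to connect to.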

Example 4-2 Sun QFS Shared File System Hosts File Example

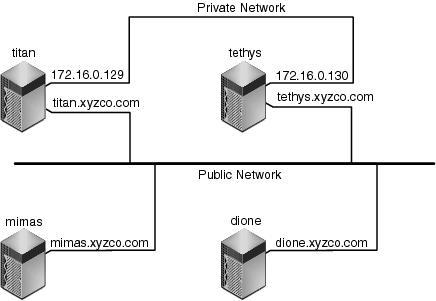

This set of examples shows a detailed scenario for a shared file system that comprises four hosts.

The following example shows a hosts file that lists four hosts.

# File /etc/opt/SUNWsamfs/hosts.sharefs1 # Host Host IP Server Not Server # Name Addresses Priority Used Host # ---- ----------------- -------- ---- ----- titan 172.16.0.129 1 - server tethys 172.16.0.130 2 - mimas mimas - - dione dione - -

Figure 4-1 Network Interfaces for Sun QFS Shared File System Hosts File Example
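To make the column layout of the hosts.sharefs1 example concrete, the following Python sketch parses the same four entries into records. The parser is hypothetical and is not shipped with the software. It assumes that multiple addresses in the second column are separated by commas and that a hyphen means the field has no value.

```python
HOSTS_TEXT = """\
# File /etc/opt/SUNWsamfs/hosts.sharefs1
titan   172.16.0.129  1  -  server
tethys  172.16.0.130  2  -
mimas   mimas         -  -
dione   dione         -  -
"""


def parse_hosts(text):
    """Parse hosts-file text into a list of dictionaries, one per host."""
    records = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if not line:
            continue
        fields = line.split()
        name, addresses, priority = fields[0], fields[1], fields[2]
        records.append({
            "name": name,
            "addresses": addresses.split(","),  # assumption: comma-separated
            "priority": None if priority == "-" else int(priority),
            # The fifth column, when present, marks the current server.
            "is_current_server": len(fields) > 4 and fields[4] == "server",
        })
    return records


for record in parse_hosts(HOSTS_TEXT):
    print(record)
```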

Example 4-3 File hosts.sharefs1.local on titan and tethys

Systems titan and tethys share a private network connection with interfaces 172.16.0.129 and 172.16.0.130. To guarantee that titan and tethys always communicate over their private network connection, the system administrator has created identical copies of /etc/opt/SUNWsamfs/hosts.sharefs1.local on each system.

The following example shows the information in the hosts.sharefs1.local files on titan and tethys.

    # This is file /etc/opt/SUNWsamfs/hosts.sharefs1.local
    # Host Name    Host Interfaces
    # ---------    ---------------
    titan          172.16.0.129
    tethys         172.16.0.130

Example 4-4 File hosts.sharefs1.local on mimas and dione

Systems mimas and dione are not on the private network. To guarantee that they always connect to titan and tethys through titan's and tethys's public interfaces, the system administrator has created identical copies of /etc/opt/SUNWsamfs/hosts.sharefs1.local on mimas and dione.

The following example shows the information in the hosts.sharefs1.local files on mimas and dione.

    # This is file /etc/opt/SUNWsamfs/hosts.sharefs1.local
    # Host Name    Host Interfaces
    # ----------   --------------
    titan          titan
    tethys         tethys

Perform this task on each host that can mount the file system.

If the file system is not mounted, see Mounting the File System in Sun QFS and Sun Storage Archive Manager 5.3 Installation Guide and follow the instructions there.

For example:

    # ps -ef | grep sam-sharefsd
    root 26167 26158  0 18:35:20 ?        0:00 sam-sharefsd sharefs1
    root 27808 27018  0 10:48:46 pts/21   0:00 grep sam-sharefsd

This example shows that the sam-sharefsd daemon is active for the sharefs1 file system.
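If this check needs to be repeated on several hosts, the ps output can be examined with a small script. The following Python sketch is hypothetical. It assumes that the file system name is the argument that follows sam-sharefsd on the command line, as in the output above, and it works on captured text rather than running ps itself.

```python
import os

PS_OUTPUT = """\
root 26167 26158 0 18:35:20 ? 0:00 sam-sharefsd sharefs1
root 27808 27018 0 10:48:46 pts/21 0:00 grep sam-sharefsd
"""


def sharefsd_file_systems(ps_output):
    """Return the file system names that have a sam-sharefsd process."""
    names = []
    for line in ps_output.splitlines():
        fields = line.split()
        if "grep" in fields:
            continue  # skip the grep process itself
        for i, token in enumerate(fields):
            # Match the daemon by base name so that a full path also works.
            if os.path.basename(token) == "sam-sharefsd" and i + 1 < len(fields):
                names.append(fields[i + 1])
                break
    return names


print(sharefsd_file_systems(PS_OUTPUT))
```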

Note - If the sam-sharefsd daemon is not active for your shared file system, you need to perform some diagnostic procedures.

The following code example shows sam-fsd output that indicates that the daemon is running.

    cur% ps -ef | grep sam-fsd
    user1 16435 16314  0 16:52:36 pts/13  0:00 grep sam-fsd
    root    679     1  0   Aug 24 ?       0:00 /usr/lib/fs/samfs/sam-fsd

If the output indicates that the sam-fsd daemon is not running, and if no file system has been accessed since the system's last boot, issue the samd config command, as follows:

# samd config

If the output indicates that the sam-fsd daemon is running, enable tracing in the defaults.conf file and check the following files to determine whether configuration errors are causing the problem:

/var/opt/SUNWsamfs/trace/sam-fsd

/var/opt/SUNWsamfs/trace/sam-sharefsd

metadataserver# scrgadm -p | grep SUNW.qfs

metadataserver# scrgadm -a -t SUNW.qfs

The SUNW.qfs resource type is part of the Sun QFS software package. Configuring the resource type for use with your shared file system makes the shared file system's metadata server highly available. Oracle Solaris Cluster scalable applications can then access data contained in the file system.

The following code example shows how to use the scrgadm command to register and configure the SUNW.qfs resource type. In this example, the nodes are scnode-A and scnode-B. /global/sharefs1 is the mount point as specified in the /etc/vfstab file.

    # scrgadm -a -g qfs-rg -h scnode-A,scnode-B
    # scrgadm -a -g qfs-rg -t SUNW.qfs -j qfs-res \
        -x QFSFileSystem=/global/sharefs1
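For sites that script their cluster setup, the two scrgadm invocations above can be generated from parameters. The following Python sketch only builds the argument lists and does not run anything. The function name `qfs_resource_commands` is hypothetical, and the options used are limited to those shown in the example above.

```python
from typing import List


def qfs_resource_commands(group: str, nodes: List[str],
                          resource: str, mount_point: str) -> List[List[str]]:
    """Build the scrgadm argument lists for a SUNW.qfs resource."""
    # First command: create the resource group on the listed nodes.
    create_group = ["scrgadm", "-a", "-g", group, "-h", ",".join(nodes)]
    # Second command: add the SUNW.qfs resource for the given mount point.
    add_resource = ["scrgadm", "-a", "-g", group, "-t", "SUNW.qfs",
                    "-j", resource, "-x", f"QFSFileSystem={mount_point}"]
    return [create_group, add_resource]


for argv in qfs_resource_commands("qfs-rg", ["scnode-A", "scnode-B"],
                                  "qfs-res", "/global/sharefs1"):
    print(" ".join(argv))
```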

Note - In a SAM-QFS environment, you can also configure the archiving features for high availability using Oracle Solaris Cluster software. For instructions, see Chapter 5, Configuring SAM-QFS Archiving in an Oracle Solaris Cluster Environment (HA-SAM).

Note - If you are using the SUNW.qfs resource type, you cannot use the bg mount option in the /etc/vfstab file.
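A quick way to check for the bg restriction is to inspect the mount options field for the file system's entry. The following Python sketch is hypothetical. It assumes the standard seven-field /etc/vfstab layout with mount options in the seventh field, and the sample entries in the usage lines are illustrative only.

```python
def uses_bg_option(vfstab_text, mount_point):
    """Return True if the vfstab entry for mount_point lists the bg option."""
    for raw in vfstab_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        fields = line.split()
        # Field 3 is the mount point; field 7 holds the mount options.
        if len(fields) < 7 or fields[2] != mount_point:
            continue
        return "bg" in fields[6].split(",")
    return False


# Illustrative entries, not taken from a real system.
print(uses_bg_option("sharefs1 - /global/sharefs1 samfs - no shared,bg", "/global/sharefs1"))
print(uses_bg_option("sharefs1 - /global/sharefs1 samfs - no shared", "/global/sharefs1"))
```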

For example:

metadataserver# scswitch -Z -g qfs-rg

For example:

    metadataserver# scstat
    <information deleted from this output>
    -- Resources --
      Resource Name     Node Name   State     Status Message
      -------------     ---------   -----     --------------
    Resource: qfs-res   ash         Online    Online
    Resource: qfs-res   elm         Offline   Offline
    Resource: qfs-res   oak         Offline   Offline

Perform these steps for each node in the cluster, with a final return to the metadata server.

For example:

server# scswitch -z -g qfs-rg -h elm

For example:

    server# scstat
    -- Resources --
      Resource Name     Node Name   State     Status Message
      -------------     ---------   -----     --------------
    Resource: qfs-res   ash         Offline   Offline
    Resource: qfs-res   elm         Online    Online
    Resource: qfs-res   oak         Offline   Offline
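When the switchover is repeated for each node, it can help to confirm from the scstat output which node currently hosts the resource. The following Python sketch is hypothetical. It assumes that resource rows have the layout shown in the output above (Resource:, resource name, node name, state) and it works on captured text rather than running scstat.

```python
SCSTAT_OUTPUT = """\
-- Resources --
  Resource Name      Node Name  State    Status Message
  -------------      ---------  -----    --------------
Resource: qfs-res    ash        Offline  Offline
Resource: qfs-res    elm        Online   Online
Resource: qfs-res    oak        Offline  Offline
"""


def online_nodes(scstat_output, resource):
    """Return the nodes on which the named resource is in the Online state."""
    nodes = []
    for line in scstat_output.splitlines():
        fields = line.split()
        # Data rows look like: Resource: <name> <node> <state> <status message>
        if len(fields) >= 4 and fields[0] == "Resource:" and fields[1] == resource:
            if fields[3] == "Online":
                nodes.append(fields[2])
    return nodes


print(online_nodes(SCSTAT_OUTPUT, "qfs-res"))
```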