25 Overview of Analytics Architecture

This chapter provides an overview of the components that make up the Analytics suite, and outlines the scenarios that you can choose to implement when installing Analytics.

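This chapter contains the following sections:

- Section 25.1, "Components of an Analytics Installation"

- Section 25.2, "Installation Scenarios"

- Section 25.3, "Process Flow"

- Section 25.4, "Terms and Definitions"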

25.1 Components of an Analytics Installation

Analytics is a modular system that allows for a high degree of scalability. An Analytics installation consists of the following components, which communicate with each other through JDBC (for database access), HTTP connections, RMI, and proprietary socket protocols:

Hadoop provides distributed data storage (HDFS) and distributed data processing (Map/Reduce). The Hadoop Distributed File System (HDFS) stores input and output files of Hadoop programs in a distributed manner throughout the Hadoop cluster, thus providing high aggregated bandwidth.

- Analytics data capture application (also called "Analytics Sensor"): web application that captures data on the activities of visitors as they browse your online site, and stores that data on the local file system. (For data capture to work, you must embed a special tag, AddAnalyticsImgTag, into the pages that you wish to monitor. The tag triggers the data capture process.)

- Hadoop Distributed File System (HDFS) Agent takes the raw data collected by the data capture server and copies it from the local file system to HDFS.

- Hadoop Jobs (Scheduler) runs jobs in a parallel and distributed fashion in order to efficiently compute statistics on the raw data that is stored in HDFS.

  Hadoop implements a computational paradigm named Map/Reduce, which divides a large computation into smaller fragments of work, each of which may be executed or re-executed on any node in the cluster. Map/Reduce requires a combination of jar files and classes, all of which are collected into a single jar file that is usually referred to as a job file. To execute a job, you submit it to a JobTracker. Hadoop Jobs then responds with the following actions:

  - Schedules and submits the jobs to JobTracker.

  - Processes raw data captured by the data capture server into statistical data and then writes it to the Analytics database.

  Hadoop provides a web interface to browse HDFS and to determine the status of the jobs.

- Analytics database: stores the aggregated and statistical data on the raw data captured by the data capture server.

- Analytics reporting and administration web applications

  - The reporting component provides the user interface, used to generate reports.

  - The administration component provides the administration interface, used to integrate Analytics with your WebCenter Sites system.

  Typically, the reporting and administration components reside on the same computer.

- Load balancer is used to link multiple data capture servers in order to increase performance. Load balancing is also recommended for failover.
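To make the Map/Reduce paradigm used by the Hadoop Jobs component more concrete, the following is a minimal in-process sketch in Python. It does not use Hadoop or any Analytics API. The record format, the function names, and the fragment size are all assumptions chosen for illustration. It only shows the general idea: the input is divided into fragments, each fragment is mapped independently (so it could run, or be re-run, on any node), and the results are then reduced into statistics.

```python
from collections import defaultdict
from itertools import chain

# Hypothetical raw records: one (visitor_id, page) pair per captured page view.
RAW_RECORDS = [
    ("visitor-1", "/home"),
    ("visitor-2", "/home"),
    ("visitor-1", "/products"),
    ("visitor-3", "/home"),
]


def split_into_fragments(records, fragment_size):
    """Divide the input into smaller fragments of work."""
    return [
        records[i:i + fragment_size]
        for i in range(0, len(records), fragment_size)
    ]


def map_fragment(fragment):
    """Map step: emit a (page, 1) pair for every page view in one fragment."""
    return [(page, 1) for _visitor, page in fragment]


def reduce_counts(pairs):
    """Reduce step: sum the emitted counts for each page."""
    totals = defaultdict(int)
    for page, count in pairs:
        totals[page] += count
    return dict(totals)


def count_page_views(records, fragment_size=2):
    """Run the map step on each fragment, then reduce all emitted pairs."""
    fragments = split_into_fragments(records, fragment_size)
    # Each fragment is independent, so in a real cluster it could be
    # processed on any node; here the fragments are processed in a loop.
    mapped = [map_fragment(fragment) for fragment in fragments]
    return reduce_counts(chain.from_iterable(mapped))
```

Because each fragment is mapped independently, changing `fragment_size` changes how the work is divided but not the resulting statistics.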

A firewall is highly recommended to protect your WebCenter Sites and Analytics systems from intrusion.

The modular nature of Analytics gives you the option to install Analytics in several ways. Section 25.2, "Installation Scenarios" describes the more common approaches.

25.2 Installation Scenarios

This section describes the different installation scenarios that you can choose to follow when implementing Analytics on your site. The scenarios are:

- Section 25.2.1, "Single-Server Installation: Analytics and Its Database on a Single Server"

- Section 25.2.2, "Dual-Server Installation: Analytics and Its Database on Separate Servers"

- Section 25.2.3, "Enterprise-Level Installation: Fully Distributed"

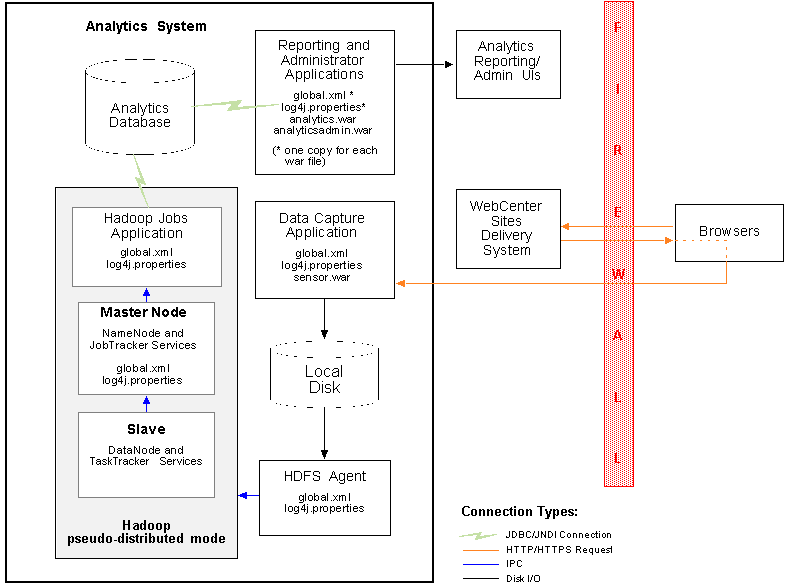

25.2.1 Single-Server Installation: Analytics and Its Database on a Single Server

In this scenario, all Analytics components reside on a single, dedicated computer. This scenario works best when you need to test and experiment with Analytics. Figure 25-1 illustrates a single-server Analytics installation and indicates where configuration files reside and services run. Arrows represent data flow.

Figure 25-1 Single-Server Analytics Installation


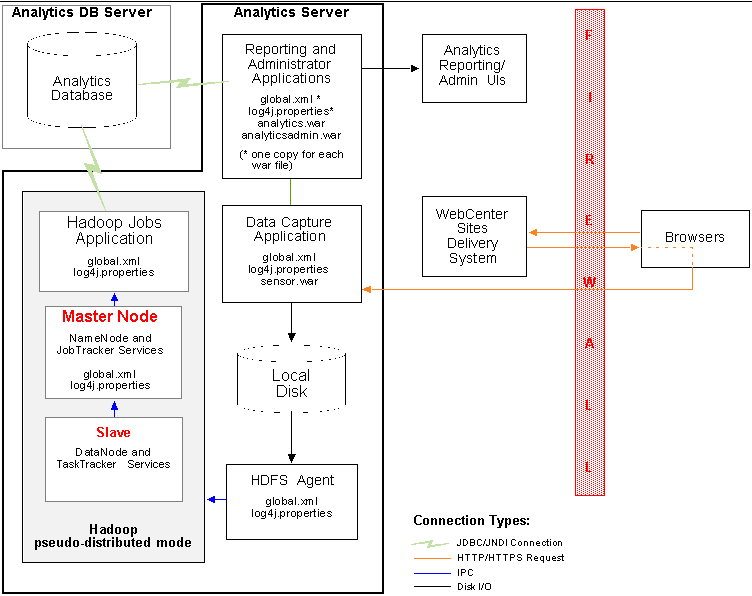

25.2.2 Dual-Server Installation: Analytics and Its Database on Separate Servers

In this scenario, all Analytics components except the Analytics database are hosted on a single, dedicated server; the Analytics database is installed on its own server. This scenario works best when you need to test and experiment with Analytics under increased performance conditions (isolating database transactions from Hadoop jobs minimizes their competition for resources). Figure 25-2 illustrates a dual-server Analytics installation and indicates where configuration files reside and services run. Arrows represent data flow.

Figure 25-2 Dual-Server Analytics Installation


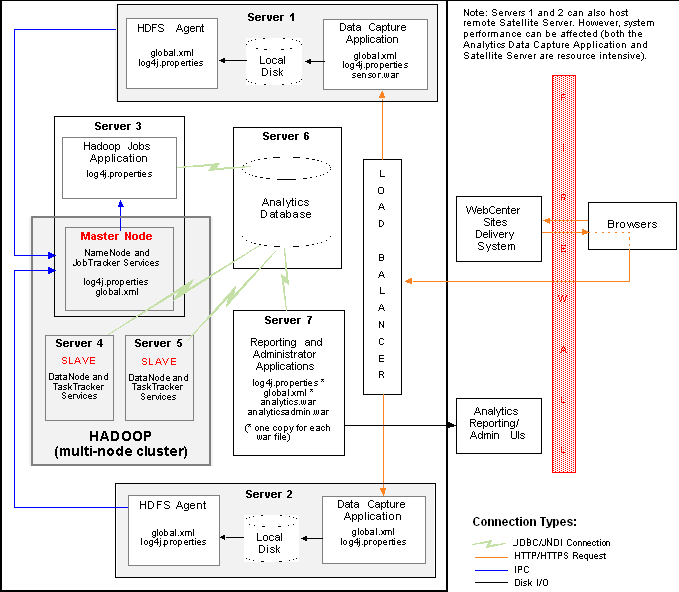

25.2.3 Enterprise-Level Installation: Fully Distributed

In this scenario, Analytics components run on separate computers. While more complex, this approach allows for scalability and provides better performance, as each component has dedicated processing power at its disposal. Figure 25-3 illustrates an enterprise-level installation and indicates where configuration files reside and services run. Arrows represent data flow. For information about installing Analytics with remote Satellite Server, see the note in Figure 25-3.

Figure 25-3 Enterprise-Level Analytics Installation


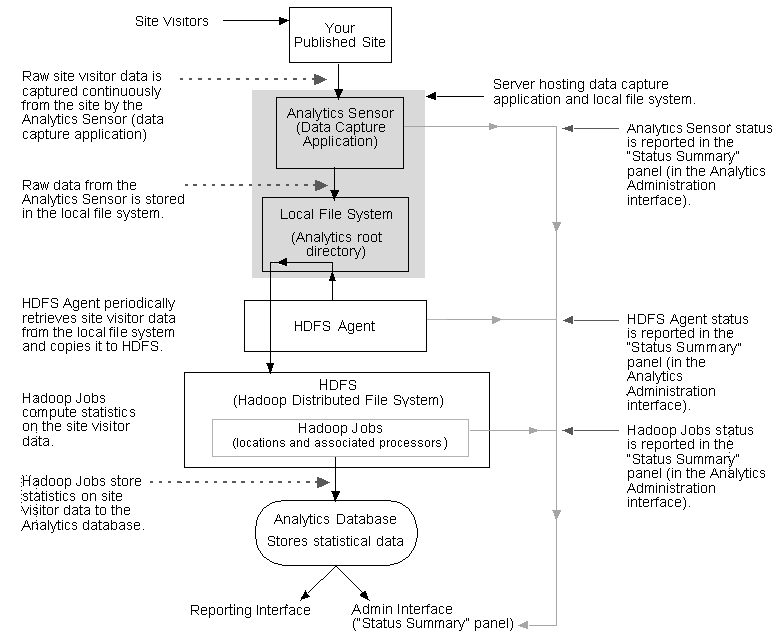

25.3 Process Flow

In a functional Analytics installation, raw site visitor data is continuously captured by the Analytics Sensor (data capture application), which then stores the data on the local file system. The HDFS Agent periodically collects the raw data from the local file system and copies it to the Hadoop Distributed File System (HDFS), where Hadoop jobs process the data (Figure 25-4).
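The copy step performed by the HDFS Agent can be pictured with the following minimal Python sketch. It is not the agent's actual implementation. An ordinary directory stands in for HDFS, and the function name `copy_raw_data`, the `*.log` file pattern, and the rule of skipping files that already exist in the target are all assumptions made for illustration.

```python
import shutil
from pathlib import Path
from typing import List


def copy_raw_data(local_dir: Path, target_dir: Path) -> List[str]:
    """Copy raw data files that are not yet in target_dir.

    target_dir is an ordinary directory standing in for HDFS (assumption).
    Returns the names of the files copied during this run.
    """
    target_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    # The "*.log" pattern is a hypothetical naming scheme for raw data files.
    for source in sorted(local_dir.glob("*.log")):
        destination = target_dir / source.name
        if not destination.exists():
            shutil.copy2(source, destination)
            copied.append(source.name)
    return copied
```

Calling the function repeatedly, for example from a timer, mimics the periodic pickup: a second run over unchanged input copies nothing because the files are already present in the target.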

Hadoop jobs consist of locations and Oracle-specific processors that read site visitor data in one location, statistically process that data, and write the results to another location for pickup by the next processor. When processing is complete, the results (statistics on the raw data) are injected into the Analytics database.
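The chaining of locations and processors described above can be sketched as follows. This is an illustrative Python model only. The location names (`raw`, `cleaned`, `stats`), the two processors, and the dictionary used to represent locations are hypothetical and do not correspond to the actual Oracle-specific processors.

```python
from collections import Counter

# Hypothetical locations, modeled as named entries in a dictionary.
# The "raw" location holds one requested page per captured page view.
LOCATIONS = {
    "raw": ["/home", "/products", "/home", "", "/home"],
}


def clean(records):
    """First processor: drop empty records."""
    return [record for record in records if record]


def count(records):
    """Second processor: compute the number of views per page."""
    return dict(Counter(records))


# Each step names its input location, its processor, and its output location.
PIPELINE = [
    ("raw", clean, "cleaned"),
    ("cleaned", count, "stats"),
]


def run_pipeline(locations, pipeline):
    """Run each processor in order; each writes where the next one reads."""
    for source, processor, target in pipeline:
        locations[target] = processor(locations[source])
    # The output location of the last step holds the final statistics.
    return locations[pipeline[-1][2]]
```

In this model, the value returned by `run_pipeline` plays the role of the statistics that are written to the Analytics database once processing is complete.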

The status of Hadoop Jobs can be monitored from the "Status Summary" panel of the Analytics Administration interface. Detailed information about data processing and the "Status Summary" panel is available in the chapter "Reference: Hadoop Jobs Processors and Locations" in the Oracle Fusion Middleware WebCenter Sites: Analytics Administrator's Guide.

25.4 Terms and Definitions

The terms listed below are used frequently throughout this guide. The glossary defines additional terms.

- The "Analytics Data Capture Application" is also referred to as the "Analytics Sensor," or simply "sensor."

- The term "site" in the context of installation/configuration procedures and in the interpretation of report statistics refers to the content management (CM) site that functions as the back end of your online site (or one of its sections).

- "FirstSite II" is the sample content management site, used throughout this guide to support examples of reports and to provide code snippets. FirstSite II is also the back end of the online sample site named "etravel."